
Hadoop 3.3.4 + HDFS Router-Based Federation Configuration

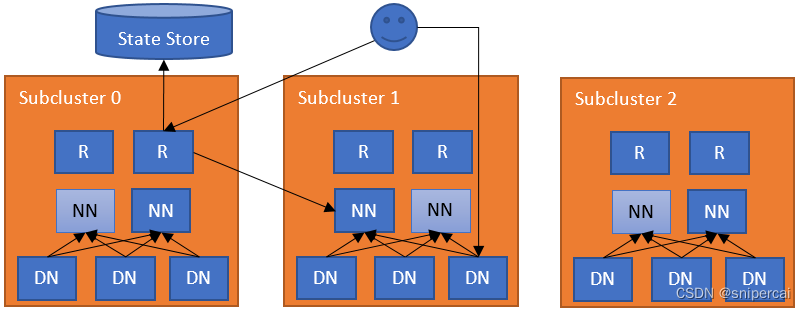

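I. HDFS Router-based Federation

See the official documentation: https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs-rbf/HDFSRouterFederation.html

II. Cluster Planning

This test uses six virtual machines to build two HDFS clusters. The operating system is CentOS 7.6 and the Hadoop version is 3.3.4. The NameNodes run in an HA (high-availability) configuration.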

| Cluster | IP address | Hostname | FC ZK | HDFS | Router | State Store ZK |
|---|---|---|---|---|---|---|
| ClusterA (ccns) | 192.168.121.101 | node101.cc.local | server.1 | NameNode, DataNode, JournalNode | | |
| ClusterA (ccns) | 192.168.121.102 | node102.cc.local | server.2 | NameNode, DataNode, JournalNode | | |
| ClusterA (ccns) | 192.168.121.103 | node103.cc.local | server.3 | DataNode, JournalNode | dfsrouter | |
| ClusterB (ccns02) | 192.168.121.104 | node104.cc.local | server.1 | NameNode, DataNode, JournalNode | | server.1 |
| ClusterB (ccns02) | 192.168.121.105 | node105.cc.local | server.2 | NameNode, DataNode, JournalNode | | server.1 |
| ClusterB (ccns02) | 192.168.121.106 | node106.cc.local | server.3 | DataNode, JournalNode | dfsrouter | server.1 |

III. Configure ClusterA

1. Configure core-site.xml

| Property | Value |
|---|---|
| fs.defaultFS | hdfs://ccns |
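The name and value above correspond to one property element in core-site.xml. A minimal sketch, assuming the standard Hadoop configuration file layout (the source lists only the name and the value, so the surrounding elements are reconstructed here):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- core-site.xml on the ClusterA nodes (sketch; only the property shown in the article) -->
<configuration>
  <property>
    <!-- Default file system: the ClusterA nameservice -->
    <name>fs.defaultFS</name>
    <value>hdfs://ccns</value>
  </property>
</configuration>
```

Every name/value pair listed in the following sections maps to a property element in the same way.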

2. Configure hdfs-site.xml

| Property | Value |
|---|---|
| dfs.nameservices | ccns,ccns02,ccrbf |
| dfs.ha.namenodes.ccns | nn1,nn2 |
| dfs.ha.namenodes.ccns02 | nn1,nn2 |
| dfs.namenode.rpc-address.ccns.nn1 | node101.cc.local:9000 |
| dfs.namenode.servicerpc-address.ccns.nn1 | node101.cc.local:9040 |
| dfs.namenode.https-address.ccns.nn1 | node101.cc.local:9871 |
| dfs.namenode.rpc-address.ccns.nn2 | node102.cc.local:9000 |
| dfs.namenode.servicerpc-address.ccns.nn2 | node102.cc.local:9040 |
| dfs.namenode.https-address.ccns.nn2 | node102.cc.local:9871 |
| dfs.namenode.rpc-address.ccns02.nn1 | node104.cc.local:9000 |
| dfs.namenode.servicerpc-address.ccns02.nn1 | node104.cc.local:9040 |
| dfs.namenode.https-address.ccns02.nn1 | node104.cc.local:9871 |
| dfs.namenode.rpc-address.ccns02.nn2 | node105.cc.local:9000 |
| dfs.namenode.servicerpc-address.ccns02.nn2 | node105.cc.local:9040 |
| dfs.namenode.https-address.ccns02.nn2 | node105.cc.local:9871 |
| dfs.namenode.shared.edits.dir | qjournal://node101.cc.local:8485;node102.cc.local:8485;node103.cc.local:8485;node104.cc.local:8485;node105.cc.local:8485;node106.cc.local:8485/ccns |
| dfs.client.failover.proxy.provider.ccns | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider |
| dfs.ha.fencing.methods | sshfence |
| dfs.ha.automatic-failover.enabled | true |
| dfs.ha.fencing.ssh.private-key-files | /home/hadoop/.ssh/id_rsa |
| dfs.journalnode.edits.dir | /opt/hadoop/hadoop-3.3.4/data/journalnode |
| dfs.namenode.name.dir | file:///opt/hadoop/hadoop-3.3.4/data/namenode |
| dfs.datanode.data.dir | file:///opt/hadoop/hadoop-3.3.4/data/datanode |
| hadoop.caller.context.enabled | true |
| dfs.replication | 3 |
| dfs.journalnode.http-address | 0.0.0.0:8480 |
| dfs.journalnode.rpc-address | 0.0.0.0:8485 |
| dfs.permissions | true |
| dfs.permissions.enabled | true |
| dfs.namenode.inode.attributes.provider.class | org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer |
| dfs.permissions.ContentSummary.subAccess | true |
| dfs.block.access.token.enable | true |
| dfs.namenode.kerberos.principal | hadoop/_HOST@CC.LOCAL |
| dfs.namenode.keytab.file | /etc/security/keytab/hadoop.keytab |
| dfs.datanode.kerberos.principal | hadoop/_HOST@CC.LOCAL |
| dfs.datanode.keytab.file | /etc/security/keytab/hadoop.keytab |
| dfs.journalnode.kerberos.principal | hadoop/_HOST@CC.LOCAL |
| dfs.journalnode.keytab.file | /etc/security/keytab/hadoop.keytab |
| dfs.namenode.kerberos.internal.spnego.principal | hadoop/_HOST@CC.LOCAL |
| dfs.web.authentication.kerberos.principal | hadoop/_HOST@CC.LOCAL |
| dfs.web.authentication.kerberos.keytab | /etc/security/keytab/hadoop.keytab |
| dfs.datanode.peer.stats.enabled | true |
| dfs.data.transfer.protection | authentication |
| dfs.http.policy | HTTPS_ONLY |
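As an illustration of how these settings look in the file itself, here is a sketch of the nameservice and HA portion of hdfs-site.xml for the first NameNode of ccns, together with the HTTPS-only web policy. It uses only names and values from the list above; the selection of properties and the comments are mine, and the remaining NameNodes (ccns.nn2, ccns02.nn1, ccns02.nn2) would follow the same pattern.

```xml
<!-- hdfs-site.xml (excerpt, sketch): nameservices, one NameNode's addresses, web policy -->
<configuration>
  <property>
    <!-- All nameservices known to this configuration -->
    <name>dfs.nameservices</name>
    <value>ccns,ccns02,ccrbf</value>
  </property>
  <property>
    <!-- Logical NameNode IDs inside nameservice ccns -->
    <name>dfs.ha.namenodes.ccns</name>
    <value>nn1,nn2</value>
  </property>
  <property>
    <!-- Client RPC endpoint of ccns.nn1 -->
    <name>dfs.namenode.rpc-address.ccns.nn1</name>
    <value>node101.cc.local:9000</value>
  </property>
  <property>
    <!-- Service RPC endpoint of ccns.nn1 -->
    <name>dfs.namenode.servicerpc-address.ccns.nn1</name>
    <value>node101.cc.local:9040</value>
  </property>
  <property>
    <!-- HTTPS web endpoint of ccns.nn1, used because dfs.http.policy is HTTPS_ONLY -->
    <name>dfs.namenode.https-address.ccns.nn1</name>
    <value>node101.cc.local:9871</value>
  </property>
  <property>
    <!-- Only HTTPS web access is allowed -->
    <name>dfs.http.policy</name>
    <value>HTTPS_ONLY</value>
  </property>
</configuration>
```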

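Notes:

1. The RBF service monitors the NameNodes' JMX data, which it fetches by requesting each NameNode's web address. If HDFS security allows only HTTPS web access (that is, dfs.http.policy is HTTPS_ONLY), you must configure dfs.namenode.https-address rather than dfs.namenode.http-address.

2. After the RBF service starts, it needs ...

3. Configure hdfs-rbf-site.xml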

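In Hadoop 3.3.0 and later, RBF is a standalone module separate from HDFS, and its configuration file is hdfs-rbf-site.xml. The Router uses three main ports:

- dfs.federation.router.rpc-address: the Router's RPC address, default port 8888; clients send RPCs here

- dfs.federation.router.admin-address: the address used by the dfsrouteradmin command, default port 8111

- dfs.federation.router.https-address: the Router's web UI (HTTPS) address, default port 50072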
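Written out explicitly, the three addresses would look roughly like the sketch below. The port numbers are the defaults named in the list; binding to 0.0.0.0 matches the rpc-address value used in this article and is assumed here for the other two addresses as well. Setting them is only needed when you want something other than the defaults.

```xml
<!-- hdfs-rbf-site.xml (excerpt, sketch): Router listener addresses with default ports -->
<configuration>
  <property>
    <!-- Client RPC endpoint of the Router -->
    <name>dfs.federation.router.rpc-address</name>
    <value>0.0.0.0:8888</value>
  </property>
  <property>
    <!-- Endpoint used by hdfs dfsrouteradmin -->
    <name>dfs.federation.router.admin-address</name>
    <value>0.0.0.0:8111</value>
  </property>
  <property>
    <!-- HTTPS web UI of the Router -->
    <name>dfs.federation.router.https-address</name>
    <value>0.0.0.0:50072</value>
  </property>
</configuration>
```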

| Property | Value |
|---|---|
| dfs.federation.router.default.nameserviceId | ccns |
| dfs.federation.router.default.nameservice.enable | true |
| dfs.federation.router.store.driver.class | org.apache.hadoop.hdfs.server.federation.store.driver.impl.StateStoreZooKeeperImpl |
| hadoop.zk.address | node104.cc.local:2181,node105.cc.local:2181,node106.cc.local:2181 |
| dfs.federation.router.store.driver.zk.parent-path | /hdfs-federation |
| dfs.federation.router.monitor.localnamenode.enable | false |
| dfs.federation.router.monitor.namenode | ccns.nn1,ccns.nn2,ccns02.nn1,ccns02.nn2 |
| dfs.federation.router.quota.enable | true |
| dfs.federation.router.cache.ttl | 10s |
| dfs.federation.router.rpc.enable | true |
| dfs.federation.router.rpc-address | 0.0.0.0:8888 |
| dfs.federation.router.rpc-bind-host | 0.0.0.0 |
| dfs.federation.router.handler.count | 20 |
| dfs.federation.router.handler.queue.size | 200 |
| dfs.federation.router.reader.count | 5 |
| dfs.federation.router.reader.queue.size | 100 |
| dfs.federation.router.connection.pool-size | 6 |
| dfs.federation.router.metrics.enable | true |
| dfs.client.failover.random.order | true |
| dfs.federation.router.file.resolver.client.class | org.apache.hadoop.hdfs.server.federation.resolver.MultipleDestinationMountTableResolver |
| dfs.federation.router.keytab.file | /etc/security/keytab/hadoop.keytab |
| dfs.federation.router.kerberos.principal | hadoop/_HOST@CC.LOCAL |
| dfs.federation.router.kerberos.internal.spnego.principal | hadoop/_HOST@CC.LOCAL |
| dfs.federation.router.secret.manager.class | org.apache.hadoop.hdfs.server.federation.router.security.token.ZKDelegationTokenSecretManagerImpl |
| zk-dt-secret-manager.zkAuthType | none |
| zk-dt-secret-manager.zkConnectionString | node104.cc.local:2181,node105.cc.local:2181,node106.cc.local:2181 |
| zk-dt-secret-manager.kerberos.keytab | /etc/security/keytab/hadoop.keytab |
| dfs.federation.router.kerberos.principal (zk-dt-secret-manager.kerberos.principal) | hadoop/_HOST@CC.LOCAL |
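The settings that most often cause startup problems are the State Store, the NameNode monitoring list, and the delegation token secret manager. A sketch of just those properties in XML form follows, using the names and values listed above for ClusterA; the grouping and the comments are mine.

```xml
<!-- hdfs-rbf-site.xml (excerpt, sketch): State Store, monitoring, secret manager -->
<configuration>
  <property>
    <!-- Keep Router state (mount table, memberships) in ZooKeeper -->
    <name>dfs.federation.router.store.driver.class</name>
    <value>org.apache.hadoop.hdfs.server.federation.store.driver.impl.StateStoreZooKeeperImpl</value>
  </property>
  <property>
    <!-- ZooKeeper ensemble used by the State Store -->
    <name>hadoop.zk.address</name>
    <value>node104.cc.local:2181,node105.cc.local:2181,node106.cc.local:2181</value>
  </property>
  <property>
    <!-- The Router is not co-located with a NameNode -->
    <name>dfs.federation.router.monitor.localnamenode.enable</name>
    <value>false</value>
  </property>
  <property>
    <!-- NameNodes to monitor, as nameservice.namenodeId -->
    <name>dfs.federation.router.monitor.namenode</name>
    <value>ccns.nn1,ccns.nn2,ccns02.nn1,ccns02.nn2</value>
  </property>
  <property>
    <!-- ZooKeeper-backed delegation token secret manager -->
    <name>dfs.federation.router.secret.manager.class</name>
    <value>org.apache.hadoop.hdfs.server.federation.router.security.token.ZKDelegationTokenSecretManagerImpl</value>
  </property>
  <property>
    <name>zk-dt-secret-manager.zkAuthType</name>
    <value>none</value>
  </property>
  <property>
    <!-- Must not be left unset, or the Router fails at startup -->
    <name>zk-dt-secret-manager.zkConnectionString</name>
    <value>node104.cc.local:2181,node105.cc.local:2181,node106.cc.local:2181</value>
  </property>
</configuration>
```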

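Notes:

1. The Router's default namespace must be set to the local cluster's nameservice: ccns for ClusterA and ccns02 for ClusterB.

2. Using ZooKeeper as the State Store requires hadoop.zk.address, which specifies the ZooKeeper address.

3. Set dfs.federation.router.monitor.localnamenode.enable to false because the Router and the NameNodes are not on the same node. When monitoring, the Router then looks up the NameNode web addresses in the configuration file instead of looking at localhost.

4. In dfs.federation.router.monitor.namenode, list every NameNode that should be monitored.

5. The secret manager settings must be specified. Otherwise the secret manager fails at RBF startup with an error that the ZooKeeper connection string is null, and RBF fails to start. The relevant properties are: dfs.federation.router.secret.manager.class, zk-dt-secret-manager.zkAuthType, zk-dt-secret-manager.zkConnectionString, zk-dt-secret-manager.kerberos.keytab, zk-dt-secret-manager.kerberos.principal

IV. Configure ClusterB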

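1. Configure core-site.xml

| Property | Value |
|---|---|
| fs.defaultFS | hdfs://ccns02 |

2. Configure hdfs-site.xml

Essentially the same as the ClusterA configuration, which can be used as a reference.

3. Configure hdfs-rbf-site.xml

Essentially the same as the ClusterA configuration. The only difference is that the Router's default namespace must be set to the local cluster's nameservice: ccns for ClusterA, so ccns02 for ClusterB.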
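In other words, on the ClusterB Router the one property that changes would look like this (a sketch; all other hdfs-rbf-site.xml properties are assumed to stay as in ClusterA):

```xml
<!-- hdfs-rbf-site.xml on the ClusterB Router (excerpt, sketch) -->
<configuration>
  <property>
    <!-- Default nameservice of this Router: the local cluster's nameservice -->
    <name>dfs.federation.router.default.nameserviceId</name>
    <value>ccns02</value>
  </property>
</configuration>
```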

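V. Start the Cluster Services

1. Initialize ClusterB

ClusterA was already created during earlier validation, so here it only needs its configuration files updated and its services restarted. To avoid losing the data already stored on it, only ClusterB is initialized. For the individual steps, refer to the material on creating a new cluster.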

### Run on the NameNode nodes of the new cluster (formatZK only needs to succeed once, on one NameNode) ###

hdfs zkfc -formatZK

hdfs --daemon start zkfc

### Run on every node assigned a JournalNode ###

hdfs --daemon start journalnode

### Format on the NN1 node, specifying the same clusterId as ClusterA ###

hdfs namenode -format -clusterId CID-a39855c3-952d-4d1f-86d7-eadf282d9000

hdfs --daemon start namenode

### Bootstrap on the NN2 node and start the service ###

hdfs namenode -bootstrapStandby

hdfs --daemon start namenode

### Start the DataNodes ###

hdfs --daemon start datanode

Note: the clusterId can be found in the VERSION file under the data directory, /opt/hadoop/hadoop-3.3.4/data/namenode/current/VERSION.

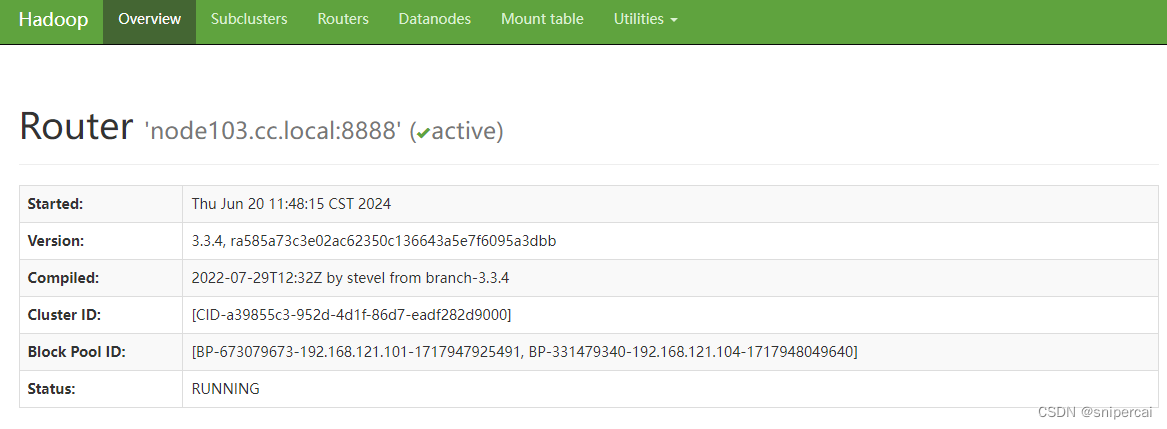

2. Start the Router service

hdfs --daemon start dfsrouter

Router Web UI: https://192.168.121.103:50072/
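The dfs.nameservices value in hdfs-site.xml lists a third nameservice, ccrbf, but the article does not show how it is defined. One plausible reading, following the client configuration pattern in the official RBF documentation, is a client-side nameservice that points at the two Routers instead of at NameNodes. The sketch below is an assumption, not the author's configuration: the Router IDs r1 and r2 are hypothetical names, and the hosts and port are taken from the cluster plan (dfsrouter on node103 and node106, RPC port 8888).

```xml
<!-- Client-side hdfs-site.xml (excerpt, sketch; ccrbf definition is an assumption) -->
<configuration>
  <property>
    <!-- r1 and r2 are hypothetical IDs for the two Routers -->
    <name>dfs.ha.namenodes.ccrbf</name>
    <value>r1,r2</value>
  </property>
  <property>
    <!-- Router on node103, RPC port 8888 -->
    <name>dfs.namenode.rpc-address.ccrbf.r1</name>
    <value>node103.cc.local:8888</value>
  </property>
  <property>
    <!-- Router on node106, RPC port 8888 -->
    <name>dfs.namenode.rpc-address.ccrbf.r2</name>
    <value>node106.cc.local:8888</value>
  </property>
  <property>
    <!-- Same failover proxy provider class the article uses for ccns -->
    <name>dfs.client.failover.proxy.provider.ccrbf</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <!-- Spread clients across the Routers -->
    <name>dfs.client.failover.random.order</name>
    <value>true</value>
  </property>
</configuration>
```

With a definition along these lines, a client could address the federation as hdfs://ccrbf/ rather than naming a single Router host and port.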

3. Configure mount points

Use the dfsrouteradmin command to add, remove, update, and list mount table entries. With the configuration above, dfsrouteradmin must be run on a node where a Router is running.

hdfs dfsrouteradmin -add /ccnsRoot ccns /

hdfs dfsrouteradmin -add /ccns02Root ccns02 /

View the result through the Router:

$ hdfs dfs -ls hdfs://node103.cc.local:8888/

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2024-06-20 17:12 hdfs://node103.cc.local:8888/ccns02Root

drwxr-xr-x - hadoop supergroup 0 2024-06-20 17:12 hdfs://node103.cc.local:8888/ccnsRoot

VI. Appendix: Common Commands

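# The core command is add, which takes these options:

# -readonly, -owner, -group, -mode (general)

# -faulttolerant, -order [HASH|LOCAL|RANDOM|HASH_ALL|SPACE] (multi-destination mounts only)

1. Add a mount entry with owner test and group admin

hdfs dfsrouteradmin -add /ccnsRoot/test ccns /test -owner test -group admin

2. Add a mount entry with permission mode 750

hdfs dfsrouteradmin -add /ccnsRoot/test2 ccns /test2 -mode 750

3. Add a read-only mount entry that no user can write to

hdfs dfsrouteradmin -add /ccnsRoot/test3 ccns /test3 -readonly

4. Add a multi-destination entry so that two nameservices back one directory, tolerating failed operations on individual destinations (default HASH order)

hdfs dfsrouteradmin -add /rbftmp1 ccns,ccns02 /tmp1 -faulttolerant

5. Add a multi-destination entry so that two nameservices back one directory, using the LOCAL order (prefer the nearest destination)

hdfs dfsrouteradmin -add /rbftmp2 ccns,ccns02 /tmp2 -order LOCAL

6. List the current mount entries

hdfs dfsrouteradmin -ls

7. Update an existing entry; the arguments are the same as for add (repeating add does not overwrite an existing entry)

hdfs dfsrouteradmin -update /ccnsRoot/test2 ccns /test2 -mode 500

8. Remove an entry; only the mount path (source) is needed

hdfs dfsrouteradmin -rm /ccnsRoot/test2

9. Immediately refresh the local Router's synchronized state (only the default NS is refreshed synchronously)

hdfs dfsrouteradmin -refresh

10. Disable or enable a nameservice (followed by the NS name)

hdfs dfsrouteradmin -nameservice disable ccns02

hdfs dfsrouteradmin -nameservice enable ccns02

11. List the disabled nameservices

hdfs dfsrouteradmin -getDisabledNameservices

12. Set a Router quota (requires dfs.federation.router.quota.enable set to true, as in the hdfs-rbf-site.xml settings above)

# nsQuota limits the number of files and directories; ssQuota limits space (in bytes)

hdfs dfsrouteradmin -setQuota /rbftmp1 -nsQuota 2 -ssQuota 2048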


- Original article: https://blog.csdn.net/snipercai/article/details/139805766

- Official documentation: https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs-rbf/HDFSRouterFederation.html