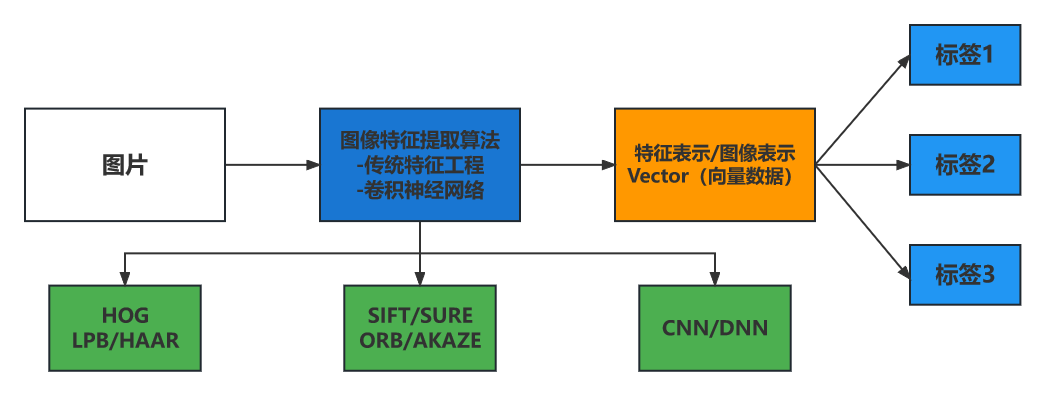

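1. Image Feature Overview

Definition and representation of image features

A feature representation is a compact, distinctive description of an image; it serves as the image's "DNA".

Overview of image feature extraction

- Traditional feature extraction: mainly based on texture, corners, color distribution, gradients, edges, etc.

- Deep convolutional neural network feature extraction: trained with supervised learning, so features are extracted automatically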

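- Desirable properties of feature data:

  - Scale-space invariance

  - Invariance to pixel translation

  - Consistency under illumination changes

  - Rotation invariance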

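Applications of image features

Image classification, object recognition, feature detection, image alignment/matching, object detection, image search/comparison

- Image processing: image in, image out

- Feature extraction: image in, vector (data) out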

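2. Corner Detection

- What is a corner: a point where the image intensity changes strongly in every direction, i.e. the gradient varies significantly along all directions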

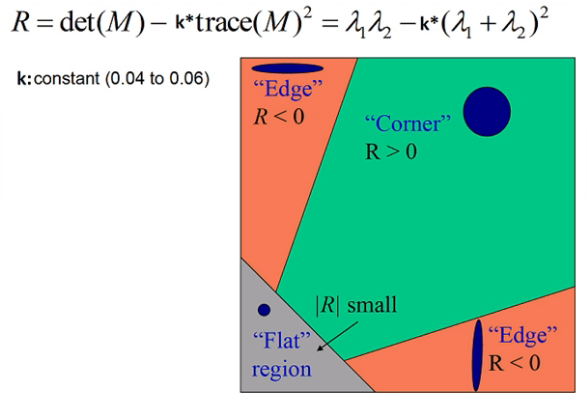

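Harris corner detection

```cpp
// Function signature
void cv::cornerHarris(
    InputArray src,                   // input: single-channel 8-bit or floating-point image
    OutputArray dst,                  // output: Harris response map (CV_32FC1, same size as src)
    int blockSize,                    // neighborhood (block) size
    int ksize,                        // aperture size of the Sobel operator
    double k,                         // Harris free parameter (empirical constant)
    int borderType = BORDER_DEFAULT   // pixel extrapolation method
);
```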

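Shi-Tomasi corner detection

```cpp
// Function signature
void cv::goodFeaturesToTrack(
    InputArray image,                // input image (8-bit or 32-bit float, single channel)
    OutputArray corners,             // output corner coordinates
    int maxCorners,                  // maximum number of corners
    double qualityLevel,             // quality control: corners whose min(λ1, λ2) is below
                                     // qualityLevel x (best corner's value) are rejected
    double minDistance,              // overlap control: minimum distance (px) between returned corners
    InputArray mask = noArray(),     // optional region of interest
    int blockSize = 3,               // neighborhood size
    bool useHarrisDetector = false,  // true: use the Harris measure instead
    double k = 0.04                  // Harris free parameter
);
```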
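Shi-Tomasi scores a point by the smaller eigenvalue of the same 2x2 structure tensor. As a sketch (plain C++, hypothetical helper names), that eigenvalue has a closed form, and the relative `qualityLevel` cut described above reduces to comparing each score with the best one; the `minDistance` suppression step is deliberately left out.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Smaller eigenvalue of the symmetric 2x2 matrix [[sxx, sxy], [sxy, syy]].
double minEigenvalue(double sxx, double syy, double sxy) {
    double halfTrace = (sxx + syy) / 2.0;
    double root = std::sqrt((sxx - syy) * (sxx - syy) / 4.0 + sxy * sxy);
    return halfTrace - root;
}

// Indices of candidates whose score is at least qualityLevel * (best score).
std::vector<std::size_t> filterByQuality(const std::vector<double>& scores, double qualityLevel) {
    std::vector<std::size_t> kept;
    if (scores.empty()) return kept;
    double best = *std::max_element(scores.begin(), scores.end());
    for (std::size_t i = 0; i < scores.size(); ++i) {
        if (scores[i] >= qualityLevel * best) kept.push_back(i);
    }
    return kept;
}
```

Because the threshold is relative, the same `qualityLevel` behaves similarly on high- and low-contrast images.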

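Implementation

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

int main(int argc, char** argv) {
    Mat src = imread("D:/images/building.png");
    if (src.empty()) {
        cerr << "could not load image" << endl;
        return -1;
    }
    Mat gray;
    cvtColor(src, gray, COLOR_BGR2GRAY);
    namedWindow("src", WINDOW_FREERATIO);
    imshow("src", src);

    // Shi-Tomasi corners: at most 400, qualityLevel 0.05, minDistance 10 px
    RNG rng(12345);
    vector<Point2f> points;
    goodFeaturesToTrack(gray, points, 400, 0.05, 10);

    // Draw each corner as a small circle in a random color (thickness must be an int)
    for (size_t t = 0; t < points.size(); t++) {
        circle(src, points[t], 3,
               Scalar(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255)),
               1, LINE_AA);
    }
    namedWindow("out", WINDOW_FREERATIO);
    imshow("out", src);
    waitKey(0);
    destroyAllWindows();
    return 0;
}
```

Result: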

3. Keypoint Detection

- Image feature points / keypoints

- Keypoint detection functions

- Code demo

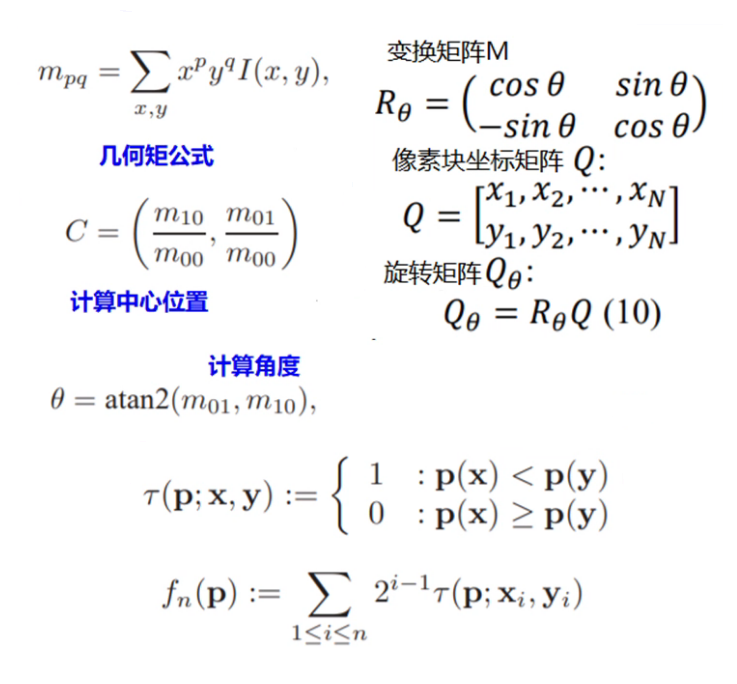

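ORB keypoint detection (fast)

- ORB (Oriented FAST and Rotated BRIEF) consists of two parts: FAST keypoint localization + BRIEF descriptor generation

- FAST keypoint detection: take the current pixel P and a threshold T, and examine the 16 pixels on a circle around P. If at least N = 12 contiguous pixels are all brighter than P + T or all darker than P - T, P is a keypoint. A high-speed pre-test checks pixels 1, 5, 9 and 13 first; the test is repeated for every pixel in the image.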
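The segment test described above can be sketched in plain C++ as follows. This is an illustration only, assuming the 16 circle intensities have already been sampled into an array in order, starting at pixel 1 (index 0); `fastSegmentTest` and `fastPretest` are hypothetical names, and OpenCV's own FAST adds non-maximum suppression on top.

```cpp
#include <array>
#include <initializer_list>

// Returns true if at least n contiguous ring pixels (wrapping around) are all
// brighter than p + t, or all darker than p - t. Assumes n <= 16.
bool fastSegmentTest(const std::array<int, 16>& ring, int p, int t, int n = 12) {
    for (int sign : {1, -1}) {
        int run = 0;
        for (int i = 0; i < 32; ++i) {  // walk the ring twice so runs can wrap around
            int v = ring[i % 16];
            bool pass = (sign > 0) ? (v > p + t) : (v < p - t);
            run = pass ? run + 1 : 0;
            if (run >= n) return true;
        }
    }
    return false;
}

// High-speed pre-test on pixels 1, 5, 9, 13 (indices 0, 4, 8, 12): for n = 12,
// a corner needs at least 3 of these 4 to be brighter, or at least 3 darker.
bool fastPretest(const std::array<int, 16>& ring, int p, int t) {
    int brighter = 0, darker = 0;
    for (int i : {0, 4, 8, 12}) {
        if (ring[i] > p + t) ++brighter;
        if (ring[i] < p - t) ++darker;
    }
    return brighter >= 3 || darker >= 3;
}
```

With n = 12, a contiguous run of 12 pixels always covers at least three of the four compass pixels, which is why the pre-test can reject most candidates after only four comparisons.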

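Keypoint detection function

```cpp
// Create an ORB object that keeps at most 500 features
Ptr<ORB> orb = cv::ORB::create(500);

// Detect keypoints (inherited from Feature2D)
virtual void cv::Feature2D::detect(
    InputArray image,                  // input image
    std::vector<KeyPoint>& keypoints,  // output keypoints
    InputArray mask = noArray()        // optional mask: detect only where mask is non-zero
);
```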

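The four most important attributes of the KeyPoint data structure:

- pt: keypoint coordinates (x, y)

- angle: keypoint orientation in degrees (-1 if not applicable)

- response: detector response; larger means a stronger keypoint

- size: diameter of the meaningful keypoint neighborhood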
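A common use of the `response` attribute is keeping only the strongest keypoints. The sketch below uses a hypothetical `SimpleKeyPoint` struct that mirrors the four attributes listed above (it is not cv::KeyPoint) and plain C++ sorting; with OpenCV itself, `cv::KeyPointsFilter::retainBest` serves a similar purpose.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::KeyPoint with its four most-used attributes.
struct SimpleKeyPoint {
    float x, y;      // pt: location in pixels
    float angle;     // orientation in degrees (-1 if not computed)
    float response;  // detector response (larger = stronger)
    float size;      // diameter of the meaningful neighborhood
};

// Keep the n keypoints with the strongest response, strongest first.
std::vector<SimpleKeyPoint> retainStrongest(std::vector<SimpleKeyPoint> kps, std::size_t n) {
    std::sort(kps.begin(), kps.end(),
              [](const SimpleKeyPoint& a, const SimpleKeyPoint& b) { return a.response > b.response; });
    if (kps.size() > n) kps.resize(n);
    return kps;
}
```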

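Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>

using namespace cv;

int main(int argc, char** argv) {
    // The original image path was lost in extraction; read it from argv[1] instead.
    if (argc < 2) { std::cerr << "usage: orb_keypoints <image>" << std::endl; return -1; }
    Mat src = imread(argv[1]);
    if (src.empty()) return -1;

    auto orb = ORB::create(500);
    std::vector<KeyPoint> kypts;
    orb->detect(src, kypts);

    Mat result01, result02;
    // DEFAULT: draw keypoint centers only
    drawKeypoints(src, kypts, result01, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    // DRAW_RICH_KEYPOINTS: also draw keypoint size (circle radius) and orientation
    drawKeypoints(src, kypts, result02, Scalar::all(-1), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);
    imshow("ORB keypoints (default)", result01);
    imshow("ORB keypoints (rich)", result02);
    waitKey(0);
    destroyAllWindows();
    return 0;
}
```

Result: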

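4. Feature Descriptors

- Computed from the region around each keypoint

- Floating-point representation vs. binary encoding

- Descriptor length

Steps to generate ORB feature descriptors:

- Extract feature keypoints

- Assign the descriptor orientation

- Encode the descriptor (binary, 32 bytes = 256 bits)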
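The orientation-assignment step can be illustrated with the intensity-centroid method that ORB uses: the angle from the patch centre to its intensity-weighted centroid, theta = atan2(m01, m10). A minimal sketch, assuming a square patch of doubles (ORB itself uses a circular patch) and a hypothetical function name:

```cpp
#include <cmath>
#include <vector>

// Orientation (degrees) of a square patch by the intensity-centroid method.
// Moments are taken relative to the patch centre; image y grows downwards,
// so 90 degrees points towards the bottom of the patch.
double intensityCentroidAngle(const std::vector<std::vector<double>>& patch) {
    const int n = static_cast<int>(patch.size());
    const double c = (n - 1) / 2.0;
    double m10 = 0.0, m01 = 0.0;
    for (int y = 0; y < n; ++y) {
        for (int x = 0; x < n; ++x) {
            m10 += (x - c) * patch[y][x];
            m01 += (y - c) * patch[y][x];
        }
    }
    const double pi = std::acos(-1.0);
    return std::atan2(m01, m10) * 180.0 / pi;
}
```

Rotating the BRIEF sampling pattern by this angle is what makes the binary descriptor approximately rotation invariant.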

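Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>

using namespace cv;

int main(int argc, char** argv) {
    // The original image path was lost in extraction; read it from argv[1] instead.
    if (argc < 2) { std::cerr << "usage: orb_descriptors <image>" << std::endl; return -1; }
    Mat src = imread(argv[1]);
    if (src.empty()) return -1;

    auto orb = ORB::create(500);
    std::vector<KeyPoint> kypts;
    orb->detect(src, kypts);

    // Circles of different radii are keypoints found at different pyramid levels,
    // i.e. at different image scales
    Mat result02;
    drawKeypoints(src, kypts, result02, Scalar::all(-1), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

    // One row per keypoint, 32 bytes (256 bits) per ORB descriptor
    Mat desc_orb;
    orb->compute(src, kypts, desc_orb);
    std::cout << desc_orb.rows << " x " << desc_orb.cols << std::endl;

    imshow("ORB keypoints (rich)", result02);
    waitKey(0);
    destroyAllWindows();
    return 0;
}
```

Result: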

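SIFT (Scale-Invariant Feature Transform) feature descriptor

- Scale-space invariance

- Invariance to pixel translation

- Rotation invariance

SIFT feature extraction steps

- Scale-space extremum detection

- Keypoint localization

- Orientation assignment

- Feature descriptor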

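Scale-space extremum detection

- Build the scale space: image pyramid + Gaussian scale space (differences of Gaussians between adjacent layers)

- Search for extrema across three adjacent layers: each sample is compared with its 26 neighbors (8 in its own layer, 9 in the layer above and 9 in the layer below)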
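The 26-neighbor comparison can be sketched as follows, assuming three 3x3 neighborhoods have already been cut from adjacent DoG layers; `Patch3` and `isScaleSpaceExtremum` are illustrative names, not part of OpenCV.

```cpp
#include <array>
#include <initializer_list>

// One 3x3 neighborhood from a single DoG layer.
using Patch3 = std::array<std::array<float, 3>, 3>;

// True if the centre sample of `mid` is strictly greater (or strictly smaller)
// than all 26 neighbors: 8 in its own layer and 9 in each adjacent layer.
bool isScaleSpaceExtremum(const Patch3& below, const Patch3& mid, const Patch3& above) {
    const float v = mid[1][1];
    bool isMax = true, isMin = true;
    for (const Patch3* layer : {&below, &mid, &above}) {
        for (int r = 0; r < 3; ++r) {
            for (int c = 0; c < 3; ++c) {
                if (layer == &mid && r == 1 && c == 1) continue;  // skip the centre itself
                float n = (*layer)[r][c];
                if (n >= v) isMax = false;
                if (n <= v) isMin = false;
            }
        }
    }
    return isMax || isMin;
}
```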

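Keypoint localization

- Refine the extremum position by fitting (Taylor expansion using derivatives)

- Remove low-contrast and low-response candidates (e.g. poorly localized points along edges)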
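A 1-D sketch of the derivative fitting step: fitting a parabola (a second-order Taylor expansion) through the samples at offsets -1, 0 and +1 gives the sub-sample offset -D'/D'' and the interpolated value used for the contrast test. Real SIFT does this jointly in x, y and scale with a 3x3 Hessian; the 1-D simplification, the names and the example threshold (0.03 for intensities in [0, 1], the value from Lowe's paper) are assumptions for illustration.

```cpp
#include <cmath>

struct Refined {
    double offset;  // sub-sample offset of the extremum from the centre sample
    double value;   // interpolated function value at the extremum
};

// Fit a parabola through f(-1), f(0), f(+1) and return its vertex.
// Requires a non-zero second derivative (fm1 - 2*f0 + fp1 != 0).
Refined refine1D(double fm1, double f0, double fp1) {
    double d1 = (fp1 - fm1) / 2.0;         // first derivative (central difference)
    double d2 = fp1 - 2.0 * f0 + fm1;       // second derivative
    double offset = -d1 / d2;               // vertex of the fitted parabola
    double value = f0 + 0.5 * d1 * offset;  // interpolated extremum value
    return {offset, value};
}

// Low-contrast rejection: keep the candidate only if |value| >= threshold.
bool passesContrast(const Refined& r, double threshold) {
    return std::abs(r.value) >= threshold;
}
```

If the offset exceeds 0.5, the extremum lies closer to a neighboring sample, and SIFT re-runs the fit there.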

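Orientation assignment

- Assign a dominant orientation to each keypoint

- Use the Gaussian-smoothed image whose scale is closest to the keypoint's, weighting gradients with a Gaussian window whose sigma is 1.5 times the keypoint scale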
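Orientation assignment builds a 36-bin histogram (10 degrees per bin) of gradient orientations around the keypoint, each vote weighted by gradient magnitude and by the Gaussian window with sigma = 1.5 x scale, and takes the peak. A simplified sketch with hypothetical names; it omits the extra orientations SIFT creates for secondary peaks within 80% of the maximum and the parabolic interpolation of the peak position.

```cpp
#include <array>
#include <cmath>
#include <vector>

struct GradientSample {
    double angleDeg;   // gradient orientation, assumed in [0, 360)
    double magnitude;  // gradient magnitude
    double dist2;      // squared distance from the keypoint, in pixels^2
};

// Dominant orientation: centre of the strongest bin of a 36-bin histogram.
double dominantOrientation(const std::vector<GradientSample>& samples, double scale) {
    std::array<double, 36> hist{};
    const double sigma = 1.5 * scale;
    for (const auto& s : samples) {
        int bin = static_cast<int>(s.angleDeg / 10.0) % 36;
        // Vote weighted by magnitude and by the Gaussian window
        hist[bin] += s.magnitude * std::exp(-s.dist2 / (2.0 * sigma * sigma));
    }
    int best = 0;
    for (int b = 1; b < 36; ++b) {
        if (hist[b] > hist[best]) best = b;
    }
    return best * 10.0 + 5.0;
}
```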

Feature descriptor

- 128-dimensional vector: 4 x 4 subregions x 8 orientation bins

- How the descriptor is encoded
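The 128 dimensions come from a 4 x 4 grid of cells around the keypoint, each with an 8-bin orientation histogram. The sketch below shows one possible index layout and the normalization from Lowe's paper (normalize to unit length, clamp each entry at 0.2, renormalize), which reduces sensitivity to illumination changes; the names are illustrative, and OpenCV's internal layout may differ.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Index of (cell row, cell col, orientation bin) in a 4 x 4 x 8 = 128-D descriptor.
int siftIndex(int cellRow, int cellCol, int bin) {
    return (cellRow * 4 + cellCol) * 8 + bin;
}

// Normalize to unit length, clamp large entries at 0.2, then renormalize.
void normalizeSift(std::vector<float>& d) {
    auto toUnit = [&d]() {
        double n = 0.0;
        for (float v : d) n += static_cast<double>(v) * v;
        n = std::sqrt(n);
        if (n > 0) {
            for (float& v : d) v = static_cast<float>(v / n);
        }
    };
    toUnit();
    for (float& v : d) v = std::min(v, 0.2f);
    toUnit();
}
```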

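Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>

using namespace cv;

int main(int argc, char** argv) {
    // The original image path was lost in extraction; read it from argv[1] instead.
    if (argc < 2) { std::cerr << "usage: sift_features <image>" << std::endl; return -1; }
    Mat src = imread(argv[1]);
    if (src.empty()) return -1;

    // cv::SIFT is part of the main features2d module since OpenCV 4.4
    auto sift = SIFT::create();
    std::vector<KeyPoint> kypts;
    sift->detect(src, kypts);

    Mat result02;
    drawKeypoints(src, kypts, result02, Scalar::all(-1), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

    // Print each keypoint's location, orientation and size
    std::cout << kypts.size() << std::endl;
    for (size_t i = 0; i < kypts.size(); i++) {
        std::cout << "pt: " << kypts[i].pt << " angle: " << kypts[i].angle
                  << " size: " << kypts[i].size << std::endl;
    }

    // One 128-dimensional float descriptor per keypoint
    Mat desc_sift;
    sift->compute(src, kypts, desc_sift);
    std::cout << desc_sift.rows << " x " << desc_sift.cols << std::endl;

    imshow("SIFT keypoints (rich)", result02);
    waitKey(0);
    destroyAllWindows();
    return 0;
}
```

Result: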

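5. Feature Matching

- Feature matching algorithms

- Feature matching functions

- Comparison of feature matching methods

Feature matching algorithms

- Brute-force matching: an exhaustive search that computes the distance from each query descriptor to every candidate and returns the closest one

- FLANN matching: FLANN (Fast Library for Approximate Nearest Neighbors) is an open-source library for approximate nearest-neighbor search in high-dimensional data, released in 2009

- FLANN supports KMeans, KD-tree, KNN, multi-probe LSH and other search/matching algorithms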

| Descriptor | Matching distance |
|---|---|
| SIFT, SURF, and KAZE | L1 or L2 norm (floating-point descriptors) |
| AKAZE, ORB, and BRISK | Hamming distance (binary descriptors) |
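For binary descriptors, the Hamming distance in the table is the number of differing bits: XOR the bytes and count the set bits. A minimal brute-force matcher built on it, in plain C++ with hypothetical names (`BinaryDescriptor`, `Match`), shows what `BFMatcher` with `NORM_HAMMING` and `crossCheck = false` computes:

```cpp
#include <bitset>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

using BinaryDescriptor = std::vector<std::uint8_t>;  // e.g. 32 bytes for ORB

// Number of differing bits between two equal-length binary descriptors.
int hammingDistance(const BinaryDescriptor& a, const BinaryDescriptor& b) {
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dist += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    }
    return dist;
}

struct Match {
    int queryIdx;
    int trainIdx;
    int distance;
};

// For every query descriptor, scan all train descriptors and keep the closest one.
std::vector<Match> bruteForceMatch(const std::vector<BinaryDescriptor>& query,
                                   const std::vector<BinaryDescriptor>& train) {
    std::vector<Match> matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        Match best{static_cast<int>(q), -1, std::numeric_limits<int>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            int d = hammingDistance(query[q], train[t]);
            if (d < best.distance) best = {static_cast<int>(q), static_cast<int>(t), d};
        }
        if (best.trainIdx >= 0) matches.push_back(best);
    }
    return matches;
}
```

The cost is proportional to (number of query descriptors) x (number of train descriptors), which is what FLANN's approximate indexes avoid on large sets.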

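```cpp
// Snippet: assumes `using namespace cv;`, the images box and box_in_scene, their ORB
// keypoints (box_kpts, scene_kpts) and descriptors (box_descriptors, scene_descriptors).

// Brute-force matching with Hamming distance (ORB descriptors are binary)
auto bfMatcher = BFMatcher::create(NORM_HAMMING, false);
std::vector<DMatch> matches;
bfMatcher->match(box_descriptors, scene_descriptors, matches);
Mat img_orb_matches;
drawMatches(box, box_kpts, box_in_scene, scene_kpts, matches, img_orb_matches);
imshow("ORB brute-force matching", img_orb_matches);

// FLANN matching: binary descriptors need an LSH index
// (table_number = 6, key_size = 12, multi_probe_level = 2)
FlannBasedMatcher flannMatcher(makePtr<flann::LshIndexParams>(6, 12, 2));
std::vector<DMatch> flann_matches;
flannMatcher.match(box_descriptors, scene_descriptors, flann_matches);
Mat img_flann_matches;
drawMatches(box, box_kpts, box_in_scene, scene_kpts, flann_matches, img_flann_matches);
namedWindow("FLANN matching", WINDOW_FREERATIO);
imshow("FLANN matching", img_flann_matches);
```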

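DMatch: the feature-match data structure

DMatch fields:

- queryIdx: index of the descriptor in the query set

- trainIdx: index of the matched descriptor in the train set

- distance: distance between the two descriptors

The smaller the distance, the better the match.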
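Several later examples keep only the best fraction of matches (for instance 15%) by sorting on `distance`. A sketch with a hypothetical `SimpleMatch` struct standing in for cv::DMatch (whose `operator<` also compares `distance`):

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct SimpleMatch {
    int queryIdx;
    int trainIdx;
    float distance;
    bool operator<(const SimpleMatch& other) const { return distance < other.distance; }
};

// Sort by distance (best first) and keep the best `rate` fraction, 0 <= rate <= 1.
void keepBestFraction(std::vector<SimpleMatch>& matches, float rate) {
    std::sort(matches.begin(), matches.end());
    auto keep = static_cast<std::size_t>(matches.size() * rate);
    matches.erase(matches.begin() + static_cast<std::ptrdiff_t>(keep), matches.end());
}
```

A fixed fraction is simple but ignores how good the matches actually are; the ratio test used in the stitching example is a common alternative.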

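Comparing OpenCV feature matching methods

Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

int main(int argc, char** argv) {
    // The original image paths were lost in extraction; read them from the command line.
    if (argc < 3) { cerr << "usage: match_compare <book> <book_on_desk>" << endl; return -1; }
    Mat book = imread(argv[1]);
    Mat book_on_desk = imread(argv[2]);
    if (book.empty() || book_on_desk.empty()) return -1;

    vector<KeyPoint> kypts_book;
    vector<KeyPoint> kypts_book_on_desk;
    Mat desc_book, desc_book_on_desk;

    // ORB alternative (binary descriptors):
    // auto orb = ORB::create(500);
    // orb->detectAndCompute(book, Mat(), kypts_book, desc_book);
    // orb->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

    // SIFT (float descriptors), keeping at most 500 features
    auto sift = SIFT::create(500);
    sift->detectAndCompute(book, Mat(), kypts_book, desc_book);
    sift->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

    vector<DMatch> matches;

    // Brute-force alternative for ORB:
    // Mat result;
    // auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);
    // bf_matcher->match(desc_book, desc_book_on_desk, matches);
    // drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, result);

    // FLANN matching: the default KD-tree index suits float descriptors such as SIFT;
    // for ORB use FlannBasedMatcher(makePtr<flann::LshIndexParams>(6, 12, 2)) instead
    FlannBasedMatcher flannMatcher;
    flannMatcher.match(desc_book, desc_book_on_desk, matches);

    Mat img_flann_matches;
    drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, img_flann_matches);
    namedWindow("SIFT-FLANN matching", WINDOW_FREERATIO);
    imshow("SIFT-FLANN matching", img_flann_matches);
    waitKey(0);
    destroyAllWindows();
    return 0;
}
```

Result:

1. ORB descriptor matching result

2. SIFT descriptor matching result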

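6. Homography / Perspective Transform

```cpp
Mat cv::findHomography(
    InputArray srcPoints,               // point coordinates in the source plane
    InputArray dstPoints,               // corresponding coordinates in the target plane
    int method = 0,                     // 0 (least squares), RANSAC, LMEDS or RHO
    double ransacReprojThreshold = 3,   // max reprojection error (px) for an inlier (RANSAC/RHO)
    OutputArray mask = noArray(),       // optional inlier/outlier mask
    const int maxIters = 2000,          // maximum number of RANSAC iterations
    const double confidence = 0.995     // confidence level, between 0 and 1
);
```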

Fitting methods:

- Least squares (0): uses all point pairs

- Random sample consensus (RANSAC)

- Progressive sample consensus (RHO)
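Why RANSAC needs an iteration budget can be shown with the standard estimate N = log(1 - p) / log(1 - w^s), where p is the desired confidence, w the inlier ratio and s the minimal sample size (4 point pairs for a homography). A minimal sketch; OpenCV's RANSAC adapts its iteration count in a similar spirit, capped by `maxIters`, but the function below is only an illustration.

```cpp
#include <cmath>

// Iterations needed so that, with probability `confidence`, at least one
// random minimal sample of `sampleSize` pairs contains only inliers.
int ransacIterations(double confidence, double inlierRatio, int sampleSize) {
    double allInliers = std::pow(inlierRatio, sampleSize);  // P(sample is outlier-free)
    if (allInliers >= 1.0) return 1;                         // no outliers: one sample suffices
    return static_cast<int>(std::ceil(std::log(1.0 - confidence) / std::log(1.0 - allInliers)));
}
```

For example, with 50% inliers and p = 0.99 the estimate is 72 iterations, well under the default cap of 2000.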

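Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
#include <string>
#include <vector>

using namespace cv;
using namespace std;

int main(int argc, char** argv) {
    // The input path and the preprocessing that produced `binary` were lost in
    // extraction; argv[1] and Otsu thresholding are assumptions made here.
    if (argc < 2) { cerr << "usage: rectify <image>" << endl; return -1; }
    Mat input = imread(argv[1]);
    if (input.empty()) return -1;
    Mat gray, binary;
    cvtColor(input, gray, COLOR_BGR2GRAY);
    threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

    // Find the outer contours and keep the one with the largest area
    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(binary, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);
    if (contours.empty()) return -1;
    int index = 0;
    for (size_t i = 0; i < contours.size(); i++) {
        if (contourArea(contours[i]) > contourArea(contours[index])) {
            index = static_cast<int>(i);
        }
    }

    // Approximate the largest contour by a polygon; a quadrilateral is expected
    vector<Point> approxCurve;
    approxPolyDP(contours[index], approxCurve, contours[index].size() / 10, true);
    if (approxCurve.size() != 4) {
        cerr << "expected 4 corners, got " << approxCurve.size() << endl;
        return -1;
    }

    vector<Point2f> srcPts;
    vector<Point2f> dstPts;
    for (size_t i = 0; i < approxCurve.size(); i++) {
        Point pt = approxCurve[i];
        srcPts.push_back(Point2f(pt));
        circle(input, pt, 12, Scalar(0, 0, 255), 2, 8, 0);
        putText(input, to_string(i), pt, FONT_HERSHEY_SIMPLEX, 0.5, Scalar(255, 0, 0), 1);
    }
    // Target corners of a 585 x 760 output; their order must match the order
    // in which approxPolyDP returned the source corners
    dstPts.push_back(Point2f(0, 0));
    dstPts.push_back(Point2f(0, 760));
    dstPts.push_back(Point2f(585, 760));
    dstPts.push_back(Point2f(585, 0));

    Mat h = findHomography(srcPts, dstPts, RANSAC);     // compute the homography matrix
    Mat result;
    warpPerspective(input, result, h, Size(585, 760));  // rectify the target region of the original image
    namedWindow("result", WINDOW_FREERATIO);
    imshow("result", result);

    drawContours(input, contours, index, Scalar(0, 255, 0), 2, 8);
    namedWindow("contours", WINDOW_FREERATIO);
    imshow("contours", input);
    waitKey(0);
    return 0;
}
```

Result: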

7. Matching-Based Object Detection

- Feature-based matching and object detection

- ORB/AKAZE/SIFT

- Brute-force/FLANN

- Perspective transform

- Detection box
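The detection box comes from mapping the template's four corners through the homography, which is what `perspectiveTransform` does point by point: multiply [x, y, 1]^T by H and divide by the third component. A plain C++ sketch with hypothetical types `Matrix3` and `Pt`:

```cpp
#include <array>

using Matrix3 = std::array<std::array<double, 3>, 3>;

struct Pt {
    double x, y;
};

// Map a point through a 3x3 homography in homogeneous coordinates.
Pt applyHomography(const Matrix3& H, Pt p) {
    double x = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    double y = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {x / w, y / w};  // divide by the homogeneous coordinate
}

// Project the template's corners (clockwise from top-left) into the scene.
std::array<Pt, 4> projectCorners(const Matrix3& H, double width, double height) {
    const std::array<Pt, 4> corners{{{0, 0}, {width, 0}, {width, height}, {0, height}}};
    std::array<Pt, 4> out{};
    for (int i = 0; i < 4; ++i) out[i] = applyHomography(H, corners[i]);
    return out;
}
```

Drawing lines between consecutive projected corners, as the example below does with `line`, yields the detection quadrilateral.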

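Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <algorithm>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

int main(int argc, char** argv) {
    // The original image paths were lost in extraction; read them from the command line.
    if (argc < 3) { cerr << "usage: find_object <book> <book_on_desk>" << endl; return -1; }
    Mat book = imread(argv[1]);
    Mat book_on_desk = imread(argv[2]);
    if (book.empty() || book_on_desk.empty()) return -1;

    auto orb = ORB::create();
    vector<KeyPoint> kypts_book;
    vector<KeyPoint> kypts_book_on_desk;
    Mat desc_book, desc_book_on_desk;
    orb->detectAndCompute(book, Mat(), kypts_book, desc_book);
    orb->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

    // Brute-force Hamming matching, then keep the best 15% of matches
    auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);
    vector<DMatch> matches;
    bf_matcher->match(desc_book, desc_book_on_desk, matches);
    float good_rate = 0.15f;
    int num_good_matches = static_cast<int>(matches.size() * good_rate);
    cout << num_good_matches << endl;
    sort(matches.begin(), matches.end());  // DMatch sorts by distance
    matches.erase(matches.begin() + num_good_matches, matches.end());
    if (matches.size() < 4) { cerr << "not enough matches for a homography" << endl; return -1; }

    Mat result;
    drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, result);

    // Matched point pairs: query = book (object), train = book_on_desk (scene)
    vector<Point2f> obj_pts;
    vector<Point2f> scene_pts;
    for (size_t t = 0; t < matches.size(); t++) {
        obj_pts.push_back(kypts_book[matches[t].queryIdx].pt);
        scene_pts.push_back(kypts_book_on_desk[matches[t].trainIdx].pt);
    }
    Mat h = findHomography(obj_pts, scene_pts, RANSAC);  // homography h: object -> scene
    if (h.empty()) return -1;

    // Map the book's four corners into the scene and draw the detection box
    vector<Point2f> srcPts = { Point2f(0, 0), Point2f(book.cols, 0),
                               Point2f(book.cols, book.rows), Point2f(0, book.rows) };
    vector<Point2f> dstPts(4);
    perspectiveTransform(srcPts, dstPts, h);
    for (int i = 0; i < 4; i++) {
        line(book_on_desk, dstPts[i], dstPts[(i + 1) % 4], Scalar(0, 0, 255), 2, 8, 0);
    }

    namedWindow("Brute-force matching", WINDOW_FREERATIO);
    imshow("Brute-force matching", result);
    namedWindow("Object detection", WINDOW_FREERATIO);
    imshow("Object detection", book_on_desk);
    //imwrite("D:/object_find.png", book_on_desk);
    waitKey(0);
    return 0;
}
```

Result: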

8. Document Alignment

- Template form/document

- Feature matching and alignment

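Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <algorithm>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

int main(int argc, char** argv) {
    // The original image paths were lost in extraction; read them from the command line.
    if (argc < 3) { cerr << "usage: align_doc <template> <document>" << endl; return -1; }
    Mat ref_img = imread(argv[1]);  // template form/document
    Mat img = imread(argv[2]);      // document to align
    if (ref_img.empty() || img.empty()) return -1;

    auto orb = ORB::create();
    vector<KeyPoint> kypts_ref;
    vector<KeyPoint> kypts_img;
    Mat desc_ref, desc_img;
    orb->detectAndCompute(ref_img, Mat(), kypts_ref, desc_ref);
    orb->detectAndCompute(img, Mat(), kypts_img, desc_img);

    // Query = document to align, train = template
    auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);
    vector<DMatch> matches;
    bf_matcher->match(desc_img, desc_ref, matches);

    // Keep the best 15% of matches
    float good_rate = 0.15f;
    int num_good_matches = static_cast<int>(matches.size() * good_rate);
    cout << num_good_matches << endl;
    sort(matches.begin(), matches.end());
    matches.erase(matches.begin() + num_good_matches, matches.end());
    if (matches.size() < 4) { cerr << "not enough matches" << endl; return -1; }

    // drawMatches expects (query image, query keypoints, train image, train keypoints)
    Mat result;
    drawMatches(img, kypts_img, ref_img, kypts_ref, matches, result);
    imshow("matches", result);
    imwrite("D:/images/result_doc.png", result);

    // Extract the locations of the good matches
    vector<Point2f> points1, points2;
    for (size_t i = 0; i < matches.size(); i++) {
        points1.push_back(kypts_img[matches[i].queryIdx].pt);
        points2.push_back(kypts_ref[matches[i].trainIdx].pt);
    }
    // Prefer RANSAC: it is far more robust to mismatched pairs than plain least squares
    Mat h = findHomography(points1, points2, RANSAC);
    if (h.empty()) return -1;

    // Warp the document into the template's frame; anything that lands outside
    // ref_img.size() is cropped away
    Mat aligned_doc;
    warpPerspective(img, aligned_doc, h, ref_img.size());
    imwrite("D:/images/aligned_doc.png", aligned_doc);
    waitKey(0);
    destroyAllWindows();
    return 0;
}
```

Result: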

9. Image Stitching

- Feature detection and matching

- Image alignment and transformation

- Blending at the seam (edge blending)
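The edge blending can be reduced to a linear ramp across the overlap: the left image's weight falls from 1 to 0 while the right image's weight rises from 0 to 1, so the two weights always sum to 1. A one-row sketch in plain C++, assuming the overlap columns [start, end) are already known (the `linspace` helper in the code below derives them per row from a mask); the names are illustrative.

```cpp
#include <vector>

// Left-image weight at column x for an overlap [start, end): 1 at start,
// 0 at end - 1, linear in between. The right image gets 1 - weight.
double leftWeight(int x, int start, int end) {
    if (x <= start) return 1.0;
    if (x >= end - 1) return 0.0;
    return 1.0 - static_cast<double>(x - start) / (end - 1 - start);
}

// Blend one row of single-channel pixels (left and right have equal length).
std::vector<double> blendRow(const std::vector<double>& left, const std::vector<double>& right,
                             int start, int end) {
    std::vector<double> out(left.size());
    for (int x = 0; x < static_cast<int>(left.size()); ++x) {
        double w = leftWeight(x, start, end);
        out[x] = w * left[x] + (1.0 - w) * right[x];
    }
    return out;
}
```

Because the weights sum to 1 at every pixel, brightness stays continuous across the seam instead of showing a hard edge.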

Implementation:

```cpp
#include <opencv2/opencv.hpp>
#include <cstdio>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

void generate_mask(Mat &img, Mat &mask);
void linspace(Mat &image, float begin, float finish, int w1, Mat &mask);

int main(int argc, char** argv) {
    // The original image paths were lost in extraction; read them from the command line.
    if (argc < 3) { cerr << "usage: stitch <left> <right>" << endl; return -1; }
    Mat left = imread(argv[1]);
    Mat right = imread(argv[2]);
    if (left.empty() || right.empty()) return -1;

    vector<KeyPoint> keypoints_right, keypoints_left;
    Mat descriptors_right, descriptors_left;
    auto detector = AKAZE::create();
    detector->detectAndCompute(left, Mat(), keypoints_left, descriptors_left);
    detector->detectAndCompute(right, Mat(), keypoints_right, descriptors_right);

    // Brute-force matching; AKAZE's default descriptors are binary, so use Hamming
    auto matcher = DescriptorMatcher::create(DescriptorMatcher::BRUTEFORCE_HAMMING);
    // Find the 2 nearest neighbors of each descriptor, then apply Lowe's ratio test
    vector<vector<DMatch>> knn_matches;
    matcher->knnMatch(descriptors_left, descriptors_right, knn_matches, 2);
    const float ratio_thresh = 0.7f;
    vector<DMatch> good_matches;
    for (size_t i = 0; i < knn_matches.size(); i++) {
        if (knn_matches[i].size() == 2 &&
            knn_matches[i][0].distance < ratio_thresh * knn_matches[i][1].distance) {
            good_matches.push_back(knn_matches[i][0]);
        }
    }
    printf("total good match points : %zu\n", good_matches.size());
    if (good_matches.size() < 4) return -1;

    Mat dst;
    drawMatches(left, keypoints_left, right, keypoints_right, good_matches, dst);
    imshow("output", dst);
    imwrite("D:/images/good_matches.png", dst);

    // Collect the coordinates of all good matches
    vector<Point2f> left_pts, right_pts;
    for (size_t i = 0; i < good_matches.size(); i++) {
        left_pts.push_back(keypoints_left[good_matches[i].queryIdx].pt);
        right_pts.push_back(keypoints_right[good_matches[i].trainIdx].pt);
    }
    // Registration: align the right image to the first (left) image
    Mat H = findHomography(right_pts, left_pts, RANSAC);
    if (H.empty()) return -1;

    // Panorama size
    int h = max(left.rows, right.rows);
    int w = left.cols + right.cols;
    // Left image copied into the top-left of an empty canvas
    Mat panorama_01 = Mat::zeros(Size(w, h), CV_8UC3);
    left.copyTo(panorama_01(Rect(0, 0, left.cols, left.rows)));
    imwrite("D:/images/panorama_01.png", panorama_01);
    // Right image warped into the left image's frame
    Mat panorama_02;
    warpPerspective(right, panorama_02, H, Size(w, h));
    imwrite("D:/images/panorama_02.png", panorama_02);

    // Mask: 255 where the warped right image is empty (black), 0 where it has content
    Mat mask = Mat::zeros(Size(w, h), CV_8UC1);
    generate_mask(panorama_02, mask);

    // Weight maps initialized to 1: the left weight ramps 1 -> 0 and the right
    // weight 0 -> 1 across the overlap, which ends at column left.cols
    Mat mask1 = Mat::ones(Size(w, h), CV_32FC1);
    Mat mask2 = Mat::ones(Size(w, h), CV_32FC1);
    linspace(mask1, 1, 0, left.cols, mask);
    linspace(mask2, 0, 1, left.cols, mask);
    namedWindow("mask1", WINDOW_FREERATIO);
    imshow("mask1", mask1);
    namedWindow("mask2", WINDOW_FREERATIO);
    imshow("mask2", mask2);

    // Weight the left part (replicate the weights to 3 channels)
    Mat m1, m2;
    merge(vector<Mat>{mask1, mask1, mask1}, m1);
    panorama_01.convertTo(panorama_01, CV_32F);
    multiply(panorama_01, m1, panorama_01);
    // Weight the right part
    merge(vector<Mat>{mask2, mask2, mask2}, m2);
    panorama_02.convertTo(panorama_02, CV_32F);
    multiply(panorama_02, m2, panorama_02);

    // Combine into the panorama
    Mat panorama;
    add(panorama_01, panorama_02, panorama);
    panorama.convertTo(panorama, CV_8U);
    imwrite("D:/images/panorama.png", panorama);
    waitKey(0);
    return 0;
}

// Mark pixels of the warped image that are pure black (no content) with 255
void generate_mask(Mat &img, Mat &mask) {
    for (int row = 0; row < img.rows; row++) {
        for (int col = 0; col < img.cols; col++) {
            Vec3b p = img.at<Vec3b>(row, col);
            if (p[0] == 0 && p[1] == 0 && p[2] == 0) {
                mask.at<uchar>(row, col) = 255;
            }
        }
    }
    imwrite("D:/images/mask.png", mask);
}

// For the mask's 0 region, compute each pixel's weight row by row: find the first
// column where mask == 0 and ramp linearly from `begin` to `finish` up to column w1
void linspace(Mat& image, float begin, float finish, int w1, Mat &mask) {
    for (int i = 0; i < image.rows; i++) {
        int offsetx = -1;  // -1: overlap start not found yet in this row
        float interval = 0;
        float delta = 0;
        for (int j = 0; j < image.cols; j++) {
            int pv = mask.at<uchar>(i, j);
            if (pv == 0 && offsetx < 0) {
                offsetx = j;
                delta = static_cast<float>(w1 - offsetx);  // overlap width in this row
                if (delta <= 1) break;                      // no usable overlap
                interval = (finish - begin) / (delta - 1);  // change per pixel
                image.at<float>(i, j) = begin;
            } else if (pv == 0 && offsetx >= 0 && (j - offsetx) < delta) {
                image.at<float>(i, j) = begin + (j - offsetx) * interval;
            }
        }
    }
}
```

Result:

1. Mask of the overlapping region

2. Images before stitching

3. Stitched result

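10. Barcode Label Localization and Presence Check

Implementation:

ORBDetector.h

```cpp
#pragma once

#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>

// The class declaration was lost in extraction; the members below are
// reconstructed from ORBDetector.cpp (the public/private split is an assumption).
class ORBDetector {
public:
    ORBDetector();
    ~ORBDetector();
    void initORB(cv::Mat &refImg);
    bool detect_and_analysis(cv::Mat &image, cv::Mat &aligned);

private:
    cv::Ptr<cv::ORB> orb = cv::ORB::create(500);
    std::vector<cv::KeyPoint> tpl_kps;
    cv::Mat tpl_descriptors;
    cv::Mat tpl;
};
```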

ORBDetector.cpp

```cpp
#include "ORBDetector.h"
#include <algorithm>

ORBDetector::ORBDetector() {
    std::cout << "create orb detector..." << std::endl;
}

ORBDetector::~ORBDetector() {
    this->tpl_descriptors.release();
    this->tpl_kps.clear();
    this->orb.release();
    this->tpl.release();
    std::cout << "destroy instance..." << std::endl;
}

// Compute and cache the template's keypoints and descriptors (on grayscale)
void ORBDetector::initORB(cv::Mat &refImg) {
    if (!refImg.empty()) {
        cv::Mat tplGray;
        cv::cvtColor(refImg, tplGray, cv::COLOR_BGR2GRAY);
        orb->detectAndCompute(tplGray, cv::Mat(), this->tpl_kps, this->tpl_descriptors);
        tplGray.copyTo(this->tpl);
    }
}

// Returns true if the label is judged present; `aligned` receives the image
// warped into the template frame and rotated 90 degrees counterclockwise
bool ORBDetector::detect_and_analysis(cv::Mat &image, cv::Mat &aligned) {
    // Fraction of matches to keep
    const float GOOD_MATCH_PERCENT = 0.15f;
    bool found = true;

    // Process each image in the dataset
    cv::Mat img2Gray;
    cv::cvtColor(image, img2Gray, cv::COLOR_BGR2GRAY);
    std::vector<cv::KeyPoint> img_kps;
    cv::Mat img_descriptors;
    orb->detectAndCompute(img2Gray, cv::Mat(), img_kps, img_descriptors);
    if (img_descriptors.empty() || tpl_descriptors.empty()) return false;

    std::vector<cv::DMatch> matches;
    cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming");
    // auto flann_matcher = cv::FlannBasedMatcher(cv::makePtr<cv::flann::LshIndexParams>(6, 12, 2));
    matcher->match(img_descriptors, this->tpl_descriptors, matches, cv::Mat());

    // Sort matches by distance (best first) and remove the weaker ones
    std::sort(matches.begin(), matches.end());
    const int numGoodMatches = static_cast<int>(matches.size() * GOOD_MATCH_PERCENT);
    matches.erase(matches.begin() + numGoodMatches, matches.end());
    if (matches.size() < 4) return false;  // too few pairs for a homography

    // If even the best match is far away (Hamming distance > 30), treat the label as absent
    if (matches[0].distance > 30) {
        found = false;
    }

    // Extract the locations of the good matches
    std::vector<cv::Point2f> points1, points2;
    for (size_t i = 0; i < matches.size(); i++) {
        points1.push_back(img_kps[matches[i].queryIdx].pt);
        points2.push_back(tpl_kps[matches[i].trainIdx].pt);
    }
    cv::Mat H = cv::findHomography(points1, points2, cv::RANSAC);
    if (H.empty()) return false;
    cv::Mat im2Reg;
    cv::warpPerspective(image, im2Reg, H, tpl.size());

    // Rotate 90 degrees counterclockwise
    cv::Mat result;
    cv::rotate(im2Reg, result, cv::ROTATE_90_COUNTERCLOCKWISE);
    result.copyTo(aligned);
    return found;
}
```

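object_analysis.cpp

```cpp
#include "ORBDetector.h"
#include <cstdio>
#include <iostream>
#include <vector>

using namespace cv;

int main(int argc, char** argv) {
    // The template-loading lines were lost in extraction; the template path is
    // taken from argv[1] here (an assumption).
    if (argc < 2) { std::cerr << "usage: object_analysis <template>" << std::endl; return -1; }
    Mat refImg = imread(argv[1]);
    if (refImg.empty()) return -1;
    ORBDetector orb_detector;
    orb_detector.initORB(refImg);

    std::vector<cv::String> files;
    glob("D:/facedb/orb_barcode", files);
    cv::Mat temp;
    for (const auto &file : files) {
        std::cout << file << std::endl;
        cv::Mat image = imread(file);
        if (image.empty()) continue;
        int64 start = getTickCount();
        bool OK = orb_detector.detect_and_analysis(image, temp);
        double ct = (getTickCount() - start) / getTickFrequency();
        printf("decode time: %.5f ms\n", ct * 1000);
        std::cout << "label present: " << (OK ? "yes" : "no") << std::endl;
        if (!temp.empty()) imshow("temp", temp);
        waitKey(0);
    }
    return 0;
}
```

Result:

1. Test image

2. Template image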

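11. DNN Overview

Introduction to the DNN module:

- DNN: Deep Neural Network

- Released starting with OpenCV 3.3

- Supports object detection models trained on the VOC and COCO datasets

- Including SSD/Faster-RCNN/YOLOv4, etc.

- Supports custom object detection

- Supports face detection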

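Key functions:

- Read the model

- Convert the input data and set it as the network input

- Run inference and get the output

```cpp
// Read the model (use the loader that matches the training framework)
Net net = readNetFromTensorflow(model, config);   // TensorFlow: frozen graph + text config
// Net net = readNetFromCaffe(config, model);     // Caffe: .prototxt + .caffemodel
// Net net = readNetFromONNX(onnxfile);           // ONNX

// Read the data and convert it to a 4-D blob (NCHW)
Mat image = imread("D:/images/example.png");
Mat blob_img = blobFromImage(image, scalefactor, size, mean, swapRB);
net.setInput(blob_img);

// Run inference
Mat result = net.forward();
```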
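What `blobFromImage` does to the pixels can be sketched in plain C++, ignoring the resize/crop step: subtract the mean, multiply by `scalefactor`, optionally swap the R and B channels, and reorder from interleaved HWC to planar NCHW with N = 1. The function name and raw-buffer interface are assumptions for illustration; as in OpenCV, `mean` here is given in the output channel order.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert an interleaved 8-bit 3-channel image (height x width x 3) into a
// planar float blob of shape 1 x 3 x height x width.
std::vector<float> toBlobNCHW(const std::vector<std::uint8_t>& hwc, int height, int width,
                              double scalefactor, const float mean[3], bool swapRB) {
    std::vector<float> blob(3 * static_cast<std::size_t>(height) * width);
    for (int c = 0; c < 3; ++c) {
        int src = swapRB ? 2 - c : c;  // output channel c reads input channel src
        for (int y = 0; y < height; ++y) {
            for (int x = 0; x < width; ++x) {
                float v = hwc[(static_cast<std::size_t>(y) * width + x) * 3 + src];
                blob[(static_cast<std::size_t>(c) * height + y) * width + x] =
                    static_cast<float>((v - mean[c]) * scalefactor);  // (value - mean) * scale
            }
        }
    }
    return blob;
}
```

For example, a typical setting for 8-bit input is `scalefactor = 1.0 / 255` with zero mean; the right values depend on how the model was trained.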

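Post-processing / output parsing:

- Different networks produce different outputs

- How to parse the result depends on the model's output format

- Parsing for the SSD/Faster-RCNN object detection networks

Parsing SSD output:

- http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v1_coco_2017_11_17.tar.gz

- Output shape 1 x 1 x N x 7 (DetectionOutput layer)

- [image_id, label, conf, x_min, y_min, x_max, y_max]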

Parsing Faster-RCNN output:

- http://download.tensorflow.org/models/object_detection/faster_rcnn_inception_v2_coco_2018_01_28.tar.gz

- Output shape 1 x 1 x N x 7 (DetectionOutput layer)

- [image_id, label, conf, x_min, y_min, x_max, y_max]
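Parsing the 1 x 1 x N x 7 output comes down to walking rows of seven floats. The sketch below works on a flattened buffer with hypothetical names and assumes the box coordinates are normalized to [0, 1] (typical for these TensorFlow models, but check your model); with a real cv::Mat the rows can be viewed as `Mat(out.size[2], out.size[3], CV_32F, out.ptr<float>())`.

```cpp
#include <cstddef>
#include <vector>

struct Detection {
    int label;
    float conf;
    int left, top, right, bottom;  // pixel coordinates in the original image
};

// Each row: [image_id, label, conf, x_min, y_min, x_max, y_max].
std::vector<Detection> parseDetections(const std::vector<float>& out, int imgW, int imgH,
                                       float confThreshold) {
    std::vector<Detection> dets;
    for (std::size_t i = 0; i + 7 <= out.size(); i += 7) {
        const float* r = &out[i];
        if (r[2] < confThreshold) continue;  // drop low-confidence rows
        dets.push_back({static_cast<int>(r[1]), r[2],
                        static_cast<int>(r[3] * imgW), static_cast<int>(r[4] * imgH),
                        static_cast<int>(r[5] * imgW), static_cast<int>(r[6] * imgH)});
    }
    return dets;
}
```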

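Parsing YOLOv4 output:

- Parse several output layers, 80 classes; each output is N x W x H x D

- Each box predicts 4 box values + 1 objectness score + 80 class scores = 85 values; there are three output layers

- Box values: centerx, centery, width, height

- 13 x 13 x 255 = 13 x 13 x 3 x 85 (3 anchor boxes per grid cell)

- NMS (non-maximum suppression) removes overlapping boxes

- https://github.com/AlexeyAB/darknet/wiki/YOLOv4-model-zoo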
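YOLO rows give boxes as (centerx, centery, width, height), and overlapping detections of the same object are removed with NMS. A plain C++ sketch of the conversion to a top-left box (assuming normalized coordinates), IoU and greedy NMS, with illustrative names; in OpenCV itself, `cv::dnn::NMSBoxes` performs this step. The `static_assert` checks the 255 = 3 x 85 channel arithmetic from the list above.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

static_assert(3 * (4 + 1 + 80) == 255, "3 anchors x (4 box + 1 objectness + 80 classes)");

struct Box {
    float x, y, w, h;  // top-left corner and size, in pixels
};

// Convert a normalized (centerx, centery, width, height) row to a pixel box.
Box fromCenter(float cx, float cy, float w, float h, int imgW, int imgH) {
    return {(cx - w / 2) * imgW, (cy - h / 2) * imgH, w * imgW, h * imgH};
}

// Intersection over union of two boxes.
float iou(const Box& a, const Box& b) {
    float x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    float x2 = std::min(a.x + a.w, b.x + b.w), y2 = std::min(a.y + a.h, b.y + b.h);
    float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0 ? inter / uni : 0.0f;
}

// Greedy NMS: visit boxes by descending score; keep a box only if its IoU with
// every box kept so far is <= iouThreshold. Returns indices of kept boxes.
std::vector<int> nms(const std::vector<Box>& boxes, const std::vector<float>& scores,
                     float iouThreshold) {
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(), [&](int a, int b) { return scores[a] > scores[b]; });
    std::vector<int> keep;
    for (int i : order) {
        bool suppressed = false;
        for (int k : keep) {
            if (iou(boxes[i], boxes[k]) > iouThreshold) { suppressed = true; break; }
        }
        if (!suppressed) keep.push_back(i);
    }
    return keep;
}
```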