
Comparing memory copy speeds in the kernel

Preface

I'm not sure whether this approach is sound; comments from more experienced readers are welcome.

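This experiment covers the following tasks:

1 Allocate two kernel buffers, abuf and bbuf, each 1280*800 bytes

2 Run memcpy(abuf,bbuf,1280*800) and measure the time taken

3 Run memcpy(abuf,bbuf,1280*800) in a timer handler and measure the time taken

4 Copy the data between the two buffers with dmaengine and measure the time of each copy, again over ten runs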


I. Functions involved

Allocate memory with __get_free_pages

#define CSS_DMA_IMAGE_SIZE (1280*800)

css_dev->buf_size = CSS_DMA_IMAGE_SIZE;

css_dev->buf_size_order = get_order(CSS_DMA_IMAGE_SIZE );

p = (void*)__get_free_pages(GFP_KERNEL|GFP_DMA,css_dev->buf_size_order);

if (!p) { /* __get_free_pages returns 0 on failure, never an ERR_PTR */

DEBUG_CSS("__get_free_pages error");

return -ENOMEM;

}

Copy the memory with memcpy in the driver's initialization function:

memcpy(css_dev->dst_buf,css_dev->src_buf,css_dev->buf_size);
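As a rough check on what the allocation above actually requests: `get_order()` rounds the size up to a power-of-two number of pages, so each 1280*800-byte buffer ends up somewhat larger than asked for. The sketch below is a user-space stand-in, not the kernel's implementation; the names `order_for_size` and `bytes_for_order` are hypothetical, and the 4 KiB page size is an assumption about the target.

```c
#include <assert.h>
#include <stddef.h>

/* Smallest order such that (page_size << order) >= size. */
unsigned int order_for_size(size_t size, size_t page_size)
{
    unsigned int order = 0;
    size_t span = page_size;

    assert(size > 0 && page_size > 0);
    while (span < size) {
        span <<= 1;   /* double the span for each extra order */
        order++;
    }
    return order;
}

/* Number of bytes actually reserved by an allocation of the given order. */
size_t bytes_for_order(unsigned int order, size_t page_size)
{
    return page_size << order;
}
```

Under that assumption, 1,024,000 bytes needs 250 pages, which rounds up to order 8, that is 256 pages or 1 MiB per buffer.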

Measuring the time

struct timeval {

__kernel_time_t tv_sec; /* seconds */

__kernel_suseconds_t tv_usec; /* microseconds */

};

struct timeval tv_start,tv_end;

do_gettimeofday(&tv_start);

memcpy(css_dev->dst_buf,css_dev->src_buf,css_dev->buf_size);

do_gettimeofday(&tv_end);DEBUG_CSS("used time = %d us",

tv_end.tv_sec - tv_start.tv_sec)*1000000 +

tv_end.tv_usec - tv_start.tv_usec

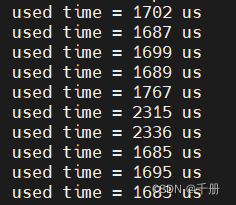

);测试结果,如下图,最小值1683微秒,最大值2315微秒。
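The elapsed-time arithmetic above is easy to get subtly wrong: the microsecond difference can be negative when the seconds field rolls over, and the result is a `long`, not an `int`. Here is a minimal user-space sketch of the same calculation, where `struct sample_time` and `elapsed_us` are hypothetical names standing in for `struct timeval` and the inline expression:

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for struct timeval. */
struct sample_time {
    int64_t sec;   /* seconds */
    int64_t usec;  /* microseconds, 0..999999 */
};

/* Elapsed microseconds between two samples; end must not precede start. */
int64_t elapsed_us(struct sample_time start, struct sample_time end)
{
    assert(end.sec >= start.sec);
    /* The usec term may be negative; the sec term compensates for it. */
    return (end.sec - start.sec) * 1000000 + (end.usec - start.usec);
}
```

For example, a start of 1 s 999000 µs and an end of 2 s 1000 µs gives 2000 µs. On kernels from 5.0 onward `do_gettimeofday()` is no longer available, and `ktime_get()` with `ktime_us_delta()` would be the usual replacement.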

II. Measuring memcpy time in a timer

Copy the memory with memcpy inside a timer function:

- static void __maybe_unused css_timer_function(unsigned long arg)

- {

- struct _css_dev_ *css_dev = (struct _css_dev_ *)arg;

- static int count = 0;

- struct timeval tv_start,tv_end;

- if(count < 10){

- do_gettimeofday(&tv_start);

- memcpy(css_dev->dst_buf,css_dev->src_buf,css_dev->buf_size);

- do_gettimeofday(&tv_end);

- DEBUG_CSS("used time = %ld us",

- (tv_end.tv_sec - tv_start.tv_sec)*1000000 + (tv_end.tv_usec - tv_start.tv_usec));

- count++;

- mod_timer(&css_dev->timer,jiffies + msecs_to_jiffies(1000));

- }

- }

- init_timer(&css_dev->timer);

- css_dev->timer.function = css_timer_function;

- css_dev->timer.data = (unsigned long)css_dev;

- mod_timer(&css_dev->timer,jiffies + msecs_to_jiffies(1000));

The results show that the memcpy time is fairly stable when the copy runs in the timer.
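One way to put a number on "stable" is to record the per-run times and compare their minimum, maximum, and mean. The helper below is a user-space sketch with hypothetical names (`struct run_stats`, `summarize_runs`); it is not part of the driver.

```c
#include <assert.h>
#include <stddef.h>

/* Summary of a series of timing samples, all in microseconds. */
struct run_stats {
    long min_us;
    long max_us;
    long mean_us;  /* integer mean, truncated */
};

struct run_stats summarize_runs(const long *samples_us, size_t count)
{
    struct run_stats s;
    long long sum = 0;
    size_t i;

    assert(samples_us != NULL && count > 0);
    s.min_us = s.max_us = samples_us[0];
    for (i = 0; i < count; i++) {
        if (samples_us[i] < s.min_us)
            s.min_us = samples_us[i];
        if (samples_us[i] > s.max_us)
            s.max_us = samples_us[i];
        sum += samples_us[i];
    }
    s.mean_us = (long)(sum / (long long)count);
    return s;
}
```

A small gap between `min_us` and `max_us` relative to `mean_us` would back up the claim; for comparison, the runs in the init function ranged from 1683 to 2315 µs.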

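III. Measuring dmaengine time

Source code:

The experiment is equivalent to memcpy(css_dev->dst_buf,css_dev->src_buf,css_dev->buf_size);

When I first used dmaengine, testing showed that src and dst did not match after the copy; after several rounds of changes the data finally came out correct.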
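Because the first DMA attempts left src and dst different, it helps to check every byte after each transfer, which is what the driver does with its fill patterns (5 for the source, 6 for the destination). Here is a stand-alone sketch of that check, using the hypothetical helper name `first_mismatch`:

```c
#include <assert.h>
#include <stddef.h>

/*
 * Returns the index of the first byte in buf that differs from
 * expected, or -1 if all len bytes match.
 */
long first_mismatch(const unsigned char *buf, size_t len, unsigned char expected)
{
    size_t i;

    assert(buf != NULL);
    for (i = 0; i < len; i++) {
        if (buf[i] != expected)
            return (long)i;
    }
    return -1;
}
```

A common cause of such mismatches with streaming DMA mappings is the CPU reading stale cached data before the buffer has been unmapped or synced for the CPU. The original text does not say whether that was the cause here.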

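- /* The header names were lost from the original listing; the headers below are the ones this code appears to need. */

- #include <linux/module.h>

- #include <linux/kernel.h>

- #include <linux/init.h>

- #include <linux/fs.h>

- #include <linux/miscdevice.h>

- #include <linux/platform_device.h>

- #include <linux/of.h>

- #include <linux/mm.h>

- #include <linux/gfp.h>

- #include <linux/io.h>

- #include <linux/spinlock.h>

- #include <linux/mutex.h>

- #include <linux/completion.h>

- #include <linux/time.h>

- #include <linux/dmaengine.h>

- #include <linux/dma-mapping.h>

- #include <linux/platform_data/dma-imx.h>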

- #define DEBUG_CSS(format,...)\

- printk(KERN_INFO"info:%s:%s:%d: "format"\n",\

- __FILE__,__func__,__LINE__,\

- ##__VA_ARGS__)

- #define DEBUG_CSS_ERR(format,...)\

- printk("\001" "1""error:%s:%s:%d: "format"\n",\

- __FILE__,__func__,__LINE__,\

- ##__VA_ARGS__)

- #define CSS_DMA_IMAGE_SIZE (1280*800)

- #define CSI_DMA_SET_CUR_MAP_BUF_TYPE_IOCTL 0x1001

- enum _css_dev_buf_type{

- _CSS_DEV_READ_BUF = 0,

- _CSS_DEV_WRITE_BUF,

- _CSS_DEV_UNKNOWN_BUF_TYPE,

- _CSS_DEV_MAX_BUF_TYPE,

- };

- struct _css_dev_{

- struct file_operations _css_fops;

- struct miscdevice misc;

- int buf_size;

- int buf_size_order;

- char *src_buf;

- char *dst_buf;

- char *user_src_buf_vaddr;

- char *user_dst_buf_vaddr;

- dma_addr_t src_addr;

- dma_addr_t dst_addr;

- struct spinlock slock;

- struct mutex open_lock;

- char name[10];

- enum _css_dev_buf_type buf_type;

- struct device *dev;

- struct dma_chan * dma_m2m_chan;

- struct completion dma_m2m_ok;

- struct imx_dma_data m2m_dma_data;

- struct timeval tv_start,tv_end;

- };

- #define _to_css_dev_(file) (struct _css_dev_ *)container_of(file->f_op,struct _css_dev_,_css_fops)

- static int _css_open(struct inode *inode, struct file *file)

- {

- struct _css_dev_ *css_dev = _to_css_dev_(file);

- DEBUG_CSS("css_dev->name = %s",css_dev->name);

- return 0;

- }

- static ssize_t _css_read(struct file *file, char __user *ubuf, size_t size, loff_t *ppos)

- {

- struct _css_dev_ *css_dev = _to_css_dev_(file);

- DEBUG_CSS("css_dev->name = %s",css_dev->name);

- return 0;

- }

- static int _css_mmap (struct file *file, struct vm_area_struct *vma)

- {

- struct _css_dev_ *css_dev = _to_css_dev_(file);

- char *p = NULL;

- char **user_addr;

- switch(css_dev->buf_type){

- case _CSS_DEV_READ_BUF:

- p = css_dev->src_buf;

- user_addr = (char**)&css_dev->user_src_buf_vaddr;

- break;

- case _CSS_DEV_WRITE_BUF:

- p = css_dev->dst_buf;

- user_addr = (char**)&css_dev->user_dst_buf_vaddr;

- break;

- default:

- p = NULL;

- return -EINVAL;

- break;

- }

- if (remap_pfn_range(vma, vma->vm_start, virt_to_phys(p) >> PAGE_SHIFT,

- vma->vm_end-vma->vm_start, vma->vm_page_prot)) {

- DEBUG_CSS_ERR( "remap_pfn_range error\n");

- return -EAGAIN;

- }

- css_dev->buf_type = _CSS_DEV_UNKNOWN_BUF_TYPE;

- *user_addr = (void*)vma->vm_start;

- DEBUG_CSS("mmap ok user_addr = %p kernel addr = %p",*user_addr,p);

- return 0;

- }

- static ssize_t _css_write(struct file *file, const char __user *ubuf, size_t size, loff_t *ppos)

- {

- struct _css_dev_ *css_dev = _to_css_dev_(file);

- DEBUG_CSS("css_dev->name = %s",css_dev->name);

- return size;

- }

- static int _css_release (struct inode *inode, struct file *file)

- {

- struct _css_dev_ *css_dev = _to_css_dev_(file);

- DEBUG_CSS("css_dev->name = %s",css_dev->name);

- DEBUG_CSS("css_dev->src_buf[%d] = %c",css_dev->buf_size - 1,

- ((char*)css_dev->src_buf)[css_dev->buf_size - 1]);

- DEBUG_CSS("css_dev->dst_buf[%d] = %c",css_dev->buf_size - 1,

- ((char*)css_dev->dst_buf)[css_dev->buf_size - 1]);

- return 0;

- }

- static int _css_set_buf_type(struct file *file, enum _css_dev_buf_type buf_type)

- {

- unsigned long flags;

- struct _css_dev_ *css_dev = _to_css_dev_(file);

- DEBUG_CSS("buf_type=%d",buf_type);

- if(buf_type >= _CSS_DEV_MAX_BUF_TYPE){

- DEBUG_CSS_ERR("invalid buf type");

- return -EINVAL;

- }

- spin_lock_irqsave(&css_dev->slock,flags);

- css_dev->buf_type = buf_type;

- spin_unlock_irqrestore(&css_dev->slock,flags);

- return 0;

- }

- static long _css_unlocked_ioctl(struct file *file, unsigned int cmd, unsigned long arg)

- {

- int ret = 0;

- switch(cmd){

- case CSI_DMA_SET_CUR_MAP_BUF_TYPE_IOCTL:

- ret = _css_set_buf_type(file,(enum _css_dev_buf_type)arg);

- break;

- default:

- DEBUG_CSS_ERR("unknown cmd = %x",cmd);

- ret = -EINVAL;

- break;

- }

- return ret;

- }

- static struct _css_dev_ _global_css_dev = {

- .name = "lkmao",

- ._css_fops = {

- .owner = THIS_MODULE,

- .mmap = _css_mmap,

- .open = _css_open,

- .release = _css_release,

- .read = _css_read,

- .write = _css_write,

- .unlocked_ioctl = _css_unlocked_ioctl,

- },

- .misc = {

- .minor = MISC_DYNAMIC_MINOR,

- .name = "css_dma",

- },

- .buf_type = _CSS_DEV_UNKNOWN_BUF_TYPE,

- .user_src_buf_vaddr = NULL,

- .user_dst_buf_vaddr = NULL,

- .m2m_dma_data = {

- .peripheral_type = IMX_DMATYPE_MEMORY,

- .priority = DMA_PRIO_HIGH,

- },

- };

- static int css_dev_get_dma_addr(struct _css_dev_ *css_dev,char **vaddr,dma_addr_t *phys, int direction)

- {

- char *p;

- dma_addr_t dma_addr;

- p = (char*)__get_free_pages(GFP_KERNEL|GFP_DMA,css_dev->buf_size_order);

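- if (!p) { /* __get_free_pages returns 0 on failure */

- DEBUG_CSS("__get_free_pages error");

- return -ENOMEM;

- }

- dma_addr = dma_map_single(css_dev->dev, p, css_dev->buf_size, direction);

- if (dma_mapping_error(css_dev->dev, dma_addr)) {

- free_pages((unsigned long)p, css_dev->buf_size_order);

- return -ENOMEM;

- }

- *vaddr = p;

- *phys = dma_addr;

- DEBUG_CSS("32bit:p = %p,dma_addr = %pad",p,&dma_addr);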

- return 0;

- }

- static bool css_dma_filter_fn(struct dma_chan *chan, void *filter_param)

- {

- if(!imx_dma_is_general_purpose(chan)){

- DEBUG_CSS("css_dma_filter_fn error");

- return false;

- }

- chan->private = filter_param;

- return true;

- }

- static void css_dma_async_tx_callback(void *dma_async_param)

- {

- struct _css_dev_ *css_dev = (struct _css_dev_ *)dma_async_param;

- complete(&css_dev->dma_m2m_ok);

- }

- static int css_dmaengine_init(struct _css_dev_ *css_dev)

- {

- dma_cap_mask_t dma_m2m_mask;

- struct dma_slave_config dma_m2m_config = {0};

- css_dev->m2m_dma_data.peripheral_type = IMX_DMATYPE_MEMORY;

- css_dev->m2m_dma_data.priority = DMA_PRIO_HIGH;

- dma_cap_zero(dma_m2m_mask);

- dma_cap_set(DMA_MEMCPY,dma_m2m_mask);

- css_dev->dma_m2m_chan = dma_request_channel(dma_m2m_mask,css_dma_filter_fn,&css_dev->m2m_dma_data);

- if(!css_dev->dma_m2m_chan){

- DEBUG_CSS("dma_request_channel error");

- return -EINVAL;

- }

- dma_m2m_config.direction = DMA_MEM_TO_MEM;

- dma_m2m_config.dst_addr_width = DMA_SLAVE_BUSWIDTH_4_BYTES;

- dmaengine_slave_config(css_dev->dma_m2m_chan,&dma_m2m_config);

- return 0;

- }

- static int css_dmaengine_test(unsigned long arg)

- {

- struct _css_dev_ *css_dev = (struct _css_dev_ *)arg;

- struct dma_async_tx_descriptor *dma_m2m_desc;

- struct dma_device *dma_dev;

- struct device *chan_dev;

- dma_cookie_t cookie;

- enum dma_status dma_status;

- do_gettimeofday(&css_dev->tv_start);

- dma_dev = css_dev->dma_m2m_chan->device;

- chan_dev = css_dev->dma_m2m_chan->device->dev;

- css_dev->src_addr = dma_map_single(chan_dev, css_dev->src_buf, css_dev->buf_size, DMA_TO_DEVICE);

- css_dev->dst_addr = dma_map_single(chan_dev, css_dev->dst_buf, css_dev->buf_size, DMA_FROM_DEVICE);

- //DEBUG_CSS("32bit:css_dev->src_addr = %x,css_dev->dst_addr = %x",css_dev->src_addr,css_dev->dst_addr);

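- dma_m2m_desc = dma_dev->device_prep_dma_memcpy(css_dev->dma_m2m_chan,

- css_dev->dst_addr,

- css_dev->src_addr,

- css_dev->buf_size,0);

- if (!dma_m2m_desc) { /* prep can fail; undo the mappings before bailing out */

- dma_unmap_single(chan_dev,css_dev->src_addr,css_dev->buf_size,DMA_TO_DEVICE);

- dma_unmap_single(chan_dev,css_dev->dst_addr,css_dev->buf_size,DMA_FROM_DEVICE);

- DEBUG_CSS("device_prep_dma_memcpy error");

- return -EINVAL;

- }

- dma_m2m_desc->callback = css_dma_async_tx_callback;

- dma_m2m_desc->callback_param = css_dev;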

- init_completion(&css_dev->dma_m2m_ok);

- cookie = dmaengine_submit(dma_m2m_desc);

- if(dma_submit_error(cookie)){

- DEBUG_CSS("dmaengine_submit error");

- return -EINVAL;

- }

- dma_async_issue_pending(css_dev->dma_m2m_chan);

- wait_for_completion(&css_dev->dma_m2m_ok);

- dma_status = dma_async_is_tx_complete(css_dev->dma_m2m_chan,cookie,NULL,NULL);

- if(DMA_COMPLETE != dma_status){

- DEBUG_CSS("dma_status = %d",dma_status);

- }

- dma_unmap_single(chan_dev,css_dev->src_addr,css_dev->buf_size,DMA_TO_DEVICE);

- dma_unmap_single(chan_dev,css_dev->dst_addr,css_dev->buf_size,DMA_FROM_DEVICE);

- do_gettimeofday(&css_dev->tv_end);

- DEBUG_CSS("used time = %ld us",

- (css_dev->tv_end.tv_sec - css_dev->tv_start.tv_sec)*1000000

- + (css_dev->tv_end.tv_usec - css_dev->tv_start.tv_usec));

- return 0;

- }

- static int css_dev_init(struct platform_device *pdev,struct _css_dev_ *css_dev)

- {

- int i = 0,j = 0;

- css_dev->misc.fops = &css_dev->_css_fops;

- pr_debug("css_init init ok");

- mutex_init(&css_dev->open_lock);

- spin_lock_init(&css_dev->slock);

- printk("KERN_ALERT = %s",KERN_ALERT);

- css_dev->dev = &pdev->dev;

- css_dev->buf_size = CSS_DMA_IMAGE_SIZE;

- css_dev->buf_size_order = get_order(css_dev->buf_size);

- /* src_buf is allocated just below and mapped per transfer in css_dmaengine_test(); allocating and mapping it here as well would leak the first buffer. */

- css_dev->src_buf = (char*)__get_free_pages(GFP_KERNEL|GFP_DMA,css_dev->buf_size_order);

- if (!css_dev->src_buf) {

- DEBUG_CSS("__get_free_pages error (src_buf)");

- return -ENOMEM;

- }

- css_dev->dst_buf = (char*)__get_free_pages(GFP_KERNEL|GFP_DMA,css_dev->buf_size_order);

- if (!css_dev->dst_buf) {

- DEBUG_CSS("__get_free_pages error (dst_buf)");

- free_pages((unsigned long)css_dev->src_buf, css_dev->buf_size_order);

- return -ENOMEM;

- }

- DEBUG_CSS("32bit:css_dev->src_buf = %p,css_dev->dst_buf = %p",css_dev->src_buf,css_dev->dst_buf);

- if(misc_register(&css_dev->misc) != 0){

- DEBUG_CSS("misc_register error");

- return -EINVAL;

- }

- platform_set_drvdata(pdev,css_dev);

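- if (css_dmaengine_init(css_dev)) { /* without a channel the test below would dereference NULL */

- misc_deregister(&css_dev->misc);

- return -EINVAL;

- }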

- for(i = 0;i < 10;i++){

- memset(css_dev->src_buf,5,css_dev->buf_size);

- memset(css_dev->dst_buf,6,css_dev->buf_size);

- #if 1

- css_dmaengine_test((unsigned long)css_dev);

- #else

- memcpy(css_dev->dst_buf,css_dev->src_buf,css_dev->buf_size);

- #endif

- for(j = 0;j < css_dev->buf_size;j++){

- if(css_dev->dst_buf[j] != 5){

- DEBUG_CSS("css_dev->dst_buf[%d] = %d",j,css_dev->dst_buf[j]);

- DEBUG_CSS("css_dev->src_buf[%d] = %d",j,css_dev->src_buf[j]);

- break;

- }

- }

- if(j != css_dev->buf_size){

- DEBUG_CSS("i = %d,css_dmaengine_test error",i);

- return -EINVAL;

- }else{

- DEBUG_CSS("copy ok %d times",i+1);

- }

- }

- return 0;

- }

- static int css_probe(struct platform_device *pdev)

- {

- struct _css_dev_ *css_dev = (struct _css_dev_ *)&_global_css_dev;

- if(css_dev_init(pdev,css_dev)){

- return -EINVAL;

- }

- DEBUG_CSS("init ok");

- return 0;

- }

- static int css_remove(struct platform_device *pdev)

- {

- struct _css_dev_ *css_dev = &_global_css_dev;

- /* The buffers are mapped and unmapped per transfer in css_dmaengine_test(), so only free them here. */

- free_pages((unsigned long)css_dev->dst_buf, css_dev->buf_size_order);

- free_pages((unsigned long)css_dev->src_buf, css_dev->buf_size_order);

- misc_deregister(&css_dev->misc);

- dma_release_channel(css_dev->dma_m2m_chan);

- DEBUG_CSS("exit ok");

- return 0;

- }

- static const struct of_device_id css_of_ids[] = {

- {.compatible = "css_dma"},

- {},

- };

- MODULE_DEVICE_TABLE(of,css_of_ids);

- static struct platform_driver css_platform_driver = {

- .probe = css_probe,

- .remove = css_remove,

- .driver = {

- .name = "css_dma",

- .of_match_table = css_of_ids,

- .owner = THIS_MODULE,

- },

- };

- static int __init css_init(void)

- {

- int ret_val;

- ret_val = platform_driver_register(&css_platform_driver);

- if(ret_val != 0){

- DEBUG_CSS("platform_driver_register error");

- return ret_val;

- }

- DEBUG_CSS("platform_driver_register ok");

- return 0;

- }

- static void __exit css_exit(void)

- {

- platform_driver_unregister(&css_platform_driver);

- }

- module_init(css_init);

- module_exit(css_exit);

- MODULE_LICENSE("GPL");

Device tree:

- sdma_m2m{

- compatible = "css_dma";

- };

Test results: this is a bit alarming. Each copy takes more than 20 milliseconds, over ten times as long as memcpy.
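To put these numbers side by side, the sketch below converts a copy time into throughput and computes how much slower one run is than another. The function names are hypothetical, and 20,000 µs is used only as a round stand-in for the "more than 20 milliseconds" reported above.

```c
#include <assert.h>

/* Bytes per microsecond is numerically equal to MB/s (10^6 bytes per second). */
double throughput_mb_s(unsigned long bytes, long us)
{
    assert(us > 0);
    return (double)bytes / (double)us;
}

/* How many times longer the slow run took than the fast one. */
double slowdown(long slow_us, long fast_us)
{
    assert(fast_us > 0);
    return (double)slow_us / (double)fast_us;
}
```

For the 1,024,000-byte buffer, 1683 µs works out to about 608 MB/s, while 20,000 µs is about 51 MB/s, roughly 9 to 12 times slower than the memcpy range measured in the init function. Note that the interval timed in `css_dmaengine_test` also covers `dma_map_single`/`dma_unmap_single`, descriptor setup, and the wait for the completion callback, so it is not measuring the raw transfer alone.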

Summary



- Original article: https://blog.csdn.net/yueni_zhao/article/details/127730383