
Deploying a ZooKeeper Cluster and a Kafka Cluster

I. Deploying the ZooKeeper cluster

The cluster uses three nodes: bigdata01, bigdata02, and bigdata03.

1. Upload the archive to the target directory and extract it

    tar -zxvf apache-zookeeper-3.7.0-bin.tar.gz

2. Rename zoo_sample.cfg to zoo.cfg

    cd apache-zookeeper-3.7.0-bin/conf/
    mv zoo_sample.cfg zoo.cfg

3. Edit the configuration

Change the value of dataDir in zoo.cfg. The directory that dataDir points to holds ZooKeeper's core data, so it must not be a tmp directory. Then add the three lines server.0, server.1, and server.2.

    vi zoo.cfg

After editing, the file contains:

    dataDir=/data/software/apache-zookeeper-3.7.0-bin/data
    server.0=bigdata01:2888:3888
    server.1=bigdata02:2888:3888
    server.2=bigdata03:2888:3888
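The same edit can be scripted instead of made by hand in vi. The following is a minimal sketch, not the article's method: it works on a throwaway file in a temporary directory, and the stand-in content for zoo_sample.cfg (tickTime, dataDir=/tmp/zookeeper, clientPort) is an assumption about what the shipped sample looks like.

```shell
# Work in a scratch directory so nothing on the real system is touched.
workdir=$(mktemp -d)
cfg="$workdir/zoo.cfg"

# Stand-in for the renamed zoo_sample.cfg (assumed content, trimmed).
cat > "$cfg" <<'EOF'
tickTime=2000
dataDir=/tmp/zookeeper
clientPort=2181
EOF

# Values taken from the article.
data_dir=/data/software/apache-zookeeper-3.7.0-bin/data
nodes="bigdata01 bigdata02 bigdata03"

# Point dataDir away from /tmp (written via a temp file for portability).
sed "s|^dataDir=.*|dataDir=$data_dir|" "$cfg" > "$cfg.tmp" && mv "$cfg.tmp" "$cfg"

# Append one server.N line per node, numbering from 0 as in the article.
id=0
for host in $nodes; do
  echo "server.$id=$host:2888:3888" >> "$cfg"
  id=$((id + 1))
done

cat "$cfg"
```

To apply this to a real installation, cfg would point at conf/zoo.cfg instead of the scratch file.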

Create the directory that holds the myid file and write the ID into it.

The value in myid must match the number that follows "server." for this host in zoo.cfg. Number 0 corresponds to bigdata01, so 0 is written here, using echo with output redirection.

    [root@BigData01 conf]# cd /data/software/apache-zookeeper-3.7.0-bin
    [root@BigData01 apache-zookeeper-3.7.0-bin]# mkdir data
    [root@BigData01 apache-zookeeper-3.7.0-bin]# cd data
    [root@BigData01 data]# echo 0 > myid
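Because myid has to agree with the server.N lines, the value can be looked up from zoo.cfg rather than typed by hand. This is a sketch under assumptions: myid_for_host is a hypothetical helper, and the files live in temporary locations rather than the real installation directory.

```shell
# Print the server number that zoo.cfg assigns to a host (hypothetical helper).
# Usage: myid_for_host <zoo.cfg> <hostname>
myid_for_host() {
  # Lowercase the hostname: the shell prompts in the article show "BigData01",
  # while zoo.cfg uses "bigdata01".
  host=$(printf '%s\n' "$2" | tr 'A-Z' 'a-z')
  sed -n "s/^server\.\([0-9][0-9]*\)=$host:.*/\1/p" "$1"
}

# Scratch copy of the configuration from the article.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
dataDir=/data/software/apache-zookeeper-3.7.0-bin/data
server.0=bigdata01:2888:3888
server.1=bigdata02:2888:3888
server.2=bigdata03:2888:3888
EOF

# Write the matching ID into a scratch data directory.
data_dir=$(mktemp -d)
myid_for_host "$cfg" bigdata02 > "$data_dir/myid"
cat "$data_dir/myid"
```

On a real node the second argument could be the output of hostname, and the target would be the myid file under dataDir.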

4. Once configured, copy ZooKeeper to the other two nodes

    [root@BigData01 software]# scp -rq apache-zookeeper-3.7.0-bin bigdata02:/data/software/
    [root@BigData01 software]# scp -rq apache-zookeeper-3.7.0-bin bigdata03:/data/software/

5. Change the contents of the myid file on bigdata02 and bigdata03

First on bigdata02:

    [root@BigData02 ~]# cd /data/software/apache-zookeeper-3.7.0-bin/data/
    You have new mail in /var/spool/mail/root
    [root@BigData02 data]# ll
    total 4
    -rw-r--r--. 1 root root 2 Apr 8 22:49 myid
    [root@BigData02 data]# echo 1 > myid

Then on bigdata03:

    [root@BigData03 ~]# cd /data/software/apache-zookeeper-3.7.0-bin/data/
    You have new mail in /var/spool/mail/root
    [root@BigData03 data]# ll
    total 4
    -rw-r--r--. 1 root root 2 Apr 8 22:49 myid
    [root@BigData03 data]# echo 2 > myid
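Steps 4 and 5 repeat the same two actions per node, so they could be driven from bigdata01 in one loop. The sketch below only prints the commands it would run, which makes it safe to try anywhere. print_distribution_commands is a hypothetical helper, and running myid updates over ssh is an assumption (the article logs in to each node instead).

```shell
# Print (not run) the commands that copy ZooKeeper to a node and set its myid.
# Usage: print_distribution_commands <hostname> <myid>
print_distribution_commands() {
  host=$1
  id=$2
  zk_dir=apache-zookeeper-3.7.0-bin
  echo "scp -rq $zk_dir $host:/data/software/"
  echo "ssh $host \"echo $id > /data/software/$zk_dir/data/myid\""
}

# bigdata01 already holds myid 0; the other two nodes get 1 and 2.
print_distribution_commands bigdata02 1
print_distribution_commands bigdata03 2
```

After reviewing the printed commands, they can be pasted into a shell in /data/software on bigdata01.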

6. Start the ZooKeeper cluster

Start the ZooKeeper process on bigdata01, bigdata02, and bigdata03 in turn.

On bigdata01:

    [root@BigData01 software]# cd /data/software/apache-zookeeper-3.7.0-bin
    [root@BigData01 apache-zookeeper-3.7.0-bin]# bin/zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /data/software/apache-zookeeper-3.7.0-bin/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

On bigdata02:

    [root@BigData02 data]# cd /data/software/apache-zookeeper-3.7.0-bin/
    [root@BigData02 apache-zookeeper-3.7.0-bin]# bin/zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /data/software/apache-zookeeper-3.7.0-bin/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

On bigdata03:

    [root@BigData03 data]# cd /data/software/apache-zookeeper-3.7.0-bin/
    You have new mail in /var/spool/mail/root
    [root@BigData03 apache-zookeeper-3.7.0-bin]# bin/zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /data/software/apache-zookeeper-3.7.0-bin/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

7. Verify

Run jps on bigdata01, bigdata02, and bigdata03 and check that a QuorumPeerMain process exists.

Taking bigdata01 as an example:

    [root@BigData01 apache-zookeeper-3.7.0-bin]# jps
    1840 QuorumPeerMain
    1903 Jps

- If QuorumPeerMain is present, ZooKeeper has started normally.

- If QuorumPeerMain is missing, check the zookeeper*-*.out log file in the logs directory on that node.

Running bin/zkServer.sh status on each node shows one node as leader and the other two as follower.

bigdata01:

    [root@BigData01 apache-zookeeper-3.7.0-bin]# bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /data/software/apache-zookeeper-3.7.0-bin/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: follower

bigdata02:

    [root@BigData02 apache-zookeeper-3.7.0-bin]# bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /data/software/apache-zookeeper-3.7.0-bin/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: leader

bigdata03:

    [root@BigData03 apache-zookeeper-3.7.0-bin]# bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /data/software/apache-zookeeper-3.7.0-bin/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: follower
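The leader/follower check can be automated by reading the Mode line from the status output. A minimal sketch follows, using the bigdata02 output from the article as sample input. zk_mode and count_leaders are hypothetical helper names, and collecting the three modes from the three nodes is left out.

```shell
# Extract the value of the "Mode:" line from zkServer.sh status output (stdin).
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Count how many lines on stdin are exactly "leader".
count_leaders() {
  grep -c '^leader$'
}

# Sample output copied from the bigdata02 run in the article.
status_output='ZooKeeper JMX enabled by default
Using config: /data/software/apache-zookeeper-3.7.0-bin/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: leader'

printf '%s\n' "$status_output" | zk_mode

# A healthy three-node ensemble reports exactly one leader.
printf '%s\n' follower leader follower | count_leaders
```

On a live node, the input to zk_mode would be the output of bin/zkServer.sh status instead of the sample text.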

8. Working with ZooKeeper

First connect to ZooKeeper with its client tool, the zkCli.sh script in the bin directory. By default it connects to the ZooKeeper service on the local machine:

    [root@BigData01 apache-zookeeper-3.7.0-bin]# bin/zkCli.sh

At the prompt, typing any unrecognized input makes the client list every command it supports:

    [zk: localhost:2181(CONNECTED) 0]
    [zk: localhost:2181(CONNECTED) 0] abc
    ZooKeeper -server host:port -client-configuration properties-file cmd args
        addWatch [-m mode] path # optional mode is one of [PERSISTENT, PERSISTENT_RECURSIVE] - default is PERSISTENT_RECURSIVE
        addauth scheme auth
        close
        config [-c] [-w] [-s]
        connect host:port
        create [-s] [-e] [-c] [-t ttl] path [data] [acl]
        delete [-v version] path
        deleteall path [-b batch size]
        delquota [-n|-b|-N|-B] path
        get [-s] [-w] path
        getAcl [-s] path
        getAllChildrenNumber path
        getEphemerals path
        history
        listquota path
        ls [-s] [-w] [-R] path
        printwatches on|off
        quit
        reconfig [-s] [-v version] [[-file path] | [-members serverID=host:port1:port2;port3[,...]*]] | [-add serverId=host:port1:port2;port3[,...]]* [-remove serverId[,...]*]
        redo cmdno
        removewatches path [-c|-d|-a] [-l]
        set [-s] [-v version] path data
        setAcl [-s] [-v version] [-R] path acl
        setquota -n|-b|-N|-B val path
        stat [-w] path
        sync path
        version
        whoami
    Command not found: Command not found abc
    [zk: localhost:2181(CONNECTED) 1]

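You are now in the ZooKeeper command line.

From here you can work with ZooKeeper's directory structure, which resembles the directory structure of a Linux file system.

Every directory in ZooKeeper is called a node (ZNode).

In most respects a ZNode can be thought of as a file system directory, with one important difference: a ZNode can itself store data.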

List the contents of the root node

    [zk: localhost:2181(CONNECTED) 1] ls /
    [admin, brokers, cluster, config, consumers, controller, controller_epoch, feature, isr_change_notification, kafka-manager, latest_producer_id_block, log_dir_event_notification, zookeeper]

Create a node

Create a test node under the root node and store the data hello_zookeeper in it:

    [zk: localhost:2181(CONNECTED) 2] create /test hello_zookeeper
    Created /test
    [zk: localhost:2181(CONNECTED) 3] ls /
    [admin, brokers, cluster, config, consumers, controller, controller_epoch, feature, isr_change_notification, kafka-manager, latest_producer_id_block, log_dir_event_notification, test, zookeeper]

Read the data in a node

Read the data stored in the test node:

    [zk: localhost:2181(CONNECTED) 4] get /test
    hello_zookeeper

Delete a node

The deleteall command deletes recursively. There is also a delete command, but it can only remove a node that has no children. To remove a node together with all of its children in one step, use deleteall.

    [zk: localhost:2181(CONNECTED) 5] deleteall /test
    [zk: localhost:2181(CONNECTED) 6] ls /
    [admin, brokers, cluster, config, consumers, controller, controller_epoch, feature, isr_change_notification, kafka-manager, latest_producer_id_block, log_dir_event_notification, zookeeper]

Inspect broker registration

After a broker in the Kafka cluster starts, it registers itself in ZooKeeper and stores its own node information there. The get command shows that information, including the host name and port.

    [zk: localhost:2181(CONNECTED) 0] ls /brokers
    [ids, seqid, topics]
    [zk: localhost:2181(CONNECTED) 1] ls /brokers/ids
    [0, 2]
    [zk: localhost:2181(CONNECTED) 2] get /brokers/ids/2
    {"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://BigData03:9092"],"jmx_port":-1,"features":{},"host":"BigData03","timestamp":"1618707961757","port":9092,"version":5}
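The host and port can be pulled out of that registration JSON in a script. The sketch below uses the exact JSON from the article as input and plain sed to avoid extra dependencies; a real JSON parser would be more robust. The variable names are illustrative only.

```shell
# Registration data of broker 2, copied from the article.
broker_json='{"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://BigData03:9092"],"jmx_port":-1,"features":{},"host":"BigData03","timestamp":"1618707961757","port":9092,"version":5}'

# "host" is a quoted string; "port" is a bare number.
# The pattern "port": (with its opening quote) does not match "jmx_port":.
broker_host=$(printf '%s\n' "$broker_json" | sed -n 's/.*"host":"\([^"]*\)".*/\1/p')
broker_port=$(printf '%s\n' "$broker_json" | sed -n 's/.*"port":\([0-9][0-9]*\).*/\1/p')

echo "$broker_host:$broker_port"
```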

9. Stop the ZooKeeper cluster

Run the following command on bigdata01, bigdata02, and bigdata03:

    bin/zkServer.sh stop

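II. Deploying the Kafka cluster

1. Plan three nodes

Note:

(1) The ZooKeeper cluster must be running before the Kafka cluster is deployed.

(2) Kafka also depends on a JDK, so make sure one is installed.

The three nodes are:

    bigdata01
    bigdata02
    bigdata03

Note: a Kafka cluster has no master/slave distinction; all nodes are equal.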

2. Configure Kafka on bigdata01 first

Extract the package

    [root@BigData01 ~]# cd /data/software/
    [root@BigData01 software]# tar -zxvf kafka_2.12-2.7.0.tgz

Edit the configuration file

In cluster mode, three settings need to change: broker.id, log.dirs, and zookeeper.connect.

- broker.id defaults to 0, and the nodes in a cluster simply count up from 0, so bigdata01 uses broker.id 0.

- log.dirs should point to a disk with plenty of space, because Kafka stores a lot of data in real use. This virtual machine has only one disk, so the /data directory is used.

- zookeeper.connect is the address of the ZooKeeper cluster. It can list one node or several; multiple addresses are separated by commas.

    [root@BigData01 software]# cd kafka_2.12-2.7.0/config/
    [root@BigData01 config]# vi server.properties

Add the following:

    broker.id=0
    log.dirs=/data/kafka-logs
    zookeeper.connect=bigdata01:2181,bigdata02:2181,bigdata03:2181
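The three settings can also be applied with a small script. This is a sketch under assumptions: set_property is a hypothetical helper, and the stand-in file with its default values is assumed rather than copied from the real config/server.properties.

```shell
# Set key=value in a properties file, replacing an existing line or appending
# a new one (hypothetical helper).
# Usage: set_property <file> <key> <value>
set_property() {
  file=$1
  key=$2
  value=$3
  # Escape dots so "broker.id" is matched literally.
  key_re=$(printf '%s\n' "$key" | sed 's/\./\\./g')
  if grep -q "^$key_re=" "$file"; then
    sed "s|^$key_re=.*|$key=$value|" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
  else
    echo "$key=$value" >> "$file"
  fi
}

# Scratch stand-in for server.properties (assumed defaults).
props=$(mktemp)
cat > "$props" <<'EOF'
broker.id=0
log.dirs=/tmp/kafka-logs
zookeeper.connect=localhost:2181
EOF

set_property "$props" broker.id 0
set_property "$props" log.dirs /data/kafka-logs
set_property "$props" zookeeper.connect bigdata01:2181,bigdata02:2181,bigdata03:2181
cat "$props"
```

Replacing an existing line instead of appending avoids ending up with two entries for the same key.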

3. Copy the configured Kafka directory to the other two nodes

    [root@BigData01 config]# cd /data/software/
    [root@BigData01 software]# scp -rq kafka_2.12-2.7.0 bigdata02:/data/software/
    [root@BigData01 software]# scp -rq kafka_2.12-2.7.0 bigdata03:/data/software/

4. Change broker.id on bigdata02 and bigdata03

First set broker.id to 1 on bigdata02:

    [root@BigData02 apache-zookeeper-3.7.0-bin]# cd /data/software/kafka_2.12-2.7.0/config/
    [root@BigData02 config]# vi server.properties

The result looks like this:

    broker.id=1
    log.dirs=/data/kafka-logs
    zookeeper.connect=bigdata01:2181,bigdata02:2181,bigdata03:2181

Then set broker.id to 2 on bigdata03:

    [root@BigData03 apache-zookeeper-3.7.0-bin]# cd /data/software/kafka_2.12-2.7.0/config/
    [root@BigData03 config]# vi server.properties

The result looks like this:

    broker.id=2
    log.dirs=/data/kafka-logs
    zookeeper.connect=bigdata01:2181,bigdata02:2181,bigdata03:2181
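Following the article's numbering (bigdata01 gets 0, bigdata02 gets 1, bigdata03 gets 2), broker.id could be derived from the hostname instead of being edited by hand on each node. This is only a convenience sketch: broker_id_for_host is a hypothetical helper, and it assumes hostnames end in a number that starts at 01.

```shell
# Map a hostname that ends in a number to a zero-based broker.id
# (hypothetical helper): bigdata01 -> 0, bigdata02 -> 1, bigdata03 -> 2.
broker_id_for_host() {
  # Keep only the trailing digits of the hostname.
  n=$(printf '%s\n' "$1" | sed -n 's/.*[^0-9]\([0-9][0-9]*\)$/\1/p')
  # Fail if the hostname does not end in a number.
  [ -n "$n" ] || return 1
  # Drop leading zeros so the shell does not read "08" as octal.
  n=$(printf '%s\n' "$n" | sed 's/^0*\([0-9]\)/\1/')
  echo $((n - 1))
}

for host in bigdata01 bigdata02 bigdata03; do
  echo "$host -> broker.id=$(broker_id_for_host "$host")"
done
```

The only hard requirement is that every broker.id in the cluster is unique; the hostname convention is just one way to guarantee that.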

5. Start the cluster

Start the Kafka process on each of the three nodes.

On bigdata01:

    [root@BigData01 software]# cd /data/software/kafka_2.12-2.7.0
    [root@BigData01 kafka_2.12-2.7.0]# bin/kafka-server-start.sh -daemon config/server.properties

On bigdata02:

    [root@BigData02 config]# cd /data/software/kafka_2.12-2.7.0
    [root@BigData02 kafka_2.12-2.7.0]# bin/kafka-server-start.sh -daemon config/server.properties

On bigdata03:

    [root@BigData03 config]# cd /data/software/kafka_2.12-2.7.0
    [root@BigData03 kafka_2.12-2.7.0]# bin/kafka-server-start.sh -daemon config/server.properties

6. Verify

Run jps on bigdata01, bigdata02, and bigdata03 and check for a Kafka process. If it is present, Kafka started normally. Taking bigdata01 as an example:

    [root@BigData01 kafka_2.12-2.7.0]# jps
    1840 QuorumPeerMain
    2845 Kafka
    2941 Jps
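The jps check can be turned into a pass/fail test. The sketch below runs against the sample output from the article rather than a live jps call; has_processes is a hypothetical helper name.

```shell
# Succeed only if every expected process name appears in jps output (stdin).
# Usage: jps | has_processes <name>...
has_processes() {
  output=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <name>"; compare the second column exactly.
    printf '%s\n' "$output" | awk '{print $2}' | grep -qx "$name" || return 1
  done
}

# Sample output copied from the bigdata01 run in the article.
jps_output='1840 QuorumPeerMain
2845 Kafka
2941 Jps'

if printf '%s\n' "$jps_output" | has_processes QuorumPeerMain Kafka; then
  echo "ZooKeeper and Kafka are both running"
else
  echo "a required process is missing"
fi
```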

Installing a Kafka cluster monitoring and management tool

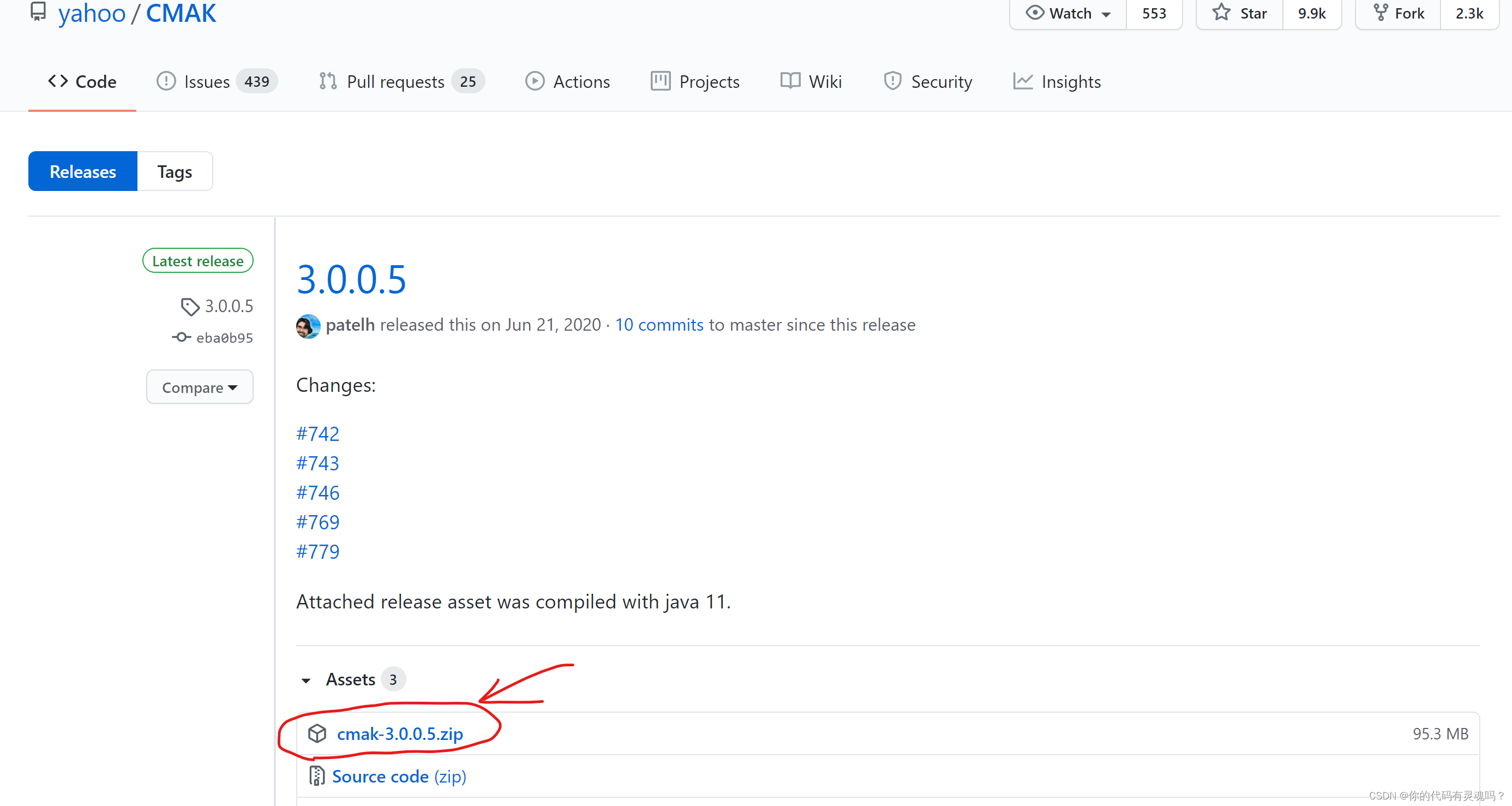

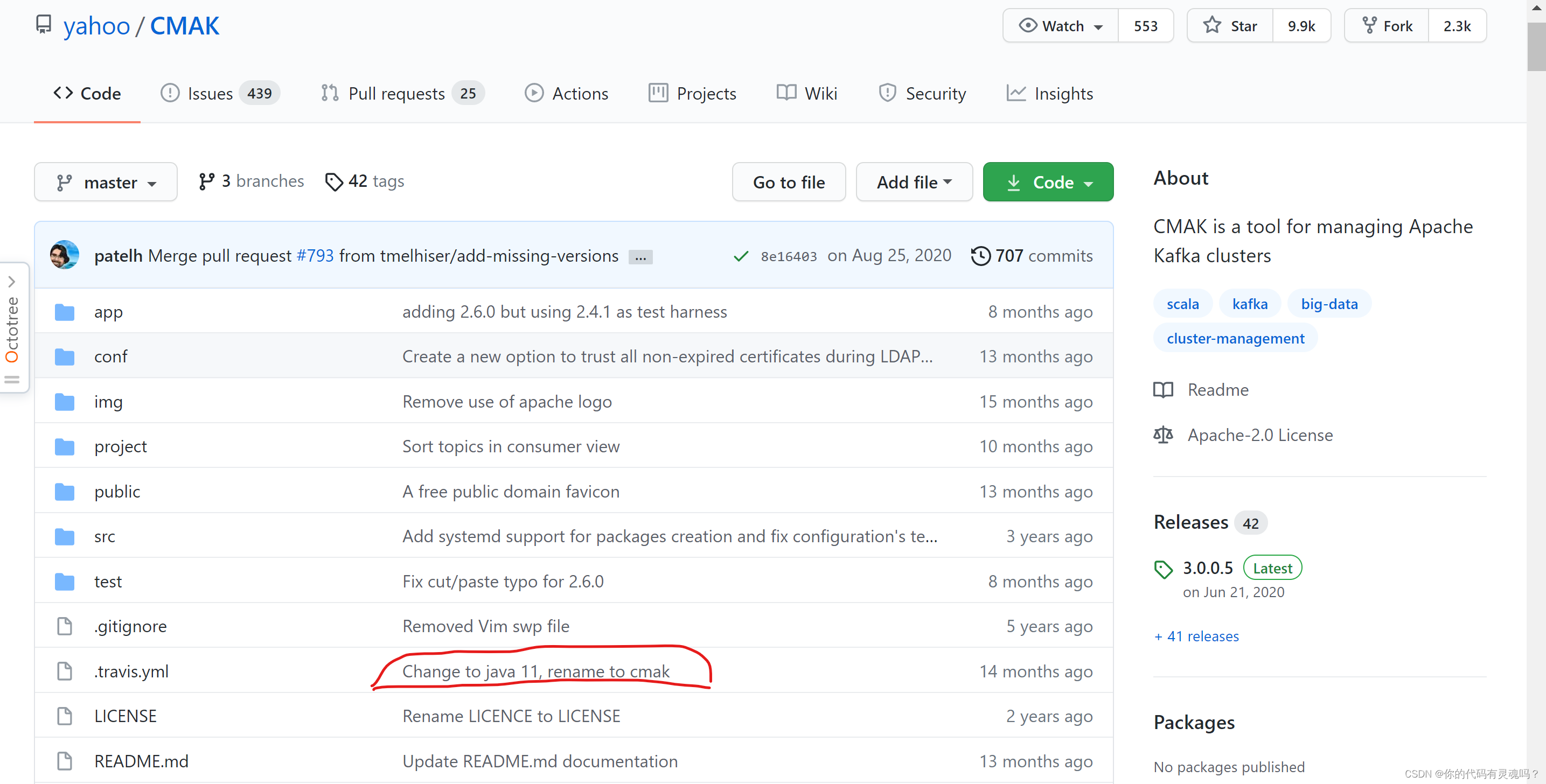

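Search GitHub for "CMAK". CMAK is a Kafka cluster management tool open-sourced by Yahoo. It lets users operate a Kafka cluster from a web interface and easily inspect cluster state (topics, consumers, offsets, brokers, replicas, partitions).

CMAK-3.0.0.5.zip was compiled with Java 11, so it also needs Java 11 at runtime. The servers here currently run Java 8.

This can be solved by installing JDK 11 alongside the existing JDK.

1. Download JDK 11

Extracting it is enough. There is no need to configure environment variables, because only CMAK uses JDK 11.

Upload the JDK and CMAK archives to /data/software on bigdata01 and extract them:

    [root@BigData01 software]# tar -zxvf jdk-11.0.10_linux-x64_bin.tar.gz

Extract the CMAK-3.0.0.5.zip file:

    [root@BigData01 software]# unzip cmak-3.0.0.5.zip
    -bash: unzip: command not found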

The error -bash: unzip: command not found means the unzip tool is missing, so install it with yum.

Clear the yum cache first; otherwise yum may fail to install unzip:

    [root@BigData01 software]# yum clean all
    Loaded plugins: fastestmirror
    Repodata is over 2 weeks old. Install yum-cron? Or run: yum makecache fast
    Cleaning repos: base extras updates
    Cleaning up list of fastest mirrors
    You have new mail in /var/spool/mail/root

Then install unzip:

    [root@BigData01 software]# yum install -y unzip
    Loaded plugins: fastestmirror
    Determining fastest mirrors
    ....
    Running transaction
    Installing : unzip-6.0-21.el7.x86_64 1/1
    Verifying : unzip-6.0-21.el7.x86_64 1/1
    Installed:
    unzip.x86_64 0:6.0-21.el7
    Complete!

Extract the CMAK-3.0.0.5.zip archive again:

    [root@BigData01 software]# unzip cmak-3.0.0.5.zip

2. Edit the CMAK configuration

First edit the cmak script in the bin directory.

Set JAVA_HOME in it to the JDK 11 installation directory; otherwise JDK 8 is used by default.

    [root@BigData01 software]# cd cmak-3.0.0.5/bin/
    [root@BigData01 bin]# vi cmak

Add the following setting:

    JAVA_HOME=/data/software/jdk-11.0.10

Then edit the application.conf file in the conf directory.

Only the cmak.zkhosts parameter needs to be set there; it specifies the ZooKeeper address. Note: this address is where CMAK stores its own data. It does not have to be the ZooKeeper cluster that the Kafka cluster uses; any ZooKeeper cluster will do.

    [root@BigData01 bin]# cd /data/software/cmak-3.0.0.5/conf/
    [root@BigData01 conf]# vi application.conf

cmak.zkhosts already has a default value. Change it to the following:

    cmak.zkhosts="bigdata01:2181,bigdata02:2181,bigdata03:2181"
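The cmak.zkhosts change can be scripted with sed. A minimal sketch follows, run against a scratch file. The two stand-in lines, including the default host name and the environment-variable override line, are assumptions about what conf/application.conf contains.

```shell
# Scratch stand-in for conf/application.conf (assumed content).
conf=$(mktemp)
cat > "$conf" <<'EOF'
cmak.zkhosts="kafka-manager-zookeeper:2181"
cmak.zkhosts=${?ZK_HOSTS}
EOF

zk_hosts="bigdata01:2181,bigdata02:2181,bigdata03:2181"

# Replace only the quoted default; a line without quotes is left untouched.
sed "s|^cmak\.zkhosts=\".*\"|cmak.zkhosts=\"$zk_hosts\"|" "$conf" > "$conf.tmp" && mv "$conf.tmp" "$conf"

cat "$conf"
```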

3. Change how Kafka is started

To view Kafka metrics in CMAK, JMX_PORT must be specified when Kafka starts.

First stop Kafka on all three machines.

bigdata01:

    [root@BigData01 conf]# cd /data/software/kafka_2.12-2.7.0
    You have new mail in /var/spool/mail/root
    [root@BigData01 kafka_2.12-2.7.0]# bin/kafka-server-stop.sh

bigdata02:

    [root@BigData02 ~]# cd /data/software/kafka_2.12-2.7.0/
    You have new mail in /var/spool/mail/root
    [root@BigData02 kafka_2.12-2.7.0]# bin/kafka-server-stop.sh

bigdata03:

    [root@BigData03 ~]# cd /data/software/kafka_2.12-2.7.0/
    You have new mail in /var/spool/mail/root
    [root@BigData03 kafka_2.12-2.7.0]# bin/kafka-server-stop.sh

Restart the Kafka cluster with JMX_PORT set.

bigdata01:

    [root@BigData01 kafka_2.12-2.7.0]# JMX_PORT=9988 bin/kafka-server-start.sh -daemon config/server.properties

bigdata02:

    [root@BigData02 kafka_2.12-2.7.0]# JMX_PORT=9988 bin/kafka-server-start.sh -daemon config/server.properties

bigdata03:

    [root@BigData03 kafka_2.12-2.7.0]# JMX_PORT=9988 bin/kafka-server-start.sh -daemon config/server.properties

4. Start CMAK

    [root@BigData01 cmak-3.0.0.5]# bin/cmak -Dconfig.file=conf/application.conf -Dhttp.port=9001

To run CMAK in the background, add nohup and &:

    nohup bin/cmak -Dconfig.file=conf/application.conf -Dhttp.port=9001 &
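When a process is started with nohup and &, it helps to redirect its output to a known file and keep its PID. The sketch below shows the pattern with sleep 1 standing in for the bin/cmak command, so it can be tried without CMAK installed. The log location is an arbitrary temporary file.

```shell
# Known location for the output, so nohup does not fall back to nohup.out.
log=$(mktemp)

# "sleep 1" stands in for:
#   bin/cmak -Dconfig.file=conf/application.conf -Dhttp.port=9001
nohup sleep 1 > "$log" 2>&1 &
pid=$!
echo "started in background, PID $pid, output in $log"

# kill -0 sends no signal; it only checks that the process exists.
if kill -0 "$pid" 2>/dev/null; then
  echo "still running"
fi

# Only for this demo, so the script ends after the stand-in process exits.
wait "$pid"
```

The saved PID can later be passed to kill to stop the background process.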


- Original article: https://blog.csdn.net/yuanziwoxin/article/details/126563078