k8s--Basics--28.3--Ceph--Cluster Installation

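1. Machines

| IP | Hostname | CPU | Memory | Disk | Description |
| --- | --- | --- | --- | --- | --- |
| 192.168.187.156 | monitor1 | 2 cores | 2G | 20G | Admin node, monitor node |
| 192.168.187.157 | monitor2 | 2 cores | 2G | 20G | Monitor node |
| 192.168.187.158 | monitor3 | 2 cores | 2G | 20G | Monitor node |
| 192.168.187.159 | osd1 | 2 cores | 2G | 20G | OSD node (object storage node) |
| 192.168.187.160 | osd2 | 2 cores | 2G | 20G | OSD node (object storage node) |

2. Common steps

Run the following on every node.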

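2.1. Set the hostname

Run the matching command on each node:

    hostnamectl set-hostname monitor1   # on 192.168.187.156
    hostnamectl set-hostname monitor2   # on 192.168.187.157
    hostnamectl set-hostname monitor3   # on 192.168.187.158
    hostnamectl set-hostname osd1       # on 192.168.187.159
    hostnamectl set-hostname osd2       # on 192.168.187.160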

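2.2. Update the hosts file

    cat >> /etc/hosts <<EOF
    192.168.187.156 monitor1
    192.168.187.157 monitor2
    192.168.187.158 monitor3
    192.168.187.159 osd1
    192.168.187.160 osd2
    EOF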
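Appending with `cat >>` adds duplicate lines if the step is run twice. The following is a minimal sketch of an idempotent variant, using the addresses from the machine table. The function names and the `HOSTS_FILE` override are hypothetical, introduced here only so the logic can be tried on a scratch file first.

```shell
#!/bin/sh
# Append "ip hostname" to the hosts file only if the hostname is not listed yet.
# HOSTS_FILE is a hypothetical override; it defaults to /etc/hosts.
add_host_entry() {
    ip=$1
    name=$2
    file=${HOSTS_FILE:-/etc/hosts}
    # Look for the hostname as a whole field (column 2 or later) on non-comment lines.
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Add all five cluster nodes from the machine table.
add_cluster_hosts() {
    add_host_entry 192.168.187.156 monitor1
    add_host_entry 192.168.187.157 monitor2
    add_host_entry 192.168.187.158 monitor3
    add_host_entry 192.168.187.159 osd1
    add_host_entry 192.168.187.160 osd2
}
```

Calling `add_cluster_hosts` as root on each node should leave exactly one line per hostname, no matter how often it is repeated.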

2.3. Configure passwordless login from monitor1 to the other nodes

Run on monitor1.

2.3.1. Generate the key pair

    cd
    ssh-keygen -t rsa

Press Enter at every prompt to accept the defaults.

2.3.2. Copy the public key to each node

    ssh-copy-id -i .ssh/id_rsa.pub root@monitor2
    ssh-copy-id -i .ssh/id_rsa.pub root@monitor3
    ssh-copy-id -i .ssh/id_rsa.pub root@osd1
    ssh-copy-id -i .ssh/id_rsa.pub root@osd2
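Before moving on, it may be worth confirming that key-based login really works, since ceph-deploy relies on it later. Here is a rough sketch that tries a non-interactive login to each node. `BatchMode=yes` makes ssh fail rather than prompt for a password. The function name and the `SSH_CMD` override are hypothetical helpers, not part of the original procedure.

```shell
#!/bin/sh
# Nodes that monitor1 must reach without a password.
NODES="monitor2 monitor3 osd1 osd2"

# Try a non-interactive login to every node and report the result.
# SSH_CMD is a hypothetical override (defaults to ssh) for dry runs.
check_passwordless_ssh() {
    failed=0
    for node in $NODES; do
        if ${SSH_CMD:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "root@$node" true 2>/dev/null; then
            echo "$node: ok"
        else
            echo "$node: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

Running `check_passwordless_ssh` on monitor1 should print `ok` for all four nodes; any `FAILED` line suggests repeating `ssh-copy-id` for that node.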

2.4. Install the base packages

    yum install -y wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake \
        libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel \
        vim ncurses-devel autoconf automake zlib-devel python-devel epel-release \
        openssh-server
    yum install -y deltarpm

2.5. Disable the firewalld firewall

Run on all nodes.

2.5.1. Stop and disable the firewalld service

    # Stop the firewalld service
    systemctl stop firewalld.service
    # Disable the firewalld service
    systemctl disable firewalld.service
    # Check the status
    systemctl status firewalld.service

2.5.2. Install iptables

Unless you need iptables, you can skip this step. I did not install it here.

2.5.2.1. Install

    yum install -y iptables-services

2.5.2.2. Disable iptables

    # Stop the iptables service and disable it
    service iptables stop && systemctl disable iptables
    # Check the status
    service iptables status

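2.6. Time synchronization

2.6.1. On monitor1

    ntpdate cn.pool.ntp.org
    # Start ntpd and enable it at boot
    systemctl start ntpd && systemctl enable ntpd

2.6.2. On the other nodes, sync time from monitor1

2.6.2.1. Sync once from monitor1

    ntpdate monitor1

2.6.2.2. Sync on a schedule

Open the crontab with `crontab -e` and add:

    * */1 * * * /usr/sbin/ntpdate monitor1

Note that with `*` in the minute field this entry fires every minute; use `0 * * * *` if an hourly sync is intended.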
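`crontab -e` is interactive, which is awkward to repeat on four nodes. Below is a small sketch that adds the entry non-interactively and only when it is missing. It assumes an hourly sync is what is wanted (minute field `0`); `CRON_ENTRY` and `merge_cron_entry` are hypothetical names.

```shell
#!/bin/sh
# Assumed schedule: once per hour, on the hour.
CRON_ENTRY='0 * * * * /usr/sbin/ntpdate monitor1'

# Read an existing crontab on stdin and print it back,
# appending CRON_ENTRY only if that exact line is not already present.
merge_cron_entry() {
    existing=$(cat)
    if [ -n "$existing" ]; then
        printf '%s\n' "$existing"
    fi
    if ! printf '%s\n' "$existing" | grep -qxF -- "$CRON_ENTRY"; then
        printf '%s\n' "$CRON_ENTRY"
    fi
}

# Usage on a node (not executed here):
#   crontab -l 2>/dev/null | merge_cron_entry | crontab -
```

Because the function only transforms text, it can be checked by piping a sample crontab through it before touching the real one.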

2.7. Install the EPEL repository

- Purpose: make ceph-deploy installable

- Run on every node

    yum install -y yum-utils
    sudo yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/
    sudo yum install --nogpgcheck -y epel-release
    sudo rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    sudo rm /etc/yum.repos.d/dl.fedoraproject.org*

2.8. Configure the Ceph yum repository

- Purpose: make ceph-deploy installable

- Run on every node

    vi /etc/yum.repos.d/ceph.repo

Contents:

    [Ceph]
    name=Ceph packages for $basearch
    baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
    enabled=1
    gpgcheck=0
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
    priority=1

    [Ceph-noarch]
    name=Ceph noarch packages
    baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
    enabled=1
    gpgcheck=0
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
    priority=1

    [ceph-source]
    name=Ceph source packages
    baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/SRPMS/
    enabled=1
    gpgcheck=0
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
    priority=1
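Editing the file by hand on five nodes invites typos. As an alternative, here is a sketch that writes the same three repository sections from a script. `write_ceph_repo` is a hypothetical helper; its first argument is the release name (defaulting to `jewel`, the one used in this article), and whether other release names exist on the mirror is an assumption to verify.

```shell
#!/bin/sh
# Write the Ceph yum repo file with the three sections used in this article.
# $1: release name (default jewel)   $2: output path (default /etc/yum.repos.d/ceph.repo)
write_ceph_repo() {
    release=${1:-jewel}
    out=${2:-/etc/yum.repos.d/ceph.repo}
    base="http://mirrors.aliyun.com/ceph/rpm-${release}/el7"
    key="https://mirrors.aliyun.com/ceph/keys/release.asc"
    : > "$out"   # start from an empty file
    # Each entry is "section id:display name:directory under the base URL".
    for entry in "Ceph:Ceph packages for \$basearch:x86_64" \
                 "Ceph-noarch:Ceph noarch packages:noarch" \
                 "ceph-source:Ceph source packages:SRPMS"; do
        id=${entry%%:*}
        rest=${entry#*:}
        name=${rest%%:*}
        dir=${rest#*:}
        printf '[%s]\nname=%s\nbaseurl=%s/%s/\nenabled=1\ngpgcheck=0\ntype=rpm-md\ngpgkey=%s\npriority=1\n\n' \
            "$id" "$name" "$base" "$dir" "$key" >> "$out"
    done
}
```

Running `write_ceph_repo` with no arguments as root should produce a file equivalent to the one shown above; passing a scratch path as the second argument allows inspecting the result first.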

2.9. Update the yum repositories

- Purpose: make ceph-deploy installable

- Run on every node

    yum update -y

3. Install ceph-deploy

3.1. On monitor1

    yum install -y ceph-deploy
    yum install -y yum-plugin-priorities

3.2. On the other nodes

    yum install -y ceph

4. Build the cluster

Run on monitor1.

4.1. Create a directory

This directory holds the configuration files that ceph-deploy generates.

    mkdir -p /root/ceph-deploy

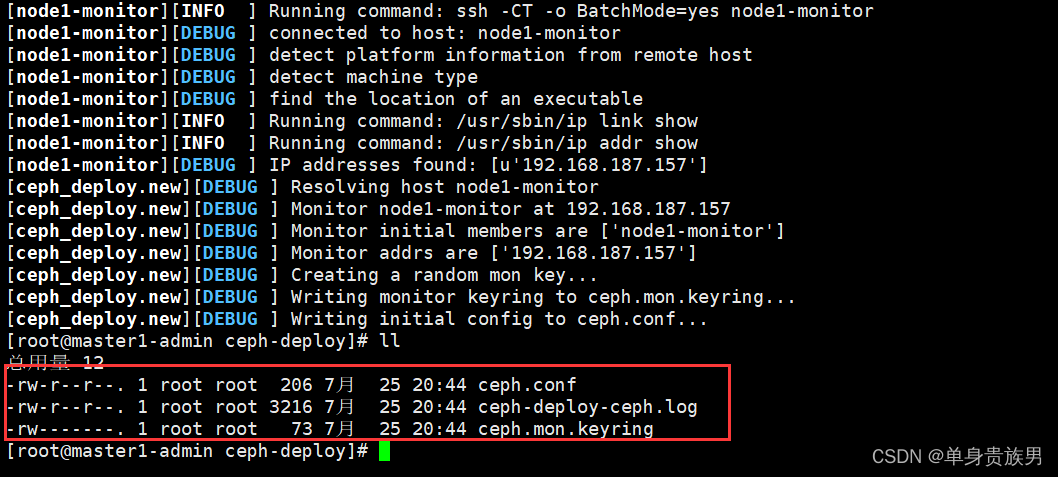

4.2. Initialize the monitor nodes

    # Enter the directory
    cd /root/ceph-deploy
    # Run ceph-deploy to generate the configuration files
    ceph-deploy new monitor1 monitor2 monitor3

4.3. Edit the Ceph configuration file

    vi ceph.conf

Contents:

    [global]
    fsid = c9b9252e-b3e5-4ffb-84b5-3a5d68effefe
    mon_initial_members = monitor1, monitor2, monitor3
    mon_host = 192.168.187.156,192.168.187.157,192.168.187.158
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    # Added: lower the default replica count from 3 to 2, since there are only 2 OSDs
    osd pool default size = 2

This environment has only 2 OSDs, while Ceph's default replica count is 3, so the value has to be lowered to 2.

4.4. Install the Ceph cluster

    # Enter the directory
    cd /root/ceph-deploy
    ceph-deploy install monitor1 monitor2 monitor3 osd1 osd2

4.5. Deploy the initial monitors and gather the keys

    ceph-deploy mon create-initial
    # List the generated files (ll is the usual alias for ls -l)
    ll

Several keyrings are generated:

    ceph.bootstrap-mds.keyring   # MDS bootstrap key
    ceph.bootstrap-mgr.keyring
    ceph.bootstrap-osd.keyring   # OSD bootstrap key
    ceph.bootstrap-rgw.keyring
    ceph.client.admin.keyring    # admin key
    ceph.conf
    ceph-deploy-ceph.log
    ceph.mon.keyring

5. Add OSDs to Ceph and activate them

5.1. On the OSD nodes osd1 and osd2 (the object storage nodes)

    mkdir /var/local/osd1
    chmod 777 /var/local/osd1/

5.2. On monitor1

5.2.1. Prepare the OSDs

    # Enter the directory
    cd /root/ceph-deploy
    ceph-deploy osd prepare osd1:/var/local/osd1
    ceph-deploy osd prepare osd2:/var/local/osd1

5.2.2. Activate the OSDs

    # Enter the directory
    cd /root/ceph-deploy
    ceph-deploy osd activate osd1:/var/local/osd1
    ceph-deploy osd activate osd2:/var/local/osd1

6. Distribute the configuration file and admin key to every Ceph node

6.1. On monitor1

    # Enter the directory
    cd /root/ceph-deploy
    # Copy the key file to the admin node and the Ceph nodes
    ceph-deploy admin monitor1 monitor2 monitor3 osd1 osd2

6.2. On monitor1, monitor2, monitor3, osd1, and osd2

    # Make the key file readable
    chmod +r /etc/ceph/ceph.client.admin.keyring

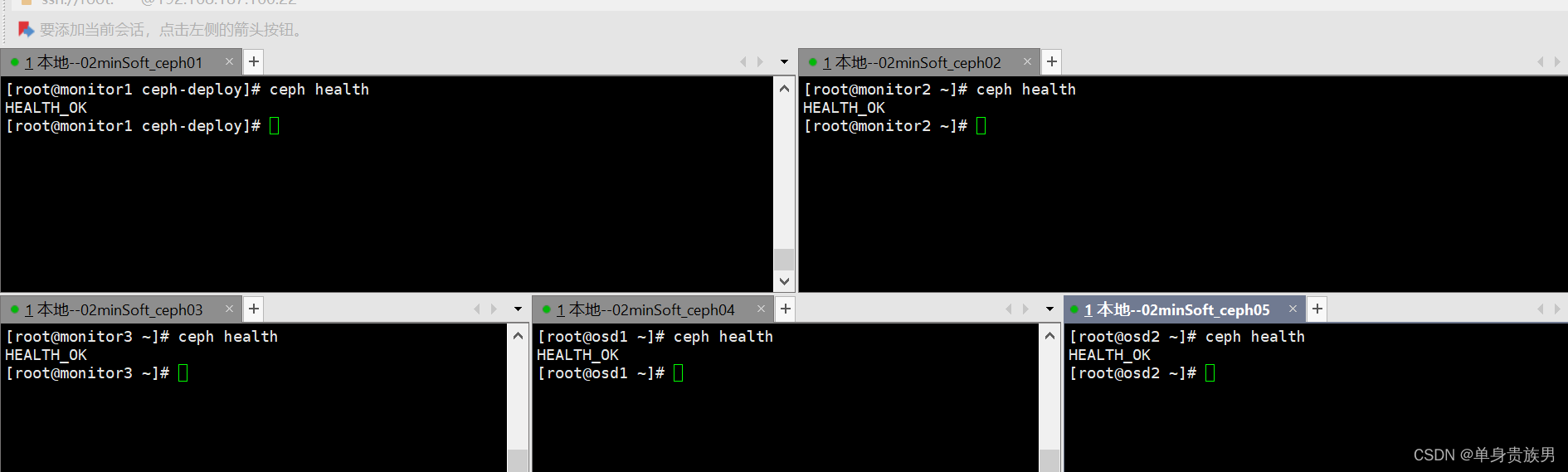

6.3. Check the cluster health

    ceph health

If the output is HEALTH_OK, the OSDs were added successfully and the cluster is ready to use.
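Right after activation the cluster may briefly report a state other than HEALTH_OK while it settles. The sketch below polls `ceph health` until it reports HEALTH_OK or a retry limit is reached. The function name, the default of 30 tries at 10-second intervals, and the `HEALTH_CMD` override are all assumptions made for illustration.

```shell
#!/bin/sh
# Poll the cluster health until it reports HEALTH_OK.
# $1: maximum number of checks (default 30)   $2: seconds between checks (default 10)
# HEALTH_CMD is a hypothetical override (defaults to "ceph health") for dry runs.
wait_for_health_ok() {
    max_tries=${1:-30}
    delay=${2:-10}
    i=1
    while [ "$i" -le "$max_tries" ]; do
        status=$(${HEALTH_CMD:-ceph health} 2>/dev/null)
        case "$status" in
            HEALTH_OK*)
                echo "cluster healthy after $i check(s)"
                return 0
                ;;
        esac
        # Progress goes to stderr so stdout carries only the final result.
        echo "check $i: ${status:-no response}" >&2
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}
```

On monitor1, `wait_for_health_ok && echo ready` would block until the cluster is usable, or return a non-zero status after roughly five minutes with the defaults.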

7. Ceph RBD: creating and using a block device

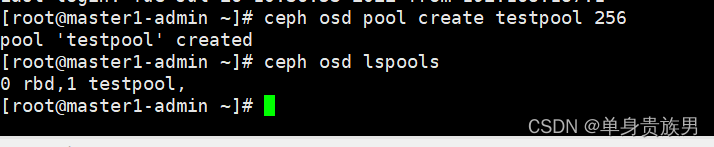

7.1. Create a pool

    # Create a pool named testpool with 256 placement groups
    ceph osd pool create testpool 256
    # List the existing pools
    ceph osd lspools
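The placement-group count is the number passed to `ceph osd pool create`. A commonly cited rule of thumb from the Ceph documentation is roughly (number of OSDs × 100) / replica count for the whole cluster, rounded up to a power of two. The sketch below works through that arithmetic; `suggest_pg_num` is a hypothetical helper, and its result is a starting point for comparison rather than a correction of the value used above.

```shell
#!/bin/sh
# Rule-of-thumb PG count: (OSDs * 100) / replicas, rounded up to a power of two.
# $1: number of OSDs   $2: replica count (pool size)
suggest_pg_num() {
    osds=$1
    replicas=$2
    target=$(( osds * 100 / replicas ))
    pg=1
    # Double until the power of two reaches or exceeds the target.
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

# For this article's cluster: 2 OSDs, 2 replicas.
suggest_pg_num 2 2   # prints 128
```

For the two-OSD, two-replica setup here the target is 100, which rounds up to 128.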

7.2. Create an RBD image

    # Create an RBD image named myrbd in testpool; --size is in MB, so 10240 is 10 GB
    rbd create testpool/myrbd --size 10240

7.3. Map the block device to the local machine

    # These features must be disabled, otherwise mapping fails
    rbd feature disable testpool/myrbd object-map fast-diff deep-flatten
    # Map the block device
    rbd map testpool/myrbd

7.4. Mount and use it

    # Create the mount point
    mkdir /mnt/firstrbd
    # Format the device with an xfs file system
    mkfs.xfs /dev/rbd0
    # Mount it
    mount /dev/rbd0 /mnt/firstrbd
