TensorFlow Study Notes, August 12: Gradient Descent, Handwritten Digit Recognition in Practice, TensorBoard, and the Keras High-Level API

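These are my TensorFlow study notes for August 12, organized into five chapters:

- Gradient descent;

- Himmelblau function optimization;

- Handwritten digit recognition in practice;

- TensorBoard visualization;

- Keras high-level API.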

Preface

I. Gradient Descent

1. tf.GradientTape()

```python
import tensorflow as tf

w = tf.constant(1.)
x = tf.constant(2.)
y = x * w  # computed outside any tape, so it is not recorded

with tf.GradientTape() as tape:
    # w is not a tf.Variable, so it has to be watched explicitly
    tape.watch([w])
    y2 = x * w

# y was not recorded by the tape, so its gradient is None
grad1 = tape.gradient(y, [w])
print('grad1={}'.format(grad1))

with tf.GradientTape() as tape:
    # w is not a tf.Variable, so it has to be watched explicitly
    tape.watch([w])
    y2 = x * w

grad2 = tape.gradient(y2, [w])
print('grad2={}'.format(grad2))

# Output:
# grad1=[None]
# grad2=[<tf.Tensor: shape=(), dtype=float32, numpy=2.0>]
```
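As a sanity check on what `tape.gradient` returns above, the derivative of y = x * w with respect to w can be approximated with a central finite difference. This is a plain-Python sketch that does not use TensorFlow; the helper name `numerical_grad` and the step size `eps` are assumptions made for illustration.

```python
def numerical_grad(f, w, eps=1e-6):
    """Approximate df/dw at w with a central finite difference."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)


x = 2.0
w = 1.0

# y = x * w, so dy/dw should equal x
grad = numerical_grad(lambda w_: x * w_, w)
print('numerical grad = {:.6f}'.format(grad))
```

The result should agree with `grad2` above, which equals the value of x.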

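2. Second-Order Derivatives

```python
import tensorflow as tf

# x, w, b are example values; the original snippet leaves them undefined
x, w, b = tf.constant(2.), tf.Variable(1.), tf.Variable(0.)
with tf.GradientTape() as t1:
    with tf.GradientTape() as t2:
        y = x * w + b
    # Taken inside t1 so that t1 records the first-order gradient
    dy_dw, dy_db = t2.gradient(y, [w, b])
dy2_dw2 = t1.gradient(dy_dw, w)  # second-order gradient
```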

3. Activation Functions

(1) tf.sigmoid()

```python
import tensorflow as tf

a = tf.linspace(-10., 10., 10)

with tf.GradientTape() as tape:
    tape.watch(a)
    y = tf.sigmoid(a)

grads = tape.gradient(y, [a])
print('a: ', a)
print('grads: ', grads)

# Output:
# a:  tf.Tensor(
# [-10.         -7.7777777  -5.5555553  -3.333333   -1.1111107   1.1111116
#    3.333334    5.5555563   7.7777786  10.       ], shape=(10,), dtype=float32)
# grads:  [<tf.Tensor: shape=(10,), dtype=float32, numpy=
# array([4.5395806e-05, 4.1859134e-04, 3.8362022e-03, 3.3258736e-02,
#        1.8632649e-01, 1.8632641e-01, 3.3258699e-02, 3.8362255e-03,
#        4.1854731e-04, 4.5416677e-05], dtype=float32)>]
```
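The gradients printed above follow the closed form σ'(a) = σ(a)(1 − σ(a)). The following plain-Python sketch (no TensorFlow; the function names are chosen here for illustration) evaluates that formula at one of the sample points so it can be compared with the tape's output.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def sigmoid_grad(a):
    """Closed-form derivative: sigma(a) * (1 - sigma(a))."""
    s = sigmoid(a)
    return s * (1.0 - s)


# Fifth sample point from the tf.linspace output above
print(sigmoid_grad(-1.1111107))
# The derivative reaches its maximum at a = 0
print(sigmoid_grad(0.0))
```

The first value should be close to the 1.8632649e-01 reported by TensorFlow, up to float32 rounding.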

(2) tf.tanh()

```python
import tensorflow as tf

a = tf.linspace(-10., 10., 10)

with tf.GradientTape() as tape:
    tape.watch(a)
    y = tf.tanh(a)

grads = tape.gradient(y, [a])
print('a: ', a)
print('grads: ', grads)

# Output:
# a:  tf.Tensor(
# [-10.         -7.7777777  -5.5555553  -3.333333   -1.1111107   1.1111116
#    3.333334    5.5555563   7.7777786  10.       ], shape=(10,), dtype=float32)
# grads:  [<tf.Tensor: shape=(10,), dtype=float32, numpy=
# array([0.0000000e+00, 8.3446486e-07, 5.9842168e-05, 5.0776950e-03,
#        3.5285267e-01, 3.5285199e-01, 5.0774571e-03, 5.9722963e-05,
#        5.9604633e-07, 0.0000000e+00], dtype=float32)>]
```
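The values above are consistent with the closed-form derivative of tanh, written out here for reference:

$$
\frac{d}{da}\tanh(a) = 1 - \tanh^2(a)
$$

At a = ±10, tanh(a) is so close to ±1 that it rounds to ±1 in float32, which would explain why the gradient at both ends is reported as exactly 0.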

(3) tf.nn.relu() / tf.nn.leaky_relu()

```python
import tensorflow as tf

a = tf.linspace(-10., 10., 10)

with tf.GradientTape() as tape:
    tape.watch(a)
    y = tf.nn.relu(a)

grads = tape.gradient(y, [a])
print('a: ', a)
print('grads: ', grads)

# Output:
# a:  tf.Tensor(
# [-10.         -7.7777777  -5.5555553  -3.333333   -1.1111107   1.1111116
#    3.333334    5.5555563   7.7777786  10.       ], shape=(10,), dtype=float32)
# grads:  [<tf.Tensor: shape=(10,), dtype=float32, numpy=array([0., 0., 0., 0., 0., 1., 1., 1., 1., 1.], dtype=float32)>]
```
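The heading mentions `tf.nn.leaky_relu()`, but the snippet above only exercises `tf.nn.relu()`. As a rough illustration of how the two gradients differ, here is a plain-Python sketch. The helper names are made up for this example, and `alpha=0.2` is assumed to match the default of `tf.nn.leaky_relu`.

```python
def relu_grad(a):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if a > 0 else 0.0


def leaky_relu_grad(a, alpha=0.2):
    """Gradient of leaky ReLU: 1 for positive inputs, alpha otherwise."""
    return 1.0 if a > 0 else alpha


# A few of the sample points used above
samples = [-10.0, -1.1111107, 1.1111116, 10.0]
print([relu_grad(a) for a in samples])
print([leaky_relu_grad(a) for a in samples])
```

With leaky ReLU, negative inputs keep a small nonzero gradient instead of being cut to zero.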

4. Loss Functions

(1) MSE

```python
import tensorflow as tf

x = tf.random.normal([2, 4])
w = tf.random.normal([4, 3])
b = tf.zeros([3])
y = tf.constant([2, 0])

with tf.GradientTape() as tape:
    tape.watch([w, b])
    prob = tf.nn.softmax(x @ w + b, axis=1)
    loss = tf.reduce_mean(tf.losses.MSE(tf.one_hot(y, depth=3), prob))

grads = tape.gradient(loss, [w, b])
print('grads[0]: ', grads[0])

# Output (x and w are random, so the values differ from run to run):
# grads[0]:  tf.Tensor(
# [[ 3.41495015e-02  7.12523609e-03 -4.12747338e-02]
#  [ 5.00929244e-02  6.04621917e-02 -1.10555105e-01]
#  [-9.84368889e-05 -5.46640791e-02  5.47625050e-02]
#  [-8.90391693e-02  1.92172211e-02  6.98219463e-02]], shape=(4, 3), dtype=float32)
```

(2) Cross Entropy

```python
import tensorflow as tf

x = tf.random.normal([2, 4])
w = tf.random.normal([4, 3])
b = tf.zeros([3])
y = tf.constant([2, 0])

with tf.GradientTape() as tape:
    tape.watch([w, b])
    logits = x @ w + b
    loss = tf.reduce_mean(tf.losses.categorical_crossentropy(tf.one_hot(y, depth=3), logits, from_logits=True))

grads = tape.gradient(loss, [w, b])
print('grads[1]: ', grads[1])

# Output (x and w are random, so the values differ from run to run):
# grads[1]:  tf.Tensor([-0.35595876  0.51454145 -0.15858266], shape=(3,), dtype=float32)
```
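For softmax followed by cross entropy, the gradient of the loss with respect to the logits is p − y (softmax probabilities minus the one-hot label), and the bias gradient is the batch mean of that quantity. Because both p and y sum to 1, such a gradient sums to 0, which is consistent with `grads[1]` above. The sketch below checks the p − y formula against a finite difference in plain Python; the logits and label are made up for illustration.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Cross entropy of softmax(logits) against an integer class label."""
    return -math.log(softmax(logits)[label])


logits = [0.5, -1.2, 2.0]  # hypothetical logits for one sample
label = 2

# Analytic gradient with respect to the logits: p - one_hot(label)
p = softmax(logits)
analytic = [p[i] - (1.0 if i == label else 0.0) for i in range(3)]

# Finite-difference estimate of the same gradient
eps = 1e-6
numeric = []
for i in range(3):
    up = list(logits)
    down = list(logits)
    up[i] += eps
    down[i] -= eps
    numeric.append((cross_entropy(up, label) - cross_entropy(down, label)) / (2 * eps))

print(analytic)
print(numeric)
```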

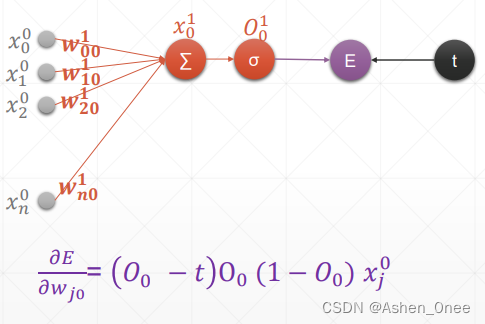

5. Single-Layer Perceptron Gradient

```python
import tensorflow as tf

x = tf.random.normal([1, 3])
w = tf.ones([3, 1])
b = tf.ones([1])
y = tf.constant([1])

with tf.GradientTape() as tape:
    tape.watch([w, b])
    # Despite the name, this is the sigmoid output, not a raw logit
    logits = tf.sigmoid(x @ w + b)
    loss = tf.reduce_mean(tf.losses.MSE(y, logits))

grads = tape.gradient(loss, [w, b])
print('w_grad: ', grads[0])
print('b_grad: ', grads[1])

# Output (x is random, so the values differ from run to run):
# w_grad:  tf.Tensor(
# [[ 0.48524138]
#  [ 0.33258936]
#  [-0.20803826]], shape=(3, 1), dtype=float32)
# b_grad:  tf.Tensor([-0.2610483], shape=(1,), dtype=float32)
```
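For reference, the gradients computed above can also be derived by hand. Writing $z = \sum_j x_j w_j + b$, $o = \sigma(z)$ and $L = (o - y)^2$ (the symbols $z$, $o$ and $L$ are introduced here and do not appear in the code), the chain rule gives:

$$
\frac{\partial L}{\partial w_j} = 2\,(o - y)\,o\,(1 - o)\,x_j,
\qquad
\frac{\partial L}{\partial b} = 2\,(o - y)\,o\,(1 - o)
$$

So each entry of `w_grad` should equal `b_grad` multiplied by the corresponding input $x_j$.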

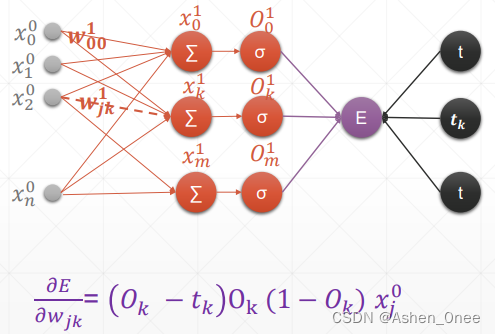

6. Multi-Layer Perceptron Gradient

```python
import tensorflow as tf

x = tf.random.normal([2, 4])
w = tf.random.normal([4, 3], dtype=tf.float32)
b = tf.zeros([3])
y = tf.constant([2, 0])

with tf.GradientTape() as tape:
    tape.watch([w, b])
    prob = tf.nn.softmax(x @ w + b, axis=1)
    loss = tf.reduce_mean(tf.losses.MSE(tf.one_hot(y, depth=3), prob))

grads = tape.gradient(loss, [w, b])
print('w_grad: ', grads[0])
print('b_grad: ', grads[1])

# Output (x and w are random, so the values differ from run to run):
# w_grad:  tf.Tensor(
# [[ 0.08066104  0.01278404 -0.09344502]
#  [-0.03609233 -0.00373706  0.03982937]
#  [ 0.05569387 -0.0013712  -0.05432263]
#  [-0.02967714  0.01885842  0.01081872]], shape=(4, 3), dtype=float32)
# b_grad:  tf.Tensor([ 0.02431668 -0.03734029  0.0130236 ], shape=(3,), dtype=float32)
```

- Chain rule:

```python
import tensorflow as tf

x = tf.constant(1.)
w1 = tf.constant(2.)
b1 = tf.constant(1.)
w2 = tf.constant(2.)
b2 = tf.constant(1.)

# persistent=True allows tape.gradient() to be called more than once
with tf.GradientTape(persistent=True) as tape:
    tape.watch([w1, b1, w2, b2])
    y1 = x * w1 + b1
    y2 = y1 * w2 + b2

dy2_dy1 = tape.gradient(y2, [y1])
dy1_dw1 = tape.gradient(y1, [w1])
dy2_dw1 = tape.gradient(y2, [w1])

# The product of the two partial derivatives should match dy2_dw1
print('dy2_dy1 * dy1_dw1: ', dy2_dy1[0] * dy1_dw1[0])
print('dy2_dw1: ', dy2_dw1[0])

# Output:
# dy2_dy1 * dy1_dw1:  tf.Tensor(2.0, shape=(), dtype=float32)
# dy2_dw1:  tf.Tensor(2.0, shape=(), dtype=float32)
```
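Written out for the values used above ($x = 1$, $w_1 = 2$, $w_2 = 2$), the chain rule reads:

$$
\frac{\partial y_2}{\partial w_1}
= \frac{\partial y_2}{\partial y_1} \cdot \frac{\partial y_1}{\partial w_1}
= w_2 \cdot x = 2 \cdot 1 = 2
$$

This matches both printed values.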

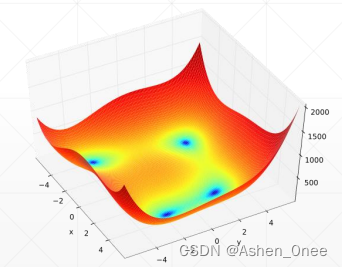

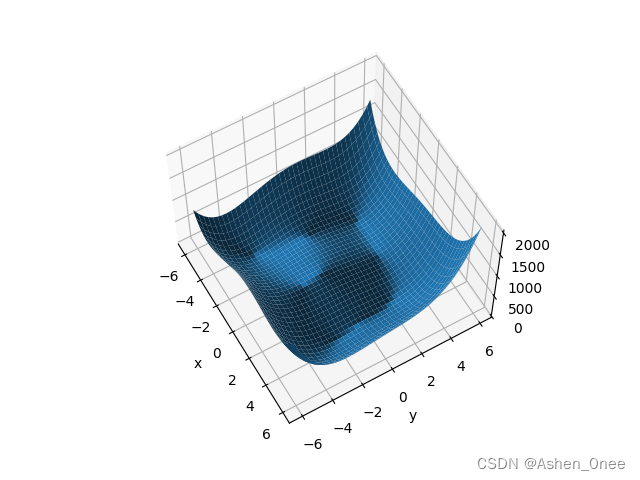

II. Himmelblau Function Optimization

$$
f(x, y) = (x^2 + y - 11)^2 + (x + y^2 - 7)^2
$$

```python
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf


def himmelblau(x):
    return (x[0]**2 + x[1] - 11)**2 + (x[0] + x[1]**2 - 7)**2


x = np.arange(-6, 6, 0.1)
y = np.arange(-6, 6, 0.1)
print('x, y range: ', x.shape, y.shape)
X, Y = np.meshgrid(x, y)
print('X, Y maps: ', X.shape, Y.shape)
Z = himmelblau([X, Y])

fig = plt.figure('himmelblau')
# fig.gca(projection='3d') is deprecated or removed in newer Matplotlib releases
ax = fig.add_subplot(111, projection='3d')
ax.plot_surface(X, Y, Z)
ax.view_init(60, -30)
ax.set_xlabel('x')
ax.set_ylabel('y')
plt.show()

# Gradient descent from the starting point (-4, 0) with learning rate 0.01
x = tf.constant([-4., 0.])

for step in range(200):
    with tf.GradientTape() as tape:
        tape.watch([x])
        y = himmelblau(x)

    grads = tape.gradient(y, [x])[0]
    x -= 0.01 * grads

    if y == 0:
        print('step={}, x={}, y={}'.format(step, x.numpy(), y.numpy()))

# Output:
# step=199, x=[-3.7793102 -3.283186 ], y=0.0
```
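The same descent can be reproduced without automatic differentiation by writing the partial derivatives out by hand. The sketch below is plain Python with the same starting point, learning rate and step count as the loop above; the function `himmelblau_grad` is an illustrative helper, not part of the original notes. It also checks the well-known minimum at (3, 2), where the function is exactly 0.

```python
def himmelblau(x, y):
    return (x**2 + y - 11)**2 + (x + y**2 - 7)**2


def himmelblau_grad(x, y):
    """Analytic partial derivatives of the Himmelblau function."""
    a = x**2 + y - 11
    b = x + y**2 - 7
    return 4 * x * a + 2 * b, 2 * a + 4 * y * b


# Same starting point, learning rate and step count as the loop above
x, y = -4.0, 0.0
for step in range(200):
    gx, gy = himmelblau_grad(x, y)
    x -= 0.01 * gx
    y -= 0.01 * gy

print('x={:.4f}, y={:.4f}, f={:.2e}'.format(x, y, himmelblau(x, y)))
print('f(3, 2) =', himmelblau(3.0, 2.0))
```

The final point should land close to the x=[-3.7793102 -3.283186] reported by the TensorFlow version. Other starting points may converge to a different one of the function's minima.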

III. Handwritten Digit Recognition in Practice

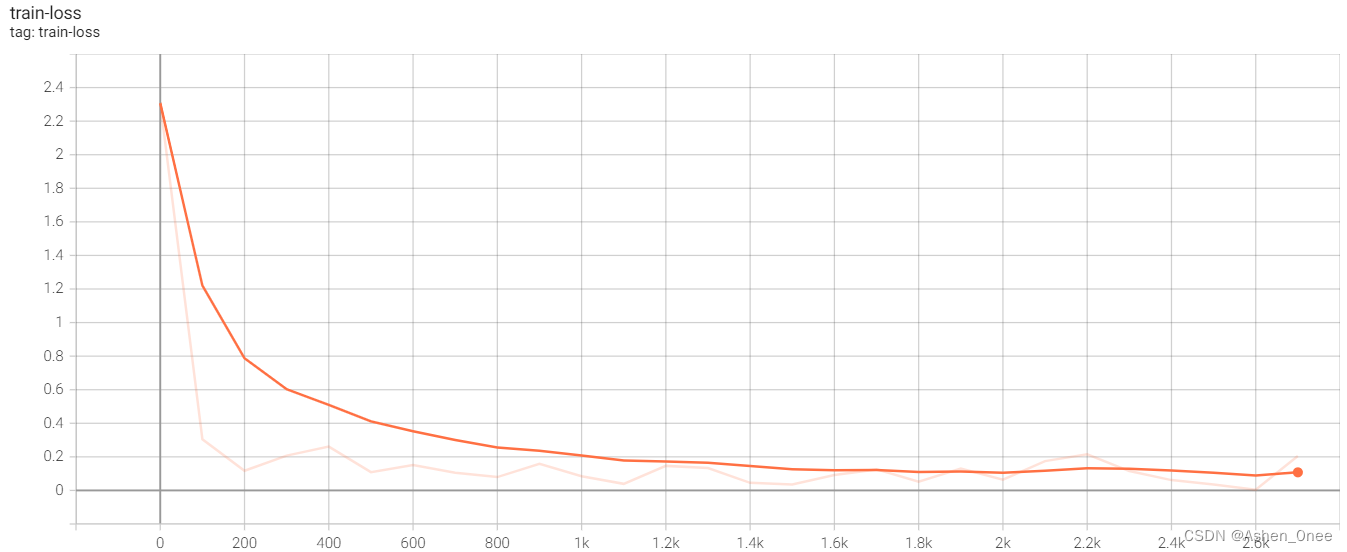

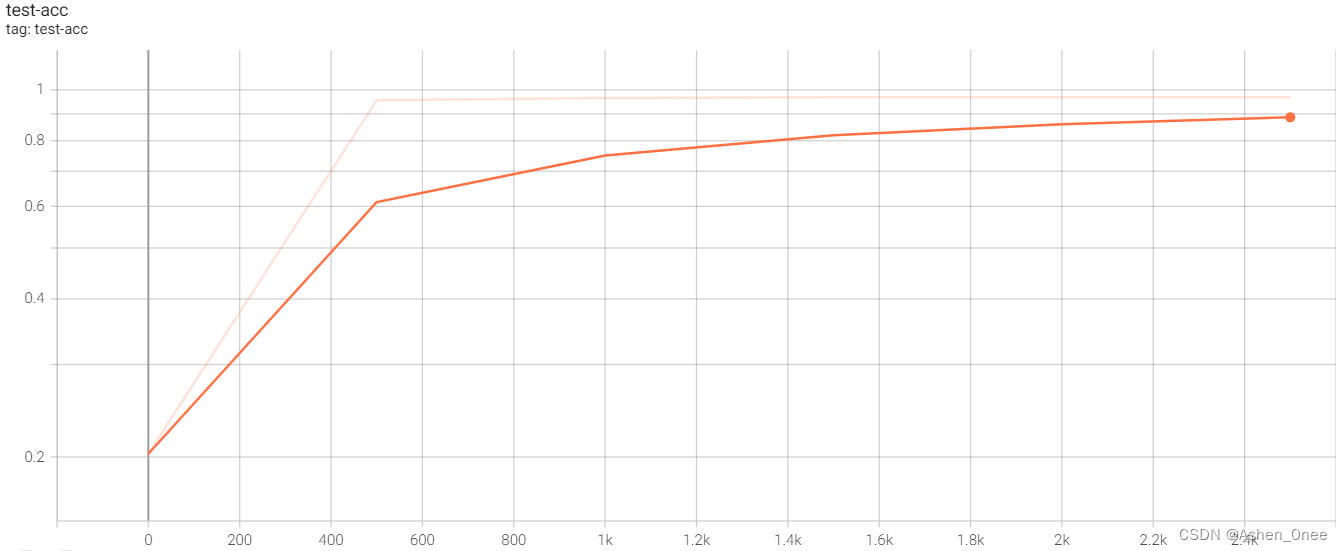

```python
import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential


def preprocess(x, y):
    x = tf.cast(x, dtype=tf.float32) / 255.
    y = tf.cast(y, dtype=tf.int32)
    return x, y


# Note: this loads Fashion-MNIST, which has the same shapes as MNIST
(x, y), (x_test, y_test) = datasets.fashion_mnist.load_data()
print(x.shape, y.shape)

# Build the datasets
batchsz = 128
db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(preprocess).shuffle(batchsz).batch(batchsz)

db_test = tf.data.Dataset.from_tensor_slices((x_test, y_test))
db_test = db_test.map(preprocess).shuffle(batchsz).batch(batchsz)

# Iterator
db_iter = iter(db)
sample = next(db_iter)
print('batch: ', sample[0].shape, sample[1].shape)

model = Sequential([
    layers.Dense(256, activation=tf.nn.relu),  # [b, 784] ==> [b, 256]
    layers.Dense(128, activation=tf.nn.relu),  # [b, 256] ==> [b, 128]
    layers.Dense(64, activation=tf.nn.relu),   # [b, 128] ==> [b, 64]
    layers.Dense(32, activation=tf.nn.relu),   # [b, 64] ==> [b, 32]
    layers.Dense(10)                           # [b, 32] ==> [b, 10], 330 = 32*10 + 10
])
model.build(input_shape=[None, 28*28])
model.summary()

# Optimizer: w = w - lr*grad
optimizer = optimizers.Adam(learning_rate=1e-3)


def main():
    for epoch in range(30):
        for step, (x, y) in enumerate(db):
            # x: [b, 28, 28]
            # y: [b]
            x = tf.reshape(x, [-1, 28*28])

            with tf.GradientTape() as tape:
                # [b, 784] ==> [b, 10]
                logits = model(x)
                y_onehot = tf.one_hot(y, depth=10)
                # MSE is computed only for logging; training uses cross entropy
                loss = tf.reduce_mean(tf.losses.MSE(y_onehot, logits))
                loss2 = tf.reduce_mean(tf.losses.categorical_crossentropy(y_onehot, logits, from_logits=True))

            grads = tape.gradient(loss2, model.trainable_variables)
            optimizer.apply_gradients(zip(grads, model.trainable_variables))

            if step % 100 == 0:
                print(epoch, step, 'loss: ', float(loss2), float(loss))

        # Test after every epoch
        total_correct = 0
        total_num = 0
        for x, y in db_test:
            # x: [b, 28, 28]
            # y: [b]
            x = tf.reshape(x, [-1, 28 * 28])
            # [b, 10]
            logits = model(x)
            # logits ==> prob, [b, 10]
            prob = tf.nn.softmax(logits, axis=1)
            # [b, 10] ==> [b], int64
            pred = tf.argmax(prob, axis=1)
            pred = tf.cast(pred, dtype=tf.int32)
            # pred: [b]
            # y: [b]
            # correct: [b], True: equal, False: not equal
            correct = tf.equal(pred, y)
            correct = tf.reduce_sum(tf.cast(correct, dtype=tf.int32))

            total_correct += int(correct)  # Tensor ==> Python int
            total_num += x.shape[0]

        acc = total_correct / total_num
        print(epoch, 'test acc: ', acc)


if __name__ == '__main__':
    main()

# Output:
# ...
# 29 test acc:  0.8871
```
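In the test loop above, `tf.nn.softmax` is applied before `tf.argmax`. Since softmax preserves the ordering of the logits, the predicted class is the same either way; softmax is only needed when the probabilities themselves are of interest. The plain-Python sketch below illustrates this and the accuracy computation with made-up logits and labels.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical logits for a batch of three samples and four classes
batch_logits = [
    [1.2, -0.3, 0.8, 2.5],
    [-1.0, 0.1, 0.0, -2.2],
    [3.3, 3.1, -0.5, 0.2],
]
labels = [3, 1, 1]

pred_from_logits = [argmax(row) for row in batch_logits]
pred_from_probs = [argmax(softmax(row)) for row in batch_logits]

# Accuracy: number of matching predictions divided by the batch size
correct = sum(int(p == t) for p, t in zip(pred_from_probs, labels))
print(pred_from_logits, pred_from_probs, correct / len(labels))
```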

IV. TensorBoard Visualization

1. Step 1: run the listener

```
tensorboard --logdir logs

D:\learning_materials\jupyternotebook_projects\my_TF>tensorboard --logdir logs
Serving TensorBoard on localhost; to expose to the network, use a proxy or pass --bind_all
TensorBoard 2.9.1 at http://localhost:6006/ (Press CTRL+C to quit)
```

2. Step 2: build the summary writer

```python
import datetime
import tensorflow as tf

current_time = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
log_dir = 'logs/' + current_time
summary_writer = tf.summary.create_file_writer(log_dir)
```

3. Step 3: feed scalar / single image / multi images

```python
# loss, train_accuracy and epoch come from the surrounding training loop
with summary_writer.as_default():
    tf.summary.scalar('loss', float(loss), step=epoch)
    tf.summary.scalar('accuracy', float(train_accuracy), step=epoch)
```

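V. Keras High-Level API

Keras != tf.keras: the standalone Keras package and the tf.keras module that ships with TensorFlow are separate things. The code in these notes uses tf.keras.

1. Meter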

- Step1: Build a meter

```python
from tensorflow.keras import metrics

acc_meter = metrics.Accuracy()
loss_meter = metrics.Mean()
```

- Step2: Update data

```python
loss_meter.update_state(loss)
acc_meter.update_state(y, pred)
```

- Step3: Get average data

```python
print(step, 'loss: ', loss_meter.result().numpy())
print(step, 'Acc: ', total_correct/total, acc_meter.result().numpy())
```

- Step4: Clear buffer

```python
if step % 100 == 0:
    print(step, 'loss: ', loss_meter.result().numpy())
    # Newer Keras versions name this method reset_state()
    loss_meter.reset_states()
```
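The four steps above (build, update, read, clear) can be pictured with a toy running-mean meter. The class below is a plain-Python stand-in written for illustration only; it is not how `metrics.Mean` is implemented in Keras, and the name `MeanMeter` is hypothetical.

```python
class MeanMeter:
    """Toy running-mean meter; a stand-in for illustration, not Keras code."""

    def __init__(self):
        self.total = 0.0
        self.count = 0

    def update_state(self, value):
        self.total += float(value)
        self.count += 1

    def result(self):
        return self.total / self.count if self.count else 0.0

    def reset_states(self):
        self.total = 0.0
        self.count = 0


meter = MeanMeter()
for loss in [1.0, 2.0, 3.0]:
    meter.update_state(loss)
first_window = meter.result()   # mean of 1, 2, 3

meter.reset_states()            # start a fresh averaging window
meter.update_state(10.0)
second_window = meter.result()  # only sees the value added after the reset
print(first_window, second_window)
```

Clearing the buffer after each report is what makes the printed loss an average over the most recent window rather than over the whole run.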

2. Compile & fit

- Compute the loss and optimize:

```python
network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
```

- Training loop:

```python
network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=10)
```

- Evaluation:

```python
network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=10, validation_data=ds_val, validation_steps=2)
```

- Test:

```python
network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=10, validation_data=ds_val, validation_steps=2)

network.evaluate(ds_val)
```

- Predict:

```python
sample = next(iter(ds_val))
x = sample[0]
y = sample[1]
pred = network.predict(x)

# Convert one-hot labels and predicted scores to class indices
y = tf.argmax(y, axis=1)
pred = tf.argmax(pred, axis=1)
```

3. Custom Networks

- keras.Sequential:

```python
network = keras.Sequential([
    keras.layers.Dense(2, activation='relu'),
    keras.layers.Dense(2, activation='relu'),
    keras.layers.Dense(2)
])
network.build(input_shape=[None, 4])
network.summary()
```
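The parameter counts reported by `summary()` can be worked out by hand: a dense layer with bias has `in_dim * out_dim + out_dim` parameters, as in the `330 = 32*10 + 10` comment in the handwritten digit recognition code. A small plain-Python sketch, with helper names chosen for this example:

```python
def dense_params(in_dim, out_dim, use_bias=True):
    """Parameters of a fully connected layer: weights plus optional biases."""
    return in_dim * out_dim + (out_dim if use_bias else 0)


def mlp_params(dims):
    """Total parameters of dense layers with sizes dims[0] -> dims[1] -> ..."""
    return sum(dense_params(i, o) for i, o in zip(dims[:-1], dims[1:]))


small = mlp_params([4, 2, 2, 2])                 # the Sequential model above
large = mlp_params([784, 256, 128, 64, 32, 10])  # the five-layer digit classifier
print(small, large)
```

This gives 22 parameters for the small model (10 + 6 + 6) and 244522 for the five-layer network.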

- Custom MyDense and MyModel:

```python
# MyDense is the custom layer defined in the CIFAR10 section below
class MyModel(keras.Model):

    def __init__(self):
        super(MyModel, self).__init__()
        self.fc1 = MyDense(28*28, 256)
        self.fc2 = MyDense(256, 128)
        self.fc3 = MyDense(128, 64)
        self.fc4 = MyDense(64, 32)
        self.fc5 = MyDense(32, 10)

    def call(self, inputs, training=None):
        # Each layer consumes the previous layer's output, not the raw inputs
        x = self.fc1(inputs)
        x = tf.nn.relu(x)
        x = self.fc2(x)
        x = tf.nn.relu(x)
        x = self.fc3(x)
        x = tf.nn.relu(x)
        x = self.fc4(x)
        x = tf.nn.relu(x)
        x = self.fc5(x)
        return x
```

4. Saving and Loading Models

- save / load weights:

```python
model.save_weights('')

# Restore the model
model = create_model()
model.load_weights('')

loss, acc = model.evaluate(test_images, test_labels)
```

- save / load entire models:

```python
# Use one name (network) consistently for the saved and the reloaded model
network.save('model.h5')
print('saved')
del network

print('loading')
network = tf.keras.models.load_model('model.h5')
network.evaluate(x_val, y_val)
```

5. CIFAR10 in Practice

```python
import os
# Set before importing TensorFlow so that the log level takes effect
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics


def preprocess(x, y):
    # [0~255] => [-1~1]
    x = 2 * tf.cast(x, dtype=tf.float32) / 255. - 1.
    y = tf.cast(y, dtype=tf.int32)
    return x, y


batchsz = 128
# [50k, 32, 32, 3], [10k, 1]
(x, y), (x_val, y_val) = datasets.cifar10.load_data()
y = tf.squeeze(y)
y_val = tf.squeeze(y_val)
y = tf.one_hot(y, depth=10)          # [50k, 10]
y_val = tf.one_hot(y_val, depth=10)  # [10k, 10]
print('datasets:', x.shape, y.shape, x_val.shape, y_val.shape, x.min(), x.max())

train_db = tf.data.Dataset.from_tensor_slices((x, y))
train_db = train_db.map(preprocess).shuffle(10000).batch(batchsz)
test_db = tf.data.Dataset.from_tensor_slices((x_val, y_val))
test_db = test_db.map(preprocess).batch(batchsz)

sample = next(iter(train_db))
print('batch:', sample[0].shape, sample[1].shape)


class MyDense(layers.Layer):
    # to replace standard layers.Dense()
    def __init__(self, inp_dim, outp_dim):
        super(MyDense, self).__init__()

        # Keyword arguments avoid depending on the positional order of add_weight
        self.kernel = self.add_weight(name='w', shape=[inp_dim, outp_dim])
        # self.bias = self.add_variable('b', [outp_dim])

    def call(self, inputs, training=None):
        print('input_shape: ', inputs.shape)
        print('kernel_shape', self.kernel.shape)
        x = inputs @ self.kernel
        return x


class MyNetwork(keras.Model):

    def __init__(self):
        super(MyNetwork, self).__init__()

        self.fc1 = MyDense(32*32*3, 256)
        self.fc2 = MyDense(256, 128)
        self.fc3 = MyDense(128, 64)
        self.fc4 = MyDense(64, 32)
        self.fc5 = MyDense(32, 10)

    def call(self, inputs, training=None):
        """
        :param inputs: [b, 32, 32, 3]
        :param training:
        :return:
        """
        x = tf.reshape(inputs, [-1, 32*32*3])
        # [b, 32*32*3] => [b, 256]
        x = self.fc1(x)
        x = tf.nn.relu(x)
        # [b, 256] => [b, 128]
        x = self.fc2(x)
        x = tf.nn.relu(x)
        # [b, 128] => [b, 64]
        x = self.fc3(x)
        x = tf.nn.relu(x)
        # [b, 64] => [b, 32]
        x = self.fc4(x)
        x = tf.nn.relu(x)
        # [b, 32] => [b, 10]
        x = self.fc5(x)

        return x


network = MyNetwork()
network.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
network.fit(train_db, epochs=15, validation_data=test_db, validation_freq=1)

network.evaluate(test_db)
network.save_weights('ckpt/weights.ckpt')
del network
print('saved to ckpt/weights.ckpt')

# Rebuild the network and restore the saved weights
network = MyNetwork()
network.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
network.load_weights('ckpt/weights.ckpt')
print('loaded weights from file.')
network.evaluate(test_db)

# Output:
# 79/79 [==============================] - 0s 1ms/step - loss: 1.6819 - accuracy: 0.5243
```


- Original article: https://blog.csdn.net/Ashen_0nee/article/details/126290763