-

激光雷达点云语义分割论文阅读小结

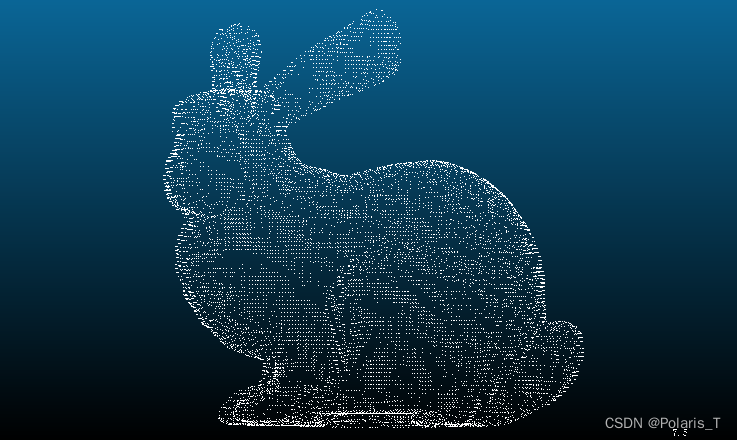

3D点云数据的概念

点云数据一般是由激光雷达等3D扫描设备获取的空间若干点的信息,一般包括(X,Y,Z)位置信息、RGB颜色信息和强度信息等,是一种多维度的复杂数据集合。相比于2D图像来说,3D点云数据可以提供丰富的几何、形状和尺度信息,不易受光照强度变化和其它物体遮挡的影响。

3D点云的获取方式

点云可以通过四种主要技术获得:

- 根据图像衍生而得,如通过双目相机

- 基于光探测距离和测距系统,如LiDAR

- 基于RGBD相机获取点云

- 利用Synthetic Aperture Radar (SAR)系统获取

基于不同原理获取的点云数据,其数据的表示特征和应用的范围也各不相同。

激光雷达点云数据

激光雷达(LiDAR)点云数据,是由三维激光雷达设备扫描得到的空间点的数据集,每一个点都包含了X、Y、Z三维坐标信息,有的还包含颜色信息、反射强度信息、回波次数信息等。

- 数据特点:稀疏性、无序性、空间分布不均匀、信息表示的有限性

激光雷达数据的获取原理:由车载激光扫描系统向周围发射激光信号,然后收集反射的激光信号,再通过数据采集、组合导航、点云解算,计算出这些点的准确空间信息。

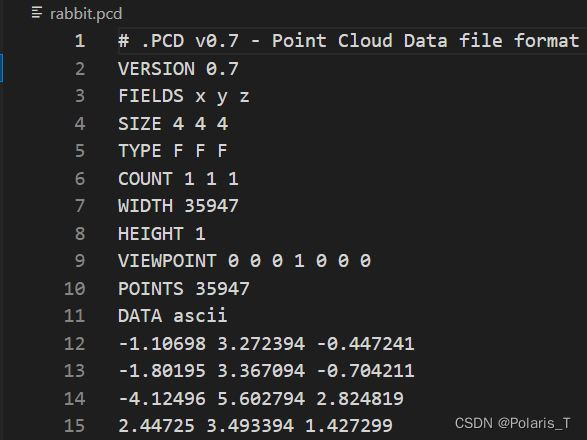

3D点云数据格式

-

pcd点云格式是pcl库种常常使用的点云文件格式。一个pcd文件中通常由两部分组成:分别是文件说明和点云数据

-

ply文件格式是斯坦福大学开发的一套三维mesh模型数据格式,图形学领域内很多著名的模型数据,比如Stanford的三维扫描数据库,Geogia Tech的大型几何模型库,最初的模型都是基于这个格式的。

-

其他:bin,txt格式

3D点云语义分割

- 给点云中的每个点赋予特定的语义标签

- 能够准确描述空间中物体的类型,使我们对空间中的物体有更加细致的了解

点云数据处理方式

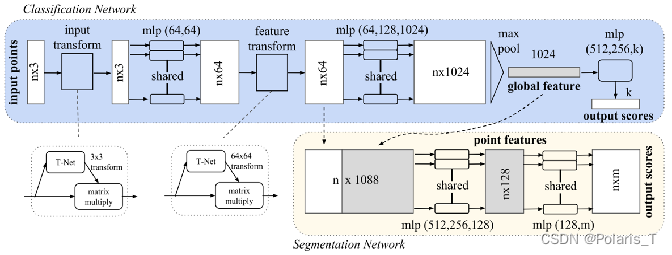

- 基于点的处理:PointNet、PointNet++。

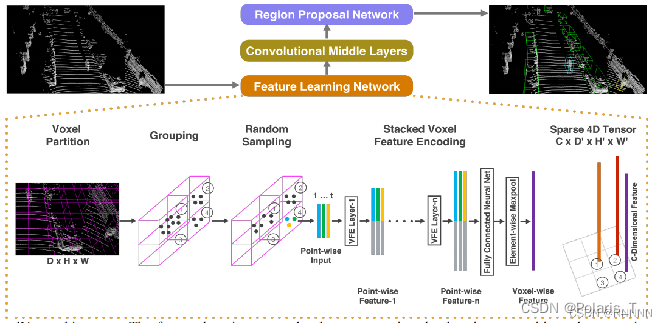

- 基于体素的处理:VoxelNet、FCPN、LatticeNet。Voxel化、Grouping后得到的数据形式为(N, T, C),N为voxel个数,T为每个voxel中的点数,C为每个点的输入特征个数

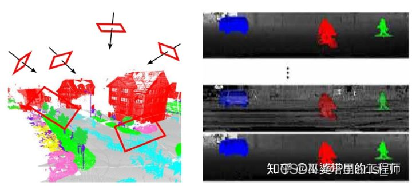

- 基于投影的处理:SqueezeSeg、RangeNet++。将3D点云投影到不同的二维平面上/投影到RangeView上。RangeView是一种非常紧致的数据表示,可有效降低计算量,对自动驾驶的应用而言非常重要

激光雷达点云分割——SqueezeSeg系列算法

SqueezeSeg V1 (ICRA 2018)

https://arxiv.org/pdf/1710.07368.pdf

由于点云的稀疏性以及不规律性,一般的2D CNN无法直接处理,因此需要事先转换成CNN-friendly的数据结构。SqueezeSeg使用球面投影,将点云转换为前视图

采用KITTI64线激光雷达数据,因此前视图H=64,同时受到数据集标注的影响,只考虑90°的前视角范围并划分为512个单元格,因此前视图W=512;每个点有5个feature:点的三维坐标(x,y,z)、反射强度I和点到视角中心的距离r,所以处理后的输入图像尺寸为64x512x5。- 主体网络结构:SqueezeNet

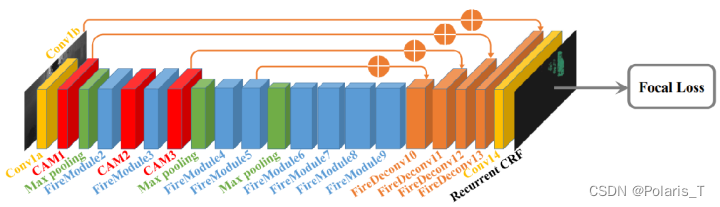

- 经典的Encoder-Decoder架构

- 经典的Skip-Connection方法(U-Net)

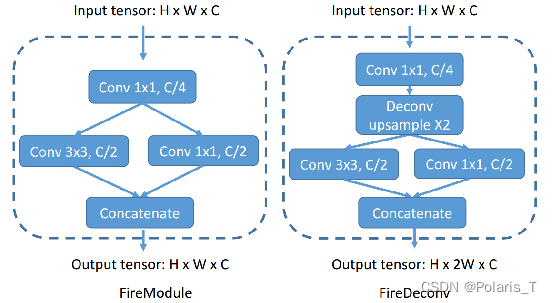

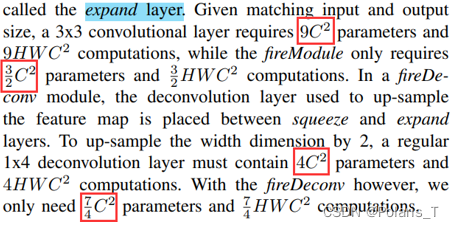

- 亮点:FireConv&FireDeconv Block | Recurrent CRF → Refine Target Image

PyTorch复现SqueezeNet主体部分

import torch import torch.nn as nn import torch.nn.functional as F class Conv(nn.Module): def __init__(self, inputs, outputs, kernel_size=3, stride=(1,2), padding=1): super(Conv, self).__init__() self.conv = nn.Conv2d(inputs, outputs, kernel_size=kernel_size, stride=stride, padding=padding) def forward(self, x):a return F.relu(self.conv(x)) class MaxPool(nn.Module): def __init__(self, kernel_size=3, stride=(1,2), padding=(1,0)): super(MaxPool, self).__init__() self.pool = nn.MaxPool2d(kernel_size=kernel_size, stride=stride, padding=padding, ceil_mode=True) def forward(self,x): return self.pool(x) class Fire(nn.Module): def __init__(self, inputs, out_channels1, out_channelsex1x1, out_channelsex3x3): super(Fire, self).__init__() self.conv1x1 = Conv(inputs, out_channels1, kernel_size=1, stride=1, padding=0) # 64 --> 16 self.ex1x1 = Conv(out_channels1, out_channelsex1x1, kernel_size=1, stride=1, padding=0) # 16 --> 64 self.ex3x3 = Conv(out_channels1, out_channelsex3x3, kernel_size=3, stride=1, padding=1) # 16 --> 64 def forward(self,x): return torch.cat([self.ex1x1(self.conv1x1(x)), self.ex3x3(self.conv1x1(x))], 1) # 合并为128 class Deconv(nn.Module): def __init__(self, inputs, out_channels, kernel_size, stride, padding=0): super(Deconv, self).__init__() self.deconv = nn.ConvTranspose2d(inputs, out_channels, kernel_size=kernel_size, stride=stride, padding=padding) def forward(self, x): return F.relu(self.deconv(x)) class FireDeconv(nn.Module): # W --> W x 2, H不变 def __init__(self, inputs, out_channels, out_channelsex1x1, out_channelsex3x3): super(FireDeconv, self).__init__() self.conv1x1 = Conv(inputs, out_channels, 1, 1, 0) # FireDeconv(512, 64, 128, 128) self.deconv = Deconv(out_channels, out_channels, [1,4], [1,2], [0,1]) self.ex1x1 = Conv(out_channels, out_channelsex1x1, 1, 1, 0) self.ex3x3 = Conv(out_channels, out_channelsex3x3, 3, 1, 1) def forward(self,x): x = self.conv1x1(x) x = self.deconv(x) return torch.cat([self.ex1x1(x), self.ex3x3(x)], 1) class SqueezeSeg( nn.Module ): def __init__(self): super(SqueezeSeg, self).__init__() # encoder self.conv1 = Conv(5, 64, 3, (1,2), 1) # 1, 64, 64, 256 W方向步长为2,H方向步长为1 self.conv1_skip = Conv(5, 64, 1, 1, 0) # 第一个跳跃连接 self.pool1 = MaxPool(3, (1,2), (1,0)) # 1, 64, 64, 128 只在W方向下采样2倍 self.fire2 = Fire(64, 16, 64, 64) # 1, 128, 64, 128 self.fire3 = Fire(128, 16, 64, 64) # 1, 128, 64, 128 self.pool3 = MaxPool(3, (1,2), (1,0)) # 1, 128, 64, 64 self.fire4 = Fire(128, 32, 128, 128) # 1, 256, 64, 64 self.fire5 = Fire(256, 32, 128, 128) # 1, 256, 64, 64 self.pool5 = MaxPool(3, (1,2), (1,0)) # 1, 256, 64, 32 self.fire6 = Fire(256, 48, 192, 192) # 1, 384, 64, 32 self.fire7 = Fire(384, 48, 192, 192) # 1, 384, 64, 32 self.fire8 = Fire(384, 64, 256, 256) # 1, 512, 64, 32 self.fire9 = Fire(512, 64, 256, 256) # 1, 512, 64, 32 # decoder self.firedeconv10 = FireDeconv(512, 64, 128, 128) # 1, 256, 64, 64 self.firedeconv11 = FireDeconv(256, 32, 64, 64) # 1, 128, 64, 128 self.firedeconv12 = FireDeconv(128, 16, 32, 32) # 1, 64, 64, 256 self.firedeconv13 = FireDeconv(64, 16, 32, 32) # 1, 64, 64, 512 self.drop = nn.Dropout2d() self.conv14 = nn.Conv2d(64, 4, kernel_size=3, stride=1, padding=1) # 1, 4, 64, 512 # self.bf = BilateralFilter(mc, stride=1, padding=(1,2)) # self.rc = RecurrentCRF(mc, stride=1, padding=(1,2)) def forward(self, x): # encoder out_c1 = self.conv1(x) out = self.pool1(out_c1) out_f3 = self.fire3( self.fire2(out) ) out = self.pool3(out_f3) out_f5 = self.fire5( self.fire4(out) ) out = self.pool5(out_f5) out = self.fire9( self.fire8( self.fire7( self.fire6(out) ) ) ) # decoder out = torch.add(self.firedeconv10(out), out_f5) out = torch.add(self.firedeconv11(out), out_f3) out = torch.add(self.firedeconv12(out), out_c1) out = self.drop( torch.add(self.firedeconv13(out), self.conv1_skip(x)) ) out = self.conv14(out) # bf_w = self.bf(x[:, :3, :, :]) # out = self.rc(out, lidar_mask, bf_w) return out if __name__ == "__main__": x = torch.randn(1, 5, 64, 512) # 规整为(B, C, H, W)形式 print(x.shape) conv1 = Conv(5, 64, 3, (1,2), 1) conv1_skip = Conv(5, 64, 1, 1, 0) pool1 = MaxPool(3, (1,2), (1,0)) fire2 = Fire(64, 16, 64, 64) fire3 = Fire(128, 16, 64, 64) pool3 = MaxPool(3, (1,2), (1,0)) fire4 = Fire(128, 32, 128, 128) fire5 = Fire(256, 32, 128, 128) pool5 = MaxPool(3, (1,2), (1,0)) fire6 = Fire(256, 48, 192, 192) fire7 = Fire(384, 48, 192, 192) fire8 = Fire(384, 64, 256, 256) fire9 = Fire(512, 64, 256, 256) # decoder firedeconv10 = FireDeconv(512, 64, 128, 128) firedeconv11 = FireDeconv(256, 32, 64, 64) firedeconv12 = FireDeconv(128, 16, 32, 32) firedeconv13 = FireDeconv(64, 16, 32, 32) drop = nn.Dropout2d() convdeconv14 = nn.Conv2d(64, 4, kernel_size=3, stride=1, padding=1) # 输出通道数为4 for layer in [conv1, pool1, fire2, fire3, pool3, fire4, fire5, pool5, fire6, fire7, fire8, fire9, firedeconv10, firedeconv11, firedeconv12, firedeconv13, convdeconv14]: x = layer(x) print(x.shape) # 输出 # torch.Size([1, 5, 64, 512]) # torch.Size([1, 64, 64, 256]) # torch.Size([1, 64, 64, 128]) # torch.Size([1, 128, 64, 128]) # torch.Size([1, 128, 64, 128]) # torch.Size([1, 128, 64, 64]) # torch.Size([1, 256, 64, 64]) # torch.Size([1, 256, 64, 64]) # torch.Size([1, 256, 64, 32]) # torch.Size([1, 384, 64, 32]) # torch.Size([1, 384, 64, 32]) # torch.Size([1, 512, 64, 32]) # torch.Size([1, 512, 64, 32]) # torch.Size([1, 256, 64, 64]) # torch.Size([1, 128, 64, 128]) # torch.Size([1, 64, 64, 256]) # torch.Size([1, 64, 64, 512]) # torch.Size([1, 4, 64, 512])- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 亮点:使用Recurrent CRF进行分割校准

原因:下采样会导致低层细节信息丢失,导致最终的分割结果中出现边界模糊的现象

想法:使在原始空间中相距较远的两点在对应的输出图中也具有较远的距离,进而优化边界模糊等问题

改进思路:(1)将手动设定的高斯核超参数改为由网络学习得到 (2)采用空洞卷积,在增大感受野的同时不丢失细节信息(PointSeg)

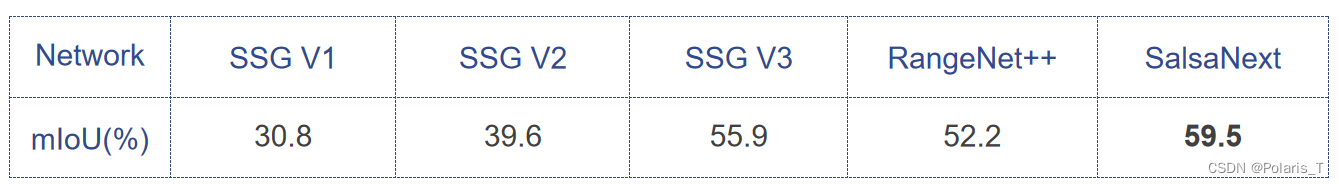

实验结果:

SqueezeSeg V2 (ICRA 2019)

https://arxiv.org/pdf/1809.08495v1.pdf

整体网络结构:

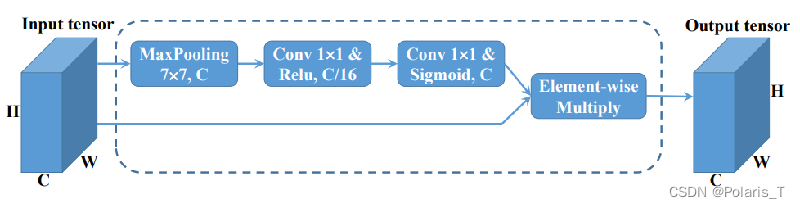

- 提出CAM结构,在扩大感受野的同时避免drop noise

drop noise:现实世界的激光雷达扫描仪会因为各种因素而使得扫描出的点云数据中存在噪点,而这些噪点将对低层特征的提取造成危害。

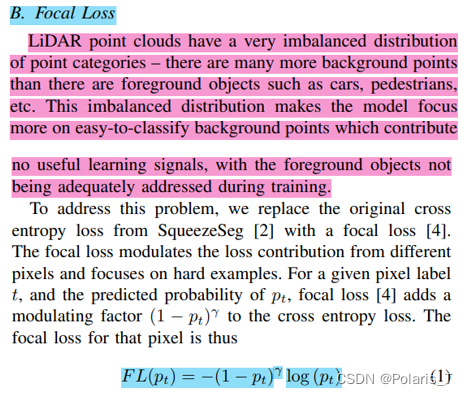

- 提出Focal loss,解决点类别分布不平衡问题

不难看出,若一个点在某类上的预测置信度较高,如接近于1,则该点在该类的惩罚项较小(不关注易学类);反正,若一个点在某类上的预测置信度较小,如接近于0,则该点在该类的惩罚项较大(关注难学类)。

- 提出Geodesic Correlation Alignment,解决域迁移问题

在每个训练开始时,送入一个batch的有标签合成点云数据,再送入一个batch的无标签真实世界点云数据,然后这两个batch各自过一遍主干网络,输出预测结果。对于合成数据,由于我们手上有它的ground truth,因此可以计算出Focal Loss;再将合成数据的预测结果与真实数据的预测结果之间求一个测地线损失。那么一方面focal loss关注于能否正确预测,Geodesic loss关注于域迁移(减小两个域数据间的discrepancies)。

实验结果

“Together with other improvements such as focal loss, batch normalization and a LiDAR mask channel, SqueezeSegV2 sees accuracy improvements of 6.0% to 8.6% in various pixel categories over the original SqueezeSeg.”(论文中未给出检测速度说明)

SqueezeSeg V3 (ECCV 2020)

https://arxiv.org/pdf/2004.01803.pdf

“On the SemanticKITTI benchmark, SqueezeSegV3 out performs all previously published methods by at least 3.7 mIoU with comparable inference speed, demonstrating the effectiveness of spatially-adaptive convolution.”

整体网络结构

亮点:- 提出SAC结构,有效处理雷达图像特征空间分布不均匀的情况,并且能提升计算效率

- 提出multi-layer cross entropy loss,联合多层输出进行梯度反传、优化效率更高

- SAC模块:自适应卷积可以根据输入和图像中的位置来改变权重

实验结果

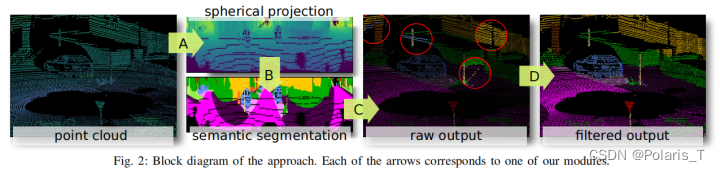

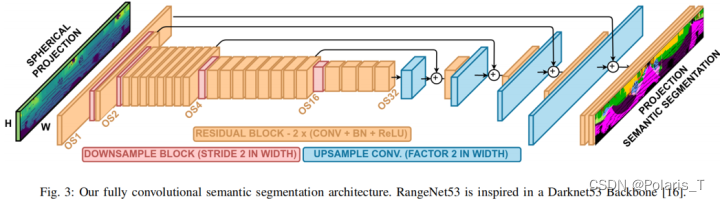

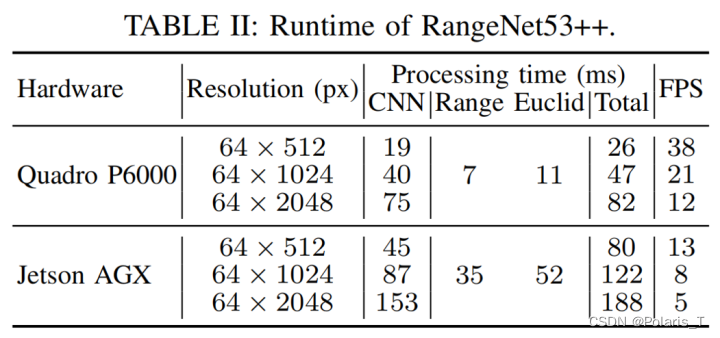

RangeNet++ (IROS 2019)

贡献:

- 准确地对激光雷达点云进行语义分割,显著超越了现有技术(当时的SOTA)

- 为完整的原始点云推断语义标签,无论 CNN 中使用的离散化级别如何,都避免丢弃点

- 在可轻松安装在机器人或车辆中的嵌入式计算机上,并以 Velodyne 扫描仪的帧速率工作

算法步骤:

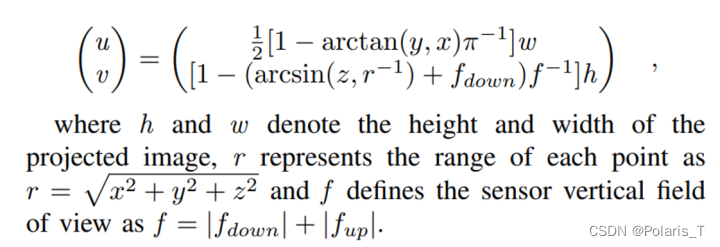

Step1:球映射(Spherical projection)

Step2:语义分割(Segmentation)

Step3:点云重建(Point cloud reconstruction)

Step4:点云后处理(Post-processing)

网络结构

在训练期间,该网络使用随机梯度下降和加权交叉熵损失进行端到端优化:

点云重建 (Point Cloud Reconstruction)做法:- 将 2D range image 图片语义分割的结果映射回每个3D点

- 2D range image上的一个(u,v)点, 可能对应多个实际的3D点。

- 具体操作是用之前存下来的所有3D点对应的2D坐标,一对多地进行重映射

点云后处理 (Post-processing)做法: - 在2D range image上,定义一个大小为(S,S)的窗口,分别以每个2D点作为窗口中心点,用该窗口取出其邻居点。im2col后,可以得到每个点的邻居点,组成一个矩阵,尺寸为(S^2,HW),每列即为对应点的所有邻居点的索引

- 通过3D点到2D点的对应关系,还原出每个3D点的在2D图片上的邻居点,得出一个矩阵,尺寸为(S^2,N)

- 从某个点的所有邻居点中,用KNN算法找出距离跟目标点最近的前k个点作为邻居点(距离定义为两个点的range差的绝对值,而非3D距离,提高了运算效率)

- 用一个距离阈值(cutoff threshold)对k个邻居点进行过滤,去掉距离大于阈值的邻居点

- 对于剩下的邻居点集,哪个类的点数比较多,目标点就属于哪个类

实验结果

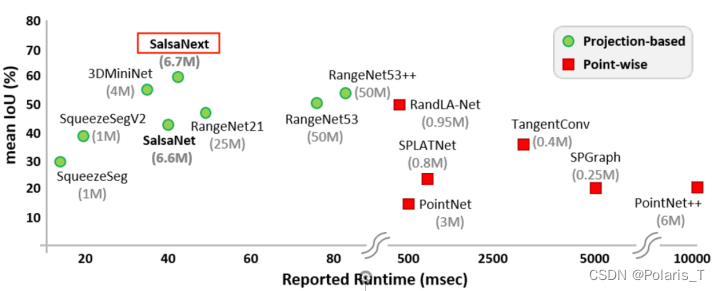

SalsaNext (IROS 2020)

类比于RangeNet++:二者都是先构建range image,然后利用特定的卷积网络进行分割,再将2D分割结果映射回3D点云中,最后用KNN优化分割结果。

点云数据处理:SalsaNext中对于点云数据的处理采用方式和RangeNet++相同,都为使用 range image作为输入。比较特殊的是这里采用360°的数据。

网络架构

类比于SalsaNet:

- SalsaNet使用四通道的鸟瞰图作为输入,而在SalsaNext中,输入换为了RV图,通过将无结构的3D点云进行球面投影生成RV图来作为卷积的输入,因此输入为5通道(x,y,z,i,r)

- 增加了空洞卷积的部分,在保持参数量不增加的同时实现了感受野的增大

- 在encoder和decoder之间加了dropout层来增加网络的表现

- 将SalsaNet中的最大池化换成了平均池化

- SalsaNext将网络换为了贝叶斯网络,将权重换为了一个分布

- 在decoder的部分,SalsaNext将SalsaNet的转置卷积改为pixel shuffle。通过跨通道的像素移动,既起到了decoder的作用,也减小了时间开销(H×W×Cr^2)−>(Hr×Wr×C)

实验结果

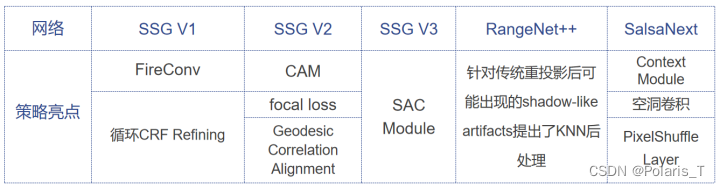

调研结果汇总

-

数据使用形式:上述方法均是先用spherical projection将3D LiDAR点云映射为2D LiDAR image然后使用2D卷积网络进行后续的分割,只是映射公式存在差异

-

网络结构:上述方法均采用了Encoder-Decoder+Skip Connection的结构,并且改进基本是在保持大框架的前提下对各卷积模块进行改进,或是在pre/post processing过程中加入trick

-

策略对比:

-

检测精度对比:

-

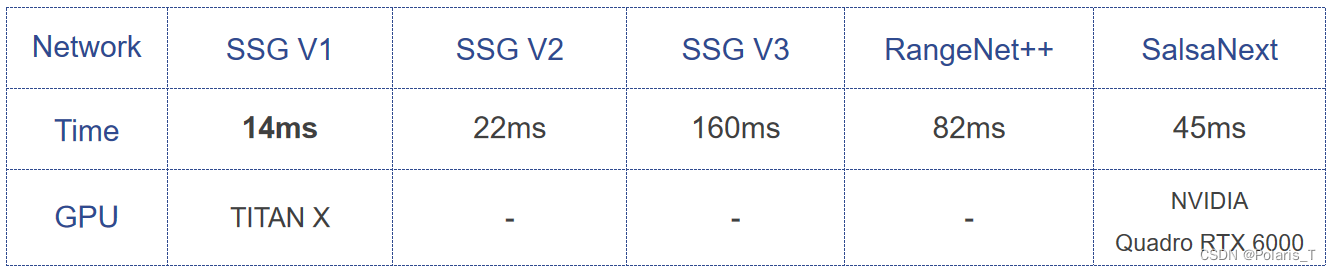

推理速度对比:

注:不同GPU算力不一致,这里仅汇总了论文中提供的实验数据

-

相关阅读:

美国访问学者签证有哪些要求?

MySQL高级:(二)存储引擎

Hive【内部表、外部表、临时表、分区表、分桶表】【总结】

网络工程从头做-1

R数据分析:孟德尔随机化实操

easyRL学习笔记:强化学习基础

Egg.js使用MySql数据库

上周热点回顾(1.15-1.21)

[PAT练级笔记] Basic Level 1018 锤子剪刀布

华1-安装配置CentOS

- 原文地址:https://blog.csdn.net/qq_45717425/article/details/126063376