-

使用spring boot集成shardingsphere分库分表简易测试

根据如下pom整上一个spring-boot项目,spring-boot版本用2.3.5,shardingsphere用5.1.1。

- "1.0" encoding="UTF-8"?>

"http://maven.apache.org/POM/4.0.0" - xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

- xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

4.0.0 com.shardingsphere shardingsphere-test 1.0-SNAPSHOT org.springframework.boot spring-boot-starter-parent 2.3.5.RELEASE org.springframework.boot spring-boot-starter-web org.apache.shardingsphere shardingsphere-jdbc-core-spring-boot-starter 5.1.1 com.baomidou mybatis-plus-boot-starter 3.3.1 mysql mysql-connector-java runtime org.projectlombok lombok org.springframework.boot spring-boot-starter-test test org.junit.vintage junit-vintage-engine junit junit org.springframework.boot spring-boot-test org.springframework spring-test compile

再用mysql整一个名为shardingsphere-test的库(编码集utf8mb4,也可以选自己喜欢的编码集),再往里弄上两个测试表。

- CREATE TABLE t_course_1 (

- `cid` BIGINT(20) NOT NULL,

- `user_id` BIGINT(20) DEFAULT NULL,

- `cname` VARCHAR(50) DEFAULT NULL,

- `brief` VARCHAR(50) DEFAULT NULL,

- `price` DOUBLE DEFAULT NULL,

- `status` INT(11) DEFAULT NULL,

- PRIMARY KEY (`cid`)

- ) ENGINE=INNODB DEFAULT CHARSET=utf8mb4;

- CREATE TABLE t_course_2 (

- `cid` BIGINT(20) NOT NULL,

- `user_id` BIGINT(20) DEFAULT NULL,

- `cname` VARCHAR(50) DEFAULT NULL,

- `brief` VARCHAR(50) DEFAULT NULL,

- `price` DOUBLE DEFAULT NULL,

- `status` INT(11) DEFAULT NULL,

- PRIMARY KEY (`cid`)

- ) ENGINE=INNODB DEFAULT CHARSET=utf8mb4;

项目结构如下。

application.yml的配置及解释如下:

- spring:

- application:

- name: sharding-jdbc-demo # 应用名称

- shardingsphere:

- # 设置全局属性

- props:

- # 打开SQL显示功能,将会打印出执行的原始SQL和实际路由后的SQL

- sql-show: true

- # 数据源配置

- datasource:

- # 定义数据源名称列表,这里只有一个名为db1的数据源

- names: db1

- # 数据源db1的具体配置

- db1:

- # 数据源实现类,此处使用HikariCP连接池

- type: com.zaxxer.hikari.HikariDataSource

- # JDBC驱动类名,对应MySQL数据库驱动

- driver-class-name: com.mysql.jdbc.Driver

- # 数据库连接URL,连接本地MySQL服务器上的shardingsphere-test数据库,并设置字符编码和禁用SSL

- url: jdbc:mysql://127.0.0.1:3306/shardingsphere-test?characterEncoding=UTF-8&useSSL=false

- # 数据库用户名

- username: root

- # 数据库密码

- password: root

- # 规则配置部分

- rules:

- # 分片规则相关配置

- sharding:

- # 1.定义分片表的实际分布情况

- tables:

- # 针对表t_course的分片配置

- t_course:

- # 实际数据节点分布,表明t_course表被分成两张表,分别命名为t_course_0和t_course_1,并存储在db1数据库中

- actual-data-nodes: "db1.t_course_$->{1..2}"

- # 配置策略

- table-strategy:

- # 用于单分片键的标准分片场景

- standard:

- sharding-column: cid

- # 分片算法名字

- sharding-algorithm-name: course_inline

- # 分布式主键生成策略配置

- key-generate-strategy:

- # 主键列名为cid

- column: cid

- # 引用已定义的分布式序列生成器 alg-snowflake

- key-generator-name: snowflake

- # 2.定义分布式序列生成器

- key-generators:

- # 定义名为alg-snowflake的分布式序列生成器 alg-snowflake

- snowflake:

- # 类型为SNOWFLAKE算法,用于生成全局唯一ID

- type: SNOWFLAKE

- # 3.定义分片算法

- sharding-algorithms:

- # 定义名为table-inline的分片算法

- course_inline:

- # 使用INLINE类型的行表达式分片算法

- type: INLINE

- # 算法属性配置

- props:

- # 行表达式算法具体内容,按照cid模2加1的值来确定数据落入哪张表,例如:cid%2+1=0,则落入t_course_1,等于1则落入t_course_2

- algorithm-expression: t_course_$->{cid % 2 + 1}

- # 4.定义分片策略

- #sharding-strategies:

- # 对于表t_course的分片策略定义

- #t_course_strategy:

- # 使用标准分片策略

- #type: STANDARD

- # 指定分片键为cid列

- #sharding-column: cid

- # 引用已定义的分片算法

- #sharding-algorithm-name: course_inline

- # SQL输出日志

- mybatis-plus:

- configuration:

- log-impl: org.apache.ibatis.logging.stdout.StdOutImpl

再弄一个对应数据库的实体类。

- import com.baomidou.mybatisplus.annotation.IdType;

- import com.baomidou.mybatisplus.annotation.TableId;

- import com.baomidou.mybatisplus.annotation.TableName;

- import lombok.Data;

- import lombok.ToString;

- @TableName("t_course")

- @Data

- @ToString

- public class Course {

- //@TableId(type = IdType.AUTO)

- @TableId

- private Long cid;

- private Long userId;

- private Long corderNo;

- private String cname;

- private String brief;

- private Double price;

- private Integer status;

- }

再搞一个mapper。

- import com.baomidou.mybatisplus.core.mapper.BaseMapper;

- import com.shardingsphere.demo.entity.Course;

- import org.apache.ibatis.annotations.Mapper;

- @Mapper

- public interface CourseMapper extends BaseMapper

{ - }

再搞一个service接口。

- public interface CoureInterface {

- public void addCoure();

- }

按道上的规矩再搞一个service接口的实现类。

- import com.shardingsphere.demo.coure.CoureInterface;

- import com.shardingsphere.demo.entity.Course;

- import com.shardingsphere.demo.mapper.CourseMapper;

- import org.springframework.beans.factory.annotation.Autowired;

- import org.springframework.stereotype.Service;

- @Service

- public class CoureInterfaceImpl implements CoureInterface {

- @Autowired

- private CourseMapper courseMapper;

- @Override

- public void addCoure() {

- for (int i = 0; i < 30; i++) {

- Course course = new Course();

- // 注意: cid使用雪花算法配置了(还可以使用MybatisPlus UUID),此处不用配置

- course.setUserId(1000L + i);

- course.setCname("ShardingSphere");

- course.setBrief("ShardingSphere测试");

- course.setPrice(66.6);

- course.setStatus(1);

- courseMapper.insert(course);

- }

- }

- }

再弄上一个controller,来接收远方的呼唤。

- import com.shardingsphere.demo.coure.CoureInterface;

- import org.springframework.beans.factory.annotation.Autowired;

- import org.springframework.web.bind.annotation.RequestMapping;

- import org.springframework.web.bind.annotation.RestController;

- @RestController

- public class CourseController {

- @Autowired

- private CoureInterface coureInterface;

- @RequestMapping(path = "/demo/addCourse")

- public void addCourse(){

- coureInterface.addCoure();

- }

- }

最后再弄上一个spring boot的启动类,用来启动这个sping boot项目。

- import org.springframework.boot.SpringApplication;

- import org.springframework.boot.autoconfigure.SpringBootApplication;

- @SpringBootApplication

- public class ShardingSphereTest {

- public static void main(String[] args) {

- SpringApplication.run(ShardingSphereTest.class, args);

- }

- }

我们启动服务后,直接浏览器访问这个接口简单测试一下。

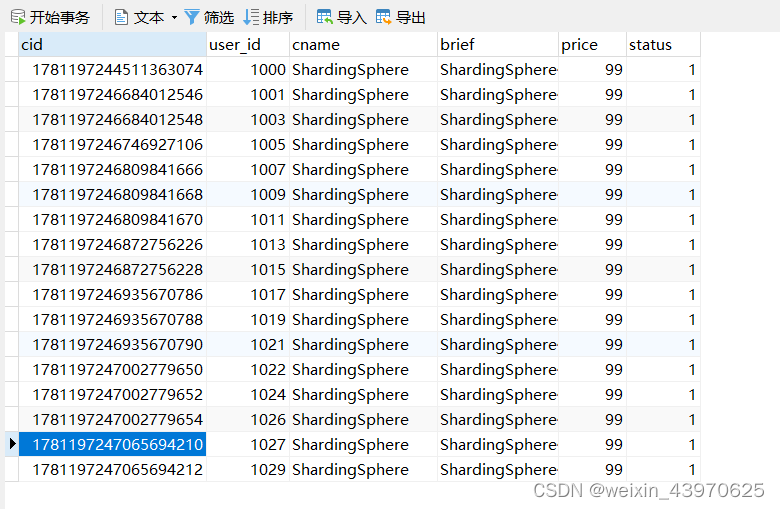

再去数据库看一眼,发现如我们所想,数据已经被拆分到两个表中了。

趁热打铁,我们再进阶一小点,把库也给分了算了。

再找一台机器创建一个跟上面一模一样的数据库(shardingsphere-test),库里的表也跟上面建的一模一样两张表(t_course_1,t_course_2),这样我们就富裕了,有了俩数据库。

需要改造的地方就是我们的application.yml配置文件,加上分库操作。

- spring:

- application:

- name: sharding-jdbc-demo-database # 应用名称

- shardingsphere:

- # 设置全局属性

- props:

- # 打开SQL显示功能,将会打印出执行的原始SQL和实际路由后的SQL

- sql-show: true

- # 数据源配置

- datasource:

- # 定义数据源名称列表,这里有两个数据源(数据的名字可以一样,也可以不一样)

- names: db1, db2

- # 数据源db1的具体配置

- db1:

- # 数据源实现类,此处使用HikariCP连接池

- type: com.zaxxer.hikari.HikariDataSource

- # JDBC驱动类名,对应MySQL数据库驱动

- driver-class-name: com.mysql.jdbc.Driver

- # 数据库连接URL,连接本地MySQL服务器上的shardingsphere-test数据库,并设置字符编码和禁用SSL

- url: jdbc:mysql://127.0.0.1:3306/shardingsphere-test?characterEncoding=UTF-8&useSSL=false

- # 数据库用户名

- username: root

- # 数据库密码

- password: root

- # 数据源db1的具体配置

- db2:

- # 数据源实现类,此处使用HikariCP连接池

- type: com.zaxxer.hikari.HikariDataSource

- # JDBC驱动类名,对应MySQL数据库驱动

- driver-class-name: com.mysql.jdbc.Driver

- # 数据库连接URL,连接本地MySQL服务器上的shardingsphere-test数据库,并设置字符编码和禁用SSL

- url: jdbc:mysql://124.223.XXX.XXX:3306/shardingsphere-test?characterEncoding=UTF-8&useSSL=false

- # 数据库用户名

- username: root

- # 数据库密码

- password: root

- # 规则配置部分

- rules:

- # 分片规则相关配置

- sharding:

- # 1.定义分片表的实际分布情况

- tables:

- # 针对表t_course的分片配置

- t_course:

- # 实际数据节点分布,表明t_course表被分成两张表,分别命名为t_course_0和t_course_1,并存储在db1数据库中

- actual-data-nodes: "db$->{1..2}.t_course_$->{1..2}"

- # 配置库策略

- database-strategy:

- standard:

- sharding-column: user_id

- sharding-algorithm-name: table_inline

- # 配置表策略

- table-strategy:

- # 用于单分片键的标准分片场景

- standard:

- sharding-column: cid

- # 分片算法名字

- sharding-algorithm-name: course_inline

- # 分布式主键生成策略配置

- key-generate-strategy:

- # 主键列名为cid

- column: cid

- # 引用已定义的分布式序列生成器 alg-snowflake

- key-generator-name: snowflake

- # 2.定义分布式序列生成器

- key-generators:

- # 定义名为alg-snowflake的分布式序列生成器 alg-snowflake

- snowflake:

- # 类型为SNOWFLAKE算法,用于生成全局唯一ID

- type: SNOWFLAKE

- # 3.定义分片算法

- sharding-algorithms:

- #定义库分片算法

- table_inline:

- type: INLINE

- props:

- algorithm-expression: db$->{user_id % 2 + 1}

- # 定义名为table-inline的分片算法,表分片

- course_inline:

- # 使用INLINE类型的行表达式分片算法

- type: INLINE

- # 算法属性配置

- props:

- # 行表达式算法具体内容,按照cid模2加1的值来确定数据落入哪张表,例如:cid%2+1=0,则落入t_course_1,等于1则落入t_course_2

- algorithm-expression: t_course_$->{cid % 2 + 1}

- # 4.定义分片策略

- #sharding-strategies:

- # 对于表t_course的分片策略定义

- #t_course_strategy:

- # 使用标准分片策略

- #type: STANDARD

- # 指定分片键为cid列

- #sharding-column: cid

- # 引用已定义的分片算法

- #sharding-algorithm-name: course_inline

- # SQL输出日志

- mybatis-plus:

- configuration:

- log-impl: org.apache.ibatis.logging.stdout.StdOutImpl

然后重启服务,重新访问localhost:8080/demo/addCourse 调用添加数据的服务接口,此时再查数据库就会发现数据已经被shardingsphere分到不同库的不同表里了。

分库查询

入库了以后,我们写个测试类尝试查询一下,看看会是怎么样。

- @SpringBootTest

- @RunWith(SpringRunner.class)

- @Slf4j

- public class MyTest {

- @Autowired(required = false)

- private CourseMapper courseMapper;

- /**

- * 水平分片:查询所有记录

- * 查询了两个数据源,每个数据源中使用UNION ALL连接两个表

- */

- @Test

- public void testShardingSelectOne(){

- List

courses = courseMapper.selectList(null); - courses.forEach(System.out::println);

- }

- }

我们来看一下查询结果。

- Creating a new SqlSession

- SqlSession [org.apache.ibatis.session.defaults.DefaultSqlSession@30b0d5a7] was not registered for synchronization because synchronization is not active

- JDBC Connection [org.apache.shardingsphere.driver.jdbc.core.connection.ShardingSphereConnection@411e567e] will not be managed by Spring

- ==> Preparing: SELECT cid,user_id,cname,brief,price,status FROM t_course

- ==> Parameters:

- 2024-04-19 21:17:29.909 INFO 25740 --- [ main] ShardingSphere-SQL : Logic SQL: SELECT cid,user_id,cname,brief,price,status FROM t_course

- 2024-04-19 21:17:29.909 INFO 25740 --- [ main] ShardingSphere-SQL : SQLStatement: MySQLSelectStatement(table=Optional.empty, limit=Optional.empty, lock=Optional.empty, window=Optional.empty)

- 2024-04-19 21:17:29.910 INFO 25740 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: SELECT cid,user_id,cname,brief,price,status FROM t_course_1 UNION ALL SELECT cid,user_id,cname,brief,price,status FROM t_course_2

- 2024-04-19 21:17:29.910 INFO 25740 --- [ main] ShardingSphere-SQL : Actual SQL: db2 ::: SELECT cid,user_id,cname,brief,price,status FROM t_course_1 UNION ALL SELECT cid,user_id,cname,brief,price,status FROM t_course_2

- <== Columns: cid, user_id, cname, brief, price, status

- <== Row: 1781311094111928321, 1000, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Row: 1781311097660309505, 1002, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Row: 1781311098117488641, 1004, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Row: 1781311098650165249, 1006, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Row: 1781311097660309506, 1003, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Row: 1781311098184597506, 1005, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Row: 1781311098650165250, 1007, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Row: 1781311096750145537, 1001, ShardingSphere, ShardingSphere尝试查询, 99.0, 1

- <== Total: 8

可以看到,它是把每个库的每张表进行union操作,返回返回总结果。

多表关联查询

如果我们要是多表查询呢?先建上两张有关联的表来试一下。如下两个数据库分别创建t_order0,t_order1,t_order_item0,t_order_item1,仍然分库分表创建,只不过让t_order和t_order_item有联系,即有如此关联:SELECT o.*,i.* FROM t_order o JOIN t_order_item i ON o.order_no = i.order_no;

- CREATE TABLE t_order0 (

- id BIGINT,

- order_no VARCHAR(30),

- user_id BIGINT,

- amount DECIMAL(10,2),

- PRIMARY KEY(id)

- );

- CREATE TABLE t_order1 (

- id BIGINT,

- order_no VARCHAR(30),

- user_id BIGINT,

- amount DECIMAL(10,2),

- PRIMARY KEY(id)

- );

- CREATE TABLE t_order_item0(

- id BIGINT,

- order_no VARCHAR(30),

- user_id BIGINT,

- price DECIMAL(10,2),

- `count` INT,

- PRIMARY KEY(id)

- );

- CREATE TABLE t_order_item1(

- id BIGINT,

- order_no VARCHAR(30),

- user_id BIGINT,

- price DECIMAL(10,2),

- `count` INT,

- PRIMARY KEY(id)

- );

再在配置文件中将这俩表的配置搞好。

- spring:

- application:

- name: sharding-jdbc-demo-database # 应用名称

- shardingsphere:

- # 设置全局属性

- props:

- # 打开SQL显示功能,将会打印出执行的原始SQL和实际路由后的SQL

- sql-show: true

- # 数据源配置

- datasource:

- # 定义数据源名称列表,这里有两个数据源(数据的名字可以一样,也可以不一样)

- names: db1, db2

- # 数据源db1的具体配置

- db1:

- # 数据源实现类,此处使用HikariCP连接池

- type: com.zaxxer.hikari.HikariDataSource

- # JDBC驱动类名,对应MySQL数据库驱动

- driver-class-name: com.mysql.jdbc.Driver

- # 数据库连接URL,连接本地MySQL服务器上的shardingsphere-test数据库,并设置字符编码和禁用SSL

- url: jdbc:mysql://127.0.0.1:3306/shardingsphere-test?characterEncoding=UTF-8&useSSL=false

- # 数据库用户名

- username: root

- # 数据库密码

- password: root

- # 数据源db1的具体配置

- db2:

- # 数据源实现类,此处使用HikariCP连接池

- type: com.zaxxer.hikari.HikariDataSource

- # JDBC驱动类名,对应MySQL数据库驱动

- driver-class-name: com.mysql.jdbc.Driver

- # 数据库连接URL,连接本地MySQL服务器上的shardingsphere-test数据库,并设置字符编码和禁用SSL

- url: jdbc:mysql://124.223.XXX.XXX:3306/shardingsphere-test?characterEncoding=UTF-8&useSSL=false

- # 数据库用户名

- username: root

- # 数据库密码

- password: 123456

- # 规则配置部分

- rules:

- # 分片规则相关配置

- sharding:

- # 1.定义分片表的实际分布情况

- tables:

- # 针对表t_course的分片配置

- t_course:

- # 实际数据节点分布,表明t_course表被分成两张表,分别命名为t_course_0和t_course_1,并存储在db1数据库中

- actual-data-nodes: "db$->{1..2}.t_course_$->{1..2}"

- # 配置库策略

- database-strategy:

- standard:

- sharding-column: user_id

- sharding-algorithm-name: table_inline

- # 配置表策略

- table-strategy:

- # 用于单分片键的标准分片场景

- standard:

- sharding-column: cid

- # 分片算法名字

- sharding-algorithm-name: course_inline

- # 分布式主键生成策略配置

- key-generate-strategy:

- # 主键列名为cid

- column: cid

- # 引用已定义的分布式序列生成器 alg-snowflake

- key-generator-name: snowflake

- #order表的分片分库策略

- t_order:

- # 实际数据节点分布,表明t_course表被分成两张表,分别命名为t_course_0和t_course_1,并存储在db1数据库中

- actual-data-nodes: "db$->{1..2}.t_order$->{0..1}"

- # 配置分库策略

- database-strategy:

- standard:

- sharding-column: user_id

- sharding-algorithm-name: order_inline

- # 配置分表策略

- table-strategy:

- # 用于单分片键的标准分片场景,根据order_no的hash值进行分片

- standard:

- sharding-column: order_no

- # 分片算法名字

- sharding-algorithm-name: order_no_mod

- # 分布式主键生成策略配置

- key-generate-strategy:

- # 主键列名为cid

- column: id

- # 引用已定义的分布式序列生成器 alg-snowflake

- key-generator-name: snowflake

- #order表的分片分库策略

- t_order_item:

- # 实际数据节点分布,表明t_course表被分成两张表,分别命名为t_course_0和t_course_1,并存储在db1数据库中

- actual-data-nodes: "db$->{1..2}.t_order_item$->{0..1}"

- # 配置分库策略

- database-strategy:

- standard:

- sharding-column: user_id

- sharding-algorithm-name: order_inline

- # 配置分表策略

- table-strategy:

- # 用于单分片键的标准分片场景,根据order_no的hash值进行分片

- standard:

- sharding-column: order_no

- # 分片算法名字

- sharding-algorithm-name: order_no_mod

- # 分布式主键生成策略配置

- key-generate-strategy:

- # 主键列名为cid

- column: id

- # 引用已定义的分布式序列生成器 alg-snowflake

- key-generator-name: snowflake

- # 2.定义分布式序列生成器

- key-generators:

- # 定义名为alg-snowflake的分布式序列生成器 alg-snowflake

- snowflake:

- # 类型为SNOWFLAKE算法,用于生成全局唯一ID

- type: SNOWFLAKE

- # 3.定义你想配置表的分片算法

- sharding-algorithms:

- #定义库分片算法

- table_inline:

- type: INLINE

- props:

- algorithm-expression: db$->{user_id % 2 + 1}

- # 定义名为table-inline的分片算法,表分片

- course_inline:

- # 使用INLINE类型的行表达式分片算法

- type: INLINE

- # 算法属性配置

- props:

- # 行表达式算法具体内容,按照cid模2加1的值来确定数据落入哪张表,例如:cid%2+1=0,则落入t_course_1,等于1则落入t_course_2

- algorithm-expression: t_course_$->{cid % 2 + 1}

- order_inline:

- type: INLINE

- props:

- algorithm-expression: db$->{user_id % 2 + 1}

- order_no_mod:

- # 使用HASH_MOD类型的行表达式分片算法

- type: HASH_MOD

- # 算法属性配置

- props:

- # 行表达式算法具体内容,

- sharding-count: 2

- # 4.定义分片策略

- #sharding-strategies:

- # 对于表t_course的分片策略定义

- #t_course_strategy:

- # 使用标准分片策略

- #type: STANDARD

- # 指定分片键为cid列

- #sharding-column: cid

- # 引用已定义的分片算法

- #sharding-algorithm-name: course_inline

- # SQL输出日志

- mybatis-plus:

- configuration:

- log-impl: org.apache.ibatis.logging.stdout.StdOutImpl

OrderMapper改造一下,进行表关联。

- import com.baomidou.mybatisplus.core.mapper.BaseMapper;

- import com.shardingsphere.demo.entity.Order;

- import com.shardingsphere.demo.vo.OrderVo;

- import org.apache.ibatis.annotations.Mapper;

- import org.apache.ibatis.annotations.Select;

- import java.util.List;

- @Mapper

- public interface OrderMapper extends BaseMapper

{ - @Select({"SELECT o.order_no, SUM(i.price * i.count) AS amount",

- "FROM t_order o JOIN t_order_item i ON o.order_no = i.order_no",

- "GROUP BY o.order_no"})

- List

getOrderAmount(); - }

再写个测试方法。

- /**

- * 测试关联表查询

- */

- @Test

- public void testGetOrderAmount(){

- List

orderAmountList = orderMapper.getOrderAmount(); - orderAmountList.forEach(System.out::println);

- }

看看执行结果:

- ==> Preparing: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order o JOIN t_order_item i ON o.order_no = i.order_no GROUP BY o.order_no

- ==> Parameters:

- 2024-04-19 21:27:52.938 INFO 21784 --- [ main] ShardingSphere-SQL : Logic SQL: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order o JOIN t_order_item i ON o.order_no = i.order_no GROUP BY o.order_no

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : SQLStatement: MySQLSelectStatement(table=Optional.empty, limit=Optional.empty, lock=Optional.empty, window=Optional.empty)

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db2 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order0 o JOIN t_order_item0 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db2 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order1 o JOIN t_order_item0 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db2 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order0 o JOIN t_order_item1 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db2 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order1 o JOIN t_order_item1 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order0 o JOIN t_order_item0 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order1 o JOIN t_order_item0 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order0 o JOIN t_order_item1 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:27:52.939 INFO 21784 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order1 o JOIN t_order_item1 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- <== Columns: order_no, amount

- <== Row: ShardingSphere1, 40.00

- <== Row: ShardingSphere2, 40.00

- <== Row: ShardingSphere5, 6.00

- <== Row: ShardingSphere6, 6.00

- <== Total: 4

发现了一个问题:可以看到同一个数据源中,查询的次数是

t_order和t_order_item的笛卡尔积数量,但是t_order0中的订单数据只会在对应数据源中t_order_item0,不会在t_order_item1中,所以有些关联查询是没有意义的,那么接下来就引入了绑定表的概念。绑定表

指分片规则一致的一组分片表。 使用绑定表进行多表关联查询时,必须使用分片键进行关联,否则会出现笛卡尔积关联或跨库关联,从而影响查询效率。所以我们来配置一下,将

t_order和t_order_item绑定一下,只需要在rules这里增加如下配置,如果你的配置文件是.properties类型的,需要这样配:spring.shardingsphere.rules.sharding.binding-tables[0]=t_order,t_order_item。- # 规则配置部分

- rules:

- # 分片规则相关配置

- sharding:

- #绑定表

- binding-tables:

- - t_order,t_order_item

再次查询:

- ==> Preparing: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order o JOIN t_order_item i ON o.order_no = i.order_no GROUP BY o.order_no

- ==> Parameters:

- 2024-04-19 21:43:08.069 INFO 24608 --- [ main] ShardingSphere-SQL : Logic SQL: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order o JOIN t_order_item i ON o.order_no = i.order_no GROUP BY o.order_no

- 2024-04-19 21:43:08.069 INFO 24608 --- [ main] ShardingSphere-SQL : SQLStatement: MySQLSelectStatement(table=Optional.empty, limit=Optional.empty, lock=Optional.empty, window=Optional.empty)

- 2024-04-19 21:43:08.070 INFO 24608 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order0 o JOIN t_order_item0 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:43:08.070 INFO 24608 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order1 o JOIN t_order_item1 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:43:08.070 INFO 24608 --- [ main] ShardingSphere-SQL : Actual SQL: db2 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order0 o JOIN t_order_item0 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- 2024-04-19 21:43:08.070 INFO 24608 --- [ main] ShardingSphere-SQL : Actual SQL: db2 ::: SELECT o.order_no, SUM(i.price * i.count) AS amount FROM t_order1 o JOIN t_order_item1 i ON o.order_no = i.order_no GROUP BY o.order_no ORDER BY o.order_no ASC

- <== Columns: order_no, amount

- <== Row: ShardingSphere1, 40.00

- <== Row: ShardingSphere2, 40.00

- <== Row: ShardingSphere5, 6.00

- <== Row: ShardingSphere6, 6.00

- <== Total: 4

突然一家伙少了四条查询。

- 如果不配置绑定表:测试的结果为8个SQL。 多表关联查询会出现笛卡尔积关联。

- 如果配置绑定表:测试的结果为4个SQL。 多表关联查询不会出现笛卡尔积关联,关联查询效率将大大提升。

-

相关阅读:

七客咖啡50店齐开,拓展咖啡赛道

k8s kubesphere 部署 harbor私服仓库

ios面试准备 - objective-c篇

力扣每日一题-第25天-496.下一个更大元素Ⅰ

猿创征文丨学C/C++的进来看看---这三种经典数组笔试题你都会做吗?

postgresql源码学习(23)—— 故障恢复④-事务日志的组装

[GIS]WPS地理处理服务

【PAT(甲级)】1065 A+B and C (64bit)[相加时关于编译器的错误]

PHP 写的旅游景点系统

自动化测试 selenium+Junit 总结知识

- 原文地址:https://blog.csdn.net/weixin_43970625/article/details/137962390