-

AGI之MFM:《多模态基础模型:从专家到通用助手》翻译与解读之视觉理解、视觉生成

AGI之MFM:《Multimodal Foundation Models: From Specialists to General-Purpose Assistants多模态基础模型:从专家到通用助手》翻译与解读之视觉理解、视觉生成

目录

过去十年主要研究图像表示的方法:图像级别(图像分类/图像-文本检索/图像字幕)→区域级别(目标检测/短语定位)→像素级别(语义/实例/全景分割)

如何学习图像表示:两种方法(图像中挖掘出的监督信号/从Web上挖掘的图像-文本数据集的语言监督)、三种学习方式(监督预训练/CLIP/仅图像的自监督学习)

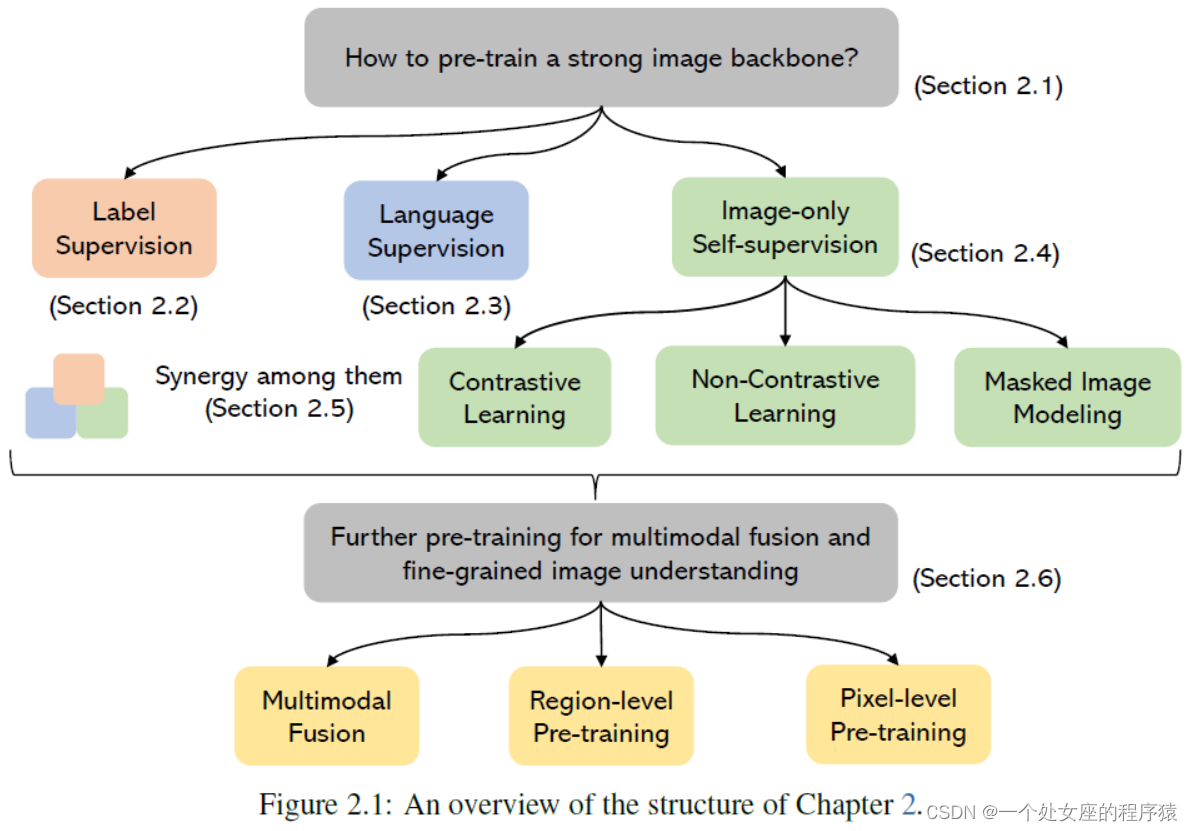

Figure 2.1: An overview of the structure of Chapter 2.

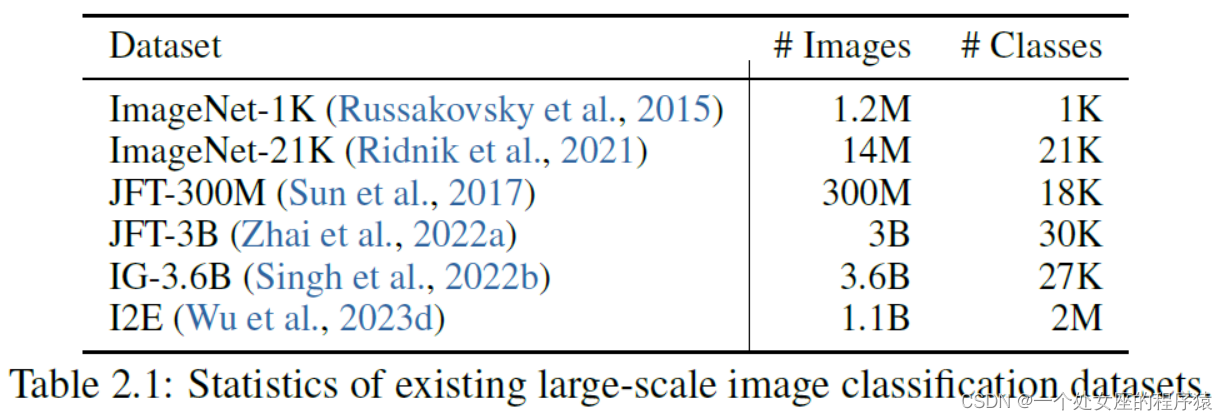

Table 2.1: Statistics of existing large-scale image classification datasets

2.3、Contrastive Language-Image Pre-training对比语言-图像预训练

2.3.1、Basics of CLIP Training-CLIP训练基础

语言数据+训练方式,如CLIP/ALIGN/Florence/BASIC/OpenCLIP等

固定数据集并设计不同的算法→DataComp提出转向→固定的CLIP训练方法来选择和排名数据集

Objective function.目标函数:细粒度监督、对比式描述生成、仅使用字幕损失、用于语言-图像预训练的Sigmoid损失

2.4、Image-Only Self-Supervised Learning仅图像自监督学习:三类(对比学习/非对比学习/遮蔽图像建模)

2.4.1、Contrastive and Non-contrastive Learning

Non-contrastive learning.非对比学习

2.4.2、Masked Image Modeling遮蔽图像建模

Figure 2.9: Illustration of Masked Autoencoder (MAE)

Targets目标:两类(低级像素特征【更细粒度图像理解】、高级特征),损失函数的选择取决于目标的性质,离散标记的目标(通常使用交叉熵损失)+像素值或连续值特征(选择是ℓ1、ℓ2或余弦相似度损失)

MIM for video pre-training视频预训练的MIM:将MIM扩展到视频预训练,如BEVT/VideoMAE/Feichtenhofer

Lack of learning global image representations全局图像表示的不足,如iBOT/DINO/BEiT等

Scaling properties of MIM—MIM的规模特性:尚不清楚探讨将MIM预训练扩展到十亿级仅图像数据的规模

2.5、Synergy Among Different Learning Approaches不同学习方法的协同作用

Combining CLIP with label supervision将CLIP与标签监督相结合,如UniCL、LiT、MOFI

Figure 2.11: Illustration of MVP (Wei et al., 2022b), EVA (Fang et al., 2023) and BEiTv2 (Peng

Combining CLIP with image-only (non-)contrastive learning将CLIP与图像仅(非)对比学习相结合:如SLIP、xCLIP

Combining CLIP with MIM将CLIP与MIM相结合

浅层交互:将CLIP提取的图像特征用作MIM训练的目标(CLIP图像特征可能捕捉了在MIM训练中缺失的语义),比如MVP/BEiTv2等

深度整合:BERT和BEiT的组合非常有前景,比如BEiT-3

2.6、Multimodal Fusion, Region-Level and Pixel-Level Pre-training多模态融合、区域级和像素级预训练

2.6.1、From Multimodal Fusion to Multimodal LLM从多模态融合到多模态LLM

基于双编码器的CLIP(图像和文本独立编码+仅通过两者特征向量的简单点乘实现模态交互):擅长图像分类/图像-文本检索,不擅长图像字幕/视觉问答

OD-based models基于OD的模型:使用共同注意力进行多模态融合(如ViLBERT/LXMERT)、将图像特征作为文本输入的软提示(如VisualBERT)

Figure 2.13: Illustration of UNITER (Chen et al., 2020d) and CoCa (Yu et al., 2022a),

2.6.2、Region-Level Pre-training区域级预训练

CLIP:通过对比预训练学习全局图像表示+不适合细粒度图像理解的任务(如目标检测【包含两个子任务=定位+识别】等)

基于图像-文本模型进行微调(如OVR-CNN)、只训练分类头(如Detic)、

2.6.3、Pixel-Level Pre-training像素级预训练(代表作SAE):

Figure 2.15: Overview of the Segment Anything project, which

Concurrent to SAM与SAM同时并行:OneFormer(一种通用的图像分割框架)、SegGPT(一种统一不同分割数据格式的通用上下文学习框架)、SEEM(扩展了单一分割模型)

VG的目的(生成高保真的内容),作用(支持创意应用+合成训练数据),关键(生成严格与人类意图对齐的视觉数据,比如文本条件)

3.1.1、Human Alignments in Visual Generation视觉生成中的人类对齐:核心(遵循人类意图来合成内容),四类探究

Figure 3.1: An overview of improving human intent alignments in T2I generation—T2I生成改善人类意向对齐的概述。

3.1.2、Text-to-Image Generation文本到图像生成

T2I的目的(视觉质量高+语义与输入文本相对应)、数据集(图像-文本对进行训练)

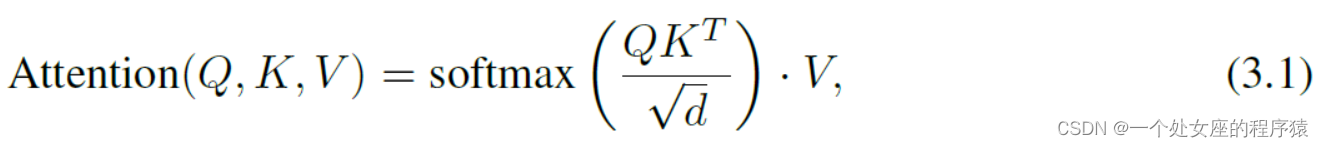

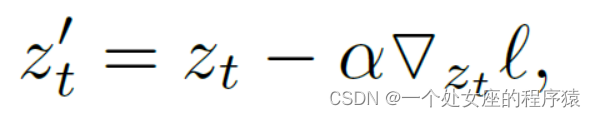

Stable Diffusion的详解:基于交叉注意力的图像-文本融合机制(如自回归T2I生成),三模块(图像VAE+去噪U-Net+条件编码器)

VAE:包含一对编码器E和解码器D,将RGB图像x编码为潜在随机变量z→对潜在变量解码重建图像

文本编码器:使用ViT-L/14 CLIP文本编码器将标记化的输入文本查询y编码为文本特征τ(y)

Figure 3.4: Overview of the ReCo model architecture. Image credit: Yang et al. (2023b).ReCo模型体系结构的概述

去噪U-Net:预测噪声λ (zt, t)与目标噪声λ之间的L2损失来训练

3.2、Spatial Controllable Generation空间可控生成

痛点:仅使用文本在某些描述方面是无效(比如空间引用),需额外空间输入条件来指导图像生成

Region-controlled T2I generation区域可控T2I生成:可显著提高生成高分辨率图像,但缺乏空间可控性,需开放性文本描述的额外输入条件,如ReCo/GLIGEN

GLIGEN:即插即用的方法,冻结原始的T2I模型+训练额外的门控自注意层

T2I generation with dense conditions—T2I生成与密集条件

ControlNet:基于稳定扩散+引入额外的可训练的ControlNet分支(额外的输入条件添加到文本提示中)

Uni-ControlNet(统一输入条件+使单一模型能够理解多种输入条件类型)、Disco(生成可控元素【人类主题/视频背景/动作姿势】人类跳舞视频=成功将背景和人体姿势条件分开;

ControlNet的两个不同分支【图像帧+姿势图】,人类主体、背景和舞蹈动作的任意组合性)

Inference-time spatial guidance推理时的空间指导:

文本到图像编辑:通过给定的图像和输入文本描述合成新的图像+保留大部分视觉内容+遵循人类意图

三个代表性方向:改变局部图像区域(删除或更高)、语言用作编辑指令、编辑系统集成不同的专业模块(如分割模型和大型语言模型)

Figure 3.8: Examples of text instruction editing. Image credit: Brooks et al. (2023).文本指令编辑的示例

3.4、Text Prompts Following文本提示跟随

采用图像-文本对鼓励但不强制完全遵循文本提示→当图像描述变得复时的两类研究=推断时操作+对齐调整

Summary and trends总结和趋势:目的(增强T2I模型更好地遵循文本提示能力),通过调整对齐来提升T2I模型根据文本提示生成图像的能力,未来(基于RL的方法更有潜力但需扩展)

3.5、Concept Customization概念定制:旨在使这些模型能够理解和生成与特定情况相关的视觉概念

语言的痛点:表达人类意图强大但全面描述细节效率较低,因此直接通过图像输入扩展T2I模型来理解视觉概念是更好的选择

三大研究(视觉概念自定义在T2I模型中的应用研究进展):单一概念定制、多概念定制、无Test-time微调的定制

Summary and trends摘要和趋势:早期(测试阶段微调嵌入)→近期(直接在冻结模型中执行上下文图像生成),两个应用=检索相关图像来促进生成+基于描述性文本指令的统一图像输入的不同用途

3.6、Trends: Unified Tuning for Human Alignments趋势:人类对齐的统一调整

调整T2I模型以更准确地符合人类意图的三大研究:提升空间可控性、编辑现有图像以改进匹配程度、个性化T2I模型以适应新的视觉概念

一个趋势:即朝着需要最小问题特定调整的整合性对齐解决方案转移

对齐调优阶段有两个主要目的:扩展了T2I的文本输入以合并交错的图像-文本输入,通过使用数据、损失和奖励来微调基本的T2I模型

Tuning with alignment-focused loss and rewards以对齐为焦点的损失和奖励的调整:

Closed-loop of multimodal content understanding and generation多模态内容理解和生成的闭环

相关文章

AGI之MFM:《Multimodal Foundation Models: From Specialists to General-Purpose Assistants多模态基础模型:从专家到通用助手》翻译与解读之简介

AGI之MFM:《Multimodal Foundation Models: From Specialists to General-Purpose Assistants多模态基础模型:从专家到通用助手》翻译与解读之视觉理解、视觉生成

AGI之MFM:《多模态基础模型:从专家到通用助手》翻译与解读之视觉理解、视觉生成_一个处女座的程序猿的博客-CSDN博客

AGI之MFM:《Multimodal Foundation Models: From Specialists to General-Purpose Assistants多模态基础模型:从专家到通用助手》翻译与解读之统一的视觉模型、加持LLMs的大型多模态模型

AGI之MFM:《多模态基础模型:从专家到通用助手》翻译与解读之统一的视觉模型、加持LLMs的大型多模态模型-CSDN博客

AGI之MFM:《Multimodal Foundation Models: From Specialists to General-Purpose Assistants多模态基础模型:从专家到通用助手》翻译与解读之与LLM协同工作的多模态智能体、结论和研究趋势

AGI之MFM:《多模态基础模型:从专家到通用助手》翻译与解读之与LLM协同工作的多模态智能体、结论和研究趋势-CSDN博客

2、Visual Understanding视觉理解

过去十年主要研究图像表示的方法:图像级别(图像分类/图像-文本检索/图像字幕)→区域级别(目标检测/短语定位)→像素级别(语义/实例/全景分割)

Over the past decade, the research community has devoted significant efforts to study the acquisition of high-quality, general-purpose image representations. This is essential to build vision foundation models, as pre-training a strong vision backbone to learn image representations is fundamental to all types of computer vision downstream tasks, ranging from image-level (e.g., image classifica- tion (Krizhevsky et al., 2012), image-text retrieval (Frome et al., 2013), image captioning (Chen et al., 2015)), region-level (e.g., object detection (Girshick, 2015), phrase grounding (Plummer et al., 2015)), to pixel-level (e.g., semantic/instance/panoptic segmentation (Long et al., 2015; Hafiz and Bhat, 2020; Kirillov et al., 2019)) tasks.

在过去的十年里,研究界投入了大量的精力来研究高质量、通用性图像表示的方法。这对于构建视觉基础模型至关重要,因为预训练强大的视觉骨干来学习图像表示对于各种类型的计算机视觉下游任务都是基础的,包括从图像级别(例如图像分类(Krizhevsky et al., 2012)、图像-文本检索(Frome et al., 2013)、图像字幕(Chen et al., 2015)),到区域级别(例如目标检测(Girshick, 2015)、短语定位(Plummer et al., 2015)),再到像素级别(例如语义/实例/全景分割(Long et al., 2015; Hafiz and Bhat, 2020; Kirillov et al., 2019))的任务。

如何学习图像表示:两种方法(图像中挖掘出的监督信号/从Web上挖掘的图像-文本数据集的语言监督)、三种学习方式(监督预训练/CLIP/仅图像的自监督学习)

In this chapter, we present how image representations can be learned, either using supervision sig- nals mined inside the images, or through using language supervision of image-text datasets mined from the Web. Specifically, Section 2.1 presents an overview of different learning paradigms, in- cluding supervised pre-training, contrastive language-image pre-training (CLIP), and image-only self-supervised learning. Section 2.2 discusses supervised pre-training. Section 2.3 focuses on CLIP. Section 2.4 discusses image-only self-supervised learning, including contrastive learning, non-contrastive learning, and masked image modeling. Given the various learning approaches to training vision foundation models, Section 2.5 reviews how they can be incorporated for better per- formance. Lastly, Section 2.6 discusses how vision foundation models can be used for finer-grained visual understanding tasks, such as fusion-encoder-based pre-training for image captioning and vi- sual question answering that require multimodal fusion, region-level pre-training for grounding, and pixel-level pre-training for segmentation.

在本章中,我们将介绍如何学习图像表示,要么使用从图像中挖掘出的监督信号,要么通过使用从Web上挖掘的图像-文本数据集的语言监督。

具体而言,第2.1节概述了不同的学习范式,包括监督预训练、对比语言-图像预训练(CLIP)以及仅图像的自监督学习。第2.2节讨论了监督预训练。第2.3节重点讨论CLIP。第2.4节讨论了仅图像的自监督学习,包括对比学习、非对比学习和遮挡图像建模。鉴于训练视觉基础模型的各种学习方法,第2.5节回顾了如何将它们结合起来以获得更好的性能。最后,第2.6节讨论了视觉基础模型如何用于更精细的视觉理解任务,例如基于融合编码器的图像字幕预训练和需要多模态融合的视觉问题回答,区域级预训练的基础和像素级预训练的分割。

2.1、Overview概述

Figure 2.1: An overview of the structure of Chapter 2.

预训练图像骨干的三类方法:标签监督(研究得最充分+以图像分类的形式,如ImageNet/工业实验室)、语言监督(来自文本的弱监督信号+预训练上亿个图像-文本对+对比损失,如CLIP/ALIGN)、仅图像的自监督学习(监督信号来自图像本身+,如对比学习等)

There is a vast amount of literature on various methods of learning general-purpose vision back- bones. As illustrated in Figure 2.1, we group these methods into three categories, depending on the types of supervision signals used to train the models, including:

>> Label supervision: Arguably, the most well-studied image representation learning methods are based on label supervisions (typically in the form of image classification) (Sun et al., 2017), where datasets like ImageNet (Krizhevsky et al., 2012) and ImageNet21K (Ridnik et al., 2021) have been popular, and larger-scale proprietary datasets are also used in industrial labs (Sun et al., 2017; Singh et al., 2022b; Zhai et al., 2022a; Wu et al., 2023d).

>> Language supervision: Another popular approach to learning image representations leverages weakly supervised signals from text, which is easy to acquire in large scale. For instance, CLIP (Radford et al., 2021) and ALIGN (Jia et al., 2021) are pre-trained using a contrastive loss and billions of image-text pairs mined from the internet. The resultant models achieve strong zero-shot performance on image classification and image-text retrieval, and the learned image and text encoders have been widely used for various downstream tasks and allow traditional com- puter vision models to perform open-vocabulary CV tasks (Gu et al., 2021; Ghiasi et al., 2022a; Qian et al., 2022; Ding et al., 2022b; Liang et al., 2023a; Zhang et al., 2023e; Zou et al., 2023a; Minderer et al., 2022).

>> Image-only self-supervision: There is also a vast amount of literature on exploring image-only self-supervised learning methods to learn image representations. As the name indicates, the super- vision signals are mined from the images themselves, and popular methods range from contrastive learning (Chen et al., 2020a; He et al., 2020), non-contrastive learning (Grill et al., 2020; Chen and He, 2021; Caron et al., 2021), to masked image modeling (Bao et al., 2022; He et al., 2022a).

关于学习通用视觉骨干的方法有大量的文献。如图2.1所示,根据用于训练模型的监督信号类型,我们将这些方法分为三类,包括:

>>标签监督:可以说,研究得最充分的图像表示学习方法是基于标签监督(通常以图像分类的形式)(Sun et al., 2017),其中像ImageNet(Krizhevsky et al., 2012)和ImageNet21K(Ridnik et al., 2021)这样的数据集一直很受欢迎,工业实验室也使用规模更大的专有数据集(Sun et al., 2017; Singh et al., 2022b; Zhai et al., 2022a; Wu et al., 2023d)。

>>语言监督:另一种学习图像表示的常用方法是利用来自文本的弱监督信号,这在大规模情况下很容易获得。例如,CLIP(Radford et al., 2021)和ALIGN(Jia et al., 2021)是使用对比损失和从互联网上挖掘的数十亿个图像-文本对进行预训练的。由此产生的模型在图像分类和图像-文本检索方面表现出色,实现了强大的零样本性能,学习的图像和文本编码器已被广泛用于各种下游任务,并允许传统计算机视觉模型执行开放词汇的计算机视觉任务(Gu et al., 2021; Ghiasi et al., 2022a; Qian et al., 2022; Ding et al., 2022b; Liang et al., 2023a; Zhang et al., 2023e; Zou et al., 2023a; Minderer et al., 2022)。

>>仅图像的自监督学习:还有大量文献探讨了通过自监督学习方法学习图像表示。正如名称所示,监督信号来自图像本身,并且流行的方法从对比学习(Chen et al., 2020a; He et al., 2020)、非对比学习(Grill et al., 2020; Chen and He, 2021; Caron et al., 2021)到遮挡图像建模(Bao et al., 2022; He et al., 2022a)不一而足。

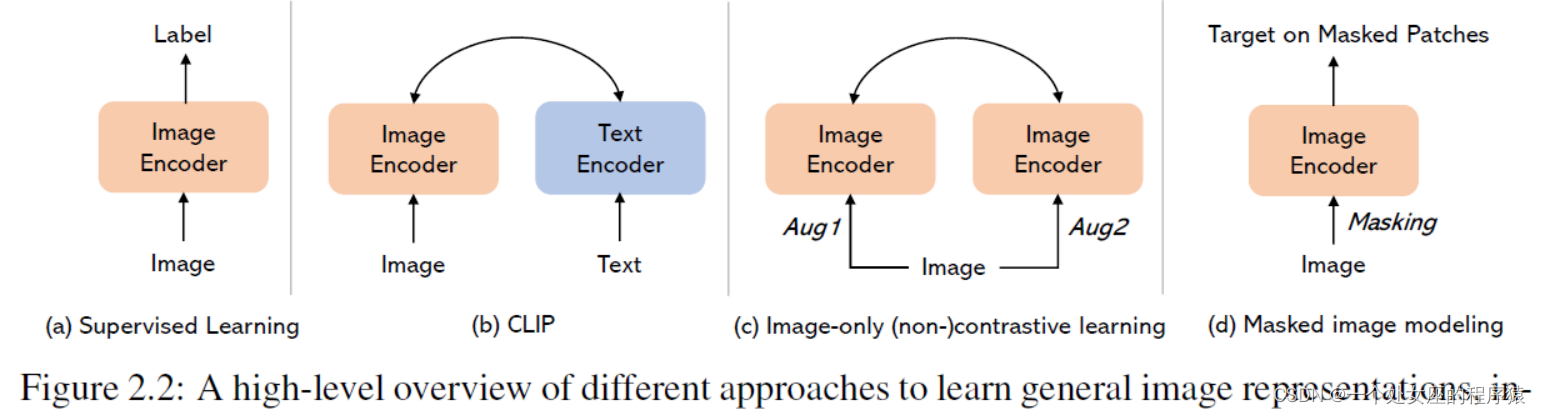

An illustration of these learning methods is shown in Figure 2.2. Besides the methods of pre- training image backbones, we will also discuss pre-training methods that allow multimodal fusion (e.g., CoCa (Yu et al., 2022a), Flamingo (Alayrac et al., 2022)), region-level and pixel-level image understanding (e.g., GLIP (Li et al., 2022e) and SAM (Kirillov et al., 2023)). These methods typi- cally rely on a pre-trained image encoder or a pre-trained image-text encoder pair. Figure 2.3 shows an overview of the topics covered in this chapter and some representative works in each topic.

这些学习方法的示意图如图2.2所示。除了预训练图像骨干的方法,我们还将讨论允许多模态融合(例如,CoCa(Yu et al., 2022a)、Flamingo(Alayrac et al., 2022))、区域级和像素级图像理解(例如,GLIP(Li et al., 2022e)和SAM(Kirillov et al., 2023))的预训练方法。这些方法通常依赖于预训练的图像编码器或预训练的图像-文本编码器对。图2.3显示了本章涵盖的主题概览以及每个主题中的一些代表性作品。

2.2、Supervised Pre-training监督预训练—依赖数据集的有效性和多样性+人工高成本性:基于ImageNet数据集,如AlexNet/ResNet/vision transformer/Swin transformer

Supervised pre-training on large-scale human-labeled datasets, such as ImageNet (Krizhevsky et al., 2012) and ImageNet21K (Ridnik et al., 2021), has emerged as a widely adopted approach to ac- quiring transferable visual representations. It aims to map an image to a discrete label, which is associated with a visual concept. This approach has greatly expedited progress in designing various vision backbone architectures (e.g., AlexNet (Krizhevsky et al., 2012), ResNet (He et al., 2016), vision transformer (Dosovitskiy et al., 2021), and Swin transformer (Liu et al., 2021)), and is the testbed for all the modern vision backbones. It also powered computer vision tasks across the whole spectrum, ranging from image classification, object detection/segmentation, visual question answer- ing, image captioning, to video action recognition. However, the effectiveness of learned represen- tations is often limited by the scale and diversity of supervisions in pre-training datasets, as human annotation is expensive.

在大规模人工标记的数据集上进行监督预训练,例如ImageNet(Krizhevsky et al., 2012)和ImageNet21K(Ridnik et al., 2021),已经成为一种广泛采用的获取可转移视觉表示的方法。它的目标是将图像映射到与视觉概念相关联的离散标签。这种方法极大地加速了各种视觉骨干架构的设计进程(例如AlexNet(Krizhevsky et al., 2012)、ResNet(He et al., 2016)、vision transformer(Dosovitskiy et al., 2021)和Swin transformer(Liu et al., 2021)),并成为所有现代视觉骨干的测试平台。它还推动了整个领域的计算机视觉任务,从图像分类、目标检测/分割、视觉问答、图像字幕到视频动作识别等任务。然而,学到的表示的有效性通常受到预训练数据集中监督规模和多样性的限制,因为人工标注很昂贵。

Large-scale datasets大规模数据集

Large-scale datasets. For larger-scale pre-training, noisy labels can be derived in large quantities from image-text pairs crawled from the Web. Using noisy labels, many industrial labs have suc- cessfully constructed comprehensive classification datasets using semi-automatic pipelines, such as JFT (Sun et al., 2017; Zhai et al., 2022a) and I2E (Wu et al., 2023d), or by leveraging proprietary data like Instagram hashtags (Singh et al., 2022b). The statistics of existing large-scale image clas-sification datasets are shown in Table 2.1. The labels are typically in the form of fine-grained image entities with a long-tailed distribution. Though classical, this approach has been very powerful for learning universal image representations. For example, JFT-300M (Sun et al., 2017) has been used for training the BiT (“Big Transfer”) models (Kolesnikov et al., 2020), and JFT-3B (Zhai et al., 2022a) has been used to scale up the training of a plain vision transformer (Dosovitskiy et al., 2021) to 22B in model size. LiT (Zhai et al., 2022b) proposes to first learn the image backbone on JFT- 3B (Zhai et al., 2022a), and keep it frozen and learn another text tower to align the image and text embedding space to make the model open-vocabulary and is capable of performing zero-shot image classification.

大规模数据集。对于更大规模的预训练,可以从Web上爬取的图像-文本对中获得大量的噪声标签。使用噪声标签,许多工业实验室成功地构建了全面的分类数据集,使用半自动流水线,如JFT(Sun et al., 2017; Zhai et al., 2022a)和I2E(Wu et al., 2023d),或通过利用专有数据,如Instagram标签(Singh et al., 2022b)。现有大规模图像分类数据集的统计数据如表2.1所示。标签通常采用长尾分布的形式呈现出细粒度的图像实体。尽管这是一种经典的方法,但它对于学习通用图像表示非常强大。例如,JFT-300M(Sun et al., 2017)已被用于训练BiT(“Big Transfer”)模型(Kolesnikov et al., 2020),而JFT-3B(Zhai et al., 2022a)已被用于将纯视觉transformers(Dosovitskiy et al., 2021)的模型规模扩展到22B。LiT(Zhai et al., 2022b)提出了在JFT-3B(Zhai et al., 2022a)上首先学习图像骨干,然后将其冻结并学习另一个文本塔对齐图像和文本嵌入空间,从而使模型能够执行零样本图像分类。

图2.2: 不同方法学习通用图像表示的高级概述

Figure 2.2: A high-level overview of different approaches to learn general image representations, in- cluding supervised learning (Krizhevsky et al., 2012), contrastive language-image pre-training (Rad- ford et al., 2021; Jia et al., 2021), and image-only self-supervised learning, including contrastive learning (Chen et al., 2020a; He et al., 2020), non-contrastive learning (Grill et al., 2020; Chen and He, 2021), and masked image modeling (Bao et al., 2022; He et al., 2022a).

图2.2: 不同方法学习通用图像表示的高级概述,包括监督学习(Krizhevsky et al., 2012)、对比语言-图像预训练(Radford et al., 2021; Jia et al., 2021)以及仅图像的自监督学习,包括对比学习(Chen et al., 2020a; He et al., 2020)、非对比学习(Grill et al., 2020; Chen and He, 2021)和遮挡图像建模(Bao et al., 2022; He et al., 2022a)。

图2.3: 本章涵盖的主题概述以及每个主题中的代表性作品

Figure 2.3: An overview of the topics covered in this chapter and representative works in each topic. We start from supervised learning and CLIP, and then move on to image-only self-supervised learn- ing, including contrastive learning, non-contrastive learning, and masked image modeling. Lastly, we discuss pre-training methods that empower multimodal fusion, region-level and pixel-level im- age understanding.

图2.3: 本章涵盖的主题概述以及每个主题中的代表性作品。我们从监督学习和CLIP开始,然后转向仅图像的自监督学习,包括对比学习、非对比学习和遮挡图像建模。最后,我们讨论了增强多模态融合、区域级和像素级图像理解的预训练方法。

Table 2.1: Statistics of existing large-scale image classification datasets

图2.4: 对比语言-图像预训练的示意图

Figure 2.4: Illustration of contrastive language-image pre-training, and how the learned model can be used for zero-shot image classification. Image credit: Radford et al. (2021).

图2.4: 对比语言-图像预训练的示意图,以及学到的模型如何用于零样本图像分类。图片来源: Radford等人 (2021)。

Model training模型训练:

Model training. There are many loss functions that can be used to promote embedding properties (e.g., separability) (Musgrave et al., 2020). For example, the large margin loss (Wang et al., 2018) is used for MOFI training (Wu et al., 2023d). Furthermore, if the datasets have an immense number of labels (can potentially be over 2 million as in MOFI (Wu et al., 2023d)), predicting all the labels in each batch becomes computationally costly. In this case, a fixed number of labels is typically used for each batch, similar to sampled softmax (Gutmann and Hyva¨rinen, 2010).

模型训练。有许多可以用于促进嵌入属性(例如,可分离性)的损失函数(Musgrave等人,2020)。例如,大间隔损失(Wang等人,2018)用于MOFI训练(Wu等人,2023d)。此外,如果数据集具有巨大数量的标签(可能会超过200万,如MOFI(Wu等人,2023d)),预测每批中的所有标签在计算上变得昂贵。在这种情况下,通常会为每个批次使用固定数量的标签,类似于采样的softmax(Gutmann和Hyva¨rinen,2010)。

2.3、Contrastive Language-Image Pre-training对比语言-图像预训练

2.3.1、Basics of CLIP Training-CLIP训练基础

语言数据+训练方式,如CLIP/ALIGN/Florence/BASIC/OpenCLIP等

Language is a richer form of supervision than classical closed-set labels. Rather than deriving noisy label supervision from web-crawled image-text datasets, the alt-text can be directly used for learning transferable image representations, which is the spirit of contrastive language-image pre-training (CLIP) (Radford et al., 2021). In particular, models trained in this way, such as ALIGN (Jia et al., 2021), Florence (Yuan et al., 2021), BASIC (Pham et al., 2021), and OpenCLIP (Ilharco et al., 2021), have showcased impressive zero-shot image classification and image-text retrieval capabilities by mapping images and text into a shared embedding space. Below, we discuss how the CLIP model is pre-trained and used for zero-shot prediction.

语言是比传统封闭集标签更丰富的监督形式。与从Web爬取的图像-文本数据集中获取嘈杂的标签监督不同,可以直接使用替换文本来学习可转移的图像表示,这是对比语言-图像预训练(CLIP)(Radford等人,2021)的精神。特别是,以这种方式训练的模型,如ALIGN(Jia等人,2021),Florence(Yuan等人,2021),BASIC(Pham等人,2021)和OpenCLIP(Ilharco等人,2021),通过将图像和文本映射到共享的嵌入空间,展示了令人印象深刻的零样本图像分类和图像-文本检索能力。接下来,我们将讨论CLIP模型如何进行预训练并用于零样本预测。

训练:对比学习+三维度扩展(批次大小+数据大小+模型大小)

Training: As shown in Figure 2.4(1), CLIP is trained via simple contrastive learning. CLIP is an outstanding example of “simple algorithms that scale well” (Li et al., 2023m). To achieve satisfac- tory performance, model training needs to be scaled along three dimensions: batch size, data size, and model size (Pham et al., 2021). Specifically, the typical batch size used for CLIP training can be 16k or 32k. The number of image-text pairs in the pre-training datasets is frequently measured in billions rather than millions. A vision transformer trained in this fashion can typically vary from 300M (Large) to 1B (giant) in model size.

训练:如图2.4(1)所示,CLIP通过简单的对比学习进行训练。CLIP是“扩展性良好的简单算法”的的一个杰出例子(Li et al., 2023m)。为了达到令人满意的性能,模型训练需要在三个维度上扩展:批次大小、数据大小和模型大小(Pham等人,2021)。

具体来说,CLIP训练中使用的典型批次大小可以为16k或32k。在预训练数据集中的图像-文本对数量通常以数十亿计而不是百万计。以这种方式训练的视觉transformers通常可以在模型大小上从300M(Large大型)变化到1B(giant巨型)。

零样本预测:零样本的图像分类+零样本的图像-文本检索

Zero-shot prediction: As shown in Figure 2.4 (2) and (3), CLIP empowers zero-shot image classification via reformatting it as a retrieval task and considering the semantics behind labels. It can also be used for zero-shot image-text retrieval by its design. Besides this, the aligned image- text embedding space makes it possible to make all the traditional vision models open vocabulary and has inspired a rich line of work on open-vocabulary object detection and segmentation (Li et al., 2022e; Zhang et al., 2022b; Zou et al., 2023a; Zhang et al., 2023e).

零样本预测:如图2.4(2)和(3)所示,CLIP通过将其重新格式化为检索任务并考虑标签背后的语义,提供了零样本图像分类的能力。它还可以用于零样本图像-文本检索,因为它的设计如此。除此之外,对齐的图像-文本嵌入空间使得所有传统视觉模型都能够进行开放式词汇学习,并且已经启发了许多关于开放式词汇目标检测和分割的工作(Li等人,2022e;Zhang等人,2022b;Zou等人,2023a;Zhang等人,2023e)。

Figure 2.5: ImageBind (Girdhar et al., 2023) proposes to link a total of six modalities into a common embedding space via leveraging pre-trained CLIP models, enabling new emergent alignments and capabilities. Image credit: Girdhar et al. (2023).图2.5: ImageBind(Girdhar等人,2023)提出通过利用预训练的CLIP模型将总共六种模态链接到共同的嵌入空间,从而实现新的紧密对齐和能力。图片来源: Girdhar等人(2023)。

2.3.2、CLIP Variants—CLIP变种

Since the birth of CLIP, there have been tons of follow-up works to improve CLIP models, as to be discussed below. We do not aim to provide a comprehensive literature review of all the methods, but focus on a selected set of topics.

自CLIP诞生以来,已经有大量后续研究来改进CLIP模型,如下所讨论。我们的目标不是提供所有方法的全面文献综述,而是专注于一组选定的主题。

Data scaling up数据规模扩大:CLIP(Web中挖掘400M图像-文本对)→ALIGN(1.8B图像-文本对)→BASIC探究关系,较小规模的图像-文本数据集(如SBU/RedCaps/WIT)→大规模图像-文本数据集(如Shutterstock/LAION-400M/COYO-700M/LAION-2B)

Data scaling up. Data is the fuel for CLIP training. For example, OpenAI’s CLIP was trained on 400M image-text pairs mined from the web, while ALIGN used a proprietary dataset consisting of 1.8B image-text pairs. In BASIC (Pham et al., 2021), the authors have carefully studied the scaling among three dimensions: batch size, data size, and model size. However, most of these large-scale datasets are not publicly available, and training such models requires massive computing resources.

In academic settings, researchers (Li et al., 2022b) have advocated the use of a few millions of image- text pairs for model pre-training, such as CC3M (Sharma et al., 2018), CC12M (Changpinyo et al., 2021), YFCC (Thomee et al., 2016). Relatively small-scale image-text datasets that are publicly available include SBU (Ordonez et al., 2011), RedCaps (Desai et al., 2021), and WIT (Srinivasan et al., 2021). Large-scale public available image-text datasets include Shutterstock (Nguyen et al., 2022), LAION-400M (Schuhmann et al., 2021), COYO-700M (Byeon et al., 2022), and LAION- 2B (Schuhmann et al., 2022), to name a few. For example, LAION-2B (Schuhmann et al., 2022) has been used by researchers to study the reproducible scaling laws for CLIP training (Cherti et al., 2023).

数据规模扩大。数据是CLIP训练的动力源。例如,OpenAI的CLIP是在从Web中挖掘的400M图像-文本对上进行训练的,而ALIGN则使用了包含1.8B图像-文本对的专有数据集。在BASIC(Pham等人,2021)中,作者仔细研究了批次大小、数据大小和模型大小之间的扩展。然而,大多数这些大规模数据集并不公开,训练这些模型需要大量计算资源。

在学术环境中,研究人员(Li等人,2022b)提倡使用数百万个图像-文本对进行模型预训练,例如CC3M(Sharma等人,2018)、CC12M(Changpinyo等人,2021)、YFCC(Thomee等人,2016)。

公开可用的相对较小规模的图像-文本数据集包括SBU(Ordonez等人,2011)、RedCaps(Desai等人,2021)和WIT(Srinivasan等人,2021)。公开可用的大规模图像-文本数据集包括Shutterstock(Nguyen等人,2022)、LAION-400M(Schuhmann等人,2021)、COYO-700M(Byeon等人,2022)和LAION-2B(Schuhmann等人,2022)等。例如,LAION-2B(Schuhmann等人,2022)已被研究人员用于研究CLIP训练的可复制的扩展定律(Cherti等人,2023)。

固定数据集并设计不同的算法→DataComp提出转向→固定的CLIP训练方法来选择和排名数据集

Interestingly, in search of the next-generation image-text datasets, in DataComp (Gadre et al., 2023), instead of fixing the dataset and designing different algorithms, the authors propose to se- lect and rank datasets using the fixed CLIP training method. Besides paired image-text data mined from the Web for CLIP training, inspired by the interleaved image-text dataset M3W introduced in Flamingo (Alayrac et al., 2022), there have been recent efforts of collecting interleaved image-text datasets, such as MMC4 (Zhu et al., 2023b) and OBELISC (Laurenc¸on et al., 2023).

有趣的是,在寻找下一代图像-文本数据集时,DataComp(Gadre等人,2023)中提到,与其固定数据集并设计不同的算法,不如使用固定的CLIP训练方法来选择和排名数据集。除了从Web中挖掘的成对图像-文本数据用于CLIP训练外,受Flamingo(Alayrac等人,2022)中引入的交错图像-文本数据集M3W的启发,最近还有一些收集交错图像-文本数据集的工作,如MMC4(Zhu等人,2023b)和OBELISC(Laurenc¸on等人,2023)。

Model design and training methods. 模型设计和训练方法:图像塔(如FLIP/MAE)、语言塔(如K-Lite/LaCLIP)、可解释性(如STAIR)、更多模态(如ImageBind/)

CLIP training has been significantly improved. Below, we review some representative works.

>> Image tower: On the image encoder side, FLIP (Li et al., 2023m) proposes to scale CLIP train- ing via masking. By randomly masking out image patches with a high masking ratio, and only encoding the visible patches as in MAE (He et al., 2022a), the authors demonstrate that masking can improve training efficiency without hurting the performance. The method can be adopted for all CLIP training. Cao et al. (2023) found that filtering out samples that contain text regions in the image improves CLIP training efficiency and robustness.

>> Language tower: On the language encoder side, K-Lite (Shen et al., 2022a) proposes to use external knowledge in the form of Wiki definition of entities together with the original alt-text for contrastive pre-training. Empirically, the use of enriched text descriptions improves the CLIP performance. LaCLIP (Fan et al., 2023a) shows that CLIP can be improved via rewriting the noisy and short alt-text using large language models such as ChatGPT.

>> Interpretability: The image representation is typically a dense feature vector. In order to im- prove the interpretability of the shared image-text embedding space, STAIR (Chen et al., 2023a) proposes to map images and text to a high-dimensional, sparse, embedding space, where each dimension in the sparse embedding is a (sub-)word in a large dictionary in which the predicted non-negative scalar corresponds to the weight associated with the token. The authors show that STAIR achieves better performance than the vanilla CLIP with improved interpretability.

>> More modalities: The idea of contrastive learning is general, and can go beyond just image and text modalities. For example, as shown in Figure 2.5, ImageBind (Girdhar et al., 2023) proposes to encode six modalities into a common embedding space, including images, text, audio, depth, thermal, and IMU modalities. In practice, a pre-trained CLIP model is used and kept frozen during training, which indicates that other modality encoders are learned to align to the CLIP embedding space, so that the trained model can be applied to new applications such as audio-to- image generation and multimodal LLMs (e.g., PandaGPT (Su et al., 2023)).

CLIP训练已经得到了显著改进。以下是一些代表性的工作。

>> 图像塔:在图像编码器方面,FLIP(Li等人,2023m)提出通过遮盖来扩展CLIP训练。通过随机遮盖高比例的图像区块,并仅对可见区块进行编码,如MAE(He等人,2022a)一样,作者证明了遮盖可以提高训练效率而不影响性能。该方法可以应用于所有CLIP训练。Cao等人(2023)发现,过滤掉包含图像中文本区域的样本可以提高CLIP的训练效率和鲁棒性。

>> 语言塔:在语言编码器方面,K-Lite(Shen等人,2022a)提出使用实体的Wiki定义与原始备用文本一起进行对比预训练。从经验上看,使用丰富的文本描述可以提高CLIP的性能。LaCLIP(Fan等人,2023a)表明,通过使用大型语言模型(例如ChatGPT)重写嘈杂且短的备用文本,可以改善CLIP的性能。

>> 可解释性:图像表示通常是稠密特征向量。为了提高共享图像-文本嵌入空间的可解释性,STAIR(Chen等人,2023a)提出将图像和文本映射到高维稀疏嵌入空间,其中稀疏嵌入中的每个维度是大字典中的一个(子)词,其中预测的非负标量对应于与该标记相关联的权值。作者表明,STAIR比vanilla CLIP实现了更好的性能,并提高了可解释性。

>> 更多模态:对比学习的思想是通用的,可以超越仅限于图像和文本模态。例如,如图2.5所示,ImageBind(Girdhar等人,2023)提出将六种模态编码到共同的嵌入空间中,包括图像、文本、音频、深度、热像和IMU模态。在实践中,使用预训练的CLIP模型,并在训练期间保持冻结,这意味着其他模态编码器被学习以与CLIP嵌入空间对齐,以便训练模型可以应用于新的应用,如音频到图像生成和多模态LLMs(例如PandaGPT(Su等人,2023))。

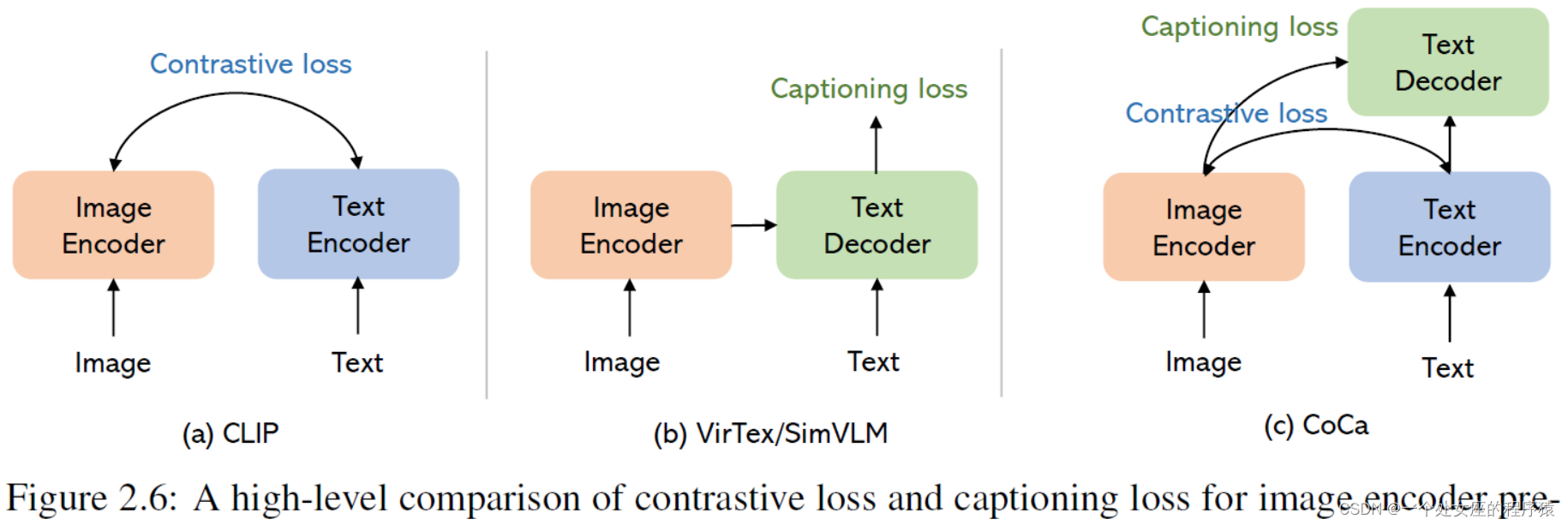

Figure 2.6: A high-level comparison of contrastive loss and captioning loss for image encoder pre-training. (a) CLIP (Radford et al., 2021) uses contrastive loss alone for pre-training, which enables zero-shot image classification and has demonstrated strong scaling behavior. (b) VirTex (Desai and Johnson, 2021) uses captioning loss alone for pre-training. SimVLM (Wang et al., 2022g) uses prefix language modeling for pre-training in a much larger scale. The model architecture is similar to multimodal language models (e.g., GIT (Wang et al., 2022a) and Flamingo (Alayrac et al., 2022)), but VirTex and SimVLM aim to pre-train the image encoder from scratch. (c) CoCa (Yu et al., 2022a) uses both contrastive and captioning losses for pre-training. The model architecture is similar to ALBEF (Li et al., 2021b), but CoCa aims to pre-train the image encoder from scratch, instead of using a pre-trained one. 图2.6:图像编码器预训练中对比度损失和字幕损失的高级对比。

(a) CLIP (Radford et al., 2021)仅使用对比损失进行预训练,实现了零射击图像分类,并表现出很强的缩放行为。

(b) VirTex (Desai and Johnson, 2021)仅使用字幕损失进行预训练。SimVLM (Wang et al., 2022g)在更大的范围内使用前缀语言建模进行预训练。模型架构类似于多模态语言模型(例如GIT (Wang et al., 2022a)和Flamingo (Alayrac et al., 2022)),但VirTex和SimVLM旨在从头开始预训练图像编码器。

(c) CoCa (Yu et al., 2022a)同时使用对比损失和字幕损失进行预训练。模型架构类似于ALBEF (Li et al., 2021b),但CoCa旨在从头开始预训练图像编码器,而不是使用预训练的图像编码器。

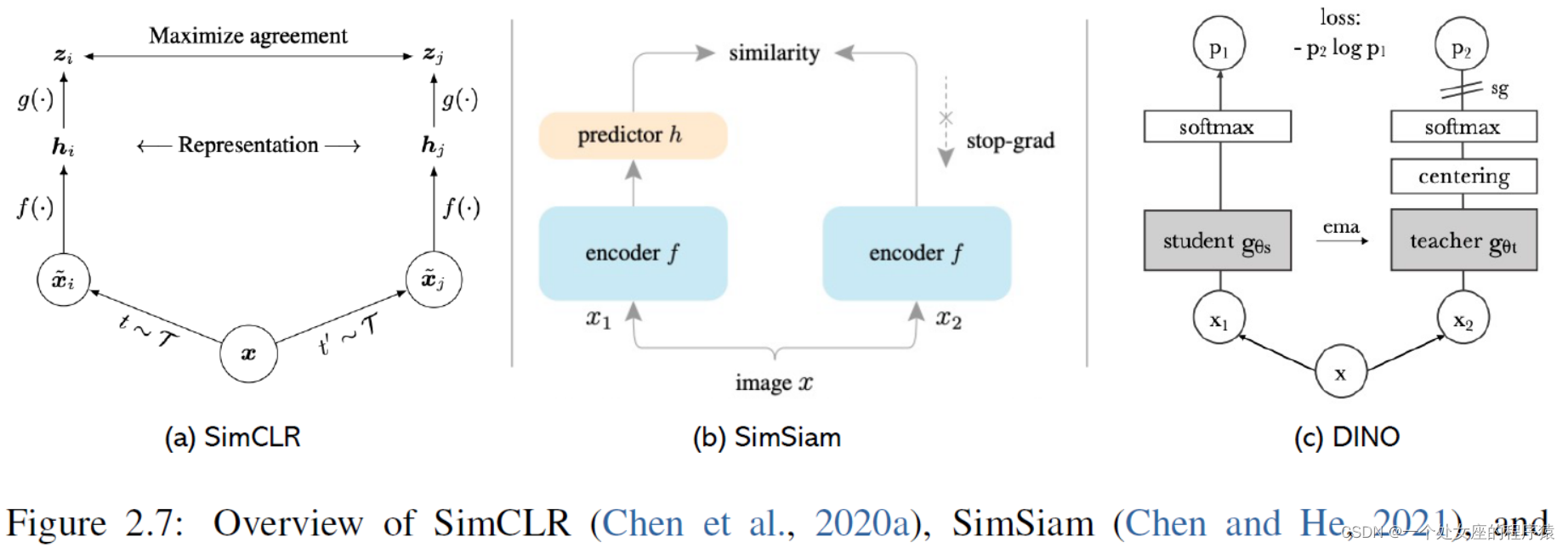

Figure 2.7: Overview of SimCLR (Chen et al., 2020a), SimSiam (Chen and He, 2021), and DINO (Caron et al., 2021) for self-supervised image representation learning. SimCLR uses con- trastive learning for model training, while SimSiam and DINO explores non-contrastive learning methods. Image credit: Chen et al. (2020a), Chen and He (2021), Caron et al. (2021).

Figure 2.7: Overview of SimCLR (Chen et al., 2020a), SimSiam (Chen and He, 2021), and DINO (Caron et al., 2021) for self-supervised image representation learning. SimCLR uses con- trastive learning for model training, while SimSiam and DINO explores non-contrastive learning methods. Image credit: Chen et al. (2020a), Chen and He (2021), Caron et al. (2021).

图2.7:SimCLR(Chen等人,2020a)、SimSiam(Chen和He,2021)和DINO(Caron等人,2021)自监督图像表示学习的概述。SimCLR使用对比学习进行模型训练,而SimSiam和DINO探索非对比学习方法。图片来源:Chen等人(2020a),Chen和He(2021),Caron等人(2021)。

Objective function.目标函数:细粒度监督、对比式描述生成、仅使用字幕损失、用于语言-图像预训练的Sigmoid损失

The use of contrastive loss alone is powerful, especially when the model is scaled up. However, other objective functions can also be applied.

>> Fine-grained supervision: Instead of using a simple dot-product to calculate the similarity of an image-text pair, the supervision can be made more fine-grained via learning word-patch alignment. In FILIP (Yao et al., 2022b), the authors propose to first compute the loss by calculating the token- wise similarity, and then aggregating the matrix by max-pooling for word-patch alignment.

>> Contrastive captioner: Besides the contrastive learning branch, CoCa (Yu et al., 2022a) (shown in Figure 2.6(c)) adds a generative loss to improve performance and allow new capabilities that require multimodal fusion (e.g., image captioning and VQA). This is similar to many fusion- encoder-based vision-language models such as ALBEF (Li et al., 2021b), but with the key differ- ence in that CoCa aims to learn a better image encoder from scratch. A detailed discussion on multimodal fusion is in Section 2.6.1.

>> Captioning loss alone: How about using the captioning loss alone to pre-train an image encoder? Actually, before CLIP was invented, VirTex (Desai and Johnson, 2021) (shown in Figure 2.6(b)) and ICMLM (Sariyildiz et al., 2020) learn encoders using a single image captioning loss, but the scale is very small (restricted to COCO images) and the performance is poor. CLIP also shows that contrastive pre-training is a much better choice. In SimVLM (Wang et al., 2022g), the authors found that the learned image encoder was not as competitive as CLIP. However, in the recent work Cap/CapPa (Tschannen et al., 2023), the authors argue that image captioners are scalable vision learners, too. Captioning can exhibit the same or even better scaling behaviors.

>> Sigmoid loss for language-image pre-training: Unlike standard contrastive learning with soft- max normalization, Zhai et al. (2023) uses a simple pairwise sigmoid loss for image-text pre- training, which operates on image-text pairs and does not require a global view of the pairwise similarities for normalization. The authors show that the use of simple sigmoid loss can also achieve strong performance on zero-shot image classification.

仅使用对比损失本身非常强大,特别是当模型按比例放大时。然而,其他目标函数也可以应用。

>> 细粒度监督:与使用简单的点积来计算图像-文本对相似性不同,可以通过学习单词-区块对齐来使监督更细粒度。在FILIP(Yao等人,2022b)中,作者建议首先通过计算token之间的相似性来计算损失,然后通过最大池化来对词-区块对齐进行矩阵聚合矩阵以进行单词补丁对齐。

>> 对比式描述生成:除了对比学习分支外,CoCa(Yu等人,2022a)(图2.6(c)中显示)增加了生成损失以提高性能,并允许需要多模态融合的新功能(例如图像字幕和VQA)。这类似于许多基于融合编码器的视觉-语言模型,如ALBEF(Li等人,2021b),但关键区别在于CoCa旨在从头学习更好的图像编码器。多模态融合的详细讨论在第2.6.1节中。

>> 仅使用字幕损失:如何单独使用字幕损失来预训练图像编码器?实际上,在CLIP被发明之前,VirTex(Desai和Johnson,2021)(图2.6(b)中显示)和ICMLM(Sariyildiz等人,2020)使用单一图像字幕损失来学习编码器,但规模非常小(仅限于COCO图像)且性能较差。CLIP也表明对比式预训练是一个更好的选择。在SimVLM(Wang等人,2022g)中,作者发现学到的图像编码器不如CLIP具有竞争力。然而,在最近的工作Cap/CapPa(Tschannen等人,2023)中,作者认为图像字幕生成器也是可扩展的视觉学习者。字幕生成可以展现出相同或甚至更好的扩展行为。

>> 用于语言-图像预训练的Sigmoid损失:与标准的对比学习不同,标准对比学习使用softmax归一化,Zhai等人(2023)使用了一个简单的成对Sigmoid损失进行图像-文本预训练,该损失操作于图像-文本对,并且不需要对两两相似度进行归一化的全局视图。作者表明,使用简单的Sigmoid损失也可以在零样本图像分类上获得强大的性能。

2.4、Image-Only Self-Supervised Learning仅图像自监督学习:三类(对比学习/非对比学习/遮蔽图像建模)

Now, we shift our focus to image-only self-supervised learning, and divide the discussion into three parts: (i) contrastive learning, (ii) non-contrastive learning, and (iii) masked image modeling.

现在,我们将重点转向仅图像自监督学习,并将讨论分为三个部分:

(i)对比学习,

(ii)非对比学习,和

(iii)遮蔽图像建模。

2.4.1、Contrastive and Non-contrastive Learning

Contrastive learning.对比学习

The core idea of contrastive learning (Gutmann and Hyva¨rinen, 2010; Arora et al., 2019) is to promote the positive sample pairs and repulse the negative sample pairs. Besides being used in CLIP, contrastive learning has also been a popular concept in self-supervised image representation learning (Wu et al., 2018; Ye et al., 2019b; Tian et al., 2020a; Chen et al., 2020a; He et al., 2020; Misra and Maaten, 2020; Chen et al., 2020c). It has been shown that the contrastive objective, known as the InfoNCE loss (Oord et al., 2018), can be interpreted as max- imizing the lower bound of mutual information between different views of the data (Hjelm et al., 2018; Bachman et al., 2019; Henaff, 2020).

In a nutshell, all the image-only contrastive learning methods (e.g., SimCLR (Chen et al., 2020a), see Figure 2.7(a), MoCo (He et al., 2020), SimCLR-v2 (Chen et al., 2020b), MoCo-v2 (Chen et al., 2020c)) share the same high-level framework, detailed below.

>> Given one image, two separate data augmentations are applied;

>> A base encoder is followed by a project head, which is trained to maximize agreement using a contrastive loss (i.e., they are from the same image or not);

>> The project head is thrown away for downstream tasks.

However, a caveat of contrastive learning is the requirement of a large number of negative samples. These samples can be maintained in a memory bank (Wu et al., 2018), or directly from the current batch (Chen et al., 2020a), which suggests the requirement of a large batch size. MoCo (He et al., 2020) maintains a queue of negative samples and turns one branch into a momentum encoder to improve the consistency of the queue. Initially, contrastive learning was primarily studied for pre- training convolutional networks. However, with the rising popularity of vision transformers (ViT), researchers have also explored its application in the context of ViT. (Chen et al., 2021b; Li et al., 2021a; Xie et al., 2021).

对比学习的核心思想(Gutmann和Hyva¨rinen,2010;Arora等人,2019)是促进正样本对并排斥负样本对。除了用于CLIP之外,对比学习也是自监督图像表示学习中的一个流行概念(Wu等人,2018;Ye等人,2019b;Tian等人,2020a;Chen等人,2020a;He等人,2020;Misra和Maaten,2020;Chen等人,2020c)。研究表明,被称为InfoNCE损失的对比目标(Oord等人,2018)可以被解释为最大化不同数据视图之间互信息的下界(Hjelm等人,2018;Bachman et al., 2019;Henaff, 2020)。

简而言之,所有仅图像对比学习方法(例如SimCLR(Chen等人,2020a),见图2.7(a),MoCo(He等人,2020),SimCLR-v2(Chen等人,2020b),MoCo-v2(Chen等人,2020c))共享相同的高级框架,如下所述。

>> 给定一幅图像,应用两个单独的数据增强;

>> 一个基础编码器后跟一个项目头,该项目头经过对比损失进行训练,以最大程度地提高一致性(即它们来自同一图像或不来自同一图像);

>> 项目头被丢弃,用于下游任务。

然而,对比学习的一个缺点是需要大量的负样本。这这些样本可以保存在内存库中(Wu等人,2018),也可以直接保存在当前批次中(Chen等人,2020a),这表明需要更大的批次大小。MoCo(He等人,2020)维护了一个负样本队列,并将一个分支转换为动量编码器,以提高队列的一致性。最初,对比学习主要用于预训练卷积网络。然而,随着视觉transformers(ViT)的日益流行,研究人员也在探索其在ViT背景下的应用(Chen等人,2021b;Li等人,2021a;Xie等人,2021)。

Figure 2.8: Overview of BEiT pre-training for image transformers. Image credit: Bao et al. (2022).图像转换器的BEiT预训练概述

Non-contrastive learning.非对比学习

Recent self-supervised learning methods do not depend on negative samples. The use of negatives is replaced by asymmetric architectures (e.g., BYOL (Grill et al., 2020), SimSiam (Chen and He, 2021)), dimension de-correlation (e.g., Barlow twins (Zbontar et al., 2021), VICReg (Bardes et al., 2021), Whitening (Ermolov et al., 2021)), and clustering (e.g., SWaV (Caron et al., 2020), DINO (Caron et al., 2021), Caron et al. (2018); Amrani et al. (2022); Assran et al. (2022); Wang et al. (2023b)), etc.

For example, as illustrated in Figure 2.7(b), in SimSiam (Chen and He, 2021), two augmented views of a single image are processed by an identical encoder network. Subsequently, a prediction MLP is applied to one view, while a stop-gradient operation is employed on the other. The primary objective of this model is to maximize the similarity between the two views. It is noteworthy that SimSiam relies on neither negative pairs nor a momentum encoder.

Another noteworthy method, known as DINO (Caron et al., 2021) and illustrated in Figure 2.7(c), takes a distinct approach. DINO involves feeding two distinct random transformations of an input image into both the student and teacher networks. Both networks share the same architecture but have different parameters. The output of the teacher network is centered by computing the mean over the batch. Each network outputs a feature vector that is normalized with a temperature softmax applied to the feature dimension. The similarity between these features is quantified using a cross- entropy loss. Additionally, a stop-gradient operator is applied to the teacher network to ensure that gradients propagate exclusively through the student network. Moreover, DINO updates the teacher’s parameters using an exponential moving average of the student’s parameters.

最近的自监督学习方法不依赖于负样本。负样本的使用被不对称架构(例如BYOL(Grill等人,2020),SimSiam(Chen和He,2021)),维度去相关(例如Barlow twins(Zbontar等人,2021),VICReg(Bardes等人,2021),Whitening(Ermolov等人,2021))和聚类(例如SWaV(Caron等人,2020),DINO(Caron等人,2021),Caron等人(2018);Amrani等人(2022);Assran等人(2022);Wang等人(2023b))等方法所取代。

例如,如图2.7(b)所示,在SimSiam(Chen和He,2021)中,单个图像的两个增强视图经过相同的编码器网络处理。随后,在一个视图上应用预测MLP,而在另一个视图上使用stop-gradient停止梯度操作。该模型的主要目标是最大化两个视图之间的相似性。值得注意的是,SimSiam既不依赖于负对也不依赖于动量编码器。

另一个值得注意的方法,称为DINO(Caron等人,2021),如图2.7(c)所示,采用了一种独特的方法。DINO涉及将输入图像的两个不同的随机变换馈送到学生和教师网络中。两个网络共享相同的架构,但具有不同的参数。教师网络的输出通过计算批次的均值来居中。每个网络输出一个特征向量,该向量通过应用于特征维度的温度softmax进行归一化。这些特征之间的相似性使用交叉熵损失来量化。此外,stop-gradient运算符应用于教师网络,以确保梯度仅通过学生网络传播。此外,DINO使用学生参数的指数移动平均值来更新教师参数。

2.4.2、Masked Image Modeling遮蔽图像建模

Masked language modeling (Devlin et al., 2019) is a powerful pre-training task that has revolution- ized the NLP research. To mimic the success of BERT pre-training for NLP, the pioneering work BEiT (Bao et al., 2022), as illustrated in Figure 2.8, proposes to perform masked image modeling (MIM) to pre-train image transformers. Specifically,

>>Image tokenizer: In order to perform masked token prediction, an image tokenizer is required to tokenize an image into discrete visual tokens, so that these tokens can be treated just like an ad- ditional set of language tokens. Some well-known learning methods for image tokenziers include VQ-VAE (van den Oord et al., 2017), VQ-VAE-2 (Razavi et al., 2019), VQ-GAN (Esser et al., 2021), ViT-VQGAN (Yu et al., 2021), etc. These image tokenizers have also been widely used for autoregressive image generation, such as DALLE (Ramesh et al., 2021a), Make-A-Scene (Gafni et al., 2022), Parti (Yu et al., 2022b), to name a few.

>>Mask-then-predict: The idea of MIM is conceptually simple: models accept the corrupted input image (e.g., via random masking of image patches), and then predict the target of the masked con- tent (e.g., discrete visual tokens in BEiT). As discussed in iBOT (Zhou et al., 2021), this training procedure can be understood as knowledge distillation between the image tokenizer (which serves as the teacher) and the BEiT encoder (which serves as the student), while the student only sees partial of the image.

遮蔽语言建模(Devlin等人,2019)是一项强大的预训练任务,已经彻底改变了自然语言处理领域的研究。为了模仿BERT在自然语言处理领域的成功,开创性的工作BEiT(Bao等人,2022),如图2.8所示,提出了执行遮蔽图像建模(MIM)来预训练图像transformers。具体而言,

>> 图像标记器:为了执行遮蔽标记预测,需要一个图像标记器,将图像标记为离散的视觉标记,以便这些标记可以像额外的语言标记一样处理。一些知名的图像标记方法包括VQ-VAE(van den Oord等人,2017),VQ-VAE-2(Razavi等人,2019),VQ-GAN(Esser等人,2021),ViT-VQGAN(Yu等人,2021),等等。这些图像标记器也广泛用于自回归图像生成,如DALLE(Ramesh等人,2021a),Make-A-Scene(Gafni等人,2022),Parti(Yu等人,2022b),等等。

>> 掩码后预测:MIM的思想在概念上很简单:模型接受损坏的输入图像(例如,通过对图像块进行随机掩码),然后预测被掩码内容的目标(例如,BEiT中的离散视觉标记)。正如iBOT(Zhou等人,2021)中讨论的那样,这种训练过程可以理解为图像标记器(作为教师)和BEiT编码器(作为学生)之间的知识蒸馏,而学生只看到图像的部分。

Figure 2.9: Illustration of Masked Autoencoder (MAE)

Figure 2.9: Illustration of Masked Autoencoder (MAE) (He et al., 2022a) that uses raw pixel values for MIM training, and MaskFeat (Wei et al., 2021) that uses different features as the targets. HOG, a hand-crafted feature descriptor, was found to work particularly well in terms of both performance and efficiency. Image credit: He et al. (2022a) and Wei et al. (2021). 图2.9:使用原始像素值进行MIM训练的mask Autoencoder (MAE) (He et al., 2022a)和使用不同特征作为目标的MaskFeat (Wei et al., 2021)的说明。HOG是一个手工制作的特征描述符,在性能和效率方面都表现得特别好。图片来源:He et al. (2022a)和Wei et al.(2021)。 Targets目标:两类(低级像素特征【更细粒度图像理解】、高级特征),损失函数的选择取决于目标的性质,离散标记的目标(通常使用交叉熵损失)+像素值或连续值特征(选择是ℓ1、ℓ2或余弦相似度损失)

In Peng et al. (2022b), the authors have provided a unified view of MIM: a teacher model, a normalization layer, a student model, an MIM head, and a proper loss function. The most sig- nificant difference among all these models lies in the reconstruction targets, which can be pixels, discrete image tokens, features from pre-trained models, and outputs from the momentum updated teacher. Specifically, the targets can be roughly grouped into two categories.

>>Low-level pixels/features as targets: MAE (He et al., 2022a), SimMIM (Xie et al., 2022b), Con- vMAE (Gao et al., 2022), HiViT (Zhang et al., 2022d), and GreenMIM (Huang et al., 2022a) leverage either original or normalized pixel values as the target for MIM. These methods have typically explored the use of a plain Vision Transformer (Dosovitskiy et al., 2021) or the Swin Transformer (Liu et al., 2021) as the backbone architecture. MaskFeat (Wei et al., 2021) intro- duced the Histogram of Oriented Gradients (HOG) feature descriptor as the target for MIM (see Figure 2.9(b)). Meanwhile, Ge2-AE (Liu et al., 2023b) employed both pixel values and frequency information obtained from the 2D discrete Fourier transform as the target. Taking MAE (He et al., 2022a) as an example (Figure 2.9(a)), the authors show that using pixel values as targets works particularly well. Specifically, a large random subset of images (e.g., 75%) is masked out; then, the image encoder is only applied to visible patches, while mask tokens are introduced after the encoder. It was shown that such pre-training is especially effective for object detection and seg- mentation tasks, which require finer-grained image understanding.

>>High-level features as targets: BEiT (Bao et al., 2022), CAE (Chen et al., 2022g), SplitMask (El- Nouby et al., 2021), and PeCo (Dong et al., 2023) involve the prediction of discrete tokens using learned image tokenizers. MaskFeat (Wei et al., 2021) takes a different approach by proposing direct regression of high-level features extracted from models like DINO (Caron et al., 2021) and DeiT (Touvron et al., 2021). Expanding this idea, MVP (Wei et al., 2022b) and EVA (Fang et al., 2023) make feature prediction using image features from CLIP as target features. Additionally, other methods such as data2vec (Baevski et al., 2022), MSN (Assran et al., 2022), ConMIM (Yi et al., 2022), SIM (Tao et al., 2023), and BootMAE (Dong et al., 2022) propose to construct regression feature targets by leveraging momentum-updated teacher models to enhance online learning. The choice of loss functions depends on the nature of the targets: cross-entropy loss is typically used when the targets are discrete tokens, while ℓ1, ℓ2, or cosine similarity losses are common choices for pixel values or continuous-valued features.

在Peng等人(2022b)中,作者提供了对MIM的统一视图:教师模型、规范化层、学生模型、MIM头部和适当的损失函数。所有这些模型中最重要的区别在于重建目标,可以是像素、离散图像标记、来自预训练模型的特征以及来自动量更新的教师的输出。具体而言,这些目标可以粗略分为两类。

>> 低级像素/特征作为目标:MAE(He等人,2022a),SimMIM(Xie等人,2022b),ConvMAE(Gao等人,2022),HiViT(Zhang等人,2022d)和GreenMIM(Huang等人,2022a)使用原始或归一化的像素值作为MIM的目标。这些方法通常探讨了使用普通的Vision Transformer(Dosovitskiy等人,2021)或Swin Transformer(Liu等人,2021)作为骨干架构。MaskFeat(Wei等人,2021)引入了方向梯度直方图(HOG)特征描述符作为MIM的目标(见图2.9(b))。同时,Ge2-AE(Liu等人,2023b)将二维离散傅里叶变换得到的像素值和频率信息作为目标。以MAE(He等人,2022a)为例(图2.9(a)),作者表明使用像素值作为目标效果特别好。具体来说,一个大的随机图像子集(例如,75%)被屏蔽掉;然后,图像编码器仅应用于可见的patch补丁,而在编码器之后引入掩码标记。结果表明,这种预训练对需要更细粒度图像理解的目标检测和分割任务特别有效。

>> 高级特征作为目标:BEiT(Bao等人,2022),CAE(Chen等人,2022g),SplitMask(El-Nouby等人,2021)和PeCo(Dong等人,2023)通过使用学习的图像标记器来预测离散标记,采用了不同的方法。MaskFeat(Wei等人,2021)通过提出从DINO(Caron等人,2021)和DeiT(Touvron等人,2021)等模型中提取的高级特征的直接回归,提出了不同的方法。扩展这个思想,MVP(Wei等人,2022b)和EVA(Fang等人,2023)使用来自CLIP的图像特征作为目标特征进行特征预测。此外,其他方法,如data2vec(Baevski等人,2022),MSN(Assran等人,2022),ConMIM(Yi等人,2022),SIM(Tao等人,2023)和BootMAE(Dong等人,2022),提出利用动量更新的教师模型构建回归特征目标,以增强在线学习。损失函数的选择取决于目标的性质:对于离散标记的目标,通常使用交叉熵损失,而对于像素值或连续值特征,常见选择是ℓ1、ℓ2或余弦相似度损失。

Figure 2.10: Overview of UniCL (Yang et al., 2022a) that performs unified contrastive pre-training on image-text and image-label data. Image credit: Yang et al. (2022a).对图像-文本和图像-标签数据进行统一对比预训练的UniCL概述

MIM for video pre-training视频预训练的MIM:将MIM扩展到视频预训练,如BEVT/VideoMAE/Feichtenhofer

Naturally, there are recent works on extending MIM to video pre-training. Prominent examples include BEVT (Wang et al., 2022c), MAE as spatiotemporal learner (Feichtenhofer et al., 2022), VideoMAE (Tong et al., 2022), and VideoMAEv2 (Wang et al., 2023e). Taking Feichtenhofer et al. (2022) as an example. This paper studies a conceptually simple extension of MAE to video pre-training via randomly masking out space-time patches in videos and learns an autoencoder to reconstruct them in pixels. Interestingly, the authors found that MAE learns strong video representations with almost no inductive bias on space-time, and spacetime-agnostic random masking performs the best, with an optimal masking ratio as high as 90%.

自然地,有一些最近的工作将MIM扩展到视频预训练。突出的例子包括BEVT(Wang等人,2022c),MAE作为空间时间学习器(Feichtenhofer等人,2022),VideoMAE(Tong等人,2022)和VideoMAEv2(Wang等人,2023e)。以Feichtenhofer等人(2022)为例。本文研究了一种概念上简单的将MAE扩展到视频预训练的方法,通过随机屏蔽视频中的时空补丁,并学习一个自编码器以像素为单位重建它们。作者发现MAE在几乎没有时空上的归纳偏差的情况下学习了强大的视频表示,而与时空无关的随机掩蔽表现最好,其最佳掩蔽率高达90%。

Lack of learning global image representations全局图像表示的不足,如iBOT/DINO/BEiT等

MIM is an effective pre-training method that provides a good parameter initialization for further model finetuning. However, the vanilla MIM pre-trained model does not learn a global image representation. In iBOT (Zhou et al., 2021), the authors propose to enhance BEiT (Bao et al., 2022) with a DINO-like self-distillation loss (Caron et al., 2021) to force the [CLS] token to learn global image representations. The same idea has been extended to DINOv2 (Oquab et al., 2023).

MIM是一种有效的预训练方法,为进一步的模型微调提供了良好的参数初始化。然而,纯粹的MIM预训练模型并不学习全局图像表示。在iBOT(Zhou等人,2021)中,作者提出了使用类似于DINO的自我蒸馏损失(Caron等人,2021)增强BEiT(Bao等人,2022)的方法,以迫使[CLS]标记学习全局图像表示。相同的想法已经扩展到DINOv2(Oquab等人,2023)。

Scaling properties of MIM—MIM的规模特性:尚不清楚探讨将MIM预训练扩展到十亿级仅图像数据的规模

MIM is scalable in terms of model size. For example, we can per- form MIM pre-training of a vision transformer with billions of parameters. However, the scaling property with regard to data size is less clear. There are some recent works that aim to understand the data scaling of MIM (Xie et al., 2023b; Lu et al., 2023a); however, the data scale is limited to millions of images, rather than billions, except Singh et al. (2023) that studies the effectiveness of MAE as a so-called “pre-pretraining” method for billion-scale data. Generally, MIM can be con- sidered an effective regularization method that helps initialize a billion-scale vision transformer for downstream tasks; however, whether or not scaling the MIM pre-training to billion-scale image-only data requires further exploration.

MIM在模型大小方面是可扩展的。例如,我们可以对具有数十亿个参数的视觉transformer进行MIM预训练。然而,关于数据大小的规模属性不太明确。一些最近的工作旨在了解MIM的数据规模(Xie等人,2023b;Lu等人,2023a);然而,数据规模仅限于数百万张图像,而不是数十亿张,除了Singh等人(2023)研究了MAE作为所谓的“预-预训练”方法对十亿级数据的有效性。总的来说,MIM可以被视为一种有效的正则化方法,有助于初始化后续任务的十亿级视觉transformer ;然而,是否需要进一步探讨将MIM预训练扩展到十亿级仅图像数据的规模特性,目前尚不清楚。

2.5、Synergy Among Different Learning Approaches不同学习方法的协同作用

Till now, we have reviewed different approaches to pre-training image backbones, especially for vi- sion transformers. Below, we use CLIP as the anchor point, and discuss how CLIP can be combined with other learning methods.

到目前为止,我们已经回顾了不同的图像主干预训练方法,尤其是针对视觉transformers的方法。下面,我们以CLIP为锚点,讨论如何将CLIP与其他学习方法相结合。

Combining CLIP with label supervision将CLIP与标签监督相结合,如UniCL、LiT、MOFI

Noisy labels and text supervision can be jointly used for image backbone pre-training. Some representative works are discussed below.

>>UniCL (Yang et al., 2022a) proposes a principled way to use image-label and image-text data together in a joint image-text-label space for unified contrastive learning, and Florence (Yuan et al., 2021) is a scaled-up version of UniCL. See Figure 2.10 for an illustration of the framework.

>>LiT (Zhai et al., 2022b) uses a pre-trained ViT-g/14 image encoder learned from supervised pre- training on the JFT-3B dataset, and then makes the image encoder open-vocabulary by learning an additional text tower via contrastive pre-training on image-text data. Essentially, LiT teaches a text model to read out good representations from a pre-trained image model for new tasks.

>>MOFI (Wu et al., 2023d) proposes to learn image representations from 1 billion noisy entity- annotated images, and uses both image classification and contrastive losses for model training. For image classification, entities associated with each image are considered as labels, and supervised pre-training on a large number of entities is conducted; for constrastive pre-training, entity names are treated as free-form text, and are further enriched with entity descriptions.

噪声标签和文本监督可以共同用于图像主干预训练。下面讨论一些代表性的工作。

>> UniCL(Yang等人,2022a)提出了一种原则性的方法,将图像标签和图像-文本数据结合在一个联合图像-文本-标签空间中进行统一对比学习,而Florence(Yuan等人,2021)是UniCL的一个规模较大的版本。请参见图2.10,了解该框架的示意图。

>> LiT(Zhai等人,2022b)使用了从JFT-3B数据集的监督预训练中学到的预训练ViT-g/14图像编码器,然后通过在图像-文本数据上进行对比预训练来使图像编码器具备开放词汇的能力。从本质上讲,LiT教文本模型从预训练的图像模型中读出新的任务的良好表示。

>> MOFI(Wu等人,2023d)提出从10亿个带有噪声实体注释的图像中学习图像表示,并使用图像分类和对比损失进行模型训练。对于图像分类,将与每个图像相关联的实体视为标签,并进行大量实体的监督预训练;对于对比预训练,将实体名称视为自由形式的文本,并进一步丰富实体描述。

Figure 2.11: Illustration of MVP (Wei et al., 2022b), EVA (Fang et al., 2023) and BEiTv2 (Peng

Combining CLIP with image-only (non-)contrastive learning将CLIP与图像仅(非)对比学习相结合:如SLIP、xCLIP

CLIP can also be enhanced with image-only self-supervision. Specifically,

>>SLIP (Mu et al., 2021) proposes a conceptually simple idea to combine SimCLR (Chen et al., 2020a) and CLIP for model training, and shows that SLIP outperforms CLIP on both zero-shot transfer and linear probe settings. DeCLIP (Li et al., 2022g) mines self-supervised learning signals on each modality to make CLIP training data-efficient. In terms of image supervision, the SimSam framework (Chen and He, 2021) is used.

>>xCLIP (Zhou et al., 2023c) makes CLIP non-contrastive via introducing additional sharpness and smoothness regularization terms borrowed from the image-only non-contrastive learning litera- ture. However, the authors show that only non-contrastive pre-training (nCLIP) is not sufficient to achieve strong performance on zero-shot image classification, and it needs to be combined with the original CLIP for enhanced performance.

CLIP也可以通过图像仅自我监督进行增强。具体而言,

>> SLIP(Mu等人,2021)提出了一个概念上简单的思路,将SimCLR(Chen等人,2020a)与CLIP结合起来进行模型训练,并表明SLIP在零样本迁移和线性探测设置上优于CLIP。DeCLIP(Li等人,2022g)在每个模态上挖掘自监督学习信号,使CLIP训练数据高效。在图像监督方面,使用了SimSam框架(Chen和He,2021)。

>> xCLIP(Zhou等人,2023c)通过引入额外的锐度和平滑度正则项,借鉴了仅图像的非对比学习文献的方法,使CLIP成为非对比。然而,作者表明仅非对比预训练(nCLIP)不足以在零样本图像分类上获得较强的性能,需要与原始CLIP相结合以增强性能。

Combining CLIP with MIM将CLIP与MIM相结合

浅层交互:将CLIP提取的图像特征用作MIM训练的目标(CLIP图像特征可能捕捉了在MIM训练中缺失的语义),比如MVP/BEiTv2等

There are recent works that aim to combine CLIP and MIM for model training. We group them into two categories.

>>Shallow interaction. It turns out that image features extracted from CLIP are a good target for MIM training, as the CLIP image features potentially capture the semantics that are missing in MIM training. Along this line of work, as shown in Figure 2.11, MVP (Wei et al., 2022b)proposes to regress CLIP features directly, while BEiTv2 (Peng et al., 2022a) first compresses the information inside CLIP features into discrete visual tokens, and then performs regular BEiT train- ing. Similar use of CLIP features as MIM training target has also been investigated in EVA (Fang et al., 2023), CAEv2 (Zhang et al., 2022c), and MaskDistill (Peng et al., 2022b). In EVA-02 (Fang et al., 2023), the authors advocate alternative learning of MIM and CLIP representations. Specifi- cally, an off-the-shelf CLIP model is used to provide a feature target for MIM training; while the MIM pre-trained image backbone is used to initialize CLIP training. The MIM representations are used to finetune various downstream tasks while the learned frozen CLIP embedding enables zero-shot image classification and other applications.

最近有一些工作旨在将CLIP和MIM结合进行模型训练。我们将它们分为两类。

>> 浅层交互。事实证明,从CLIP提取的图像特征是MIM训练的良好目标,因为CLIP图像特征可能捕捉了在MIM训练中缺失的语义。沿着这一思路,如图2.11所示,MVP(Wei等人,2022b)提出直接回归CLIP特征,而BEiTv2(Peng等人,2022a)首先将CLIP特征中的信息压缩成离散的视觉标记,然后进行常规的BEiT训练。类似使用CLIP特征作为MIM训练目标的方法还在EVA(Fang等人,2023),CAEv2(Zhang等人,2022c)和MaskDistill(Peng等人,2022b)中进行了研究。在EVA-02(Fang等人,2023)中,作者提倡了MIM和CLIP表示的替代学习。具体来说,使用现成的CLIP模型为MIM训练提供特征目标;而使用MIM预训练的图像主干初始化CLIP训练。MIM表示用于微调各种下游任务,而学到的冻结CLIP嵌入使零样本图像分类和其他应用成为可能。

Figure 2.12: Overview of BEiT-3 that performs masked data modeling on both image/text and joint image-text data via a multiway transformer. Image credit: Wang et al. (2022d).通过多路转换器对图像/文本和联合图像-文本数据执行掩码数据建模的BEiT-3概述

深度整合:BERT和BEiT的组合非常有前景,比如BEiT-3

>>Deeper integration. However, instead of using CLIP as targets for MIM training, if one aims to combine CLIP and MIM for joint model training, MIM does not seem to improve a CLIP model at scale (Weers et al., 2023; Li et al., 2023m).

>>Although the combination of CLIP and MIM does not lead to a promising result at the current stage, the combination of BERT and BEiT is very promising, as evidenced in BEiT-3 (Wang et al., 2022d) (see Figure 2.12), where the authors show that masked data modeling can be performed on both image/text and joint image-text data via the design of a multiway transformer, and state- of-the-art performance can be achieved on a wide range of vision and vision-language tasks.

>> 深度整合。然而,如果有人的目标是将CLIP和MIM结合进行联合模型训练,而不是将CLIP用作MIM训练的目标,那么在大规模情况下,MIM似乎并不能改进CLIP模型(Weers等人,2023;Li等人,2023m)。

>> 尽管当前阶段CLIP和MIM的组合并没有取得令人满意的结果,但BERT和BEiT的组合非常有前景,正如BEiT-3(Wang等人,2022d)所证明的,该工作通过设计多向transformers在图像/文本和联合图像-文本数据上执行遮蔽数据建模,实现了在广泛的视觉和视觉-语言任务上实现了最新的性能。

2.6、Multimodal Fusion, Region-Level and Pixel-Level Pre-training多模态融合、区域级和像素级预训练

Till now, we have focused on the methods of pre-training image backbones from scratch, but not on pre-training methods that power multimodal fusion, region-level and pixel-level image under- standing. These methods typically use a pre-trained image encoder at the first hand to perform a second-stage pre-training. Below, we briefly discuss these topics.

到目前为止,我们关注了从头开始的图像主干预训练方法,但没有关注用于多模态融合、区域级和像素级图像理解的预训练方法。这些方法通常首先使用预训练的图像编码器进行第二阶段的预训练。以下,我们简要讨论这些主题。

2.6.1、From Multimodal Fusion to Multimodal LLM从多模态融合到多模态LLM

基于双编码器的CLIP(图像和文本独立编码+仅通过两者特征向量的简单点乘实现模态交互):擅长图像分类/图像-文本检索,不擅长图像字幕/视觉问答

For dual encoders such as CLIP (Radford et al., 2021), image and text are encoded separately, and modality interaction is only handled via a simple dot product of image and text feature vectors. This can be very effective for zero-shot image classification and image-text retrieval. However, due to the lack of deep multimodal fusion, CLIP alone performs poorly on the image captioning (Vinyals et al., 2015) and visual question answering (Antol et al., 2015) tasks. This requires the pre-training of a fusion encoder, where additional transformer layers are typically employed to model the deep interaction between image and text representations. Below, we review how these fusion-encoder pre-training methods are developed over time.

对于像CLIP(Radford等人,2021)这样的双编码器,图像和文本是分别编码的,而模态交互仅通过图像和文本特征向量的简单点乘来处理。这对于零样本图像分类和图像-文本检索非常有效。然而,由于缺乏深度多模态融合,仅使用CLIP在图像字幕(Vinyals等人,2015)和视觉问答(Antol等人,2015)任务上表现不佳。这需要对融合编码器进行预训练,其中通常使用额外的transformers层来模拟图像和文本表示之间的深层交互。下面,我们回顾了随着时间推移而发展的这些融合编码器预训练方法。

OD-based models基于OD的模型:使用共同注意力进行多模态融合(如ViLBERT/LXMERT)、将图像特征作为文本输入的软提示(如VisualBERT)

Most early methods use pre-trained object detectors (ODs) to extract visual features. Among them, ViLBERT (Lu et al., 2019) and LXMERT (Tan and Bansal, 2019) use co- attention for multimodal fusion, while methods like VisualBERT (Li et al., 2019b), Unicoder-VL (Li et al., 2020a), VL-BERT (Su et al., 2019), UNITER (Chen et al., 2020d), OSCAR (Li et al., 2020b),VILLA (Gan et al., 2020) and VinVL (Zhang et al., 2021) treat image features as soft prompts of the text input to be sent into a multimodal transformer.

大多数早期方法使用预训练的目标检测器(ODs)来提取视觉特征。其中,ViLBERT(Lu等人,2019)和LXMERT(Tan和Bansal,2019)使用共同注意力进行多模态融合,

而像VisualBERT(Li等人,2019b)、Unicoder-VL(Li等人,2020a)、VL-BERT(Su等人,2019)、UNITER(Chen等人,2020d)、OSCAR(Li等人,2020b)、VILLA(Gan等人,2020)和VinVL(Zhang等人,2021)等方法将图像特征作为文本输入发送到多模态transformer的软提示。

Figure 2.13: Illustration of UNITER (Chen et al., 2020d) and CoCa (Yu et al., 2022a),

Figure 2.13: Illustration of UNITER (Chen et al., 2020d) and CoCa (Yu et al., 2022a), which serve as a classical and a modern model that performs pre-training on multimodal fusion. CoCa also pre-trains the image backbone from scratch. Specifically, UNITER extracts image features via an off-the-shelf object detector and treat image features as soft prompts of the text input to be sent into a multimodal transformer. The model is pre-trained over a few millions of image-text pairs. For CoCa, an image encoder and a text encoder is used, with a multimodal transformer stacked on top. Both contrastive loss and captioning loss are used for model training, and the model is trained over billions of image-text pairs and JFT data. Image credit: Chen et al. (2020d), Yu et al. (2022a).

图2.13:UNITER (Chen et al., 2020d)和CoCa (Yu et al., 2022a)的示意图,分别作为经典模型和现代模型,对多模态融合进行预训练。CoCa还从头开始对图像主干进行预训练。具体来说,UNITER通过现成的对象检测器提取图像特征,并将图像特征作为文本输入的软提示发送到多模态变压器。该模型是在数百万图像-文本对上进行预训练的。对于CoCa,使用了一个图像编码器和一个文本编码器,并在顶部堆叠了一个多模态变压器。对比损失和字幕损失都用于模型训练,该模型是在数十亿的图像文本对和JFT数据上训练的。图片来源:Chen等人(2020d), Yu等人(2022a)。

End-to-end models端到端模型:早期基于CNN提取图像特征(如PixelBERT/SOHO/CLIP-ViL)→直接将图像块特征和文本token嵌入输入到多模态transformers(如ViLT/ViTCAP)→ViT(简单地使用ViT作为图像编码器,如Swintransformers/ALBEF/METER/VLMo/X-VLM/BLIP/SimVLM/FLAVA/CoCa/UNITER/CoCa)

Now, end-to-end pre-training methods become the mainstream. Some early methods use CNNs to extract image features, such as PixelBERT (Huang et al., 2020), SOHO (Huang et al., 2021), and CLIP-ViL (Shen et al., 2022b), while ViLT (Kim et al., 2021) and ViTCAP (Fang et al., 2022) directly feed image patch features and text token embeddings into a multimodal transformer. Due to the popularity of vision transformer (ViT), now most methods simply use ViT as the image encoder (e.g., plain ViT (Dosovitskiy et al., 2021) and Swin trans- former (Liu et al., 2021)). Prominent examples include ALBEF (Li et al., 2021b), METER (Dou et al., 2022b), VLMo (Wang et al., 2021b), X-VLM (Zeng et al., 2022), BLIP (Li et al., 2022d), SimVLM (Wang et al., 2022g), FLAVA (Singh et al., 2022a) and CoCa (Yu et al., 2022a).

An illustration of UNITER (Chen et al., 2020d) and CoCa (Yu et al., 2022a) is shown in Figure 2.13. They serve as two examples of a classical model and a modern model, respectively, which performs pre-training on multimodal fusion. CoCa also performs image backbone pre-training directly, as all the model components are trained from scratch. Please refer to Chapter 3 of Gan et al. (2022) for a comprehensive literature review.

现在,端到端预训练方法成为主流。一些早期方法使用CNN来提取图像特征,例如PixelBERT(Huang等人,2020)、SOHO(Huang等人,2021)和CLIP-ViL(Shen等人,2022b),而ViLT(Kim等人,2021)和ViTCAP(Fang等人,2022)直接将图像块特征和文本token嵌入输入到多模态transformers中。由于视觉transformers(ViT)的流行,现在大多数方法都简单地使用ViT作为图像编码器(例如,普通ViT(Dosovitskiy等人,2021)和Swintransformers(Liu等人,2021))。杰出的例子包括ALBEF(Li等人,2021b)、METER(Dou等人,2022b)、VLMo(Wang等人,2021b)、X-VLM(Zeng等人,2022)以及BLIP(Li等人,2022d)、SimVLM(Wang等人,2022g)、FLAVA(Singh等人,2022a)和CoCa(Yu等人,2022a)。UNITER(Chen等人,2020d)和CoCa(Yu等人,2022a)的示意图如图2.13所示。它们分别是经典模型和现代模型的两个示例,它们执行了多模态融合的预训练。CoCa还直接执行图像主干预训练,因为所有模型组件都是从头开始训练的。有关详细文献综述,请参见Gan等人(2022)的第3章。

Trend to multimodal LLM趋势是多模态LLM:早期模型(侧重于大规模预训练,如Flamingo/GIT/PaLI/PaLI-X)→近期工作(侧重于基于LLMs的指令调优,如LLaVA/MiniGPT-4)

Instead of using masked language modeling, image-text matching and image-text contrastive learning, SimVLM (Wang et al., 2022g) uses a simple PrefixLM loss for pre-training. Since then, multimodal language models have become popular. Early models focus on large-scale pre-training, such as Flamingo (Alayrac et al., 2022), GIT (Wang et al., 2022a), PaLI (Chen et al., 2022h), PaLI-X (Chen et al., 2023g), while recent works focus on using pre- trained LLMs for instruction tuning, such as LLaVA (Liu et al., 2023c) and MiniGPT-4 (Zhu et al., 2023a). A detailed discussion on this topic is provided in Chapter 5.

SimVLM (Wang et al., 2022g)使用简单的PrefixLM损失进行预训练,而不是使用掩码语言建模、图像-文本匹配和图像-文本对比学习。此后,多模态语言模型变得流行起来。早期模型侧重于大规模预训练,例如Flamingo(Alayrac等人,2022)、GIT(Wang等人,2022a)、PaLI(Chen等人,2022h)、PaLI-X(Chen等人,2023g),而最近的工作侧重于使用预训练的LLMs进行指令调优,例如LLaVA(Liu等人,2023c)和MiniGPT-4(Zhu等人,2023a)。有关此主题的详细讨论,请参见第5章。

Figure 2.14: Overview of GLIP that performs grounded language-image pre-training for open-set object detection. Image credit: Li et al. (2022f).

2.6.2、Region-Level Pre-training区域级预训练

CLIP:通过对比预训练学习全局图像表示+不适合细粒度图像理解的任务(如目标检测【包含两个子任务=定位+识别】等)

使用2阶段检测器从CLIP中提取知识(ViLD/RegionCLIP)、基于语言-图像的预训练(将检测重新定义为短语定位问题,如MDETR/GLIP)、视觉语言理解任务进行了统一的预训练(GLIPv2/FIBER)、

基于图像-文本模型进行微调(如OVR-CNN)、只训练分类头(如Detic)、

CLIP learns global image representations via contrastive pre-training. However, for tasks that re- quire fine-grained image understanding such as object detection, CLIP is not enough. Object detec- tion contains two sub-tasks: localization and recognition. (i) Localization aims to locate the pres- ence of objects in an image and indicate the position with a bounding box, while (ii) recognition determines what object categories are present in the bounding box. By following the reformulation that converts image classification to image retrieval used in CLIP, generic open-set object detection can be achieved.

Specifically, ViLD (Gu et al., 2021) and RegionCLIP (Zhong et al., 2022a) distill knowledge from CLIP with a two-stage detector for zero-shot object detection. In MDETR (Kamath et al., 2021) and GLIP (Li et al., 2022e) (as shown in Figure 2.14), the authors propose to reformulate detection as a phrase grounding problem, and perform grounded language-image pre-training. GLIPv2 (Zhang et al., 2022b) and FIBER (Dou et al., 2022a) further perform unified pre-training for both grounding and vision-language understanding tasks. OVR-CNN (Zareian et al., 2021) finetunes an image-text model to detection on a limited vocabulary and relies on image-text pre-training for generalization to an open vocabulary setting. Detic (Zhou et al., 2022b) improves long-tail detection performance with weak supervision by training only the classification head on the examples where only image- level annotations are available. Other works include OV-DETR (Zang et al., 2022), X-DETR (Cai et al., 2022), FindIT (Kuo et al., 2022), PromptDet (Feng et al., 2022a), OWL-ViT (Minderer et al., 2022), GRiT (Wu et al., 2022b), to name a few. Recently, Grounding DINO (Liu et al., 2023h) is proposed to marry DINO (Zhang et al., 2022a) with grounded pre-training for open-set object detection. Please refer to Section 4.2 for a detailed review of this topic.

CLIP通过对比预训练学习全局图像表示。对于需要细粒度图像理解的任务,如目标检测,CLIP是不够的。目标检测包含两个子任务:定位和识别。 (i)定位旨在在图像中定位物体的存在并用边界框指示位置,而

(ii)识别旨在确定边界框中存在哪些物体类别。

通过遵循将图像分类转换为在CLIP中使用的图像检索,可以实现通用开放集目标检测。

具体来说,ViLD(Gu等人,2021)和RegionCLIP(Zhong等人,2022a)使用2阶段检测器从CLIP中提取知识,用于零样本目标检测。在MDETR(Kamath等人,2021)和GLIP(Li等人,2022e)(如图2.14所示)中,作者提出将检测重新定义为短语定位问题,并执行基于语言-图像的预训练。GLIPv2(Zhang等人,2022b)和FIBER(Dou等人,2022a)进一步对基础和视觉语言理解任务进行了统一的预训练。OVR-CNN(Zareian等人,2021)对图像-文本模型进行微调,以在有限的词汇表上进行检测,并依靠图像-文本预训练将其泛化到开放的词汇表设置。Detic(Zhou等人,2022b)通过在只有图像级注释可用的示例上只训练分类头,提高了弱监督下的长尾检测性能。其他作品包括OV-DETR(Zang等人,2022)、X-DETR(Cai等人,2022)、FindIT(Kuo等人,2022)、PromptDet(Feng等人,2022a)、OWL-ViT(Minderer等人,2022)、GRiT(Wu等人,2022b)等等。最近,提出了Grounding DINO(Liu等人,2023h)以将DINO(Zhang等人,2022a)与基于定位的预训练相结合,用于开放式目标检测。有关此主题的详细评论,请参见第4.2节。

2.6.3、Pixel-Level Pre-training像素级预训练(代表作SAE):

The Segment Anything Model (SAM) (Kirillov et al., 2023) is a recent vision foundation model for image segmentation that aims to perform pixel-level pre-training. Since its birth, it has attracted wide attention and spurred tons of follow-up works and applications. Below, we briefly review SAM, as a representative work for pixel-level visual pre-training.

As depicted in Figure 2.15, the objective of the Segment Anything project is to develop a founda- tional vision model for segmentation. This model is designed to be readily adaptable to a wide range of both existing and novel segmentation tasks, such as edge detection, object proposal generation, instance segmentation, open-vocabulary segmentation, and more. This adaptability is seamlessly accomplished through a highly efficient and user-friendly approach, facilitated by the integration of three interconnected components. Specifically,

“Segment Anything Model”(SAM)(Kirillov等人,2023)是一种最新的用于图像分割的视觉基础模型,旨在执行像素级预训练。自诞生以来,它引起了广泛关注,并激发了大量的后续工作和应用。以下,我们简要回顾一下SAM作为像素级视觉预训练的代表工作。

如图2.15所示,Segment Anything项目的目标是开发一个用于分割的基础视觉模型。该模型旨在能够轻松适应各种现有和新型的分割任务,如边缘检测、对象提议生成、实例分割、开放式词汇分割等。这种适应性是通过高效和用户友好的方法无缝实现的,这得益于三个相互连接的组件的集成。具体来说,

>>Task. The authors propose the promptable segmentation task, where the goal is to return a valid segmentation mask given any segmentation prompt, such as a set of points, a rough box or mask, or free-form text.

>>Model. The architecture of SAM is conceptually simple. It is composed of three main com- ponents: (i) a powerful image encoder (MAE (He et al., 2022a) pre-trained ViT); (ii) a prompt encoder (for sparse input such as points, boxes, and free-form text, the CLIP text encoder is used; for dense input such as masks, a convolution operator is used); and (iii) a lightweight mask de- coder based on transformer.

>>Data. To acquire large-scale data for pre-training, the authors develop a data engine that performs model-in-the-loop dataset annotation.

>> 任务。作者提出了可提示的分割任务,目标是在给定任何分割提示的情况下返回有效的分割掩码,例如一组点、一个粗糙的框或掩码、或自由格式的文本。

>> 模型。SAM的架构在概念上很简单。它由三个主要组成部分组成:(i)一个强大的图像编码器(MAE(He等人,2022a)预训练的ViT);(ii)提示编码器(用于稀疏输入的CLIP文本编码器,例如点、框和自由文本,用于密集输入的掩码(如使用卷积算子);

(iii)基于transformers的轻量级掩码解码器。

>> 数据。为了获取大规模的预训练数据,作者开发了一个执行模型-数据集标注的数据引擎。

Figure 2.15: Overview of the Segment Anything project, which

Figure 2.15: Overview of the Segment Anything project, which aims to build a vision foundation model for segmentation by introducing three interconnected components: a promptable segmenta-tion task, a segmentation model, and a data engine. Image credit: Kirillov et al. (2023).

图2.15:Segment Anything项目概述,该项目旨在通过引入三个相互关联的组件:提示式分割任务、分割模型和数据引擎,构建一个分割的视觉基础模型。图片来源:Kirillov et al.(2023)。

Concurrent to SAM与SAM同时并行:OneFormer(一种通用的图像分割框架)、SegGPT(一种统一不同分割数据格式的通用上下文学习框架)、SEEM(扩展了单一分割模型)

Parallel to SAM, many efforts have been made to develop general-purpose segmentation models as well. For example, OneFormer (Jain et al., 2023) develops a universal im- age segmentation framework; SegGPT (Wang et al., 2023j) proposes a generalist in-context learning framework that unifies different segmentation data formats; SEEM (Zou et al., 2023b) further ex- pands the types of supported prompts that a single segmentation model can handle, including points, boxes, scribbles, masks, texts, and referred regions of another image.

与SAM同时并行,开发通用分割模型也做了很多努力。例如,OneFormer(Jain等人,2023)开发了一种通用的图像分割框架;SegGPT(Wang等人,2023j)提出了一种统一不同分割数据格式的通用上下文学习框架;SEEM(Zou等人,2023b)进一步扩展了单一分割模型可以处理的支持提示类型,包括点、框、涂鸦、掩码、文本和另一图像的相关区域等。

Extensions of SAM—SAM的扩展到应用中的模型:Inpaint Anything/Edit Everything/Any-to-Any Style Transfer/Caption Anything→Grounding DINO/Grounding-SAM1

Extensions of SAM. SAM has spurred tons of follow-up works that extend SAM to a wide range of applications, e.g., Inpaint Anything (Yu et al., 2023c), Edit Everything (Xie et al., 2023a), Any- to-Any Style Transfer (Liu et al., 2023g), Caption Anything (Wang et al., 2023g), Track Any- thing (Yang et al., 2023b), Recognize Anything (Zhang et al., 2023n; Li et al., 2023f), Count Anything (Ma et al., 2023), 3D reconstruction (Shen et al., 2023a), medical image analysis (Ma and Wang, 2023; Zhou et al., 2023d; Shi et al., 2023b; Zhang and Jiao, 2023), etc. Additionally, recent works have attempted to develop models for detecting and segmenting anything in the open- vocabulary scenarios, such as Grounding DINO (Liu et al., 2023h) and Grounding-SAM1. For a comprehensive review, please refer to Zhang et al. (2023a) and some GitHub repos.2

SAM已经激发了大量的后续工作,将SAM扩展到各种应用中,例如Inpaint Anything(Yu等人,2023c)、Edit Everything(Xie等人,2023a)、Any-to-Any Style Transfer(Liu等人,2023g)、Caption Anything(Wang等人,2023g)、Track Anything(Yang等人,2023b)、Recognize Anything(Zhang等人,2023n;Li等人,2023f)、Count Anything(Ma等人,2023)、3D重建(Shen等人,2023a)、医学图像分析(Ma和Wang,2023;Zhou等人,2023d;Shi等人,2023b;Zhang和Jiao,2023)等等。此外,最近的研究尝试开发用于在开放词汇情景中检测和分割任何物体的模型,例如Grounding DINO(Liu等人,2023h)和Grounding-SAM1。有关全面的综述,请参阅Zhang等人(2023a)和一些GitHub资源库。

3、Visual Generation视觉生成

VG的目的(生成高保真的内容),作用(支持创意应用+合成训练数据),关键(生成严格与人类意图对齐的视觉数据,比如文本条件)

Visual generation aims to generate high-fidelity visual content, including images, videos, neural ra- diance fields, 3D point clouds, etc.. This topic is at the core of recently popular artificial intelligence generated content (AIGC), and this ability is crucial in supporting creative applications such as de- sign, arts, and multimodal content creation. It is also instrumental in synthesizing training data to help understand models, leading to the closed loop of multimodal content understanding and gen- eration. To make use of visual generation, it is critical to produce visual data that is strictly aligned with human intents. These intentions are fed into the generation model as input conditions, such as class labels, texts, bounding boxes, layout masks, among others. Given the flexibility offered by open-ended text descriptions, text conditions (including text-to-image/video/3D) have emerged as a pivotal theme in conditional visual generation.

In this chapter, we describe how to align with human intents in visual generation, with a focus on image generation. We start with the overview of the current state of text-to-image (T2I) generation in Section 3.1, highlighting its limitations concerning alignment with human intents. The core of this chapter is dedicated to reviewing the literature on four targeted areas that aim at enhancing alignments in T2I generation, i.e., spatial controllable T2I generation in Section 3.2, text-based image editing in Section 3.3, better following text prompts in Section 3.4, and concept customization in T2I generation in Section 3.5. At the end of each subsection, we share our observations on the current research trends and short-term future research directions. These discussions coalesce in Section 3.6, where we conclude the chapter by considering future trends. Specifically, we envision the development of a generalist T2I generation model, which can better follow human intents, to unify and replace the four separate categories of alignment works.

视觉生成旨在生成高保真的视觉内容,包括图像、视频、神经辐射场、3D点云等。这个主题是最近流行的人工智能生成内容(AIGC)的核心,这种能力对支持创意应用,如设计、艺术和多模态内容创作至关重要。它还有助于合成训练数据,帮助理解模型,从而形成多模态内容理解和生成的闭环。要利用视觉生成,关键是生成严格与人类意图对齐的视觉数据。这些意图作为输入条件输入到生成模型中,例如类别标签、文本、边界框、布局掩码等。由于开放性文本描述提供的灵活性,文本条件(包括文本到图像/视频/3D等)已经成为条件视觉生成中的关键主题。

在本章中,我们将重点介绍视觉生成中与人类意图对齐的方法,重点关注图像生成。我们从第3.1节中的文本到图像(T2I)生成的当前状态概述开始,突出了其在与人类意图对齐方面的局限性。本章的核心部分致力于回顾四个目标领域的文献,这些领域旨在增强T2I生成中的对齐,即第3.2节中的空间可控的T2I生成,第3.3节中的基于文本的图像编辑,第3.4节中更好地遵循文本提示,以及第3.5节中的T2I生成中的概念定制。在每个子节结束时,我们分享了对当前研究趋势和短期未来研究方向的观察。这些讨论在第3.6节中汇总,我们在该节中通过考虑未来趋势来总结本章。具体来说,我们设想开发一个通用的T2I生成模型,它可以更好地遵循人类意图,以统一和替代四个独立的对齐工作类别。

3.1、Overview概述

3.1.1、Human Alignments in Visual Generation视觉生成中的人类对齐:核心(遵循人类意图来合成内容),四类探究

空间可控T2I生成(将文本输入与其他条件结合起来→更好的可控性)、基于文本的图像编辑(基于多功能编辑工具)、更好地遵循文本提示(因生成过程不一定严格遵循指令)、视觉概念定制(专门的token嵌入或条件图像来定制T2I模型)

AI Alignment research in the context of T2I generation is the field of study dedicated to developing image generation models that can easily follow human intents to synthesize the desired generated visual content. Current literature typically focuses on one particular weakness of vanilla T2I models that prevents them from accurately producing images that align with human intents. This chapter delves into four commonly studied issues, as summarized in Figure 3.1 (a) and follows.

>>Spatial controllable T2I generation. Text serves as a powerful medium for human-computer interaction, making it a focal point in conditional visual generation. However, text alone falls short in providing precise spatial references, such as specifying open-ended descriptions for arbi- trary image regions with precise spatial configurations. Spatial controllable T2I generation (Yang et al., 2023b; Li et al., 2023n; Zhang and Agrawala, 2023) aims to combine text inputs with other conditions for better controllability, thereby facilitating users to generate the desired images.

>>Text-based image editing. Editing is another important means for acquiring human-intended vi- sual content. Users might possess near-perfect images, whether generated by a model or naturally captured by a camera, but these might require specific adjustments to meet their intent. Editing has diverse objectives, ranging from locally modifying an object to globally adjusting the image style. Text-based image editing (Brooks et al., 2023) explores effective ways to create a versatile editing tool.

>>Better following text prompts. Despite T2I models being trained to reconstruct images con- ditioned on the paired text input, the training objective does not necessarily ensure or directly

optimize for a strict adherence to text prompts during image generation. Studies (Yu et al., 2022b; Rombach et al., 2022) have shown that vanilla T2I models might overlook certain text descriptions and generate images that do not fully correspond to the input text. Research (Feng et al., 2022b; Black et al., 2023) along this line explores improvements to have T2I models better following text prompts, thereby facilitating the easier use of T2I models.

>>Visual concept customization. Incorporating visual concepts into textual inputs is crucial for various applications, such as generating images of one’s pet dog or family members in diverse settings, or crafting visual narratives featuring a specific character. These visual elements often encompass intricate details that are difficult to articulate in words. Alternatively, studies (Ruiz et al., 2023; Chen et al., 2023f) explore if T2I models can be customized to draw those visual concepts with specialized token embeddings or conditioned images.

T2I生成背景下的AI对齐研究是一门致力于开发图像生成模型的研究领域,这些模型可以轻松地遵循人类意图来合成所需的生成视觉内容。当前文献通常集中在普通T2I模型的一个特定弱点上,这个弱点阻止了它们准确生成与人类意图一致的图像。本章探讨了四个通常研究的问题,如图3.1(a)所总结的那样,如下所述。

>> 空间可控T2I生成。文本在人机交互中充当强大的媒介,使其成为条件视觉生成的焦点。然而,仅文本不能提供精确的空间参考,比如为具有精确空间配置的任意图像区域指定开放式描述。空间可控的T2I生成(Yang等人,2023b;Li等人,2023n;Zhang和Agrawala,2023)旨在将文本输入与其他条件结合起来,以实现更好的可控性,从而使用户能够生成所需的图像。

>> 基于文本的图像编辑。编辑是获取人类意图的视觉内容的另一种重要手段。用户可能拥有近乎完美的图像,无论是由模型生成的还是由相机自然捕获的,但这些可能需要进行特定的调整以满足他们的意图。编辑具有多种目标,从局部修改对象到全局调整图像风格。基于文本的图像编辑(Brooks等人,2023)探索了创建多功能编辑工具的有效方法。

>> 更好地遵循文本提示。尽管T2I模型经过训练以在配对的文本输入条件下重构图像,但训练目标不一定确保或直接优化图像生成过程中严格遵循文本提示。研究(Yu等人,2022b;Rombach等人,2022)表明,普通T2I模型可能忽视某些文本描述,并生成与输入文本不完全对应的图像。在这方面的研究(Feng等人,2022b;Black等人,2023)探索了改进T2I模型更好地遵循文本提示的方法,从而使T2I模型更容易使用。

>> 视觉概念定制。将视觉概念纳入文本输入对于各种应用至关重要,比如在不同场景中生成宠物狗或家庭成员的图像,或制作以特定角色为特色的视觉叙事。有些视觉元素通常包含难以用文字表达的复杂细节。或者,研究(Ruiz等人,2023;Chen等人,2023f)探讨了是否可以通过专门的token嵌入或条件图像来定制T2I模型以绘制那些视觉概念。

Before introducing the alignment works in detail, we first review the basics of text-to-image gener- ation in the next section.

在详细介绍对齐工作之前,我们首先在下一节中回顾文本到图像生成的基础知识。

Figure 3.1: An overview of improving human intent alignments in T2I generation—T2I生成改善人类意向对齐的概述。

3.1.2、Text-to-Image Generation文本到图像生成

Figure 3.2: An overview of representative text-to-image generation models until July 2023.截至2023年7月的代表性文本到图像生成模型概述

T2I的目的(视觉质量高+语义与输入文本相对应)、数据集(图像-文本对进行训练)

T2I generation aims to generate images that are not only of high visual quality but also semantically correspond to the input text. T2I models are usually trained with image-text pairs, where text is taken as input conditions, with the paired image being the targeted output. Abstracted from the wide range of T2I models shown in Figure 3.2, we give a high-level overview of the representative image generation techniques.

T2I生成旨在生成不仅视觉质量高,而且在语义上与输入文本相对应的图像。T2I模型通常使用图像-文本对进行训练,其中文本被视为输入条件,配对图像是目标输出。从图3.2中显示的广泛的T2I模型范围中抽象出来,我们对代表性的图像生成技术进行了高层次的概述。

GAN(生成器和判别器+两者对抗试图区分真假→引导生成器改进生成能力)、VAE(概率模型+编码器和解码器→最小化重构误差+KL散度正则化)、离散图像token预测(成对图像标记器和解标记器的组合+令牌预测策略【自回归Transformer+按顺序生成视觉标记+左上角开始】)、扩散模型(采用随机微分方程将随机噪声逐渐演化成图像=随机图像初始化+多次迭代再细化+持续演变)、