
Flume Installation and Hands-on Practice

Official Flume download site: the Apache Flume homepage ("Welcome to Apache Flume")

I. Installing Flume

(1) Extract the archive into the installation directory

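tar -zxf ./apache-flume-1.9.0-bin.tar.gz -C /opt/soft/

mv /opt/soft/apache-flume-1.9.0-bin /opt/soft/flume190    # rename to match the paths used below

(2) Configure flume-env.sh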

- cd /opt/soft/flume190/conf

- ll

- cp ./flume-env.sh.template ./flume-env.sh

- vim ./flume-env.sh    (uncomment and edit the JAVA_HOME and JAVA_OPTS lines)

- ------------------------------------

- export JAVA_HOME=/opt/soft/jdk180

- export JAVA_OPTS="-Xms2000m -Xmx2000m -Dcom.sun.management.jmxremote"

- -----------------------------------

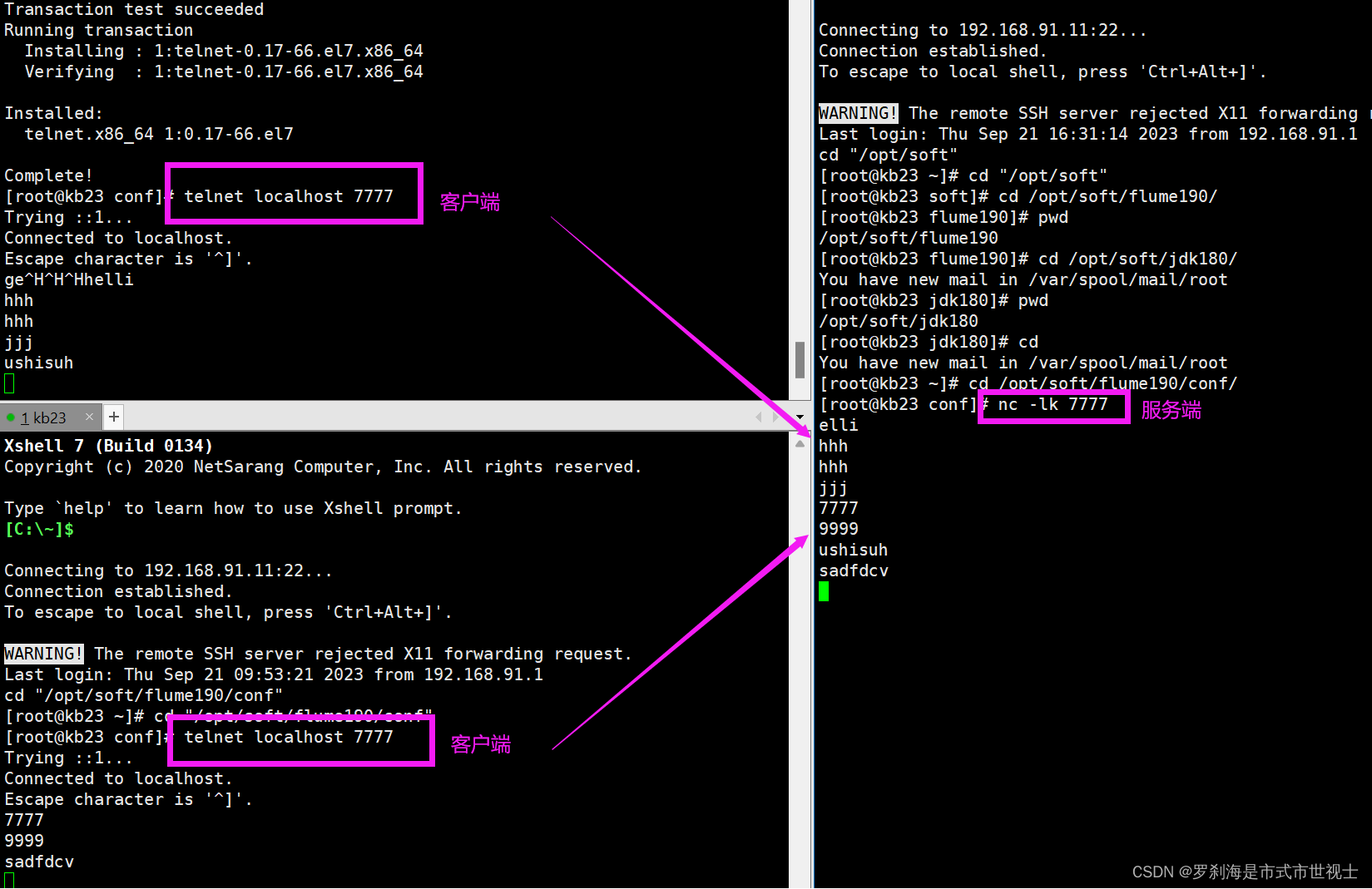
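If you would rather not edit flume-env.sh by hand, you can append the same two settings from the shell. This is a minimal sketch, and the function name `set_flume_env` is hypothetical. It relies on bin/flume-ng sourcing flume-env.sh from the `--conf` directory, so lines appended at the end override the commented-out defaults from the template.

```shell
# Hypothetical helper: append JAVA_HOME and JAVA_OPTS to a flume-env.sh file.
# $1 = path to flume-env.sh, $2 = JDK installation directory
set_flume_env() {
  env_file="$1"
  jdk_dir="$2"
  # Start from the shipped template if flume-env.sh does not exist yet
  if [ ! -f "$env_file" ] && [ -f "$env_file.template" ]; then
    cp "$env_file.template" "$env_file"
  fi
  # The file is sourced, so later assignments win; appending is enough.
  # Unquoted EOF: $jdk_dir is expanded, the double quotes are written literally.
  cat >> "$env_file" <<EOF
export JAVA_HOME=$jdk_dir
export JAVA_OPTS="-Xms2000m -Xmx2000m -Dcom.sun.management.jmxremote"
EOF
}
```

For this walkthrough: `set_flume_env /opt/soft/flume190/conf/flume-env.sh /opt/soft/jdk180`.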

(3) Install net-tools, netcat, the telnet server, and the telnet client

yum install -y net-tools        # provides netstat

yum install -y nc               # netcat

yum install -y telnet-server    # telnet server

yum install -y telnet.*         # telnet client

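(4) Test a connection

netstat -lnp | grep 7777    # check whether port 7777 is already in use

nc -lk 7777                 # start a netcat server listening on port 7777

telnet localhost 7777       # connect to the server from another terminal

Note: one server can accept several clients (-k keeps nc accepting new connections). When the server shuts down, its clients are disconnected.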
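If net-tools is not installed, bash offers a rough alternative to the netstat check: try to open a TCP connection through its /dev/tcp pseudo-device. The sketch below uses a hypothetical function, `port_in_use`. It only tells you whether something accepts connections on 127.0.0.1, not which process owns the port. Under a shell other than bash it always reports the port as free.

```shell
# Hypothetical helper (bash only): succeed if something accepts TCP connections on 127.0.0.1:$1.
# The subshell opens fd 3 through bash's /dev/tcp redirection; the fd closes when the subshell exits.
port_in_use() {
  (exec 3<>"/dev/tcp/127.0.0.1/$1") 2>/dev/null
}
```

Example: `port_in_use 7777 && echo "port 7777 is taken"`.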

(5) Copy guava-27.0-jre.jar from Hadoop into Flume's lib directory
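Flume 1.9.0 ships its own, much older guava-11.0.2.jar in lib/. When the HDFS sink runs against Hadoop 3.x with both versions on the classpath, it can fail with a Guava-related NoSuchMethodError. A common remedy is to move the old jar aside after running the cp command that follows. The helper below is a hypothetical sketch, named `keep_one_guava` for illustration. It moves the jars rather than deleting them, so the change is easy to undo.

```shell
# Hypothetical helper: keep exactly one Guava jar in Flume's lib directory.
# Every other guava-*.jar is moved (not deleted) into a lib-backup directory
# next to lib, which should not be on Flume's classpath.
# $1 = Flume lib directory, $2 = file name of the Guava jar to keep
keep_one_guava() {
  lib_dir="$1"
  keep="$2"
  backup="$lib_dir/../lib-backup"
  mkdir -p "$backup"
  for jar in "$lib_dir"/guava-*.jar; do
    [ -e "$jar" ] || continue          # the glob matched nothing
    name=$(basename "$jar")
    if [ "$name" != "$keep" ]; then
      echo "moving $name to $backup"
      mv "$jar" "$backup/"
    fi
  done
}
```

For this setup: `keep_one_guava /opt/soft/flume190/lib guava-27.0-jre.jar`.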

cp /opt/soft/hadoop313/share/hadoop/hdfs/lib/guava-27.0-jre.jar /opt/soft/flume190/lib/

II. Flume in Practice

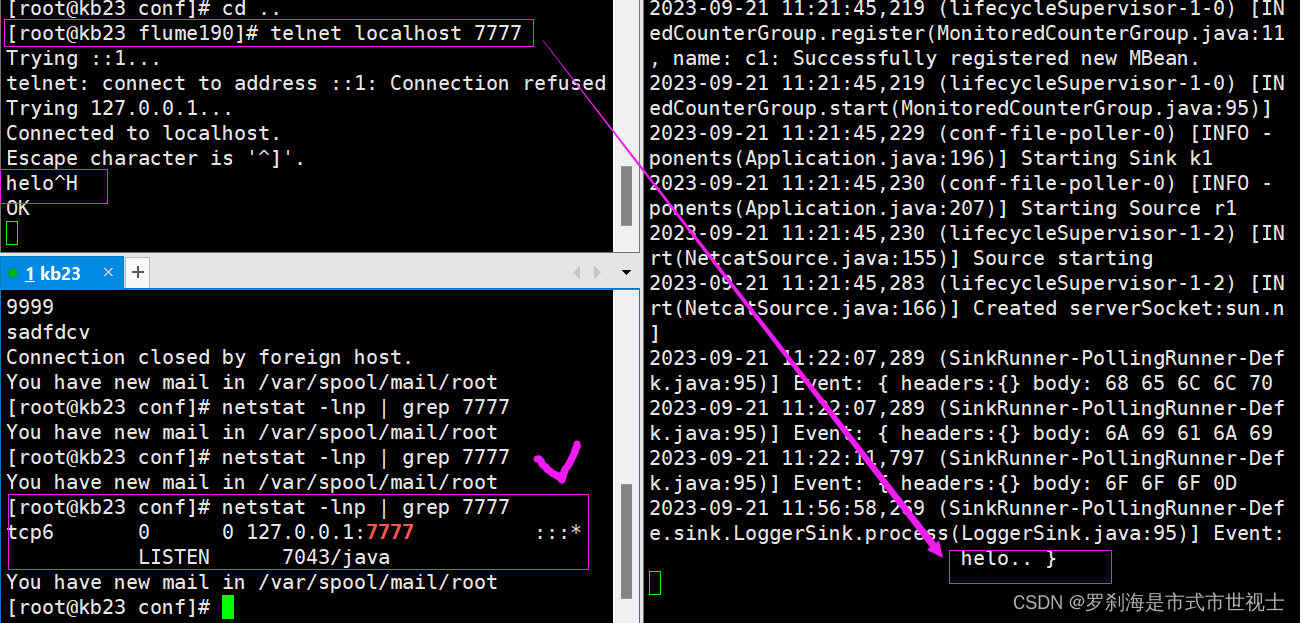

(I) Configure netcat-logger.conf in the /opt/soft/flume190/conf/myconf2 directory

vim ./netcat-logger.conf

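In a Flume properties file, # starts a comment only at the beginning of a line. A trailing # comment becomes part of the value: the source type, for example, would be read as "netcat #...". Put each comment on its own line:

- # Name the components of agent a1

- a1.sources=r1

- a1.channels=c1

- a1.sinks=k1

- # netcat source: receives data sent to the given host and port

- a1.sources.r1.type=netcat

- # host name to bind to

- a1.sources.r1.bind=localhost

- # port to listen on

- a1.sources.r1.port=7777

- # channel type

- a1.channels.c1.type=memory

- # sink type: logger writes each event to the log

- a1.sinks.k1.type=logger

- # bind the source and the sink to the channel

- a1.sources.r1.channels=c1

- a1.sinks.k1.channel=c1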
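To skip the vim step, you can also write the configuration with a here-document. Quoting 'EOF' stops the shell from expanding anything inside it. This sketch assumes the conf/myconf2 layout used here, and the function name `write_netcat_logger_conf` is hypothetical.

```shell
# Hypothetical helper: write netcat-logger.conf into the given directory.
# Comments sit on their own lines; a trailing "# ..." would become part of the value.
write_netcat_logger_conf() {
  conf_dir="$1"
  mkdir -p "$conf_dir"
  cat > "$conf_dir/netcat-logger.conf" <<'EOF'
# Components of agent a1
a1.sources=r1
a1.channels=c1
a1.sinks=k1
# netcat source listening on localhost:7777
a1.sources.r1.type=netcat
a1.sources.r1.bind=localhost
a1.sources.r1.port=7777
# memory channel
a1.channels.c1.type=memory
# logger sink prints events to the console log
a1.sinks.k1.type=logger
# wiring: sources use "channels", sinks use the singular "channel"
a1.sources.r1.channels=c1
a1.sinks.k1.channel=c1
EOF
}
```

Run `write_netcat_logger_conf /opt/soft/flume190/conf/myconf2`, then start the agent with the command below. A line sent with `telnet localhost 7777` (or `echo hello | nc localhost 7777`) should appear in the agent's console log. By default the netcat source answers each received line with `OK`.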

Start the agent (run from /opt/soft/flume190):

./bin/flume-ng agent --name a1 --conf ./conf/ --conf-file ./conf/myconf2/netcat-logger.conf -Dflume.root.logger=INFO,console

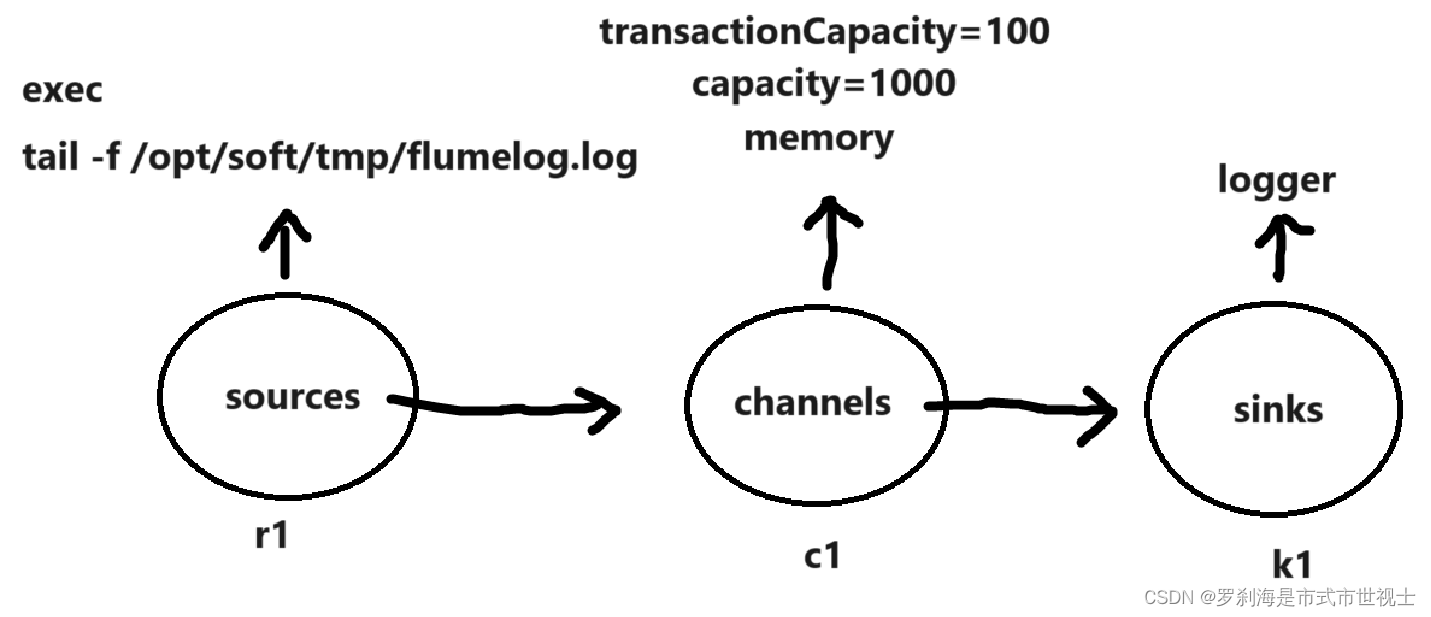

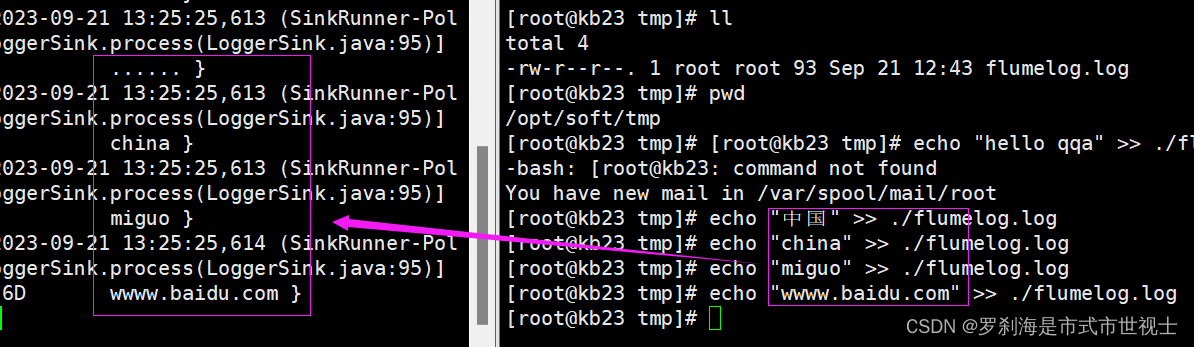

(II) Monitoring a file: configure filelogger.conf to watch the contents of flumelog.log

1. Preparation

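mkdir /opt/soft/tmp               # create the directory

vim ./flumelog.log                # create the file to monitor (inside /opt/soft/tmp)

tail -f ./flumelog.log            # follow the file

echo "aaaa" >> ./flumelog.log     # from another terminal: append a line and check that it shows up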
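Typing echo commands by hand gets tedious when testing the exec source. A small loop can append numbered, timestamped lines for you. `feed_log` is a hypothetical name, and the one-second default pause is an arbitrary choice.

```shell
# Hypothetical helper: append numbered, timestamped lines to a file,
# pausing between writes so new events trickle in one at a time.
# $1 = file, $2 = number of lines, $3 = seconds between lines (default 1)
feed_log() {
  file="$1"
  count="$2"
  pause="${3:-1}"
  i=1
  while [ "$i" -le "$count" ]; do
    echo "line $i $(date '+%F %T')" >> "$file"
    # no pause after the last line
    [ "$i" -lt "$count" ] && sleep "$pause"
    i=$((i + 1))
  done
}
```

Example, in a second terminal while the agent is running: `feed_log /opt/soft/tmp/flumelog.log 10`.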

2. The filelogger.conf configuration file

- a2.sources=r1

- a2.channels=c1

- a2.sinks=k1

- a2.sources.r1.type=exec

- a2.sources.r1.command=tail -f /opt/soft/tmp/flumelog.log

- a2.channels.c1.type=memory

- a2.channels.c1.capacity=1000

- a2.channels.c1.transactionCapacity=100

- a2.sinks.k1.type=logger

- a2.sources.r1.channels=c1

- a2.sinks.k1.channel=c1    (note: a sink uses the singular channel, with no s)
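The sink key is the singular `channel` while the source key is `channels`, so a stray s is an easy mistake. Below is a hypothetical awk-based checker; `check_sink_channels` is not a Flume tool. It reads a config file and reports any sink listed under `<agent>.sinks` that either has no `.channel` key or points at a channel not declared in `<agent>.channels`. It is only a sketch and assumes simple one-line key=value entries like the ones in this article.

```shell
# Hypothetical checker (not part of Flume): every sink listed in "<agent>.sinks"
# needs a "<agent>.sinks.<name>.channel" key (singular) that names a declared channel.
# $1 = agent name, $2 = config file. Prints one line per problem; returns 1 if any.
check_sink_channels() {
  awk -v agent="$1" '
    /^[ \t]*#/ || index($0, "=") == 0 { next }   # skip comments and non key=value lines
    {
      eq = index($0, "=")
      key = substr($0, 1, eq - 1)
      val = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", key)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      n = split(val, names, /[ \t]+/)
      if (key == agent ".channels") {
        for (i = 1; i <= n; i++) chans[names[i]] = 1
      } else if (key == agent ".sinks") {
        for (i = 1; i <= n; i++) sinks[names[i]] = 1
      } else if (key ~ ("^" agent "\\.sinks\\.[^.]+\\.channel$")) {
        split(key, parts, ".")
        bound[parts[3]] = val
      }
    }
    END {
      bad = 0
      for (s in sinks) {
        if (!(s in bound)) {
          print "sink " s ": missing .channel key"; bad = 1
        } else if (!(bound[s] in chans)) {
          print "sink " s ": unknown channel " bound[s]; bad = 1
        }
      }
      exit bad
    }' "$2"
}
```

For the file above, `check_sink_channels a2 ./conf/myconf2/filelogger.conf` prints nothing and returns 0. If you write `a2.sinks.k1.channels=c1` instead, it reports `sink k1: missing .channel key`.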

3. Start the agent

./bin/flume-ng agent --name a2 --conf ./conf/ --conf-file ./conf/myconf2/filelogger.conf -Dflume.root.logger=INFO,console

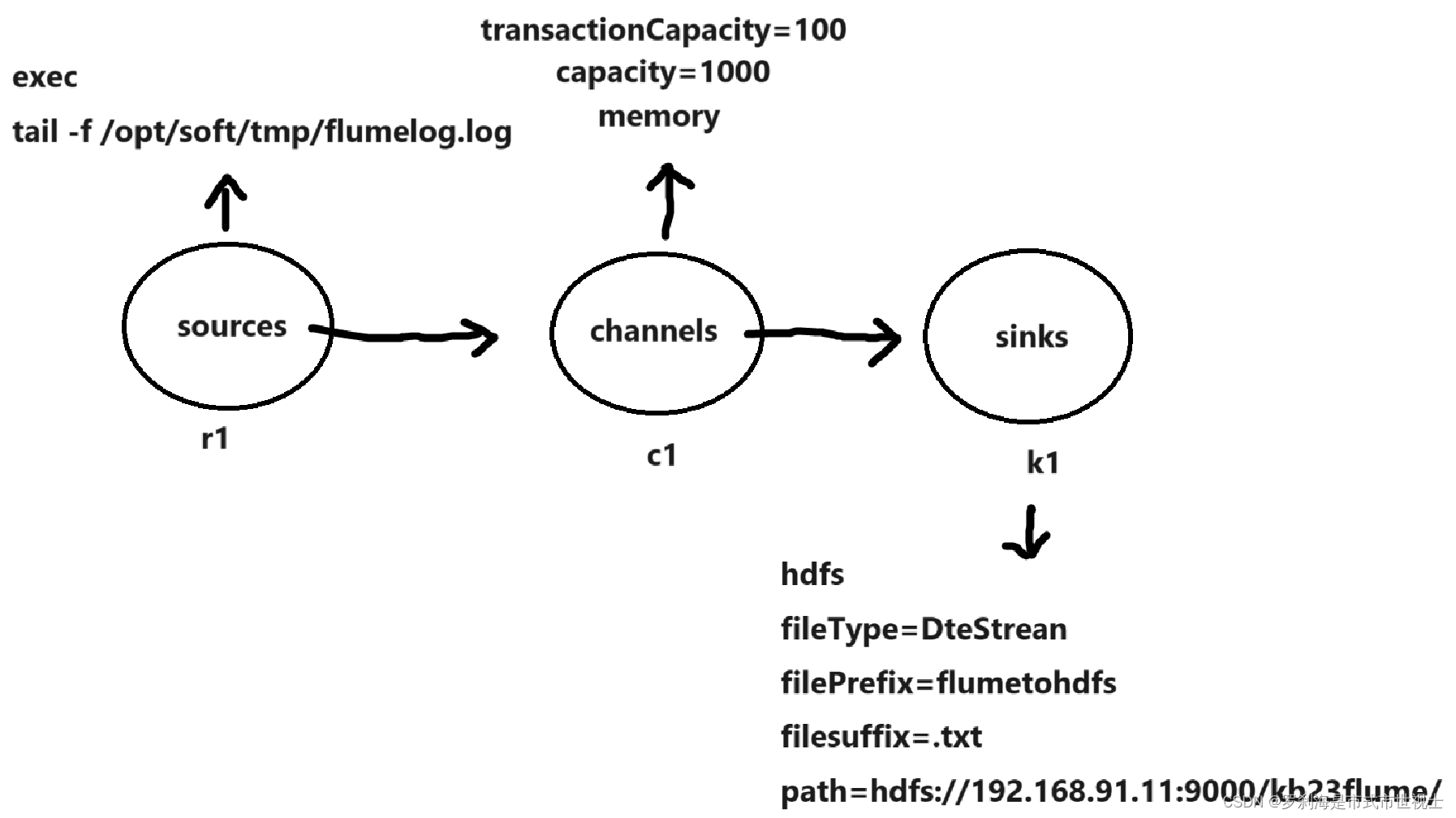
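The exec source runs the command and turns each output line into an event. `tail -f` keeps reading the file it originally opened. If flumelog.log is rotated (renamed and recreated), the agent silently stops seeing new lines. GNU `tail -F` instead reopens the file by name. Also keep in mind that the Flume User Guide warns the exec source cannot guarantee delivery if events fail to make it into the channel. Here is a minimal sketch for switching an existing config, using the hypothetical helper name `use_tail_F`:

```shell
# Hypothetical helper: in a Flume config, change "=tail -f " commands to "=tail -F ",
# keeping the original file as <file>.bak. tail -F reopens the file by name after rotation.
use_tail_F() {
  sed -i.bak 's/=tail -f /=tail -F /' "$1"
}
```

Example: `use_tail_F ./conf/myconf2/filelogger.conf`, then restart agent a2.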

(III) Writing output to HDFS: configure file-flume-hdfs.conf

(1) Start Hadoop and take HDFS out of safe mode

- start-all.sh

- hdfs dfsadmin -safemode leave
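`-safemode leave` forces HDFS out of safe mode. If you would rather let the NameNode leave it on its own, `hdfs dfsadmin -safemode wait` blocks until that happens, and `-safemode get` reports the current state. The hypothetical helper below wraps the `get` form for use in scripts. It simply looks for the text `Safe mode is OFF` in the command's output.

```shell
# Hypothetical helper: succeed if the NameNode reports that safe mode is off.
# "hdfs dfsadmin -safemode get" prints a line such as "Safe mode is OFF".
hdfs_safemode_off() {
  hdfs dfsadmin -safemode get 2>/dev/null | grep -q 'Safe mode is OFF'
}
```

Example, before starting an agent with an HDFS sink: `until hdfs_safemode_off; do sleep 5; done`.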

(2) Configure file-flume-hdfs.conf

- a3.sources=r1

- a3.channels=c1

- a3.sinks=k1

- a3.sources.r1.type=exec

- a3.sources.r1.command=tail -f /opt/soft/tmp/flumelog.log

- a3.channels.c1.type=memory

- a3.channels.c1.capacity=1000

- a3.channels.c1.transactionCapacity=100

- a3.sinks.k1.type=hdfs

- a3.sinks.k1.hdfs.fileType=DataStream

- a3.sinks.k1.hdfs.filePrefix=flumetohdfs

- a3.sinks.k1.hdfs.fileSuffix=.txt

- a3.sinks.k1.hdfs.path=hdfs://192.168.91.11:9000/kb23flume/

- a3.sources.r1.channels=c1

- a3.sinks.k1.channel=c1
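Agent a3 starts the same way as the others. Assuming the file is saved as conf/myconf2/file-flume-hdfs.conf, the command is in the first comment of the block below. With only the settings above, the HDFS sink uses its default roll policy. The Flume 1.9 User Guide lists this as 30 seconds, 1024 bytes, or 10 events, whichever comes first. A file that is still being written carries the default `.tmp` in-use suffix. The hypothetical helper `list_rolled_files` lists only the finished files.

```shell
# Start agent a3 from /opt/soft/flume190 (config file name assumed):
#   ./bin/flume-ng agent --name a3 --conf ./conf/ --conf-file ./conf/myconf2/file-flume-hdfs.conf -Dflume.root.logger=INFO,console

# Hypothetical helper: print the paths of files the HDFS sink has finished rolling.
# Lines with fewer than 8 fields (the "Found N items" header) and *.tmp files are skipped.
list_rolled_files() {
  hdfs dfs -ls "$1" | awk 'NF >= 8 && $NF !~ /\.tmp$/ { print $NF }'
}
```

Example: `list_rolled_files /kb23flume/`, then `hdfs dfs -cat` on one of the printed paths.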

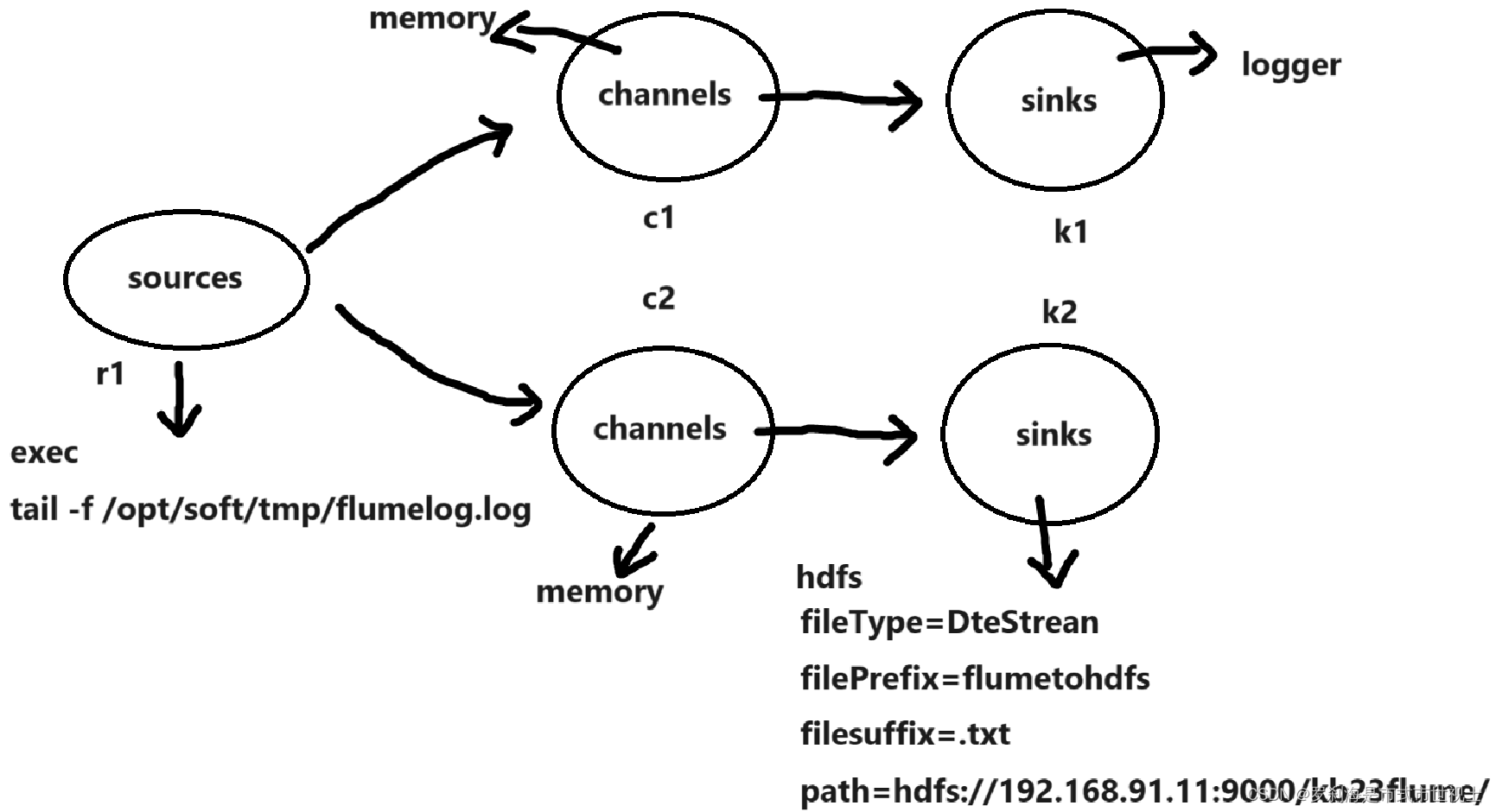

(IV) Sending output to two destinations: configure netcat-logger.conf

- a4.sources=s1

- a4.channels=c1 c2

- a4.sinks=k1 k2

- a4.sources.s1.type=exec

- a4.sources.s1.command=tail -f /opt/soft/tmp/flumelog.log

- a4.channels.c1.type=memory

- a4.channels.c2.type=memory

- a4.sinks.k1.type=logger

- a4.sinks.k2.type=hdfs

- a4.sinks.k2.hdfs.fileType=DataStream

- a4.sinks.k2.hdfs.filePrefix=flumetohdfs

- a4.sinks.k2.hdfs.fileSuffix=.txt

- a4.sinks.k2.hdfs.path=hdfs://192.168.91.11:9000/kb23flume1/

- a4.sources.s1.channels=c1 c2

- a4.sinks.k1.channel=c1

- a4.sinks.k2.channel=c2
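Here source s1 feeds both c1 and c2. Flume's default channel selector is `replicating`, so each event is copied into both channels. Sink k1 prints it through the logger, and sink k2 writes it to HDFS under /kb23flume1/. The sketch below writes that default into the config explicitly, which makes the fan-out easier to spot when reading the file later. `ensure_replicating_selector` is a hypothetical name. The start command in the first comment assumes the file lives in conf/myconf2 under the name given in the heading.

```shell
# Start agent a4 from /opt/soft/flume190 (file location assumed):
#   ./bin/flume-ng agent --name a4 --conf ./conf/ --conf-file ./conf/myconf2/netcat-logger.conf -Dflume.root.logger=INFO,console

# Hypothetical helper: state the default replicating selector for source s1 of agent a4
# explicitly; does nothing if a selector type is already configured.
ensure_replicating_selector() {
  conf="$1"
  if ! grep -q '^a4\.sources\.s1\.selector\.type=' "$conf"; then
    echo 'a4.sources.s1.selector.type=replicating' >> "$conf"
  fi
}
```

Running it twice leaves a single selector line, so it is safe to call from a setup script.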


- Original article: https://blog.csdn.net/berbai/article/details/133208542