
[Error Log] Error When Setting Up the Hadoop Runtime Environment (Error: JAVA_HOME is incorrectly set. Please update xxx\hadoop-env.cmd)

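Summary:

Error message: Error: JAVA_HOME is incorrectly set. Please update xxx\hadoop-env.cmd

Cause: the JDK was installed under C:\Program Files\, and the directory name Program Files contains a space. A nasty trap.

Fix: switch to a JDK installed in a directory whose path contains no spaces.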

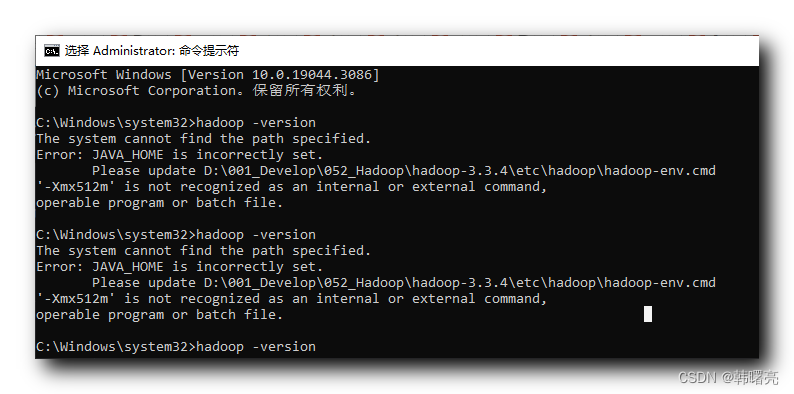

I. Error Message

After completing the Hadoop installation steps, running the hadoop command fails.


The error output is as follows:

```
C:\Windows\system32>hadoop -version
The system cannot find the path specified.
Error: JAVA_HOME is incorrectly set.
Please update D:\001_Develop\052_Hadoop\hadoop-3.3.4\etc\hadoop\hadoop-env.cmd
'-Xmx512m' is not recognized as an internal or external command,
operable program or batch file.
```

II. Problem Analysis

The core error message is:

```
Error: JAVA_HOME is incorrectly set.
Please update D:\001_Develop\052_Hadoop\hadoop-3.3.4\etc\hadoop\hadoop-env.cmd
```

In other words, the JAVA_HOME setting in D:\001_Develop\052_Hadoop\hadoop-3.3.4\etc\hadoop\hadoop-env.cmd is wrong.

The setting in that file is:

```
set JAVA_HOME=C:\Program Files\Java\jdk1.8.0_91
```

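The problem lies in this path. It is the JDK's real installation path, but it contains a space (in Program Files), and that space is what makes Hadoop fail to start.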
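Why would a single space matter? One plausible mechanism, stated here as an assumption rather than something verified against the Hadoop 3.3.4 scripts: if the Windows launch scripts test for %JAVA_HOME%\bin\java.exe without quoting the expanded value, cmd.exe splits the line at the space and ends up checking C:\Program, which does not exist. The Python sketch below models that splitting in a deliberately simplified way (real cmd.exe parsing has more rules); the function name unquoted_exist_target is hypothetical.

```python
def unquoted_exist_target(java_home: str) -> str:
    """Return the path that an unquoted `if not exist` check would test.

    Simplified model (an assumption, not real cmd.exe parsing): after the
    JAVA_HOME value is substituted into the line, the line is split on
    whitespace and the token right after `exist` is treated as the path.
    """
    line = "if not exist " + java_home + "\\bin\\java.exe ("
    tokens = line.split()
    return tokens[tokens.index("exist") + 1]


if __name__ == "__main__":
    for home in (r"C:\Program Files\Java\jdk1.8.0_91",
                 r"D:\001_Develop\031_Java8u144_Frida\jdk1.8.0_144"):
        print(home, "-> tests", unquoted_exist_target(home))
```

With the original path, the sketch tests C:\Program, so the java.exe check fails; with the space-free path it tests the full ...\bin\java.exe path, which matches the fix in the next section.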

III. Solution

Point JAVA_HOME at a JDK whose path contains no spaces:

```
set JAVA_HOME=D:\001_Develop\031_Java8u144_Frida\jdk1.8.0_144
```

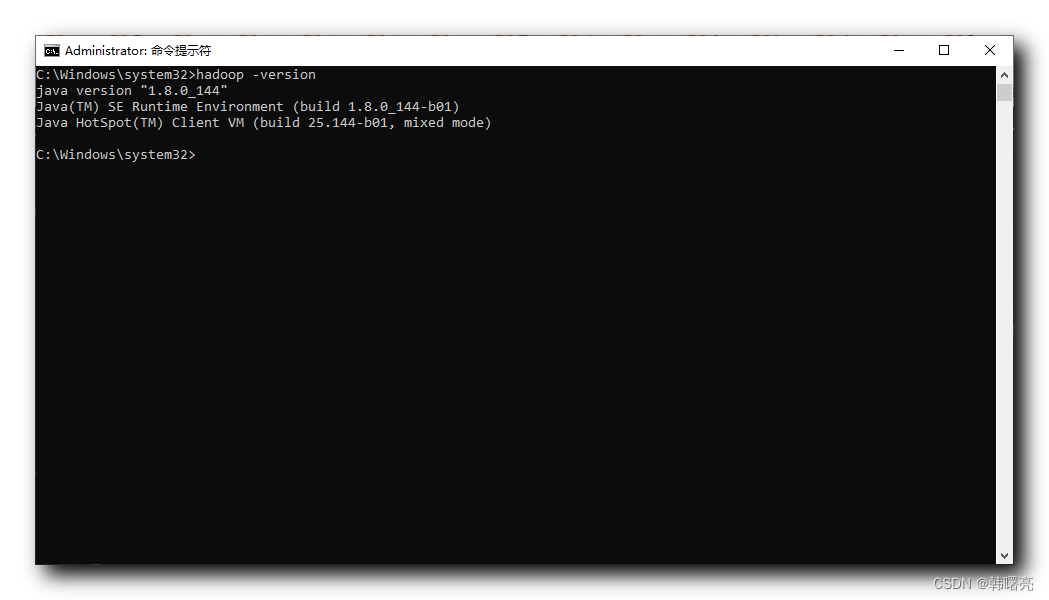
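Before rerunning Hadoop, the value in hadoop-env.cmd can also be checked automatically. The following is a minimal Python sketch, not part of Hadoop; the functions java_home_from_env_cmd and java_home_problems are hypothetical names. It reads the last `set JAVA_HOME=` line, flags any whitespace in the value, and checks that bin\java.exe exists under it. It assumes the plain `set NAME=value` form and does not handle the `set "NAME=value"` quoting style.

```python
import os
import sys
from typing import Callable, List, Optional


def java_home_from_env_cmd(text: str) -> Optional[str]:
    """Return the value of the last `set JAVA_HOME=...` line, or None.

    Trailing spaces are kept on purpose: cmd's `set` keeps them in the value,
    so they are flagged by the whitespace check as well.
    """
    prefix = "set java_home="
    value: Optional[str] = None
    for raw in text.splitlines():
        line = raw.lstrip()
        if line.lower().startswith(prefix):  # case-insensitive, like cmd
            value = line[len(prefix):]       # later assignments win
    return value


def java_home_problems(
    java_home: str,
    is_file: Callable[[str], bool] = os.path.isfile,
) -> List[str]:
    """List problems with a JAVA_HOME value; an empty list means it looks usable."""
    if not java_home:
        return ["JAVA_HOME is empty"]
    problems: List[str] = []
    if any(ch.isspace() for ch in java_home):
        problems.append("path contains whitespace: " + java_home)
    java_exe = os.path.join(java_home, "bin", "java.exe")
    if not is_file(java_exe):
        problems.append("java.exe not found: " + java_exe)
    return problems


def main(argv: List[str]) -> int:
    """CLI entry point: argv[1] is the path to hadoop-env.cmd."""
    with open(argv[1], encoding="utf-8", errors="replace") as f:
        configured = java_home_from_env_cmd(f.read())
    if configured is None:
        print("No 'set JAVA_HOME=' line found")
        return 1
    # On Windows, os.path.expandvars also expands %VAR% references.
    configured = os.path.expandvars(configured)
    issues = java_home_problems(configured)
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("JAVA_HOME looks usable:", configured)
    return 1 if issues else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv))
```

For example, `python check_java_home.py D:\001_Develop\052_Hadoop\hadoop-3.3.4\etc\hadoop\hadoop-env.cmd`, where check_java_home.py is only a placeholder file name. With the original C:\Program Files\... value it should print a whitespace warning.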

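Run the `hadoop -version` command again. This time Hadoop starts successfully and prints the version banner of the newly configured JDK 1.8.0_144: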

```
C:\Windows\system32>hadoop -version
java version "1.8.0_144"
Java(TM) SE Runtime Environment (build 1.8.0_144-b01)
Java HotSpot(TM) Client VM (build 25.144-b01, mixed mode)
```

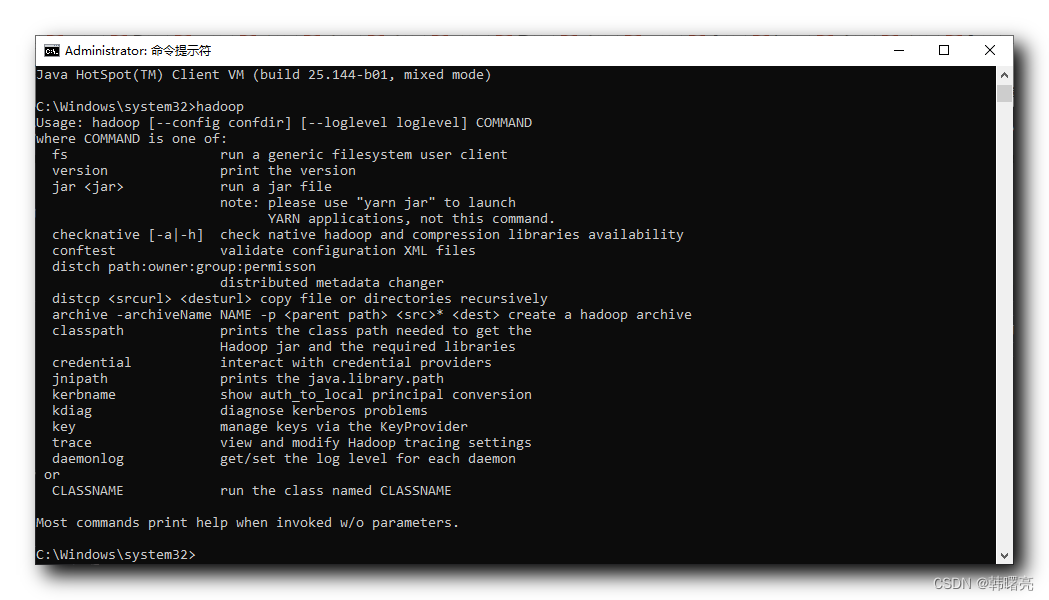
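To script this verification, a small Python sketch can run the same command and pull out the Java version it reports, confirming which JDK Hadoop actually launched. It assumes hadoop is on PATH and that, as in the output above, the JVM banner contains a `version "..."` fragment; reported_java_version is a hypothetical helper name.

```python
import re
import shutil
import subprocess
from typing import Optional

# Matches the first `version "..."` fragment, e.g. java version "1.8.0_144".
_VERSION_RE = re.compile(r'version "([^"]+)"')


def reported_java_version(output: str) -> Optional[str]:
    """Extract the Java version string from `hadoop -version` output, if present."""
    match = _VERSION_RE.search(output)
    return match.group(1) if match else None


if __name__ == "__main__":
    # shutil.which honors PATHEXT on Windows, so it can find hadoop.cmd.
    if shutil.which("hadoop") is None:
        print("hadoop is not on PATH")
    else:
        # shell=True lets cmd.exe resolve and run hadoop.cmd.
        result = subprocess.run("hadoop -version", shell=True,
                                capture_output=True, text=True)
        # The JVM usually prints its version banner to stderr, so check both streams.
        version = reported_java_version(result.stdout + result.stderr)
        if version is None:
            print("No Java version found; the JVM probably did not start")
        else:
            print("Hadoop launched Java", version)
```

Against the failing output from section I, the helper finds no version string, which is a quick way to tell the two situations apart.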

Running the `hadoop` command with no arguments also works and prints the usage help:

```
C:\Windows\system32>hadoop
Usage: hadoop [--config confdir] [--loglevel loglevel] COMMAND
where COMMAND is one of:
  fs                   run a generic filesystem user client
  version              print the version
  jar <jar>            run a jar file
                       note: please use "yarn jar" to launch
                             YARN applications, not this command.
  checknative [-a|-h]  check native hadoop and compression libraries availability
  conftest             validate configuration XML files
  distch path:owner:group:permisson
                       distributed metadata changer
  distcp <srcurl> <desturl> copy file or directories recursively
  archive -archiveName NAME -p <parent path> <src>* <dest> create a hadoop archive
  classpath            prints the class path needed to get the
                       Hadoop jar and the required libraries
  credential           interact with credential providers
  jnipath              prints the java.library.path
  kerbname             show auth_to_local principal conversion
  kdiag                diagnose kerberos problems
  key                  manage keys via the KeyProvider
  trace                view and modify Hadoop tracing settings
  daemonlog            get/set the log level for each daemon
 or
  CLASSNAME            run the class named CLASSNAME

Most commands print help when invoked w/o parameters.
```


- Original article: https://blog.csdn.net/han1202012/article/details/132081767