
Kafka 3.0.0: Consumers (Example: A Standalone Consumer Consuming Data from One Topic, Subscribing to a Topic)

1. Example: a standalone consumer consuming data from one topic

1.1 Requirements

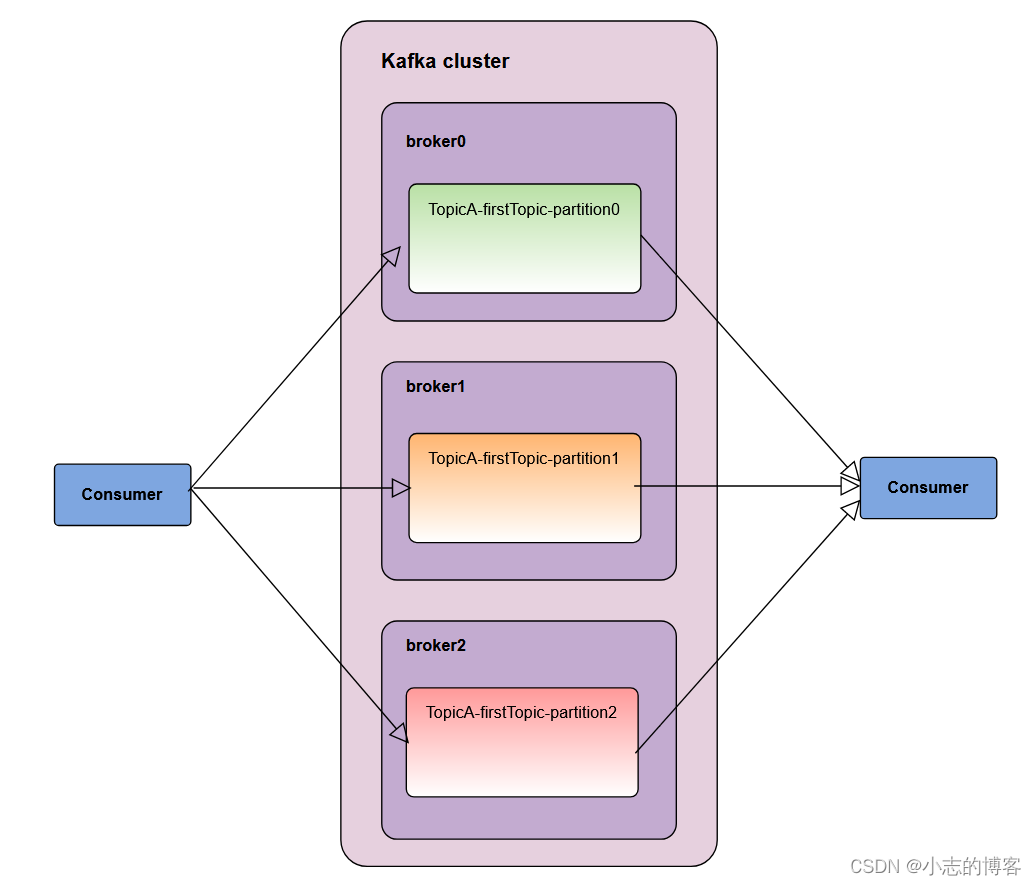

- Create a standalone consumer that consumes data from the firstTopic topic, as shown in the figure.

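Note: when using the consumer API, you must configure a consumer group id (`group.id`) in the code. When a consumer is started from the command line without a group id, a random consumer group id is filled in automatically.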
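To make the two rules in the note concrete, here is a small, stdlib-only model of them. It is a sketch, not Kafka client code: the class and method names are hypothetical, and the `console-consumer-<random number>` format imitates what `kafka-console-consumer.sh` appears to do, so treat the exact naming as an assumption. In recent client versions the real `KafkaConsumer` enforces the first rule itself by throwing `InvalidGroupIdException` from `subscribe()` when `group.id` is missing.

```java
import java.util.Properties;
import java.util.concurrent.ThreadLocalRandom;

/**
 * Toy model of the group id rules (hypothetical names, not Kafka code).
 */
public class GroupIdRules {
    // Same string as ConsumerConfig.GROUP_ID_CONFIG
    static final String GROUP_ID = "group.id";

    /** API consumer: refuse to "subscribe" when no group id is configured. */
    static void checkBeforeSubscribe(Properties props) {
        String id = props.getProperty(GROUP_ID);
        if (id == null || id.isEmpty()) {
            throw new IllegalStateException("group.id must be set before subscribe()");
        }
    }

    /** Command-line style: fill in a random group id when none was given. */
    static Properties withDefaultGroupId(Properties props) {
        Properties copy = new Properties();
        copy.putAll(props);
        if (copy.getProperty(GROUP_ID) == null) {
            // Assumed naming scheme, modeled on the console consumer
            copy.setProperty(GROUP_ID, "console-consumer-" + ThreadLocalRandom.current().nextInt(100_000));
        }
        return copy;
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        try {
            checkBeforeSubscribe(props);
        } catch (IllegalStateException e) {
            System.out.println("API consumer: " + e.getMessage());
        }
        System.out.println("CLI consumer: " + withDefaultGroupId(props).getProperty(GROUP_ID));

        props.setProperty(GROUP_ID, "test5");
        checkBeforeSubscribe(props); // no exception once group.id is set
        System.out.println("Explicit: " + withDefaultGroupId(props).getProperty(GROUP_ID)); // prints "Explicit: test5"
    }
}
```

An explicitly configured id (such as `test5` in the example code) is never overwritten; only a missing one is filled in.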

1.2 Example code

```java
package com.xz.kafka.consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;
import java.util.ArrayList;
import java.util.Properties;

/**
 * Standalone consumer: consumes data from a single topic.
 */
public class CustomConsumer {

    public static void main(String[] args) {
        // Configuration
        Properties properties = new Properties();

        // Connect to the cluster (bootstrap.servers)
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,
                "192.168.136.27:9092,192.168.136.28:9092,192.168.136.29:9092");

        // Key and value deserializers
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

        // Consumer group id (required when using subscribe())
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "test5");

        // Partition assignment strategy
        properties.put(ConsumerConfig.PARTITION_ASSIGNMENT_STRATEGY_CONFIG,
                "org.apache.kafka.clients.consumer.StickyAssignor");

        // 1. Create a consumer with String keys and String values
        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(properties);

        // 2. Subscribe to the topic firstTopic
        ArrayList<String> topics = new ArrayList<>();
        topics.add("firstTopic");
        kafkaConsumer.subscribe(topics);

        // 3. Consume data
        while (true) {
            // Poll for records, blocking for at most one second if none are available
            ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(Duration.ofSeconds(1));

            // Print each record
            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
                System.out.println(consumerRecord);
            }

            // Asynchronously commit the offsets returned by the last poll()
            kafkaConsumer.commitAsync();
        }
    }
}
```

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56
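The loop polls and then calls `commitAsync()`, which commits the offsets returned by the last `poll()` for every assigned partition, i.e. the offset of the *next* record to read rather than the last one processed. The toy model below shows that arithmetic in plain Java; it is a sketch with a hypothetical class and made-up record values, modeling a single partition with no networking. Note also that `enable.auto.commit` defaults to `true`, so the original code additionally commits offsets automatically.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Toy, single-partition model of the poll/commit loop (not the Kafka client).
 * The committed offset is the position of the NEXT record to read.
 */
public class PollCommitModel {
    private final List<String> log; // the partition: list index == offset
    private long position = 0;      // next offset poll() will return
    private long committed = -1;    // -1 = nothing committed yet (toy convention)

    PollCommitModel(List<String> log) {
        this.log = log;
    }

    /** Returns up to maxRecords records starting at the current position. */
    List<String> poll(int maxRecords) {
        List<String> batch = new ArrayList<>();
        while (batch.size() < maxRecords && position < log.size()) {
            batch.add(log.get((int) position));
            position++;
        }
        return batch;
    }

    /** Like commitAsync() with no arguments: commit the current position. */
    void commit() {
        committed = position;
    }

    long committed() {
        return committed;
    }

    public static void main(String[] args) {
        PollCommitModel consumer = new PollCommitModel(List.of("m0", "m1", "m2"));
        System.out.println(consumer.poll(2));     // [m0, m1]
        consumer.commit();
        System.out.println(consumer.committed()); // 2: a restarted member would resume at offset 2
        System.out.println(consumer.poll(2));     // [m2]
    }
}
```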

1.3 Testing


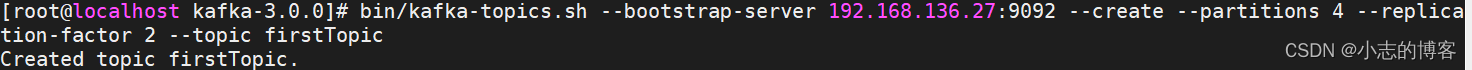

On the Kafka cluster console, create the firstTopic topic:

```
bin/kafka-topics.sh --bootstrap-server 192.168.136.27:9092 --create --partitions 3 --replication-factor 1 --topic firstTopic
```
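firstTopic is created with 3 partitions, and the consumer code selects `StickyAssignor`. The idea behind a sticky strategy is to keep the assignment balanced while moving as few partitions as possible when group membership changes. The sketch below is a deliberately simplified toy model of that idea in plain Java; it is not Kafka's `StickyAssignor` algorithm, and the consumer names `c1`/`c2` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Toy "sticky" assignment (NOT Kafka's StickyAssignor): balance partitions
 * across consumers while keeping previous owner -> partition pairs where possible.
 */
public class ToyStickyAssignment {

    static Map<String, List<Integer>> assign(int numPartitions,
                                             List<String> consumers,
                                             Map<Integer, String> previousOwner) {
        Map<String, List<Integer>> result = new TreeMap<>(); // sorted by consumer name
        for (String c : consumers) result.put(c, new ArrayList<>());
        int floor = numPartitions / consumers.size();

        // Step 1: each surviving consumer keeps up to `floor` of its previous partitions.
        List<Integer> unassigned = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) {
            String owner = previousOwner.get(p);
            if (owner != null && result.containsKey(owner) && result.get(owner).size() < floor) {
                result.get(owner).add(p);
            } else {
                unassigned.add(p);
            }
        }

        // Step 2: give each leftover partition to a least-loaded consumer,
        // preferring its previous owner on ties.
        for (int p : unassigned) {
            int min = Integer.MAX_VALUE;
            for (List<Integer> ps : result.values()) min = Math.min(min, ps.size());
            String target = null;
            for (Map.Entry<String, List<Integer>> e : result.entrySet()) {
                if (e.getValue().size() != min) continue;
                if (target == null) target = e.getKey();
                if (e.getKey().equals(previousOwner.get(p))) {
                    target = e.getKey();
                    break;
                }
            }
            result.get(target).add(p);
        }
        for (List<Integer> ps : result.values()) Collections.sort(ps);
        return result;
    }

    public static void main(String[] args) {
        // One consumer in the group: it owns all three partitions.
        Map<String, List<Integer>> first = assign(3, List.of("c1"), Map.of());
        System.out.println(first); // {c1=[0, 1, 2]}

        // A second consumer joins: c1 keeps partitions it already owned.
        Map<Integer, String> prev = new HashMap<>();
        first.forEach((c, ps) -> ps.forEach(p -> prev.put(p, c)));
        System.out.println(assign(3, List.of("c1", "c2"), prev)); // {c1=[0, 2], c2=[1]}
    }
}
```

With a single consumer, as in this example, all three partitions land on it; when a second member joins, only partition 1 moves.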

Start the example code in IDEA.


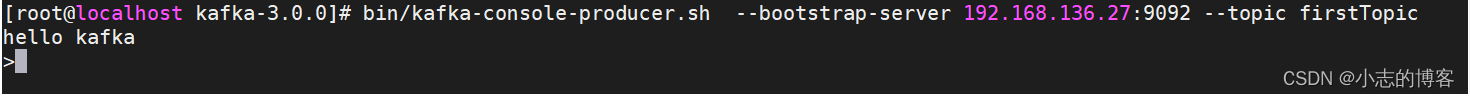

On the Kafka cluster console, start a Kafka console producer and enter some data:

```
bin/kafka-console-producer.sh --bootstrap-server 192.168.136.27:9092 --topic firstTopic
```


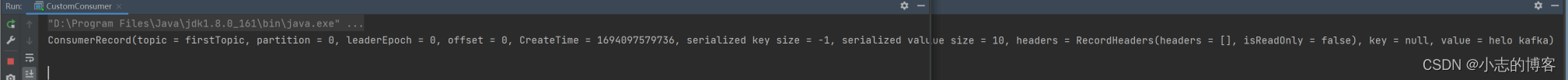

Observe the received data in the IDEA console:

```
ConsumerRecord(topic = firstTopic, partition = 0, leaderEpoch = 0, offset = 0, CreateTime = 1694097579736, serialized key size = -1, serialized value size = 10, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = helo kafka)
```
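The printed record shows `serialized key size = -1` and `serialized value size = 10`. A small stdlib-only sketch of where those numbers come from, assuming the value was encoded as UTF-8 (the default of Kafka's `StringSerializer`) and that the console producer sent no key, which is its default: a null key is reported as -1 (the constant `ConsumerRecord.NULL_SIZE`), and the value size counts encoded bytes, not characters. The class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;

/**
 * Reproduces the "serialized key/value size" fields for String data
 * (a sketch; assumes UTF-8 encoding).
 */
public class SerializedSizes {
    // Mirrors ConsumerRecord.NULL_SIZE: reported for a null key or value
    static final int NULL_SIZE = -1;

    static int serializedSize(String s) {
        return s == null ? NULL_SIZE : s.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        System.out.println(serializedSize(null));           // -1 (no key sent)
        System.out.println(serializedSize("helo kafka"));   // 10
        System.out.println(serializedSize("\u4f60\u597d")); // 6: two CJK characters, 3 bytes each
    }
}
```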


- Original article: https://blog.csdn.net/li1325169021/article/details/132747472