
Linux Enterprise Operations: k8s (Cluster Deployment)

I. Introduction to k8s

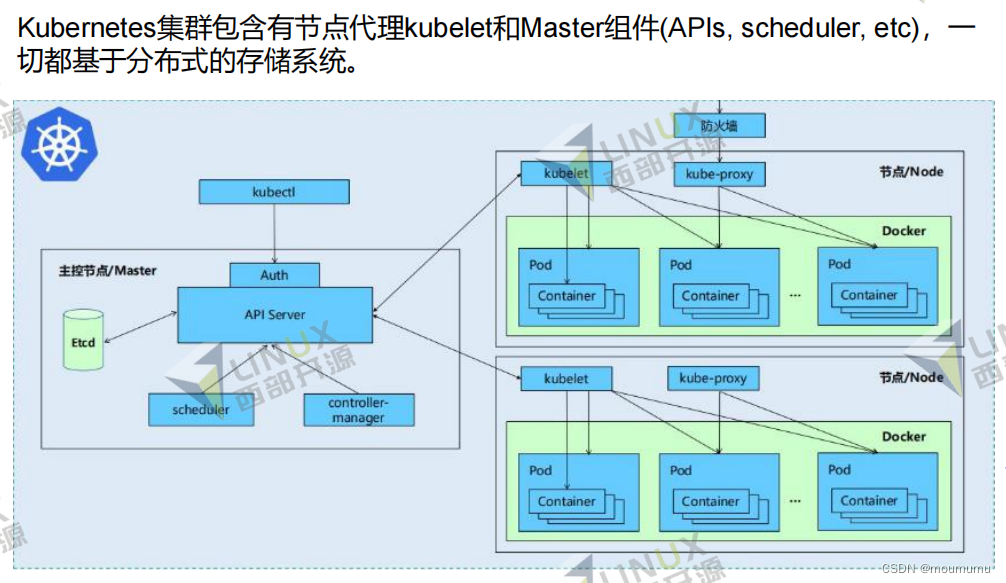

The k8s architecture

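• The Kubernetes master is made up of the following core components:

• etcd: a distributed storage system that stores the metadata the apiserver needs and underpins the high availability of the master components

• apiserver: the single entry point for resource operations; it also provides authentication, authorization, access control, and API registration and discovery, and can be scaled out horizontally

• controller manager: maintains the state of the cluster, handling tasks such as failure detection, auto-scaling, and rolling updates; supports hot standby

• scheduler: schedules resources, placing Pods onto suitable machines according to the configured scheduling policy; supports hot standby

• A Kubernetes node contains the following components:

• kubelet: maintains the lifecycle of containers and also manages volumes (CSI) and networking (CNI)

• Container runtime: handles image management and actually runs Pods and containers (CRI)

• kube-proxy: provides in-cluster service discovery and load balancing for Services

• Besides the core components, several add-ons are recommended:

• kube-dns: provides DNS for the whole cluster (kubeadm deploys CoreDNS for this role)

• Ingress Controller: provides an external entry point for services

• metrics-server: provides resource monitoring

• Dashboard: provides a GUI

• Fluentd-elasticsearch: provides cluster log collection, storage, and querying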
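To tie the architecture above to what actually runs on a kubeadm cluster, here is a small POSIX shell sketch that sorts pod names into the layers just described. It assumes kubeadm's naming, where control-plane components run as static pods called `<component>-<node>` (for example `etcd-k8s1` in the pod listing later on); `classify_component` is a hypothetical helper, not part of any Kubernetes tool. kubelet and the container runtime run as host services rather than pods, so they never appear in such a listing.

```shell
#!/bin/sh
# classify_component POD_NAME: hypothetical helper that maps a pod name to
# the architecture layer it belongs to (assumes kubeadm-style pod names).
classify_component() {
  case "$1" in
    # Control-plane components run as static pods named <component>-<node>
    etcd-*|kube-apiserver-*|kube-controller-manager-*|kube-scheduler-*)
      echo control-plane ;;
    # kube-proxy runs on every node (as a DaemonSet under kubeadm)
    kube-proxy-*)
      echo node ;;
    # DNS, pod-network and monitoring add-ons
    coredns-*|kube-dns-*|kube-flannel-*|metrics-server-*)
      echo add-on ;;
    *)
      echo unknown ;;
  esac
}

# Example: classify a few pod names taken from the listing in this article
for pod in etcd-k8s1 kube-proxy-rn2wm coredns-6d8c4cb4d-8tcbp; do
  printf '%s -> %s\n' "$pod" "$(classify_component "$pod")"
done
```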

II. Deploying k8s

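First, start three new virtual machines. On all nodes, disable SELinux and the iptables firewall and install the Docker engine. Then switch the cgroup driver that Docker uses to systemd on every node (the file below is from k8s1). On a systemd-based host, systemd already acts as the cgroup manager and allocates a cgroup to every process, whereas Docker's default cgroup driver is cgroupfs. Keeping the default would leave two cgroup managers running side by side, which can become unstable when resources are under pressure; kubeadm 1.22 and later also configure the kubelet with the systemd driver by default, so Docker has to match it.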

```
[root@k8s1 docker]# ls
daemon.json  key.json
[root@k8s1 docker]# cat daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
```
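After changing daemon.json, Docker has to be restarted (`systemctl restart docker`); `docker info --format '{{.CgroupDriver}}'` then reports the driver actually in use. To check the file itself, a minimal sketch follows. `cgroup_driver_of` is a hypothetical helper that assumes the option is written as a single `native.cgroupdriver=...` string, as above, and falls back to Docker's default `cgroupfs` when the option is missing.

```shell
#!/bin/sh
# cgroup_driver_of FILE: print the cgroup driver selected in a Docker
# daemon.json. Hypothetical helper: a plain text match, not a JSON parser.
cgroup_driver_of() {
  driver=$(grep -o 'native\.cgroupdriver=[a-z]*' "$1" 2>/dev/null |
    head -n 1 |
    cut -d= -f2)
  # Docker falls back to cgroupfs when exec-opts sets no driver
  echo "${driver:-cgroupfs}"
}

# On k8s1, with the daemon.json shown above, this prints: systemd
cgroup_driver_of /etc/docker/daemon.json
```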

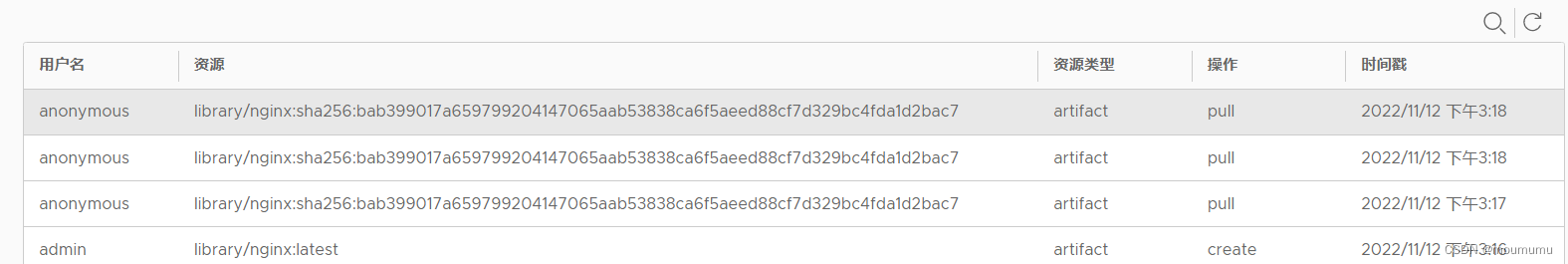

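Configure the private registry on every node so that images can later be pulled from it, which is much faster. Harbor's logs confirm that the registry was added successfully and that the images were pulled from it.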

```
[root@k8s3 docker]# ls
certs.d  daemon.json  key.json
[root@k8s3 docker]# cat daemon.json
{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
```
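Since every node needs the same daemon.json, generating it avoids hand-editing several copies. A minimal sketch, assuming the mirror URL is passed as the only argument (`https://reg.westos.org` here); `render_daemon_json` is a hypothetical helper that prints the file so it can be reviewed before being saved as /etc/docker/daemon.json and Docker restarted. The certs.d directory listed above is where Docker looks for per-registry CA certificates.

```shell
#!/bin/sh
# render_daemon_json MIRROR: print a daemon.json matching the one on k8s3.
# Hypothetical helper; review the output before installing it.
render_daemon_json() {
  cat <<EOF
{
  "registry-mirrors": ["$1"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
}

render_daemon_json https://reg.westos.org
```

On a node, the output could be redirected to /etc/docker/daemon.json, followed by `systemctl restart docker`.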

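Disable the swap partition: `swapoff -a` turns swap off immediately, and the swap entry in /etc/fstab is commented out so that swap stays off after a reboot.

```
[root@k8s1 ~]# swapoff -a
[root@k8s1 ~]# vim /etc/fstab
```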
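kubeadm's preflight checks refuse to continue while swap is enabled. The same two steps can also be scripted; in this minimal sketch `comment_out_swap` and `swap_is_off` are hypothetical helper names, and the sed pattern assumes swap entries contain `swap` as a whitespace-separated field, as standard fstab lines do.

```shell
#!/bin/sh
# comment_out_swap FSTAB: prefix every active swap entry with '#', keeping
# a backup as FSTAB.bak. Already-commented lines are left alone.
comment_out_swap() {
  sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# swap_is_off [SWAPS]: succeed when no swap area is active. /proc/swaps
# always starts with a header line, so more than one line means swap is on.
swap_is_off() {
  awk 'END { exit (NR > 1) }' "${1:-/proc/swaps}"
}
```

On a node this would run as `swapoff -a && comment_out_swap /etc/fstab && swap_is_off && echo 'swap is off'`.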

III. Installing kubeadm, kubelet and kubectl

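Install version 1.23: Kubernetes 1.24 removed dockershim, so later releases can no longer use Docker Engine directly as the container runtime (an adapter such as cri-dockerd would be needed). After installation, enable kubelet so that it starts now and at every boot: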

```
[root@k8s1 yum.repos.d]# systemctl enable --now kubelet
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
```
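The version pin matters because of the dockershim removal. With the Kubernetes yum repository set up, something like `yum install -y kubelet-1.23.12 kubeadm-1.23.12 kubectl-1.23.12` installs a matching set (1.23.12 is the version the nodes report at the end of this article). A minimal sketch of the version rule; `supports_dockershim` is a hypothetical helper, and its input could come from `kubelet --version`, which prints a string such as `Kubernetes v1.23.12`.

```shell
#!/bin/sh
# supports_dockershim VERSION: succeed if a Kubernetes version still ships
# dockershim (built-in Docker Engine support); it was removed in v1.24.
supports_dockershim() {
  ver=${1#v}            # v1.23.12 -> 1.23.12
  major=${ver%%.*}      # 1
  rest=${ver#*.}        # 23.12
  minor=${rest%%.*}     # 23
  [ "$major" -eq 1 ] && [ "$minor" -lt 24 ]
}

for v in v1.23.12 v1.24.0; do
  if supports_dockershim "$v"; then
    echo "$v: can use Docker Engine directly"
  else
    echo "$v: needs a CRI runtime such as containerd or cri-dockerd"
  fi
done
```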

Configure kubectl

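Enable tab completion for kubectl to make commands easier to type (the flannel manifest kube-flannel.yml is also edited in this session before it is applied):

```
[root@k8s1 ~]# vim kube-flannel.yml
[root@k8s1 ~]# vim kube-flannel.yml
[root@k8s1 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc
[root@k8s1 ~]# source .bashrc
```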
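Appending with `>>` adds the completion line again every time the step is repeated. A minimal idempotent alternative, sketched with a hypothetical `add_line_once` helper; note that kubectl's bash completion also relies on the bash-completion package being installed.

```shell
#!/bin/sh
# add_line_once LINE FILE: append LINE to FILE unless an identical line is
# already there, so re-running the setup does not duplicate it.
add_line_once() {
  grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}
```

Usage: `add_line_once 'source <(kubectl completion bash)' ~/.bashrc`, then `source ~/.bashrc`.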

```
[root@k8s1 ~]# kubectl apply -f kube-flannel.yml
namespace/kube-flannel created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
```
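The `Network` value inside the `net-conf.json` block of kube-flannel.yml has to match the pod CIDR the cluster was initialised with (`kubeadm init --pod-network-cidr=...`; the upstream manifest defaults to `10.244.0.0/16`). The kubeadm side can be read back with `kubectl -n kube-system get cm kubeadm-config -o yaml` (look for `podSubnet`). A minimal sketch for pulling the flannel side out of the manifest; `flannel_network` is a hypothetical helper that does a plain text match rather than parsing YAML.

```shell
#!/bin/sh
# flannel_network FILE: print the "Network" CIDR from the net-conf.json
# section of a flannel manifest. Hypothetical helper; plain text match.
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" |
    head -n 1 |
    sed 's/.*: *"\([^"]*\)"/\1/'
}

# Example with the net-conf.json fragment of the upstream manifest
manifest=$(mktemp)
cat > "$manifest" <<'EOF'
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
EOF
flannel_network "$manifest"   # prints 10.244.0.0/16
rm -f "$manifest"
```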

```
[root@k8s1 ~]# kubectl get pod -A
NAMESPACE      NAME                           READY   STATUS    RESTARTS        AGE
kube-flannel   kube-flannel-ds-2lgr5          1/1     Running   0               3m
kube-system    coredns-6d8c4cb4d-8tcbp        1/1     Running   0               9h
kube-system    coredns-6d8c4cb4d-pkbwg        1/1     Running   0               9h
kube-system    etcd-k8s1                      1/1     Running   0               9h
kube-system    kube-apiserver-k8s1            1/1     Running   0               9h
kube-system    kube-controller-manager-k8s1   1/1     Running   4 (6m38s ago)   9h
kube-system    kube-proxy-rn2wm               1/1     Running   0               9h
kube-system    kube-scheduler-k8s1            1/1     Running   4 (6m41s ago)   9h
```
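Rather than reading such a listing by eye, the check can be scripted. A minimal sketch assuming the default column layout of `kubectl get pod -A` (NAMESPACE, NAME, READY, STATUS, ...); `unready_pods` is a hypothetical helper. Pods of finished Jobs show `Completed` and would be reported too.

```shell
#!/bin/sh
# unready_pods: read `kubectl get pod -A` output on stdin and print
# NAMESPACE/NAME of every pod that is not Running with all containers ready.
unready_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")             # READY column, e.g. "1/1"
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2
  }'
}

# Usage on the control-plane node; prints nothing when all pods are healthy:
#   kubectl get pod -A | unready_pods
```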

Run the following on the other two hosts (k8s2 and k8s3) to join them to the cluster:

```
[root@k8s2 ~]# kubeadm join 192.168.1.11:6443 --token ps1byt.9l42okkh08rw9eg7 \
> --discovery-token-ca-cert-hash sha256:fd48328fba74285440ac9961928fb4111ca3c60421f3ec9cc7ab19a70e8c2485
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
```
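The token and CA hash in this command are printed by `kubeadm init` on k8s1. Bootstrap tokens expire (after 24 hours by default); in that case `kubeadm token create --print-join-command` on the control-plane prints a fresh join command. Copy-paste mistakes are common here, so a minimal format check is sketched below; `check_join_args` is a hypothetical helper that only validates the shape of the values, not whether the cluster accepts them.

```shell
#!/bin/sh
# check_join_args TOKEN HASH: sanity-check the two secrets passed to
# `kubeadm join` before running it on a worker node.
check_join_args() {
  # Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || {
    echo "bad token: $1" >&2
    return 1
  }
  # The CA hash is "sha256:" followed by 64 lowercase hex digits
  printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$' || {
    echo "bad CA cert hash: $2" >&2
    return 1
  }
}
```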

When all three nodes show Ready, the platform has been deployed successfully and is ready for use.

```
[root@k8s1 ~]# kubectl get node
NAME   STATUS     ROLES                  AGE   VERSION
k8s1   Ready      control-plane,master   9h    v1.23.12
k8s2   NotReady   <none>                 35s   v1.23.12
k8s3   NotReady   <none>                 33s   v1.23.12
[root@k8s1 ~]# kubectl get node
NAME   STATUS   ROLES                  AGE     VERSION
k8s1   Ready    control-plane,master   9h      v1.23.12
k8s2   Ready    <none>                 2m53s   v1.23.12
k8s3   Ready    <none>                 2m51s   v1.23.12
```
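Nodes that have just joined report NotReady for a short time, as the first listing shows. To wait for them in a script, kubectl can block on the condition itself, e.g. `kubectl wait --for=condition=Ready node --all --timeout=300s`. When only the listing is at hand, a minimal sketch like the following can check it; `all_nodes_ready` is a hypothetical helper that assumes the default `kubectl get node` columns (NAME, STATUS, ROLES, AGE, VERSION).

```shell
#!/bin/sh
# all_nodes_ready: read `kubectl get node` output on stdin and succeed only
# if at least one node is listed and every STATUS is exactly "Ready"
# (a cordoned node shows "Ready,SchedulingDisabled" and counts as not ready).
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad = 1 }
       END { exit (n == 0 || bad) }'
}

# Usage on the control-plane node:
#   kubectl get node | all_nodes_ready && echo "all nodes Ready"
```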


- Original article: https://blog.csdn.net/moumumu/article/details/127818955