-

Python机器学习16——相关向量机(RVM)

本系列基本不讲数学原理,只从代码角度去让读者们利用最简洁的Python代码实现机器学习方法。

背景介绍

学机器学习的应该都知道支持向量机(SVM),这个方法在深度学习兴起之前算是很热门的分类方法,在机器学习里面,分类算法属SVM效果比较好,回归算法属随机森林(RF)的效果比较好。

虽然目前在深度学习神经网络算法的面前,它们的效果都已经黯然失色。但是学术界还是不少人使用这些传统的算法,因为数学理论强,很受老师们喜欢。

相关向量机(RVM)也是,它是一种基于贝叶斯框架的方法,核心思想是先验后验概率找最大似然,在科研领域中,关于使用RVM的文章不在少数。

但是由于我们最常用的sklearn库没有rvm的接口......所以没办法直接调用,这篇博客就是补充这个空白的。rvm使用Python实现相关向量机,并且做成我们最为熟悉的sklearn库接口,方便使用。

算法原理

我这里就不多介绍原理了,感兴趣同学直接看这个pdf,讲的还是不错的。

https://files-cdn.cnblogs.com/files/axlute/RVMExplained.pdf

代码实现

定义RVM的类,回归和分类都有。

- """Relevance Vector Machine classes for regression and classification."""

- import numpy as np

- from scipy.optimize import minimize

- from scipy.special import expit

- from sklearn.base import BaseEstimator, RegressorMixin, ClassifierMixin

- from sklearn.metrics.pairwise import (

- linear_kernel,

- rbf_kernel,

- polynomial_kernel

- )

- from sklearn.multiclass import OneVsOneClassifier

- from sklearn.utils.validation import check_X_y

- class BaseRVM(BaseEstimator):

- """Base Relevance Vector Machine class.

- Implementation of Mike Tipping's Relevance Vector Machine using the

- scikit-learn API. Add a posterior over weights method and a predict

- in subclass to use for classification or regression.

- """

- def __init__(

- self,

- kernel='rbf',

- degree=3,

- coef1=None,

- coef0=0.0,

- n_iter=3000,

- tol=1e-3,

- alpha=1e-6,

- threshold_alpha=1e9,

- beta=1.e-6,

- beta_fixed=False,

- bias_used=True,

- verbose=False

- ):

- """Copy params to object properties, no validation."""

- self.kernel = kernel

- self.degree = degree

- self.coef1 = coef1

- self.coef0 = coef0

- self.n_iter = n_iter

- self.tol = tol

- self.alpha = alpha

- self.threshold_alpha = threshold_alpha

- self.beta = beta

- self.beta_fixed = beta_fixed

- self.bias_used = bias_used

- self.verbose = verbose

- def get_params(self, deep=True):

- """Return parameters as a dictionary."""

- params = {

- 'kernel': self.kernel,

- 'degree': self.degree,

- 'coef1': self.coef1,

- 'coef0': self.coef0,

- 'n_iter': self.n_iter,

- 'tol': self.tol,

- 'alpha': self.alpha,

- 'threshold_alpha': self.threshold_alpha,

- 'beta': self.beta,

- 'beta_fixed': self.beta_fixed,

- 'bias_used': self.bias_used,

- 'verbose': self.verbose

- }

- return params

- def set_params(self, **parameters):

- """Set parameters using kwargs."""

- for parameter, value in parameters.items():

- setattr(self, parameter, value)

- return self

- def _apply_kernel(self, x, y):

- """Apply the selected kernel function to the data."""

- if self.kernel == 'linear':

- phi = linear_kernel(x, y)

- elif self.kernel == 'rbf':

- phi = rbf_kernel(x, y, self.coef1)

- elif self.kernel == 'poly':

- phi = polynomial_kernel(x, y, self.degree, self.coef1, self.coef0)

- elif callable(self.kernel):

- phi = self.kernel(x, y)

- if len(phi.shape) != 2:

- raise ValueError(

- "Custom kernel function did not return 2D matrix"

- )

- if phi.shape[0] != x.shape[0]:

- raise ValueError(

- "Custom kernel function did not return matrix with rows"

- " equal to number of data points."""

- )

- else:

- raise ValueError("Kernel selection is invalid.")

- if self.bias_used:

- phi = np.append(phi, np.ones((phi.shape[0], 1)), axis=1)

- return phi

- def _prune(self):

- """Remove basis functions based on alpha values."""

- keep_alpha = self.alpha_ < self.threshold_alpha

- if not np.any(keep_alpha):

- keep_alpha[0] = True

- if self.bias_used:

- keep_alpha[-1] = True

- if self.bias_used:

- if not keep_alpha[-1]:

- self.bias_used = False

- self.relevance_ = self.relevance_[keep_alpha[:-1]]

- else:

- self.relevance_ = self.relevance_[keep_alpha]

- self.alpha_ = self.alpha_[keep_alpha]

- self.alpha_old = self.alpha_old[keep_alpha]

- self.gamma = self.gamma[keep_alpha]

- self.phi = self.phi[:, keep_alpha]

- self.sigma_ = self.sigma_[np.ix_(keep_alpha, keep_alpha)]

- self.m_ = self.m_[keep_alpha]

- def fit(self, X, y):

- """Fit the RVR to the training data."""

- X, y = check_X_y(X, y)

- n_samples, n_features = X.shape

- self.phi = self._apply_kernel(X, X)

- n_basis_functions = self.phi.shape[1]

- self.relevance_ = X

- self.y = y

- self.alpha_ = self.alpha * np.ones(n_basis_functions)

- self.beta_ = self.beta

- self.m_ = np.zeros(n_basis_functions)

- self.alpha_old = self.alpha_

- for i in range(self.n_iter):

- self._posterior()

- self.gamma = 1 - self.alpha_*np.diag(self.sigma_)

- self.alpha_ = self.gamma/(self.m_ ** 2)

- if not self.beta_fixed:

- self.beta_ = (n_samples - np.sum(self.gamma))/(

- np.sum((y - np.dot(self.phi, self.m_)) ** 2))

- self._prune()

- if self.verbose:

- print("Iteration: {}".format(i))

- print("Alpha: {}".format(self.alpha_))

- print("Beta: {}".format(self.beta_))

- print("Gamma: {}".format(self.gamma))

- print("m: {}".format(self.m_))

- print("Relevance Vectors: {}".format(self.relevance_.shape[0]))

- print()

- delta = np.amax(np.absolute(self.alpha_ - self.alpha_old))

- if delta < self.tol and i > 1:

- break

- self.alpha_old = self.alpha_

- if self.bias_used:

- self.bias = self.m_[-1]

- else:

- self.bias = None

- return self

- class RVR(BaseRVM, RegressorMixin):

- """Relevance Vector Machine Regression.

- Implementation of Mike Tipping's Relevance Vector Machine for regression

- using the scikit-learn API.

- """

- def _posterior(self):

- """Compute the posterior distriubtion over weights."""

- i_s = np.diag(self.alpha_) + self.beta_ * np.dot(self.phi.T, self.phi)

- self.sigma_ = np.linalg.inv(i_s)

- self.m_ = self.beta_ * np.dot(self.sigma_, np.dot(self.phi.T, self.y))

- def predict(self, X, eval_MSE=False):

- """Evaluate the RVR model at x."""

- phi = self._apply_kernel(X, self.relevance_)

- y = np.dot(phi, self.m_)

- if eval_MSE:

- MSE = (1/self.beta_) + np.dot(phi, np.dot(self.sigma_, phi.T))

- return y, MSE[:, 0]

- else:

- return y

- class RVC(BaseRVM, ClassifierMixin):

- """Relevance Vector Machine Classification.

- Implementation of Mike Tipping's Relevance Vector Machine for

- classification using the scikit-learn API.

- """

- def __init__(self, n_iter_posterior=50, **kwargs):

- """Copy params to object properties, no validation."""

- self.n_iter_posterior = n_iter_posterior

- super(RVC, self).__init__(**kwargs)

- def get_params(self, deep=True):

- """Return parameters as a dictionary."""

- params = super(RVC, self).get_params(deep=deep)

- params['n_iter_posterior'] = self.n_iter_posterior

- return params

- def _classify(self, m, phi):

- return expit(np.dot(phi, m))

- def _log_posterior(self, m, alpha, phi, t):

- y = self._classify(m, phi)

- log_p = -1 * (np.sum(np.log(y[t == 1]), 0) +

- np.sum(np.log(1-y[t == 0]), 0))

- log_p = log_p + 0.5*np.dot(m.T, np.dot(np.diag(alpha), m))

- jacobian = np.dot(np.diag(alpha), m) - np.dot(phi.T, (t-y))

- return log_p, jacobian

- def _hessian(self, m, alpha, phi, t):

- y = self._classify(m, phi)

- B = np.diag(y*(1-y))

- return np.diag(alpha) + np.dot(phi.T, np.dot(B, phi))

- def _posterior(self):

- result = minimize(

- fun=self._log_posterior,

- hess=self._hessian,

- x0=self.m_,

- args=(self.alpha_, self.phi, self.t),

- method='Newton-CG',

- jac=True,

- options={

- 'maxiter': self.n_iter_posterior

- }

- )

- self.m_ = result.x

- self.sigma_ = np.linalg.inv(

- self._hessian(self.m_, self.alpha_, self.phi, self.t)

- )

- def fit(self, X, y):

- """Check target values and fit model."""

- self.classes_ = np.unique(y)

- n_classes = len(self.classes_)

- if n_classes < 2:

- raise ValueError("Need 2 or more classes.")

- elif n_classes == 2:

- self.t = np.zeros(y.shape)

- self.t[y == self.classes_[1]] = 1

- return super(RVC, self).fit(X, self.t)

- else:

- self.multi_ = None

- self.multi_ = OneVsOneClassifier(self)

- self.multi_.fit(X, y)

- return self

- def predict_proba(self, X):

- """Return an array of class probabilities."""

- phi = self._apply_kernel(X, self.relevance_)

- y = self._classify(self.m_, phi)

- return np.column_stack((1-y, y))

- def predict(self, X):

- """Return an array of classes for each input."""

- if len(self.classes_) == 2:

- y = self.predict_proba(X)

- res = np.empty(y.shape[0], dtype=self.classes_.dtype)

- res[y[:, 1] <= 0.5] = self.classes_[0]

- res[y[:, 1] >= 0.5] = self.classes_[1]

- return res

- else:

- return self.multi_.predict(X)

好了,下面就可以像别的sklearn库里面的包一样使用了。

代码测试

我们对分类问题和回归问题都测试一下,并且和支持向量机做对比。

- import numpy as np

- import pandas as pd

- import matplotlib.pyplot as plt

- import seaborn as sns

- from sklearn.preprocessing import StandardScaler

- from sklearn.model_selection import train_test_split

- from sklearn.model_selection import KFold, StratifiedKFold

- from sklearn.model_selection import GridSearchCV

- from sklearn.metrics import plot_confusion_matrix

- from sklearn.svm import SVC

- from sklearn.svm import SVR

- from sklearn.datasets import load_boston

- from sklearn.datasets import load_breast_cancer

分类测试

分类我们使用经典的鸢尾花数据集

- iris = load_breast_cancer() #加载数据

- X = iris.data

- y = iris.target

- X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, stratify=y, random_state=0)

- scaler = StandardScaler()

- scaler.fit(X_train)

- X_train_s = scaler.transform(X_train)

- X_test_s = scaler.transform(X_test)

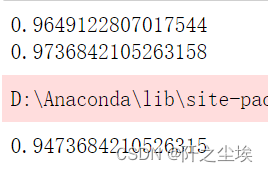

支持向量机,不同核函数的效果:

- #线性核函数

- model = SVC(kernel="linear", random_state=123)

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #二次多项式核

- model = SVC(kernel="poly", degree=2, random_state=123)

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #三次多项式

- model = SVC(kernel="poly", degree=3, random_state=123)

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #径向核

- model = SVC(kernel="rbf", random_state=123)

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #S核

- model = SVC(kernel="sigmoid",random_state=123)

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

相关向量机(RVM)效果:

- model = RVC(kernel="linear")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- model = RVC(kernel="rbf")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- model = RVC(kernel="poly")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

效果差不多。

回归测试

回归使用波士顿数据集

- # Support Vector Regression with Boston Housing Data

- X, y = load_boston(return_X_y=True)

- X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

- scaler = StandardScaler()

- scaler.fit(X_train)

- X_train_s = scaler.transform(X_train)

- X_test_s = scaler.transform(X_test)

支持向量机效果:(不同核函数)

- #线性核函数

- model = SVR(kernel="linear")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #二次多项式核

- model = SVR(kernel="poly", degree=2)

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #三次多项式

- model = SVR(kernel="poly", degree=3)

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #径向核

- model = SVR(kernel="rbf")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- #S核

- model = SVR(kernel="sigmoid")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

相关向量机(RVM)效果:

- model = RVR(kernel="linear")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- model = RVR(kernel="rbf")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

- model = RVR(kernel="poly")

- model.fit(X_train_s, y_train)

- print(model.score(X_test_s, y_test))

可以看到,在回归问题上,相关向量机比支持向量机的效果要好。

结论:

分类用SVM,回归用RVM

当然我这里只用了两个sklearn自带的数据集测试,结论肯定有点武断,有兴趣的同学可以用于别的数据集,然后做多次K折交叉验证,进一步对比他们的效果。

-

相关阅读:

【allegro 17.4软件操作保姆级教程八】布线操作基础之三

【python】爬取杭州市二手房销售数据做数据分析【附源码】

企业知识库有什么价值?

Java 21 新特性:虚拟线程(Virtual Threads)

可观测|时序数据降采样在Prometheus实践复盘

蓝牙信标的优势及应用场景

装机指南1.0

Oracle共享内存不释放

linux常见命令以及jdk,tomcat环境搭建

GDPU 数据结构 天码行空9

- 原文地址:https://blog.csdn.net/weixin_46277779/article/details/127927306