
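MediaPipe hand model conversion to RKNN

On a low-end RK3568 device, the MediaPipe hand model has fairly high GPU usage during inference, so I tried running inference on the RK3568's NPU instead.

The steps below convert the tflite model to an rknn model.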

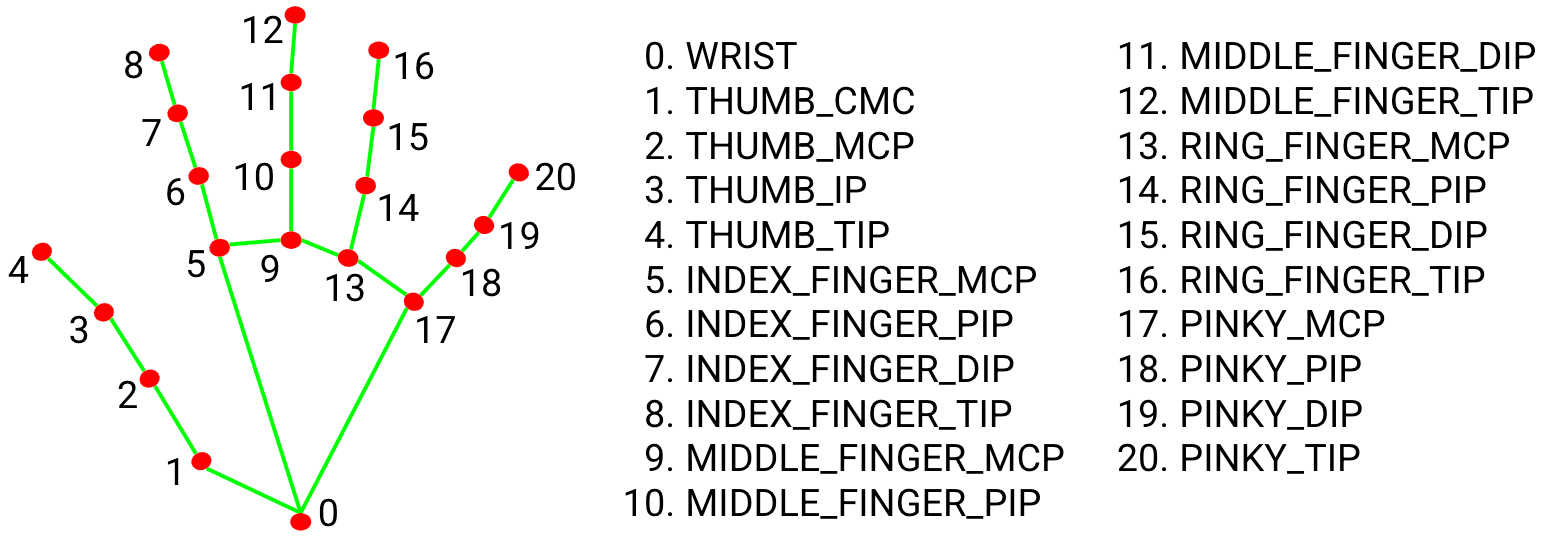

Hand landmark model diagram

Prepare an rknn-toolkit2 environment, version 1.3.0 or later.
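You can confirm the 1.3.0 minimum by reading the installed package version. This is a minimal sketch. It assumes the wheel registers itself under the distribution name `rknn-toolkit2`, and the helper names are hypothetical.

```python
import re
from importlib import metadata


def parse_version(text):
    """Return the leading numeric components of a version string as a tuple.

    Any build suffix is ignored, e.g. "1.3.0-11912b58" -> (1, 3, 0).
    """
    match = re.match(r"\d+(?:\.\d+)*", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def toolkit_is_recent_enough(installed, minimum="1.3.0"):
    """True if `installed` is at least `minimum`, compared numerically."""
    a = parse_version(installed)
    b = parse_version(minimum)
    # Pad the shorter tuple with zeros so "1.3" compares equal to "1.3.0".
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    try:
        # Assumption: the package is installed as the distribution "rknn-toolkit2".
        installed = metadata.version("rknn-toolkit2")
    except metadata.PackageNotFoundError:
        print("rknn-toolkit2 is not installed")
    else:
        status = "OK" if toolkit_is_recent_enough(installed) else "too old"
        print(f"rknn-toolkit2 {installed}: {status}")
```

Comparing integer tuples avoids the string-comparison trap where "1.10.0" would sort before "1.3.0".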

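Go to the `~/rknn/rknn-toolkit2/examples/tflite` directory.

Convert the hands model by following the mobilenet_v1 demo.

Copy the mobilenet_v1 demo and rename the copy to mediapipe_hand, then enter it:

`cd ~/rknn/rknn-toolkit2/examples/tflite/mediapipe_hand`

Modify test.py so that it loads the hand model and the input image: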
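`rknn.build()` in test.py is called with `do_quantization=True`, so it needs `dataset.txt`. This is a text file listing calibration images, one path per line. The copied demo's `dataset.txt` presumably still lists the demo's own sample image. For a hand model, pointing it at a few representative hand photos is probably the better choice. A minimal sketch that writes the file from a folder follows. The folder name `calib_images`, the accepted extensions, and the helper name are all assumptions for illustration.

```python
from pathlib import Path

# Extensions treated as calibration images (an assumption; adjust as needed).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_dataset_txt(image_dir, out_path="dataset.txt"):
    """Write one image path per line, the list format passed to rknn.build(dataset=...).

    Absolute paths are used so it does not matter which directory
    relative paths would otherwise be resolved against.
    """
    image_dir = Path(image_dir)
    images = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    if not images:
        raise FileNotFoundError(f"no calibration images found in {image_dir}")
    lines = [str(p.resolve()) for p in images]
    Path(out_path).write_text("\n".join(lines) + "\n")
    return lines


if __name__ == "__main__":
    # 'calib_images' is a hypothetical folder of representative hand photos.
    if Path("calib_images").is_dir():
        for line in write_dataset_txt("calib_images"):
            print(line)
```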

```python
import sys

import numpy as np
import cv2
from rknn.api import RKNN

# import tensorflow.compat.v1 as tf  # use the TensorFlow 1.x API
# tf.disable_v2_behavior()


def show_outputs(outputs):
    # Leftover from the mobilenet_v1 demo: prints the 5 largest values of the
    # first output. For the hand landmark model these are coordinates, not
    # class scores, so this is only a quick sanity check.
    output = outputs[0][0]
    output_sorted = sorted(output, reverse=True)
    top5_str = 'hand_landmark\n-----TOP 5-----\n'
    for i in range(5):
        value = output_sorted[i]
        index = np.where(output == value)
        for j in range(len(index)):
            if (i + j) >= 5:
                break
            if value > 0:
                topi = '{}: {}\n'.format(index[j], value)
            else:
                topi = '-1: 0.0\n'
            top5_str += topi
    print(top5_str)


if __name__ == '__main__':
    # Create RKNN object
    rknn = RKNN(verbose=True)

    # Pre-process config: RKNN normalizes each input channel as (x - mean) / std.
    # These values follow the mobilenet_v1 demo and map pixels to about [-1, 1];
    # MediaPipe's own hand-landmark graph scales input to [0, 1] (mean 0, std 255),
    # so check which range your model expects.
    print('--> Config model')
    rknn.config(mean_values=[128, 128, 128], std_values=[128, 128, 128])
    print('done')

    # Load model
    print('--> Loading model')
    ret = rknn.load_tflite(model='hand_landmark_lite.tflite')
    # ret = rknn.load_tflite(model='palm_detection_lite.tflite')
    if ret != 0:
        print('Load model failed!')
        sys.exit(ret)
    print('done')

    # Build model (quantized; calibration images are listed in dataset.txt)
    print('--> Building model')
    ret = rknn.build(do_quantization=True, dataset='./dataset.txt')
    if ret != 0:
        print('Build model failed!')
        sys.exit(ret)
    print('done')

    # Export rknn model
    print('--> Export rknn model')
    ret = rknn.export_rknn('./hands.rknn')
    if ret != 0:
        print('Export rknn model failed!')
        sys.exit(ret)
    print('done')

    # Set inputs: the hand landmark model takes a 224x224 RGB image
    img = cv2.imread('./hand_1.jpg')
    if img is None:
        print('Failed to read ./hand_1.jpg')
        sys.exit(1)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (224, 224))
    img = np.expand_dims(img, 0)  # add batch dimension -> (1, 224, 224, 3)

    # Init runtime environment (no target given, so this runs on the simulator)
    print('--> Init runtime environment')
    ret = rknn.init_runtime()
    if ret != 0:
        print('Init runtime environment failed!')
        sys.exit(ret)
    print('done')

    # Inference
    print('--> Running model')
    outputs = rknn.inference(inputs=[img])
    print('--> outputs:', outputs)
    np.save('./tflite_hands.npy', outputs[0])
    show_outputs(outputs)
    print('done')

    rknn.release()
```
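The script saves the first RKNN output to `tflite_hands.npy`. To judge how far the quantized model drifts from the float model, you can run the original `.tflite` file on the same image and compare the two outputs with cosine similarity. This is a minimal sketch. It assumes TensorFlow is installed for the reference run and that the TFLite model should see the same `(x - 128) / 128` normalization configured above. The helper names are hypothetical.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two arrays, each flattened to a vector."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0.0:
        raise ValueError("cannot compare an all-zero array")
    return float(np.dot(a, b) / denom)


def tflite_reference(model_path, img_uint8):
    """Run the float TFLite model on a (1, 224, 224, 3) uint8 image.

    Assumes the same normalization as the RKNN config: (x - 128) / 128.
    """
    import tensorflow as tf  # imported here so cosine_similarity works without TF

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    data = (img_uint8.astype(np.float32) - 128.0) / 128.0
    interpreter.set_tensor(inp["index"], data)
    interpreter.invoke()
    # Output order follows the interpreter and may not match RKNN's order,
    # so match outputs by shape before comparing.
    return [interpreter.get_tensor(o["index"]) for o in interpreter.get_output_details()]
```

For example, call `cosine_similarity(np.load('tflite_hands.npy'), ref)`, where `ref` is whichever `tflite_reference('hand_landmark_lite.tflite', img)` output has 63 values. A result close to 1.0 means the quantized landmarks track the float model closely.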

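Pay attention to the input image size (the script resizes to 224×224 for this model), and use as recent a TensorFlow version as possible.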
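Plain `cv2.resize` to 224×224 stretches non-square photos. MediaPipe's own pipeline instead feeds the landmark model a crop around the hand located by the palm detector. For a single still image, padding to a square first is a rough stand-in. This is a minimal sketch with hypothetical helper names. It also assumes that the first output's x/y values are pixel positions in the 224×224 input, which the sample values (roughly 7 to 190) are consistent with.

```python
import numpy as np


def pad_to_square(img, fill=0):
    """Center-pad an H x W (x C) image to a square so a later resize does not
    stretch it. Returns the padded image and the (top, left) offset of the
    original image inside it."""
    h, w = img.shape[:2]
    side = max(h, w)
    top = (side - h) // 2
    left = (side - w) // 2
    out = np.full((side, side) + img.shape[2:], fill, dtype=img.dtype)
    out[top:top + h, left:left + w] = img
    return out, (top, left)


def landmarks_to_original(landmarks, side, offset, input_size=224):
    """Map landmark x/y from model-input pixels back to the original image.

    Assumes x and y are pixel coordinates in the input_size x input_size
    model input; any further columns (such as z) are returned unchanged.
    """
    top, left = offset
    pts = np.array(landmarks, dtype=np.float32)  # copy, so the input is untouched
    scale = side / float(input_size)
    pts[:, 0] = pts[:, 0] * scale - left
    pts[:, 1] = pts[:, 1] * scale - top
    return pts
```

In test.py this would run after `cvtColor`: `square, offset = pad_to_square(img)`, then `cv2.resize(square, (224, 224))`. After inference, `landmarks_to_original(outputs[0].reshape(21, 3), square.shape[0], offset)` maps the points back.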

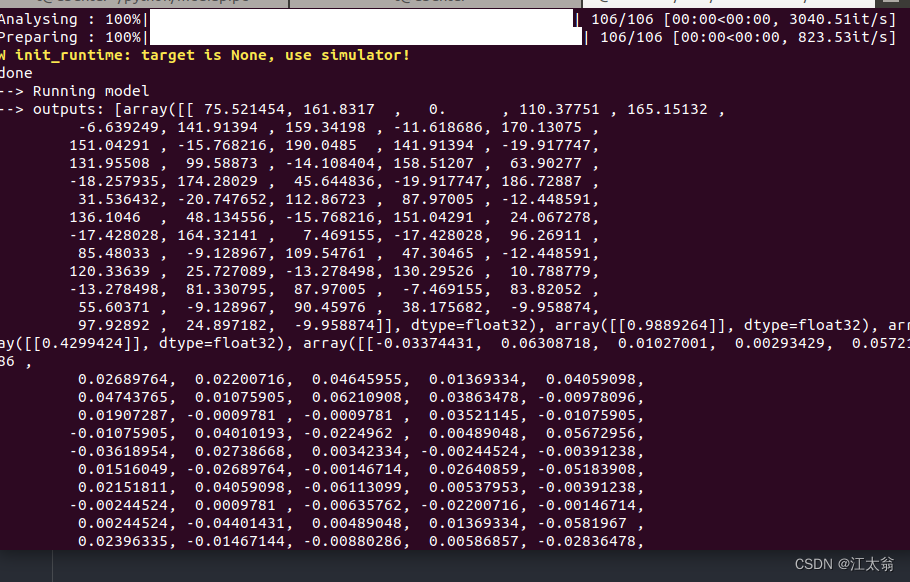

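Simulated inference result (`init_runtime()` is called without a target device, so inference runs on the RKNN-Toolkit2 simulator).

Output data format: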

[array([[ 75.521454, 161.8317 , 0. , 110.37751 , 165.15132 ,

-6.639249, 141.91394 , 159.34198 , -11.618686, 170.13075 ,

151.04291 , -15.768216, 190.0485 , 141.91394 , -19.917747,

131.95508 , 99.58873 , -14.108404, 158.51207 , 63.90277 ,

-18.257935, 174.28029 , 45.644836, -19.917747, 186.72887 ,

31.536432, -20.747652, 112.86723 , 87.97005 , -12.448591,

136.1046 , 48.134556, -15.768216, 151.04291 , 24.067278,

-17.428028, 164.32141 , 7.469155, -17.428028, 96.26911 ,

85.48033 , -9.128967, 109.54761 , 47.30465 , -12.448591,

120.33639 , 25.727089, -13.278498, 130.29526 , 10.788779,

-13.278498, 81.330795, 87.97005 , -7.469155, 83.82052 ,

55.60371 , -9.128967, 90.45976 , 38.175682, -9.958874,

97.92892 , 24.897182, -9.958874]], dtype=float32), array([[0.9889264]], dtype=float32), array([[0.4299424]], dtype=float32), array([[-0.03374431, 0.06308718, 0.01027001, 0.00293429, 0.0572186 ,

0.02689764, 0.02200716, 0.04645955, 0.01369334, 0.04059098,

0.04743765, 0.01075905, 0.06210908, 0.03863478, -0.00978096,

0.01907287, -0.0009781 , -0.0009781 , 0.03521145, -0.01075905,

-0.01075905, 0.04010193, -0.0224962 , 0.00489048, 0.05672956,

-0.03618954, 0.02738668, 0.00342334, -0.00244524, -0.00391238,

0.01516049, -0.02689764, -0.00146714, 0.02640859, -0.05183908,

0.02151811, 0.04059098, -0.06113099, 0.00537953, -0.00391238,

-0.00244524, 0.0009781 , -0.00635762, -0.02200716, -0.00146714,

0.00244524, -0.04401431, 0.00489048, 0.01369334, -0.0581967 ,

0.02396335, -0.01467144, -0.00880286, 0.00586857, -0.02836478,

-0.01956192, 0.00244524, -0.02151811, -0.03716764, -0.00048905,

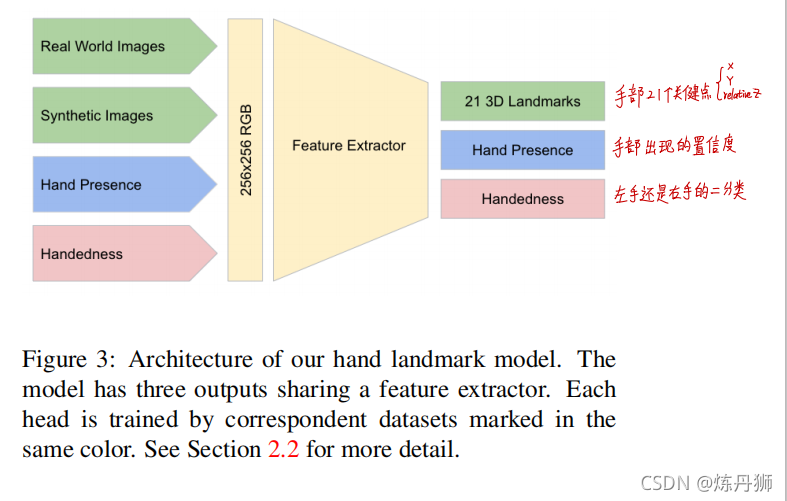

-0.01173715, -0.04499241, -0.01907287]], dtype=float32)]手部坐标预测模型 (Hand LandMark Model)

21个关键点 hands检测置信度 左右手分类 ..
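The four printed arrays can be unpacked into named fields. This minimal sketch follows the output order printed above. Two interpretations are assumptions: that the fourth array is a second 21 × 3 point set (MediaPipe's world landmarks), and that the x/y values are pixel coordinates in the 224×224 input. The function name is hypothetical.

```python
import numpy as np

NUM_LANDMARKS = 21


def parse_hand_outputs(outputs, input_size=224):
    """Split the four hand_landmark outputs, in the order RKNN returned them."""
    if len(outputs) != 4:
        raise ValueError(f"expected 4 outputs, got {len(outputs)}")
    # 63 values -> 21 keypoints x (x, y, z)
    landmarks = np.asarray(outputs[0], dtype=np.float32).reshape(NUM_LANDMARKS, 3)
    presence = float(np.asarray(outputs[1]).ravel()[0])    # hand detection confidence
    handedness = float(np.asarray(outputs[2]).ravel()[0])  # left/right-hand score
    # Assumption: the 4th output is another 21 x 3 set (MediaPipe's world landmarks).
    world = np.asarray(outputs[3], dtype=np.float32).reshape(NUM_LANDMARKS, 3)
    return {
        "landmarks_px": landmarks,
        # Assumption: x/y are pixels in the input_size x input_size input.
        "landmarks_norm": landmarks[:, :2] / float(input_size),
        "presence": presence,
        "handedness": handedness,
        "world": world,
    }
```

With the sample output above, `parse_hand_outputs(outputs)['landmarks_px'][0]` is roughly `(75.5, 161.8, 0.0)`, the first keypoint, and `presence` is about 0.989.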


Original article: https://blog.csdn.net/TyearLin/article/details/127102623