
04 Stochastic Gradient Descent

I: What Is a Gradient?

1、Clarification

2、What does grad mean?

3、How to search for minima?

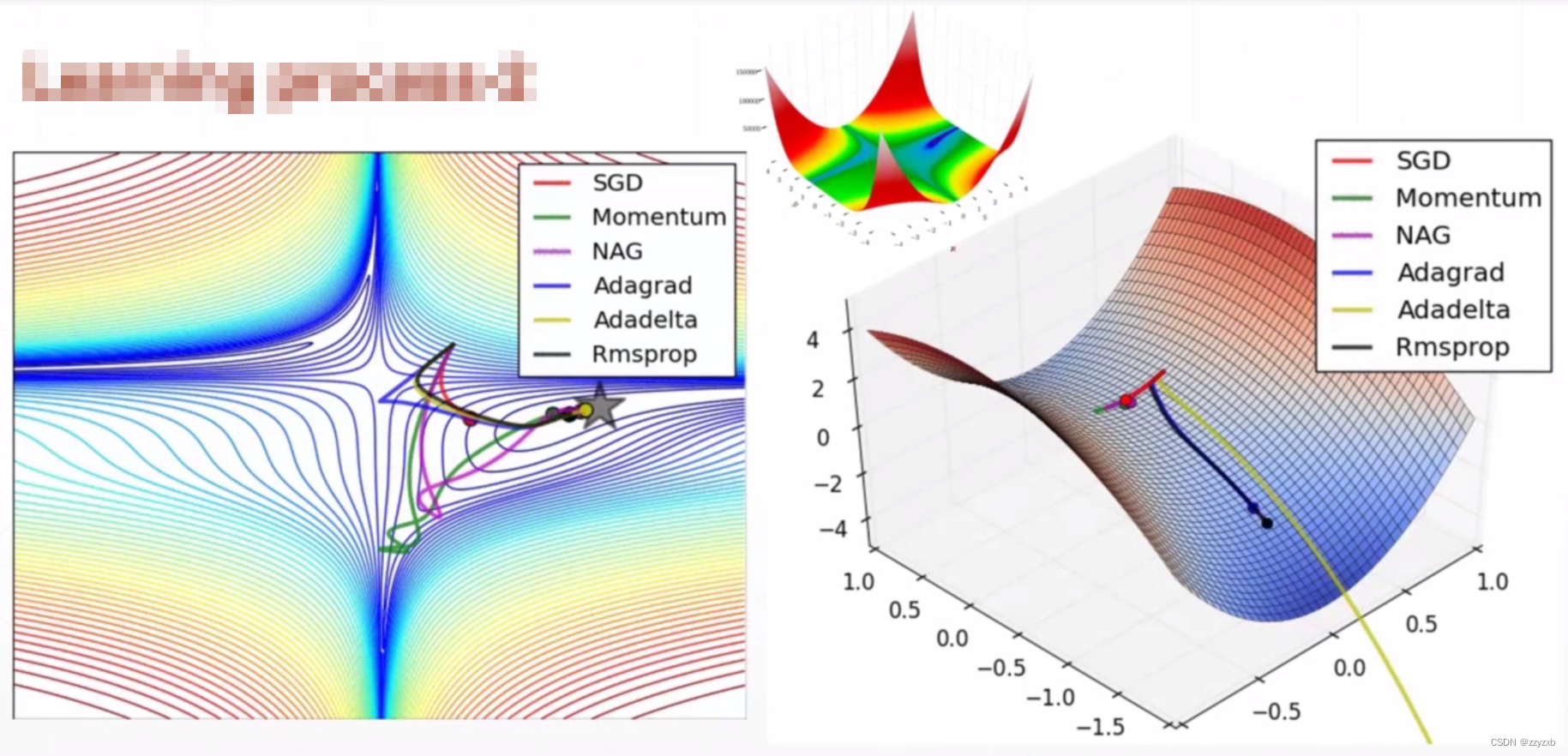
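The search for a minimum can be sketched as repeatedly stepping against the gradient. This is a minimal illustration in plain Python, assuming a one-dimensional quadratic; the names `f`, `grad_f`, and `gradient_descent` are hypothetical and not from the original slides.

```python
def f(x):
    # Toy objective with its minimum at x = 3
    return (x - 3.0) ** 2


def grad_f(x):
    # Analytic derivative of f
    return 2.0 * (x - 3.0)


def gradient_descent(x0, lr=0.1, steps=100):
    # Update rule: x <- x - lr * grad_f(x)
    x = x0
    for _ in range(steps):
        x = x - lr * grad_f(x)
    return x


x_min = gradient_descent(x0=0.0)
print(x_min, f(x_min))
```

Each step shrinks the distance to the minimum by a factor of `1 - 2 * lr`, so with `lr=0.1` the iterate approaches 3 geometrically.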

4、Learning process


5、Convex function

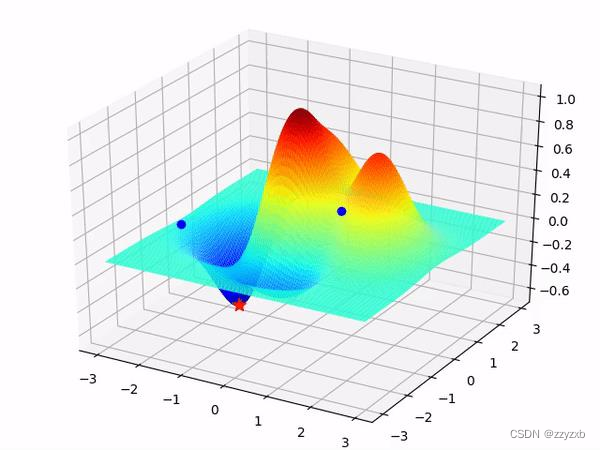

6、Local Minima

7、ResNet-56

8、Saddle point

9、Optimizer Performance

▪ initialization status

▪ learning rate

▪ momentum

▪ etc.

10、Initialization

11、Learning rate
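To make the effect of the learning rate concrete, here is a small sketch, assuming the toy objective f(x) = x² (gradient 2x). The helper `run` and the three rates are illustrative choices, not values from the original.

```python
def run(lr, x0=1.0, steps=50):
    # Plain gradient descent on f(x) = x**2, whose gradient is 2*x
    x = x0
    for _ in range(steps):
        x = x - lr * 2.0 * x
    return x


too_small = run(0.01)   # each step multiplies x by 0.98: slow progress
reasonable = run(0.4)   # each step multiplies x by 0.2: fast convergence
too_large = run(1.1)    # each step multiplies x by -1.2: divergence

print(too_small, reasonable, too_large)
```

On this objective every step multiplies x by `1 - 2 * lr`, so the iterate converges only when that factor has magnitude below 1.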

12、Escape minima
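Momentum, listed above under optimizer performance, is one common way to keep moving where the gradient vanishes. The sketch below uses a deliberately simple stand-in: a descending slope interrupted by a flat shelf on [0, 1]. The shelf is a plateau rather than a true local minimum, but it shows the same mechanism that is usually given as the intuition for escaping shallow minima. The function `grad`, the helper `descend`, and all constants are assumptions for illustration; the update form `v = mu * v + g` matches the one used by `torch.optim.SGD`.

```python
def grad(x):
    # Slope 1 everywhere except a flat shelf on [0, 1], where the gradient is 0
    if 0.0 <= x <= 1.0:
        return 0.0
    return 1.0


def descend(x0, lr, mu, steps):
    # Gradient descent with momentum; mu = 0 gives plain gradient descent
    x, v = x0, 0.0
    for _ in range(steps):
        v = mu * v + grad(x)
        x = x - lr * v
    return x


x_plain = descend(2.0, lr=0.3, mu=0.0, steps=10)     # parks on the shelf
x_momentum = descend(2.0, lr=0.3, mu=0.9, steps=10)  # coasts across it

print(x_plain, x_momentum)
```

Without momentum the iterate stops as soon as it lands on the shelf, because the update is exactly zero there. With momentum the accumulated velocity carries it across and descent resumes on the far side.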

II: Activation Functions

1、Activation Functions

Derivative

2、Sigmoid / Logistic

Derivative

torch.sigmoid
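As a quick check of the derivative, the sigmoid σ(x) = 1 / (1 + e^(−x)) satisfies σ′(x) = σ(x)(1 − σ(x)). The sketch below, assuming an arbitrary grid of inputs, compares that formula with what autograd computes for `torch.sigmoid`.

```python
import torch

# Sample inputs; requires_grad=True lets autograd track them
x = torch.linspace(-5.0, 5.0, steps=11, requires_grad=True)
y = torch.sigmoid(x)

# Summing first yields the elementwise derivative dy_i/dx_i
(dy_dx,) = torch.autograd.grad(y.sum(), x)

# Closed form: sigma'(x) = sigma(x) * (1 - sigma(x))
analytic = (y * (1 - y)).detach()

print(torch.allclose(dy_dx, analytic))
```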

3、Tanh

Derivative

torch.tanh
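For tanh, the derivative is 1 − tanh²(x), and tanh is a rescaled sigmoid: tanh(x) = 2σ(2x) − 1. A minimal sketch, assuming a small grid of inputs, that checks both identities with `torch.tanh`:

```python
import torch

x = torch.linspace(-3.0, 3.0, steps=7, requires_grad=True)
y = torch.tanh(x)

# Elementwise derivative via autograd
(dy_dx,) = torch.autograd.grad(y.sum(), x)

# Closed form: tanh'(x) = 1 - tanh(x)**2
analytic = (1 - y ** 2).detach()

# Relation to the sigmoid: tanh(x) = 2 * sigmoid(2x) - 1
via_sigmoid = (2 * torch.sigmoid(2 * x) - 1).detach()

print(torch.allclose(dy_dx, analytic, atol=1e-6))
print(torch.allclose(y.detach(), via_sigmoid, atol=1e-6))
```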

4、Rectified Linear Unit

Derivative

F.relu
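ReLU is max(0, x), so its derivative is 0 for negative inputs and 1 for positive inputs. The sketch below uses `F.relu` on a few hand-picked values; x = 0 is left out on purpose because the derivative is not defined there.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([-2.0, -0.5, 0.5, 2.0], requires_grad=True)
y = F.relu(x)

# Elementwise derivative: 0 where x < 0, 1 where x > 0
(dy_dx,) = torch.autograd.grad(y.sum(), x)

print(y.tolist())
print(dy_dx.tolist())
```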

III: Loss and Its Gradient

1、Typical Loss

▪ Mean Squared Error

▪ Cross Entropy Loss

▪ binary

▪ multi-class

▪ +softmax

▪ Leave it to Logistic Regression Part

2、MSE

Derivative
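For reference, a sketch of the MSE derivative, assuming the sum-of-squares form of the loss and a model written as f_θ(x). The symbols are illustrative and not taken from the original slides.

$$
\text{loss} = \sum_i \bigl(y_i - f_\theta(x_i)\bigr)^2,
\qquad
\nabla_\theta\,\text{loss} = 2 \sum_i \bigl(f_\theta(x_i) - y_i\bigr)\,\nabla_\theta f_\theta(x_i)
$$

The mean version divides both expressions by the number of terms N, which is the default reduction of `F.mse_loss`.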

autograd.grad

loss.backward

Gradient API
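The two gradient APIs named above can be compared on a one-parameter example. This is a minimal sketch, assuming the model `x * w` with x = 1, w = 2 and target 1, so the loss is (2 − 1)² = 1 and its gradient with respect to w is 2.

```python
import torch
import torch.nn.functional as F

x = torch.ones(1)
target = torch.ones(1)
w = torch.full([1], 2.0, requires_grad=True)

# Option 1: torch.autograd.grad returns the gradients as a tuple
loss = F.mse_loss(x * w, target)            # (1*2 - 1)^2 = 1
(grad_w,) = torch.autograd.grad(loss, [w])  # 2 * (x*w - target) * x = 2

# Option 2: loss.backward() stores the gradient in w.grad instead.
# The graph is rebuilt because the first one was freed by autograd.grad.
loss = F.mse_loss(x * w, target)
loss.backward()
grad_after_backward = w.grad.clone()

# A second backward pass accumulates into w.grad rather than overwriting it
loss = F.mse_loss(x * w, target)
loss.backward()
grad_accumulated = w.grad.clone()

# Reset before the next step, as optimizers do via zero_grad()
w.grad.zero_()

print(grad_w, grad_after_backward, grad_accumulated)
```

`autograd.grad` is convenient when the gradient of a few specific tensors is needed. `backward` is the usual choice in a training loop, where an optimizer reads `.grad` from every parameter.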

3、Softmax

soft version of max

Derivative

F.softmax
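With p = softmax(a), the derivative is ∂p_i/∂a_j = p_i(δ_ij − p_j): positive on the diagonal, negative elsewhere. The sketch below, assuming three arbitrary logits, builds the Jacobian of `F.softmax` row by row with autograd and compares it with that formula.

```python
import torch
import torch.nn.functional as F

a = torch.tensor([2.0, 1.0, 0.1], requires_grad=True)
p = F.softmax(a, dim=0)

# Row i of the Jacobian is the gradient of p[i] with respect to a.
# retain_graph=True keeps the graph alive for the next row.
rows = []
for i in range(p.numel()):
    (g,) = torch.autograd.grad(p[i], a, retain_graph=True)
    rows.append(g)
jacobian = torch.stack(rows)

# Closed form: diag(p) - p p^T, i.e. p_i * (delta_ij - p_j)
p_d = p.detach()
analytic = torch.diag(p_d) - torch.outer(p_d, p_d)

print(torch.allclose(jacobian, analytic, atol=1e-6))
```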

IV: Gradient Derivation for the Perceptron

1、Derivative

2、Perceptron
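A sketch of the single-output case, assuming a sigmoid activation O = σ(Σ_j x_j w_j) and the squared error E = ½(O − t)². Under those assumptions the chain rule gives ∂E/∂w_j = (O − t) · O(1 − O) · x_j. The shapes, seed, and target are illustrative choices.

```python
import torch

torch.manual_seed(0)

x = torch.randn(1, 10)                      # one sample with 10 features
w = torch.randn(1, 10, requires_grad=True)  # weights of a single output node
t = torch.ones(1, 1)                        # target

o = torch.sigmoid(x @ w.t())                # output, shape (1, 1)
loss = 0.5 * (o - t).pow(2).sum()
loss.backward()

# Hand-derived gradient: (O - t) * O * (1 - O) * x_j
analytic = ((o - t) * o * (1 - o)).detach() * x

print(torch.allclose(w.grad, analytic, atol=1e-6))
```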

3、Multi-output Perceptron

4、Derivative

5、Multi-output Perceptron
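With several output nodes, a weight w_jk (input j to output k) affects only output O_k. Assuming again a sigmoid activation and E = ½ Σ_k (O_k − t_k)², the gradient is ∂E/∂w_jk = (O_k − t_k) · O_k(1 − O_k) · x_j. The sketch below checks this against autograd; shapes and seed are illustrative, and `w` is stored with one row per output as in `torch.nn.Linear`.

```python
import torch

torch.manual_seed(0)

x = torch.randn(1, 10)                      # one sample with 10 features
w = torch.randn(2, 10, requires_grad=True)  # row k holds the weights of output k
t = torch.ones(1, 2)                        # one target per output

o = torch.sigmoid(x @ w.t())                # outputs, shape (1, 2)
loss = 0.5 * (o - t).pow(2).sum()
loss.backward()

# delta_k = (O_k - t_k) * O_k * (1 - O_k); the gradient for row k is delta_k * x
delta = ((o - t) * o * (1 - o)).detach()    # shape (1, 2)
analytic = delta.t() @ x                    # shape (2, 10)

print(torch.allclose(w.grad, analytic, atol=1e-6))
```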


- Original article: https://blog.csdn.net/zzyzxb/article/details/127095885