VGG Paper

ABSTRACT

In this work we investigate the effect of the convolutional network depth on its accuracy in the large-scale image recognition setting. Our main contribution is a thorough evaluation of networks of increasing depth using an architecture with very small (3×3) convolution filters, which shows that a significant improvement on the prior-art configurations can be achieved by pushing the depth to 16–19 weight layers. These findings were the basis of our ImageNet Challenge 2014 submission, where our team secured the first and the second places in the localisation and classification tracks respectively. We also show that our representations generalise well to other datasets, where they achieve state-of-the-art results. We have made our two best-performing ConvNet models publicly available to facilitate further research on the use of deep visual representations in computer vision.

- We study how the depth of a convolutional network affects its accuracy in large-scale image recognition.

- Our main contribution is a thorough evaluation of progressively deeper networks built from 3×3 convolutions. The results show that pushing the depth to 16–19 weight layers gives a significant improvement over prior-art configurations.

- These findings were the basis of our ImageNet Challenge 2014 submission, where our team took first place in the localisation track and second place in the classification track.

- We also find that our representations generalise well to other datasets, where they achieve state-of-the-art results.

- We have released our two best-performing ConvNet models to support further research on deep visual representations in computer vision.

INTRODUCTION

Convolutional networks (ConvNets) have recently enjoyed a great success in large-scale image and video recognition (Krizhevsky et al., 2012; Zeiler & Fergus, 2013; Sermanet et al., 2014; Simonyan & Zisserman, 2014) which has become possible due to the large public image repositories, such as ImageNet (Deng et al., 2009), and high-performance computing systems, such as GPUs or large-scale distributed clusters (Dean et al., 2012). In particular, an important role in the advance of deep visual recognition architectures has been played by the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) (Russakovsky et al., 2014), which has served as a testbed for a few generations of large-scale image classification systems, from high-dimensional shallow feature encodings (Perronnin et al., 2010) (the winner of ILSVRC-2011) to deep ConvNets (Krizhevsky et al., 2012) (the winner of ILSVRC-2012).

- Convolutional networks have recently achieved great success in large-scale image and video recognition. Two things made this possible: (1) large public image repositories such as ImageNet (data), and (2) high-performance computing systems such as GPUs and large-scale distributed clusters (compute).

- In particular, the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) has played an important role in the development of deep visual recognition architectures. It has served as a testbed for several generations of large-scale image classification systems, from high-dimensional shallow feature encodings to deep ConvNets.

With ConvNets becoming more of a commodity in the computer vision field, a number of attempts have been made to improve the original architecture of Krizhevsky et al. (2012) in a bid to achieve better accuracy. For instance, the best-performing submissions to the ILSVRC-2013 (Zeiler & Fergus, 2013; Sermanet et al., 2014) utilised smaller receptive window size and smaller stride of the first convolutional layer. Another line of improvements dealt with training and testing the networks densely over the whole image and over multiple scales (Sermanet et al., 2014; Howard, 2014). In this paper, we address another important aspect of ConvNet architecture design – its depth. To this end, we fix other parameters of the architecture, and steadily increase the depth of the network by adding more convolutional layers, which is feasible due to the use of very small (3 × 3) convolution filters in all layers.

- As ConvNets become more of a commodity in computer vision, many attempts have been made to improve on the original architecture of Krizhevsky et al. (2012) for better accuracy. For example, the best-performing ILSVRC-2013 submissions used a smaller receptive window and a smaller stride in the first convolutional layer.

- Another line of improvement trained and tested the networks densely over the whole image and over multiple scales.

- This paper addresses another important aspect of ConvNet architecture design: depth. We fix the other architecture parameters and steadily increase the depth by adding convolutional layers, which is feasible because every layer uses very small 3×3 filters.

As a result, we come up with significantly more accurate ConvNet architectures, which not only achieve the state-of-the-art accuracy on ILSVRC classification and localisation tasks, but are also applicable to other image recognition datasets, where they achieve excellent performance even when used as a part of a relatively simple pipelines (e.g. deep features classified by a linear SVM without fine-tuning). We have released our two best-performing models to facilitate further research.

- As a result, we arrive at significantly more accurate ConvNet architectures. They achieve state-of-the-art accuracy on the ILSVRC classification and localisation tasks, and they also transfer to other image recognition datasets, where they perform excellently even as part of a relatively simple pipeline (for example, deep features classified by a linear SVM without fine-tuning).

- We have released our two best-performing models to facilitate further research.

The rest of the paper is organised as follows. In Sect. 2, we describe our ConvNet configurations. The details of the image classification training and evaluation are then presented in Sect. 3, and the configurations are compared on the ILSVRC classification task in Sect. 4. Sect. 5 concludes the paper. For completeness, we also describe and assess our ILSVRC-2014 object localisation system in Appendix A, and discuss the generalisation of very deep features to other datasets in Appendix B. Finally, Appendix C contains the list of major paper revisions.

- The rest of the paper is organised as follows: Sect. 2 describes our ConvNet configurations, Sect. 3 gives the details of image classification training and evaluation, Sect. 4 compares the configurations on the ILSVRC classification task, and Sect. 5 concludes the paper.

- For completeness, Appendix A describes and assesses our ILSVRC-2014 object localisation system, and Appendix B discusses how very deep features generalise to other datasets. Finally, Appendix C lists the major paper revisions.

CONVNET CONFIGURATIONS

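To measure the improvement brought by the increased ConvNet depth in a fair setting, all our ConvNet layer configurations are designed using the same principles, inspired by Ciresan et al. (2011); Krizhevsky et al. (2012). In this section, we first describe a generic layout of our ConvNet configurations (Sect. 2.1) and then detail the specific configurations used in the evaluation (Sect. 2.2). Our design choices are then discussed and compared to the prior art in Sect. 2.3.

- To measure the improvement brought by greater ConvNet depth in a fair setting, all our ConvNet layer configurations follow the same design principles, inspired by Ciresan et al. (2011) and Krizhevsky et al. (2012).

- In this section, Sect. 2.1 first describes the generic layout of our ConvNet configurations, Sect. 2.2 details the specific configurations used in the evaluation, and Sect. 2.3 discusses our design choices and compares them with the prior art.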

2.1 ARCHITECTURE

During training, the input to our ConvNets is a fixed-size 224 × 224 RGB image.

The only pre-processing we do is subtracting the mean RGB value, computed on the training set, from each pixel.

The image is passed through a stack of convolutional (conv.) layers, where we use filters with a very small receptive field: 3 × 3 (which is the smallest size to capture the notion of left/right, up/down, center).

In one of the configurations we also utilise 1 × 1 convolution filters, which can be seen as a linear transformation of the input channels (followed by non-linearity).

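The convolution stride is fixed to 1 pixel; the spatial padding of conv. layer input is such that the spatial resolution is preserved after convolution, i.e. the padding is 1 pixel for 3 × 3 conv. layers. Spatial pooling is carried out by five max-pooling layers, which follow some of the conv. layers (not all the conv. layers are followed by max-pooling). Max-pooling is performed over a 2 × 2 pixel window, with stride 2.

- During training, the input to the ConvNets is a fixed-size 224×224 RGB image.

- The only pre-processing is subtracting the mean RGB value, computed on the training set, from each pixel.

- The image passes through a stack of convolutional layers that use filters with a very small receptive field: 3×3, the smallest size that can capture the notion of left/right, up/down and centre.

- One of the configurations also uses 1×1 convolution filters, which can be seen as a linear transformation of the input channels (followed by a non-linearity).

- The convolution stride is fixed to 1 pixel, and the padding is chosen so that the spatial resolution is preserved after convolution: 1 pixel for 3×3 conv. layers. Spatial pooling is done by five max-pooling layers that follow some of the conv. layers (not every conv. layer is followed by max-pooling). Max-pooling uses a 2×2 pixel window with stride 2.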
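
The stride, padding and pooling settings above fix how the spatial resolution evolves through the network. The following is a minimal Python sketch of that arithmetic, assuming the usual floor-based output-size formula; the helper names `conv_out`, `pool_out` and `trace_spatial_size` are made up for illustration and are not from the paper.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output side length of a convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2, stride=2):
    """Output side length of an unpadded max-pooling layer."""
    return (size - window) // stride + 1


def trace_spatial_size(input_size=224, num_pools=5):
    """Side length of the feature map after each of the five pooling stages."""
    sizes = [input_size]
    size = input_size
    for _ in range(num_pools):
        size = conv_out(size)  # 3x3, stride 1, padding 1: resolution preserved
        size = pool_out(size)  # 2x2 window, stride 2: resolution halved
        sizes.append(size)
    return sizes


print(trace_spatial_size())  # [224, 112, 56, 28, 14, 7]
```

Because every 3×3 convolution preserves the resolution, only the five pooling layers shrink the map, so a 224×224 input ends as a 7×7 map regardless of how many conv. layers sit in each stage.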

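A stack of convolutional layers (which has a different depth in different architectures) is followed by three Fully-Connected (FC) layers: the first two have 4096 channels each, the third performs 1000-way ILSVRC classification and thus contains 1000 channels (one for each class). The final layer is the soft-max layer.

The configuration of the fully connected layers is the same in all networks.

- The stack of convolutional layers (whose depth differs between architectures) is followed by three fully-connected layers: the first two have 4096 channels each, the third has 1000 channels (one per ILSVRC class), and the final layer is a soft-max.

- The fully-connected configuration is the same in all networks (the authors propose several models, and they all share the same fully-connected head).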

All hidden layers are equipped with the rectification (ReLU (Krizhevsky et al., 2012)) non-linearity.

We note that none of our networks (except for one) contain Local Response Normalisation (LRN) normalisation (Krizhevsky et al., 2012): as will be shown in Sect. 4, such normalisation does not improve the performance on the ILSVRC dataset, but leads to increased memory consumption and computation time.

Where applicable, the parameters for the LRN layer are those of (Krizhevsky et al., 2012).

- All hidden layers use the ReLU non-linearity.

- None of the networks except one uses LRN. As Sect. 4 shows, LRN does not improve performance on the ILSVRC dataset, but it does increase memory consumption and computation time.

- Where LRN is used, its parameters are those of Krizhevsky et al. (2012).

2.2 CONFIGURATIONS

The ConvNet configurations, evaluated in this paper, are outlined in Table 1, one per column.

In the following we will refer to the nets by their names (A–E). All configurations follow the generic design presented in Sect. 2.1, and differ only in the depth: from 11 weight layers in the network A (8 conv. and 3 FC layers) to 19 weight layers in the network E (16 conv. and 3 FC layers).

The width of conv. layers (the number of channels) is rather small, starting from 64 in the first layer and then increasing by a factor of 2 after each max-pooling layer, until it reaches 512.

- The ConvNet configurations are outlined in Table 1, one configuration per column.

- From here on, the networks are referred to by their names, A–E.

- All configurations follow the generic design of Sect. 2.1 and differ only in depth: from 11 weight layers in network A (8 conv. and 3 FC layers) to 19 weight layers in network E (16 conv. and 3 FC layers).

- The width of the conv. layers (the number of channels) is rather small: it starts at 64 in the first layer and doubles after each max-pooling layer until it reaches 512.
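
The depth and width rules above can be written down compactly. The sketch below is illustrative only: the per-stage conv. counts for A and E are an assumption taken from Table 1 as it is commonly reproduced (the table itself is an image here), and the names `CONVS_PER_STAGE`, `stage_widths` and `weight_layers` are hypothetical.

```python
# Number of 3x3 conv. layers in each of the five stages; every stage ends
# with a max-pooling layer. ASSUMPTION: counts as commonly reproduced from
# Table 1 of the paper, not stated in the text above.
CONVS_PER_STAGE = {
    "A": [1, 1, 2, 2, 2],
    "E": [2, 2, 4, 4, 4],
}
NUM_FC_LAYERS = 3  # identical head in every configuration


def stage_widths(first=64, cap=512, num_stages=5):
    """Channel count per stage: start at 64, double after each pooling, cap at 512."""
    widths, width = [], first
    for _ in range(num_stages):
        widths.append(width)
        width = min(width * 2, cap)
    return widths


def weight_layers(name):
    """Total number of layers with learnable weights (conv. + FC)."""
    return sum(CONVS_PER_STAGE[name]) + NUM_FC_LAYERS


print(stage_widths())      # [64, 128, 256, 512, 512]
print(weight_layers("A"))  # 11
print(weight_layers("E"))  # 19
```

The printed totals match the 11 and 19 weight layers quoted for networks A and E.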

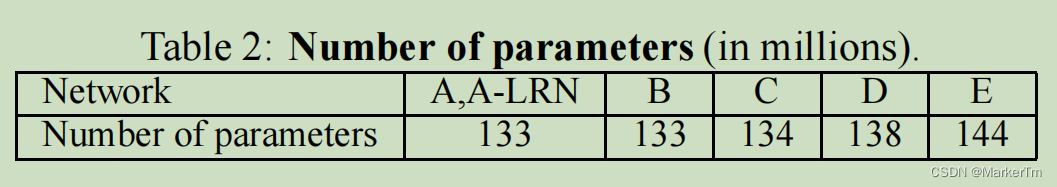

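Table 1: ConvNet configurations. The depth increases from A to E. Convolutional layer parameters are denoted as "conv⟨receptive field size⟩-⟨number of channels⟩". The ReLU activation is not shown for brevity.

In Table 2 we report the number of parameters for each configuration. In spite of a large depth, the number of weights in our nets is not greater than the number of weights in a more shallow net with larger conv. layer widths and receptive fields (144M weights in (Sermanet et al., 2014)).

- Table 2 reports the number of parameters for each configuration.

- Despite the large depth, the number of weights in our networks is no greater than in a shallower network with wider conv. layers and larger receptive fields (144M weights in Sermanet et al., 2014).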
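
The parameter totals can be recomputed from the architecture description as a sanity check. This is a rough sketch under stated assumptions: the per-stage layer counts for A and E are taken from Table 1 as commonly reproduced, biases are included in the count, and `count_parameters` is a hypothetical helper, not code from the paper.

```python
# ASSUMPTION: per-stage conv. counts as commonly reproduced from Table 1.
CONVS_PER_STAGE = {"A": [1, 1, 2, 2, 2], "E": [2, 2, 4, 4, 4]}
STAGE_WIDTHS = [64, 128, 256, 512, 512]


def count_parameters(convs_per_stage, in_channels=3, input_size=224,
                     num_classes=1000):
    """Weights plus biases of all 3x3 conv. layers and the three FC layers."""
    total = 0
    channels = in_channels
    size = input_size
    for num_convs, width in zip(convs_per_stage, STAGE_WIDTHS):
        for _ in range(num_convs):
            total += 3 * 3 * channels * width + width  # kernel weights + biases
            channels = width
        size //= 2  # 2x2 max-pooling with stride 2 closes the stage
    fc_in = channels * size * size  # 512 * 7 * 7 for a 224x224 input
    for fc_out in (4096, 4096, num_classes):
        total += fc_in * fc_out + fc_out
        fc_in = fc_out
    return total


for name, stages in CONVS_PER_STAGE.items():
    print(name, round(count_parameters(stages) / 1e6), "M")
# A 133 M
# E 144 M
```

Most of the weights sit in the first fully-connected layer (512·7·7·4096, roughly 103M), which is why going from 8 to 16 conv. layers adds only about 11M parameters.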

2.3 DISCUSSION

Our ConvNet configurations are quite different from the ones used in the top-performing entries of the ILSVRC-2012 (Krizhevsky et al., 2012) and ILSVRC-2013 competitions (Zeiler & Fergus, 2013; Sermanet et al., 2014).

Rather than using relatively large receptive fields in the first conv. layers (e.g. 11×11 with stride 4 in (Krizhevsky et al., 2012), or 7×7 with stride 2 in (Zeiler & Fergus, 2013; Sermanet et al., 2014)), we use very small 3 × 3 receptive fields throughout the whole net, which are convolved with the input at every pixel (with stride 1).

It is easy to see that a stack of two 3×3 conv. layers (without spatial pooling in between) has an effective receptive field of 5×5; three such layers have a 7 × 7 effective receptive field.

So what have we gained by using, for instance, a stack of three 3×3 conv. layers instead of a single 7×7 layer?

First, we incorporate three non-linear rectification layers instead of a single one, which makes the decision function more discriminative.

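Second, we decrease the number of parameters: assuming that both the input and the output of a three-layer 3 × 3 convolution stack has C channels, the stack is parametrised by $3(3^2C^2) = 27C^2$ weights; at the same time, a single 7 × 7 conv. layer would require $7^2C^2 = 49C^2$ parameters, i.e. 81% more. This can be seen as imposing a regularisation on the 7 × 7 conv. filters, forcing them to have a decomposition through the 3 × 3 filters (with non-linearity injected in between).

- Our ConvNet configurations are quite different from the top-performing entries of ILSVRC-2012 (Krizhevsky et al., 2012) and ILSVRC-2013 (Zeiler & Fergus, 2013; Sermanet et al., 2014).

- Rather than using relatively large receptive fields in the first conv. layers (for example 11×11 with stride 4, or 7×7 with stride 2), we use 3×3 receptive fields throughout the whole network, convolved with the input at every pixel (stride 1).

- It is easy to see that a stack of two 3×3 conv. layers (with no spatial pooling in between) has an effective receptive field of 5×5, and a stack of three has an effective receptive field of 7×7.

- So what is gained by using three 3×3 conv. layers instead of a single 7×7 layer?

- First, the stack contains three non-linear rectification layers instead of one, which makes the decision function more discriminative.

- Second, the number of parameters decreases. Assuming the input and output of the three-layer 3×3 stack both have C channels, the stack has $3(3^2C^2) = 27C^2$ weights, whereas a single 7×7 conv. layer needs $7^2C^2 = 49C^2$ weights, i.e. 81% more.

- This can be seen as imposing a regularisation on the 7×7 filters, forcing them to decompose into 3×3 filters (with non-linearities in between).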
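
The receptive-field and parameter arithmetic in this argument is easy to check numerically. The snippet below is a small sketch for stride-1 layers with no pooling in between; the function names and the channel count `C = 256` are illustrative choices, not values from the paper.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Effective receptive field of stride-1 conv. layers stacked without pooling."""
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel, channels, num_layers=1):
    """Weights (biases ignored) of conv. layers with C input and C output channels."""
    return num_layers * kernel * kernel * channels * channels


C = 256  # illustrative channel count; the ratio below does not depend on it
stack = conv_weights(3, C, num_layers=3)  # 27 * C^2
single = conv_weights(7, C)               # 49 * C^2
extra = (single - stack) / stack

print(stacked_receptive_field(2), stacked_receptive_field(3))  # 5 7
print(f"{extra:.0%}")  # 81%
```

The ratio 49/27 ≈ 1.81 is independent of C, so the 81% figure holds at every stage of the network.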

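The incorporation of 1 × 1 conv. layers (configuration C, Table 1) is a way to increase the nonlinearity of the decision function without affecting the receptive fields of the conv. layers. Even though in our case the 1 × 1 convolution is essentially a linear projection onto the space of the same dimensionality (the number of input and output channels is the same), an additional non-linearity is introduced by the rectification function. It should be noted that 1×1 conv. layers have recently been utilised in the “Network in Network” architecture of Lin et al. (2014).

- Using 1×1 conv. layers (configuration C in Table 1) is a way to increase the non-linearity of the decision function without affecting the receptive fields of the conv. layers.

- In our case the 1×1 convolution is essentially a linear projection onto a space of the same dimensionality (the numbers of input and output channels are equal), but the rectification function that follows it introduces an additional non-linearity.

- 1×1 conv. layers were recently used in the "Network in Network" architecture of Lin et al. (2014).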
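
To make the "linear projection plus rectification" reading concrete, here is a minimal pure-Python sketch of a 1×1 convolution followed by ReLU. It is illustrative only: biases are omitted, the feature map is a nested list, and `conv1x1_relu` is a hypothetical name.

```python
def conv1x1_relu(feature_map, weights):
    """Apply a 1x1 convolution followed by ReLU.

    feature_map: H x W x C_in nested lists.
    weights:     C_out x C_in matrix shared by every pixel (biases omitted).
    """
    output = []
    for row in feature_map:
        out_row = []
        for pixel in row:
            # The same matrix multiplies the channel vector at every position,
            # so neighbouring pixels never mix: the receptive field is unchanged.
            projected = [sum(w * x for w, x in zip(w_row, pixel))
                         for w_row in weights]
            out_row.append([max(0.0, value) for value in projected])
        output.append(out_row)
    return output


fmap = [[[1.0, -2.0], [0.5, 0.5]]]  # a 1x2 map with 2 channels
W = [[1.0, 1.0], [1.0, -1.0]]       # 2 output channels from 2 input channels
print(conv1x1_relu(fmap, W))  # [[[0.0, 3.0], [1.0, 0.0]]]
```

Without the `max(0.0, ...)` step the layer would be a plain per-pixel matrix product; the rectification is what adds the extra non-linearity.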

Small-size convolution filters have been previously used by Ciresan et al. (2011), but their nets are significantly less deep than ours, and they did not evaluate on the large-scale ILSVRC dataset.

Goodfellow et al. (2014) applied deep ConvNets (11 weight layers) to the task of street number recognition, and showed that the increased depth led to better performance.

GoogLeNet (Szegedy et al., 2014), a top-performing entry of the ILSVRC-2014 classification task, was developed independently of our work, but is similar in that it is based on very deep ConvNets (22 weight layers) and small convolution filters (apart from 3 × 3, they also use 1 × 1 and 5 × 5 convolutions).

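Their network topology is, however, more complex than ours, and the spatial resolution of the feature maps is reduced more aggressively in the first layers to decrease the amount of computation. As will be shown in Sect. 4.5, our model is outperforming that of Szegedy et al. (2014) in terms of the single-network classification accuracy.

- Ciresan et al. (2011) had already used small convolution filters, but their networks are significantly less deep than ours, and they did not evaluate on the large-scale ILSVRC dataset.

- Goodfellow et al. (2014) applied deep ConvNets (11 weight layers) to street number recognition and showed that greater depth led to better performance.

- GoogLeNet (Szegedy et al., 2014), a top-performing entry in the ILSVRC-2014 classification task, was developed independently of our work, but is similar in that it is based on very deep ConvNets (22 weight layers) and small convolution filters (besides 3×3, it also uses 1×1 and 5×5 convolutions).

- Their network topology is more complex than ours, and the spatial resolution of the feature maps is reduced more aggressively in the first layers to cut computation. As Sect. 4.5 shows, our model outperforms that of Szegedy et al. (2014) in single-network classification accuracy.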

3 CLASSIFICATION FRAMEWORK

In the previous section we presented the details of our network configurations. In this section, we describe the details of classification ConvNet training and evaluation.

The previous section presented the details of the network configurations; this section describes how the classification ConvNets are trained and evaluated.

3.1 TRAINING

The ConvNet training procedure generally follows Krizhevsky et al. (2012) (except for sampling the input crops from multi-scale training images, as explained later). Namely, the training is carried out by optimising the multinomial logistic regression objective using mini-batch gradient descent (based on back-propagation (LeCun et al., 1989)) with momentum. The batch size was set to 256, momentum to 0.9. The training was regularised by weight decay (the L2 penalty multiplier set to $5 \cdot 10^{-4}$) and dropout regularisation for the first two fully-connected layers (dropout ratio set to 0.5).

The learning rate was initially set to $10^{-2}$, and then decreased by a factor of 10 when the validation set accuracy stopped improving. In total, the learning rate was decreased 3 times, and the learning was stopped after 370K iterations (74 epochs). We conjecture that in spite of the larger number of parameters and the greater depth of our nets compared to (Krizhevsky et al., 2012), the nets required less epochs to converge due to (a) implicit regularisation imposed by greater depth and smaller conv. filter sizes; (b) pre-initialisation of certain layers.

- The training procedure generally follows Krizhevsky et al. (2012), except that the input crops are sampled from multi-scale training images, as explained later.

- Training optimises the multinomial logistic regression objective using mini-batch gradient descent with momentum (based on back-propagation).

- The batch size is 256 and the momentum is 0.9. Training is regularised by weight decay (L2 penalty multiplier $5 \cdot 10^{-4}$) and by dropout with ratio 0.5 on the first two fully-connected layers. The learning rate starts at $10^{-2}$ and is divided by 10 whenever the validation accuracy stops improving.

- In total the learning rate was decreased three times, and learning was stopped after 370K iterations (74 epochs).

- We conjecture that, although our networks have more parameters and greater depth than that of Krizhevsky et al. (2012), they need fewer epochs to converge because of (a) the implicit regularisation imposed by greater depth and smaller conv. filters, and (b) the pre-initialisation of certain layers.
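
The hyperparameters above can be collected into a short sketch of the update rule and learning-rate schedule. This is a simplified illustration, not the authors' implementation: it updates a single scalar weight, it folds weight decay into the gradient, and it assumes the rate is dropped as soon as one validation result fails to improve (the text does not specify how "stopped improving" was detected). The names `sgd_momentum_step` and `PlateauSchedule` are hypothetical.

```python
def sgd_momentum_step(w, v, grad, lr, momentum=0.9, weight_decay=5e-4):
    """One momentum-SGD update for a scalar weight; returns (new_w, new_v)."""
    v = momentum * v - lr * (grad + weight_decay * w)  # L2 penalty enters as decay
    return w + v, v


class PlateauSchedule:
    """Divide the learning rate by `factor` when validation accuracy stalls."""

    def __init__(self, lr=1e-2, factor=10.0, max_drops=3):
        self.lr = lr
        self.factor = factor
        self.max_drops = max_drops
        self.drops = 0
        self.best = float("-inf")

    def update(self, val_accuracy):
        """Call after each validation pass; returns the learning rate to use next."""
        if val_accuracy > self.best:
            self.best = val_accuracy
        elif self.drops < self.max_drops:
            # ASSUMPTION: no patience window, drop on the first stalled result.
            self.lr /= self.factor
            self.drops += 1
        return self.lr


# Sanity check on the quoted schedule: iterations * batch size / epochs
images_per_epoch = 370_000 * 256 // 74
print(images_per_epoch)  # 1280000
```

The last line shows that 370K iterations at batch size 256 over 74 epochs corresponds to about 1.28M images per epoch.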

The initialisation of the network weights is important, since bad initialisation can stall learning due to the instability of gradient in deep nets. To circumvent this problem, we began with training the configuration A (Table 1), shallow enough to be trained with random initialisation. Then, when training deeper architectures, we initialised the first four convolutional layers and the last three fully-connected layers with the layers of net A (the intermediate layers were initialised randomly). We did not decrease the learning rate for the pre-initialised layers, allowing them to change during learning. For random initialisation (where applicable), we sampled the weights from a normal distribution with the zero mean and $10^{-2}$ variance. The biases were initialised with zero. It is worth noting that after the paper submission we found that it is possible to initialise the weights without pre-training by using the random initialisation procedure of Glorot & Bengio (2010).

- Weight initialisation matters, because in deep networks the gradient is unstable and a bad initialisation can stall learning.

- To get around this, we first trained configuration A (Table 1), which is shallow enough to be trained from random initialisation.

- When training the deeper architectures, we initialised their first four convolutional layers and their last three fully-connected layers with the corresponding layers of net A; the intermediate layers were initialised randomly.

- We did not lower the learning rate for the pre-initialised layers, so they were free to change during learning ("they" here refers to the pre-initialised layers).

- Randomly initialised weights were sampled from a normal distribution with zero mean and variance $10^{-2}$.

- The biases were initialised to zero.

- It is worth noting that, after the paper was submitted, we found that the weights can be initialised without pre-training by using the random initialisation procedure of Glorot & Bengio (2010).
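
The random part of this initialisation can be sketched in a few lines of standard-library Python. The one detail worth spelling out is that the text gives a variance, while most samplers take a standard deviation, so $10^{-2}$ variance means a standard deviation of 0.1. The helper names and the fixed seed are illustrative assumptions.

```python
import math
import random


def random_weights(num_weights, variance=1e-2, seed=0):
    """Sample weights from a zero-mean normal distribution with the given variance."""
    rng = random.Random(seed)  # seeded only to make the sketch reproducible
    std = math.sqrt(variance)  # variance 1e-2 -> standard deviation 0.1
    return [rng.gauss(0.0, std) for _ in range(num_weights)]


def zero_biases(num_biases):
    """Biases start at zero."""
    return [0.0] * num_biases


weights = random_weights(20000)
mean = sum(weights) / len(weights)
std = math.sqrt(sum((w - mean) ** 2 for w in weights) / len(weights))
print(len(weights), zero_biases(3))
```

With 20,000 samples the empirical mean should sit close to 0 and the empirical standard deviation close to 0.1. Copying layers from net A into a deeper network is left out here, since the text does not say exactly how the layers are matched.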

To obtain the fixed-size 224×224 ConvNet input images, they were randomly cropped from rescaled training images (one crop per image per SGD iteration). To further augment the training set, the crops underwent random horizontal flipping and random RGB colour shift (Krizhevsky et al., 2012). Training image rescaling is explained below.

- To obtain the fixed-size 224×224 ConvNet inputs, we randomly crop them from rescaled training images (one crop per image per SGD iteration).

- To further augment the training set, the crops undergo random horizontal flipping and random RGB colour shift (Krizhevsky et al., 2012).

- Training image rescaling is explained below.
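
The cropping and flipping steps can be sketched without any image library. This is an illustrative sketch under stated assumptions: the image is a nested list of pixels, the flip probability is taken to be 0.5 (the text only says "random"), the RGB colour shift is left out, and `random_crop_and_flip` is a hypothetical name.

```python
import random


def random_crop_and_flip(image, crop=224, rng=random):
    """Return a random crop x crop patch, mirrored horizontally half the time.

    image: H x W nested list of pixels with H >= crop and W >= crop.
    rng:   any object with randint() and random(), e.g. random.Random(seed).
    """
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop)   # inclusive bounds
    left = rng.randint(0, width - crop)
    patch = [row[left:left + crop] for row in image[top:top + crop]]
    if rng.random() < 0.5:                # ASSUMPTION: flip probability 0.5
        patch = [row[::-1] for row in patch]
    return patch


# Toy "image" whose pixels record their own (row, column) position
image = [[(r, c) for c in range(300)] for r in range(256)]
patch = random_crop_and_flip(image, rng=random.Random(0))
print(len(patch), len(patch[0]))  # 224 224
```

Drawing a fresh crop for every image at every SGD iteration means the network rarely sees exactly the same input twice.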

Training image size. Let S be the smallest side of an isotropically-rescaled training image, from which the ConvNet input is cropped (we also refer to S as the training scale). While the crop size is fixed to 224 × 224, in principle S can take on any value not less than 224: for S = 224 the crop will capture whole-image statistics, completely spanning the smallest side of a training image; for S ≫ 224 the crop will correspond to a small part of the image, containing a small object or an object part.
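
The role of the training scale S is easiest to see with numbers. The sketch below computes the size of an isotropically rescaled image and the fraction of its smallest side that a 224-pixel crop spans; both helper names are hypothetical, and rounding to the nearest pixel is an assumption.

```python
def isotropic_rescale_size(width, height, S, crop=224):
    """Size after rescaling so that the smallest side equals S (aspect ratio kept)."""
    if S < crop:
        raise ValueError("S must be at least the crop size")
    scale = S / min(width, height)
    # ASSUMPTION: round to the nearest pixel
    return round(width * scale), round(height * scale)


def crop_coverage(S, crop=224):
    """Fraction of the smallest image side covered by the crop."""
    return crop / S


print(isotropic_rescale_size(640, 480, 384))  # (512, 384)
print(crop_coverage(224))                     # 1.0
print(crop_coverage(448))                     # 0.5
```

At S = 224 the crop spans the whole smallest side, while at S = 448 it spans only half of it, so larger S makes each crop show a smaller part of the scene.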

We consider two approaches for setting the training scale S. The first is to fix S, which corresponds to single-scale training (note that image content within the sampled crops can still represent multi-scale image statistics). In our experiments, we evaluated models trained at two fixed scales: S = 256 (which has been widely used in the prior art (Krizhevsky et al., 2012; Zeiler & Fergus, 2013; Sermanet et al., 2014)) and S = 384. Given a ConvNet configuration, we first trained the network using S = 256. To speed-up training of the S = 384 network, it was initialised with the weights pre-trained with S = 256, and we used a smaller initial learning rate of $10^{-3}$.

- Original article: https://blog.csdn.net/weixin_42382758/article/details/126240884