
Hadoop Pseudo-Distributed Setup Tutorial (Beginner's Guide)

Prerequisites

- You need to know basic Linux commands, such as how to use vim

Recommended tutorial: https://blog.csdn.net/weixin_55305220/article/details/123588501

Preparation

- This guide uses a freshly installed CentOS image

Downloads:

JDK: https://www.jianguoyun.com/p/DaJ9OJ0Q7uH1ChiJr9cEIAA

Hadoop: https://www.jianguoyun.com/p/DdSqSrkQ7uH1ChiHr9cEIAA

- Configure the network first

Recommended network configuration tutorial: https://blog.csdn.net/qq_41474121/article/details/108929640

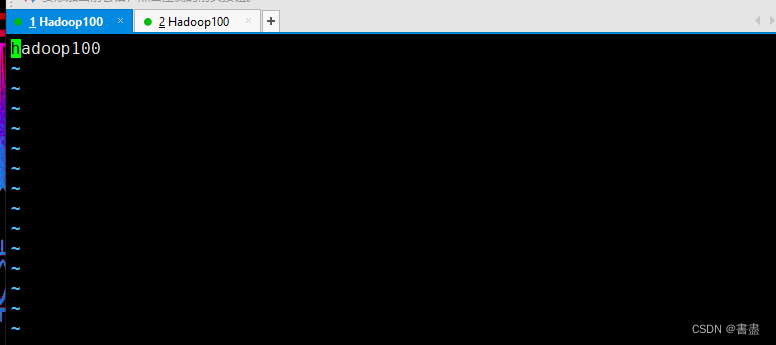

- Change the hostname (Xshell is used here to connect to the VM)

    vim /etc/hostname

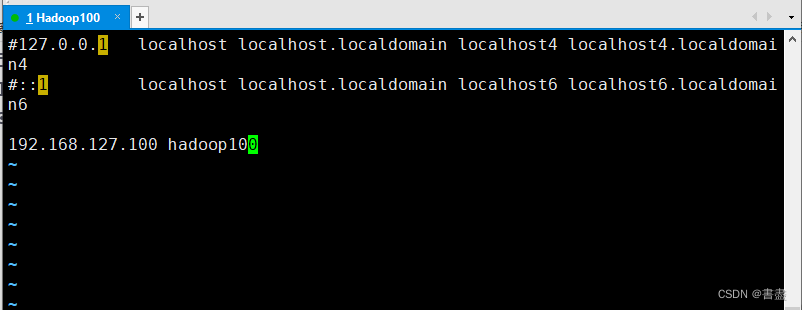

    # Edit the IP-to-hostname mapping
    vim /etc/hosts

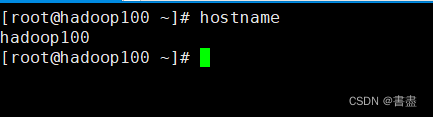
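The hosts edit above can also be scripted so that re-running it does not add duplicate lines. This is a minimal sketch, assuming the IP `192.168.127.100` and hostname `hadoop100` used elsewhere in this tutorial; `add_host_mapping` is a hypothetical helper that takes the hosts file as an argument, so it can be tried on a scratch copy before touching `/etc/hosts`.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts-format file unless
# some non-comment line already lists that hostname as one of its names.
add_host_mapping() {
    local ip="$1" name="$2" file="$3"
    # Skip comment lines; compare the hostname against every name field (2..NF)
    if awk -v n="$name" '/^[[:space:]]*#/ {next}
        {for (i = 2; i <= NF; i++) if ($i == n) found = 1}
        END {exit !found}' "$file"; then
        echo "mapping for $name already present in $file"
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage (as root): `add_host_mapping 192.168.127.100 hadoop100 /etc/hosts`. On CentOS 7, `hostnamectl set-hostname hadoop100` sets the hostname without editing `/etc/hostname` by hand.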

- Reboot

    # Reboot the VM
    reboot
    # Check the hostname
    hostname

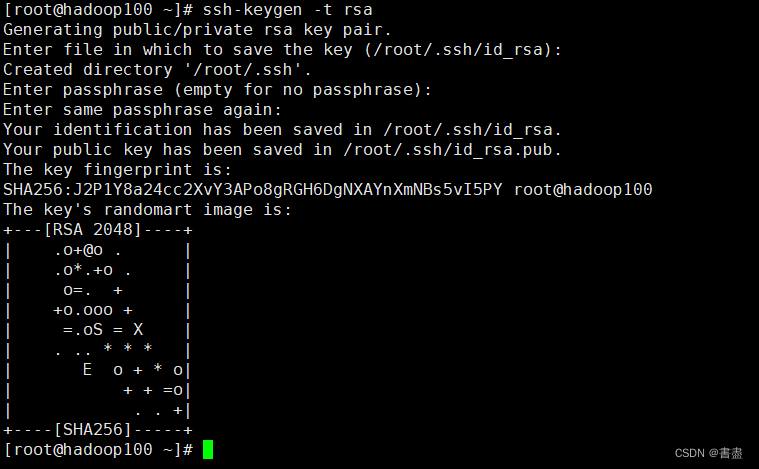

Passwordless SSH login

    # Just press Enter three times
    ssh-keygen -t rsa

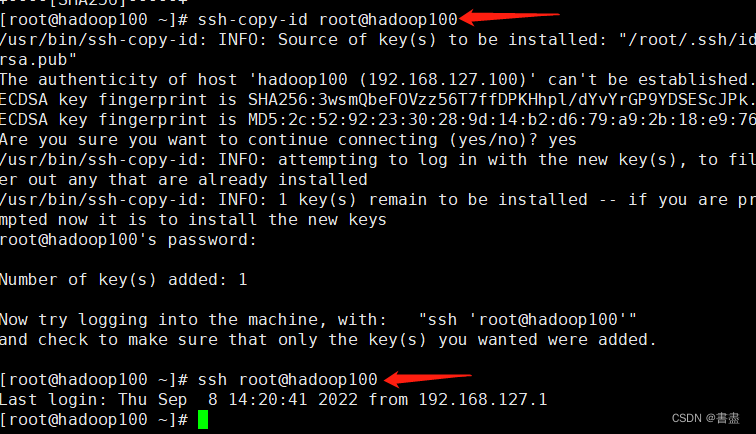

    ssh-copy-id root@hadoop100
    # Test SSH
    ssh root@hadoop100

If you can log in without being asked for a password, the configuration is complete.

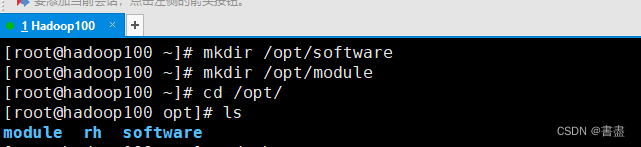
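On this single-node setup, `ssh-copy-id root@hadoop100` essentially appends your public key to root's own `~/.ssh/authorized_keys`. For reference, here is a rough local sketch of that step; `authorize_local_key` is a hypothetical helper, it assumes the default key path `~/.ssh/id_rsa.pub` created by `ssh-keygen -t rsa`, and it is not a replacement for `ssh-copy-id` when the target is a different host.

```shell
# Hypothetical helper: add a public key to SSH_DIR/authorized_keys once,
# then apply the permissions sshd expects (700 on the dir, 600 on the file).
authorize_local_key() {
    local pubkey="$1" ssh_dir="$2"
    local auth="$ssh_dir/authorized_keys"
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"
    touch "$auth"
    # -x: whole-line match, -F: fixed strings, -f: read the key line as the pattern
    if ! grep -qxF -f "$pubkey" "$auth"; then
        cat "$pubkey" >> "$auth"
    fi
    chmod 600 "$auth"
}
```

Usage: `authorize_local_key ~/.ssh/id_rsa.pub ~/.ssh`. If you prefer not to press Enter three times, `ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa` creates the same key non-interactively with an empty passphrase.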

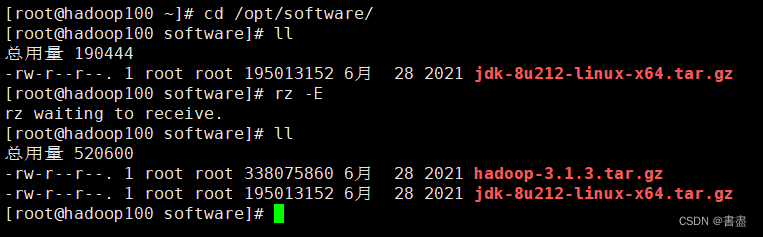

    # Create two directories
    mkdir /opt/software  # for installation packages
    mkdir /opt/module    # for extracted components

JDK configuration

- Uninstall the bundled JDK first

The system ships with OpenJDK; remove it before installing the new JDK.

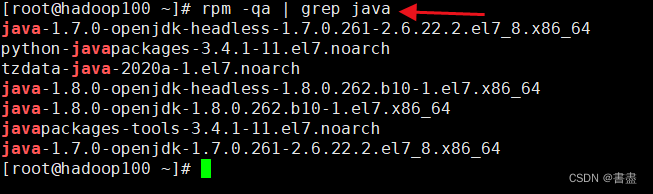

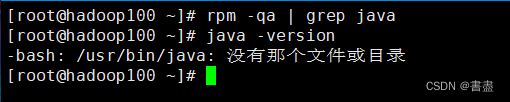

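    # List the bundled JDK packages
    rpm -qa | grep java

    # Uninstall the packages one by one with this command:
    rpm -e --nodeps <package-name>
    # For example:
    rpm -e --nodeps java-1.7.0-openjdk-headless-1.7.0.261-2.6.22.2.el7_8.x86_64
    ......
    # Output like the following means it worked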

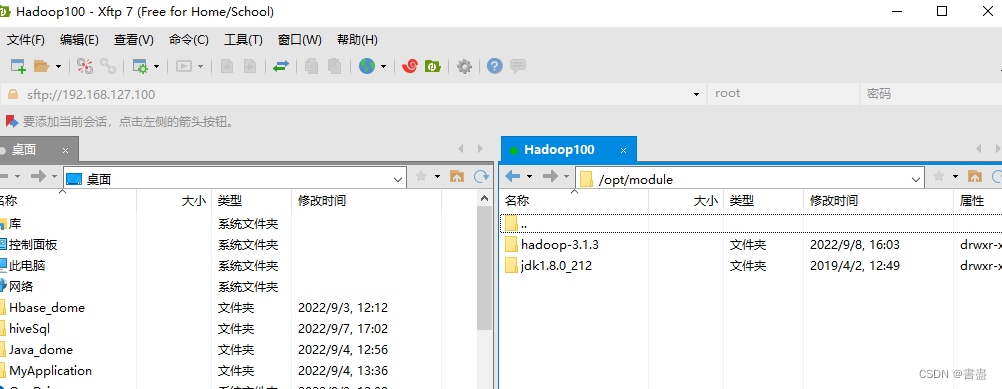
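Instead of copying each package name by hand, the package list can be piped straight into `rpm -e --nodeps`. This is a minimal sketch: `openjdk_packages` is a hypothetical filter that keeps only `java-<version>-openjdk*` packages, which is narrower than `grep java` (it leaves packages such as `tzdata-java` alone), and `remove_openjdk` is a hypothetical wrapper. Check the filter's output before removing anything.

```shell
# Hypothetical filter: read package names (one per line) on stdin and
# print only the OpenJDK ones, e.g. java-1.8.0-openjdk-headless-...
openjdk_packages() {
    grep -E '^java-[0-9][0-9.]*-openjdk'
}

# Remove every installed OpenJDK package without dependency checks,
# mirroring the manual "rpm -e --nodeps <package-name>" step. Run as root.
remove_openjdk() {
    rpm -qa | openjdk_packages | xargs -r rpm -e --nodeps
}
```

A dry run is `rpm -qa | openjdk_packages`; once the list looks right, `remove_openjdk` performs the removal.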

Upload the package with Xftp

Alternatively, drag the file from Windows directly into the Xshell window.

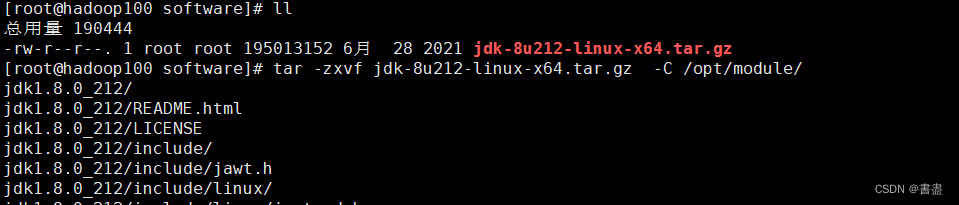

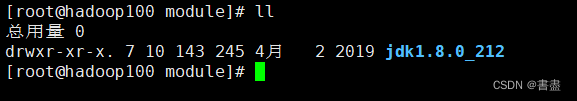

- Extract

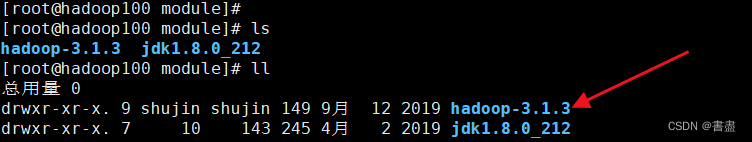

    tar -zxvf jdk-8u212-linux-x64.tar.gz -C /opt/module/

- Configure the environment variables

    vim /etc/profile
    # Append the following lines
    export JAVA_HOME=/opt/module/jdk1.8.0_212
    export PATH=$PATH:$JAVA_HOME/bin

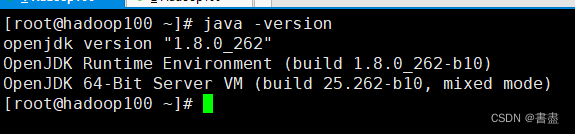

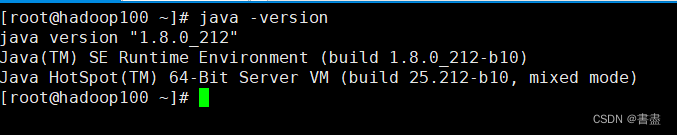

    # After saving, reload the environment variables
    source /etc/profile
    # Check the JDK; the output should look like the screenshot above
    java -version
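Running the vim edit twice leaves duplicate lines in `/etc/profile`. Below is a small sketch that appends each line only once; `append_once` and `configure_java_profile` are hypothetical helpers, and the JDK path is the one used above (`/opt/module/jdk1.8.0_212`).

```shell
# Hypothetical helper: append LINE to FILE only if FILE has no identical line.
append_once() {
    local file="$1" line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Write the JDK variables into a profile file. The single quotes keep
# $PATH and $JAVA_HOME unexpanded in the file, so they expand when it is sourced.
configure_java_profile() {
    local profile="$1" jdk_dir="$2"
    append_once "$profile" "export JAVA_HOME=$jdk_dir"
    append_once "$profile" 'export PATH=$PATH:$JAVA_HOME/bin'
}
```

Usage (as root): `configure_java_profile /etc/profile /opt/module/jdk1.8.0_212`, then `source /etc/profile && java -version`.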

Hadoop configuration

- Upload the archive

- Extract

    tar -zxvf hadoop-3.1.3.tar.gz -C /opt/module/

- Edit the configuration files

    cd /opt/module/hadoop-3.1.3/etc/hadoop/
    # Edit hadoop-env.sh
    vim hadoop-env.sh
    # and add this line:
    export JAVA_HOME=/opt/module/jdk1.8.0_212

Make sure the JDK path is correct: it must match the directory the JDK was extracted to.
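Because a wrong `JAVA_HOME` in `hadoop-env.sh` only shows up later as a startup error, it can help to check the path before writing it. This is a sketch that assumes a valid JDK directory contains an executable `bin/java`; `set_hadoop_java_home` is a hypothetical helper that appends the export line to the end of the file only after that check passes.

```shell
# Hypothetical helper: write JAVA_HOME into hadoop-env.sh only if
# <jdk_dir>/bin/java exists and is executable.
set_hadoop_java_home() {
    local env_file="$1" jdk_dir="$2"
    if [ ! -x "$jdk_dir/bin/java" ]; then
        echo "error: $jdk_dir/bin/java not found; check the JDK path" >&2
        return 1
    fi
    # Append only if the exact line is not already present
    grep -qxF "export JAVA_HOME=$jdk_dir" "$env_file" 2>/dev/null \
        || echo "export JAVA_HOME=$jdk_dir" >> "$env_file"
}
```

Usage: `set_hadoop_java_home /opt/module/hadoop-3.1.3/etc/hadoop/hadoop-env.sh /opt/module/jdk1.8.0_212`.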

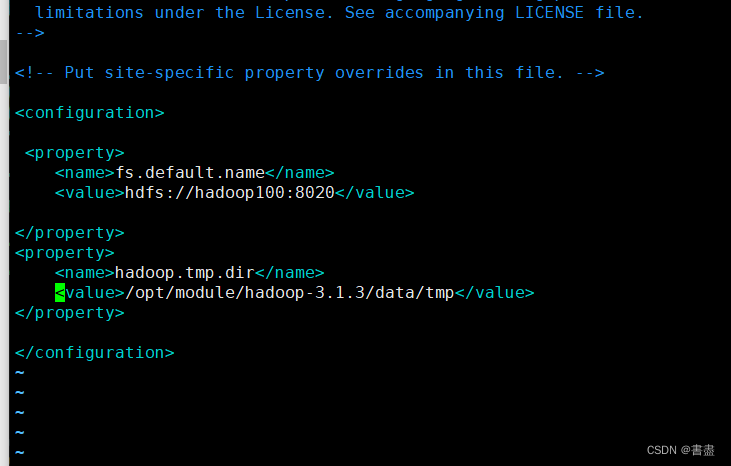

    # Edit core-site.xml
    vim core-site.xml

    <configuration>
        <property>
            <name>fs.default.name</name>
            <value>hdfs://hadoop100:8020</value>
        </property>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>/opt/module/hadoop-3.1.3/data/tmp</value>
        </property>
    </configuration>

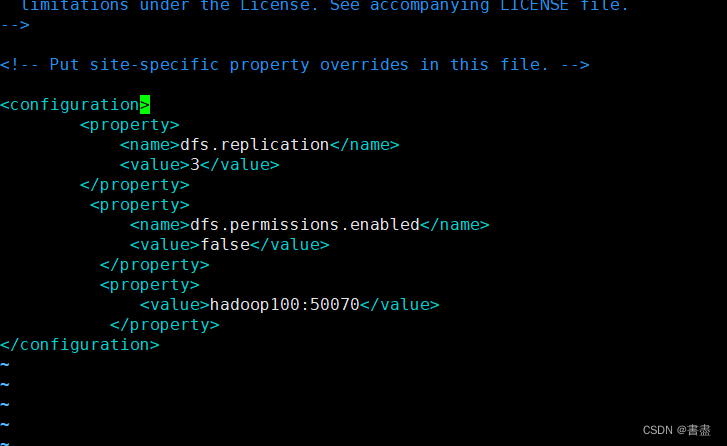
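The same file can be written without an editor using a heredoc, which is convenient when repeating the setup. This is a sketch assuming the hostname `hadoop100`, port 8020 and the tmp directory shown above; `write_core_site` is a hypothetical helper. It uses `fs.defaultFS`, the current name of the deprecated `fs.default.name` key; Hadoop 3.1.3 accepts either. Note that it overwrites the existing file, so keep a backup if needed.

```shell
# Hypothetical helper: write core-site.xml into CONF_DIR.
# Arguments: conf dir, NameNode host, NameNode RPC port, hadoop.tmp.dir path.
write_core_site() {
    local conf_dir="$1" host="$2" port="$3" tmp_dir="$4"
    # Unquoted EOF so that $host, $port and $tmp_dir are expanded
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://$host:$port</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>$tmp_dir</value>
    </property>
</configuration>
EOF
}
```

Usage: `write_core_site /opt/module/hadoop-3.1.3/etc/hadoop hadoop100 8020 /opt/module/hadoop-3.1.3/data/tmp`.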

    # Edit hdfs-site.xml
    vim hdfs-site.xml

    <configuration>
        <!-- Pseudo-distributed mode runs a single DataNode, so use 1 replica -->
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.permissions.enabled</name>
            <value>false</value>
        </property>
        <!-- NameNode web UI address -->
        <property>
            <name>dfs.namenode.http-address</name>
            <value>hadoop100:9870</value>
        </property>
    </configuration>

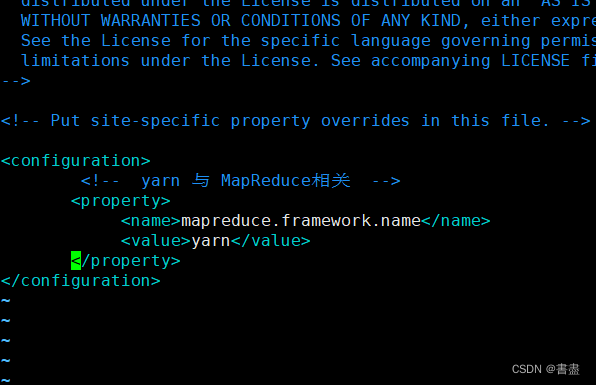
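A `<property>` block that is missing its `<name>` line is easy to overlook when typing these files by hand. The following is a rough heuristic check, not a real XML validator: it counts `<property>` tags against `<name>` tags and reports a mismatch. `check_property_names` is a hypothetical helper.

```shell
# Hypothetical heuristic: each <property> in a Hadoop *-site.xml file should
# contain one <name>. gsub(re, "&") counts matches without changing the line.
check_property_names() {
    local file="$1" props names
    props=$(awk '{n += gsub(/<property>/, "&")} END {print n + 0}' "$file")
    names=$(awk '{n += gsub(/<name>/, "&")} END {print n + 0}' "$file")
    if [ "$props" -ne "$names" ]; then
        echo "$file: $props <property> tags but $names <name> tags" >&2
        return 1
    fi
}
```

Usage: `for f in /opt/module/hadoop-3.1.3/etc/hadoop/*-site.xml; do check_property_names "$f"; done`. Tags inside XML comments are also counted, so treat a mismatch as a hint to look, not as proof of an error.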

    # Edit mapred-site.xml
    vim mapred-site.xml

    <configuration>
        <!-- YARN and MapReduce related -->
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
    </configuration>

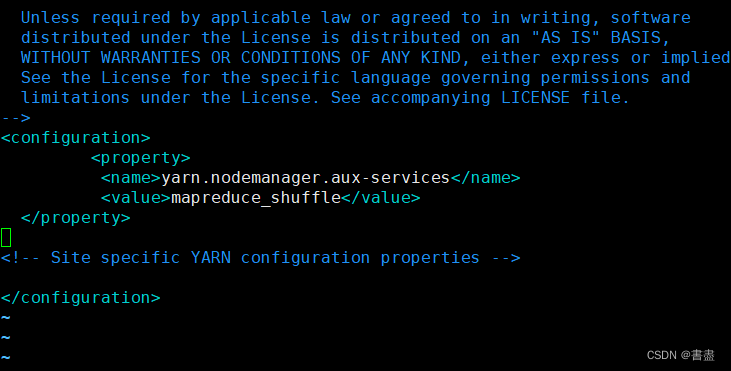

    # Edit yarn-site.xml
    vim yarn-site.xml

    <configuration>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
    </configuration>

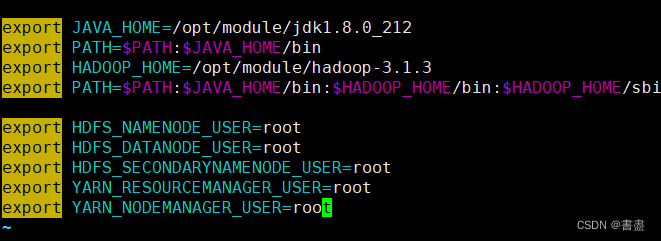

- Configure the Hadoop environment variables

    vim /etc/profile

    # Append the following lines
    export HADOOP_HOME=/opt/module/hadoop-3.1.3
    export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
    export HDFS_NAMENODE_USER=root
    export HDFS_DATANODE_USER=root
    export HDFS_SECONDARYNAMENODE_USER=root
    export YARN_RESOURCEMANAGER_USER=root
    export YARN_NODEMANAGER_USER=root

    # Reload the environment variables
    source /etc/profile
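When run as root, Hadoop 3's start scripts refuse to start a daemon unless the matching `*_USER` variable is defined, which is why the five `*_USER` exports above are needed. Here is a small sketch that reports any of them still unset after `source /etc/profile`; `check_hadoop_user_vars` is a hypothetical helper.

```shell
# Hypothetical check: report every required *_USER variable that is unset or empty.
check_hadoop_user_vars() {
    local var value missing=0
    for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
               YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
        # Indirect lookup: read the variable whose name is stored in $var
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `source /etc/profile && check_hadoop_user_vars && echo "all set"`.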

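- Verify that the environment variables took effect

    hadoop version

- Format the NameNode

    # Deprecated form in Hadoop 3; the current equivalent is: hdfs namenode -format
    hadoop namenode -format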

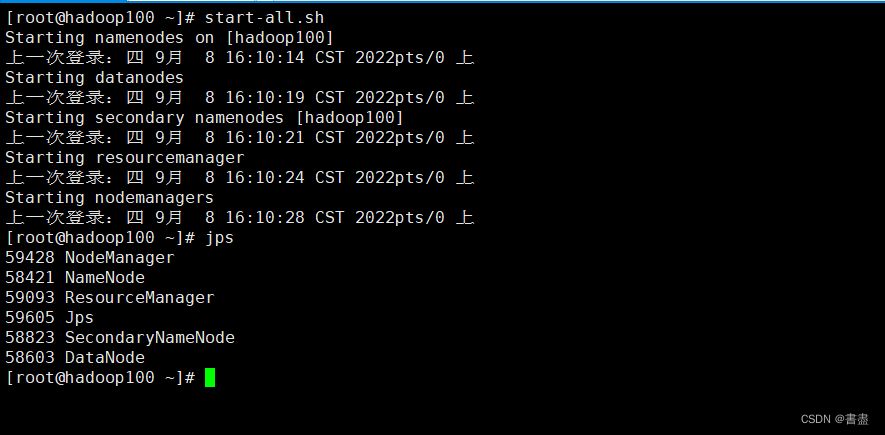
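Formatting should normally be done only once: each format generates a new clusterID, and a DataNode that still holds data from the old one will fail to start. Below is a minimal guard sketch, assuming the default `dfs.namenode.name.dir`, which resolves to `${hadoop.tmp.dir}/dfs/name` (with the core-site.xml above, `/opt/module/hadoop-3.1.3/data/tmp/dfs/name`). `namenode_formatted` and `format_namenode_once` are hypothetical helpers.

```shell
# Hypothetical check: a formatted NameNode directory contains current/VERSION.
namenode_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Hypothetical guard: format only if the metadata directory is not initialised yet.
format_namenode_once() {
    local name_dir="$1"
    if namenode_formatted "$name_dir"; then
        echo "NameNode already formatted in $name_dir; skipping" >&2
        return 0
    fi
    hdfs namenode -format
}
```

Usage: `format_namenode_once /opt/module/hadoop-3.1.3/data/tmp/dfs/name`.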

- Commands to start and stop Hadoop

    start-all.sh  # start
    stop-all.sh   # stop

    # Run jps to list the running Java processes
    jps

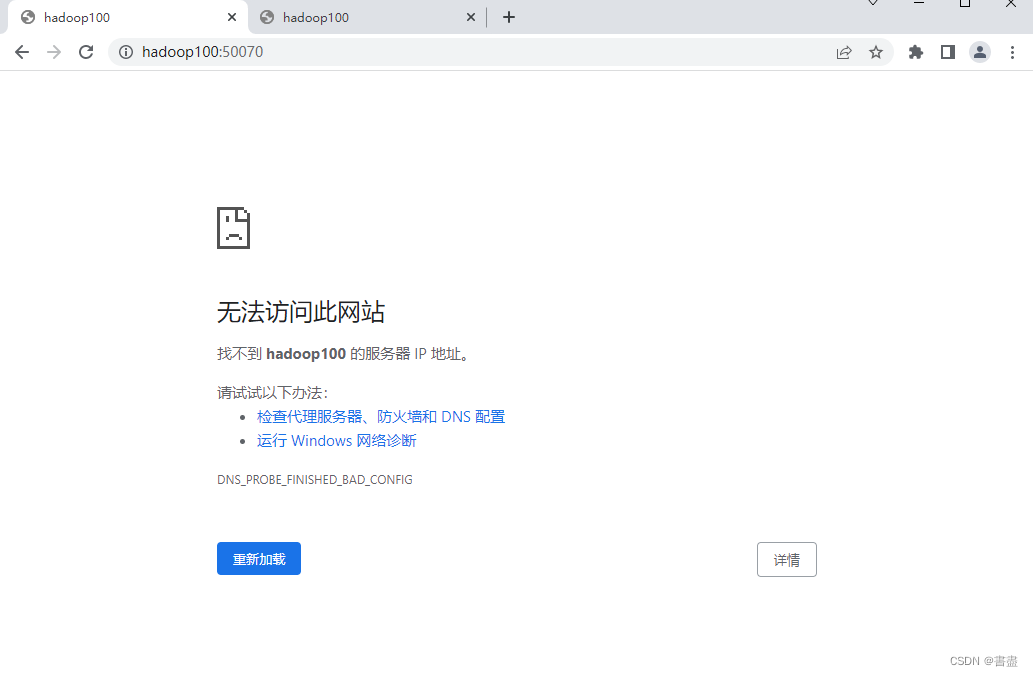
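After `start-all.sh`, this pseudo-distributed setup should show five Hadoop daemons in `jps`: NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. The following sketch reads `jps` output on stdin and prints any of them that are missing; `missing_daemons` is a hypothetical helper.

```shell
# Hypothetical check: read `jps` output (lines like "12345 NameNode") on stdin
# and print each expected daemon that does not appear as a process name.
missing_daemons() {
    awk '
        { seen[$2] = 1 }
        END {
            n = split("NameNode DataNode SecondaryNameNode ResourceManager NodeManager", want, " ")
            for (i = 1; i <= n; i++)
                if (!(want[i] in seen)) print want[i]
        }'
}
```

Usage: `jps | missing_daemons`; empty output means all five daemons are running.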

注意自己的IP地址,这里得使用自己的IP

http://192.168.127.100:9870 访问 hdfs

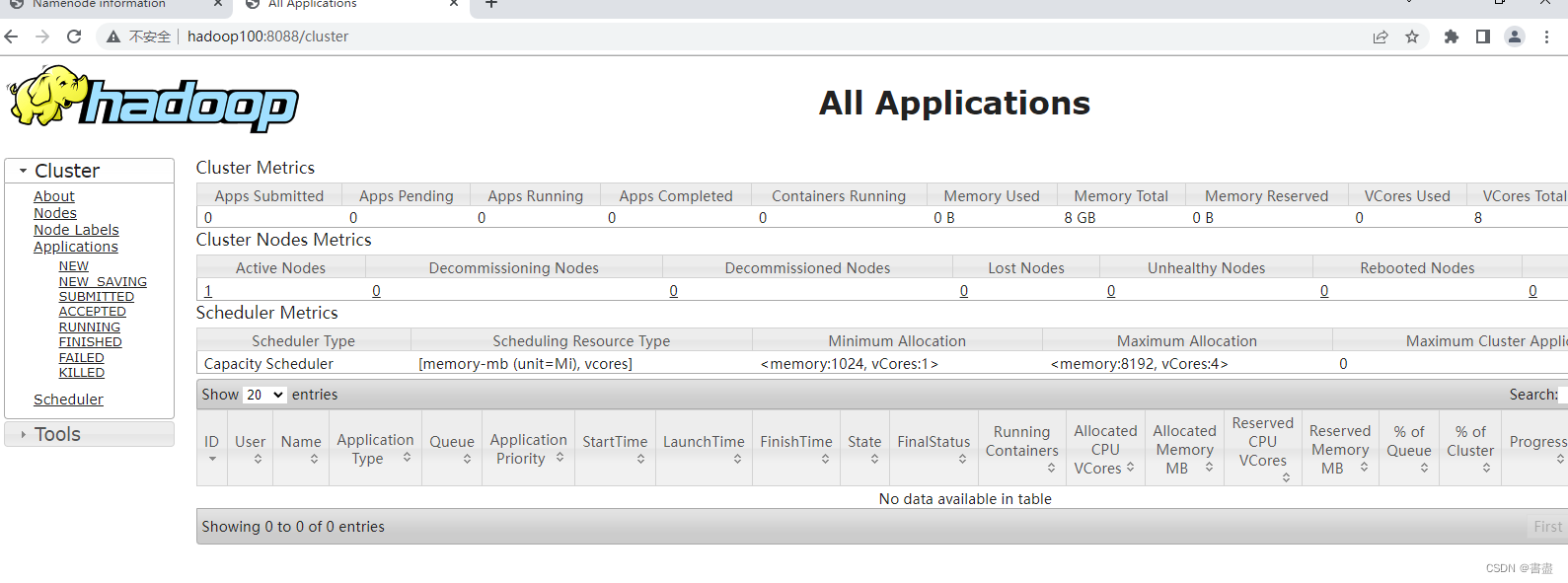

http://192.168.127.100:8088 访问 yarn- 如打开游览器无法查看

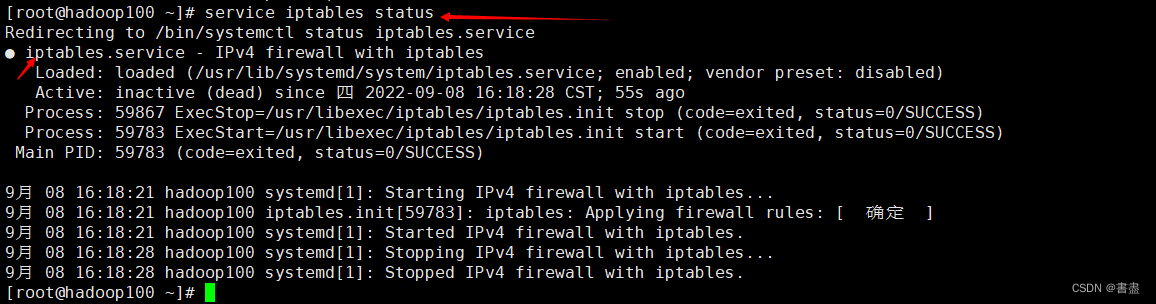

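- Check whether the firewall is turned off

    # iptables method
    # Check the firewall status
    service iptables status
    # Stop the firewall
    service iptables stop

    # firewalld method (the default on CentOS 7)
    # Start
    systemctl start firewalld
    # Check the status
    systemctl status firewalld
    # Disable, so it does not start at boot
    systemctl disable firewalld
    # Stop
    systemctl stop firewalld
    # Reload the rules
    firewall-cmd --reload

- The firewall is now off

- Open the pages in the browser again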

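Summary

- The network must be configured correctly

- There must be no trailing spaces after the hostname

- Be careful and pay attention to detail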


- Original article: https://blog.csdn.net/weixin_51309151/article/details/126762995