-

CNN经典架构

前言

近几年来,我们见证了无数CNN的诞生,本篇文章介绍了CNN的5种经典架构:

- LeNet

- AlexNet

- VGGNet(VGG-16)

- ResNet

- GoogLeNet

MNIST数据集手写数字识别请移步:PyTorch实现MNIST数据集手写数字识别

以下面案例可供参考一、LeNet

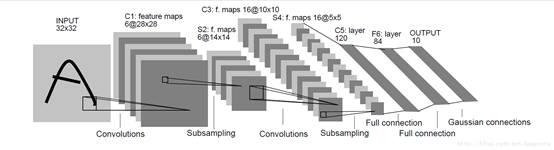

在 LeNet 中,第一个卷积池化层就像是提取线稿,第二个卷积池化层就像是提取框架,然后放入全连接网络中进行训练,拟合出一个函数。LeNet模型结构:

- 2个卷积-池化层

- 3个全连接层

模型代码如下:

class LeNet(torch.nn.Module): def __init__(self, label_num=10): super(LeNet, self).__init__() self.conv_pool_1 = torch.nn.Sequential( # 卷积层 (1*28*28) -> 6*28*28) torch.nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5, stride=1, padding=2), torch.nn.ReLU(), # 池化层 (6*28*28) -> (6*14*14) torch.nn.MaxPool2d(kernel_size=2, stride=2) ) self.conv_pool_2 = torch.nn.Sequential( # 卷积层 (6*14*14) -> (16*10*10) torch.nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5, stride=1, padding=0), torch.nn.ReLU(), # 池化层 (16*10*10) -> (16*5*5) torch.nn.MaxPool2d(2, 2) ) self.fc = torch.nn.Sequential( # 将卷积池化后的tensor拉成向量 torch.nn.Flatten(), # 全连接层 16*5*5 -> 120 torch.nn.Linear(16 * 5 * 5, 120), torch.nn.ReLU(), # 全连接层 120 -> 84 torch.nn.Linear(120, 84), torch.nn.ReLU(), # 全连接层 84 -> 10 torch.nn.Linear(84, label_num) ) def forward(self, x): x = self.conv_pool_1(x) x = self.conv_pool_2(x) x = self.fc(x) return x- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

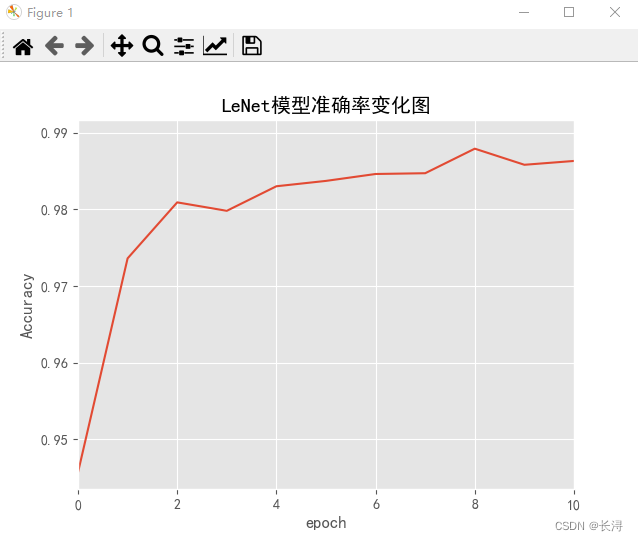

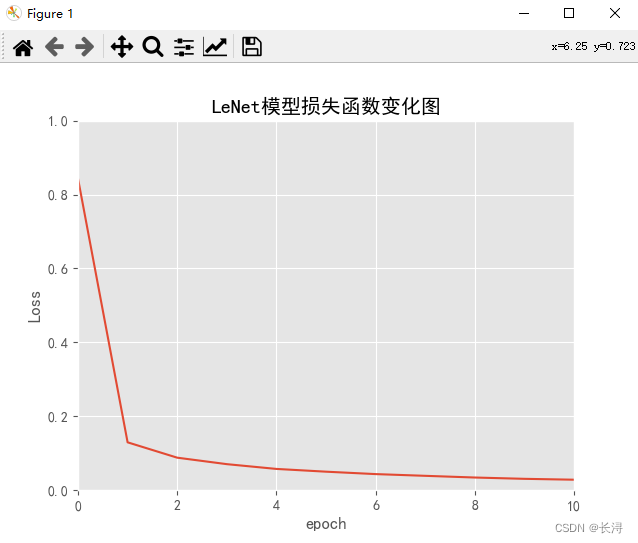

运行结果(MNIST数据集手写数字识别结果):

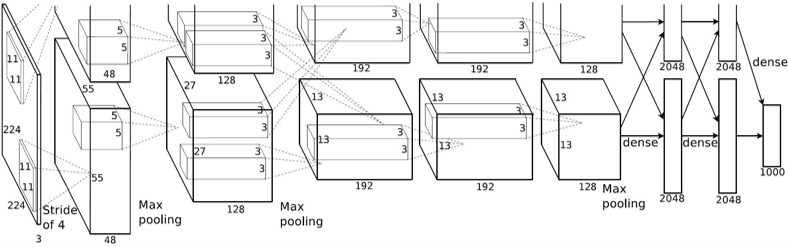

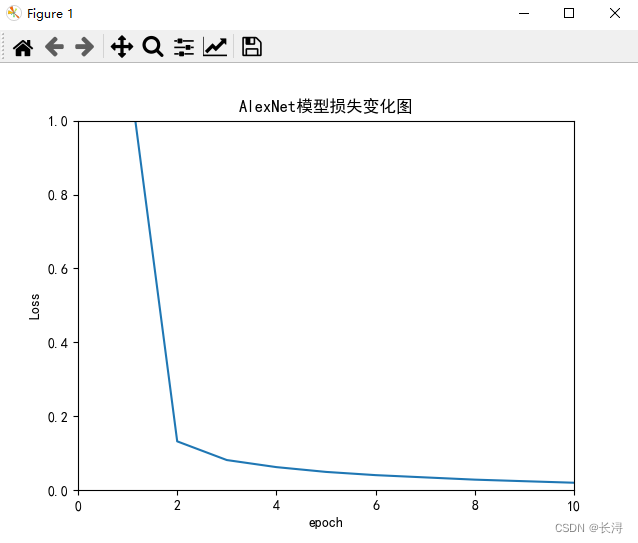

二、AlexNet

AlexNet是2012年ImageNet竞赛冠军获得者Hinton和他的学生Alex Krizhevsky设计的。特点是在全连接层的前两层中首次使用了 Dropout 随机失活神经元操作,引入Dropout主要是为了防止过拟合。

AlexNet模型结构:

- 5 层卷积(2卷积-池化层+3卷积层+1池化层)

- 3 个全连接层

模型代码如下:

class AlexNet(torch.nn.Module): def __init__(self, label_num=10, dropout=0): super(AlexNet,self).__init__() self.conv_pool_1 = torch.nn.Sequential( # 卷积层 (1*28*28) -> (24*28*28) torch.nn.Conv2d(in_channels=1, out_channels=24, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), # 池化层 (24*28*28) -> (24*14*14) torch.nn.MaxPool2d(kernel_size=2, stride=2), torch.nn.LocalResponseNorm(size=3) ) self.conv_pool_2 = torch.nn.Sequential( # 卷积层 (24*14*14) -> (64*14*14) torch.nn.Conv2d(in_channels=24, out_channels=64, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), # 池化层 (64*14*14) -> (64*7*7) torch.nn.MaxPool2d(kernel_size=2, stride=2), torch.nn.LocalResponseNorm(size=3) ) self.conv_pool_3 = torch.nn.Sequential( # 卷积层 (64*7*7) -> (96*7*7) torch.nn.Conv2d(in_channels=64, out_channels=96, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), # 卷积层 (96*7*7) -> (96*7*7) torch.nn.Conv2d(in_channels=96, out_channels=96, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), # 卷积层 (96*7*7) -> (64*7*7) torch.nn.Conv2d(in_channels=96, out_channels=64, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), # 池化层 (64*7*7) -> (64*3*3) torch.nn.MaxPool2d(kernel_size=2, stride=2) ) self.fc = torch.nn.Sequential( # 将卷积池化后的tensor拉成向量 torch.nn.Flatten(), # dropout torch.nn.Dropout(dropout), # 全连接层 (64*3*3) -> (512) torch.nn.Linear(64 * 3 * 3, 512), torch.nn.ReLU(), # dropout torch.nn.Dropout(dropout), # 全连接层 (512) -> (512) torch.nn.Linear(512, 512), torch.nn.ReLU(), # dropout torch.nn.Dropout(dropout), # 全连接层 (512) -> (10) torch.nn.Linear(512, label_num) ) def forward(self,x): x = self.conv_pool_1(x) x = self.conv_pool_2(x) x = self.conv_pool_3(x) x = self.fc(x) return x- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

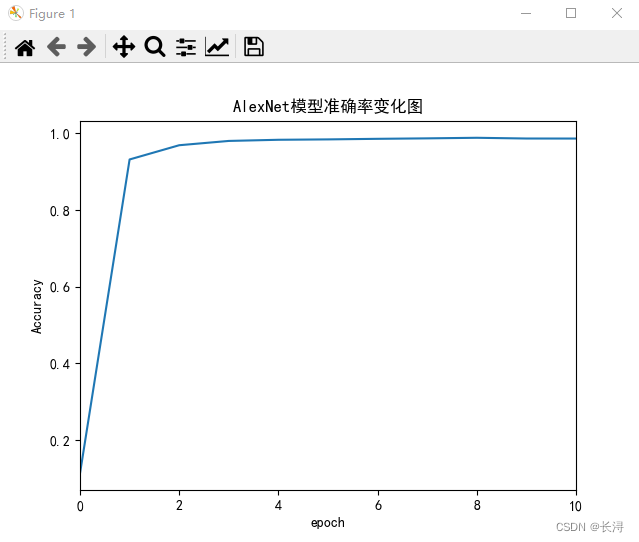

运行结果:

三、VGGNet(VGG-16)

到现在为止,你可能已经注意到 CNN 开始变得越来越深入。这是因为提高深度神经网络性能最直接的方法是增加它们的大小。Visual Geometry Group (VGG) 的人发明了 VGG-16,它有 13 个卷积层和 3 个全连接层,同时继承了 AlexNet 的 ReLU 传统。该网络在 AlexNet 上堆叠了更多层,并使用了更小的过滤器(2×2 和 3×3)。

VGGNet模型结构:

- 13个卷积层(2个 卷积(2)-池化 + 3个 卷积(3)-池化)

- 3个全连接层

模型代码如下:

class VGGNet(torch.nn.Module): def __init__(self, label_num = 10, dropout=0): super(VGGNet, self).__init__() self.conv_pool_1 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=1, out_channels=4, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=4, out_channels=4, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.MaxPool2d(kernel_size=2, stride=1, padding=1) ) self.conv_pool_2 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=4, out_channels=8, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=8, out_channels=8, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.MaxPool2d(kernel_size=2, stride=1, padding=1) ) self.conv_pool_3 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=8, out_channels=16, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=16, out_channels=16, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=16, out_channels=16, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.MaxPool2d(kernel_size=2, stride=2) ) self.conv_pool_4 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=32, out_channels=32, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=32, out_channels=32, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.MaxPool2d(kernel_size=2, stride=2) ) self.conv_pool_5 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=32, out_channels=32, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=32, out_channels=32, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=32, out_channels=32, kernel_size=3, stride=1, padding=1), torch.nn.ReLU(), torch.nn.MaxPool2d(kernel_size=2, stride=2) ) self.fc = torch.nn.Sequential( torch.nn.Flatten(), torch.nn.Dropout(dropout), torch.nn.Linear(32 * 3 * 3, 256), torch.nn.ReLU(), torch.nn.Dropout(dropout), torch.nn.Linear(256, 256), torch.nn.ReLU(), torch.nn.Dropout(dropout), torch.nn.Linear(256, 256), torch.nn.ReLU(), torch.nn.Dropout(dropout), torch.nn.Linear(256, label_num) ) def forward(self, x): x = self.conv_pool_1(x) x = self.conv_pool_2(x) x = self.conv_pool_3(x) x = self.conv_pool_4(x) x = self.conv_pool_5(x) x = self.fc(x) return x- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

这里提一下,使用多个3×3卷积堆叠的作用有两个:

- 在不影响感受野的前提下减少了参数;

- 增加了网络的非线性。

由于MNIST数据集过于简单,在使用VGGNet

出来了四、ResNet

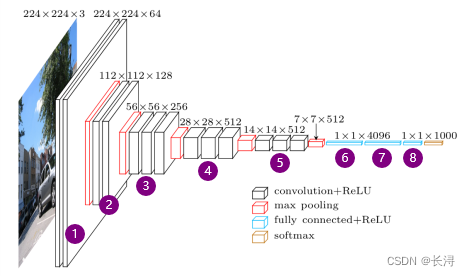

从过去的几个 CNN 中,我们只看到设计中的层数越来越多,并获得了更好的性能。但网络层数达到一定深度的时候,准确率就会达到饱和,然后迅速下降。

原因是由于当神经网络越来越深的时候,反传回来的梯度之间的相关性会越来越差,最后接近白噪声。微软研究院的人用 ResNet 解决了这个问题——使用跳过连接(又名快捷连接,残差),同时构建更深层次的模型。

ResNet 是批标准化的早期采用者之一(由 Ioffe 和 Szegedy 撰写的批规范论文于 2015 年提交给 ICML)。

模型结构:- 卷积池化层

- 残差层(由多个残差块构成)

- 平均池化层

- 全连接层

模型代码如下:

class BasicBlock(torch.nn.Module): multiplier = 1 def __init__(self, in_channels, out_channels, stride=1): super(BasicBlock, self).__init__() self.conv1 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=3, stride=stride, padding=1, bias=False), torch.nn.BatchNorm2d(out_channels), torch.nn.ReLU() ) self.conv2 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=out_channels, out_channels=out_channels * self.multiplier, kernel_size=3, stride=1, padding=1, bias=False), torch.nn.BatchNorm2d(out_channels * self.multiplier), ) self.shortcut = torch.nn.Sequential() if in_channels != out_channels * self.multiplier or stride != 1: self.shortcut = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels * self.multiplier, kernel_size=1, stride=stride, padding=0, bias=False), torch.nn.BatchNorm2d(out_channels * self.multiplier) ) self.relu = torch.nn.ReLU() def forward(self, x): residual = self.conv2(self.conv1(x)) shortcut = self.shortcut(x) return self.relu(residual + shortcut) class Bottleneck(torch.nn.Module): multiplier = 4 def __init__(self, in_channels, out_channels, stride=1): super(Bottleneck, self).__init__() self.conv1 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=1, padding=0, bias=False), torch.nn.BatchNorm2d(out_channels), torch.nn.ReLU() ) self.conv2 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=out_channels, out_channels=out_channels, kernel_size=3, stride=stride, padding=1, bias=False), torch.nn.BatchNorm2d(out_channels), torch.nn.ReLU() ) self.conv3 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=out_channels, out_channels=out_channels * self.multiplier, kernel_size=1, stride=1, padding=0, bias=False), torch.nn.BatchNorm2d(out_channels * self.multiplier) ) self.shortcut = torch.nn.Sequential() if in_channels != out_channels * self.multiplier or stride != 1: self.shortcut = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels * self.multiplier, kernel_size=1, stride=stride, padding=0, bias=False), torch.nn.BatchNorm2d(out_channels * self.multiplier) ) self.relu = torch.nn.ReLU() def forward(self, x): resiudual = self.conv3(self.conv2(self.conv1(x))) shortcut = self.shortcut(x) return self.relu(resiudual + shortcut) class ResNet(torch.nn.Module): def __init__(self, layer_num=18, label_num=10): super(ResNet, self).__init__() self.base_channels = 64 block_type, block_nums = self.res_net_params(layer_num) self.conv_pool_layer = torch.nn.Sequential( torch.nn.Conv2d(in_channels=1, out_channels=self.base_channels, kernel_size=7, stride=2, padding=3, bias=False), torch.nn.BatchNorm2d(self.base_channels), torch.nn.ReLU(), torch.nn.MaxPool2d(kernel_size=3, stride=1, padding=1) ) self.res_layers = torch.nn.Sequential( self.res_layer(block_type, 64, block_nums[0], stride=1), self.res_layer(block_type, 128, block_nums[1], stride=2), self.res_layer(block_type, 256, block_nums[2], stride=2), self.res_layer(block_type, 512, block_nums[3], stride=2) ) # 平均池化,平均池化成1*1 self.avg_pool_layer = torch.nn.AdaptiveAvgPool2d((1, 1)) self.fc_layer = torch.nn.Sequential( torch.nn.Flatten(), torch.nn.Linear(512 * block_type.multiplier, label_num) ) def res_layer(self, block_type, out_channel, block_num, stride): blocks = [] for _ in range(block_num): new_block = block_type(in_channels=self.base_channels, out_channels=out_channel, stride=stride) blocks.append(new_block) self.base_channels = out_channel * new_block.multiplier return torch.nn.Sequential(*blocks) def res_net_params(self, layer_num): if layer_num == 18: return BasicBlock, [2, 2, 2, 2] if layer_num == 34: return BasicBlock, [3, 4, 6, 3] if layer_num == 50: return Bottleneck, [3, 4, 6, 3] if layer_num == 101: return Bottleneck, [3, 4, 23, 3] if layer_num == 152: return Bottleneck, [3, 8, 36, 3] def forward(self, x): x = self.conv_pool_layer(x) x = self.res_layers(x) x = self.avg_pool_layer(x) x = self.fc_layer(x) return x- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

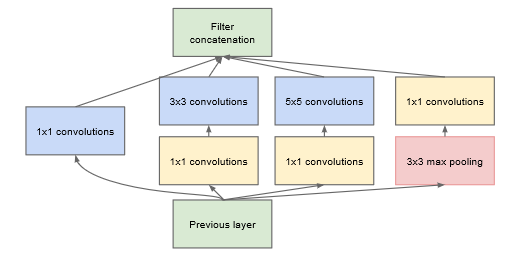

五、GoogLeNet

GoogLeNet 最大的特点就是 inception 的设计,主要是为了解决网络训练问题,随着网络深度越来越深,参数也越来越多,这使得网络训练越来越慢,同时也会带来其他副作用:梯度消失/爆炸,过拟合等。

inception 的设计就是为了缓解这些情况。

模型代码如下:# GoogLeNet Inception模块 class Inception(torch.nn.Module): def __init__(self, in_channels, out_channels_1, out_channels_2_1, out_channels_2_2, out_channels_3_1, out_channels_3_2, out_channels_4): super(Inception, self).__init__() self.branch1 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels_1, kernel_size=1), torch.nn.BatchNorm2d(out_channels_1), torch.nn.ReLU() ) self.branch2 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels_2_1, kernel_size=1), torch.nn.BatchNorm2d(out_channels_2_1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=out_channels_2_1, out_channels=out_channels_2_2,kernel_size=3, padding=1), torch.nn.BatchNorm2d(out_channels_2_2), torch.nn.ReLU() ) self.branch3 = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels_3_1, kernel_size=1), torch.nn.BatchNorm2d(out_channels_3_1), torch.nn.ReLU(), torch.nn.Conv2d(in_channels=out_channels_3_1, out_channels=out_channels_3_2, kernel_size=5, padding=2), torch.nn.BatchNorm2d(out_channels_3_2), torch.nn.ReLU() ) self.branch4 = torch.nn.Sequential( torch.nn.MaxPool2d(kernel_size=3, stride=1, padding=1), torch.nn.Conv2d(in_channels=in_channels, out_channels=out_channels_4, kernel_size=1), torch.nn.BatchNorm2d(out_channels_4), torch.nn.ReLU() ) def forward(self, x): x_1 = self.branch1(x) x_2 = self.branch2(x) x_3 = self.branch3(x) x_4 = self.branch4(x) x = torch.cat([x_1, x_2, x_3, x_4], 1) return x # 辅助分类器 class AuxClassifier(torch.nn.Module): def __init__(self, in_channels, label_num=10, dropout=0.5): super(AuxClassifier, self).__init__() self.average_pool = torch.nn.AvgPool2d(kernel_size=5, stride=3) self.conv = torch.nn.Sequential( torch.nn.Conv2d(in_channels=in_channels, out_channels=128, kernel_size=1), torch.nn.BatchNorm2d(128), torch.nn.ReLU() ) self.fc = torch.nn.Sequential( torch.nn.Flatten(), torch.nn.Dropout(dropout), torch.nn.Linear(128 * 4 * 4, 1024), torch.nn.ReLU(), torch.nn.Dropout(dropout), torch.nn.Linear(1024, label_num), torch.nn.ReLU() ) self.softmax = torch.nn.Softmax(dim=1) def forward(self, x): x = self.average_pool(x) x = self.conv(x) x = self.fc(x) x = self.softmax(x) return x # GoogLeNet class GoogLeNet(torch.nn.Module): def __init__(self, label_num=10, dropout=0.5, aux=False): super(GoogLeNet, self).__init__() self.aux = aux self.conv_pool = torch.nn.Sequential( # (1*28*28) -> (8*28*28) torch.nn.Conv2d(in_channels=1, out_channels=8, kernel_size=7, stride=1, padding=3), torch.nn.BatchNorm2d(8), torch.nn.ReLU(), # (8*28*28) -> (8*14*14) torch.nn.MaxPool2d(kernel_size=3, stride=2, padding=1), # (8*14*14) -> (8*14*14) torch.nn.Conv2d(in_channels=8, out_channels=8, kernel_size=1), torch.nn.BatchNorm2d(8), torch.nn.ReLU(), # (8*14*14) -> (24*14*14) torch.nn.Conv2d(in_channels=8, out_channels=24, kernel_size=3, stride=1, padding=1), torch.nn.BatchNorm2d(24), torch.nn.ReLU(), # (24*14*14) -> (24*7*7) torch.nn.MaxPool2d(kernel_size=3, stride=2, padding=1) ) self.inceptions_1 = torch.nn.Sequential( Inception(24, 8, 12, 16, 2, 4, 4), # inception3a Inception(32, 16, 16, 24, 4, 12, 8), # inception3b # (24*7*7) -> (24*3*3) torch.nn.MaxPool2d(kernel_size=3, stride=2), # MaxPool 3*3+2(S) Inception(60, 24, 12, 26, 2, 6, 8) # inception4a ) self.aux1 = AuxClassifier(64, label_num, dropout) self.inceptions_2 = torch.nn.Sequential( Inception(64, 20, 14, 28, 3, 8, 8), # inception4b Inception(64, 16, 16, 32, 3, 8, 8), # inception4c Inception(64, 14, 18, 36, 4, 8, 8), # inception4d Inception(66, 32, 20, 40, 4, 16, 16), # inception4e # (24*3*3) -> (24*1*1) torch.nn.MaxPool2d(kernel_size=3, stride=2) # MaxPool 3*3+2(S) ) self.aux2 = AuxClassifier(66, label_num, dropout) self.inceptions_3 = torch.nn.Sequential( Inception(104, 32, 20, 40, 4, 16, 16), # inception5a Inception(104, 48, 24, 48, 6, 16, 16) # inception5b ) self.avg_pool = torch.nn.AdaptiveAvgPool2d((1, 1)) self.fc = torch.nn.Sequential( torch.nn.Flatten(), torch.nn.Dropout(dropout), torch.nn.Linear(128, label_num), torch.nn.ReLU() ) def forward(self, x): x = self.conv_pool(x) x = self.inceptions_1(x) if self.training and self.aux: x_aux_1 = self.aux1(x) x = self.inceptions_2(x) if self.training and self.aux: x_aux_2 = self.aux2(x) x = self.inceptions_3(x) x = self.avg_pool(x) x = self.fc(x) if self.aux: return x, x_aux_1, x_aux_2 return x- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

总结

以上就是本篇文章介绍的CNN的5种经典架构,欢迎相互学习交流!

-

相关阅读:

数据库锁机制和我的理解

Zabbix监控环境搭建,监控项简单、高级应用,包括自定义监控项、采集器、自动发现规则、监控项原型、宏

Android7.1 ROOT权限的获取

【数据结构趣味多】顺序表基本操作实现(Java)

将linux上的文件/文件夹下载到本地

Leetcode 15

基于hadoop的气象数据可视化分析

浅谈网络损伤仪HoloWAN的使用场景

全站综合热榜第四

论文解读(CosFace)《CosFace: Large Margin Cosine Loss for Deep Face Recognition》

- 原文地址:https://blog.csdn.net/qq_41664447/article/details/126698265