
[Cloud Native | Kubernetes Series] Prometheus Federation


| No. | Server IP | Role |
| --- | --- | --- |
| 1 | 192.168.31.201 | prometheus-server |
| 2 | 192.168.31.121 | prometheus-node1 |
| 3 | 192.168.31.122 | prometheus-node2 |
| 4 | 192.168.31.123 | prometheus-node3 |
| 5 | 192.168.31.101 | k8s-node-exporter1 |
| 6 | 192.168.31.102 | k8s-node-exporter2 |
| 7 | 192.168.31.103 | k8s-node-exporter3 |
| 8 | 192.168.31.111 | k8s-node-exporter4 |
| 9 | 192.168.31.112 | k8s-node-exporter5 |
| 10 | 192.168.31.113 | k8s-node-exporter6 |
| 11 | 192.168.31.114 | k8s-node-exporter7 |

1. Prometheus Setup

- Copy the installation package and the service file to the three federation nodes

    # scp prometheus-2.38.0.linux-amd64.tar.gz 192.168.31.121:/apps/
    # scp /etc/systemd/system/prometheus.service 192.168.31.121:/etc/systemd/system/prometheus.service
    # scp prometheus-2.38.0.linux-amd64.tar.gz 192.168.31.122:/apps/
    # scp /etc/systemd/system/prometheus.service 192.168.31.122:/etc/systemd/system/prometheus.service
    # scp prometheus-2.38.0.linux-amd64.tar.gz 192.168.31.123:/apps/
    # scp /etc/systemd/system/prometheus.service 192.168.31.123:/etc/systemd/system/prometheus.service
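The six copy commands above differ only in the target IP, so they can be generated by a loop. The following is a minimal sketch, not the author's script: the `RUN` switch and the `copy_to_nodes` function name are hypothetical, and by default the script only prints the commands instead of executing them.

```shell
#!/bin/sh
# Federation node IPs, package name and unit path as used in this article.
NODES="192.168.31.121 192.168.31.122 192.168.31.123"
PKG="prometheus-2.38.0.linux-amd64.tar.gz"
UNIT="/etc/systemd/system/prometheus.service"

# RUN is a hypothetical switch: when unset it defaults to "echo", so each
# command is only printed. Run the script with RUN="" to really copy files.
RUN="${RUN-echo}"

copy_to_nodes() {
    for ip in $NODES; do
        $RUN scp "$PKG" "$ip:/apps/"
        $RUN scp "$UNIT" "$ip:$UNIT"
    done
}

copy_to_nodes
```

Printing first makes it easy to review the exact `scp` lines before anything is transferred.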

- Install prometheus on the three federation servers

    root@zookeeper-3:/apps# cd /apps
    root@zookeeper-3:/apps# tar xf prometheus-2.38.0.linux-amd64.tar.gz
    root@zookeeper-3:/apps# ln -sf /apps/prometheus-2.38.0.linux-amd64 /apps/prometheus

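2. Install node-exporter on All Nodes

node-exporter was already installed on these nodes earlier, so the steps are not repeated here.

Just confirm that the metrics can be fetched from node:9100/metrics on each node.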
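The check on port 9100 can be scripted for all seven exporters. This is a rough sketch under assumptions: the function names `metrics_url` and `check_exporter` are made up for illustration, and `node_load1` is used as the marker metric only because it is queried later in this article.

```shell
#!/bin/sh
# node-exporter hosts from the server table at the top of this article.
EXPORTERS="192.168.31.101 192.168.31.102 192.168.31.103 192.168.31.111 192.168.31.112 192.168.31.113 192.168.31.114"

# Print the metrics URL for one host (port 9100, as used in this article).
metrics_url() {
    printf 'http://%s:9100/metrics\n' "$1"
}

# Succeed only if the endpoint answers within 3 seconds and exposes node_load1.
check_exporter() {
    curl -fsS --max-time 3 "$(metrics_url "$1")" | grep -q '^node_load1 '
}

# Usage (needs network access to the nodes):
#   for ip in $EXPORTERS; do
#       check_exporter "$ip" && echo "$ip ok" || echo "$ip FAILED"
#   done
```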

3. Configure Federation

3.1 prometheus-node1

Append the node-exporter job to /apps/prometheus/prometheus.yml

      - job_name: "node-exporter"
        static_configs:
          - targets: ["192.168.31.101:9100","192.168.31.102:9100","192.168.31.103:9100"]

After starting the service, confirm that it is listening on port 9090

    root@zookeeper-1:/apps/prometheus# systemctl enable --now prometheus.service
    Created symlink /etc/systemd/system/multi-user.target.wants/prometheus.service → /etc/systemd/system/prometheus.service.
    root@zookeeper-1:/apps/prometheus# ss -ntl|grep 9090
    LISTEN 0 4096 *:9090 *:*

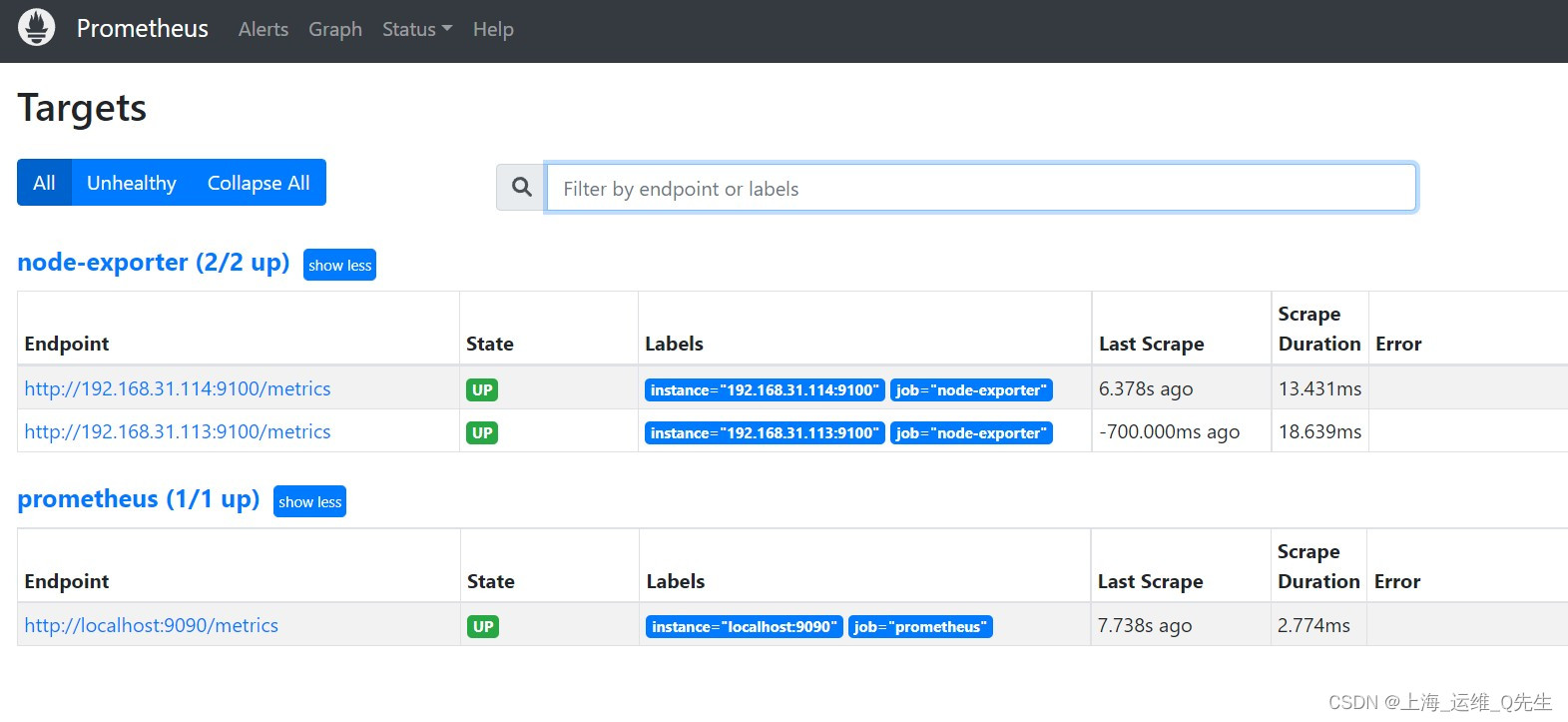

Check in the web UI that the data is being collected normally

3.2 The Other prometheus-node Nodes

prometheus-node2

      - job_name: "node-exporter"
        static_configs:
          - targets: ["192.168.31.111:9100","192.168.31.112:9100"]

prometheus-node3

      - job_name: "node-exporter"
        static_configs:
          - targets: ["192.168.31.113:9100","192.168.31.114:9100"]

4. Configure prometheus-server

Edit prometheus.yml and add the following job under scrape_configs:

    scrape_configs:
      - job_name: "prometheus-federate"
        scrape_interval: 10s
        honor_labels: true
        metrics_path: '/federate'
        params:
          'match[]':
            - '{job="prometheus"}'
            - '{__name__=~"job:.*"}'
            - '{__name__=~"node.*"}'
        static_configs:
          - targets:
            - '192.168.31.121:9090'
            - '192.168.31.122:9090'
            - '192.168.31.123:9090'
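Before relying on the scrape job, it can help to request `/federate` from one federation node by hand with the same `match[]` selectors. The sketch below only prints the `curl` command so it can be reviewed and pasted into a shell; the helper name `federate_cmd` is hypothetical.

```shell
#!/bin/sh
# Print a curl command that requests /federate from one federation node,
# using the same three match[] selectors as the prometheus-federate job.
federate_cmd() {
    printf "curl -G 'http://%s:9090/federate'" "$1"
    # printf repeats the format once per argument, giving one flag per selector.
    printf " --data-urlencode 'match[]=%s'" \
        '{job="prometheus"}' '{__name__=~"job:.*"}' '{__name__=~"node.*"}'
    printf '\n'
}

federate_cmd 192.168.31.121
```

`-G` makes curl send the URL-encoded `match[]` values as query parameters of a GET request, which is how the federation endpoint expects them.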

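After restarting the prometheus service, the three federation nodes appear under Targets.

All other scrape jobs had already been removed at this point, so this is the only one listed.

Querying node_load1 shows that the node data collected by each of the three federation nodes is available on the server.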
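The same check can be done from the command line by listing which exporter instances report `node_load1` on the server. This is an illustrative sketch: `load1_instances` is a hypothetical helper, and it assumes the samples still carry their original `instance` label, which `honor_labels: true` should preserve.

```shell
#!/bin/sh
# Read Prometheus text-format samples on stdin and print the distinct
# instance label values of node_load1, one per line.
load1_instances() {
    grep '^node_load1{' | sed -n 's/.*instance="\([^"]*\)".*/\1/p' | sort -u
}

# Usage (needs network access to prometheus-server):
#   curl -sG 'http://192.168.31.201:9090/federate' \
#        --data-urlencode 'match[]=node_load1' | load1_instances
```

If federation works as configured, the output should contain one line for each of the seven node-exporter targets.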

5. Grafana Import

Import the dashboard with ID 8919.


- Original article: https://blog.csdn.net/qq_29974229/article/details/126757207