
Big Data Study Notes, Lesson 1: Hadoop Fundamentals and Cluster Setup


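- I. Environment preparation

- II. Download the JDK

- III. Install the JDK

- IV. Download Hadoop

- V. Install the Hadoop cluster

- VI. Set up passwordless login among the 3 servers

- VII. Hadoop cluster configuration

- VIII. Initialize the Hadoop cluster

- IX. Start the Hadoop cluster

- X. Hadoop cluster pitfall #1 and how I fixed it

- Conclusion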

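I have recently been taking some big data courses in my spare time, so I am using the weekend to tidy up my study notes. Let's get straight to the practical part!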

I. Environment preparation

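1. Servers: three Huawei Cloud ECS instances (each with 4 cores and 8 GB RAM)

| Hostname | Private IP | Role |
| --- | --- | --- |
| ecs-ae8a-0001 | 192.168.0.177 | namenode/datanode |
| ecs-ae8a-0002 | 192.168.0.56 | datanode |
| ecs-ae8a-0003 | 192.168.0.59 | datanode |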

2. OS version: CentOS Linux release 7.5.1804 (Core)

3. Edit /etc/hosts on all 3 servers:

ecs-ae8a-0001

```
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
#127.0.0.1 ecs-ae8a-0001 ecs-ae8a-0001
192.168.0.177 hadoop01 ecs-ae8a-0001
192.168.0.56 hadoop02 ecs-ae8a-0002
192.168.0.59 hadoop03 ecs-ae8a-0003
```

ecs-ae8a-0002

```
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
#127.0.0.1 ecs-ae8a-0002 ecs-ae8a-0002
192.168.0.177 hadoop01 ecs-ae8a-0001
192.168.0.56 hadoop02 ecs-ae8a-0002
192.168.0.59 hadoop03 ecs-ae8a-0003
```

ecs-ae8a-0003

```
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
#127.0.0.1 ecs-ae8a-0003 ecs-ae8a-0003
192.168.0.177 hadoop01 ecs-ae8a-0001
192.168.0.56 hadoop02 ecs-ae8a-0002
192.168.0.59 hadoop03 ecs-ae8a-0003
```

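Notes:

1. After editing, make sure hadoop01, hadoop02 and hadoop03 can all ping one another.

2. In each of the three files above, the following line must be commented out with # (as shown). Otherwise, when the cluster starts, all datanodes end up with the same clusterID value, and the Hadoop web UI shows only one datanode.

ecs-ae8a-0001

```
#127.0.0.1 ecs-ae8a-0001 ecs-ae8a-0001
```

ecs-ae8a-0002

```
#127.0.0.1 ecs-ae8a-0002 ecs-ae8a-0002
```

ecs-ae8a-0003

```
#127.0.0.1 ecs-ae8a-0003 ecs-ae8a-0003
```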
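Note 2 is easy to miss on one of the three machines, so it can be worth checking with a small script. The sketch below is a hypothetical helper, `loopback_maps_hostname` (my own name, not a Hadoop or CentOS tool), that succeeds if a hosts file still maps a given hostname to 127.0.0.1 on an uncommented line. It assumes bash and awk are available, as they are on CentOS 7.

```shell
# Hypothetical helper (not part of Hadoop): succeeds if FILE still maps HOSTNAME to
# 127.0.0.1 on an uncommented line, i.e. the line that note 2 says to comment out.
# Usage: loopback_maps_hostname FILE HOSTNAME
loopback_maps_hostname() {
  local file="$1" host="$2"
  awk -v h="$host" '
    /^[ \t]*#/ { next }                     # skip commented-out lines
    $1 == "127.0.0.1" {                     # only active loopback entries
      for (i = 2; i <= NF; i++) if ($i == h) found = 1
    }
    END { exit found ? 0 : 1 }
  ' "$file"
}

# Usage on each of the three servers:
# if loopback_maps_hostname /etc/hosts "$(hostname)"; then
#   echo "comment out the 127.0.0.1 $(hostname) line in /etc/hosts"
# fi
```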

II. Download the JDK

1. In a local browser, open Oracle's website: https://www.oracle.cn

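2. Create an Oracle account and log in (if you already have one, just log in). Click [View Accounts] at the top of the page; the panel below appears.

3. Click the Sign In button to open the login page, shown below: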

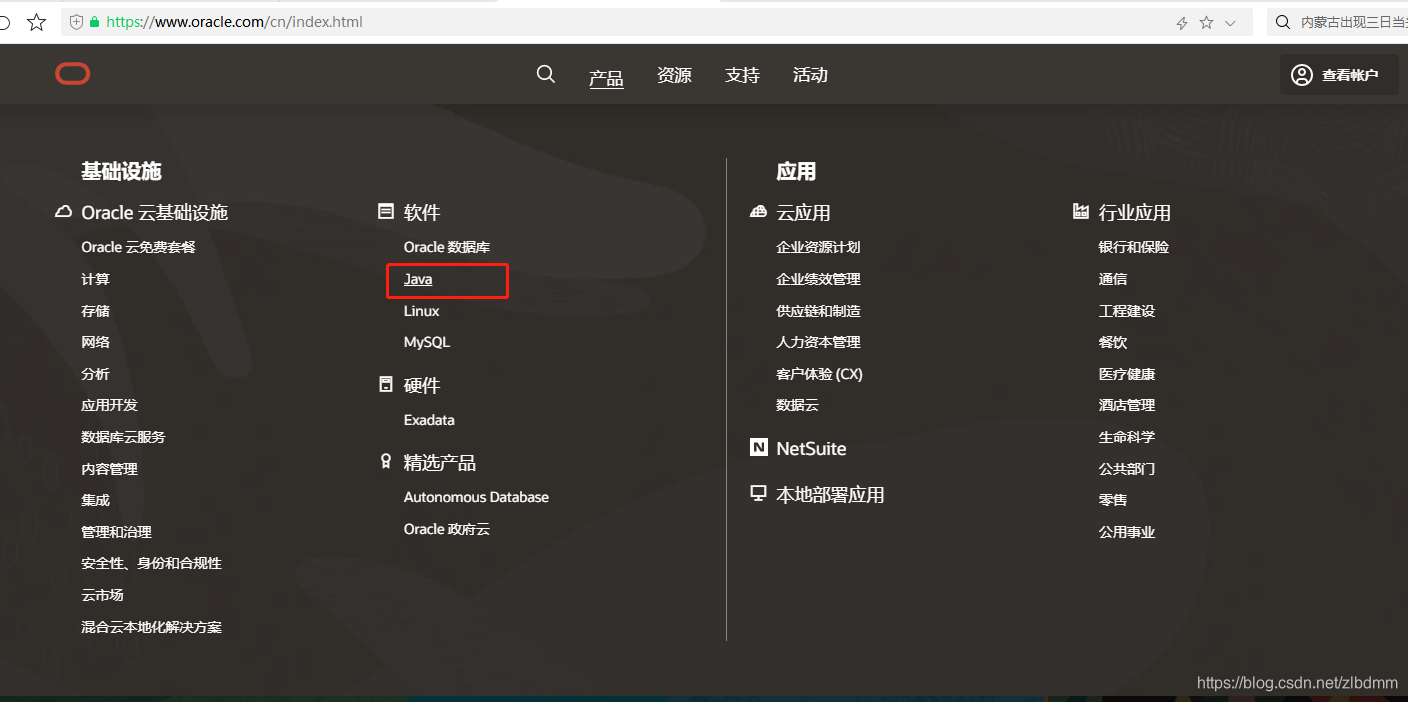

4. Enter your username and password to log in. Once logged in, click the [Products] menu at the top of the page to open its submenu, shown below:

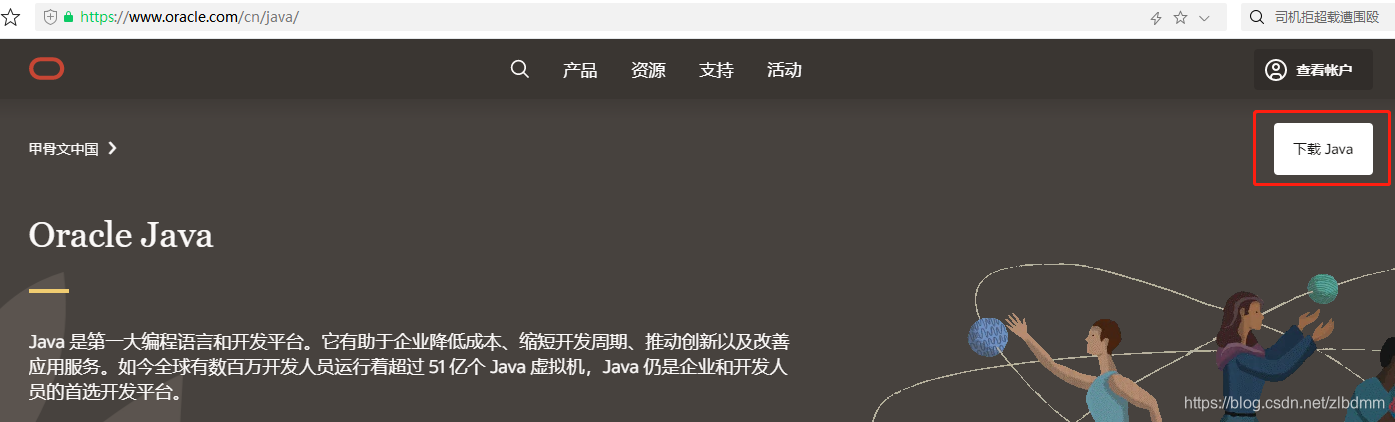

5. Click Java in the menu above to open the Java overview page, shown below:

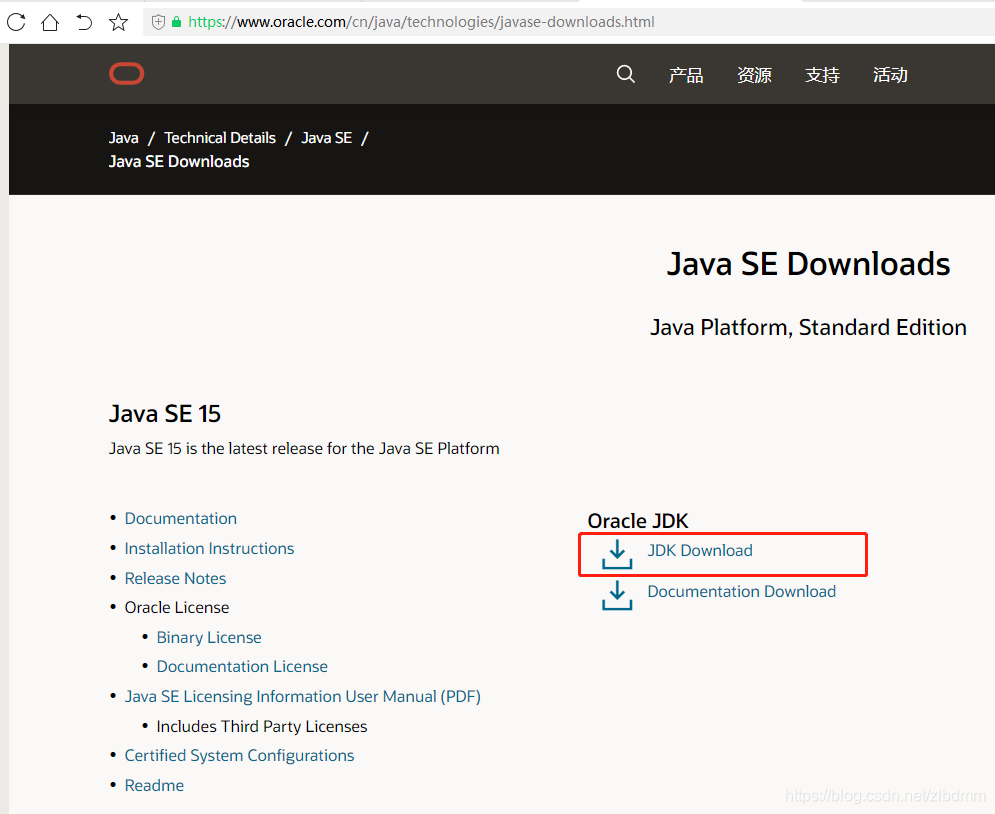

6. Click the [Download Java] button at the top right of the page to open the Java download page, shown below:

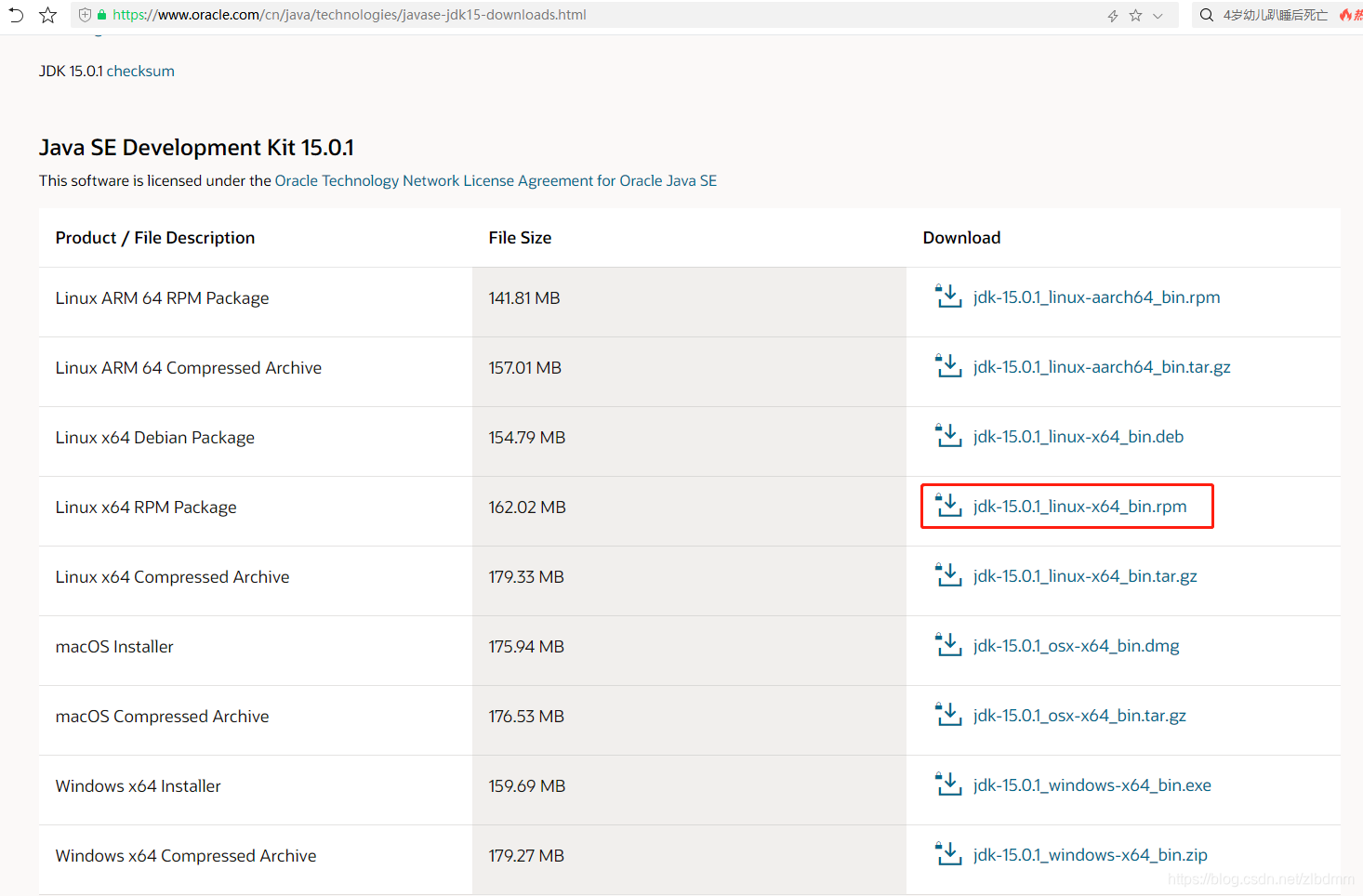

7. The page shows that the latest release is Java SE 15. Click the [JDK Download] link; the following page appears:

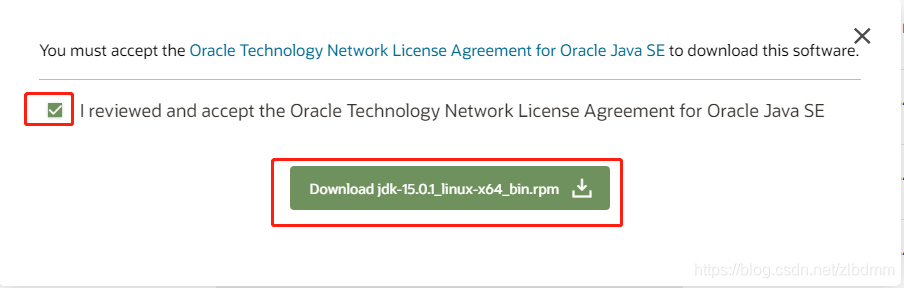

8. Choose the package for your target operating system. Since we are installing on Linux, click [jdk-15.0.1_linux-x64_bin.rpm]. The first download opens a dialog, shown below:

9. In that dialog, first tick [I reviewed and accept the Oracle Technology Network License Agreement for Oracle Java SE], then click [Download jdk-15.0.1_linux-x64_bin.rpm]; a download dialog appears:

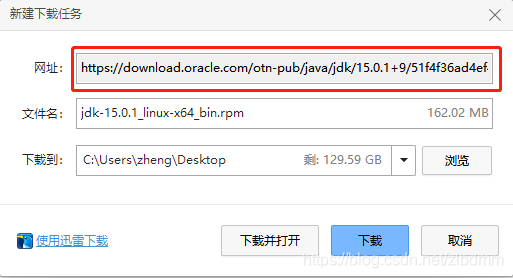

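To save the file locally, click [Download]. Since I want to download directly on the CentOS servers, I only need to copy the download link.

10. Back in the Xshell terminal, create a /opt/soft directory to hold downloaded software (mkdir -p /opt/soft).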

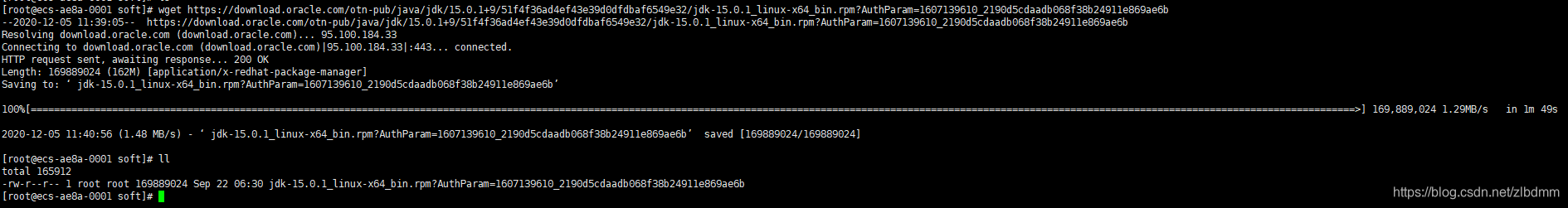

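11. Download the JDK rpm package with wget. Quote the URL, since it contains a ? that the shell could otherwise treat as a wildcard. The AuthParam token is tied to my download session and is likely time-limited, so use the link you copied yourself:

```shell
wget 'https://download.oracle.com/otn-pub/java/jdk/15.0.1+9/51f4f36ad4ef43e39d0dfdbaf6549e32/jdk-15.0.1_linux-x64_bin.rpm?AuthParam=1607139610_2190d5cdaadb068f38b24911e869ae6b'
```

The download progress is shown below:

The downloaded file name keeps the ?AuthParam=... suffix, so rename it first:

```shell
mv 'jdk-15.0.1_linux-x64_bin.rpm?AuthParam=1607139610_2190d5cdaadb068f38b24911e869ae6b' jdk-15.0.1_linux-x64_bin.rpm
```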

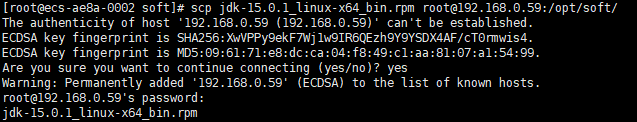
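Instead of renaming afterwards, you can give wget the target file name up front with its -O option. The hypothetical helper `filename_from_url` below derives that name by dropping the query string (?AuthParam=...) and keeping the last path segment of the URL; `$url` in the usage comment stands for whatever link you copied from the Oracle page.

```shell
# Hypothetical helper: turn a download URL into a clean local file name by removing
# the query string (e.g. "?AuthParam=...") and keeping only the last path segment.
filename_from_url() {
  local url="$1"
  local path="${url%%[?]*}"       # drop everything from the first literal '?'
  printf '%s\n' "${path##*/}"     # keep the part after the last '/'
}

# Usage (quote the URL so the shell does not treat '?' as a glob character):
# url='<the download link copied from the Oracle page>'
# wget -O "$(filename_from_url "$url")" "$url"
```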

12. Run the same download on all 3 servers, or copy the rpm package to the other 2 servers with scp:

```shell
scp jdk-15.0.1_linux-x64_bin.rpm root@192.168.0.59:/opt/soft/
```

You are prompted for the user's password, as shown below:

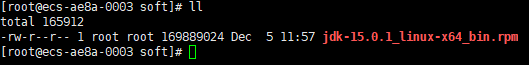

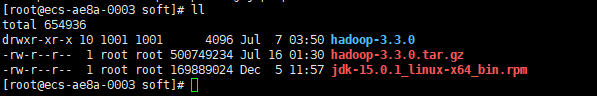

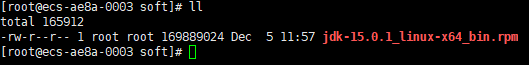

The JDK rpm package is now on 192.168.0.59 as well (repeat the scp for 192.168.0.56), as shown below:

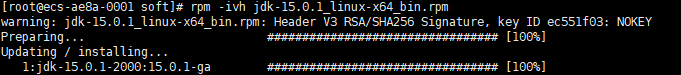

III. Install the JDK

1. In the Xshell terminal, install the rpm package:

```shell
rpm -ivh jdk-15.0.1_linux-x64_bin.rpm
```

The installation output is shown below:

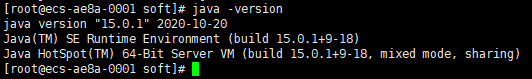

2. Verify the installation with java -version, as shown below:

3. Repeat the same installation and verification on all 3 servers.

IV. Download Hadoop

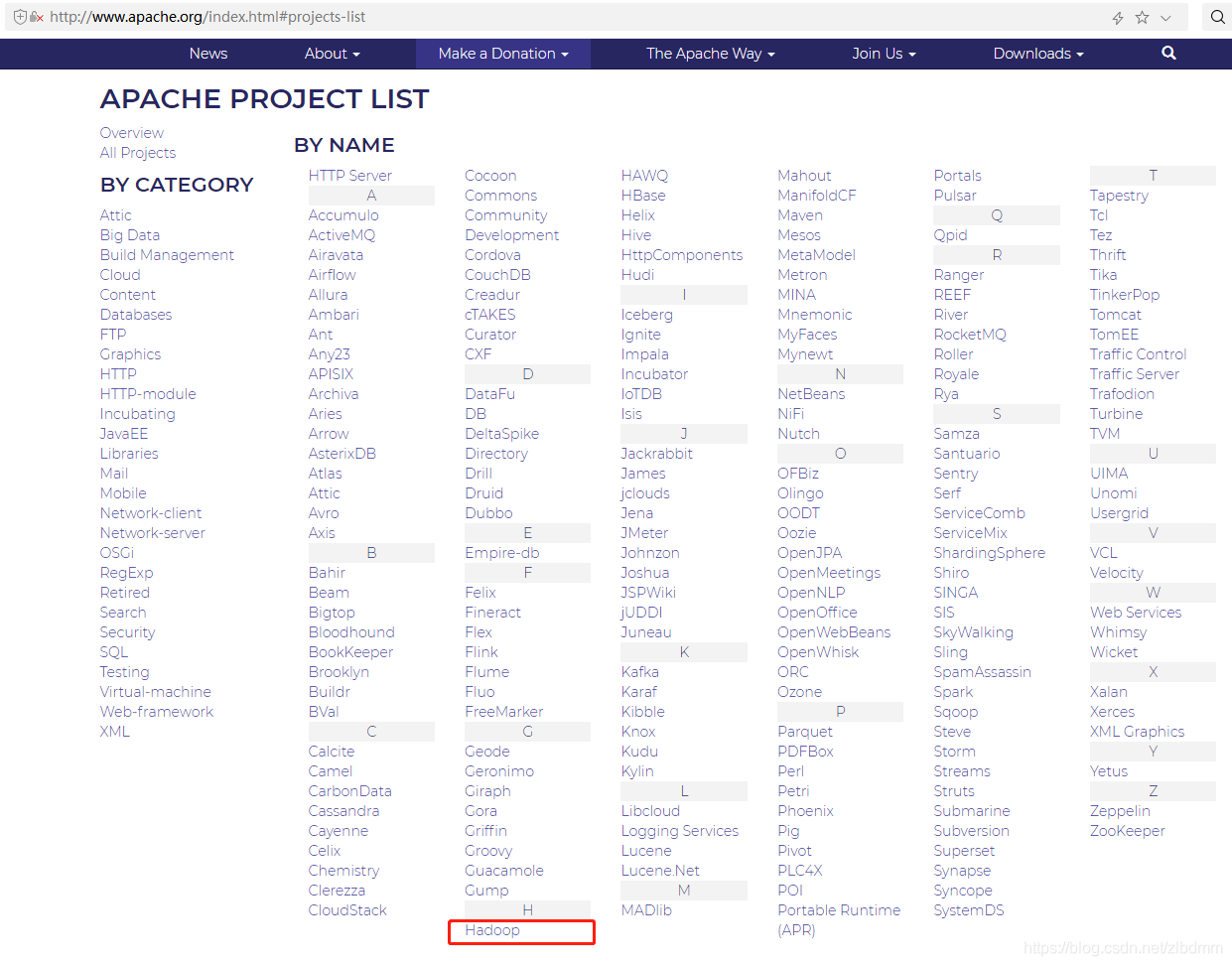

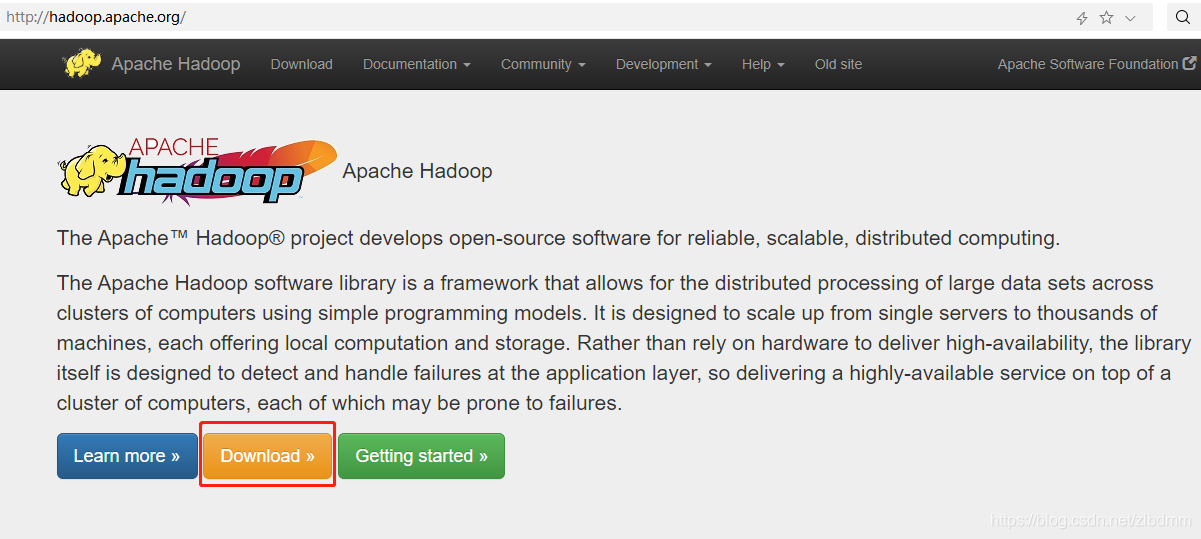

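1. First go to the Apache website at http://www.apache.org/, shown below:

2. Click Projects on the home page to open its submenu:

3. Choose Project List to open the project list page:

4. In the project list, look for the entries starting with H; Hadoop is currently the first one. Click it to open the Hadoop home page:

5. Click the Download button on the Hadoop home page to open the download page: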

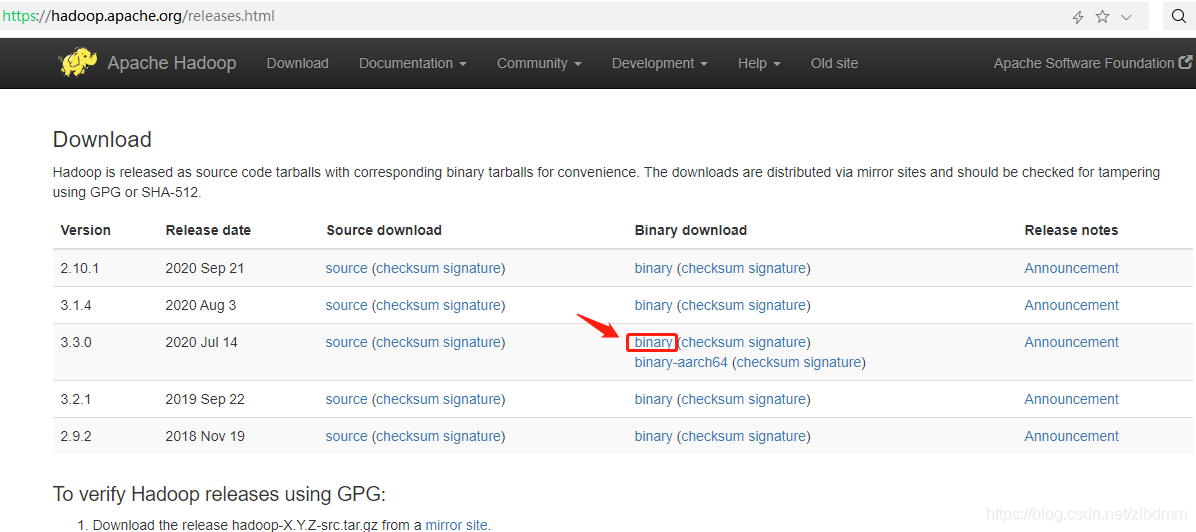

6. On the download page, choose the binary of the latest release, here 3.3.0. This opens the mirror page for hadoop-3.3.0.tar.gz, shown below:

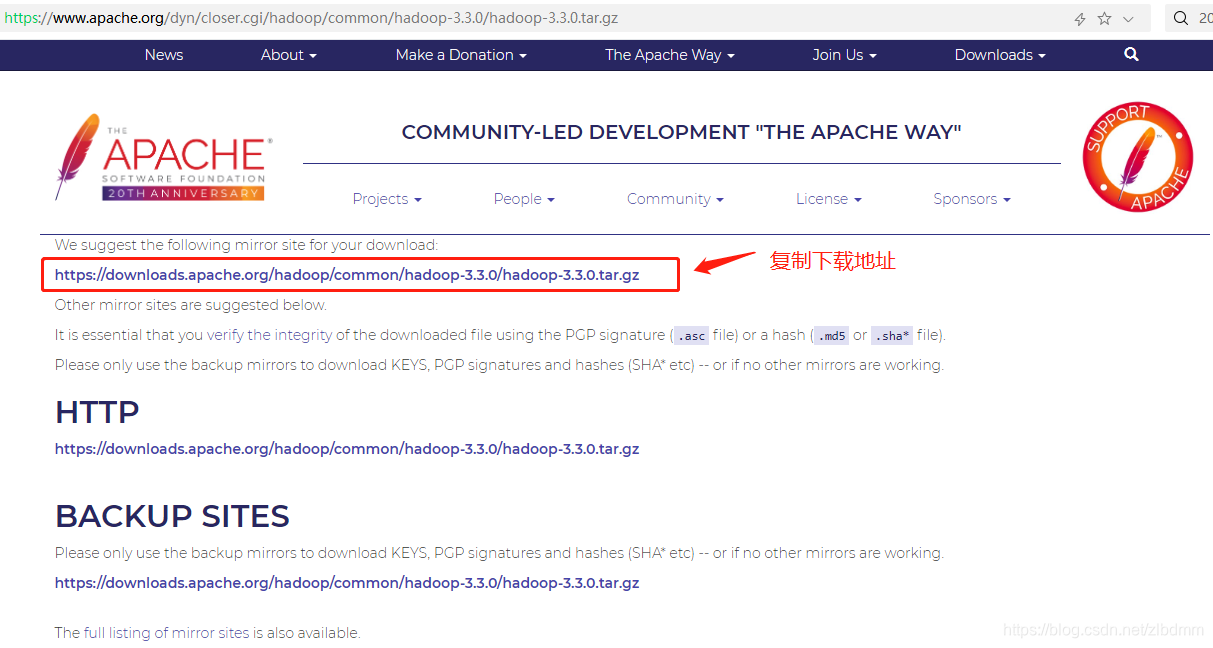

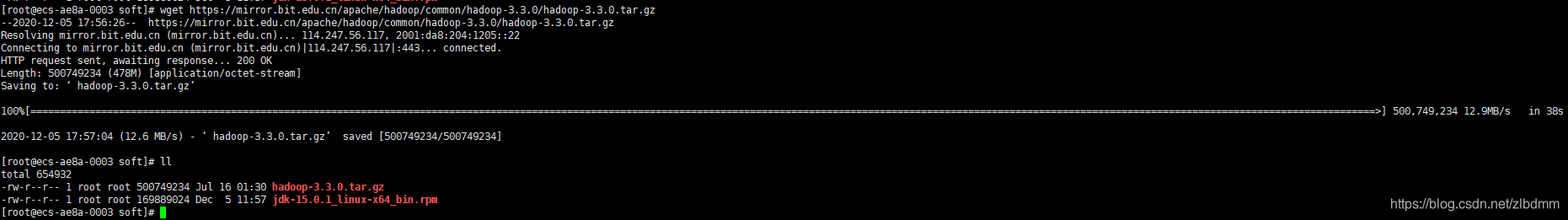

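7. Copy one of the download links, return to the Xshell terminal, and download it with wget. For faster downloads on the Huawei Cloud servers I used a different mirror:

```shell
wget https://mirror.bit.edu.cn/apache/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz
```

The download progress is shown below:

8. Extract hadoop-3.3.0.tar.gz with tar -xzvf:

```shell
tar -xzvf hadoop-3.3.0.tar.gz
```

The result after extraction is shown below: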

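9. Repeat the download and extraction on all three servers.

10. Create a program directory, /program:

```shell
mkdir /program
```

11. Copy the extracted hadoop-3.3.0 directory into /program/:

```shell
cp -r hadoop-3.3.0 /program/
```

12. Repeat the directory creation and copy on all 3 servers.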
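Steps 8 to 11 (extract, then copy into /program) can also be collapsed into one step, because tar's -C option extracts straight into a target directory. The function name `install_tarball` is my own, and the default target /program simply follows this article. If the mirror used above no longer responds, Apache keeps past releases under https://archive.apache.org/dist/hadoop/common/.

```shell
# Hypothetical helper: extract a tarball directly into DEST (default /program),
# replacing the separate "tar -xzvf" + "cp -r" steps.
install_tarball() {
  local tarball="$1" dest="${2:-/program}"
  mkdir -p "$dest" || return 1        # -p: no error if the directory already exists
  tar -xzf "$tarball" -C "$dest"      # -C: change to DEST before extracting
}

# Usage on each server:
# install_tarball hadoop-3.3.0.tar.gz /program
# ls /program/hadoop-3.3.0/bin
```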

五、安装hadoop集群

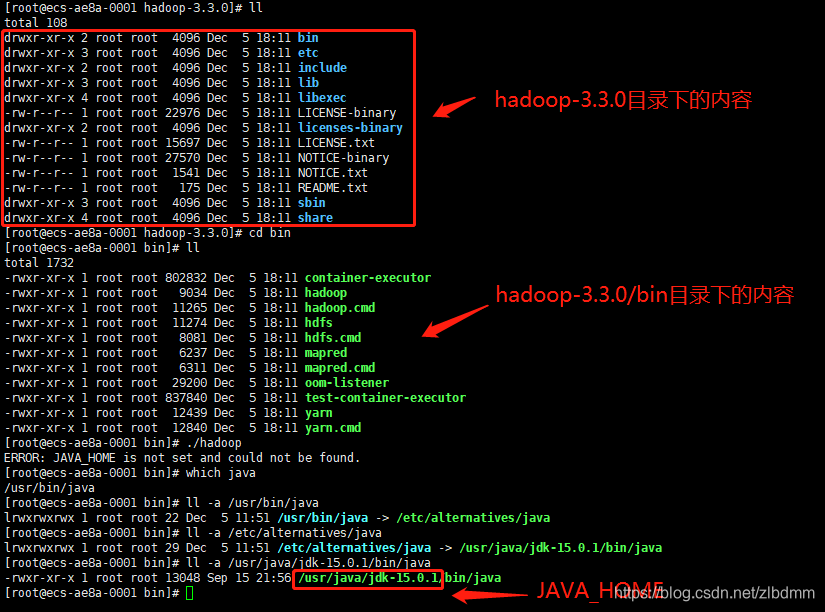

1、切换当前目录为/program/hadoop-3.3.0,了解一下目录内容。

2、切换当前目录为/program/hadoop-3.3.0/bin,理解一下目录下的内容。

3、通过./hadoop命令执行hadoop安装。这是会出现未找到JAVA_HOME错误信息:ERROR: JAVA_HOME is not set and could not be found.- 1

4、因为之前已经安装了jdk,只是没有配置JAVA_HOME环境变量而已,这里先通过which java找到jdk的安装目录。which java执行后输出的目录有可能是软链接,因此需要通过ll -a 文件目录,看看有没有->符号,如果有则说明是软链接。这里找到的JAVA_HOME为/usr/java/jdk-15.0.1。如下图:
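The which java plus ll -a lookup in step 4 can be done in one go with readlink -f, which follows every symlink in the chain (GNU coreutils, available on CentOS 7). The hypothetical helper `java_home_from` then strips the trailing /bin/java to get a JAVA_HOME candidate; it assumes the resolved binary sits at <JDK directory>/bin/java, which matches the /usr/java/jdk-15.0.1 layout found above.

```shell
# Hypothetical helper: resolve a java binary through all symlinks and print the
# directory above bin/, which is what JAVA_HOME should point to.
java_home_from() {
  local real
  real=$(readlink -f "$1") || return 1   # follow every symlink (GNU readlink)
  case "$real" in
    */bin/java) printf '%s\n' "${real%/bin/java}" ;;
    *) echo "unexpected java path: $real" >&2; return 1 ;;
  esac
}

# Usage:
# java_home_from "$(which java)"   # prints the JDK directory behind the symlinks
```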

5. To fix the problem, first change to the /program/hadoop-3.3.0/etc/hadoop directory.

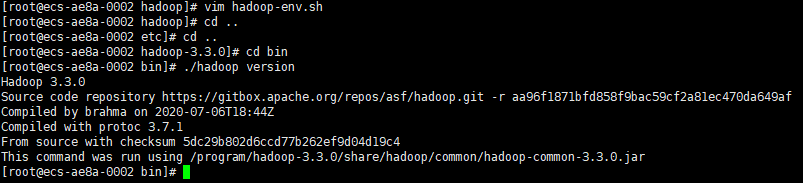

6. Then edit /program/hadoop-3.3.0/etc/hadoop/hadoop-env.sh with vim and find the line:

```shell
# export JAVA_HOME=
```

Remove the leading # and change it to:

```shell
export JAVA_HOME=/usr/java/jdk-15.0.1
```

Save hadoop-env.sh and exit.
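Step 6 can also be scripted, which helps when repeating it on all three servers. This sketch assumes the stock hadoop-env.sh still contains the commented line # export JAVA_HOME= exactly as described above, and relies on GNU sed's in-place -i option (present on CentOS 7); `set_java_home` is a hypothetical name.

```shell
# Hypothetical helper: replace the commented-out JAVA_HOME line in hadoop-env.sh.
# Usage: set_java_home ENV_FILE JDK_DIR
set_java_home() {
  local env_file="$1" jdk="$2"
  # '|' is the sed delimiter because the replacement text contains '/'.
  sed -i "s|^# export JAVA_HOME=.*|export JAVA_HOME=${jdk}|" "$env_file" || return 1
  grep -qx "export JAVA_HOME=${jdk}" "$env_file"   # fail if nothing was replaced
}

# Usage:
# set_java_home /program/hadoop-3.3.0/etc/hadoop/hadoop-env.sh /usr/java/jdk-15.0.1
```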

7. Switch back to /program/hadoop-3.3.0/bin and check the Hadoop version with ./hadoop version:

8. Do the same JAVA_HOME configuration on all 3 servers.

VI. Set up passwordless login among the 3 servers

1. The goal is for the namenode (hadoop01) to log in to the 2 datanode servers (hadoop02, hadoop03) without a password.

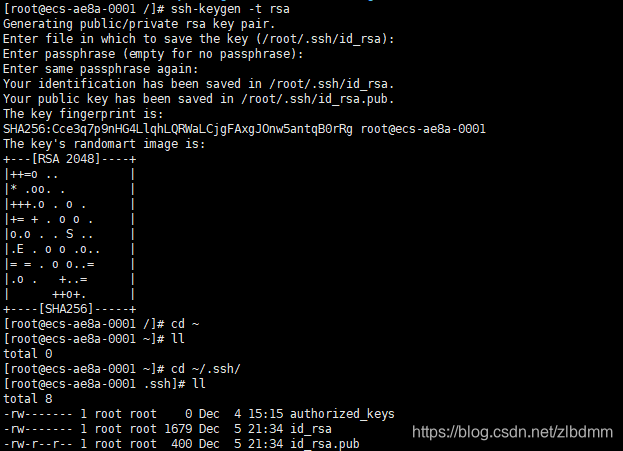

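2. On the namenode (hadoop01), generate a key pair with ssh-keygen -t rsa:

```shell
ssh-keygen -t rsa
```

The process is shown below:

This creates several files under ~/.ssh/, including:

- id_rsa: the private key

- id_rsa.pub: the public key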

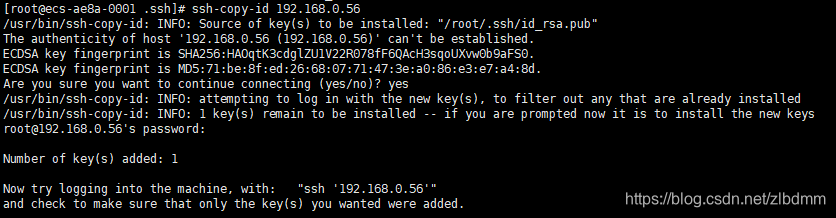

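3. Send the public key to the 2 datanode servers with ssh-copy-id; this asks for the password one more time, as shown below.

192.168.0.56 is hadoop02. To make sure hadoop01 can also ssh into hadoop02 directly by hostname, run this once more on hadoop01:

```shell
ssh-copy-id hadoop02
```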

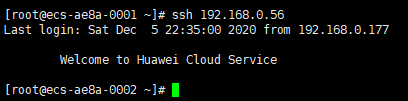
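Key generation and key copying can be scripted together as below. This is a sketch under a few assumptions: it runs as root on hadoop01, the hadoop01 to hadoop03 names resolve via /etc/hosts, and an empty passphrase (ssh-keygen -N "") is acceptable for this cluster. It also copies the key to hadoop01 itself, because start-dfs.sh connects over SSH to every host in the workers file, and hadoop01 is one of them. `setup_passwordless_ssh` is a hypothetical name; setting RUN=echo turns it into a dry run that only prints the commands.

```shell
# Hypothetical helper: one-time passwordless-SSH setup, run as root on hadoop01.
# Set RUN=echo for a dry run that only prints the commands.
setup_passwordless_ssh() {
  local key="${HOME}/.ssh/id_rsa" host
  if [ ! -f "$key" ]; then
    ${RUN:-} mkdir -p -m 700 "${HOME}/.ssh" || return 1
    # -N "": empty passphrase; -f: key file path, so ssh-keygen does not prompt for it
    ${RUN:-} ssh-keygen -t rsa -N "" -f "$key" || return 1
  fi
  for host in hadoop01 hadoop02 hadoop03; do
    # asks for each host's root password once, then appends the public key there
    ${RUN:-} ssh-copy-id "root@${host}" || return 1
  done
}

# Usage:
# RUN=echo setup_passwordless_ssh   # preview the commands
# setup_passwordless_ssh            # run them
# ssh hadoop02 hostname             # should answer without a password prompt
```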

4. Verify with ssh that you can now log in directly, as shown below:

5. Repeat the steps above so that hadoop01 can also log in to hadoop03 without a password.

VII. Hadoop cluster configuration

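1. Namenode address (configured on hadoop01, hadoop02 and hadoop03): set the namenode's IP address.

Open /program/hadoop-3.3.0/etc/hadoop/core-site.xml with vim and make the `<configuration>` element contain the following property:

```xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.0.177:9000</value>
    </property>
</configuration>
```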

Note: all 3 servers need this setting, so you can edit it on hadoop01 first and then copy core-site.xml to the other 2 servers.
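Once core-site.xml is in place on a node, you can ask Hadoop which value it actually picked up: hdfs getconf -confKey KEY prints the effective value of a configuration key. The hypothetical wrapper `expect_conf` compares that value with the one you intended; HDFS_BIN defaults to the install path used in this article and is an assumption you may need to adjust.

```shell
# Hypothetical wrapper: compare a key's effective value with the expected one.
# HDFS_BIN defaults to this article's install path; override it if yours differs.
HDFS_BIN="${HDFS_BIN:-/program/hadoop-3.3.0/bin/hdfs}"

expect_conf() {
  local key="$1" expected="$2" actual
  actual=$("$HDFS_BIN" getconf -confKey "$key") || return 1
  if [ "$actual" = "$expected" ]; then
    echo "OK   $key = $actual"
  else
    echo "DIFF $key = $actual (expected $expected)"
    return 1
  fi
}

# Usage on each node:
# expect_conf fs.defaultFS hdfs://192.168.0.177:9000
```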

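2. Datanode configuration (hadoop01, hadoop02, hadoop03): set the data storage directory.

Open /program/hadoop-3.3.0/etc/hadoop/hdfs-site.xml with vim and make the `<configuration>` element contain the following property:

```xml
<configuration>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>/home/data</value>
    </property>
</configuration>
```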

Note: you can finish editing on hadoop01 first and then copy hdfs-site.xml to the other 2 servers.
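The copy mentioned in both notes can be done from hadoop01 with scp once passwordless SSH (section VI) is in place. A minimal sketch, assuming the same /program/hadoop-3.3.0 layout on every node; `push_conf` is a hypothetical name, and SCP=echo gives a dry run.

```shell
# Hypothetical helper: copy the edited config files from hadoop01 to the other nodes.
# Assumes passwordless SSH and the same install path on every server.
HADOOP_CONF=/program/hadoop-3.3.0/etc/hadoop

push_conf() {
  local scp_cmd="${SCP:-scp}"   # SCP=echo prints the commands instead of copying
  local host f
  for host in hadoop02 hadoop03; do
    for f in core-site.xml hdfs-site.xml; do
      "$scp_cmd" "${HADOOP_CONF}/${f}" "root@${host}:${HADOOP_CONF}/" || return 1
    done
  done
}

# Usage on hadoop01:
# SCP=echo push_conf   # preview
# push_conf
```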

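VIII. Initialize the Hadoop cluster

First log in to hadoop01 and change to /program/hadoop-3.3.0/bin/.

Run the following command to initialize (format) the cluster. I appended a name, csdn, after -format:

```shell
./hdfs namenode -format csdn
```

As the log below shows, however, the cluster ID is still generated automatically (Formatting using clusterid: CID-6fd94f42-31a1-4fb3-933b-de6e91ba3bfb), so the extra word does not become the cluster ID. To choose the ID yourself, hdfs namenode -format accepts `-clusterid <cid>`.

The execution log is as follows: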

```
2020-12-06 22:04:04,536 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = ecs-ae8a-0001/192.168.0.177
STARTUP_MSG: args = [-format, csdn]
STARTUP_MSG: version = 3.3.0
STARTUP_MSG: classpath = /program/hadoop-3.3.0/etc/hadoop:/program/hadoop-3.3.0/share/hadoop/common/lib/kerby-util-1.0.1.jar:...:/program/hadoop-3.3.0/share/hadoop/yarn/hadoop-yarn-common-3.3.0.jar
STARTUP_MSG: build = https://gitbox.apache.org/repos/asf/hadoop.git -r aa96f1871bfd858f9bac59cf2a81ec470da649af; compiled by 'brahma' on 2020-07-06T18:44Z
STARTUP_MSG: java = 15.0.1
************************************************************/
2020-12-06 22:04:04,544 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
2020-12-06 22:04:04,613 INFO namenode.NameNode: createNameNode [-format, csdn]
2020-12-06 22:04:04,949 INFO namenode.NameNode: Formatting using clusterid: CID-6fd94f42-31a1-4fb3-933b-de6e91ba3bfb
2020-12-06 22:04:04,967 INFO namenode.FSEditLog: Edit logging is async:true
2020-12-06 22:04:04,989 INFO namenode.FSNamesystem: KeyProvider: null
2020-12-06 22:04:04,990 INFO namenode.FSNamesystem: fsLock is fair: true
2020-12-06 22:04:04,990 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false
2020-12-06 22:04:05,018 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
2020-12-06 22:04:05,018 INFO namenode.FSNamesystem: supergroup = supergroup
2020-12-06 22:04:05,018 INFO namenode.FSNamesystem: isPermissionEnabled = true
2020-12-06 22:04:05,018 INFO namenode.FSNamesystem: isStoragePolicyEnabled = true
2020-12-06 22:04:05,018 INFO namenode.FSNamesystem: HA Enabled: false
2020-12-06 22:04:05,048 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling
2020-12-06 22:04:05,055 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000
2020-12-06 22:04:05,055 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
2020-12-06 22:04:05,057 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
2020-12-06 22:04:05,057 INFO blockmanagement.BlockManager: The block deletion will start around 2020 Dec 06 22:04:05
2020-12-06 22:04:05,058 INFO util.GSet: Computing capacity for map BlocksMap
2020-12-06 22:04:05,058 INFO util.GSet: VM type = 64-bit
2020-12-06 22:04:05,059 INFO util.GSet: 2.0% max memory 1.9 GB = 39.1 MB
2020-12-06 22:04:05,059 INFO util.GSet: capacity = 2^22 = 4194304 entries
2020-12-06 22:04:05,069 INFO blockmanagement.BlockManager: Storage policy satisfier is disabled
2020-12-06 22:04:05,069 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false
2020-12-06 22:04:05,073 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.999
2020-12-06 22:04:05,073 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0
2020-12-06 22:04:05,073 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000
2020-12-06 22:04:05,073 INFO blockmanagement.BlockManager: defaultReplication = 3
2020-12-06 22:04:05,073 INFO blockmanagement.BlockManager: maxReplication = 512
2020-12-06 22:04:05,074 INFO blockmanagement.BlockManager: minReplication = 1
2020-12-06 22:04:05,074 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
2020-12-06 22:04:05,074 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms
2020-12-06 22:04:05,074 INFO blockmanagement.BlockManager: encryptDataTransfer = false
2020-12-06 22:04:05,074 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
2020-12-06 22:04:05,089 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911
2020-12-06 22:04:05,089 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215
2020-12-06 22:04:05,089 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215
2020-12-06 22:04:05,090 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215
2020-12-06 22:04:05,098 INFO util.GSet: Computing capacity for map INodeMap
2020-12-06 22:04:05,098 INFO util.GSet: VM type = 64-bit
2020-12-06 22:04:05,098 INFO util.GSet: 1.0% max memory 1.9 GB = 19.6 MB
2020-12-06 22:04:05,098 INFO util.GSet: capacity = 2^21 = 2097152 entries
2020-12-06 22:04:05,101 INFO namenode.FSDirectory: ACLs enabled? true
2020-12-06 22:04:05,101 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true
2020-12-06 22:04:05,101 INFO namenode.FSDirectory: XAttrs enabled? true
2020-12-06 22:04:05,101 INFO namenode.NameNode: Caching file names occurring more than 10 times
2020-12-06 22:04:05,104 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536
2020-12-06 22:04:05,106 INFO snapshot.SnapshotManager: SkipList is disabled
2020-12-06 22:04:05,108 INFO util.GSet: Computing capacity for map cachedBlocks
2020-12-06 22:04:05,108 INFO util.GSet: VM type = 64-bit
2020-12-06 22:04:05,109 INFO util.GSet: 0.25% max memory 1.9 GB = 4.9 MB
2020-12-06 22:04:05,109 INFO util.GSet: capacity = 2^19 = 524288 entries
2020-12-06 22:04:05,115 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
2020-12-06 22:04:05,115 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
2020-12-06 22:04:05,115 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
2020-12-06 22:04:05,118 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
2020-12-06 22:04:05,118 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
2020-12-06 22:04:05,119 INFO util.GSet: Computing capacity for map NameNodeRetryCache
2020-12-06 22:04:05,119 INFO util.GSet: VM type = 64-bit
2020-12-06 22:04:05,119 INFO util.GSet: 0.029999999329447746% max memory 1.9 GB = 600.9 KB
2020-12-06 22:04:05,119 INFO util.GSet: capacity = 2^16 = 65536 entries
2020-12-06 22:04:05,135 INFO namenode.FSImage: Allocated new BlockPoolId: BP-549884843-192.168.0.177-1607263445130
2020-12-06 22:04:05,149 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted.
2020-12-06 22:04:05,167 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
2020-12-06 22:04:05,240 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .
2020-12-06 22:04:05,251 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2020-12-06 22:04:05,255 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.
2020-12-06 22:04:05,255 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at ecs-ae8a-0001/192.168.0.177
************************************************************/
```
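If you save the format output to a file, the two lines that matter can be pulled out automatically: the generated cluster ID and the "has been successfully formatted" confirmation. The sketch below is a hypothetical helper reading the log on stdin; for the log above it would print CID-6fd94f42-31a1-4fb3-933b-de6e91ba3bfb. Note also that the log places the NameNode metadata under /tmp/hadoop-root/dfs/name, the default derived from hadoop.tmp.dir, so anything that cleans /tmp affects it.

```shell
# Hypothetical helper: read `hdfs namenode -format` output on stdin, print the
# generated cluster ID, and fail if the "successfully formatted" line is missing.
parse_format_log() {
  local log cid
  log=$(cat)
  printf '%s\n' "$log" | grep -q 'has been successfully formatted' || return 1
  cid=$(printf '%s\n' "$log" | grep -o 'Formatting using clusterid: CID-[0-9a-f-]*' | head -n 1)
  [ -n "$cid" ] || return 1
  printf '%s\n' "${cid##* }"    # keep only the CID-... token
}

# Usage in /program/hadoop-3.3.0/bin on hadoop01 (log lines go to stderr, hence 2>&1):
# ./hdfs namenode -format 2>&1 | tee format.log
# parse_format_log < format.log
```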

IX. Start the Hadoop cluster

1. To start all nodes from one place, edit /program/hadoop-3.3.0/etc/hadoop/workers on hadoop01 so that it contains:

```
hadoop01
hadoop02
hadoop03
```

Note: make sure the IP mappings are in every server's /etc/hosts and that the servers can ping one another.
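Before running start-dfs.sh, it can help to confirm that every host named in the workers file has an uncommented entry in /etc/hosts. This is a text-only sketch (the helper `missing_from_hosts` is hypothetical): it does not test reachability, so still ping the hosts as the note says.

```shell
# Hypothetical helper: print each host in the workers file that has no uncommented
# entry in the hosts file; returns 1 if any are missing.
# Usage: missing_from_hosts WORKERS_FILE [HOSTS_FILE]
missing_from_hosts() {
  local workers="$1" hosts="${2:-/etc/hosts}"
  awk -v hf="$hosts" '
    FILENAME == hf {                          # first file: collect mapped names
      if ($0 !~ /^[ \t]*#/) for (i = 2; i <= NF; i++) mapped[$i] = 1
      next
    }
    NF && $1 !~ /^#/ && !($1 in mapped) { print $1; missing = 1 }
    END { exit missing ? 1 : 0 }
  ' "$hosts" "$workers"
}

# Usage on hadoop01:
# missing_from_hosts /program/hadoop-3.3.0/etc/hadoop/workers
```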

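Change to /program/hadoop-3.3.0/sbin/.

2. Run ./start-dfs.sh. It reports an error, shown below: in Hadoop 3 the start scripts refuse to launch the HDFS daemons as root unless the matching HDFS_*_USER variables are defined.

3. To fix the error, edit /program/hadoop-3.3.0/etc/hadoop/hadoop-env.sh.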

After export JAVA_HOME=/usr/java/jdk-15.0.1, add these 3 lines:

```shell
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
```

Then save and exit.
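The three exports can also be added by a script that is safe to run more than once: it only appends a line if that variable is not already exported. Appending at the end of hadoop-env.sh rather than right after JAVA_HOME should make no difference, since the file is sourced as a shell script. `add_hdfs_users` is a hypothetical name.

```shell
# Hypothetical helper: append the HDFS_*_USER exports to hadoop-env.sh if absent.
# Usage: add_hdfs_users ENV_FILE [USER]
add_hdfs_users() {
  local env_file="$1" user="${2:-root}" var
  for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER; do
    # only append when no "export VAR=" line exists yet, so reruns are harmless
    grep -q "^export ${var}=" "$env_file" || echo "export ${var}=${user}" >> "$env_file"
  done
}

# Usage on each server:
# add_hdfs_users /program/hadoop-3.3.0/etc/hadoop/hadoop-env.sh
```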

4. Run ./start-dfs.sh again, as shown below:

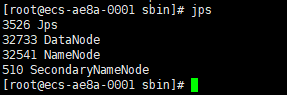

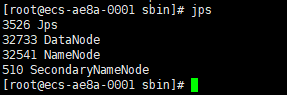

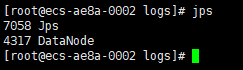

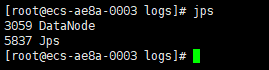

5. Use jps to check that the daemons started; the output on the three servers is shown below:

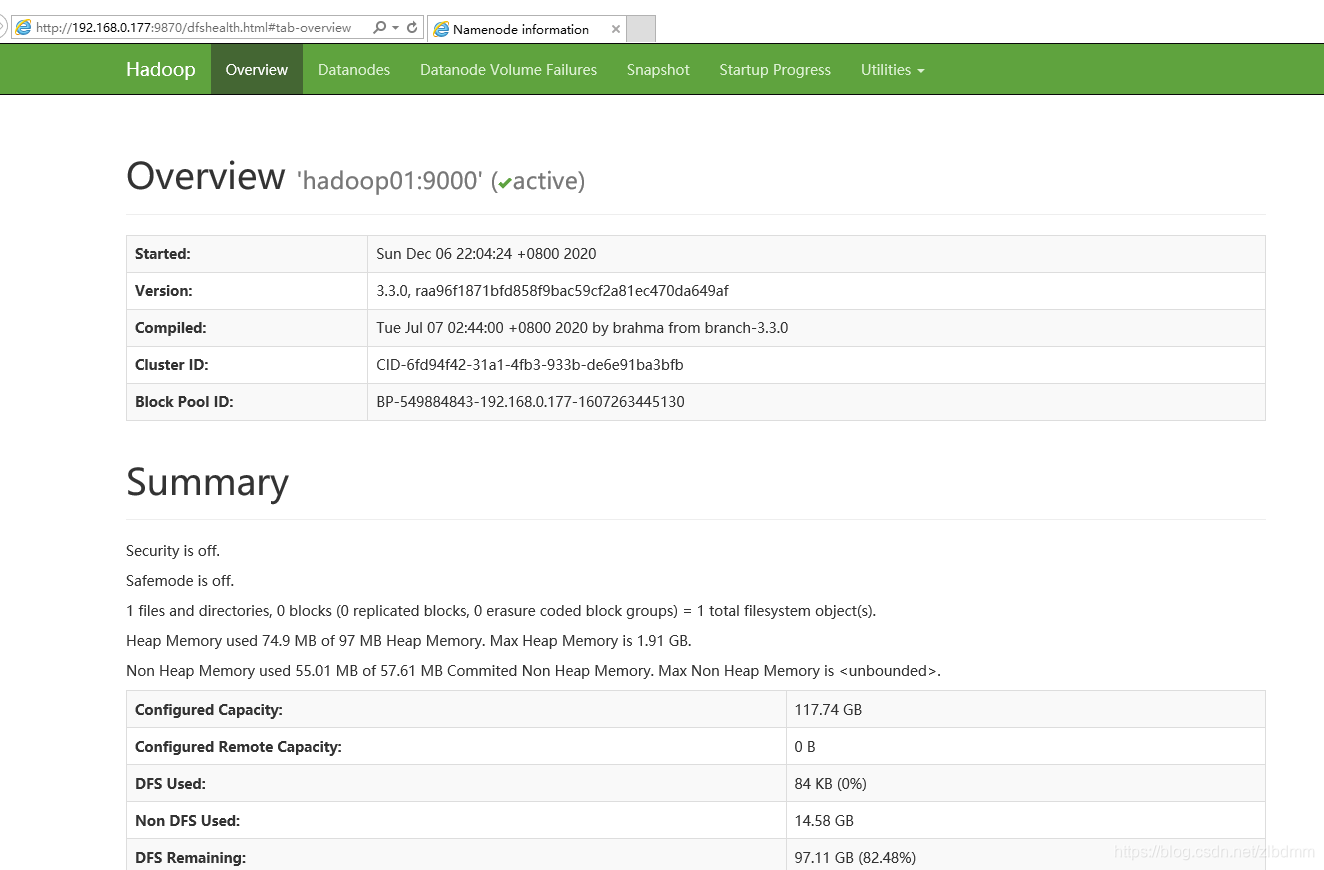

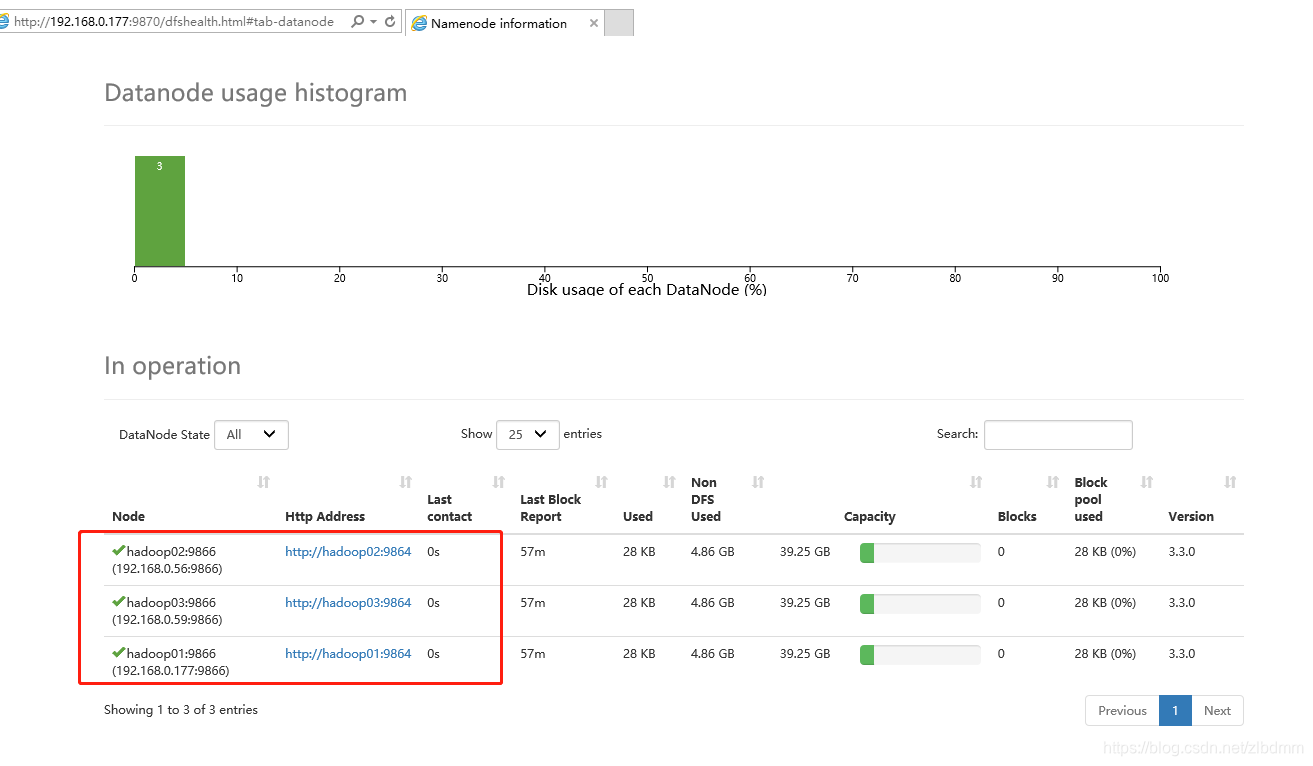
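Reading three jps listings by eye is error-prone, so here is a small hypothetical checker that reads jps output on stdin and reports any expected daemon that is missing. The expected sets are my assumption for this layout: NameNode, SecondaryNameNode and DataNode on hadoop01 (by default start-dfs.sh starts the SecondaryNameNode on the host it is run from), and DataNode on hadoop02 and hadoop03.

```shell
# Hypothetical helper: read `jps` output on stdin and check that every daemon
# named in the arguments is running; prints the missing ones and returns 1.
has_daemons() {
  local listing name rc=0
  listing=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClassName>"; compare the second column exactly
    if ! printf '%s\n' "$listing" | awk -v n="$name" '$2 == n { f = 1 } END { exit f ? 0 : 1 }'; then
      echo "missing: $name"
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
# jps | has_daemons NameNode SecondaryNameNode DataNode   # on hadoop01
# jps | has_daemons DataNode                              # on hadoop02 and hadoop03
```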

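6. View the cluster in the Hadoop web UI. I used a browser on another (Windows) Huawei Cloud ECS instance; from your own computer you need the server's public IP. Enter http://192.168.0.177:9870/ in the address bar: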

7. Click Datanodes in the top menu:
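The same check is available from the command line: hdfs dfsadmin -report prints a "Live datanodes (N):" header, so a one-line filter can tell whether all DataNodes registered. `live_datanodes` is a hypothetical name; for this cluster the expected value is 3, and a result of 1 is the symptom described in the next section.

```shell
# Hypothetical helper: extract N from the "Live datanodes (N):" line of
# `hdfs dfsadmin -report` output read on stdin.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9]*\)):.*/\1/p'
}

# Usage on hadoop01:
# /program/hadoop-3.3.0/bin/hdfs dfsadmin -report | live_datanodes
```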

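X. Hadoop cluster pitfall #1 and how I fixed it

Since this was my first attempt, some problems were inevitable. The key one: everything started normally, but the Hadoop web UI showed only 1 datanode. After some analysis I found that all the datanodes had the same clusterID value (recorded in /home/data/current/VERSION). I reformatted several times, including the fix commonly suggested online of deleting the contents of /tmp/ and /home/data/ on all 3 servers before re-initializing, but it still did not work. In the end, editing /etc/hosts and then formatting again solved it.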

On all 3 servers, comment out the following line in /etc/hosts with #; otherwise all datanodes end up with the same clusterID when the cluster starts, and the Hadoop web UI shows only one datanode.

ecs-ae8a-0001

```
#127.0.0.1 ecs-ae8a-0001 ecs-ae8a-0001
```

ecs-ae8a-0002

```
#127.0.0.1 ecs-ae8a-0002 ecs-ae8a-0002
```

ecs-ae8a-0003

```
#127.0.0.1 ecs-ae8a-0003 ecs-ae8a-0003
```
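To see what is actually recorded on each node, read the VERSION files directly. One point worth checking against the diagnosis above: HDFS normally expects every DataNode to share the NameNode's clusterID (a mismatch makes the DataNode fail with an "Incompatible clusterIDs" error), whereas datanodeUuid should differ from node to node. Printing both fields for all three servers is therefore a quick first check. The helper `version_field` is hypothetical; the paths are the ones used in this article (/home/data for DataNodes, /tmp/hadoop-root/dfs/name for the NameNode, as shown in the format log).

```shell
# Hypothetical helper: print the value of KEY from a Hadoop storage VERSION file
# (plain key=value lines) read on stdin.
version_field() {
  sed -n "s/^$1=//p"
}

# Usage on hadoop01 (assumes passwordless SSH from section VI):
# version_field clusterID < /tmp/hadoop-root/dfs/name/current/VERSION
# for h in hadoop01 hadoop02 hadoop03; do
#   v=$(ssh "$h" cat /home/data/current/VERSION)
#   echo "$h clusterID=$(printf '%s\n' "$v" | version_field clusterID)" \
#        "datanodeUuid=$(printf '%s\n' "$v" | version_field datanodeUuid)"
# done
```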

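Conclusion

That completes a simple Hadoop cluster setup. I hope it serves as a useful reference for beginners. Finally, thanks to the CSDN big data instructors; learning with someone guiding you is much faster. If you found this helpful, please give it a like~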


- Original article: https://blog.csdn.net/m0_67392182/article/details/126565628