
Setting up the HugeGraph graph database on Ubuntu 22 with JDK 8 (x64)


Environment

    uname -a
    # Linux whiltez 5.15.0-46-generic #49-Ubuntu SMP Thu Aug 4 18:03:25 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux
    which javac
    # /adoptopen-jdk8u332-b09/bin/javac
    which java
    # /adoptopen-jdk8u332-b09/bin/java

0. Download and unpack

    cd ~                             # cd /home/z/
    mkdir hugegraph; cd hugegraph    # pwd: /home/z/hugegraph
    wget https://github.com/hugegraph/hugegraph/releases/download/v0.12.0/hugegraph-0.12.0.tar.gz
    wget https://github.com/hugegraph/hugegraph-hubble/releases/download/v1.6.0/hugegraph-hubble-1.6.0.tar.gz
    tar -zxvf hugegraph-0.12.0.tar.gz
    tar -zxvf hugegraph-hubble-1.6.0.tar.gz
    tree -L 2 .
    # .
    # ├── hugegraph-0.12.0
    # │   ├── bin
    # │   ├── conf
    # │   ├── ext
    # │   ├── lib
    # │   ├── logs
    # │   ├── plugins
    # │   ├── rocksdb-data
    # │   └── scripts
    # ├── hugegraph-hubble-1.6.0
    # │   ├── bin
    # │   ├── conf
    # │   ├── db.mv.db
    # │   ├── lib
    # │   ├── LICENSE
    # │   ├── logs
    # │   ├── README.md
    # │   └── ui

1. Start the HugeGraph server

    cd ~/hugegraph/hugegraph-0.12.0/

1.a The default backend is the embedded RocksDB store; it can be changed to MySQL

The configuration file is conf/graphs/hugegraph.properties:

~/hugegraph/hugegraph-0.12.0/conf/graphs/hugegraph.properties
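Switching the backend comes down to a handful of keys in that file. The following is only a sketch on a scratch copy: the key names (`backend`, `serializer`, `jdbc.*`) are the ones I understand the HugeGraph configuration reference to use, the two "default" lines written into the scratch file are an assumption about what the shipped file contains, and the JDBC URL, user and password are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: switch a HugeGraph graph config from RocksDB to MySQL.
# Works on a scratch copy; point CONF at conf/graphs/hugegraph.properties
# only once the result looks right.
set -eu

CONF="$(mktemp)"
# Assumed defaults of the shipped file (only the relevant keys).
printf 'backend=rocksdb\nserializer=binary\nstore=hugegraph\n' > "$CONF"

# Switch backend and serializer in place.
sed -i 's/^backend=.*/backend=mysql/; s/^serializer=.*/serializer=mysql/' "$CONF"

# Append JDBC settings; host, user and password are placeholders.
cat >> "$CONF" <<'EOF'
jdbc.driver=com.mysql.jdbc.Driver
jdbc.url=jdbc:mysql://127.0.0.1:3306
jdbc.username=root
jdbc.password=CHANGE_ME
EOF

cat "$CONF"
```

After changing the backend, the store has to be initialized again (step 1.1) before the server is started.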

1.b Optionally make the web REST service reachable from other hosts:

    # find ~/hugegraph/hugegraph-0.12.0/conf -type f | xargs -I@ grep -Hn 127 @
    sed -i "s/restserver.url=http:\/\/127.0.0.1:8080/restserver.url=http:\/\/0.0.0.0:8080/g" conf/rest-server.properties
    # Equivalent, using | as the sed delimiter so the slashes need no escaping:
    # sed -i "s|restserver.url=http://127.0.0.1:8080|restserver.url=http://0.0.0.0:8080|g" conf/rest-server.properties
    # File: ~/hugegraph/hugegraph-0.12.0/conf/rest-server.properties
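The sed command in 1.b only works while the old value is exactly `http://127.0.0.1:8080`. As an alternative, here is a small sketch of a helper that sets a key in a `.properties` file regardless of its current value. `set_prop` is a hypothetical helper, not part of HugeGraph, and the scratch file only stands in for conf/rest-server.properties.

```shell
#!/usr/bin/env bash
# Sketch: set KEY=VALUE in a Java-style .properties file, whatever the old value was.
set -eu

set_prop() {
  # usage: set_prop FILE KEY VALUE
  _file=$1; _key=$2; _value=$3
  _tmp="$(mktemp)"
  awk -F= -v k="$_key" -v v="$_value" '
    $1 == k { print k "=" v; found = 1; next }   # replace the existing line
    { print }                                     # keep everything else
    END { if (!found) print k "=" v }             # append if the key was missing
  ' "$_file" > "$_tmp"
  cat "$_tmp" > "$_file"    # overwrite in place so the original permissions stay
  rm -f "$_tmp"
}

# Demo on a scratch file standing in for conf/rest-server.properties.
DEMO="$(mktemp)"
printf 'restserver.url=http://127.0.0.1:8080\ngraphs=./conf/graphs\n' > "$DEMO"
set_prop "$DEMO" restserver.url http://0.0.0.0:8080
cat "$DEMO"
```

Commented-out lines such as `#restserver.url=...` are left alone, because the key is compared against the whole text before the first `=`.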

1.c Optionally make gremlin-server (port 8182) listen on any IP

    sed -i "s/#host: 127.0.0.1/host: 0.0.0.0/" conf/gremlin-server.yaml
    # File: ~/hugegraph/hugegraph-0.12.0/conf/gremlin-server.yaml
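The pattern in 1.c matches only if the line reads exactly `#host: 127.0.0.1`. A slightly more tolerant sketch is shown here: it accepts a commented or uncommented top-level `host:` line with any value. The scratch file content is made up for the demo and is not copied from the shipped gremlin-server.yaml.

```shell
#!/usr/bin/env bash
# Sketch: force the top-level "host:" key of a YAML file to 0.0.0.0,
# whether the line is commented out ("#host:", "# host:") or already active.
set -eu

YAML="$(mktemp)"
printf '# host: 127.0.0.1\n#port: 8182\n' > "$YAML"   # demo content (assumed)

# Only lines that START with "host:" or "#...host:" are touched,
# so indented (nested) keys named "host" are left alone.
sed -i -E 's/^(#[[:space:]]*)?host:.*/host: 0.0.0.0/' "$YAML"

grep -n '^host:' "$YAML"
```

After restarting the server, `netstat -lntp | grep 8182` should show the port bound to a wildcard address rather than to 127.0.0.1.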

Note: in theory, HugeGraph's gremlin-server on port 8182 can be reached by the TinkerPop Gremlin client 'org.apache.tinkerpop:gremlin-driver:3.6.1', because it is a service that conforms to the Gremlin specification. In practice the two differ slightly. For example, part of the following code fails:

    import org.apache.tinkerpop.gremlin.driver.remote.DriverRemoteConnection;
    import org.apache.tinkerpop.gremlin.process.traversal.dsl.graph.GraphTraversal;
    import org.apache.tinkerpop.gremlin.process.traversal.dsl.graph.GraphTraversalSource;
    import org.apache.tinkerpop.gremlin.structure.Vertex;

    import java.util.Map;

    import static org.apache.tinkerpop.gremlin.process.traversal.AnonymousTraversalSource.traversal;

    public class Test2 {
        public static void main(String[] args){
            GraphTraversalSource g = traversal().withRemote(DriverRemoteConnection.using("192.168.0.3",8182,"g"));
            GraphTraversal<Vertex, Map<Object, Object>> list = g.V().elementMap();
            test2(g);
            try {
                g.close();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        public static void test2(GraphTraversalSource g){
            Long vertexCnt = g.V().count().next();
            System.out.println("vertexCnt:"+vertexCnt);
        }
    }

The line "Long vertexCnt = g.V().count().next();" fails with the following error:

    Exception in thread "main" java.util.concurrent.CompletionException: org.apache.tinkerpop.gremlin.driver.exception.ResponseException: The traversal source [g] for alias [g] is not configured on the server.
        at java.util.concurrent.CompletableFuture.reportJoin(CompletableFuture.java:375)
        at java.util.concurrent.CompletableFuture.join(CompletableFuture.java:1934)
        at org.apache.tinkerpop.gremlin.driver.ResultSet.one(ResultSet.java:123)
        at org.apache.tinkerpop.gremlin.driver.ResultSet$1.hasNext(ResultSet.java:175)
        at org.apache.tinkerpop.gremlin.driver.ResultSet$1.next(ResultSet.java:182)
        at org.apache.tinkerpop.gremlin.driver.ResultSet$1.next(ResultSet.java:169)
        at org.apache.tinkerpop.gremlin.driver.remote.DriverRemoteTraversal$TraverserIterator.next(DriverRemoteTraversal.java:115)
        at org.apache.tinkerpop.gremlin.driver.remote.DriverRemoteTraversal$TraverserIterator.next(DriverRemoteTraversal.java:100)
        at org.apache.tinkerpop.gremlin.driver.remote.DriverRemoteTraversal.nextTraverser(DriverRemoteTraversal.java:92)
        at org.apache.tinkerpop.gremlin.process.remote.traversal.step.map.RemoteStep.processNextStart(RemoteStep.java:80)
        at org.apache.tinkerpop.gremlin.process.traversal.step.util.AbstractStep.next(AbstractStep.java:135)
        at org.apache.tinkerpop.gremlin.process.traversal.step.util.AbstractStep.next(AbstractStep.java:40)
        at org.apache.tinkerpop.gremlin.process.traversal.util.DefaultTraversal.next(DefaultTraversal.java:249)
        at Test2.test2(Test2.java:28)
        at Test2.main(Test2.java:20)
    Caused by: org.apache.tinkerpop.gremlin.driver.exception.ResponseException: The traversal source [g] for alias [g] is not configured on the server.
        at org.apache.tinkerpop.gremlin.driver.Handler$GremlinResponseHandler.channelRead0(Handler.java:245)
        at org.apache.tinkerpop.gremlin.driver.Handler$GremlinResponseHandler.channelRead0(Handler.java:200)
        at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:99)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
        at org.apache.tinkerpop.gremlin.driver.Handler$GremlinSaslAuthenticationHandler.channelRead0(Handler.java:126)
        at org.apache.tinkerpop.gremlin.driver.Handler$GremlinSaslAuthenticationHandler.channelRead0(Handler.java:68)
        at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:99)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
        at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
        at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
        at io.netty.channel.ChannelInboundHandlerAdapter.channelRead(ChannelInboundHandlerAdapter.java:93)
        at io.netty.handler.codec.http.websocketx.Utf8FrameValidator.channelRead(Utf8FrameValidator.java:89)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
        at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
        at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:327)
        at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:299)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
        at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
        at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
        at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)
        at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166)
        at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:722)
        at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:658)
        at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:584)
        at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:496)
        at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:995)
        at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)
        at java.lang.Thread.run(Thread.java:745)
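The message says that no traversal source named `g` is registered on the server. As far as I can tell from the HugeGraph REST documentation, its Gremlin endpoint (`POST /gremlin`) maps the script-level name `g` to a server-side traversal source called `__g_hugegraph` through an `aliases` field, which suggests the source is registered under that name rather than `g`. The sketch below only builds such a request body. `gremlin_body` is a hypothetical helper, and whether passing `__g_hugegraph` as the third argument of `DriverRemoteConnection.using(...)` would also fix the Java client is an untested assumption.

```shell
#!/usr/bin/env bash
# Sketch: build the JSON body for HugeGraph's REST Gremlin endpoint,
# aliasing the script-level name "g" to the server-side traversal source.
set -eu

GRAPH="hugegraph"            # graph name, as in conf/graphs/hugegraph.properties
TRAVERSAL="__g_${GRAPH}"     # assumed name of the server-side traversal source

gremlin_body() {
  # $1: Gremlin script. It must not contain double quotes or backslashes,
  # because this sketch does no JSON escaping.
  printf '{"gremlin":"%s","bindings":{},"language":"gremlin-groovy","aliases":{"graph":"%s","g":"%s"}}' \
    "$1" "$GRAPH" "$TRAVERSAL"
}

BODY="$(gremlin_body 'g.V().count()')"
echo "$BODY"

# To send it to a running server (host and port as configured in step 1.b):
# curl -s -H 'Content-Type: application/json' -d "$BODY" http://localhost:8080/gremlin
```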

1.1 Initialize the HugeGraph store

    ./bin/init-store.sh    # ~/hugegraph/hugegraph-0.12.0/bin/init-store.sh
    # After this runs, a new rocksdb-data directory appears:
    tree -L 2 ~/hugegraph/hugegraph-0.12.0/rocksdb-data/
    # rocksdb-data/
    # ├── g
    # │   ├── 000006.log
    # │   ├── CURRENT
    # │   ├── IDENTITY
    # │   ├── LOCK
    # │   ├── LOG
    # │   ├── LOG.old.1661579557252372
    # │   ├── MANIFEST-000005
    # │   ├── OPTIONS-000008
    # │   └── OPTIONS-000010
    # ├── m
    # │   ├── 000006.log
    # │   ├── CURRENT
    # │   ├── IDENTITY
    # │   ├── LOCK
    # │   ├── LOG
    # │   ├── LOG.old.1661579555337140
    # │   ├── MANIFEST-000005
    # │   ├── OPTIONS-000008
    # │   └── OPTIONS-000010
    # └── s
    #     ├── 000006.log
    #     ├── CURRENT
    #     ├── IDENTITY
    #     ├── LOCK
    #     ├── LOG
    #     ├── LOG.old.1661579555688062
    #     ├── MANIFEST-000005
    #     ├── OPTIONS-000008
    #     └── OPTIONS-000010

1.2 Start the HugeGraph server

    ./bin/start-hugegraph.sh    # ~/hugegraph/hugegraph-0.12.0/bin/start-hugegraph.sh
    jps
    # 35673 HugeGraphServer
    # HugeGraph web REST API:
    wget http://localhost:8080
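To confirm that the server really is listening (8080 for REST, 8182 for gremlin-server), a small polling helper can be handy in scripts. This is a sketch only: `wait_for_port` is a hypothetical helper, and it relies on bash's `/dev/tcp` pseudo-device, so it needs bash rather than a plain POSIX sh.

```shell
#!/usr/bin/env bash
# Sketch: poll a TCP port until it accepts connections (uses bash's /dev/tcp).
wait_for_port() {
  # usage: wait_for_port HOST PORT [ATTEMPTS] [DELAY_SECONDS]
  _host=$1; _port=$2; _attempts=${3:-30}; _delay=${4:-1}
  _i=1
  while [ "$_i" -le "$_attempts" ]; do
    # The subshell opens the connection and drops it again on exit.
    if (exec 3<>"/dev/tcp/${_host}/${_port}") 2>/dev/null; then
      return 0
    fi
    sleep "$_delay"
    _i=$((_i + 1))
  done
  return 1
}

# Example: give the REST server up to 30 seconds after start-hugegraph.sh.
# wait_for_port localhost 8080 30 1 && echo "REST server is up"
```

The function returns 0 as soon as a connection succeeds and 1 once all attempts are used up, so it can be chained with `&&` in a start script.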

2. Start hugegraph-hubble (the web UI)

    cd ~/hugegraph/hugegraph-hubble-1.6.0/

2.1 Allow access to the web UI from other hosts

    # find conf -type f | xargs -I@ grep -Hn localhost @
    sed -i "s/server.host=localhost/server.host=0.0.0.0/g" conf/hugegraph-hubble.properties
    # File: ~/hugegraph/hugegraph-hubble-1.6.0/conf/hugegraph-hubble.properties

2.2 Start the hugegraph-hubble web service

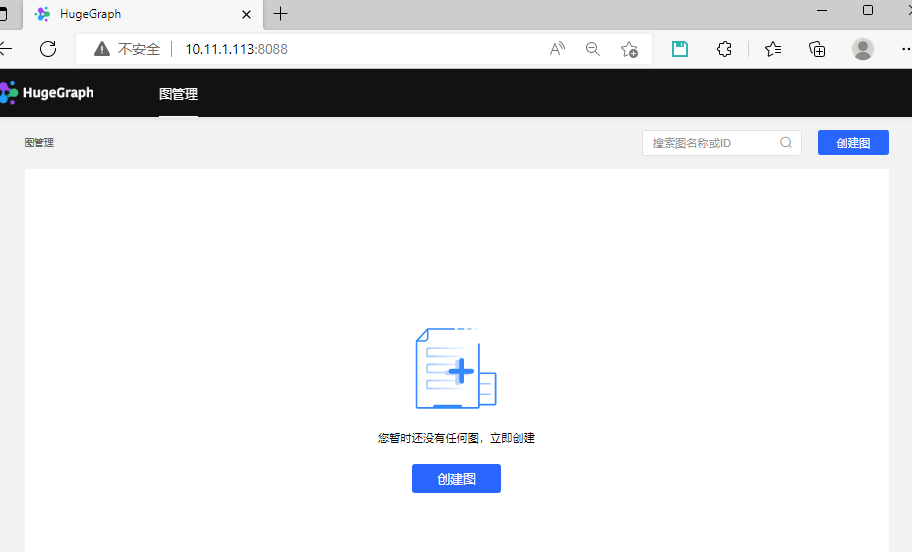

    ./bin/start-hubble.sh    # ~/hugegraph/hugegraph-hubble-1.6.0/bin/start-hubble.sh
    jps
    # 36640 HugeGraphHubble
    # 35673 HugeGraphServer
    sudo netstat -lntp | grep java
    # tcp6  0  0  :::8088          :::*  LISTEN  36640/java
    # tcp6  0  0  :::8080          :::*  LISTEN  35673/java
    # tcp6  0  0  127.0.0.1:8182   :::*  LISTEN  35673/java
    # (8182 is bound to 127.0.0.1 in this capture, so it was presumably taken before step 1.c was applied)
    # Open the HugeGraph web UI in a browser: http://10.11.1.113:8088/

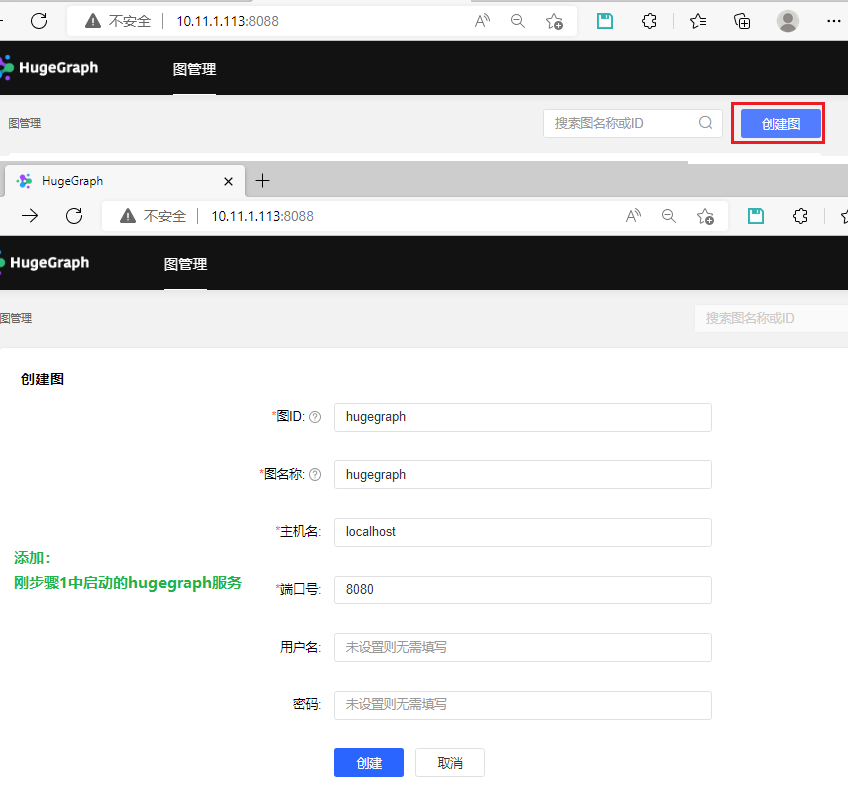

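Creating a graph in the web UI

Note: at this point the Hubble web UI does not know about the local HugeGraph REST service on port 8080, so the local service has to be added in the web UI:

In the web UI, click "Create graph".

HugeGraph's client-language support seems rather weak (there is only a Java client, with few users, and no official Python client), so I gave up on HugeGraph and moved to JanusGraph.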


- Original article: https://blog.csdn.net/hfcaoguilin/article/details/126557211