Kubernetes: Quickly Deploying a k8s Cluster with Ansible Automation

I. Install Ansible

Use the official EPEL repository:

    yum install -y epel-release

Or use the Aliyun mirror:

    cat >/etc/yum.repos.d/epel-7.repo <<'EOF'
    [epel]
    name=Extra Packages for Enterprise Linux 7 - $basearch
    baseurl=http://mirrors.aliyun.com/epel/7/$basearch
    failovermethod=priority
    enabled=1
    gpgcheck=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

    [epel-debuginfo]
    name=Extra Packages for Enterprise Linux 7 - $basearch - Debug
    baseurl=http://mirrors.aliyun.com/epel/7/$basearch/debug
    failovermethod=priority
    enabled=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    gpgcheck=0

    [epel-source]
    name=Extra Packages for Enterprise Linux 7 - $basearch - Source
    baseurl=http://mirrors.aliyun.com/epel/7/SRPMS
    failovermethod=priority
    enabled=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    gpgcheck=0
    EOF

The heredoc delimiter is quoted ('EOF') so that the shell writes $basearch literally and leaves it for yum to expand.

Install Ansible:

    yum -y install ansible

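II. Prepare the environment

This setup uses three virtual machines, each with at least 2 CPUs and 2 GB of memory.

One serves as k8s-master; the other two are k8s-node1 and k8s-node2.

The original setup installs Ansible on all three VMs. Strictly speaking, only the machine that runs the playbooks (master here) needs it.

1. Add local name resolution and set the hostname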

Contents of /etc/hosts on master:

    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.76.100 master
    192.168.76.101 node1
    192.168.76.102 node2

    [root@master /]# cat /etc/hostname
    master
    [root@master /]# echo "master" > /proc/sys/kernel/hostname   # write the hostname into the running kernel

Log out and back in, and the new hostname is in effect.

2. Configure the Ansible inventory and distribute the public key

    [root@master /]# cd /etc/ansible/
    [root@master ansible]# cat hosts.ini
    [k8s-master]
    master

    [k8s-nodes]
    node1
    node2

    [k8s]

    [k8s:children]
    k8s-master
    k8s-nodes
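The `[k8s:children]` section is what makes `hosts: k8s` in the later playbooks cover all three machines. As a rough illustration of how that group expands, here is a minimal Python sketch that resolves the hosts of a group from INI text like the one above. The helper name `expand_group` is made up for this example, and the parsing is deliberately naive: it ignores host variables, ranges, and everything else a real Ansible inventory can contain.

```python
import configparser

INVENTORY = """\
[k8s-master]
master

[k8s-nodes]
node1
node2

[k8s]

[k8s:children]
k8s-master
k8s-nodes
"""


def expand_group(inventory_text, group):
    """Return the hosts of `group`, following `[group:children]` sections."""
    # Bare host names are keys without values, hence allow_no_value.
    parser = configparser.ConfigParser(allow_no_value=True, delimiters=("=",))
    parser.optionxform = str  # keep host names case-sensitive
    parser.read_string(inventory_text)

    hosts = list(parser[group]) if parser.has_section(group) else []
    children = group + ":children"
    if parser.has_section(children):
        for child in parser[children]:
            hosts.extend(expand_group(inventory_text, child))
    return hosts


print(expand_group(INVENTORY, "k8s"))
```

For the real inventory, `ansible-inventory -i hosts.ini --graph` shows the same expansion as Ansible itself sees it.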

    [root@master ansible]# cat hosts.yml
    ---
    - name: Sync /etc/hosts to all nodes and set their hostnames
      hosts: k8s
      gather_facts: no
      tasks:
        - name: Sync the hosts file
          copy: src=/etc/hosts dest=/etc/hosts
        - name: Set each node's hostname
          shell:
            cmd: hostnamectl set-hostname "{{ inventory_hostname }}"
          register: sethostname
        - name: Verify that the hostname was set
          debug: var=sethostname.rc
    ...

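    [root@master ansible]# cat send-pubkey.yml
    - hosts: all
      gather_facts: no
      remote_user: root
      vars:
        # root password of the target nodes; replace with your own
        ansible_ssh_pass: "123"
      tasks:
        - name: Set authorized key taken from file
          authorized_key:
            user: root
            state: present
            key: "{{ lookup('file', '/root/.ssh/id_rsa.pub') }}"

This assumes a key pair already exists at /root/.ssh/id_rsa.pub on master (for example, one created with ssh-keygen). Password-based SSH from Ansible also requires the sshpass program on the control node.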

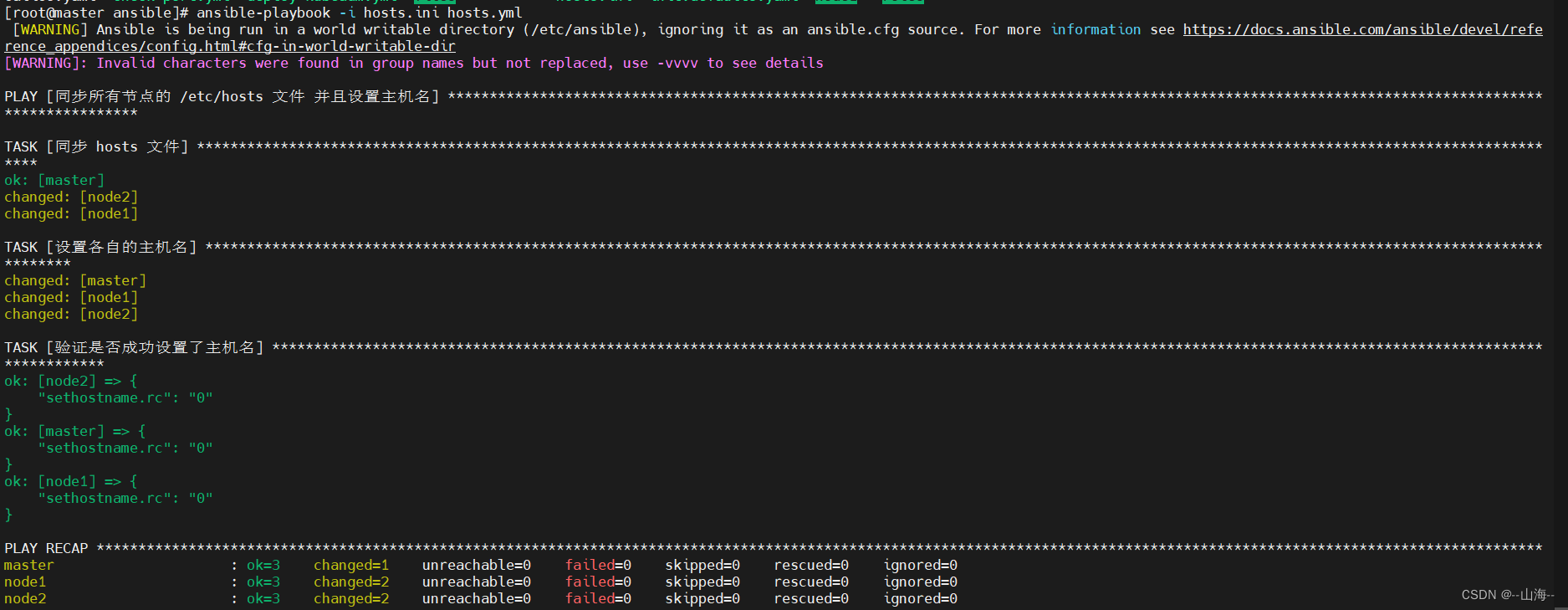

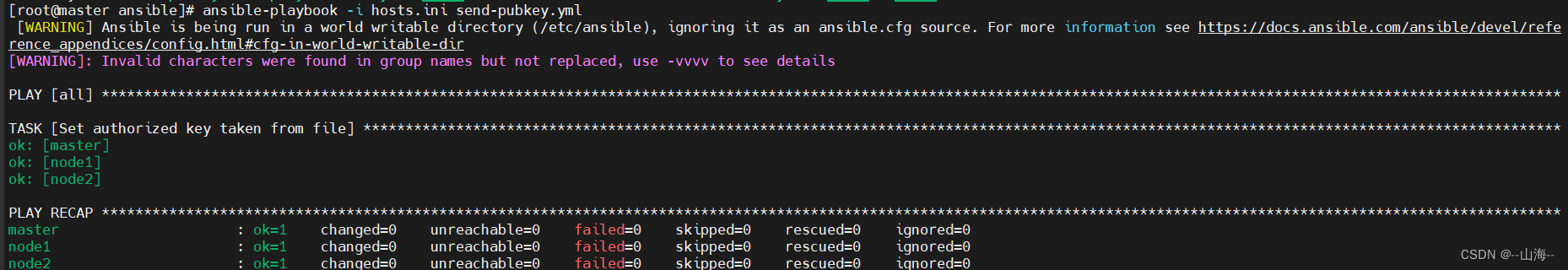

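Run the playbooks. hosts.yml needs working SSH access to every node, so if key-based login is not set up yet, run send-pubkey.yml first.

    [root@master ansible]# ansible-playbook -i hosts.ini hosts.yml

    [root@master ansible]# ansible-playbook -i hosts.ini send-pubkey.yml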

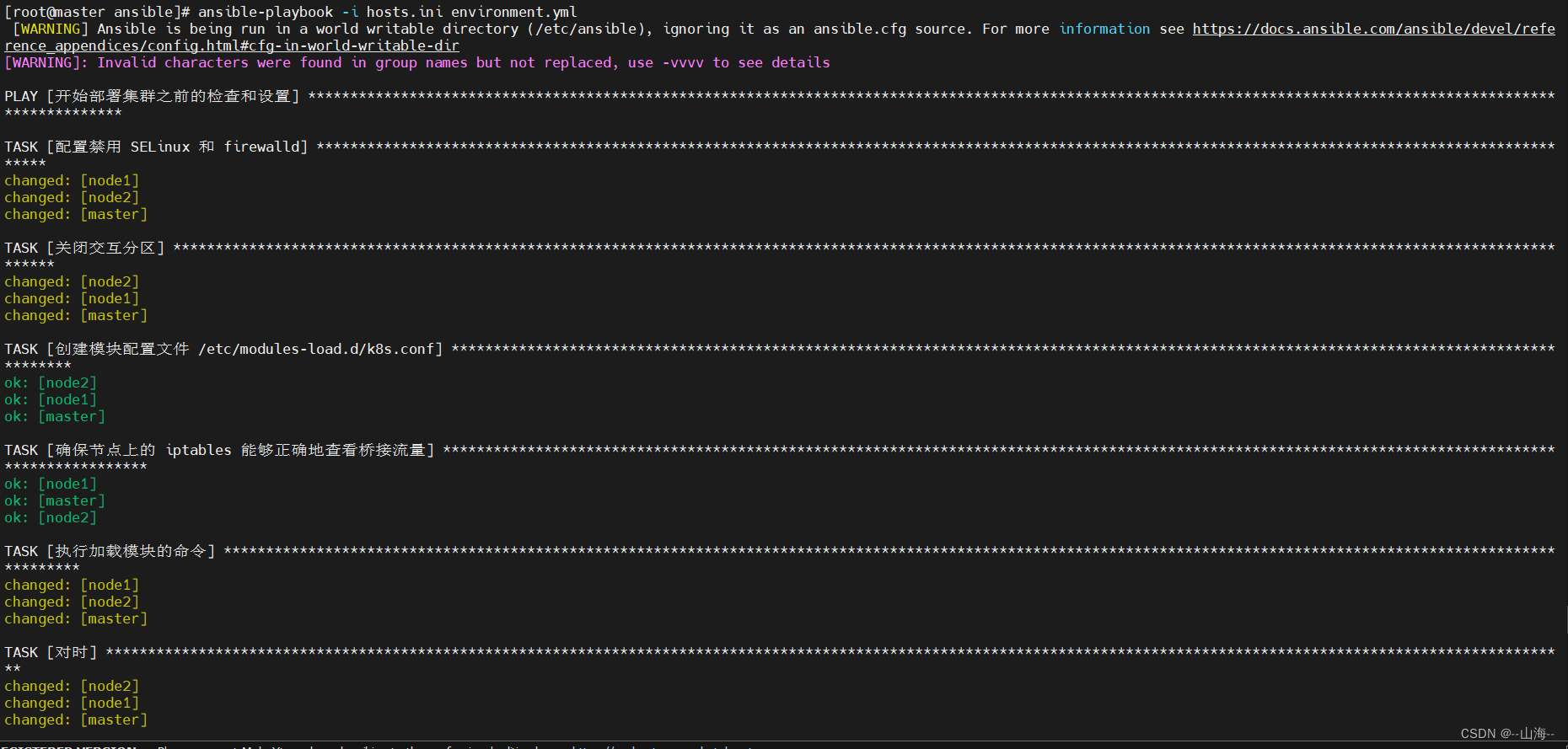

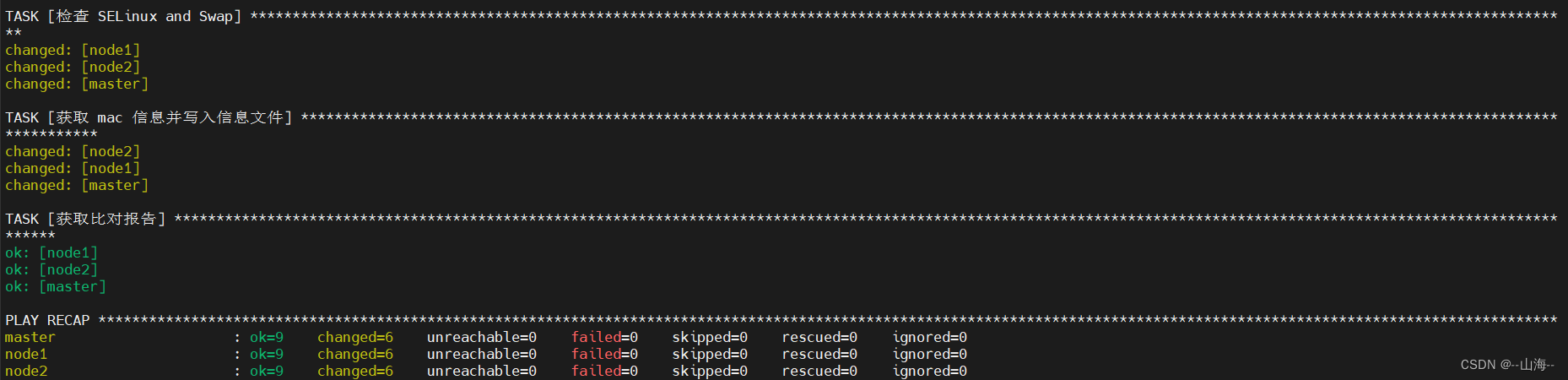

3. System environment settings

    [root@master ansible]# cat environment.yml
    ---
    - name: Checks and settings before deploying the cluster
      hosts: k8s
      gather_facts: no
      tasks:
        - name: Disable SELinux and firewalld
          shell: |
            systemctl stop firewalld
            systemctl disable firewalld
            setenforce 0;
            sed -ri '/^SELINUX=/ c SELINUX=disabled' /etc/selinux/config
          tags:
            - swap
        - name: Disable swap
          shell:
            cmd: swapoff -a; sed -ri 's/.*swap.*/#&/g' /etc/fstab
            warn: no
          tags:
            - swap
        - name: Create the module config file /etc/modules-load.d/k8s.conf
          blockinfile:
            path: /etc/modules-load.d/k8s.conf
            create: yes
            block: |
              br_netfilter
              ip_vs
              ip_vs_rr
              ip_vs_wrr
              ip_vs_sh
              nf_conntrack_ipv4
        - name: Make sure iptables on the nodes can see bridged traffic
          blockinfile:
            path: /etc/sysctl.d/k8s.conf
            create: yes
            block: |
              net.bridge.bridge-nf-call-ip6tables = 1
              net.bridge.bridge-nf-call-iptables = 1
              net.ipv4.ip_forward = 1
        - name: Load the kernel module
          shell: modprobe br_netfilter
        - name: Sync the clock
          shell: ntpdate cn.pool.ntp.org
        - name: Check SELinux and swap
          shell: |
            hostname > /tmp/host-info;
            getenforce >> /tmp/host-info;
            free -m |grep 'Swap' >> /tmp/host-info;
            lsmod | grep br_netfilter >> /tmp/host-info;
            sysctl --system |grep 'k8s.conf' -A 2 >> /tmp/host-info;
        - name: Collect MAC addresses and append them to the info file
          shell: |
            host=$(hostname);
            ip link | awk -v host=$host '/link\/ether/ {print $2, host}' >> /tmp/host-info ;
            echo "---------------------------" >> /tmp/host-info
        - name: Fetch the report for comparison
          fetch:
            src: /tmp/host-info
            dest: ./
    ...

Run the playbook:

    [root@master ansible]# ansible-playbook -i hosts.ini environment.yml

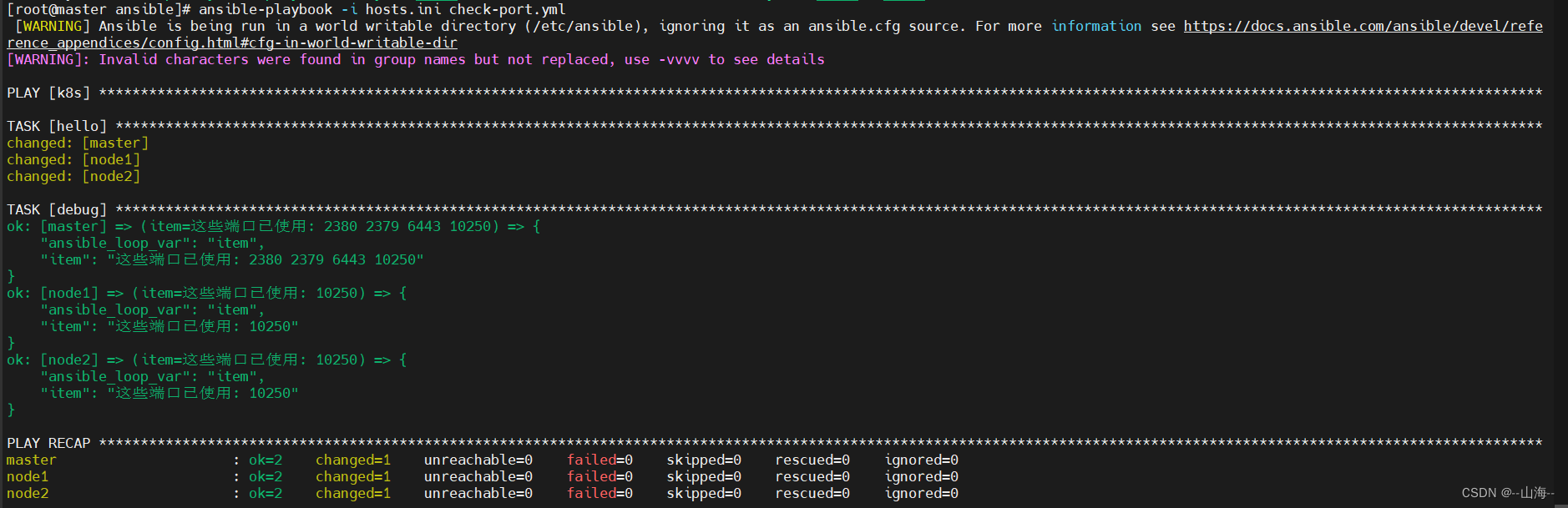
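The MAC lines in host-info matter because kubeadm expects every node to have a unique MAC address. With `dest: ./`, Ansible's fetch module saves each node's copy under its own directory (./<inventory_hostname>/tmp/host-info). As a rough sketch, the comparison across nodes could be automated as below. The function name `find_duplicate_macs` and the sample reports are invented for illustration; in practice you would read the fetched files instead.

```python
import re
from collections import defaultdict

# A line written by the awk command looks like "<mac> <hostname>".
MAC_RE = re.compile(r"^((?:[0-9a-f]{2}:){5}[0-9a-f]{2})\s+(\S+)$", re.IGNORECASE)


def find_duplicate_macs(reports):
    """Map each MAC seen on more than one host to the sorted list of hosts.

    `reports` maps an inventory host name to the text of its host-info file.
    """
    owners = defaultdict(set)
    for host, text in reports.items():
        for line in text.splitlines():
            match = MAC_RE.match(line.strip())
            if match:
                owners[match.group(1).lower()].add(host)
    return {mac: sorted(hosts) for mac, hosts in owners.items() if len(hosts) > 1}


# Made-up sample data: node1 and node2 share a MAC (e.g. cloned VMs).
sample = {
    "master": "master\nDisabled\n52:54:00:aa:bb:01 master\n",
    "node1": "node1\nDisabled\n52:54:00:aa:bb:02 node1\n",
    "node2": "node2\nDisabled\n52:54:00:aa:bb:02 node2\n",
}
print(find_duplicate_macs(sample))
```

An empty result means no MAC address appears on more than one node.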

4. Check ports

    [root@master ansible]# cat check-port.yml
    - hosts: k8s
      gather_facts: no
      tasks:
        - name: hello
          script: ./check-port.py
          register: ret
        - debug: var=item
          loop: "{{ ret.stdout_lines }}"

    [root@master ansible]# cat check-port.py
    #!/usr/bin/env python
    # coding: utf-8
    import re
    import socket
    import subprocess

    hostname = socket.gethostname()
    ports_set = set()

    if 'master' in hostname:
        # API server, kubelet, scheduler, controller manager, etcd
        check_ports = {"6443", "10250", "10251", "10252", "2379", "2380"}
    else:
        # NodePort range plus the kubelet port
        check_ports = {str(i) for i in range(30000, 32768)}
        check_ports.add("10250")

    # universal_newlines=True returns text on both Python 2.7 and Python 3
    result = subprocess.check_output("ss -nta", shell=True, universal_newlines=True)

    for line in result.splitlines():
        if re.match('^(ESTAB|LISTEN|SYN-SENT)', line):
            local_addr = line.split()[3]
            port = local_addr.split(':')[-1]
            ports_set.add(port)

    used_ports = ' '.join(check_ports & ports_set)
    if used_ports:
        print("Ports already in use: %s" % used_ports)
    else:
        print("Ports are free")

    [root@master ansible]# ansible-playbook -i hosts.ini check-port.yml
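To make the parsing step in check-port.py easier to follow, here is a small standalone sketch that applies the same idea to a canned sample of `ss -nta` output instead of running the command. The sample lines and the helper name `local_ports` are assumptions for illustration only.

```python
import re

STATE_RE = re.compile(r"^(ESTAB|LISTEN|SYN-SENT)")


def local_ports(ss_output):
    """Collect local port numbers (as strings) from `ss -nta` style text."""
    ports = set()
    for line in ss_output.splitlines():
        if STATE_RE.match(line):
            local_addr = line.split()[3]  # 4th column: Local Address:Port
            # Split on the last colon so IPv6 forms such as ":::6443" work too.
            ports.add(local_addr.rsplit(":", 1)[-1])
    return ports


# Invented sample; real output depends on the host.
SAMPLE = """\
State      Recv-Q Send-Q Local Address:Port  Peer Address:Port
LISTEN     0      128    *:22                *:*
LISTEN     0      128    :::6443             :::*
ESTAB      0      0      192.168.76.100:22   192.168.76.1:51514
TIME-WAIT  0      0      127.0.0.1:2379      127.0.0.1:40000
"""

wanted = {"6443", "10250", "2379"}
print(sorted(wanted & local_ports(SAMPLE)))
```

Note that the TIME-WAIT line is skipped, so port 2379 is not reported as in use; only the three states in the regular expression count.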

III. Deploy Docker

    [root@master ansible]# cat deploy-docker.yml
    - name: deploy docker
      hosts: k8s
      gather_facts: no
      vars:
        pkgs_dir: /docker-pkg
        pkgs:
          - device-mapper-persistent-data
          - lvm2
          - docker-ce
          - docker-ce-cli
          - containerd.io
        # download_host must be set by hand.
        # Its value must be one of this playbook's target hosts,
        # written exactly as it appears in the inventory file.
        download_host: "master"
      tasks:
        - name: "Install the repo file only on {{ download_host }}"
          when: inventory_hostname == download_host
          get_url:
            url: https://download.docker.com/linux/centos/docker-ce.repo
            dest: /etc/yum.repos.d/docker-ce.repo
          tags:
            - deploy
        - name: Create the directory for the rpm packages
          when: inventory_hostname == download_host
          file:
            path: "{{ pkgs_dir }}"
            state: directory
          tags:
            - deploy
        - name: Download the packages
          when: inventory_hostname == download_host
          yum:
            name: "{{ pkgs }}"
            download_only: yes
            download_dir: "{{ pkgs_dir }}"
          tags:
            - deploy
        - name: Copy the rpm packages to the remote nodes
          when: inventory_hostname != download_host
          copy:
            src: "{{ pkgs_dir }}"
            dest: "/"
          tags:
            - deploy
        - name: Install the packages from the local directory
          shell:
            cmd: yum -y localinstall *
            chdir: "{{ pkgs_dir }}"
            warn: no
          # poll: 0 starts the install in the background; later tasks do not wait for it
          async: 600
          poll: 0
          tags:
            - deploy
        - name: Set up /etc/docker/daemon.json
          copy: src=files/daemon.json dest=/etc/docker/daemon.json
          notify: restart docker
          tags:
            - start
            - update
        - name: Start docker
          systemd:
            name: docker
            enabled: yes
            state: started
          tags:
            - start
      handlers:
        - name: restart docker
          systemd:
            name: docker
            state: restarted
    ...

Because the install task does not wait for yum to finish, the tags make it possible to run the stages separately: for example `--tags deploy` first, then `--tags start` once the packages are installed.

    [root@master files]# cat daemon.json
    {
      "registry-mirrors": ["https://ut9q2ix5.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "storage-driver": "overlay2"
    }

Replace the registry-mirrors value with your own Aliyun mirror accelerator address. JSON does not allow comments, so do not put this note inside the file.
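A malformed daemon.json keeps Docker from starting, and the cgroup driver set here has to match the one given to the kubelet later (systemd in this article). A minimal sketch of a pre-flight check, using only Python's standard json module, might look like this. The function name `check_daemon_json` and the BAD sample are assumptions made for the example.

```python
import json


def check_daemon_json(text):
    """Parse daemon.json text and verify the cgroup driver setting."""
    try:
        config = json.loads(text)
    except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
        raise ValueError("daemon.json is not valid JSON: %s" % exc)
    if "native.cgroupdriver=systemd" not in config.get("exec-opts", []):
        raise ValueError("exec-opts does not select the systemd cgroup driver")
    return config


GOOD = """
{
  "registry-mirrors": ["https://ut9q2ix5.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {"max-size": "100m"},
  "storage-driver": "overlay2"
}
"""

# The same file with an inline comment, which JSON does not allow.
BAD = GOOD.replace('"],\n  "exec-opts"', '"], # my mirror\n  "exec-opts"')

print(check_daemon_json(GOOD)["storage-driver"])
try:
    check_daemon_json(BAD)
except ValueError as exc:
    print("rejected:", exc)
```

For a quick manual check on a node, `python -m json.tool /etc/docker/daemon.json` reports syntax errors in the same way.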

Run the deployment:

    ansible-playbook -i hosts.ini deploy-docker.yml

IV. Deploy kubeadm, kubelet, and kubectl

    [root@master ansible]# cat deploy-kubeadm.yml
    - name: Deploy kubeadm kubelet kubectl
      hosts: k8s
      gather_facts: no
      vars:
        pkgs_dir: /kubeadm-pkg
        pkgs: ["kubelet-1.23.5", "kubeadm-1.23.5", "kubectl-1.23.5", "ipvsadm"]
        download_host: "master"
      tasks:
        - name: "Install the repo file only on {{ download_host }}"
          when: inventory_hostname == download_host
          copy:
            src: files/kubernetes.repo
            dest: /etc/yum.repos.d/kubernetes.repo
        - name: "Create the directory for the rpm packages"
          when: inventory_hostname == download_host
          file:
            path: "{{ pkgs_dir }}"
            state: directory
        - name: "Download the packages"
          when: inventory_hostname == download_host
          yum:
            name: "{{ pkgs }}"
            download_only: yes
            download_dir: "{{ pkgs_dir }}"
        - name: "Copy the rpm packages to the remote nodes"
          when: inventory_hostname != download_host
          copy:
            src: "{{ pkgs_dir }}"
            dest: "/"
        - name: "Install the packages from the local directory"
          shell:
            cmd: yum -y localinstall *
            chdir: "{{ pkgs_dir }}"
            warn: no
          async: 600
          poll: 0
          register: yum_info

    [root@master ansible]# cat files/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Run the deployment:

    ansible-playbook -i hosts.ini deploy-kubeadm.yml

Edit /etc/sysconfig/kubelet on master so that it contains:

    [root@master ansible]# cat /etc/sysconfig/kubelet
    KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
    KUBE_PROXY_MODE="ipvs"

    [root@master ansible]# cat kubelet_config.yml
    ---
    - name: Sync /etc/sysconfig/kubelet to all nodes
      hosts: k8s
      gather_facts: no
      tasks:
        - name: Sync the kubelet file
          copy: src=/etc/sysconfig/kubelet dest=/etc/sysconfig/kubelet
        - name: Enable kubelet at boot
          systemd:
            name: kubelet
            enabled: yes
    ...

Run the playbook to sync every node:

    [root@master ansible]# ansible-playbook -i hosts.ini kubelet_config.yml

V. Initialize the cluster

1. Initialize on the master node

    kubeadm init \
      --apiserver-advertise-address=192.168.76.100 \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.23.5 \
      --service-cidr=10.96.0.0/12 \
      --pod-network-cidr=10.244.0.0/16
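The service CIDR, the pod CIDR, and the network the nodes live on should not overlap, otherwise routing inside the cluster becomes ambiguous. A small sketch with Python's standard ipaddress module can confirm this for the values above. The node network 192.168.76.0/24 is an assumption inferred from the node IPs (the article does not state the netmask), and `overlapping` is a helper name made up for this example.

```python
import ipaddress
from itertools import combinations


def overlapping(networks):
    """Return (name, name) pairs whose CIDR ranges overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(parsed), 2)
        if parsed[a].overlaps(parsed[b])
    ]


networks = {
    "service": "10.96.0.0/12",   # --service-cidr
    "pod": "10.244.0.0/16",      # --pod-network-cidr
    "node": "192.168.76.0/24",   # assumed netmask for the 192.168.76.x nodes
}
print(overlapping(networks))
```

An empty list means the three ranges are disjoint. A pod CIDR such as 10.100.0.0/16, by contrast, would be reported because it falls inside 10.96.0.0/12.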

Following the message printed by kubeadm init, set up kubectl on the master node:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

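2. Install a network plugin

Kubernetes supports several network plugins, such as flannel, calico, and canal. Calico is recommended here. The command below assumes calico.yaml has already been downloaded to the current directory.

    kubectl apply -f calico.yaml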

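VI. Join the worker nodes to the cluster

Run on each worker node:

    kubeadm join 192.168.76.100:6443 --token vdwao4.45tna7vnwacomafw --discovery-token-ca-cert-hash sha256:41fb812339dd74fc23eafc0680da4210d2f1415161ca872fc2cbf556ef4f8b38

Check the output printed on master when initialization finishes: it contains the exact join command for your cluster, and the token will differ from the one shown here.

A new token can also be created:

    kubeadm token create --print-join-command   # also needed later when adding a new worker node to the cluster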
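Since the join command is usually copied by hand from one terminal to another, a truncated token or hash is an easy mistake. The sketch below checks a pasted command for the documented kubeadm bootstrap token shape ([a-z0-9]{6}.[a-z0-9]{16}) and a sha256: prefix followed by 64 hex digits. `parse_join` is a hypothetical helper, and it only understands the space-separated flag form shown above.

```python
import re
import shlex

TOKEN_RE = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")
HASH_RE = re.compile(r"^sha256:[0-9a-f]{64}$")


def parse_join(command):
    """Extract endpoint, token and CA cert hash from a kubeadm join command."""
    args = shlex.split(command)
    if len(args) < 3 or args[:2] != ["kubeadm", "join"]:
        raise ValueError("not a kubeadm join command")
    result = {"endpoint": args[2]}
    flags = iter(args[3:])
    for flag in flags:
        if flag == "--token":
            result["token"] = next(flags, "")
        elif flag == "--discovery-token-ca-cert-hash":
            result["ca_cert_hash"] = next(flags, "")
    if not TOKEN_RE.match(result.get("token", "")):
        raise ValueError("token is missing or malformed")
    if not HASH_RE.match(result.get("ca_cert_hash", "")):
        raise ValueError("CA cert hash is missing or malformed")
    return result


JOIN = (
    "kubeadm join 192.168.76.100:6443 --token vdwao4.45tna7vnwacomafw "
    "--discovery-token-ca-cert-hash "
    "sha256:41fb812339dd74fc23eafc0680da4210d2f1415161ca872fc2cbf556ef4f8b38"
)
print(parse_join(JOIN)["endpoint"])
```

This only validates the format. Whether the token is still valid is something only the cluster can tell; `kubeadm token list` on master shows the current tokens.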

Check the installation progress:

    [root@master ansible]# kubectl get pods -n kube-system
    NAME                                      READY   STATUS    RESTARTS        AGE
    calico-kube-controllers-7d467674b-kc2fj   1/1     Running   1 (125m ago)    17h
    calico-node-8hvtt                         1/1     Running   15 (79m ago)    17h
    calico-node-n6srw                         1/1     Running   13 (120m ago)   17h
    calico-node-xrl58                         1/1     Running   15 (79m ago)    17h
    coredns-6d8c4cb4d-9lzs8                   1/1     Running   1 (125m ago)    18h
    coredns-6d8c4cb4d-v5kqc                   1/1     Running   1 (125m ago)    18h
    etcd-master                               1/1     Running   1 (125m ago)    18h
    kube-apiserver-master                     1/1     Running   1 (125m ago)    18h
    kube-controller-manager-master            1/1     Running   3 (113m ago)    18h
    kube-proxy-m29br                          1/1     Running   1 (126m ago)    17h
    kube-proxy-nbss9                          1/1     Running   1 (125m ago)    18h
    kube-proxy-ngl59                          1/1     Running   1 (125m ago)    17h
    kube-scheduler-master                     1/1     Running   3 (113m ago)    18h

    [root@master ansible]# kubectl get pods -n kube-system -owide
    NAME                                      READY   STATUS    RESTARTS         AGE   IP               NODE     NOMINATED NODE   READINESS GATES
    calico-kube-controllers-7d467674b-kc2fj   1/1     Running   1 (4h34m ago)    20h   10.244.219.68    master   <none>           <none>
    calico-node-8hvtt                         1/1     Running   15 (3h48m ago)   20h   192.168.76.102   node2    <none>           <none>
    calico-node-n6srw                         1/1     Running   13 (4h28m ago)   20h   192.168.76.100   master   <none>           <none>
    calico-node-xrl58                         1/1     Running   15 (3h48m ago)   20h   192.168.76.101   node1    <none>           <none>
    coredns-6d8c4cb4d-9lzs8                   1/1     Running   1 (4h34m ago)    20h   10.244.219.70    master   <none>           <none>
    coredns-6d8c4cb4d-v5kqc                   1/1     Running   1 (4h34m ago)    20h   10.244.219.69    master   <none>           <none>
    etcd-master                               1/1     Running   1 (4h34m ago)    20h   192.168.76.100   master   <none>           <none>
    kube-apiserver-master                     1/1     Running   1 (4h34m ago)    20h   192.168.76.100   master   <none>           <none>
    kube-controller-manager-master            1/1     Running   3 (4h22m ago)    20h   192.168.76.100   master   <none>           <none>
    kube-proxy-m29br                          1/1     Running   1 (4h34m ago)    20h   192.168.76.101   node1    <none>           <none>
    kube-proxy-nbss9                          1/1     Running   1 (4h34m ago)    20h   192.168.76.100   master   <none>           <none>
    kube-proxy-ngl59                          1/1     Running   1 (4h34m ago)    20h   192.168.76.102   node2    <none>           <none>
    kube-scheduler-master                     1/1     Running   3 (4h22m ago)    20h   192.168.76.100   master   <none>           <none>

Check the cluster status:

    [root@master ansible]# kubectl get nodes
    NAME     STATUS   ROLES                  AGE   VERSION
    master   Ready    control-plane,master   18h   v1.23.5
    node1    Ready    <none>                 17h   v1.23.5
    node2    Ready    <none>                 17h   v1.23.5
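If this check is to be repeated from a script rather than by eye, the table can be scanned for nodes that are not Ready. The following is a minimal sketch that parses the plain-text output; `not_ready_nodes` is a name invented for this example, and the second sample is made up to show a failing case.

```python
def not_ready_nodes(table):
    """Return names of nodes whose STATUS column does not start with Ready."""
    lines = [line for line in table.splitlines() if line.strip()]
    header = lines[0].split()
    name_col, status_col = header.index("NAME"), header.index("STATUS")
    bad = []
    for line in lines[1:]:
        fields = line.split()
        # STATUS may carry extra conditions, e.g. "Ready,SchedulingDisabled".
        if fields[status_col].split(",")[0] != "Ready":
            bad.append(fields[name_col])
    return bad


HEALTHY = """\
NAME     STATUS   ROLES                  AGE   VERSION
master   Ready    control-plane,master   18h   v1.23.5
node1    Ready    <none>                 17h   v1.23.5
node2    Ready    <none>                 17h   v1.23.5
"""

# Invented example of a node that has not come up yet.
DEGRADED = HEALTHY.replace("node2    Ready   ", "node2    NotReady")

print(not_ready_nodes(HEALTHY))
print(not_ready_nodes(DEGRADED))
```

Parsing the human-readable table is fragile; for anything beyond a quick check, `kubectl get nodes -o json` gives structured output that is safer to process.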

Set the ROLES shown for the worker nodes:

    [root@master ansible]# kubectl label nodes node1 node-role.kubernetes.io/node1=
    node/node1 labeled
    [root@master ansible]# kubectl label nodes node2 node-role.kubernetes.io/node2=
    node/node2 labeled
    [root@master ansible]# kubectl get node
    NAME     STATUS   ROLES                  AGE   VERSION
    master   Ready    control-plane,master   20h   v1.23.5
    node1    Ready    node1                  20h   v1.23.5
    node2    Ready    node2                  20h   v1.23.5


- Original article: https://blog.csdn.net/AnNan1997/article/details/126498255