
[Pitfall Log] DataX syncing data from PostgreSQL to Hive: all values come out NULL


I. Problem Description

Hive table DDL:

```sql
create table table_name(
  a bigint,
  b string
)
comment 'xx table'
partitioned by (`ds` string);
```

Custom DataX job JSON:

```json
{
  "job": {
    "content": [
      {
        "reader": {
          "name": "postgresqlreader",
          "parameter": {
            "connection": [
              {
                "jdbcUrl": ["jdbc:postgresql://ip:port/db"],
                "querySql": ["select a,b from table_name"]
              }
            ],
            "username": "name",
            "password": "pwd"
          }
        },
        "writer": {
          "name": "hdfswriter",
          "parameter": {
            "defaultFS": "hdfs://ip:port",
            "fileType": "text",
            "path": "/user/hive/warehouse/db.db/table_name/ds=${ds}",
            "fileName": "table_name",
            "column": [
              {"name": "a", "type": "bigint"},
              {"name": "b", "type": "string"}
            ],
            "writeMode": "append",
            "fieldDelimiter": "\t",
            "encoding": "utf-8"
          }
        }
      }
    ],
    "setting": {
      "speed": {
        "channel": "1"
      }
    }
  }
}
```

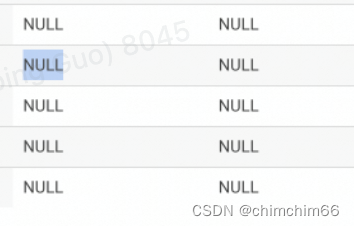

After the job runs, querying the table in Hue shows that every column of every row is NULL.
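A quick check that fits this setup is to compare the delimiter the job writes with the one the Hive table expects. The sketch below is a minimal, hypothetical helper (`check_job` and `writer_delimiters` are not part of DataX); it assumes the table was created without a `row format` clause, in which case Hive's text SerDe expects its default delimiter `\001`.

```python
import json

# Assumption: the Hive table has no ROW FORMAT clause, so its text SerDe
# splits fields on Hive's default delimiter \001 (Ctrl-A).
HIVE_DEFAULT_DELIM = "\x01"


def writer_delimiters(job):
    """Collect the fieldDelimiter of every hdfswriter in a parsed DataX job."""
    return [
        item["writer"]["parameter"].get("fieldDelimiter")
        for item in job["job"]["content"]
        if item["writer"]["name"] == "hdfswriter"
    ]


def check_job(job_text, table_delim=HIVE_DEFAULT_DELIM):
    """Return one message per hdfswriter whose delimiter differs from the table's."""
    job = json.loads(job_text)
    return [
        f"hdfswriter writes {delim!r}, table expects {table_delim!r}"
        for delim in writer_delimiters(job)
        if delim != table_delim
    ]


# Trimmed-down version of the job above: only the keys the check reads.
job_text = """
{"job": {"content": [{
  "reader": {"name": "postgresqlreader", "parameter": {}},
  "writer": {"name": "hdfswriter", "parameter": {"fieldDelimiter": "\\t"}}
}]}}
"""

print(check_job(job_text))
```

An empty list means the delimiters agree; pass `table_delim` explicitly if the table was created with its own `row format` clause.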

II. Locating the Cause

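Possible causes:

1. The field delimiter declared in the table DDL differs from the one used on import. A text table created without a `row format` clause uses Hive's default delimiter `\001` (Ctrl-A), while the job above writes `\t`.

2. The column data types do not match.

3. The file format or compression is wrong (ORC, TEXTFILE, ...).

4. A field value contains the delimiter itself; try a different delimiter.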
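Why a delimiter mismatch blanks out every column can be seen in a small model of how Hive's default text SerDe reads a line: split on the table's delimiter, map fields to columns by position, and turn any field that cannot be parsed as the column's type into NULL. This is a simplified sketch covering only `bigint` and `string`, not Hive's actual implementation; `read_row` and `parse_field` are hypothetical names.

```python
import re

HIVE_DEFAULT_DELIM = "\x01"   # Hive's default field delimiter for text tables
NULL_MARKER = "\\N"           # default text that the SerDe reads as NULL

BIGINT_MIN, BIGINT_MAX = -(2**63), 2**63 - 1
_INT_RE = re.compile(r"[+-]?\d+")


def parse_field(raw, col_type):
    """Convert one raw text field to col_type, or None (NULL) if it does not fit."""
    if raw is None or raw == NULL_MARKER:
        return None
    if col_type == "bigint":
        if not _INT_RE.fullmatch(raw):
            return None  # unparsable text becomes NULL instead of raising an error
        value = int(raw)
        return value if BIGINT_MIN <= value <= BIGINT_MAX else None
    if col_type == "string":
        return raw
    raise ValueError(f"type not modelled in this sketch: {col_type}")


def read_row(line, schema, delim=HIVE_DEFAULT_DELIM):
    """Split a text line and map its fields onto (name, type) columns by position."""
    fields = line.split(delim)
    row = {}
    for i, (name, col_type) in enumerate(schema):
        raw = fields[i] if i < len(fields) else None  # missing fields become NULL
        row[name] = parse_field(raw, col_type)
    return row


schema = [("a", "bigint"), ("b", "string")]
line = "1\tfoo"  # a row as DataX writes it with "fieldDelimiter": "\t"

print(read_row(line, schema))                     # table left at the default \001
print(read_row(line, schema, delim="\t"))         # table declares '\t'
print(read_row("abc\tfoo", schema, delim="\t"))   # value does not fit column a
```

With the default `\001`, the whole line `1\tfoo` lands in column `a`, fails to parse as a bigint and becomes NULL, and `b` gets no field at all, which matches the all-NULL result above. The third call shows cause 2: the row is split correctly, but a value that does not fit the declared type still comes back as NULL.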

III. Solutions

1. Delimiter mismatch between the table DDL and the import

1) Change the delimiter of the existing table:

```sql
alter table ds.ods_user_info_dd set serdeproperties('field.delim'='\t');
```

2) Specify the delimiter directly when creating the table:

```sql
create table table_name(
  a bigint,
  b string
)
comment 'xx table'
partitioned by (`ds` string)
row format delimited fields terminated by '\t';
```
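One way to keep the DDL and the job from drifting apart is to generate both from a single delimiter setting. A minimal sketch with hypothetical helper names (`hive_ddl`, `hdfswriter_params`); it only emits the parts of the DDL and of the hdfswriter `parameter` block that matter here.

```python
import json

DELIM = "\t"  # the single source of truth for the field delimiter

# How each supported delimiter must be spelled inside a HiveQL string literal.
# Delimiters not listed here raise KeyError in hive_ddl.
HIVE_LITERAL = {"\t": "\\t", "\x01": "\\001", ",": ","}


def hive_ddl(table, columns, delim=DELIM):
    """Build a text-table DDL (partitioned by ds) that declares the delimiter."""
    cols = ",\n  ".join(f"{name} {col_type}" for name, col_type in columns)
    return (
        f"create table {table}(\n  {cols}\n)\n"
        "partitioned by (`ds` string)\n"
        f"row format delimited fields terminated by '{HIVE_LITERAL[delim]}';"
    )


def hdfswriter_params(columns, delim=DELIM):
    """Build the delimiter-related part of a DataX hdfswriter parameter block."""
    return {
        "fileType": "text",
        "column": [{"name": n, "type": t} for n, t in columns],
        "fieldDelimiter": delim,
    }


columns = [("a", "bigint"), ("b", "string")]
print(hive_ddl("table_name", columns))
print(json.dumps(hdfswriter_params(columns), indent=2))
```

`json.dumps` writes a tab as `\t` and `\001` as `\u0001`, both of which are ordinary JSON escapes in the job file.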

3) Differences between partitioned and non-partitioned tables
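In Hive each partition keeps its own copy of the storage settings, including SerDe properties. On a non-partitioned table, `alter table ... set serdeproperties` therefore applies to all data; on a partitioned table it only changes the table-level default that partitions created afterwards inherit, and each existing partition has to be altered with a `partition (...)` clause. A minimal sketch that generates those statements; `serde_fix_statements` and the example partition values are hypothetical.

```python
def serde_fix_statements(table, ds_values, delim_literal="\\t"):
    """Build HiveQL that sets field.delim on a table and on each existing ds partition."""
    prop = f"set serdeproperties('field.delim'='{delim_literal}');"
    # Table level: inherited by partitions that are added later.
    statements = [f"alter table {table} {prop}"]
    # Partition level: existing partitions keep their old properties otherwise.
    statements += [f"alter table {table} partition (ds='{ds}') {prop}" for ds in ds_values]
    return statements


# Hypothetical partition values; in practice list them with `show partitions`.
for stmt in serde_fix_statements("ds.ods_user_info_dd", ["20220823", "20220824"]):
    print(stmt)
```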

2. Column data types do not match

Change the Hive column types so they match the source table:

```sql
alter table table_name change column old_column_name new_column_name column_type;
```

3. Wrong file format or compression (ORC, TEXTFILE, ...)

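The table's storage format must match the `fileType` set in the DataX hdfswriter config (`text` or `orc`). `SET FILEFORMAT` only changes the table metadata; it does not convert files that already exist.

```sql
-- switch to ORC
ALTER TABLE table_name SET FILEFORMAT ORC;
-- switch to text
ALTER TABLE table_name SET FILEFORMAT TEXTFILE;
```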
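To see which format the files written by DataX actually have, one can look at the first bytes of a data file (for example after copying it locally with `hdfs dfs -get`): ORC files begin with the magic string `ORC`, Parquet files with `PAR1`, and gzip-compressed files with the bytes `1f 8b`. A heuristic sketch; `guess_format` and `guess_file_format` are hypothetical helpers, and anything without a known magic number is simply assumed to be plain text.

```python
# Known magic numbers at the very start of a file.
MAGIC_NUMBERS = [
    (b"ORC", "ORC"),
    (b"PAR1", "PARQUET"),
    (b"\x1f\x8b", "GZIP"),
]


def guess_format(head: bytes) -> str:
    """Guess the storage format from the first bytes of a data file."""
    for magic, name in MAGIC_NUMBERS:
        if head.startswith(magic):
            return name
    # Heuristic: no known magic number, so treat it as plain text.
    return "TEXT"


def guess_file_format(path: str) -> str:
    """Read the first four bytes of a local copy of an HDFS data file."""
    with open(path, "rb") as f:
        return guess_format(f.read(4))


print(guess_format(b"1\tfoo\n"))  # a line as DataX writes it with fileType "text"
```

If the result is `TEXT` but the table was created as ORC (or the other way round), the table's file format and the job's `fileType` disagree.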

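4. A field value contains the delimiter

If a column value itself contains the delimiter character (for example a tab inside a text column), Hive splits the row at the wrong place and the columns shift. Switch to a delimiter that cannot occur in the data, such as `\001`, and set the same value in both the DDL and the DataX `fieldDelimiter`.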
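The effect of a delimiter inside a value, and one way to pick a safer delimiter, can be sketched as follows. `pick_delimiter`, the candidate list and the sample rows are hypothetical; in practice the rows would be a sample of the source table.

```python
# A row whose second value contains a tab, written with "fieldDelimiter": "\t".
values = ["1", "foo\tbar"]
line = "\t".join(values)

# Reading it back with the same delimiter yields three fields instead of two:
# column b gets "foo", and "bar" spills into a field the table has no column for.
print(line.split("\t"))

CANDIDATES = ["\t", ",", "|", "\x01"]


def pick_delimiter(rows, candidates=CANDIDATES):
    """Return the first candidate that appears in no value of any row, else None."""
    for delim in candidates:
        if all(delim not in value for row in rows for value in row):
            return delim
    return None


sample_rows = [["1", "foo\tbar"], ["2", "a,b|c"]]
print(repr(pick_delimiter(sample_rows)))
```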


Original article: https://blog.csdn.net/chimchim66/article/details/126489068