-

pandas 基础和示例

pandas 基础

pandas应用

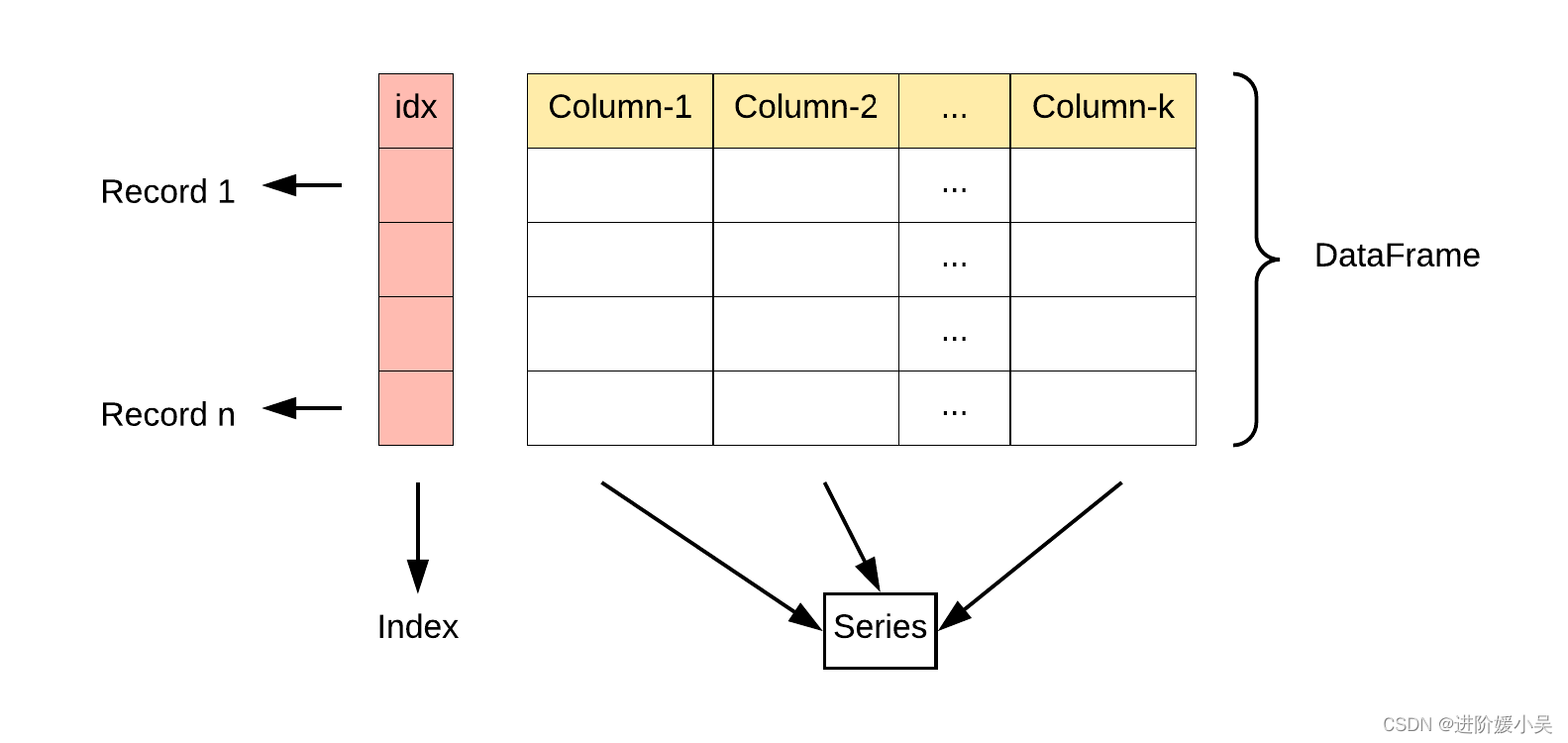

pandas主要的数据结构是Series(一维数据)和DataFrame(二维数据)。

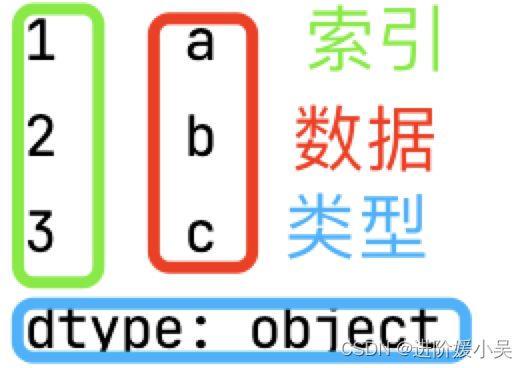

- Series是一种类似一维数组的对象,它由一组数据(各种Numpy数据类型)以及一组与之相关的数据标签(即索引)组成。

- DataFrame是表格型的数据结构,含有一组有序的列,每列可以是不同的值类型(数值、字符串、布尔型值)。DataFrame 既有行索引也有列索引,它可以被看做由 Series 组成的字典(共同用一个索引)。

Pandas官网:https://pandas.pydata.org/

Pandas源代码:https://github.com/pandas-dev/pandas

对于python 3,只需编写以下代码即可忽略所有警告。

from warnings import filterwarnings filterwarnings("ignore")- 1

- 2

还是没有消除警告

Pandas安装

pip install pandas- 1

查看是否安装成功

import pandas as pd print(pd.__version__) # 查看pandas版本 # 一个简单的demo data = {'Time': ['year', 'month', 'day'], 'Now': [2022, 8, 22]} df_data = pd.DataFrame(data) print(df_data)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

执行结果

Pandas数据结构

Series

Parameters:

- data : array-like, Iterable, dict, or scalar value

包含存储在系列中的数据。如果 data 是一个 dict,则保持参数顺序。

- index : array-like or Index (1d)

数据索引标签,如果不指定,默认从 0 开始。值必须是可散列的,并且与“数据”具有相同的长度。允许使用非唯一索引值。如果未提供,将默认为 RangeIndex (0, 1, 2, …, n)。如果 data 是 dict-like 并且 index 是 None,那么 data 中的键被用作索引。如果索引不是 None,则使用索引值重新索引生成的 Series。

- dtype : str, numpy.dtype, or ExtensionDtype, optional

输出系列的数据类型。如果未指定,这将从

data推断。-

name:设置名称。

-

copy : bool, default False

拷贝数据,默认为 False。

import pandas as pd data = {'a': 1, 'b': 2, 'c': 3} ser_data = pd.Series(data=data, index=['a', 'b', 'c']) print(ser_data)- 1

- 2

- 3

- 4

输出结果:

import pandas as pd data = {'a': 1, 'b': 2, 'c': 3} ser_data = pd.Series(data=data, index=['x', 'y', 'z']) print(ser_data)- 1

- 2

- 3

- 4

输出结果:

⚠️请注意,索引首先使用字典中的键构建。在此之后,Series 使用给定的 Index 值重新索引,因此我们得到所有 NaN 作为结果。import pandas as pd a = [1, 2, 3] myvar = pd.Series(a) print(myvar)- 1

- 2

- 3

- 4

输出结果:

如果没有指定索引,索引值就从 0 开始import pandas as pd data = ['a', 'b', 'c'] ser_data = pd.Series(data=data, index=[1, 2, 3]) print(ser_data)- 1

- 2

- 3

- 4

指定索引,输出结果:

DataFrame

既有行索引也有列索引。

图片来自菜鸟教程

Parameters:-

data: ndarray (structured or homogeneous), Iterable, dict, or DataFrame

一组数据(ndarray、series, map, lists, dict 等类型)。Dict 可以包含系列、数组、常量、数据类或类似列表的对象。如果数据是一个字典,列顺序遵循插入顺序。

-

index : Index or array-like

索引值,或者可以称为行标签。如果输入数据没有索引信息部分并且没有提供索引,则默认为 RangeIndex。

-

columns : Index or array-like

列标签。如果没有提供列标签,将默认为 RangeIndex (0, 1, 2, …, n)。

-

dtype : dtype, default None

要强制的数据类型。只允许使用一个 dtype。如果没有,推断。

-

copy : bool, default False

拷贝数据,默认为 False。

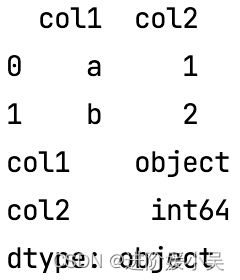

import pandas as pd data = {'col1': ['a', 'b'], 'col2': [1, 2]} # 使用 ndarrays 创建 # data = [{'col1': 'a', 'col2': 1}, {'col1': 'b', 'col2': 2}] # 使用字典创建 # data = [['a', 1], ['b', 2]] # 使用列表创建 df_data = pd.DataFrame(data=data) # df_data = pd.DataFrame(data=data, columns=['col1', 'col2']) print(df_data) print(df_data.dtypes) # 查看数据类型- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

输出结果:

从dataclass构造 DataFrameimport pandas as pd from dataclasses import make_dataclass Point = make_dataclass("Point", [("x", str), ("y", int)]) class_data = pd.DataFrame([Point('a', 1), Point('b', 2), Point('c', 3)]) print(class_data) print(class_data.dtypes) # 查看数据类型- 1

- 2

- 3

- 4

- 5

- 6

输出结果:

没有对应的部分数据为 NaN。Pandas 可以使用

loc属性返回指定行的数据,如果没有设置索引,第一行索引为 0,第二行索引为 1import pandas as pd data = { "calories": [420, 380, 390], "duration": [50, 40, 45] } df = pd.DataFrame(data) # 数据载入到 DataFrame 对象 print(df) print('第一行:\n{}'.format(df.loc[0])) # 返回第一行 print('第二行:\n{}'.format(df.loc[1])) # 返回第二行 print(df.loc[[0, 1]]) # 返回第一行和第二行 # df = pd.DataFrame(data, index = ["day1", "day2", "day3"]) # print(df.loc["day2"]) # 指定索引- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

输出结果:

CSV文件

CSV(Comma-Separated Values,逗号分隔值,有时也称为字符分隔值,因为分隔字符也可以不是逗号),其文件以纯文本形式存储表格数据(数字和文本)。

Pandas 可以很方便的处理 CSV 文件,本文以鸢尾花数据集

iris.csv为例,扫码获取数据。

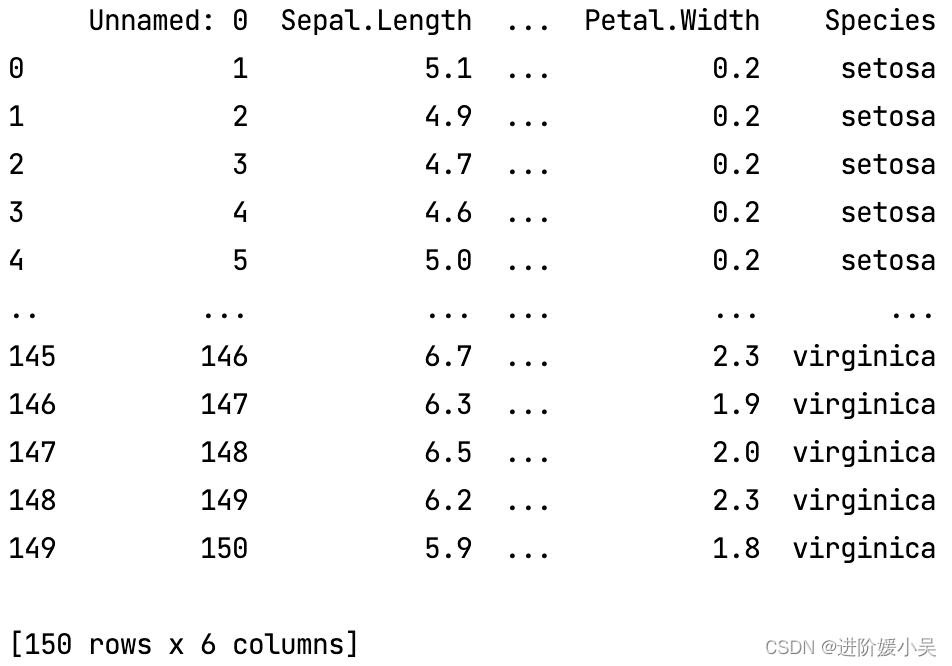

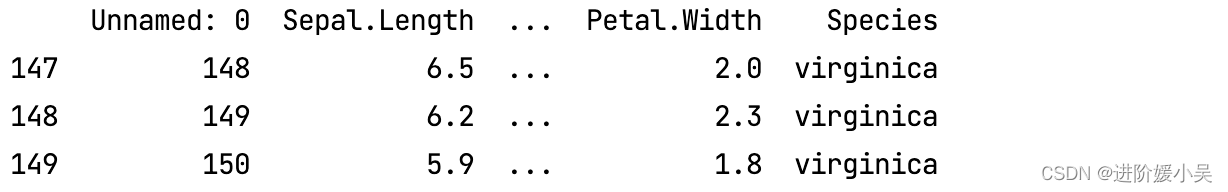

to_string() 用于返回 DataFrame 类型的数据,如果不使用该函数,则输出结果为数据的前面 5 行和末尾 5 行,中间部分以 … 代替。import pandas as pd csv_data = pd.read_csv('./iris.csv') # print(csv_data) # 显示前五行和后五行数据,中间...表示 print(csv_data.to_string()) # 显示全部数据 print(csv_data.head(3)) # 显示前3行 print(csv_data.tail(3)) # 显示后3行- 1

- 2

- 3

- 4

- 5

- 6

- 7

print(csv_data)输出:

print(csv_data.to_string())输出:

显示前3行输出结果:

显示后3行输出结果:

使用 to_csv() 方法将 DataFrame 存储为 csv 文件

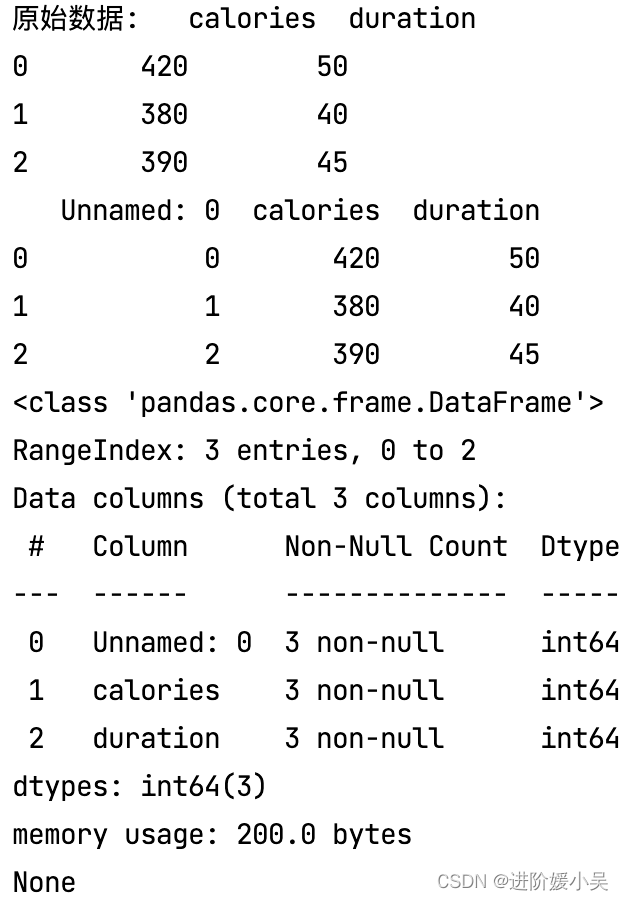

import pandas as pd data = { "calories": [420, 380, 390], "duration": [50, 40, 45] } df = pd.DataFrame(data) # 数据载入到 DataFrame 对象 print('原始数据:{}'.format(df)) # 保存 dataframe df.to_csv("set.csv") # 读取生成的csv文件 read_new_set = pd.read_csv('set.csv') print(read_new_set) print(read_new_set.info()) # 显示文件基本信息- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

输出结果

JSON文件

JSON 对象与 Python 字典具有相同的格式

从 URL 中读取 JSON 数据

import pandas as pd # 直接读JSON文件 df = pd.read_json('sites.json') print(df.to_string()) # 直接处理 JSON 字符串 data = [ { "id": "A001", "name": "菜鸟教程", "url": "www.runoob.com", "likes": 61 }, { "id": "A002", "name": "Google", "url": "www.google.com", "likes": 124 }, { "id": "A003", "name": "淘宝", "url": "www.taobao.com", "likes": 45 } ] df = pd.DataFrame(data) print(df) # 从 URL 中读取 JSON 数据 URL = 'https://static.runoob.com/download/sites.json' df = pd.read_json(URL) print(df)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

结果显示:

内嵌的 JSON 数据,inner.json如下:{ "school_name": "ABC primary school", "class": "Year 1", "students": [ { "id": "A001", "name": "Tom", "math": 60, "physics": 66, "chemistry": 61 }, { "id": "A002", "name": "James", "math": 89, "physics": 76, "chemistry": 51 } ] }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

查看json文件

import pandas as pd inner_json = pd.read_json('./inner.json') print(inner_json)- 1

- 2

- 3

使用 json_normalize() 方法将内嵌的数据完整的解析出来

import pandas as pd import json # inner_json = pd.read_json('./inner.json') # 使用 Python JSON 模块载入数据 with open('./inner.json', 'r') as f: inner_json = json.loads(f.read()) # 展平数据 # meta 参数来显示这些元数据 meta=['school_name', 'class'] student_inner = pd.json_normalize(data=inner_json, record_path=['students']) print(student_inner)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

数据嵌套了列表和字典,文件inner_list_dic.json如下:

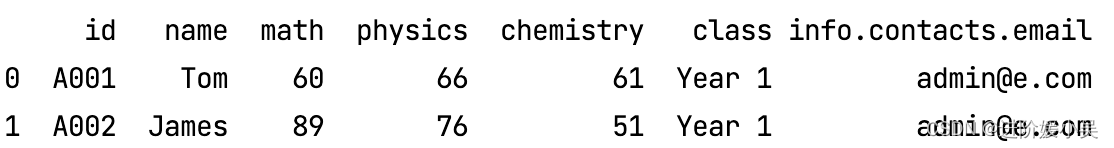

{ "school_name": "local primary school", "class": "Year 1", "info": { "president": "John Kasich", "address": "ABC road, London, UK", "contacts": { "email": "admin@e.com", "tel": "123456789" } }, "students": [ { "id": "A001", "name": "Tom", "math": 60, "physics": 66, "chemistry": 61 }, { "id": "A002", "name": "James", "math": 89, "physics": 76, "chemistry": 51 } ] }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

inner_list_dic.json文件转换为 DataFrame

import pandas as pd import json # inner_json = pd.read_json('./inner_list_dic.json') # 使用 Python JSON 模块载入数据 with open('./inner_list_dic.json', 'r') as f: inner_json = json.loads(f.read()) # 展平数据 # meta 参数来显示这些元数据 meta=['school_name', 'class'] student_inner = pd.json_normalize(data=inner_json, record_path=['students'], meta=['class', ['info', 'contacts', 'email']]) print(student_inner)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

输出结果:

只读取内嵌中的某字段,inner_one.json{ "school_name": "local primary school", "class": "Year 1", "students": [ { "id": "A001", "name": "Tom", "grade": { "math": 60, "physics": 66, "chemistry": 61 } }, { "id": "A002", "name": "James", "grade": { "math": 89, "physics": 76, "chemistry": 51 } } ] }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

需要使用到 glom 模块来处理数据套嵌,glom 模块允许我们使用

.来访问内嵌对象的属性import pandas as pd from glom import glom glom_data = pd.read_json('./inner_one.json') data = glom_data['students'].apply(lambda row: glom(row, 'grade.physics')) print(data)- 1

- 2

- 3

- 4

- 5

- 6

输出:

数据清洗

dropna() 方法删除包含空字段的行。

import pandas as pd df = pd.read_csv('./data.csv') print (df['NUM_BEDROOMS']) # 显示改列 print (df['NUM_BEDROOMS'].isnull()) #通过 isnull() 判断各个单元格是否为空,True表示为空- 1

- 2

- 3

- 4

Pandas 把 n/a 和 NA 当作空数据

import pandas as pd missing_values = ["n/a", "na", "--"] # 指定空数据类型 df = pd.read_csv('./data.csv', na_values = missing_values) new_df = df.dropna() # 删除包含空数据的行 new_df = df.dropna(inplace = True) # 修改源数据 DataFrame, 可以使用 inplace = True 参数 print(new_df.to_string())- 1

- 2

- 3

- 4

- 5

- 6

移除指定列有空值的行

import pandas as pd df = pd.read_csv('./data.csv') df.dropna(subset=['col2'], inplace = True) print(df.to_string())- 1

- 2

- 3

- 4

- 5

fillna() 方法来替换一些空字段

import pandas as pd df = pd.read_csv('./data.csv') df.fillna(123, inplace = True) # 用 123 替换空字段 # 指定某一个列来替换数据 df['PID'].fillna(123, inplace = True) # 用 12345 替换 PID 为空数据 print(df.to_string())- 1

- 2

- 3

- 4

- 5

- 6

- 7

替换空单元格的常用方法是计算列的均值、中位数值或众数。

Pandas使用 mean()、median() 和 mode() 方法计算列的均值(所有值加起来的平均值)、中位数值(排序后排在中间的数)和众数(出现频率最高的数)。

import pandas as pd df = pd.read_csv('./data.csv') # 用 mean() 方法计算列的均值并替换空单元格 x_mean = df["ST_NUM"].mean() df["ST_NUM"].fillna(x_mean, inplace = True) # 用 median() 方法计算列的中位数并替换空单元格 x_median = df["ST_NUM"].median() df["ST_NUM"].fillna(x_median, inplace = True) # 用 mode() 方法计算列的众数并替换空单元格 x_mode = df["ST_NUM"].mode() df["ST_NUM"].fillna(x_mode, inplace = True) print(df.to_string())- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

Pandas 清洗格式错误数据

格式化日期

import pandas as pd # 第三个日期格式错误 data = { "Date": ['2020/12/01', '2020/12/02' , '20201226'], "duration": [50, 40, 45] } df = pd.DataFrame(data, index = ["day1", "day2", "day3"]) df['Date'] = pd.to_datetime(df['Date']) print(df.to_string())- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

输出

Date duration day1 2020-12-01 50 day2 2020-12-02 40 day3 2020-12-26 45- 1

- 2

- 3

- 4

替换错误年龄的数据

import pandas as pd person = { "name": ['Google', 'Runoob' , 'Taobao'], "age": [50, 40, 12345] # 12345 年龄数据是错误的 } df = pd.DataFrame(person) df.loc[2, 'age'] = 30 # 修改数据 # 设置条件语句 # for x in df.index: # if df.loc[x, "age"] > 120: # df.loc[x, "age"] = 120 # 大于120的修改为120 # # df.drop(x, inplace=True) # 将错误数据的行删除 print(df.to_string())- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

输出:

name age

0 Google 50

1 Runoob 40

2 Taobao 30清洗重复数据

可以使用 duplicated() 和 drop_duplicates() 方法

如果对应的数据是重复的,duplicated() 会返回 True,否则返回 False。

删除重复数据,可以直接使用drop_duplicates() 方法

import pandas as pd person = { "name": ['Google', 'Runoob', 'Runoob', 'Taobao'], "age": [50, 40, 40, 23] } df = pd.DataFrame(person) print(df.duplicated()) df.drop_duplicates(inplace = True) # 删除重复数据 print(df)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

输出结果:

0 False

1 False

2 True

3 False

| | |

|–|–|

| | |

dtype: bool删除重复数据

name age

0 Google 50

1 Runoob 40

3 Taobao 23其他

1

解压文件

# 解压缩zip文件 import zipfile f = zipfile.ZipFile("../input/A榜/toUserA.zip",'r') # 原压缩文件在服务器的位置 for file in f.namelist(): f.extract(file,"../output/") #解压到的位置 f.close()- 1

- 2

- 3

- 4

- 5

- 6

# 判断是否有效的zipfile import zipfile zipfilename = '../input/A榜/toUserA.zip' print(zipfile.is_zipfile(zipfilename)) zfile = zipfile.ZipFile(zipfilename, 'r') # print(zfile) # 读取所有文件 with zipfile.ZipFile(zipfilename, 'r') as zfile: print(zfile.infolist()) # 抽取文件 with zipfile.ZipFile(zipfilename, 'r') as zfile: zfile.extractall('../input/A榜/')- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

读取基本信息和可视化

import pandas as pd train_data = pd.read_csv('../output/toUserA/train.csv') train_data.head(50) train_data.info() # 去重显示userid train_data.userid.drop_duplicates() # from IPython.display import display, HTML from IPython.display import display train_data.info() # 查看训练集基本信息 display(train_data.shape) # 查看训练集数据的维度 # 使用pandas_profiling模块工具一键生成探索性数据分析报告 import pandas_profiling as ppf ppf.ProfileReport(train_data) # 一键进行探索性可视化分析- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

2

文献导出并筛选

下载搜索到的文献csv表格 ,并修改名字为sample

代码:import pandas as pd df = pd.read_csv('sample.csv') # 筛选出含有title中含有'标注' biaozhu=df[df['title'].str.contains('标注')] print(biaozhu.count()) # 统计个数 biaozhu.to_excel("sample589.xlsx") # 保存为excel # 筛选出含有title中含有'图像标注' tuxiang=df[df['title'].str.contains('图像标注')] tuxiang.to_excel("sample89.xlsx") # 保存为excel # 合并两个表格,并保存 result = pd.concat([biaozhu, tuxiang], sort=Fasle) writer = pd.ExcelWriter('result.xlsx') result.to_excel(writer,index=False) writer.save()- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

3

导入所需的库

#基础 import numpy as np import pandas as pd import time #绘图 import seaborn as sns import matplotlib.pyplot as plt %matplotlib inline #模型 from sklearn.linear_model import Lasso, LassoCV, ElasticNet, ElasticNetCV, Ridge, RidgeCV from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor, StackingRegressor from mlxtend.regressor import StackingCVRegressor from sklearn.svm import SVR import lightgbm as lgb import xgboost as xgb #模型相关 from sklearn.pipeline import make_pipeline from sklearn.preprocessing import RobustScaler from sklearn.model_selection import KFold, cross_val_score from sklearn.metrics import mean_squared_error #忽略警告 import warnings def ignore_warn(*args, **kwargs): pass warnings.warn = ignore_warn- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

读取数据集,对正偏斜的目标值取对数处理

train = pd.read_csv('train_data.csv') test = pd.read_csv('test_data.csv') print('The shape of training data:', train.shape) print('The shape of testing data:', test.shape) # 画图看数据分布 from scipy.stats import skew, kurtosis, norm y = train['SalePrice'] print('Skewness of target:', y.skew()) print('kurtosis of target:', y.kurtosis()) sns.distplot(y, fit=norm); # 未处理的目标值明显右偏,不满足正态分布 y = np.log1p(y) print('Skewness of target:', y.skew()) print('kurtosis of target:', y.kurtosis()) sns.distplot(y, fit=norm); # 处理后的目标值接近正态分布 train = train.drop('SalePrice', axis=1) #检查训练集与测试集的维度是否一致 print('The shape of training data:', train.shape) print('The length of y:', len(y)) print('The shape of testing data:', test.shape)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

定义交叉验证策略及评估方法

#采用十折交叉验证 n_folds = 10 def rmse_cv(model): kf = KFold(n_folds, shuffle=True, random_state=20) rmse = np.sqrt(-cross_val_score(model, train.values, y, scoring='neg_mean_squared_error', cv=kf)) return(rmse)- 1

- 2

- 3

- 4

- 5

- 6

- 7

单个模型参数设置

采用六个模型:- Lasso

- ElasticNet

- Ridge

- Gradient Boosting

- LightGBM

- XGBoost

#Lasso lasso_alpha = [0.00005, 0.0001, 0.0002, 0.0005, 0.001, 0.002, 0.005, 0.01, 0.02, 0.05, 0.1, 0.2, 0.5, 1.0] lasso = make_pipeline(RobustScaler(), LassoCV(alphas=lasso_alpha, random_state=2)) #ElasticNet enet_beta = [0.1, 0.2, 0.5, 0.6, 0.8, 0.9] enet_alpha = [0.00005, 0.0001, 0.0002, 0.0005, 0.001, 0.002, 0.005, 0.01] ENet = make_pipeline(RobustScaler(), ElasticNetCV(l1_ratio=enet_beta, alphas=enet_alpha, random_state=12)) #Ridge rid_alpha = [0.00005, 0.0001, 0.0002, 0.0005, 0.001, 0.002, 0.005, 0.01, 0.02, 0.05, 0.1, 0.2, 0.5, 1.0] rid = make_pipeline(RobustScaler(), RidgeCV(alphas=rid_alpha)) #Gradient Boosting gbr_params = {'loss': 'huber', 'criterion': 'mse', 'learning_rate': 0.1, 'n_estimators': 600, 'max_depth': 4, 'subsample': 0.6, 'min_samples_split': 20, 'min_samples_leaf': 5, 'max_features': 0.6, 'random_state': 32, 'alpha': 0.5} gbr = GradientBoostingRegressor(**gbr_params) #LightGBM lgbr_params = {'learning_rate': 0.01, 'n_estimators': 1850, 'max_depth': 4, 'num_leaves': 20, 'subsample': 0.6, 'colsample_bytree': 0.6, 'min_child_weight': 0.001, 'min_child_samples': 21, 'random_state': 42, 'reg_alpha': 0, 'reg_lambda': 0.05} lgbr = lgb.LGBMRegressor(**lgbr_params) #XGBoost xgbr_params = {'learning_rate': 0.01, 'n_estimators': 3000, 'max_depth': 5, 'subsample': 0.6, 'colsample_bytree': 0.7, 'min_child_weight': 3, 'seed': 52, 'gamma': 0, 'reg_alpha': 0, 'reg_lambda': 1} xgbr = xgb.XGBRegressor(**xgbr_params)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

单个模型评估

models_name = ['Lasso', 'ElasticNet', 'Ridge', 'Gradient Boosting', 'LightGBM', 'XGBoost'] models = [lasso, ENet, rid, gbr, lgbr, xgbr] for i, model in enumerate(models): score = rmse_cv(model) print('{} score: {}({})'.format(models_name[i], score.mean(), score.std()))- 1

- 2

- 3

- 4

- 5

设置Stacking模型参数

stack_model = StackingCVRegressor(regressors=(lasso, ENet, rid, gbr, lgbr, xgbr), meta_regressor=lasso, use_features_in_secondary=True)- 1

在整个训练集上训练各模型

#Lasso lasso_trained = lasso.fit(np.array(train), np.array(y)) #ElasticNet ENet_trained = ENet.fit(np.array(train), np.array(y)) #Ridge rid_trained = rid.fit(np.array(train), np.array(y)) #Gradient Boosting gbr_trained = gbr.fit(np.array(train), np.array(y)) #LightGBM lgbr_trained = lgbr.fit(np.array(train), np.array(y)) #XGBoost xgbr_trained = xgbr.fit(np.array(train), np.array(y)) #Stacking stack_model_trained = stack_model.fit(np.array(train), np.array(y))- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

评估各个模型在完整训练集上的表现

def rmse(y, y_preds): return np.sqrt(mean_squared_error(y, y_preds)) models.append(stack_model) models_name.append('Stacking_model') for i, model in enumerate(models): y_preds = model.predict(np.array(train)) model_score = rmse(y, y_preds) print('RMSE of {}: {}'.format(models_name[i], model_score))- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

提交各个模型的预测结果

sample_submission = pd.read_csv('sample_submission.csv') for i, model in enumerate(models): preds = model.predict(np.array(test)) submission = pd.DataFrame({'Id': sample_submission['Id'], 'SalePrice': np.expm1(preds)}) submission.to_csv('House_Price_submission_'+models_name[i]+'_optimation.csv', index=False) print('{} finished.'.format(models_name[i]))- 1

- 2

- 3

- 4

- 5

- 6

均值融合

preds_in_train = np.zeros((len(y), len(models))) for i, model in enumerate(models): preds_in_train[:, i] = model.predict(np.array(train)) average_preds_in_train = preds_in_train.mean(axis=1) average_score = rmse(y, average_preds_in_train) print('RMSE of average model on training data:', average_score) #提交均值融合预测结果 preds_in_test = np.zeros((len(test), len(models))) for i, model in enumerate(models): preds_in_test[:, i] = model.predict(np.array(test)) average_preds_in_test = preds_in_test.mean(axis=1) average_submission = pd.DataFrame({'Id': sample_submission['Id'], 'SalePrice': np.expm1(average_preds_in_test)}) average_submission.to_csv('House_Price_submission_average_model_optimation.csv', index=False)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

权值融合

model_weights = [0.15, 0.12, 0.08, 0.08, 0.12, 0.15, 0.3] weight_preds_in_train = np.matmul(preds_in_train, model_weights) weight_score = rmse(y, weight_preds_in_train) print('RMSE of weight model on training data:', weight_score) #提交权值融合预测结果 weight_preds_in_test = np.matmul(preds_in_test, model_weights) weight_submission = pd.DataFrame({'Id': sample_submission['Id'], 'SalePrice': np.expm1(weight_preds_in_test)}) weight_submission.to_csv('House_Price_submission_weight_model_optimation.csv', index=False)- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

保存预测结果

#保存训练集上的预测结果 train_prediction = pd.DataFrame(preds_in_train, columns=models_name) train_prediction.to_csv('train_prediction_of_7_models.csv', index=False) #保存测试集上的预测结果 test_prediction = pd.DataFrame(preds_in_test, columns=models_name) test_prediction.to_csv('test_prediction_of_7_models.csv', index=False)- 1

- 2

- 3

- 4

- 5

- 6

- 7

Kaggle:Telco-Customer churn(电信公司用户流失预测)

这个比赛内容有点多,下次再分享!参考:

-

相关阅读:

fslmaths/fslstats/fslmeants

Linux内核4.14版本——I2C子系统(5)_I2C死锁相关

Ubuntu开机后图像化界面消失只有命令行界面

我的有趣的英语学习经历

postgresql之page分配管理(一)

git学习——第5节 远程仓库

Ubuntu 16.04升级OpenSSH

lv3 嵌入式开发-8 linux shell脚本函数

Java后端底座从无到有的搭建(随笔)

【SQL Server】数据库开发指南(六)索引和视图的使用技巧、方法与综合应用

- 原文地址:https://blog.csdn.net/wuli_xin/article/details/126459047