
12. Sequential

1. Introduction to Sequential

torch.nn.Sequential(*args)
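A minimal sketch of what the container does. It uses a toy stack whose layer sizes are arbitrary and chosen only for illustration. Calling the Sequential runs each submodule in the order it was passed, and integer indexing returns the individual layers:

```python
import torch
from torch import nn

# Toy stack; the sizes 4 -> 8 -> 2 are arbitrary, for illustration only
model = nn.Sequential(
    nn.Linear(4, 8),
    nn.ReLU(),
    nn.Linear(8, 2),
)

x = torch.randn(3, 4)   # batch of 3 samples with 4 features each
y = model(x)            # runs Linear -> ReLU -> Linear in order

# The same computation written out by hand, indexing into the container
manual = model[2](model[1](model[0](x)))

print(y.shape)                 # torch.Size([3, 2])
print(torch.equal(y, manual))  # True
```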

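The example in the official documentation shows that Sequential bundles several layers into a single module. This makes the network code simpler and the model easier to visualize.

2. Reproducing the network model for training on CIFAR-10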

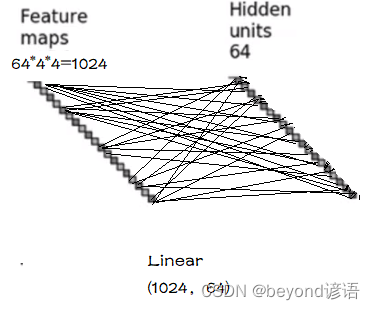

The "Hidden units" in this figure are in fact just two linear layers.

With the hidden layers expanded, the full network architecture is as follows:

① The input is a 3-channel 32×32 image: (C, H, W) = (3, 32, 32).

② A convolution with a 5×5 kernel produces a (32, 32, 32) feature map. The spatial size (H, W) is unchanged by the 5×5 kernel, so the convolution layer must have padded the input.

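The official Conv2d documentation gives this output-size formula for the convolution layer. W_out follows the same formula with index [1]:

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding}[0] - \text{dilation}[0] \times (\text{kernel\_size}[0] - 1) - 1}{\text{stride}[0]} + 1 \right\rfloor$$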

Working it out:
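Substitute this layer's values into the formula: H_in = H_out = 32, kernel_size = 5, stride = 1, dilation = 1. Here padding stands for padding[0], since the layer uses the same value in both directions. With stride = 1 the fraction is already an integer, so the floor has no effect:

$$32 = \left\lfloor \frac{32 + 2 \times \text{padding} - 1 \times (5 - 1) - 1}{1} + 1 \right\rfloor = 28 + 2 \times \text{padding} \;\Longrightarrow\; \text{padding} = 2$$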

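So padding = 2, meaning two rings of zero padding are added around the border. The channel count goes from 3 to 32 because the layer uses 32 kernels instead of one, and each kernel produces one output channel.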

Final parameters: input 3 channels; output 32 channels; stride = 1; padding = 2; dilation = 1 (default); kernel_size = 5.
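The same arithmetic can be written as a small Python helper. The function name `conv_out_size` is our own and is not part of PyTorch. The helper implements the output-size formula from the Conv2d documentation. The MaxPool2d documentation gives the same formula, with stride defaulting to kernel_size, so the helper also accounts for the halving in the pooling steps below:

```python
import math


def conv_out_size(h_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width per the Conv2d (and MaxPool2d) shape formula."""
    return math.floor(
        (h_in + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1
    )


# Try small paddings and keep the one that leaves a 32x32 input at 32x32 (5x5 kernel)
candidates = [p for p in range(5) if conv_out_size(32, kernel_size=5, padding=p) == 32]
print(candidates)  # [2]

# MaxPool2d(kernel_size=2) defaults to stride=2, halving 32 -> 16
print(conv_out_size(32, kernel_size=2, stride=2))  # 16
```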

torch.nn.Conv2d(in_channels=3,out_channels=32,kernel_size=5,stride=1,padding=2)

③ Next, the (32, 32, 32) feature map goes through max pooling with a 2×2 kernel, which produces a (32, 16, 16) feature map.

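torch.nn.MaxPool2d(kernel_size=2)

④ Next, the (32, 16, 16) feature map is convolved with a 5×5 kernel, which produces a (32, 16, 16) feature map. Again, (H, W) is unchanged by the 5×5 kernel, so this convolution layer must also pad its input.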

The same formula from the official documentation gives padding = 2.

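These two calculations show a general rule. With a 5×5 kernel and stride 1, keeping the output size equal to the input size always requires padding = 2, i.e. two rings of padding. Here the channel count stays at 32, but that does not mean a single kernel was used. The layer has 32 kernels, each spanning all 32 input channels, and each kernel produces one output channel.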

Final parameters: input 32 channels; output 32 channels; stride = 1; padding = 2; dilation = 1 (default); kernel_size = 5.
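The kernel count can be checked quickly with the parameters listed above. A Conv2d weight has shape (out_channels, in_channels, kernel_height, kernel_width). This layer therefore holds 32 kernels, each 5×5 and spanning all 32 input channels, and each kernel contributes one output channel. The dummy input here is only an illustration:

```python
import torch
from torch import nn

conv_2 = nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)

# Weight layout: (out_channels, in_channels, kernel_h, kernel_w)
print(conv_2.weight.shape)  # torch.Size([32, 32, 5, 5])

# Feed a dummy (32, 16, 16) feature map with a batch dimension of 1
out = conv_2(torch.zeros((1, 32, 16, 16)))
print(out.shape)  # torch.Size([1, 32, 16, 16])
```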

torch.nn.Conv2d(in_channels=32,out_channels=32,kernel_size=5,stride=1,padding=2)

⑤ Next, the (32, 16, 16) feature map goes through max pooling with a 2×2 kernel, which produces a (32, 8, 8) feature map.

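torch.nn.MaxPool2d(kernel_size=2)

⑥ The (32, 8, 8) feature map then goes into a convolution layer with a 5×5 kernel, which produces a (64, 8, 8) feature map. (H, W) is again unchanged, so the layer pads its input, and the two previous calculations give padding = 2. The channel count goes from 32 to 64 because the layer uses 64 kernels, and each kernel produces one output channel.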

torch.nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)

⑦ The (64, 8, 8) feature map then goes through max pooling with a 2×2 kernel, which produces a (64, 4, 4) feature map.

torch.nn.MaxPool2d(kernel_size=2)

⑧ The (64, 4, 4) feature map is then flattened into a 1024-dimensional feature vector (64 × 4 × 4 = 1024).

torch.nn.Flatten()
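`Flatten` defaults to start_dim=1 and end_dim=-1. It keeps the batch dimension and merges only (C, H, W), giving 64 × 4 × 4 = 1024. Here is a small check with a dummy batch of 64, where the batch size is arbitrary:

```python
import torch
from torch import nn

# Dummy batch: 64 samples, each a (64, 4, 4) feature map
x = torch.zeros((64, 64, 4, 4))

flat = nn.Flatten()(x)  # start_dim=1 keeps dim 0 (the batch) intact
print(flat.shape)       # torch.Size([64, 1024])

# torch.flatten with its default start_dim=0 would also merge the batch dimension
print(torch.flatten(x).shape)  # torch.Size([65536])
```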

⑨ The 1024 features are fed into the first Linear layer, which outputs 64 features.

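torch.nn.Linear(1024,64)

⑩ Finally, the 64 features pass through the second Linear layer. It outputs 10 values, one per class, which completes the 10-class classification task on CIFAR-10.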

torch.nn.Linear(64,10)

3. Implementing the network the traditional way

```python
import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter


class Beyond(nn.Module):
    def __init__(self):
        super().__init__()
        # One attribute per layer, following steps ② to ⑩ above
        self.conv_1 = torch.nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool_1 = torch.nn.MaxPool2d(kernel_size=2)
        self.conv_2 = torch.nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool_2 = torch.nn.MaxPool2d(kernel_size=2)
        self.conv_3 = torch.nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)
        self.maxpool_3 = torch.nn.MaxPool2d(kernel_size=2)
        self.flatten = torch.nn.Flatten()
        self.linear_1 = torch.nn.Linear(1024, 64)
        self.linear_2 = torch.nn.Linear(64, 10)

    def forward(self, x):
        # Chain the layers by hand, in order
        x = self.conv_1(x)
        x = self.maxpool_1(x)
        x = self.conv_2(x)
        x = self.maxpool_2(x)
        x = self.conv_3(x)
        x = self.maxpool_3(x)
        x = self.flatten(x)
        x = self.linear_1(x)
        x = self.linear_2(x)
        return x


beyond = Beyond()
print(beyond)
"""
Beyond(
  (conv_1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool_1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv_2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool_2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv_3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool_3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (flatten): Flatten(start_dim=1, end_dim=-1)
  (linear_1): Linear(in_features=1024, out_features=64, bias=True)
  (linear_2): Linear(in_features=64, out_features=10, bias=True)
)
"""

# Dummy batch of 64 images, each 3x32x32
dummy_input = torch.zeros((64, 3, 32, 32))
print(dummy_input.shape)  # torch.Size([64, 3, 32, 32])
output = beyond(dummy_input)
print(output.shape)  # torch.Size([64, 10])

# Write the network graph to TensorBoard for visualization
writer = SummaryWriter("y_log")
writer.add_graph(beyond, dummy_input)
writer.close()
```


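Run the following in the terminal:

tensorboard --logdir=y_log --port=7870

logdir is the directory containing the event files, and port is the port TensorBoard is served on. This command opens TensorBoard on port 7870. If the port parameter is omitted, TensorBoard uses port 6006 by default.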
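The TensorBoard graph is one way to inspect the model. The shape bookkeeping of steps ① to ⑩ can also be checked by running a dummy image through the layers one at a time. This sketch rebuilds the same layer stack as a plain list of (name, layer) pairs, reusing the attribute names from the Beyond class, so that it runs on its own:

```python
import torch
from torch import nn

# Same layers, in the same order, as the Beyond class above
layers = [
    ("conv_1", nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=2)),
    ("maxpool_1", nn.MaxPool2d(kernel_size=2)),
    ("conv_2", nn.Conv2d(32, 32, kernel_size=5, stride=1, padding=2)),
    ("maxpool_2", nn.MaxPool2d(kernel_size=2)),
    ("conv_3", nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2)),
    ("maxpool_3", nn.MaxPool2d(kernel_size=2)),
    ("flatten", nn.Flatten()),
    ("linear_1", nn.Linear(1024, 64)),
    ("linear_2", nn.Linear(64, 10)),
]

x = torch.zeros((1, 3, 32, 32))  # one dummy 3x32x32 image
shapes = {}
for name, layer in layers:
    x = layer(x)                 # apply one layer and record the resulting shape
    shapes[name] = tuple(x.shape)
    print(f"{name:<10} -> {shapes[name]}")
```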

4. Implementing the network with Sequential

```python
import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter


class Beyond(nn.Module):
    def __init__(self):
        super().__init__()
        # The same nine layers as before, chained in order by Sequential
        self.model = torch.nn.Sequential(
            torch.nn.Conv2d(3, 32, 5, padding=2),
            torch.nn.MaxPool2d(2),
            torch.nn.Conv2d(32, 32, 5, padding=2),
            torch.nn.MaxPool2d(2),
            torch.nn.Conv2d(32, 64, 5, padding=2),
            torch.nn.MaxPool2d(2),
            torch.nn.Flatten(),
            torch.nn.Linear(1024, 64),
            torch.nn.Linear(64, 10),
        )

    def forward(self, x):
        x = self.model(x)
        return x


beyond = Beyond()
print(beyond)
"""
Beyond(
  (model): Sequential(
    (0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Flatten(start_dim=1, end_dim=-1)
    (7): Linear(in_features=1024, out_features=64, bias=True)
    (8): Linear(in_features=64, out_features=10, bias=True)
  )
)
"""

# Dummy batch of 64 images, each 3x32x32
dummy_input = torch.zeros((64, 3, 32, 32))
print(dummy_input.shape)  # torch.Size([64, 3, 32, 32])
output = beyond(dummy_input)
print(output.shape)  # torch.Size([64, 10])

# Write the network graph to TensorBoard for visualization
writer = SummaryWriter("y_log")
writer.add_graph(beyond, dummy_input)
writer.close()
```


Run the same command in the terminal as before to view the graph:

tensorboard --logdir=y_log --port=7870
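When a plain Sequential is printed, its layers are labeled by index (0, 1, 2, ...) rather than by name. nn.Sequential also accepts an OrderedDict, which keeps readable layer names and still runs the layers in insertion order. Here is a sketch with the same layers. The names are our own choice and simply reuse those from section 3:

```python
from collections import OrderedDict

import torch
from torch import nn

model = nn.Sequential(OrderedDict([
    ("conv_1", nn.Conv2d(3, 32, 5, padding=2)),
    ("maxpool_1", nn.MaxPool2d(2)),
    ("conv_2", nn.Conv2d(32, 32, 5, padding=2)),
    ("maxpool_2", nn.MaxPool2d(2)),
    ("conv_3", nn.Conv2d(32, 64, 5, padding=2)),
    ("maxpool_3", nn.MaxPool2d(2)),
    ("flatten", nn.Flatten()),
    ("linear_1", nn.Linear(1024, 64)),
    ("linear_2", nn.Linear(64, 10)),
]))

print(model)         # layers are listed as (conv_1), (maxpool_1), ...
print(model.conv_1)  # named layers are also reachable as attributes
out = model(torch.zeros((64, 3, 32, 32)))
print(out.shape)     # torch.Size([64, 10])
```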

Both implementations behave exactly the same, but the Sequential version is more concise and its TensorBoard graph is somewhat tidier.
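One way to confirm that both versions compute the same function is to copy the parameters of one into the other and compare outputs. In this minimal sketch the two classes are renamed BeyondPlain and BeyondSeq (hypothetical names) so they can coexist in one script. The copy uses zip, which relies on both classes registering their parameters in the same layer order:

```python
import torch
from torch import nn


class BeyondPlain(nn.Module):
    # Section 3 style: one attribute per layer, chained by hand
    def __init__(self):
        super().__init__()
        self.conv_1 = nn.Conv2d(3, 32, 5, padding=2)
        self.maxpool_1 = nn.MaxPool2d(2)
        self.conv_2 = nn.Conv2d(32, 32, 5, padding=2)
        self.maxpool_2 = nn.MaxPool2d(2)
        self.conv_3 = nn.Conv2d(32, 64, 5, padding=2)
        self.maxpool_3 = nn.MaxPool2d(2)
        self.flatten = nn.Flatten()
        self.linear_1 = nn.Linear(1024, 64)
        self.linear_2 = nn.Linear(64, 10)

    def forward(self, x):
        x = self.maxpool_1(self.conv_1(x))
        x = self.maxpool_2(self.conv_2(x))
        x = self.maxpool_3(self.conv_3(x))
        return self.linear_2(self.linear_1(self.flatten(x)))


class BeyondSeq(nn.Module):
    # Section 4 style: the same layers wrapped in one Sequential
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),
            nn.Flatten(), nn.Linear(1024, 64), nn.Linear(64, 10),
        )

    def forward(self, x):
        return self.model(x)


plain, seq = BeyondPlain(), BeyondSeq()

# Parameters come out in the same layer order, so zip pairs them correctly
with torch.no_grad():
    for p_seq, p_plain in zip(seq.parameters(), plain.parameters()):
        p_seq.copy_(p_plain)

x = torch.randn(8, 3, 32, 32)
print(sum(p.numel() for p in plain.parameters()))  # 145578
print(torch.allclose(plain(x), seq(x)))            # True
```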


- Original article: https://blog.csdn.net/qq_41264055/article/details/126450704