-

Flink内核源码(八)Flink Checkpoint

Flink中Checkpoint是使Flink 能从故障恢复的一种内部机制。检查点是 Flink 应用状态的一个一致性副本,在发生故障时,Flink 通过从检查点加载应用程序状态来恢复。

核心思想:是在 input source 端插入 barrier,控制 barrier 的同步 (分界线对齐)来实现 snapshot 的备份 和 exactly-once 语义。

1. checkpoint执行过程

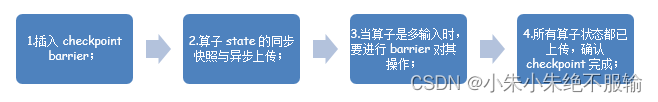

chechpoint 在执行过程中,可以简化为可以简化为以下四大步:

- 在数据流中插入 checkpoint barrier;

- 每执行到当前算子时,对算子 state 状态进行同步快照与异步上传;

- 当算子是多输入时,要进行 barrier 对齐操作;

- 所有算子状态都已上传,确认 checkpoint 完成;

2. checkpoint 执行失败问题分析

Flink 机制是基于 Chandy-Lamport 算法实现的 checkpoint 机制,在正常情况下,能够实现对正常处理流程非常小的影响,来完成状态的备份。但仍存在一些异常情况,可能造成 checkpoint 代价较大。

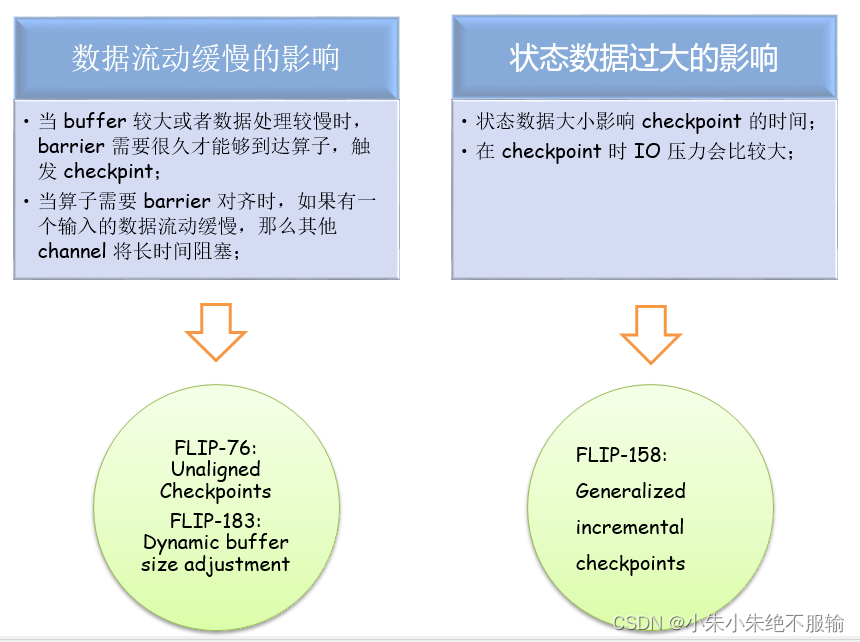

问题1:数据流动缓慢

我们知道 Flink 的 checkpoint 机制是基于 barrier 的,在数据处理过程中,barrier 也需要像普通数据一样,在 buffer 中排队,等待被处理。

-

当 buffer 较大或者数据处理较慢时,barrier 需要很久才能够到达算子,触发 checkpint。尤其是当应用触发反压时,barrier 可能要在 buffer 中流动数个小时,这显然是不合适的。

-

而另外一种情况则更为严重,当算子需要 barrier 对齐时,如果一个输入的 barrier 已经到达,那么该输入 barrier 后面的数据会阻塞住,不能被处理的,需要等待其他输入 barrier 到达之后,才能继续处理。如果有一个输入的数据流动缓慢,那么等待barrier 对齐的过程中,其他输入的数据处理都要暂停,这将严重影响应用的实时性。

针对数据流动缓慢的问题,解决思路有两个:

- 让 buffer 中的数据变少;

- 让 barrier 能跳过 buffer 中缓存的数据;

上述的解决办法正是 Flink 社区提出的两个优化方案,分别为

FLIP-76: Unaligned Checkpoints,FLIP-183: Dynamic buffer size adjustment;问题2:状态数据过大

状态数据的大小也会影响 checkpoint 的时间,并且在 checkpoint 时 IO 压力也会较大。对于像 RocksDB 这种支持增量 checkpoint 的 StateBackend,如果两次 checkpoint 之间状态变化不大,那么增量 checkpoint 能够极大减少状态上传时间。但当前的增量 checkpoint 仍存在一些问题,一是不通用,不是所有的 StateBackend 都能够支持增量 checkpoint,二是存在由于状态合并的影响,增量状态数据仍会非常大。

针对状态数据过大问题,解决思路 如下:

- 社区提出的优化方案为

FLIP-158: Generalized incremental checkpoints,这是一种同样的增量快照方案,能够很大程度上减少 checkpoint 时的状态数据大小

3. 非对齐 checkpoint

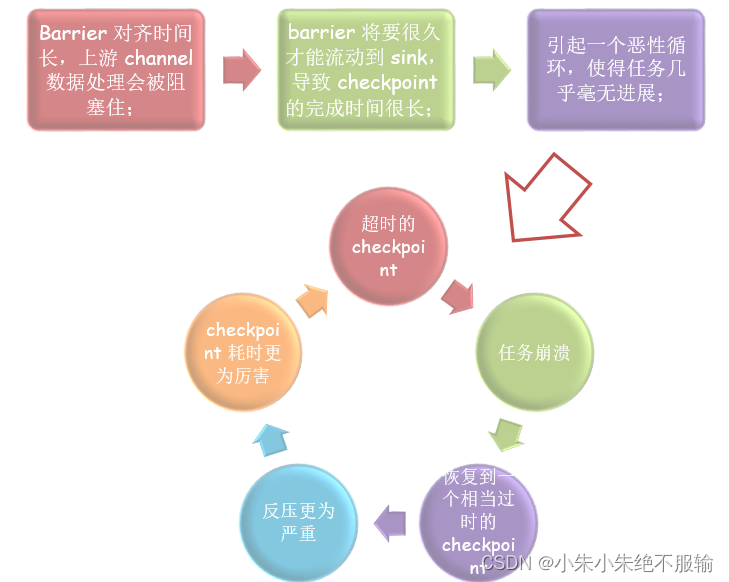

Flink 在做 Checkpoint 时,如果一个 operator 有多个 input,那么要做 barrier 的对齐。对齐阶段始于任何一个 channel 接收到了 barrier,此时该 channel 的数据处理会被阻塞住,直到 operator 接收到所有 channel 的 barrier。一般情况下,这种对齐机制可以很好地工作,但当应用产生反压时,将会面对下面几个问题:

- 反压时,buffer 中缓存了大量的数据,导致 barrier 流动缓慢,对于 barrier 已经到达的channel,由于需要对齐其他 channel 的 barrier,其上游数据处理会被阻塞住;

- barrier 将要很久才能流动到 sink,这导致 checkpoint 的完成时间很长。一个完成时间很长的 checkpoint意味着,其在完成的那个时刻就已经过时了。

- 因为一个过度使用的 pipeline 是相当脆弱的,这可能会引起一个恶性循环:超时的checkpoint、任务崩溃、恢复到一个相当过时的 checkpoint、反压更为严重、checkpoint 更为厉害,这样的一个恶性循环会使得任务几乎毫无进展;

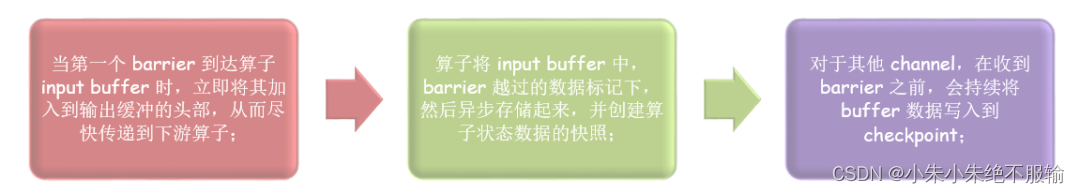

针对上述问题,FLIP-76 提出了一个非阻塞的无需 barrier 对齐的 checkpoint 机制,非对齐 checkpoint 只会短暂阻塞任务一瞬,用于标记 buffer、转发 barrier、创建状态快照,它能够尽可能快地将 barrier 传递到 sink,因此很适用由于某些路径数据处理缓慢,barrier 对齐耗时较多的应用场景。非对齐 checkpoint 最根本的思想就是将缓冲的数据当做算子状态的一部分,该机制仍会使用 barrier,用来触发 checkpoint,

其优势在于:- 即使 operator 的 barrier 没有全部到达,数据处理也不会被阻塞;

- checkpoint 耗时将极大减少,即使对于单输入的 operator 也是如此;

- 即使在不稳定的环境中,任务也会有更多的进展,因为从更新的 checkpoint 恢复能够避免许多重复计算;

- 更快的扩缩容;

非对齐 checkpoint 最根本的思想就是将缓冲的数据当做算子状态的一部分,该机制仍会使用 barrier,用来触发 checkpoint

但非对齐 checkpoint 也存在缺点:

- 需要保存 buffer 数据,状态大小会膨胀,磁盘压力大。尤其是当集群 IO 存在瓶颈时,该问题更为明显

- 恢复时需要额外恢复 buffer 数据,作业重新拉起的耗时可能会很长;

当前 FLIP-76 的设计方案在 Flink 1.11 中已实现。

4. 动态调整 buffer 大小

FLIP-183 也能够有效减少 checkpoint 时, barrier 的对齐时间,从而提高 checkpoint 性能,这实际上与非对齐 checkpoint 的目标一致,所以 FLIP-183 可以视作非对齐 checkpoint 的替代方案。

目前,Flink 的网络内存是静态配置的,所以可以预测出最大的 buffer 空间占用,但是 buffer 中缓存的数据多久可以处理完成是未知的,因此 checkpoint 在做 barrier 对齐时,具体的延迟也无法估算,可能会非常久。实际上,配置一个很大的 buffer 空间除了能够缓存多一些数据,其他并没有什么意义,也不能带来很大的吞吐提升。

FLIP-183 的想法是动态调整 Buffer 大小,只缓存配置时间内可以处理的数据量,这可以很好地控制 checkpoint 时,barrier 对齐所需的时间,同样也可以提升非对齐 checkpoint 的性能,因为 buffer 中缓存更少的数据,意味着 checkpoint 时所需持久化的数据量变小。

Flink 流控机制

Flink 采用

Credit-based流控机制,确保发送端已经发送的任何数据,接收端都具有足够的 Buffer 来接收。流量控制机制基于网络缓冲区的可用性,每个上游输入(A1、A2)在下游(B1、B2)都有自己的一组独占缓冲区,并共享一个本地缓冲池。

本地缓冲池中的缓冲区称为

流动缓冲区(Floating Buffer),因为它们会在输出通道间流动并且可用于每个输入通道。- 数据接收方会将自身的可用 Buffer 作为 Credit 告知数据发送方(1 buffer = 1 credit),每个SubPartition 会跟踪下游接收端的 Credit(也就是可用于接收数据的 Buffer 数目),只有在相应的通道有Credit 的时候,Flink 才会向更底层的网络协议栈发送数据(以 Buffer 为粒度),并且每发送一个 Buffer的数据,相应的通道上的 Credit 会减 1。

- 除了发送数据本身外,数据发送端还会发送相应 SubPartition 中有多少正在排队发送的 Buffer 数(称之为Backlog)给下游。

- 数据接收端会利用这一信息去申请合适数量的 Floating Buffer用于接收发送端的数据,这可以加快发送端堆积数据的处理。接收端会首先申请和 Backlog 数量相等的Buffer,但可能无法申请到全部,甚至一个都申请不到,这时接收端会利用已经申请到的 Buffer 进行数据接收,并监听是否有新的Buffer 可用。

FLIP-183 中的 Buffer 不是静态配置的,而是基于吞吐量动态调整的。关键步骤为:

- subtask 计算吞吐量,然后根据配置计算 buffer 大小

- 在 subtask 的整个生命周期中,subtask 会观测吞吐量的变化,然后调整 buffer 大小

- 下游 subtask 发送新的 buffer 大小以及可用 buffer 到上游对应的 subpartition

- 上游 subpartition 根据接收到的信息,调整新分配的 buffer 大小,当前 filled buffer 不变

- 上游发送 filled buffer 及队列中 filled buffer 的数量

当前 FLIP-183 的设计方案在 Flink 1.14 中已实现。

5. 通用增量快照

Flink Checkpoint 分为同步快照和异步上传两部分,同步快照部分操作较快,异步上传时间无法预测。

如果状态数据较大,那么整个 checkpoint 的耗时可能会非常长。虽然像 RocksDB 之类的状态后端提供了增量 checkpoint 能力,但由于数据合并,增量部分的状态数据大小依然是不可控。

所以即使采用增量 checkpoint,耗时仍可能非常久,并且也不是所有的状态后端都支持增量 checkpoint。

针对全量 checkpoint 与 增量 checkpoint 不稳定问题,FLIP-158 提出了一种基于日志的、通用的增量 checkpoing 方案,能够使得 Flink checkpoint 过程快速稳定。

RocksDBStatebackend 的增量设计方案在状态数据变化不大的情况下,能够极大减少 checkpoint 状态上传时长。但也有一定局限:

FLIP-158 提出了一种通用的增量快照方案,其核心思想是基于 state changelog,changelog 能够细粒度地记录状态数据的变化,具体描述如下:- 有状态算子除了将状态变化写入状态后端外,再写一份到预写日志中;

- 预写日志上传到持久化存储后,operator 确认 checkpoint 完成;

- 独立于 checkpoint 之外,state table 周期性上传,这些上传到持久存储中的数据被称为物化状态;

- state stable 上传后,之前部分预写日志就没用了,可以被裁剪;

FLIP-158 的设计方案具有如下优势:

- 可以显著减少 checkpoint 耗时,从而能够支持更为频繁的 checkpoint;

- checkpoint 间隔小,任务恢复时,需要重新处理的数据量变少;

- 因为事务 sink 在 checkpoint 时提交,所以更为频繁的 checkpoint 能够降低事务 Sink 的时延;

- checkpoint 的时间间隔更容易设置。checkpoint 耗时主要取决于需要持久化数据的大小

6. checkpoint执行过程

Flink1.12版本

Checkpoint作为Flink的核心容错机制,在Flink故障或人为重启后能快速恢复挂掉时的中间状态。Checkpoint流程顺序大概如下:

JM启动Checkpoint->远程ExecutionRPC发送Checkpoint通知->StreamTask的subtaskCheckpointCoordinator触发checkpoint->生成并广播CheckpointBarrier->执行快照->向jobMaster发送完成报告

1. JM启动Checkpoint

最开始在JM选主成功后会启动Scheduler,这里Scheduler除了用来分发部署Task外还用来生成Checkpoint的周期线程并通过JM上ExecutionGraph的CheckpointCoordinator并触发Checkpoint

进入启动Scheduler调度入口:

protected void startSchedulingInternal() { log.info("Starting scheduling with scheduling strategy [{}]", schedulingStrategy.getClass().getName()); // 通知ExecutionGraph的CheckpointCoordinator改变状态为running并准备执行checkpoint // 生成的ScheduledTriggerRunnable主要包含checkpoint周期调用(CheckpointCoordinator.startCheckpointScheduler)的逻辑 // JM的Checkpoint会判断Execution的assignedResource是否为空,否则不会向TM提交Checkpoint // 当提交申请TM部署slot成功后,Execution的assignedResource才会被赋值,此时JM的Checkpoint周期线程才会被往后继续执行调用TM的task执行checkpoint prepareExecutionGraphForNgScheduling(); schedulingStrategy.startScheduling(); } // 转化JobStatus为Running public void transitionToRunning() { if (!transitionState(JobStatus.CREATED, JobStatus.RUNNING)) { throw new IllegalStateException("Job may only be scheduled from state " + JobStatus.CREATED); } } @Override // 当job状态改为running的时候才真正开始触发Checkpoint public void jobStatusChanges(JobID jobId, JobStatus newJobStatus, long timestamp, Throwable error) { if (newJobStatus == JobStatus.RUNNING) { // start the checkpoint scheduler coordinator.startCheckpointScheduler(); } else { // anything else should stop the trigger for now coordinator.stopCheckpointScheduler(); } } private ScheduledFuture<?> scheduleTriggerWithDelay(long initDelay) { // 底层调用的是ExecutionGraph创建的SingleThreadScheduledExecutor.scheduleAtFixedRate return timer.scheduleAtFixedRate( new ScheduledTrigger(), // 执行线程 initDelay // 初始化延迟时间 , baseInterval // 线程调用间隔 , TimeUnit.MILLISECONDS // 计时单位毫秒 ); }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

进入ScheduledTrigger线程逻辑

- ExecutionGraph的CheckpointCoordinator开始触发Checkpoint,CheckpointCoordinator初始化原子自增CheckpointID和state存储位置

- 每个OperatorCoordinatorCheckpointContext(创建JobVertex时划分出的SourceOperator)开始依次调用checkpoint

- 这里的checkpoint其实就是JM把同一个当前CheckpointID写入到state存储位置,也就仅仅是JM端的CheckpointID初始化和存储

- 当CheckpointID写入完成并未发现任何异常后开始遍历所有executions依次调用Checkpoint

- 由于JM的Checkpoint周期线程启动较早,只有当TM和Slot申请完毕后才会向Execution提交CheckpointBarrier

private final class ScheduledTrigger implements Runnable { @Override public void run() { try { triggerCheckpoint(true); } catch (Exception e) { LOG.error("Exception while triggering checkpoint for job {}.", job, e); } } } private void startTriggeringCheckpoint(CheckpointTriggerRequest request) { try { synchronized (lock) { preCheckGlobalState(request.isPeriodic); } final Execution[] executions = getTriggerExecutions(); final Map<ExecutionAttemptID, ExecutionVertex> ackTasks = getAckTasks(); // we will actually trigger this checkpoint! Preconditions.checkState(!isTriggering); isTriggering = true; final long timestamp = System.currentTimeMillis(); final CompletableFuture<PendingCheckpoint> pendingCheckpointCompletableFuture = // 初始化原子自增CheckpointID和Checkpoint存储State位置 initializeCheckpoint(request.props, request.externalSavepointLocation) .thenApplyAsync( (checkpointIdAndStorageLocation) -> createPendingCheckpoint( timestamp, request.props, ackTasks, request.isPeriodic, checkpointIdAndStorageLocation.checkpointId, checkpointIdAndStorageLocation.checkpointStorageLocation, request.getOnCompletionFuture()), timer); final CompletableFuture<?> coordinatorCheckpointsComplete = pendingCheckpointCompletableFuture .thenComposeAsync((pendingCheckpoint) -> //coordinatorsToCheckpoint存放的是在JobVertex创建过程中存储的所有source的jobVertex OperatorCoordinatorCheckpoints.triggerAndAcknowledgeAllCoordinatorCheckpointsWithCompletion( coordinatorsToCheckpoint, pendingCheckpoint, timer), timer); .... // no exception, no discarding, everything is OK final long checkpointId = checkpoint.getCheckpointId(); //未发现任何异常后开始调用TM上每个Execution的Checkpoint snapshotTaskState( timestamp, checkpointId, checkpoint.getCheckpointStorageLocation(), request.props, executions, request.advanceToEndOfTime); .... } public static CompletableFuture<AllCoordinatorSnapshots> triggerAllCoordinatorCheckpoints( final Collection<OperatorCoordinatorCheckpointContext> coordinators, final long checkpointId) throws Exception { // 每个coordinator对应一个SourcejobVertex final Collection<CompletableFuture<CoordinatorSnapshot>> individualSnapshots = new ArrayList<>(coordinators.size()); for (final OperatorCoordinatorCheckpointContext coordinator : coordinators) { // JM根据SourceOperator个数依次持久化checkpointId。 final CompletableFuture<CoordinatorSnapshot> checkpointFuture = triggerCoordinatorCheckpoint(coordinator, checkpointId); individualSnapshots.add(checkpointFuture); } return FutureUtils.combineAll(individualSnapshots).thenApply(AllCoordinatorSnapshots::new); } public static CompletableFuture<CoordinatorSnapshot> triggerCoordinatorCheckpoint( final OperatorCoordinatorCheckpointContext coordinatorContext, final long checkpointId) throws Exception { final CompletableFuture<byte[]> checkpointFuture = new CompletableFuture<>(); // 持久化checkpointId到state中 coordinatorContext.checkpointCoordinator(checkpointId, checkpointFuture); // checkpointId写入完成后封装成CoordinatorSnapshot返回 return checkpointFuture.thenApply( (state) -> new CoordinatorSnapshot( coordinatorContext, new ByteStreamStateHandle(coordinatorContext.operatorId().toString(), state)) ); }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

2. 远程ExecutionRPC发送Checkpoint通知

当JM端初始化完CheckpointID后,开始依次调用TM上每个Execution的Checkpoint,这里的executions是从source端开始的有序集合

private void snapshotTaskState( long timestamp, long checkpointID, CheckpointStorageLocation checkpointStorageLocation, CheckpointProperties props, Execution[] executions, boolean advanceToEndOfTime) { final CheckpointOptions checkpointOptions = new CheckpointOptions( props.getCheckpointType(), checkpointStorageLocation.getLocationReference(), isExactlyOnceMode, props.getCheckpointType() == CheckpointType.CHECKPOINT && unalignedCheckpointsEnabled); // send the messages to the tasks that trigger their checkpoint // 通过rpcGateway依次调用每个taskExecutor上每个task的checkpoint for (Execution execution: executions) { if (props.isSynchronous()) { execution.triggerSynchronousSavepoint(checkpointID, timestamp, checkpointOptions, advanceToEndOfTime); } else { execution.triggerCheckpoint(checkpointID, timestamp, checkpointOptions); } } } private void triggerCheckpointHelper(long checkpointId, long timestamp, CheckpointOptions checkpointOptions, boolean advanceToEndOfEventTime) { final CheckpointType checkpointType = checkpointOptions.getCheckpointType(); if (advanceToEndOfEventTime && !(checkpointType.isSynchronous() && checkpointType.isSavepoint())) { throw new IllegalArgumentException("Only synchronous savepoints are allowed to advance the watermark to MAX."); } final LogicalSlot slot = assignedResource; //当JM申请部署TM和Slot完成后 slot才会被赋值 //所以虽然一开始就启动了Checkpoint周期线程但并不会提前触发向TM提交Checkpoint任务 if (slot != null) { //调用RpcTaskManagerGateway final TaskManagerGateway taskManagerGateway = slot.getTaskManagerGateway(); taskManagerGateway.triggerCheckpoint(attemptId, getVertex().getJobId(), checkpointId, timestamp, checkpointOptions, advanceToEndOfEventTime); } else { LOG.debug("The execution has no slot assigned. This indicates that the execution is no longer running."); } }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

3. StreamTask的subtaskCheckpointCoordinator触发checkpoint

进入TM的SubtaskCheckpointCoordinatorImpl的checkpoint核心逻辑,主要分为五步:

- 从CheckpointMetaData获取CheckpointId并检查是否需要终止checkpoint,如果是发送CancelCheckpointMarker事件对象

- 调用是否需要预处理

- 生成CheckpointBarrier并广播到下游,Barrier主要是以CheckpointID和timestamp组成,广播主要逻辑是封装Barrier成基于Heap的HybridMemorySegment的Buffer后添加到ResultSubpartition的PrioritizedDeque中并通知下游InpuGate消费

- 写入UnalignedCheckpoint模式下的数据,默认使用仅一次语义的alignedCheckpoint模式,故这里略过

- 执行state和checkpointId快照,完成以上步骤后向JM发送报告

public void checkpointState( CheckpointMetaData metadata,//主要封装了JM生成的CheckpointID和timestamp CheckpointOptions options, CheckpointMetricsBuilder metrics, OperatorChain<?, ?> operatorChain, Supplier<Boolean> isCanceled) throws Exception { .... // Step (0): Record the last triggered checkpointId and abort the sync phase of checkpoint if necessary. //JM端生成的CheckpointID lastCheckpointId = metadata.getCheckpointId(); //首先检查ck是否需要终止 if (checkAndClearAbortedStatus(metadata.getCheckpointId())) { // broadcast cancel checkpoint marker to avoid downstream back-pressure due to checkpoint barrier align. operatorChain.broadcastEvent(new CancelCheckpointMarker(metadata.getCheckpointId())); LOG.info("Checkpoint {} has been notified as aborted, would not trigger any checkpoint.", metadata.getCheckpointId()); return; } // Step (1): Prepare the checkpoint, allow operators to do some pre-barrier work. // The pre-barrier work should be nothing or minimal in the common case. //第一步,预处理,一般我们调用的streamOperator无任何逻辑 operatorChain.prepareSnapshotPreBarrier(metadata.getCheckpointId()); // Step (2): Send the checkpoint barrier downstream // flink1.11版本新引入的Unaligned特性,参考社区FLIP-76,在需要提高checkpoint吞吐量并且不要求数据精准一次性情况下可考虑使用 // 封装优先级buffer后add到ResultSubpartition的PrioritizedDeque队列中,更新buffer和backlog数 // 当notifyDataAvailable=true时 通知下游消费 // 下游CheckpointedInputGate拿到buffer后匹配到是checkpoint事件做出相应动作 operatorChain.broadcastEvent( //创建Barrier,主要封装的CheckpointID和timestamp new CheckpointBarrier(metadata.getCheckpointId(), metadata.getTimestamp(), options), options.isUnalignedCheckpoint()); // Step (3): Prepare to spill the in-flight buffers for input and output // aligned模式直接跳过 if (options.isUnalignedCheckpoint()) { // output data already written while broadcasting event channelStateWriter.finishOutput(metadata.getCheckpointId()); } // Step (4): Take the state snapshot. This should be largely asynchronous, to not impact progress of the // streaming topology Map<OperatorID, OperatorSnapshotFutures> snapshotFutures = new HashMap<>(operatorChain.getNumberOfOperators()); try {// takeSnapshotSync 执行checkpoint核心逻辑的入口 if (takeSnapshotSync(snapshotFutures, metadata, metrics, options, operatorChain, isCanceled)) { // finishAndReportAsync 完成snapshot后,向jobMaster发送报告 finishAndReportAsync(snapshotFutures, metadata, metrics, options); } else { cleanup(snapshotFutures, metadata, metrics, new Exception("Checkpoint declined")); } } catch (Exception ex) { cleanup(snapshotFutures, metadata, metrics, ex); throw ex; } }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

4. 向下游广播checkpointEvent

默认Stream模式下ResultPartitionType是PIPELINED或者PIPELINED_BOUNDED,故这里调用 BufferWritingResultPartition的broadcastEvent

public void broadcastEvent(AbstractEvent event, boolean isPriorityEvent) throws IOException { for (RecordWriterOutput<?> streamOutput : streamOutputs) { streamOutput.broadcastEvent(event, isPriorityEvent); } } public void broadcastEvent(AbstractEvent event, boolean isPriorityEvent) throws IOException { checkInProduceState(); finishBroadcastBufferBuilder(); finishUnicastBufferBuilders(); // 封装成带优先级的buffer并add到(Pipelined/BoundedBlocking)ResultSubpartition的PrioritizedDeque队列中 try (BufferConsumer eventBufferConsumer = EventSerializer.toBufferConsumer(event, isPriorityEvent)) { for (ResultSubpartition subpartition : subpartitions) { // Retain the buffer so that it can be recycled by each channel of targetPartition subpartition.add(eventBufferConsumer.copy(), 0); } } }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

生成基于heap的最小内存数据结构segment并封装成BufferConsumer

public static BufferConsumer toBufferConsumer(AbstractEvent event, boolean hasPriority) throws IOException { final ByteBuffer serializedEvent = EventSerializer.toSerializedEvent(event); //调用基于Heap的HybridMemorySegment构造函数 MemorySegment data = MemorySegmentFactory.wrap(serializedEvent.array()); return new BufferConsumer(data, FreeingBufferRecycler.INSTANCE, getDataType(event, hasPriority)); } public static MemorySegment wrap(byte[] buffer) { return new HybridMemorySegment(buffer, null); }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

添加到ResultSubpartition的PrioritizedDeque中并通知下游消费

private boolean add(BufferConsumer bufferConsumer, int partialRecordLength, boolean finish) { checkNotNull(bufferConsumer); final boolean notifyDataAvailable; int prioritySequenceNumber = -1; synchronized (buffers) { if (isFinished || isReleased) { bufferConsumer.close(); return false; } // Add the bufferConsumer and update the stats //增加buffer到PrioritizedDeque里,优先级高的buffer放入队列头 if (addBuffer(bufferConsumer, partialRecordLength)) { prioritySequenceNumber = sequenceNumber; } updateStatistics(bufferConsumer);//总buffer数+1 increaseBuffersInBacklog(bufferConsumer);//总backlog数+1 notifyDataAvailable = finish || shouldNotifyDataAvailable(); isFinished |= finish; } if (prioritySequenceNumber != -1) { notifyPriorityEvent(prioritySequenceNumber); } //如果可用(比如数据完整,非阻塞模式等) 就通知下游inputGate来消费 if (notifyDataAvailable) { notifyDataAvailable(); } return true; } private boolean addBuffer(BufferConsumer bufferConsumer, int partialRecordLength) { assert Thread.holdsLock(buffers); if (bufferConsumer.getDataType().hasPriority()) { return processPriorityBuffer(bufferConsumer, partialRecordLength); } //生产BufferConsumerWithPartialRecordLength(可能这个buffer只包含了部分record(长度过长溢出到下一个record里了) buffers.add(new BufferConsumerWithPartialRecordLength(bufferConsumer, partialRecordLength)); return false; } //包括checkpoint的事件也会放进来,老版本好像是ArrayDeque,并不支持事件优先级 private final PrioritizedDeque<BufferConsumerWithPartialRecordLength> buffers = new PrioritizedDeque<>();- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

继续调用CreditBasedSequenceNumberingViewReader的notifyReaderNonEmpty

这里调用的是Netty的ChannelPipeline的fireUserEventTriggered向下游监听的availabilityListener发送事件消息

void notifyReaderNonEmpty(final NetworkSequenceViewReader reader) { // The notification might come from the same thread. For the initial writes this // might happen before the reader has set its reference to the view, because // creating the queue and the initial notification happen in the same method call. // This can be resolved by separating the creation of the view and allowing // notifications. // TODO This could potentially have a bad performance impact as in the // worst case (network consumes faster than the producer) each buffer // will trigger a separate event loop task being scheduled. ctx.executor().execute(() -> ctx.pipeline().fireUserEventTriggered(reader)); } //netty4.io.netty.channel包下的ChannelPipeline ChannelPipeline fireUserEventTriggered(Object var1);- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

5. 执行快照

广播完CheckpointBarrier后我们再主要看看执行checkpoint的快照逻辑,这里也会涉及到Flink的State状态管理和持久化方式

private boolean takeSnapshotSync( Map<OperatorID, OperatorSnapshotFutures> operatorSnapshotsInProgress, CheckpointMetaData checkpointMetaData, CheckpointMetricsBuilder checkpointMetrics, CheckpointOptions checkpointOptions, OperatorChain<?, ?> operatorChain, Supplier<Boolean> isCanceled) throws Exception { .... long checkpointId = checkpointMetaData.getCheckpointId(); long started = System.nanoTime(); ChannelStateWriteResult channelStateWriteResult = checkpointOptions.isUnalignedCheckpoint() ? channelStateWriter.getAndRemoveWriteResult(checkpointId) : ChannelStateWriteResult.EMPTY; //解索checkpoint的存储位置(Memory/FS/RocksDB) CheckpointStreamFactory storage = checkpointStorage.resolveCheckpointStorageLocation(checkpointId, checkpointOptions.getTargetLocation()); try { for (StreamOperatorWrapper<?, ?> operatorWrapper : operatorChain.getAllOperators(true)) { if (!operatorWrapper.isClosed()) { operatorSnapshotsInProgress.put( operatorWrapper.getStreamOperator().getOperatorID(), //执行checkpoint入口 buildOperatorSnapshotFutures( checkpointMetaData, checkpointOptions, operatorChain, operatorWrapper.getStreamOperator(), isCanceled, channelStateWriteResult, storage)); } } } finally { checkpointStorage.clearCacheFor(checkpointId); } ... }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

持久化State和checkpointId

void snapshotState( CheckpointedStreamOperator streamOperator, Optional<InternalTimeServiceManager<?>> timeServiceManager, String operatorName, long checkpointId, long timestamp, CheckpointOptions checkpointOptions, CheckpointStreamFactory factory, OperatorSnapshotFutures snapshotInProgress, StateSnapshotContextSynchronousImpl snapshotContext) throws CheckpointException { try { if (timeServiceManager.isPresent()) { checkState(keyedStateBackend != null, "keyedStateBackend should be available with timeServiceManager"); timeServiceManager.get().snapshotState(snapshotContext, operatorName); } //执行需要持久化state的操作 //比如map操作,它生成的是StreamMap属于AbstractUdfStreamOperator子类,里面封装了snapshotState逻辑,如果没实现ck接口就跳过此步骤 streamOperator.snapshotState(snapshotContext); snapshotInProgress.setKeyedStateRawFuture(snapshotContext.getKeyedStateStreamFuture()); snapshotInProgress.setOperatorStateRawFuture(snapshotContext.getOperatorStateStreamFuture()); //DefaultOperatorStateBackend,持久化到内存中 if (null != operatorStateBackend) { snapshotInProgress.setOperatorStateManagedFuture( //持久化当前checkpointId等属性 operatorStateBackend.snapshot(checkpointId, timestamp, factory, checkpointOptions)); } //有HeapKeyedStateBackend,RocksDBKeyedStateBackend等基于Key的持久化方式 if (null != keyedStateBackend) { snapshotInProgress.setKeyedStateManagedFuture( //持久化当前checkpointId等属性 keyedStateBackend.snapshot(checkpointId, timestamp, factory, checkpointOptions)); } } catch (Exception snapshotException) { try { snapshotInProgress.cancel(); } catch (Exception e) { snapshotException.addSuppressed(e); } String snapshotFailMessage = "Could not complete snapshot " + checkpointId + " for operator " + operatorName + "."; try { snapshotContext.closeExceptionally(); } catch (IOException e) { snapshotException.addSuppressed(e); } throw new CheckpointException(snapshotFailMessage, CheckpointFailureReason.CHECKPOINT_DECLINED, snapshotException); } }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

当state持久化完毕后就会把checkpoint也持久到指定的stateBackend中。这样整个快照生成完毕,

最后Flink会调用finishAndReportAsync向Master发送完成报告,而下游的Operator继续重复以上步骤直到Master收到所有节点的完成报告,这时Master会生成CompletedCheckpoint持久化到指定stateBackend中(如果整个Checkpoint中间有超时或者节点挂掉等造成Master无法收集完整的各节点报告则会宣告失败并删除这次所有产生的状态数据),至此 整个Checkpoint结束。

-

相关阅读:

Uniapp自定义动态加载组件(2024.7更新)

【国际化Intl】Flutter 国际化多语言实践

VUE3.0对比VUE2.0

1、配置zabbix邮件报警和微信报警。 2、配置zabbix自动发现和自动注册。

双指针——盛水最多的容器

解决MyBatis-Plus updateById方法更新不了空字符串或null

vue3实现el-table表格单选功能以及默认选中某一行数据

面试官:我们简单聊一下kafka的一些东西吧。

为什么C语言执行效率高,运行快?

【C】关于动态内存的试题及解析

- 原文地址:https://blog.csdn.net/weixin_44052055/article/details/126386069