【cs231n】Lecture 2: Image Classification Pipeline

Assignment 1

- K-Nearest Neighbor

- Linear classifiers: SVM, Softmax

- Two-layer neural network

- Image features

Ⅰ. Image Classification: A Core Task in Computer Vision

- The problem: semantic gap

- Challenges: viewpoint variation

- Challenges: occlusion

- Challenges: background clutter

Ⅱ. Data-Driven Approach

- Collect a dataset of images and labels

- Use Machine Learning to train a classifier

- Evaluate the classifier on new images

```python
def train(images, labels):
    # Machine learning!
    return model
```

```python
def predict(model, test_images):
    # Use model to predict labels
    return test_labels
```

First Classifier: Nearest Neighbor

For the nearest neighbor classifier, `train` simply memorizes all the data and labels:

```python
def train(images, labels):
    # Machine learning!
    return model
```

`predict` returns the label of the most similar training image:

```python
def predict(model, test_images):
    # Use model to predict labels
    return test_labels
```

Example Dataset: CIFAR-10

- 10 classes

- 50,000 training images

- 10,000 testing images

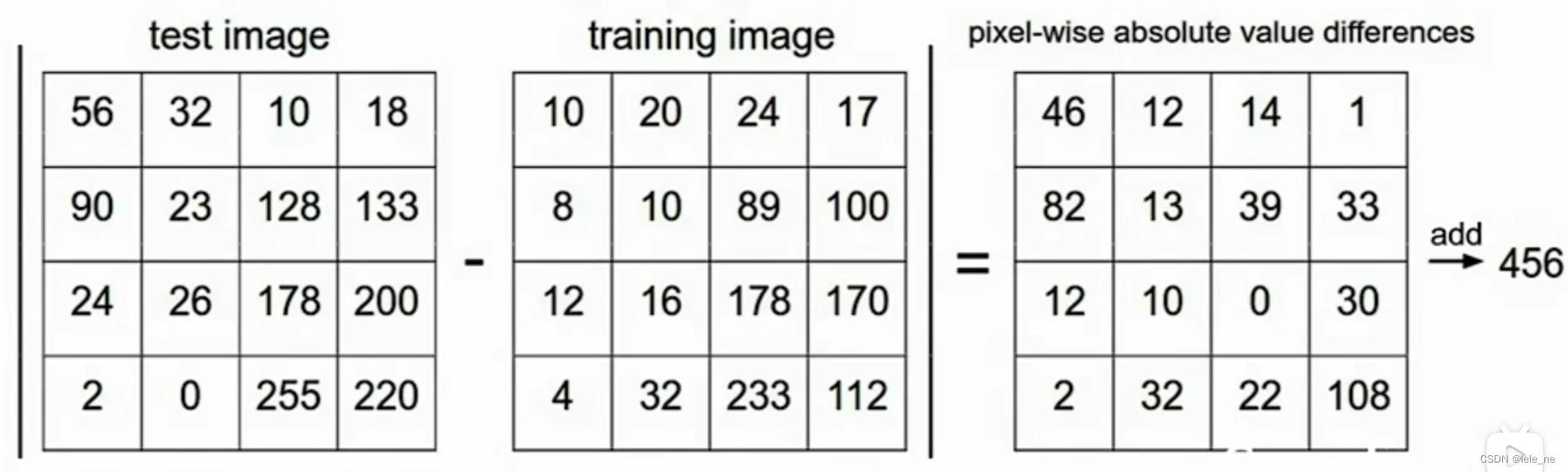

Distance Metric to compare images

L1 distance, where $P$ ranges over the pixels of the two images:

$$d_1(I_1, I_2) = \sum_{P} \left| I_1^P - I_2^P \right|$$
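As a small illustration of the L1 distance, the sketch below compares two made-up 2×2 "images". The pixel values and the helper name `l1_distance` are assumptions chosen for this example, not taken from the lecture. Casting to a signed integer type first is a precaution against wraparound when the inputs are stored as `uint8`.

```python
import numpy as np


def l1_distance(img1, img2):
    """Sum of absolute pixel-wise differences between two same-shaped images."""
    # Cast to a signed type so that uint8 subtraction cannot wrap around.
    a = np.asarray(img1, dtype=np.int64)
    b = np.asarray(img2, dtype=np.int64)
    return int(np.sum(np.abs(a - b)))


# Two hypothetical 2x2 grayscale images (values chosen only for illustration).
I1 = np.array([[56, 32], [90, 23]], dtype=np.uint8)
I2 = np.array([[10, 20], [8, 10]], dtype=np.uint8)

# |56-10| + |32-20| + |90-8| + |23-10| = 46 + 12 + 82 + 13
print(l1_distance(I1, I2))  # 153
```

The distance of an image to itself is 0, and the value grows as more pixels differ or differ by larger amounts.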

Nearest Neighbor Classifier

```python
import numpy as np


class NearestNeighbor:
    def __init__(self):
        pass

    def train(self, X, y):
        """X is N x D where each row is an example. y is 1-dimensional of size N."""
        # The nearest neighbor classifier simply remembers all the training data.
        self.Xtr = X
        self.ytr = y

    def predict(self, X):
        """X is N x D where each row is an example we wish to predict a label for."""
        num_test = X.shape[0]
        # Make sure that the output type matches the input type.
        Ypred = np.zeros(num_test, dtype=self.ytr.dtype)

        # Loop over all test rows.
        for i in range(num_test):
            # Find the nearest training image to the i'th test image
            # using the L1 distance (sum of absolute value differences).
            distances = np.sum(np.abs(self.Xtr - X[i, :]), axis=1)
            # Get the index with the smallest distance.
            min_index = np.argmin(distances)
            # Predict the label of the nearest example.
            Ypred[i] = self.ytr[min_index]

        return Ypred
```
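To see the classifier in action, here is a minimal usage sketch on a toy dataset. It repeats a condensed copy of the class so that it runs on its own; the 2-D points, labels, and variable names are made up for illustration and stand in for flattened image vectors.

```python
import numpy as np


class NearestNeighbor:
    """Condensed copy of the classifier above so this example runs standalone."""

    def train(self, X, y):
        # Training only stores the data.
        self.Xtr = X
        self.ytr = y

    def predict(self, X):
        Ypred = np.zeros(X.shape[0], dtype=self.ytr.dtype)
        for i in range(X.shape[0]):
            # L1 distance from test row i to every training row.
            distances = np.sum(np.abs(self.Xtr - X[i, :]), axis=1)
            Ypred[i] = self.ytr[np.argmin(distances)]
        return Ypred


# Hypothetical toy data: four 2-D training points in two classes.
Xtr = np.array([[0.0, 0.0], [1.0, 0.0], [9.0, 9.0], [10.0, 10.0]])
ytr = np.array([0, 0, 1, 1])
Xte = np.array([[0.5, 0.2], [9.5, 9.0]])
yte = np.array([0, 1])

nn = NearestNeighbor()
nn.train(Xtr, ytr)
pred = nn.predict(Xte)

# Fraction of test points whose predicted label matches the true label.
accuracy = np.mean(pred == yte)
print(pred, accuracy)
```

With real images, each row of `X` would be one image flattened into a vector of D pixel values.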

Q & A:

Q: How fast are training and prediction?

A: With N training examples, training is O(1) and prediction is O(N).

This is bad: we want classifiers that are fast at prediction; slow training is acceptable.

K-Nearest Neighbor Classifier

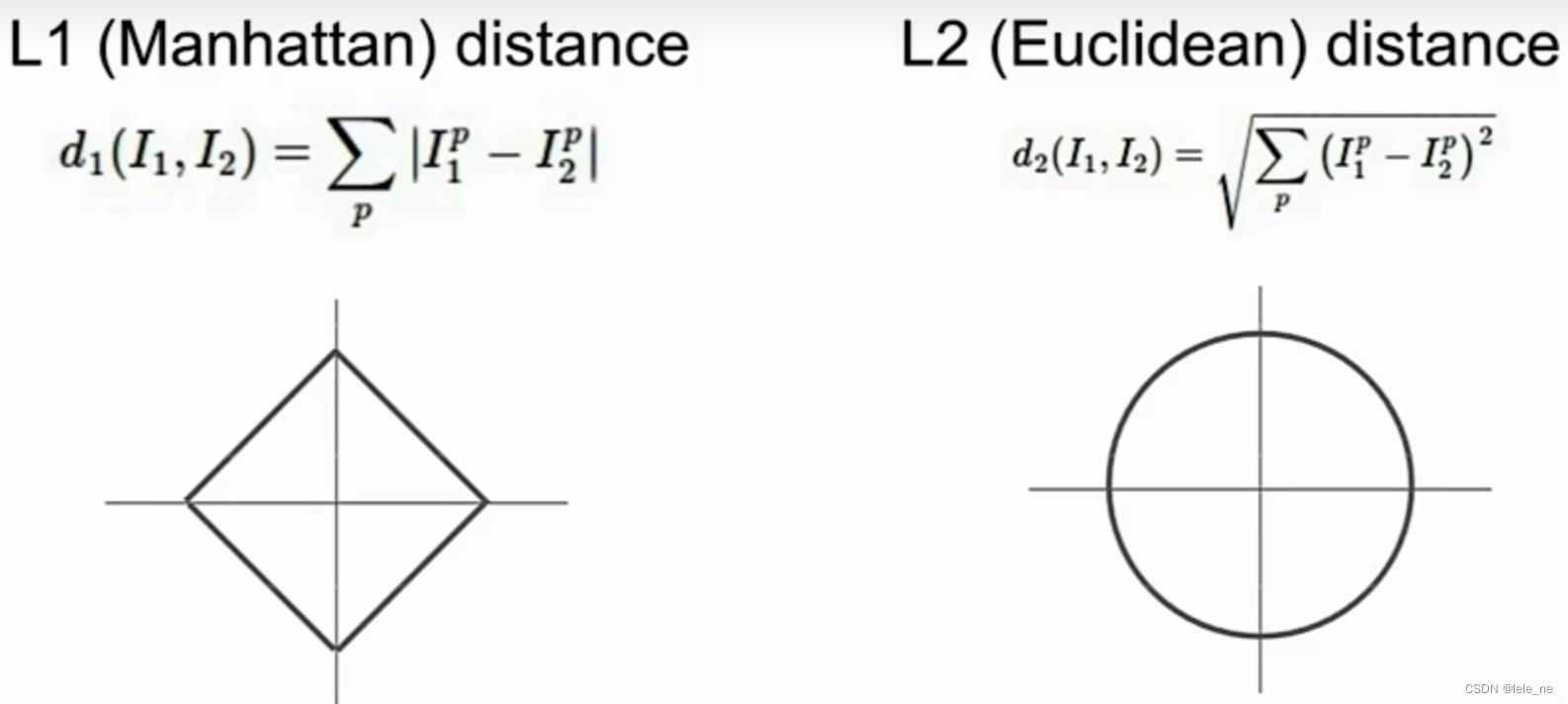

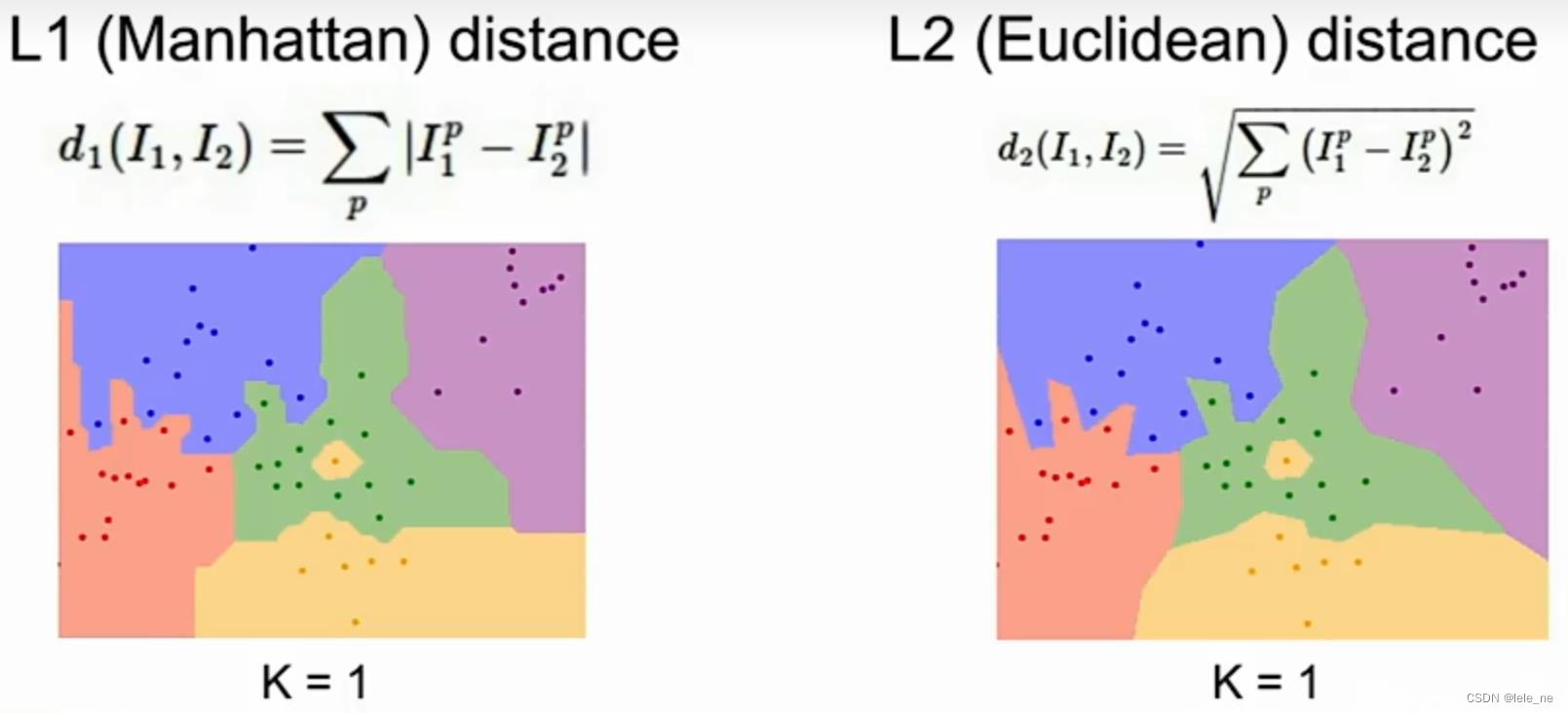
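A common way to extend the nearest-neighbor rule to K neighbors is a majority vote among the K closest training points. The sketch below assumes that reading; the function name `knn_predict`, the tie-breaking rule, and the toy data are assumptions for illustration rather than the lecture's own code.

```python
import numpy as np


def knn_predict(Xtr, ytr, X, k=1):
    """Predict a label for each row of X by majority vote among its k nearest training rows (L1 distance)."""
    preds = np.zeros(X.shape[0], dtype=ytr.dtype)
    for i in range(X.shape[0]):
        distances = np.sum(np.abs(Xtr - X[i, :]), axis=1)
        # Indices of the k smallest distances.
        nearest = np.argsort(distances)[:k]
        # Majority vote; np.unique returns sorted labels, so ties go to the smallest label.
        labels, counts = np.unique(ytr[nearest], return_counts=True)
        preds[i] = labels[np.argmax(counts)]
    return preds


# Hypothetical toy data: class 1 has one outlier sitting inside class 0's region.
Xtr = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0],
                [0.4, 0.4], [9.0, 9.0], [10.0, 10.0]])
ytr = np.array([0, 0, 0, 1, 1, 1])
query = np.array([[0.5, 0.5]])

print(knn_predict(Xtr, ytr, query, k=1))  # the single nearest point is the outlier
print(knn_predict(Xtr, ytr, query, k=3))  # voting over 3 neighbors outvotes the outlier
```

With `k=1` the query takes the outlier's label; with `k=3` the two class-0 neighbors outvote it, which is the smoothing effect that larger K is meant to provide.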

K-Nearest Neighbors: Distance Metric

Hyperparameters

Setting Hyperparameters

Summary

- Original article: https://blog.csdn.net/lele_ne/article/details/126277834