Review: ceph003

A storage pool is a logical concept; a single pool can consume the entire capacity of the cluster.

[root@ceph01 ~]# ceph osd pool create pool1

pool 'pool1' created

[root@ceph01 ~]# ceph osd pool application enable pool1 rgw

enabled application 'rgw' on pool 'pool1'

[root@ceph01 ~]# ceph osd pool ls detail

pool 1 'device_health_metrics' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 1 pgp_num 1 autoscale_mode on last_change 300 flags hashpspool stripe_width 0 pg_num_min 1 application mgr_devicehealth

pool 4 'pool1' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 309 flags hashpspool stripe_width 0 application rgw

[root@ceph01 ~]# ceph pg dump pgs_brief | grep ^4

dumped pgs_brief

4.1f active+clean [6,5,1] 6 [6,5,1] 6

4.1e active+clean [0,4,7] 0 [0,4,7] 0

4.1d active+clean [7,3,1] 7 [7,3,1] 7

4.1c active+clean [6,1,4] 6 [6,1,4] 6

4.1b active+clean [4,1,8] 4 [4,1,8] 4

4.1a active+clean [4,0,7] 4 [4,0,7] 4

4.19 active+clean [6,0,5] 6 [6,0,5] 6

4.18 active+clean [3,1,6] 3 [3,1,6] 3

4.17 active+clean [0,7,3] 0 [0,7,3] 0

4.16 active+clean [0,4,8] 0 [0,4,8] 0

4.15 active+clean [8,0,5] 8 [8,0,5] 8

4.14 active+clean [1,5,7] 1 [1,5,7] 1

4.13 active+clean [4,6,1] 4 [4,6,1] 4

4.12 active+clean [1,8,4] 1 [1,8,4] 1

4.11 active+clean [4,0,7] 4 [4,0,7] 4

4.10 active+clean [1,6,4] 1 [1,6,4] 1

4.f active+clean [7,1,5] 7 [7,1,5] 7

4.4 active+clean [1,7,3] 1 [1,7,3] 1

4.3 active+clean [6,5,1] 6 [6,5,1] 6

4.2 active+clean [5,6,2] 5 [5,6,2] 5

4.1 active+clean [6,5,0] 6 [6,5,0] 6

4.5 active+clean [3,6,0] 3 [3,6,0] 3

4.0 active+clean [0,4,6] 0 [0,4,6] 0

4.6 active+clean [1,4,6] 1 [1,4,6] 1

4.7 active+clean [1,6,3] 1 [1,6,3] 1

4.8 active+clean [8,3,0] 8 [8,3,0] 8

4.9 active+clean [6,1,5] 6 [6,1,5] 6

4.a active+clean [5,1,7] 5 [5,1,7] 5

4.b active+clean [8,1,4] 8 [8,1,4] 8

4.c active+clean [4,1,8] 4 [4,1,8] 4

4.d active+clean [7,1,4] 7 [7,1,4] 7

4.e active+clean [4,6,1] 4 [4,6,1] 4

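Writes go to the primary OSD first and then to the replicas. A three-replica pool heals itself: if the primary OSD fails, one of the replicas temporarily takes over as primary, and another OSD is then chosen to hold the third copy.

Older releases do not scale the PG count automatically.

With the PG autoscaler, a new pool starts at 32 PGs and grows from there.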

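PG calculator

100 OSDs; each OSD can only carry a limited number of PGs (100-200 PGs per OSD)

100 OSDs * 200 = 20000 PGs. This budget counts every PG replica, so in a size 3 pool the pg_num to set is the pool's share divided by 3, rounded to a power of two.

Plan for two pools:

pool1 10000 PGs of the budget

pool2 10000 PGs of the budget

The per-OSD PG limit can be changed, but it is best left alone.

Spreading the 20000 PGs evenly across the pools gives better performance.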
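The sizing arithmetic above can be sketched as a small shell function. This is a rough sketch, not the official PG calculator: it assumes the common rule of thumb pg_num = OSDs * target PGs per OSD * pool share / replica size, rounded to the nearest power of two. The function name `suggest_pg_num` and its argument order are made up for illustration.

```shell
# suggest_pg_num OSDS TARGET_PER_OSD REPLICA_SIZE SHARE_PERCENT
# Assumption: rule-of-thumb sizing, rounded to the nearest power of two.
suggest_pg_num() (
  osds=$1; target=$2; size=$3; share=$4
  raw=$(( osds * target * share / 100 / size ))
  pow=1
  # Find the largest power of two that does not exceed raw.
  while [ $(( pow * 2 )) -le "$raw" ]; do pow=$(( pow * 2 )); done
  # Move up one step if the next power of two is closer.
  if [ $(( raw - pow )) -gt $(( pow * 2 - raw )) ]; then pow=$(( pow * 2 )); fi
  echo "$pow"
)

# 100 OSDs, 200 PGs per OSD, size 3, two pools with 50% of the data each
suggest_pg_num 100 200 3 50
```

For the example in these notes this suggests 4096 PGs per pool, well below the raw 10000 figure, because each PG in a size 3 pool lands on three OSDs.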

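rados is the low-level command for working with the cluster's object store directly; it is mainly used for troubleshooting and debugging.

mon_allow_pool_delete = true: setting this parameter globally (it can also be changed in the dashboard) allows pools to be deleted.

ceph osd pool set pool1 nodelete false controls, per pool, whether that pool may be deleted (true protects the pool, false allows deletion).

Pool namespaces

Namespaces partition the data inside a pool; a user can be granted access to a specific namespace in a specific pool.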

[root@ceph01 ~]# cp /etc/passwd .

[root@ceph01 ~]#

[root@ceph01 ~]# rados -p pool1 put password passwd

[root@ceph01 ~]# rados -p pool1 ls

password

[root@ceph01 ~]# rados -p pool1 -N sys put password01 passwd

[root@ceph01 ~]# rados -p pool1 ls

password

[root@ceph01 ~]# rados -p pool1 -N sys ls

password01

[root@ceph01 ~]# rados -p pool1 --all ls

password

sys password01

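A user who is allowed to access a pool can access every namespace in it.

If the user is restricted to the sys namespace, the other namespaces are inaccessible.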
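As a sketch of how such a restriction is expressed, the helper below only prints the `ceph auth get-or-create` command instead of running it, so it can be tried without a cluster. The user name `client.user2` and the helper name `ns_cap_cmd` are hypothetical; the `pool=` and `namespace=` qualifiers in the OSD capability are the part that does the restricting.

```shell
# ns_cap_cmd USER POOL NAMESPACE
# Prints (does not run) a command that limits USER to one namespace of POOL.
ns_cap_cmd() (
  user=$1; pool=$2; ns=$3
  printf "ceph auth get-or-create %s mon 'allow r' osd 'allow rw pool=%s namespace=%s'\n" \
    "$user" "$pool" "$ns"
)

# client.user2 is a made-up user for illustration
ns_cap_cmd client.user2 pool1 sys
```

A user created this way should be able to run `rados -p pool1 -N sys ls` but not list the default namespace of pool1.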

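Erasure-coded pools

10M of data in a replicated pool (size 3) occupies 30M

10M of data in an erasure-coded pool occupies less than 30M, so erasure coding saves space

n = k + m

Example: a 4M object

k is the number of data chunks. With k = 2 each data chunk is 2M; with k = 3 each is about 1.33M (1.33 * 3 = 4M)

m is the number of coding chunks. With k = 2, m = 2 each coding chunk is 2M; with k = 3, m = 2 the coding chunks take 1.33 * 2, for a total of 1.33 * 5 = 6.67M

The algorithm derives the two parity chunks from the two data chunks (comparable to RAID 6)

With k = 2 and m = 2, 10M of data occupies 20M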
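The space arithmetic above can be checked with a short sketch. It assumes the idealised formula raw = size * (k + m) / k and ignores stripe padding and metadata, so real usage will be somewhat higher. The function names are made up for illustration; `awk` is used only for the floating-point division.

```shell
# ec_raw_usage SIZE K M      -> raw space used by an erasure-coded object
# replica_raw_usage SIZE N   -> raw space used by an N-way replicated object
# Results are in the same unit as SIZE.
ec_raw_usage() (
  awk -v s="$1" -v k="$2" -v m="$3" 'BEGIN { printf "%.2f\n", s * (k + m) / k }'
)
replica_raw_usage() (
  awk -v s="$1" -v n="$2" 'BEGIN { printf "%.2f\n", s * n }'
)

replica_raw_usage 10 3   # 10M, three replicas
ec_raw_usage 10 2 2      # 10M, k=2 m=2
ec_raw_usage 4 3 2       # 4M,  k=3 m=2
```

The three calls print 30.00, 20.00 and 6.67, matching the figures in the notes.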

pool 5 'pool2' erasure profile default size 4 min_size 3 crush_rule 1 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 313 flags hashpspool stripe_width 8192

[root@ceph01 ~]# ceph osd erasure-code-profile get default

k=2

m=2

plugin=jerasure

technique=reed_sol_van

[root@ceph01 ~]#

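With k = 2, m = 2 there are four chunks, and the pool only keeps serving I/O with at most one of them missing, because min_size is 3.

Data itself survives the loss of any two chunks, data or parity, since any k of the k + m chunks are enough to rebuild the object; planning for a single failure is simply the safer margin.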
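The relationship between k, m, size and min_size can be summarised in a small sketch. It assumes min_size defaults to k + 1, which is what the `size 4 min_size 3` line for pool2 shows; the function name `ec_summary` and its output format are made up for illustration.

```shell
# ec_summary K M
# Assumption: min_size = k + 1 (the default seen on pool2 above).
ec_summary() (
  k=$1; m=$2
  echo "size=$(( k + m )) min_size=$(( k + 1 )) max_lost_without_data_loss=$m max_lost_while_active=$(( m - 1 ))"
)

ec_summary 2 2
ec_summary 3 2
```

Both profiles tolerate two lost chunks without data loss but only one while the PG stays active, which is the "plan for one failure" advice in numeric form.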

[root@ceph01 ~]# ceph osd pool application enable pool2 rgw

enabled application 'rgw' on pool 'pool2'

[root@ceph01 ~]# rados -p pool2 put passwd02 passwd

[root@ceph01 ~]# ceph pg dump pgs_brief | grep ^5

dumped pgs_brief

5.f active+undersized [5,2147483647,7,0] 5 [5,2147483647,7,0] 5

5.c active+undersized [1,3,6,2147483647] 1 [1,3,6,2147483647] 1

5.d active+undersized [7,1,3,2147483647] 7 [7,1,3,2147483647] 7

5.a active+undersized [2,6,2147483647,5] 2 [2,6,2147483647,5] 2

5.b active+undersized [7,2,4,2147483647] 7 [7,2,4,2147483647] 7

5.8 active+undersized [6,1,2147483647,4] 6 [6,1,2147483647,4] 6

5.9 active+undersized [7,5,2147483647,2] 7 [7,5,2147483647,2] 7

5.6 active+undersized [2,4,8,2147483647] 2 [2,4,8,2147483647] 2

5.7 active+undersized [5,0,7,2147483647] 5 [5,0,7,2147483647] 5

5.1 active+undersized [3,0,2147483647,7] 3 [3,0,2147483647,7] 3

5.4 active+undersized [7,2,4,2147483647] 7 [7,2,4,2147483647] 7

5.0 active+undersized [6,2,3,2147483647] 6 [6,2,3,2147483647] 6

5.3 active+undersized [0,2147483647,4,6] 0 [0,2147483647,4,6] 0

5.2 active+undersized [5,2,7,2147483647] 5 [5,2,7,2147483647] 5

5.5 active+undersized [0,7,4,2147483647] 0 [0,7,4,2147483647] 0

5.e active+undersized [6,4,1,2147483647] 6 [6,4,1,2147483647] 6

5.11 active+undersized [6,3,2147483647,1] 6 [6,3,2147483647,1] 6

5.10 active+undersized [4,6,2,2147483647] 4 [4,6,2,2147483647] 4

5.13 active+undersized [6,1,2147483647,4] 6 [6,1,2147483647,4] 6

5.12 active+undersized [6,3,2,2147483647] 6 [6,3,2,2147483647] 6

5.15 active+undersized [5,0,8,2147483647] 5 [5,0,8,2147483647] 5

5.14 active+undersized [0,7,4,2147483647] 0 [0,7,4,2147483647] 0

5.17 active+undersized [2,8,2147483647,4] 2 [2,8,2147483647,4] 2

5.16 active+undersized [3,1,2147483647,8] 3 [3,1,2147483647,8] 3

5.19 active+undersized [1,5,2147483647,7] 1 [1,5,2147483647,7] 1

5.18 active+undersized [4,7,1,2147483647] 4 [4,7,1,2147483647] 4

5.1b active+undersized [5,1,2147483647,7] 5 [5,1,2147483647,7] 5

5.1a active+undersized [8,4,0,2147483647] 8 [8,4,0,2147483647] 8

5.1d active+undersized [1,6,5,2147483647] 1 [1,6,5,2147483647] 1

5.1c active+undersized [4,6,0,2147483647] 4 [4,6,0,2147483647] 4

5.1f active+undersized [3,2147483647,0,8] 3 [3,2147483647,0,8] 3

5.1e active+undersized [0,7,3,2147483647] 0 [0,7,3,2147483647] 0

[root@ceph01 ~]# ceph osd map pool2 passwd02

osdmap e314 pool 'pool2' (5) object 'passwd02' -> pg 5.e793df76 (5.16) -> up ([3,1,NONE,8], p3) acting ([3,1,NONE,8], p3)
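The number 2147483647 in the PG listing is 0x7fffffff, the placeholder printed when no OSD could be chosen for a slot; `ceph osd map` shows the same slot as NONE. A minimal filter, with the made-up name `mark_none`, makes the listing easier to read:

```shell
# Replace the "no OSD" placeholder (2147483647 = 0x7fffffff) with NONE.
mark_none() ( sed 's/2147483647/NONE/g' )

# Sample line copied from the pgs_brief output above
echo '5.16 active+undersized [3,1,2147483647,8] 3 [3,1,2147483647,8] 3' | mark_none

# On a cluster node the same filter can be appended to the real command:
#   ceph pg dump pgs_brief | grep ^5 | mark_none
```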

[root@ceph01 ~]#

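Change the failure domain from host to osd. The default profile puts each chunk on a different host, and this cluster appears to have only three hosts for k + m = 4 chunks, which is why every pool2 PG above has one empty slot (2147483647, i.e. NONE) and is undersized.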

[root@ceph01 ~]# ceph osd erasure-code-profile set ec01 crush-failure-domain=osd k=3 m=2

[root@ceph01 ~]# ceph osd erasure-code-profile get ec01

crush-device-class=

crush-failure-domain=osd

crush-root=default

jerasure-per-chunk-alignment=false

k=3

m=2

plugin=jerasure

technique=reed_sol_van

w=8

[root@ceph01 ~]# ceph osd pool create pool3 erasure ec01

pool 'pool3' created

[root@ceph01 ~]# ceph pg dump pgs_brief | grep ^6

dumped pgs_brief

6.1d active+clean [1,8,6,2,5] 1 [1,8,6,2,5] 1

6.1c active+clean [7,1,3,4,2] 7 [7,1,3,4,2] 7

6.1f active+clean [2,6,8,7,3] 2 [2,6,8,7,3] 2

6.1e active+clean [8,6,5,2,4] 8 [8,6,5,2,4] 8

6.19 active+clean [5,1,8,6,0] 5 [5,1,8,6,0] 5

6.18 active+clean [2,1,0,6,3] 2 [2,1,0,6,3] 2

6.1b active+clean [6,8,7,5,3] 6 [6,8,7,5,3] 6

6.1a active+clean [4,3,1,8,6] 4 [4,3,1,8,6] 4

6.15 active+clean [3,0,8,2,7] 3 [3,0,8,2,7] 3

6.14 active+clean [2,4,3,5,8] 2 [2,4,3,5,8] 2

6.17 active+clean [6,2,8,7,0] 6 [6,2,8,7,0] 6

6.16 active+clean [0,4,2,3,5] 0 [0,4,2,3,5] 0

6.11 active+clean [2,0,8,6,7] 2 [2,0,8,6,7] 2

6.10 active+clean [0,8,1,5,7] 0 [0,8,1,5,7] 0

6.13 active+clean [2,1,8,3,4] 2 [2,1,8,3,4] 2

6.12 active+clean [8,7,6,2,3] 8 [8,7,6,2,3] 8

6.d active+clean [5,1,8,0,7] 5 [5,1,8,0,7] 5

6.6 active+clean [1,4,3,8,0] 1 [1,4,3,8,0] 1

6.1 active+clean [6,4,1,3,5] 6 [6,4,1,3,5] 6

6.0 active+clean [0,7,6,5,3] 0 [0,7,6,5,3] 0

6.3 active+clean [4,5,7,3,0] 4 [4,5,7,3,0] 4

6.7 active+clean [5,2,0,4,1] 5 [5,2,0,4,1] 5

6.2 active+clean [3,0,2,8,5] 3 [3,0,2,8,5] 3

6.4 active+clean [1,5,6,2,3] 1 [1,5,6,2,3] 1

6.5 active+clean [5,4,1,2,3] 5 [5,4,1,2,3] 5

6.a active+clean [5,4,0,7,2] 5 [5,4,0,7,2] 5

6.b active+clean [1,3,4,6,7] 1 [1,3,4,6,7] 1

6.8 active+clean [3,6,2,8,4] 3 [3,6,2,8,4] 3

6.9 active+clean [0,5,7,2,6] 0 [0,5,7,2,6] 0

6.e active+clean [4,8,1,2,5] 4 [4,8,1,2,5] 4

6.f active+clean [2,6,7,3,4] 2 [2,6,7,3,4] 2

6.c active+clean [1,4,5,8,3] 1 [1,4,5,8,3] 1

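A 4M object is split into 3 data chunks,

and whenever the data changes the coding chunks must be recomputed.

With k=3, m=2 at most two chunks can fail. 3+2 is the better layout, but to be safe plan for at most one failure.

Once created, the ec01 profile cannot be modified while a pool is using it.

Performance is worse than a replicated pool: reliability comes from coding chunks, and computing them costs CPU time.

A file is split into objects; in an erasure-coded pool each object is further split into k data chunks (object size / k each), and the coding chunks are then computed to provide reliability.

Some related parameters

k, m, and the rule's failure domain (crush-failure-domain)

crush-device-class: use only OSDs backed by a given class of device for the pool (ssd, hdd, nvme)

crush-root: sets the root node of the CRUSH rule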

Ceph configuration

/etc/ceph

ceph.conf: the entry point for the cluster configuration

[root@ceph01 ceph]# cat ceph.conf

# minimal ceph.conf for cb8f4abe-14a7-11ed-a76d-000c2939fb75

[global]

fsid = cb8f4abe-14a7-11ed-a76d-000c2939fb75

mon_host = [v2:192.168.92.11:3300/0,v1:192.168.92.11:6789/0]

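The cluster actually has three mons; mon_host can be updated by hand to list all of them.

Updating the Ceph configuration

It is better to keep the cluster network (heartbeats, rebalancing and so on) separate from the network that clients use.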

[root@ceph01 ceph]# ceph config get mon public_network

192.168.92.0/24

[root@ceph01 ceph]# ceph config get mon cluster_network

xxxxxx

(I have not set a cluster network.)

It can be changed with ceph config set.

[root@ceph01 ceph]# ceph -s --cluster ceph

cluster:

id: cb8f4abe-14a7-11ed-a76d-000c2939fb75

health: HEALTH_WARN

1 osds exist in the crush map but not in the osdmap

Degraded data redundancy: 1/10 objects degraded (10.000%), 1 pg degraded, 32 pgs undersized

services:

mon: 3 daemons, quorum ceph01.example.com,ceph02,ceph03 (age 22h)

mgr: ceph01.example.com.wvuoii(active, since 22h), standbys: ceph02.alqzfq

osd: 9 osds: 9 up (since 22h), 9 in (since 2d)

data:

pools: 4 pools, 97 pgs

objects: 3 objects, 2.9 KiB

usage: 9.4 GiB used, 171 GiB / 180 GiB avail

pgs: 1/10 objects degraded (10.000%)

65 active+clean

31 active+undersized

1 active+undersized+degraded

[root@ceph01 ceph]# ls

COPYING sample.ceph.conf

[root@ceph01 ceph]# pwd

/usr/share/doc/ceph

[root@ceph01 ceph]# cd /etc/ceph/

[root@ceph01 ceph]# ls

ceph.client.admin.keyring ceph.conf ceph.pub rbdmap

[root@ceph01 ceph]#

In ceph.conf, the part before .conf is the cluster name.

This is how clusters are told apart by name.
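The naming convention can be written down as two tiny helpers. This sketch assumes the default layout under /etc/ceph seen in the listing above (`ceph.conf`, `ceph.client.admin.keyring`); the function names and the cluster name `backup` are made up for illustration.

```shell
# conf_path [CLUSTER]            -> configuration file for that cluster name
# keyring_path [CLUSTER] [NAME]  -> default keyring file for that client
conf_path() ( echo "/etc/ceph/${1:-ceph}.conf" )
keyring_path() ( echo "/etc/ceph/${1:-ceph}.${2:-client.admin}.keyring" )

conf_path                        # default cluster name "ceph"
keyring_path ceph client.user1   # keyring for client.user1
conf_path backup                 # "backup" is a hypothetical cluster name
```

With `--cluster backup`, the client would therefore look for /etc/ceph/backup.conf rather than /etc/ceph/ceph.conf.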

ceph -s

health: HEALTH_WARN

1 osds exist in the crush map but not in the osdmap

Degraded data redundancy: 1/10 objects degraded (10.000%), 1 pg degraded, 32 pgs undersized

The degraded/undersized warning is caused by the erasure-coded pool (pool2).

[root@ceph01 ceph]# ceph osd pool delete pool2 pool2 --yes-i-really-really-mean-it

Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool

[root@ceph01 ceph]# ceph config set mon mon_allow_pool_delete true

[root@ceph01 ceph]# ceph osd pool delete pool2 pool2 --yes-i-really-really-mean-it

pool 'pool2' removed

[root@ceph01 ceph]#

[root@ceph01 ceph]# ceph -s

cluster:

id: cb8f4abe-14a7-11ed-a76d-000c2939fb75

health: HEALTH_WARN

1 osds exist in the crush map but not in the osdmap

services:

mon: 3 daemons, quorum ceph01.example.com,ceph02,ceph03 (age 23h)

mgr: ceph01.example.com.wvuoii(active, since 23h), standbys: ceph02.alqzfq

osd: 9 osds: 9 up (since 23h), 9 in (since 2d)

data:

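HEALTH_WARN means the cluster is still serving I/O but needs attention; it is usually not a serious problem.

With pool2 deleted, there is one warning fewer.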

ceph -s --name client.admin

ceph -s --id admin

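--name and --id are the metadata that identify the client.

On the client side, options like these (together with --cluster) select which user and which cluster to talk to.

Configuration parameters

Settings used to be changed by editing the configuration file; now they are changed from the command line.

They can also be viewed in the dashboard.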

[root@ceph01 ceph]# ceph config ls | grep mon | grep delete

mon_allow_pool_delete

mon_fake_pool_delete

[root@ceph01 ceph]# ceph config get mon mon_allow_pool_delete

true

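Pool operations are handled by the mons;

object reads and writes are handled by the OSDs.

Settings are written to the configuration database and take effect immediately.

ceph orch restart mon restarts every mon in the cluster

ceph orch daemon restart osd.1 restarts one specific daemon

ceph config dump

shows the values currently stored in the configuration database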

[root@ceph01 ~]# ceph config ls | grep mon | grep delete

mon_allow_pool_delete

mon_fake_pool_delete

Filter for the relevant settings.

[root@ceph01 ~]# ceph config set mon mon_allow_pool_delete false

[root@ceph01 ~]# ceph config get mon mon_allow_pool_delete

false

Change the value and read it back.

Alternatively,

write a configuration file:

[root@ceph01 ~]# cat ceph.conf

[global]

mon_allow_pool_delete = false

Apply the values from this file with:

ceph config assimilate-conf -i ceph.conf

The file doubles as a record of exactly what you changed.
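The file-based workflow can be sketched end to end. The helper name `write_override_conf` and the temporary path are assumptions for illustration; the file contents are the ones shown in the notes, and the `ceph` commands are left as comments because they need a node with admin access.

```shell
# Write the override file used in the notes to the given path.
write_override_conf() {
  cat > "$1" <<'EOF'
[global]
mon_allow_pool_delete = false
EOF
}

conf="${TMPDIR:-/tmp}/ceph-override.conf"   # hypothetical location
write_override_conf "$conf"
cat "$conf"

# Then, on a node with admin access:
#   ceph config assimilate-conf -i "$conf"
#   ceph config get mon mon_allow_pool_delete
```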

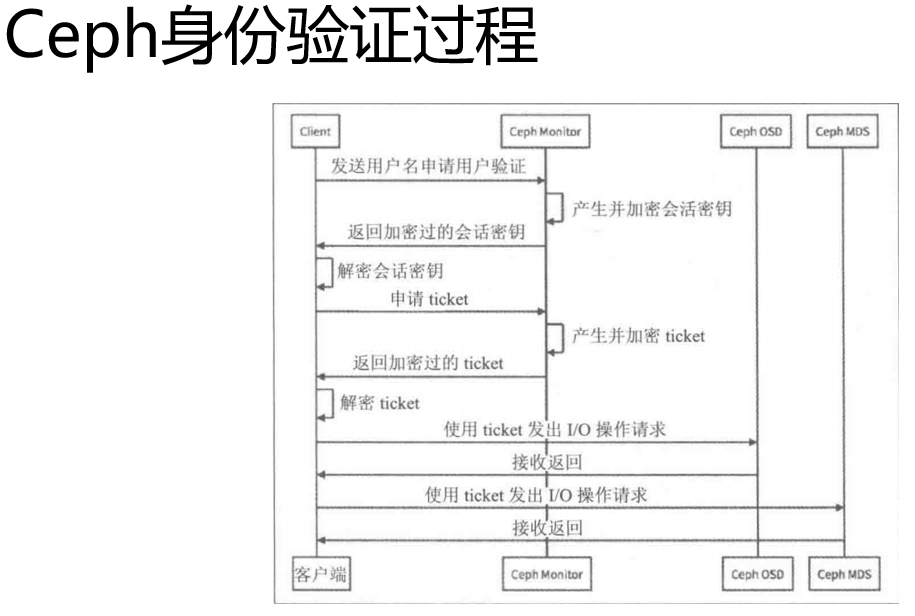

Ceph authentication and authorization

ceph auth ls lists all users

[root@serverc ~]# ceph config ls | grep auth | grep required

auth_cluster_required

auth_service_required

auth_client_required

[root@serverc ~]# ceph config get mon auth_cluster_required

cephx

Creating a key

A client authenticates with its key and can then access the cluster.

Access

Creating a user

[root@ceph01 ~]# ceph auth get-or-create client.user1 --id admin

[client.user1]

key = AQB+PPdiAgZZBxAAWKZ3s0Cu3y7o7yEVZqgKKQ==

The user was created while acting as admin (--id admin).

[root@ceph01 ~]# ceph auth get-or-create client.admin > /etc/ceph/ceph.client.user1.keyring

[root@ceph01 ~]# ceph -s --name client.user1

2022-08-13T13:56:00.302+0800 7f6eaaffd700 -1 monclient(hunting): handle_auth_bad_method server allowed_methods [2] but i only support [2,1]

[errno 13] RADOS permission denied (error connecting to the cluster)

[root@ceph01 ~]#

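Permission denied: the new user has no capabilities yet. Note also that the redirect above exported client.admin, not client.user1, into the user1 keyring file; it was probably meant to be ceph auth get-or-create client.user1.

Capabilities:

r: on mon, read cluster information

on osd, read object information

w: on mon, modify cluster information and mon parameters, create and modify pools

on osd, upload and delete objects

Granting capabilities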

[root@serverc ~]# ceph auth caps client.user1 mon 'allow r'

updated caps for client.user1

[root@serverc ~]# ceph osd pool create pool1 --id user1

Error EACCES: access denied

[root@serverc ~]# ceph auth caps client.user1 mon 'allow rw'

updated caps for client.user1

[root@serverc ~]# ceph osd pool create pool1 --id user1

pool 'pool1' created

[root@serverc ~]#

[root@serverc ceph]# ceph auth caps client.user1 mon 'allow rw' osd 'allow r'

updated caps for client.user1

[root@serverc ceph]# rados -p pool1 ls --id user1

[root@serverc ceph]#

[root@serverc ceph]# ceph auth caps client.user1 mon 'allow rw' osd 'allow rw'

updated caps for client.user1

[root@serverc ceph]# rados -p pool1 ls --id user1

[root@serverc ceph]# cp /etc/passwd .

[root@serverc ceph]# rados -p pool1 put file2 passwd --id user1

[root@serverc ceph]# rados -p pool1 ls --id user1

file2

Viewing capabilities

[root@ceph01 ceph]# ceph auth get client.user1

exported keyring for client.user1

[client.user1]

key = AQB+PPdiAgZZBxAAWKZ3s0Cu3y7o7yEVZqgKKQ==

caps mon = "allow r"

[root@ceph01 ceph]#
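When checking many users, it can help to reduce the `ceph auth get` output to just the capability lines. The filter below is a minimal sketch with a made-up name, `list_caps`; it assumes the `caps <daemon> = "<rule>"` layout shown above, and the sample input uses a placeholder instead of a real key.

```shell
# Print each capability as "<daemon>: <rule>", ignoring all other lines.
list_caps() (
  sed -n 's/^[[:space:]]*caps \([a-z]*\) = "\(.*\)"[[:space:]]*$/\1: \2/p'
)

# Sample input modelled on the output above (key replaced by a placeholder)
printf '%s\n' \
  '[client.user1]' \
  '        key = <redacted>' \
  '        caps mon = "allow r"' | list_caps

# On a cluster node:
#   ceph auth get client.user1 | list_caps
```

Because `ceph auth caps` replaces the whole capability set rather than adding to it, checking the result this way after each change is a cheap safeguard.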