-

项目:CV和NLP结合的Attention视频字幕生成算法实现

参考:

课程:学堂在线的清华训练营《驭风计划:培养人工智能青年人才》(满分作业)

paper:《Show, Attend and Tell Neural Image Caption Generation with Visual Attention》

需要的理论知识:LSTM BLEU Resnet-101 COCO数据集 Attention beam算法

理论知识也可以参考博客:

Monte Carlo《Show, Attend and Tell: Neural Image Caption Generation with Visual Attention》论文阅读(详细

《Show and Tell: A Neural Image Caption Generator》论文解读

项目配置、训练过程(环境:百度飞浆平台 V100 cuda9.2):

conda create --prefix=/home/aistudio/external-libraries/caption python=3.6 -y conda init bash conda activate /home/aistudio/external-libraries/caption conda install pytorch=0.4.1 cuda92 -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/linux-64/ -y pip install torchvision==0.2.2 numpy nltk tqdm h5py pillow matplotlib scikit-image scipy==1.1.0 -i https://pypi.tuna.tsinghua.edu.cn/simple python train.py > train_att.log python caption.py --img '/home/aistudio/data/data124850/test2014/COCO_test2014_000000090228.jpg' --model '../code/BEST_checkpoint_coco_5_cap_per_img_5_min_word_freq.pth.tar' --word_map '/home/aistudio/data/data124851/WORDMAP_coco_5_cap_per_img_5_min_word_freq.json'- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

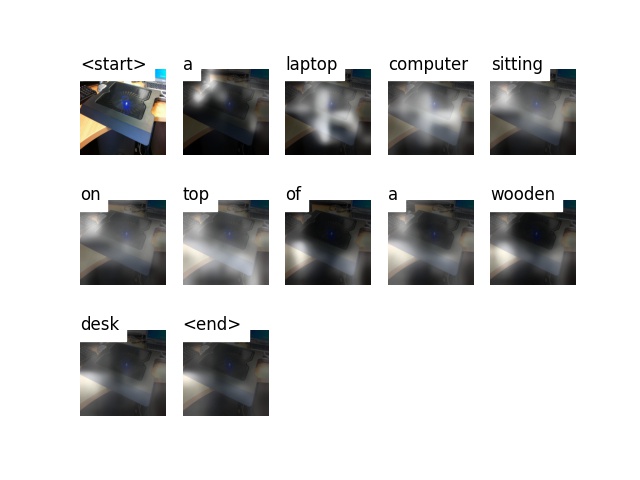

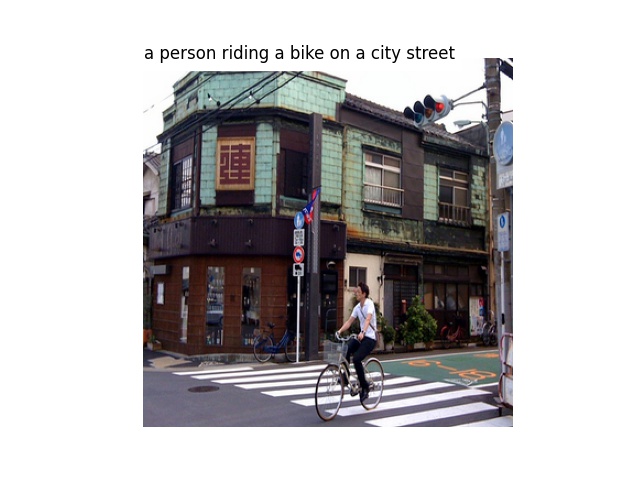

实验结果示例:

图中的白色亮光是指注意力,很明显,可以看到对于清晰和明显的图片这个模型还是有较好的效果,注意力也能关注到图片中的重要部分,而且注意力是随着词的变化而改变位置的,例如第2张图,当词是a laptop的时候,注意力正好关注电脑;当词是wooden desk的时候,注意力正好关注着桌子。

核心代码:

import torch from torch import nn import torchvision device = torch.device("cuda" if torch.cuda.is_available() else "cpu") class Encoder(nn.Module): """ Encoder """ def __init__(self, encoded_image_size=14): super(Encoder, self).__init__() self.enc_image_size = encoded_image_size # Pretrained ImageNet ResNet-101 # Remove linear and pool layers resnet = torchvision.models.resnet101(pretrained=True) modules = list(resnet.children())[:-2] self.resnet = nn.Sequential(*modules) # Resize image to fixed size to allow input images of variable size self.adaptive_pool = nn.AdaptiveAvgPool2d((encoded_image_size, encoded_image_size)) self.fine_tune(fine_tune=True) def forward(self, images): """ Forward propagation. :param images: images, a tensor of dimensions (batch_size, 3, image_size, image_size) :return: encoded images """ out = self.resnet(images) # (batch_size, 2048, image_size/32, image_size/32) out = self.adaptive_pool(out) # (batch_size, 2048, encoded_image_size, encoded_image_size) out = out.permute(0, 2, 3, 1) # (batch_size, encoded_image_size, encoded_image_size, 2048) return out def fine_tune(self, fine_tune=True): """ Allow or prevent the computation of gradients for convolutional blocks 2 through 4 of the encoder. :param fine_tune: boolean """ for p in self.resnet.parameters(): p.requires_grad = False # If fine-tuning, only fine-tune convolutional blocks 2 through 4 for c in list(self.resnet.children())[5:]: for p in c.parameters(): p.requires_grad = fine_tune class Attention(nn.Module): """ Attention Network. """ def __init__(self, encoder_dim, decoder_dim, attention_dim): """ :param encoder_dim: feature size of encoded images :param decoder_dim: size of decoder's RNN :param attention_dim: size of the attention network """ super(Attention, self).__init__() self.encoder_att = nn.Linear(encoder_dim, attention_dim) # linear layer to transform encoded image self.decoder_att = nn.Linear(decoder_dim, attention_dim) # linear layer to transform decoder's output self.full_att = nn.Linear(attention_dim, 1) # linear layer to calculate values to be softmax-ed self.relu = nn.ReLU() self.softmax = nn.Softmax(dim=1) # softmax layer to calculate weights def forward(self, encoder_out, decoder_hidden): """ Forward pass. :param encoder_out: encoded images, a tensor of dimension (batch_size, num_pixels, encoder_dim) :param decoder_hidden: previous decoder output, a tensor of dimension (batch_size, decoder_dim) :return: attention weighted encoding, weights """ att1 = self.encoder_att(encoder_out) # (batch_size, num_pixels, attention_dim) att2 = self.decoder_att(decoder_hidden) # (batch_size, attention_dim) att = self.full_att(self.relu(att1 + att2.unsqueeze(1))).squeeze(2) # (batch_size, num_pixels) alpha = self.softmax(att) # (batch_size, num_pixels) z = (encoder_out * alpha.unsqueeze(2)).sum(dim=1) # (batch_size, encoder_dim) return z, alpha class DecoderWithAttention(nn.Module): """ Decoder. """ def __init__(self, cfg, encoder_dim=2048): """ :param attention_dim: size of attention network :param embed_dim: embedding size :param decoder_dim: size of decoder's RNN :param vocab_size: size of vocabulary :param encoder_dim: feature size of encoded images :param dropout: dropout """ super(DecoderWithAttention, self).__init__() self.encoder_dim = encoder_dim self.decoder_dim = cfg['decoder_dim'] self.attention_dim = cfg['attention_dim'] self.embed_dim = cfg['embed_dim'] self.vocab_size = cfg['vocab_size'] self.dropout = cfg['dropout'] self.device = cfg['device'] self.attention = Attention(encoder_dim, self.decoder_dim, self.attention_dim) # attention network self.embedding = nn.Embedding(self.vocab_size, self.embed_dim) # embedding layer self.dropout = nn.Dropout(0.1) self.decode_step = nn.LSTMCell(self.embed_dim + encoder_dim, self.decoder_dim, bias=True) # decoding LSTMCell self.init_h = nn.Linear(encoder_dim, self.decoder_dim) # linear layer to find initial hidden state of LSTMCell self.init_c = nn.Linear(encoder_dim, self.decoder_dim) # linear layer to find initial cell state of LSTMCell self.f_beta = nn.Linear(self.decoder_dim, encoder_dim) # linear layer to create a sigmoid-activated gate self.sigmoid = nn.Sigmoid() self.fc = nn.Linear(self.decoder_dim, self.vocab_size) # linear layer to find scores over vocabulary self.init_weights() # initialize some layers with the uniform distribution # initialize some layers with the uniform distribution self.embedding.weight.data.uniform_(-0.1, 0.1) self.fc.bias.data.fill_(0) self.fc.weight.data.uniform_(-0.1, 0.1) def load_pretrained_embeddings(self, embeddings): """ Loads embedding layer with pre-trained embeddings. :param embeddings: pre-trained embeddings """ self.embedding.weight = nn.Parameter(embeddings) def fine_tune_embeddings(self, fine_tune=True): """ Allow fine-tuning of embedding layer? (Only makes sense to not-allow if using pre-trained embeddings). :param fine_tune: Allow? """ for p in self.embedding.parameters(): p.requires_grad = fine_tune def init_weights(self): """ Initializes some parameters with values from the uniform distribution, for easier convergence. """ self.embedding.weight.data.uniform_(-0.1, 0.1) self.fc.bias.data.fill_(0) self.fc.weight.data.uniform_(-0.1, 0.1) def forward(self, encoder_out, encoded_captions, caption_lengths): """ Forward propagation. :param encoder_out: encoded images, a tensor of dimension (batch_size, enc_image_size, enc_image_size, encoder_dim) :param encoded_captions: encoded captions, a tensor of dimension (batch_size, max_caption_length) :param caption_lengths: caption lengths, a tensor of dimension (batch_size, 1) :return: scores for vocabulary, sorted encoded captions, decode lengths, weights, sort indices """ batch_size = encoder_out.size(0) encoder_dim = encoder_out.size(-1) vocab_size = self.vocab_size # Flatten image encoder_out = encoder_out.view(batch_size, -1, encoder_dim) # (batch_size, num_pixels, encoder_dim) num_pixels = encoder_out.size(1) # Sort input data by decreasing lengths; caption_lengths, sort_ind = caption_lengths.squeeze(1).sort(dim=0, descending=True) encoder_out = encoder_out[sort_ind] encoded_captions = encoded_captions[sort_ind] # Embedding embeddings = self.embedding(encoded_captions) # (batch_size, max_caption_length, embed_dim) # We won't decode at theposition, since we've finished generating as soon as we generate # So, decoding lengths are actual lengths - 1 decode_lengths = (caption_lengths - 1).tolist() # Create tensors to hold word predicion scores and alphas predictions = torch.zeros(batch_size, max(decode_lengths), vocab_size).to(self.device) alphas = torch.zeros(batch_size, max(decode_lengths), num_pixels).to(self.device) # Initialize LSTM state mean_encoder_out = encoder_out.mean(dim=1) h = self.init_h(mean_encoder_out) # (batch_size, decoder_dim) c = self.init_c(mean_encoder_out) for t in range(max(decode_lengths)): batch_size_t = sum([l > t for l in decode_lengths]) preds, alpha, h, c = self.one_step(t, batch_size_t, embeddings, encoder_out, h, c) predictions[:batch_size_t, t, :] = preds alphas[:batch_size_t, t, :] = alpha return predictions, encoded_captions, decode_lengths, alphas, sort_ind def one_step(self, t, batch_size_t, embeddings, encoder_out, h, c): """ :param t: :param batch_size_t: :param embeddings: :param encoder_out: :param h: :param c: :return: """ attention_weighted_encoding, alpha = self.attention(encoder_out[:batch_size_t], h[:batch_size_t]) gate = self.sigmoid(self.f_beta(h[:batch_size_t])) # gating scalar, (batch_size_t, encoder_dim) attention_weighted_encoding = gate * attention_weighted_encoding h, c = self.decode_step( torch.cat([embeddings[:batch_size_t, t, :], attention_weighted_encoding], dim=1), (h[:batch_size_t], c[:batch_size_t])) # (batch_size_t, decoder_dim) preds = self.fc(self.dropout(h)) # (batch_size_t, vocab_size) return preds, alpha, h, c- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

-

相关阅读:

.NET 8 Video教程介绍(开篇)

6年技术迭代,阿里全球化出海&合规的挑战和探索

Fast unsupervised embedding learning with anchor-based

JVM栈帧的内部结构

浅谈vue的自定义指令

3D感知技术(4)双目立体视觉测距

Spring Boot源码分析一:启动流程

国金证券DevOps建设项目分享——嘉为蓝鲸

AutoCAD Electrical 2022—元件的绘制

CDS(Core Data Service)Annotation 常用属性

- 原文地址:https://blog.csdn.net/KPer_Yang/article/details/126255942