
[mmaction2 beginner tutorial] SlowFast training configuration, log analysis, and test result analysis

Resources to download (free):

22-8-6 mmaction2 SlowFast training configuration and training log analysis: https://download.csdn.net/download/WhiffeYF/86339417

Bilibili video: https://www.bilibili.com/video/BV16B4y1474U

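Before starting this post, you should already be able to train SlowFast on a dataset (or on your own custom dataset) in mmaction2. My earlier posts cover this:

Custom AVA dataset, training and testing (complete edition): building a spatio-temporal action/behavior video dataset with yolov5, deep sort, VIA MMAction, SlowFast

[mmaction2 SlowFast behavior analysis (commercial grade)] Table of contents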

0 References

MMAction2 study notes (4): useful tools and demo walkthrough: https://blog.csdn.net/Jason_____Wang/article/details/121488466

mmaction2 official docs, log analysis: https://mmaction2.readthedocs.io/zh_CN/latest/useful_tools.html#id2

mmaction2 official docs, training setting: https://mmaction2.readthedocs.io/zh_CN/latest/getting_started.html#id10

mmaction2 official docs, how to write config files: https://mmaction2.readthedocs.io/zh_CN/latest/tutorials/1_config.html#id7

1 GPU platform

I set up the environment on the AI极链 cloud platform: https://cloud.videojj.com/auth/register?inviter=18452&activityChannel=student_invite

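2 Training setting

Log analysis naturally requires a training run first. To get more detailed and complete training logs, we first need to know which training settings are available.

2.1 The official training setting documentation

Training settings in mmaction2 (most of what follows is quoted from the official documentation):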

All outputs (log files and checkpoints) will be saved to the working directory, which is specified by `work_dir` in the config file.

By default, MMAction2 evaluates the model on the validation set after each epoch. You can change the evaluation interval by modifying the `interval` argument in the training config:

    evaluation = dict(interval=5)  # Evaluate the model every 5 epochs.

This snippet appears in, for example, the following config: https://github.com/open-mmlab/mmaction2/blob/master/configs/detection/ava/slowfast_context_kinetics_pretrained_r50_4x16x1_20e_ava_rgb.py

According to the Linear Scaling Rule, you need to set the learning rate proportional to the batch size if you use a different number of GPUs or videos per GPU, e.g., lr=0.01 for 4 GPUs x 2 video/gpu and lr=0.08 for 16 GPUs x 4 video/gpu.

The basic training command is:

    python tools/train.py ${CONFIG_FILE} [optional arguments]
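
The Linear Scaling Rule quoted above is simple arithmetic, so it can be checked with a few lines of Python. This is only a sketch: the helper name `scale_lr` and its parameters are my own, not part of mmaction2, and the reference point (batch size 8 with lr=0.01) is taken from the example in the text.

```python
def scale_lr(base_lr: float, base_batch: int, num_gpus: int, videos_per_gpu: int) -> float:
    """Scale the learning rate linearly with the total batch size."""
    total_batch = num_gpus * videos_per_gpu
    return base_lr * total_batch / base_batch


# Reference point from the text: 4 GPUs x 2 videos/GPU (batch size 8) uses lr=0.01.
BASE_LR, BASE_BATCH = 0.01, 4 * 2

# 16 GPUs x 4 videos/GPU gives a total batch size of 64.
print(scale_lr(BASE_LR, BASE_BATCH, num_gpus=16, videos_per_gpu=4))
# A single GPU with 4 videos gives a total batch size of 4.
print(scale_lr(BASE_LR, BASE_BATCH, num_gpus=1, videos_per_gpu=4))
```

The first call reproduces the lr=0.08 case from the documentation; the second shows how the same rule would shrink the learning rate for a small single-GPU run.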

Next, the optional arguments:

`--validate` (strongly recommended): Perform evaluation at every k (default value is 5, which can be modified by changing the `interval` value in the `evaluation` dict in each config file) epochs during the training.

`--test-last`: Test the final checkpoint when training is over, save the prediction to `${WORK_DIR}/last_pred.pkl`.

`--test-best`: Test the best checkpoint when training is over, save the prediction to `${WORK_DIR}/best_pred.pkl`.

`--work-dir ${WORK_DIR}`: Override the working directory specified in the config file.

`--resume-from ${CHECKPOINT_FILE}`: Resume from a previous checkpoint file.

Difference between `resume-from` and `load-from`: `resume-from` loads both the model weights and optimizer status, and the epoch is also inherited from the specified checkpoint. It is usually used for resuming a training process that was interrupted accidentally. `load-from` only loads the model weights, and the training epoch starts from 0. It is usually used for finetuning.
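
To make the difference concrete, here is a toy model of what each option restores. It is not mmcv or mmaction2 code: the function `starting_state` and the checkpoint dictionary layout are hypothetical and only mirror the description above.

```python
def starting_state(checkpoint: dict, mode: str) -> dict:
    """Return what training starts from under each option (toy model only)."""
    if mode == "resume_from":
        # Weights, optimizer state and epoch counter are all restored.
        return {
            "weights": checkpoint["weights"],
            "optimizer": checkpoint["optimizer"],
            "epoch": checkpoint["epoch"],
        }
    if mode == "load_from":
        # Only the weights are reused; the optimizer is fresh and the epoch restarts at 0.
        return {"weights": checkpoint["weights"], "optimizer": None, "epoch": 0}
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical checkpoint saved after epoch 12.
ckpt = {"weights": "weights@12", "optimizer": "sgd-state@12", "epoch": 12}

print(starting_state(ckpt, "resume_from"))
print(starting_state(ckpt, "load_from"))
```

In practice this means `resume-from` is the one to use after a crash, while `load-from` fits the case where a pretrained model is the starting point of a new schedule.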

2.2 The official Config System for Spatio-Temporal Action Detection

This subsection is copied from the official site. The config file I use is almost identical to the official one, so I reuse the official explanation directly.

An Example of FastRCNN

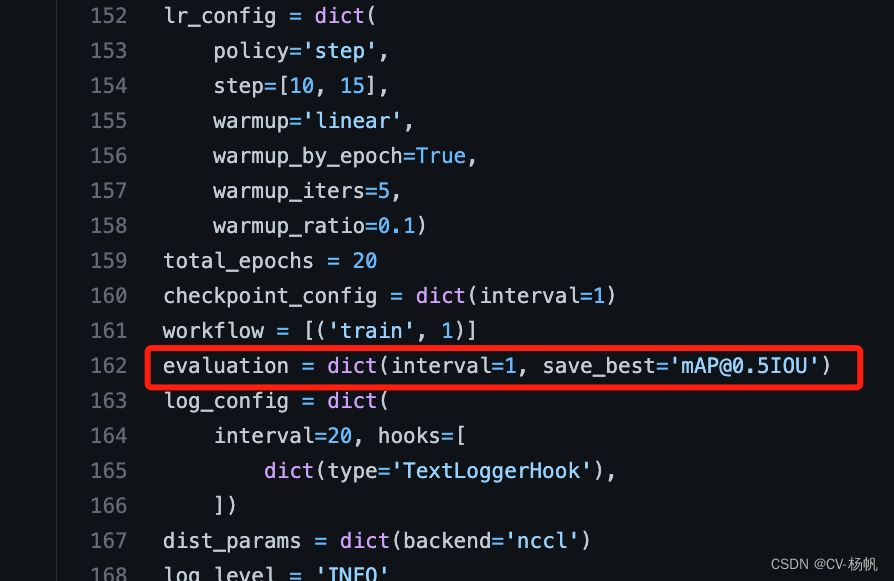

    # model setting
    model = dict(  # Config of the model
        type='FastRCNN',  # Type of the detector
        backbone=dict(  # Dict for backbone
            type='ResNet3dSlowOnly',  # Name of the backbone
            depth=50,  # Depth of ResNet model
            pretrained=None,  # The url/site of the pretrained model
            pretrained2d=False,  # If the pretrained model is 2D
            lateral=False,  # If the backbone is with lateral connections
            num_stages=4,  # Stages of ResNet model
            conv1_kernel=(1, 7, 7),  # Conv1 kernel size
            conv1_stride_t=1,  # Conv1 temporal stride
            pool1_stride_t=1,  # Pool1 temporal stride
            spatial_strides=(1, 2, 2, 1)),  # The spatial stride for each ResNet stage
        roi_head=dict(  # Dict for roi_head
            type='AVARoIHead',  # Name of the roi_head
            bbox_roi_extractor=dict(  # Dict for bbox_roi_extractor
                type='SingleRoIExtractor3D',  # Name of the bbox_roi_extractor
                roi_layer_type='RoIAlign',  # Type of the RoI op
                output_size=8,  # Output feature size of the RoI op
                with_temporal_pool=True),  # If temporal dim is pooled
            bbox_head=dict(  # Dict for bbox_head
                type='BBoxHeadAVA',  # Name of the bbox_head
                in_channels=2048,  # Number of channels of the input feature
                num_classes=81,  # Number of action classes + 1 (background)
                multilabel=True,  # If the dataset is multilabel
                dropout_ratio=0.5)),  # The dropout ratio used
        # model training and testing settings
        train_cfg=dict(  # Training config of FastRCNN
            rcnn=dict(  # Dict for rcnn training config
                assigner=dict(  # Dict for assigner
                    type='MaxIoUAssignerAVA',  # Name of the assigner
                    pos_iou_thr=0.9,  # IoU threshold for positive examples, > pos_iou_thr -> positive
                    neg_iou_thr=0.9,  # IoU threshold for negative examples, < neg_iou_thr -> negative
                    min_pos_iou=0.9),  # Minimum acceptable IoU for positive examples
                sampler=dict(  # Dict for sample
                    type='RandomSampler',  # Name of the sampler
                    num=32,  # Batch Size of the sampler
                    pos_fraction=1,  # Positive bbox fraction of the sampler
                    neg_pos_ub=-1,  # Upper bound of the ratio of num negative to num positive
                    add_gt_as_proposals=True),  # Add gt bboxes as proposals
                pos_weight=1.0,  # Loss weight of positive examples
                debug=False)),  # Debug mode
        test_cfg=dict(  # Testing config of FastRCNN
            rcnn=dict(  # Dict for rcnn testing config
                action_thr=0.002)))  # The threshold of an action

    # dataset settings
    dataset_type = 'AVADataset'  # Type of dataset for training, validation and testing
    data_root = 'data/ava/rawframes'  # Root path to data
    anno_root = 'data/ava/annotations'  # Root path to annotations

    ann_file_train = f'{anno_root}/ava_train_v2.1.csv'  # Path to the annotation file for training
    ann_file_val = f'{anno_root}/ava_val_v2.1.csv'  # Path to the annotation file for validation

    exclude_file_train = f'{anno_root}/ava_train_excluded_timestamps_v2.1.csv'  # Path to the exclude annotation file for training
    exclude_file_val = f'{anno_root}/ava_val_excluded_timestamps_v2.1.csv'  # Path to the exclude annotation file for validation

    label_file = f'{anno_root}/ava_action_list_v2.1_for_activitynet_2018.pbtxt'  # Path to the label file

    proposal_file_train = f'{anno_root}/ava_dense_proposals_train.FAIR.recall_93.9.pkl'  # Path to the human detection proposals for training examples
    proposal_file_val = f'{anno_root}/ava_dense_proposals_val.FAIR.recall_93.9.pkl'  # Path to the human detection proposals for validation examples

    img_norm_cfg = dict(  # Config of image normalization used in data pipeline
        mean=[123.675, 116.28, 103.53],  # Mean values of different channels to normalize
        std=[58.395, 57.12, 57.375],  # Std values of different channels to normalize
        to_bgr=False)  # Whether to convert channels from RGB to BGR

    train_pipeline = [  # List of training pipeline steps
        dict(  # Config of SampleFrames
            type='AVASampleFrames',  # Sample frames pipeline, sampling frames from video
            clip_len=4,  # Frames of each sampled output clip
            frame_interval=16),  # Temporal interval of adjacent sampled frames
        dict(  # Config of RawFrameDecode
            type='RawFrameDecode'),  # Load and decode Frames pipeline, picking raw frames with given indices
        dict(  # Config of RandomRescale
            type='RandomRescale',  # Randomly rescale the shortedge by a given range
            scale_range=(256, 320)),  # The shortedge size range of RandomRescale
        dict(  # Config of RandomCrop
            type='RandomCrop',  # Randomly crop a patch with the given size
            size=256),  # The size of the cropped patch
        dict(  # Config of Flip
            type='Flip',  # Flip Pipeline
            flip_ratio=0.5),  # Probability of implementing flip
        dict(  # Config of Normalize
            type='Normalize',  # Normalize pipeline
            **img_norm_cfg),  # Config of image normalization
        dict(  # Config of FormatShape
            type='FormatShape',  # Format shape pipeline, Format final image shape to the given input_format
            input_format='NCTHW',  # Final image shape format
            collapse=True),  # Collapse the dim N if N == 1
        dict(  # Config of Rename
            type='Rename',  # Rename keys
            mapping=dict(imgs='img')),  # The old name to new name mapping
        dict(  # Config of ToTensor
            type='ToTensor',  # Convert other types to tensor type pipeline
            keys=['img', 'proposals', 'gt_bboxes', 'gt_labels']),  # Keys to be converted from image to tensor
        dict(  # Config of ToDataContainer
            type='ToDataContainer',  # Convert other types to DataContainer type pipeline
            fields=[  # Fields to convert to DataContainer
                dict(  # Dict of fields
                    key=['proposals', 'gt_bboxes', 'gt_labels'],  # Keys to Convert to DataContainer
                    stack=False)]),  # Whether to stack these tensor
        dict(  # Config of Collect
            type='Collect',  # Collect pipeline that decides which keys in the data should be passed to the detector
            keys=['img', 'proposals', 'gt_bboxes', 'gt_labels'],  # Keys of input
            meta_keys=['scores', 'entity_ids']),  # Meta keys of input
    ]

    val_pipeline = [  # List of validation pipeline steps
        dict(  # Config of SampleFrames
            type='AVASampleFrames',  # Sample frames pipeline, sampling frames from video
            clip_len=4,  # Frames of each sampled output clip
            frame_interval=16),  # Temporal interval of adjacent sampled frames
        dict(  # Config of RawFrameDecode
            type='RawFrameDecode'),  # Load and decode Frames pipeline, picking raw frames with given indices
        dict(  # Config of Resize
            type='Resize',  # Resize pipeline
            scale=(-1, 256)),  # The scale to resize images
        dict(  # Config of Normalize
            type='Normalize',  # Normalize pipeline
            **img_norm_cfg),  # Config of image normalization
        dict(  # Config of FormatShape
            type='FormatShape',  # Format shape pipeline, Format final image shape to the given input_format
            input_format='NCTHW',  # Final image shape format
            collapse=True),  # Collapse the dim N if N == 1
        dict(  # Config of Rename
            type='Rename',  # Rename keys
            mapping=dict(imgs='img')),  # The old name to new name mapping
        dict(  # Config of ToTensor
            type='ToTensor',  # Convert other types to tensor type pipeline
            keys=['img', 'proposals']),  # Keys to be converted from image to tensor
        dict(  # Config of ToDataContainer
            type='ToDataContainer',  # Convert other types to DataContainer type pipeline
            fields=[  # Fields to convert to DataContainer
                dict(  # Dict of fields
                    key=['proposals'],  # Keys to convert to DataContainer
                    stack=False)]),  # Whether to stack these tensors
        dict(  # Config of Collect
            type='Collect',  # Collect pipeline that decides which keys in the data should be passed to the detector
            keys=['img', 'proposals'],  # Keys of input
            meta_keys=['scores', 'entity_ids'],  # Meta keys of input
            nested=True)  # Whether to wrap the data in a nested list
    ]

    data = dict(  # Config of data
        videos_per_gpu=16,  # Batch size of each single GPU
        workers_per_gpu=2,  # Dataloader workers of each single GPU
        val_dataloader=dict(  # Additional config of the validation dataloader
            videos_per_gpu=1),  # Batch size of each single GPU during validation
        train=dict(  # Training dataset config
            type=dataset_type,
            ann_file=ann_file_train,
            exclude_file=exclude_file_train,
            pipeline=train_pipeline,
            label_file=label_file,
            proposal_file=proposal_file_train,
            person_det_score_thr=0.9,
            data_prefix=data_root),
        val=dict(  # Validation dataset config
            type=dataset_type,
            ann_file=ann_file_val,
            exclude_file=exclude_file_val,
            pipeline=val_pipeline,
            label_file=label_file,
            proposal_file=proposal_file_val,
            person_det_score_thr=0.9,
            data_prefix=data_root))
    data['test'] = data['val']  # Copy the validation dataset config to the test dataset config

    # optimizer
    optimizer = dict(
        # Config used to build the optimizer. Supported:
        # (1) all native PyTorch optimizers, with the same arguments as in PyTorch;
        # (2) custom optimizers built on top of `constructor`.
        # See "tutorials/5_new_modules.md" for more details.
        type='SGD',  # Type of optimizer, see https://github.com/open-mmlab/mmcv/blob/master/mmcv/runner/optimizer/default_constructor.py#L13
        lr=0.2,  # Learning rate, see the PyTorch documentation for details on the arguments
        momentum=0.9,  # Momentum
        weight_decay=0.00001)  # Weight decay of SGD

    optimizer_config = dict(  # Config used to build the optimizer hook
        grad_clip=dict(max_norm=40, norm_type=2))  # Use gradient clipping

    lr_config = dict(  # Config used to register the learning rate scheduler hook
        policy='step',  # Scheduler policy; CosineAnnealing, Cyclic, etc. are also supported. See https://github.com/open-mmlab/mmcv/blob/master/mmcv/runner/hooks/lr_updater.py#L9
        step=[40, 80],  # Epochs at which the learning rate decays
        warmup='linear',  # Warmup strategy
        warmup_by_epoch=True,  # Whether the warmup unit is epoch (True) or iteration (False)
        warmup_iters=5,  # Number of warmup units
        warmup_ratio=0.1)  # The initial learning rate is warmup_ratio * lr

    total_epochs = 20  # Total number of training epochs
    checkpoint_config = dict(  # Config of the checkpoint hook, see https://github.com/open-mmlab/mmcv/blob/master/mmcv/runner/hooks/checkpoint.py
        interval=1)  # Interval (in epochs) for saving checkpoints
    workflow = [('train', 1)]  # Workflow of the runner. [('train', 1)] means there is a single workflow, named 'train', executed once
    evaluation = dict(  # Config of evaluation during training
        interval=1, save_best='mAP@0.5IOU')  # Evaluation interval, and use `mAP@0.5IOU` as the indicator for saving the best checkpoint
    log_config = dict(  # Config used to register the logger hook
        interval=20,  # Interval (in iterations) for printing logs
        hooks=[  # Hooks executed during training
            dict(type='TextLoggerHook'),  # Logger that records the training process
        ])

    # runtime settings
    dist_params = dict(backend='nccl')  # Parameters for distributed training; the port can also be set here
    log_level = 'INFO'  # Logging level
    work_dir = ('./work_dirs/ava/'  # Directory for the logs and checkpoints of the current experiment
                'slowonly_kinetics_pretrained_r50_4x16x1_20e_ava_rgb')
    load_from = ('https://download.openmmlab.com/mmaction/recognition/slowonly/'  # Load a model from the given path as the pretrained model. This does not resume training
                 'slowonly_r50_4x16x1_256e_kinetics400_rgb/'
                 'slowonly_r50_4x16x1_256e_kinetics400_rgb_20200704-a69556c6.pth')
    resume_from = None  # Resume from the checkpoint at the given path; training continues from the epoch at which it was saved
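
The interaction between `lr`, `lr_config` and `total_epochs` in the config above is easier to see with numbers. The sketch below approximates the schedule at epoch granularity. Two assumptions of mine: the decay factor `gamma=0.1` (I believe this is the default of mmcv's step policy, but it is not stated in the config), and the linear warmup formula, which follows my reading of mmcv's learning rate hook. The real hook updates the rate per iteration, so treat this as an illustration only; `lr_at_epoch` is a hypothetical helper.

```python
def lr_at_epoch(epoch, base_lr=0.2, steps=(40, 80), gamma=0.1,
                warmup_epochs=5, warmup_ratio=0.1):
    """Approximate learning rate at the start of a given 0-based epoch."""
    # Step policy: multiply by gamma once for every milestone already reached.
    passed = sum(1 for s in steps if epoch >= s)
    regular_lr = base_lr * gamma ** passed
    if epoch >= warmup_epochs:
        return regular_lr
    # Linear warmup: ramp from warmup_ratio * lr up to the regular lr.
    k = (1 - epoch / warmup_epochs) * (1 - warmup_ratio)
    return regular_lr * (1 - k)


# Print the approximate rate for every epoch of a 20-epoch run.
for epoch in range(20):
    print(epoch, round(lr_at_epoch(epoch), 4))
```

Under these assumptions the rate climbs from 0.02 to 0.2 during the first five epochs and then stays flat, because the milestones 40 and 80 lie beyond `total_epochs = 20`. If you want the step decay to take effect in a 20-epoch run, the milestones would have to be moved inside that range.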

2.3 My training configuration

The command I use on a single GPU is:

    cd /home/MPCLST/mmaction2_YF
    python tools/train.py configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb.py --validate

Here, `/home/MPCLST/mmaction2_YF` is the mmaction2 directory and `configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb.py` is the path of the config file.

If training is interrupted, you can resume it with the following command:

    cd /home/MPCLST/mmaction2_YF
    python tools/train.py configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb.py --validate --resume-from ./work_dirs/ava2/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/latest.pth

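I have uploaded the configuration files from this training run to CSDN for everyone to download and use (free):

22-8-6 mmaction2 SlowFast training configuration and training log analysis: https://download.csdn.net/download/WhiffeYF/86339417

The package contains:

Training log: 20220805_165139.log.json

Training config: my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb.py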

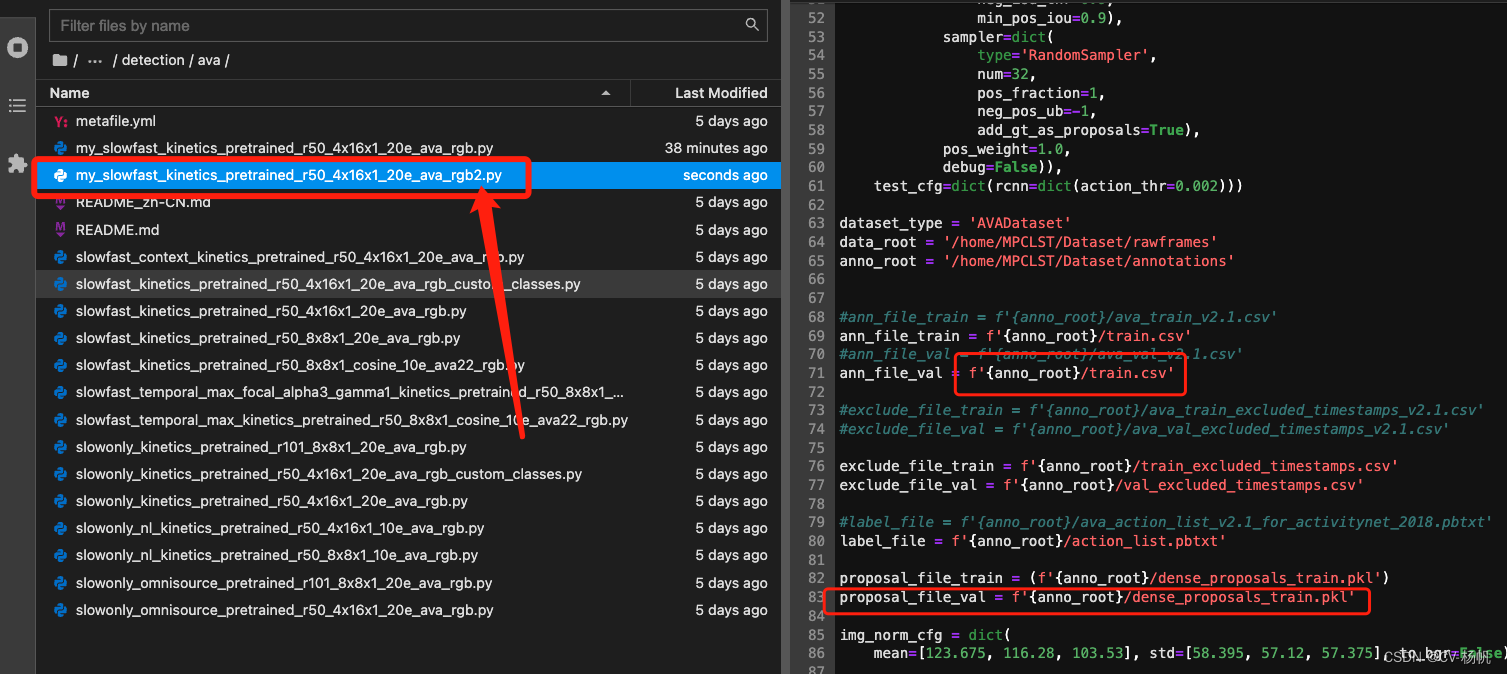

Training config (for evaluating on the training set): my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb2.py

Next, the places where I modified the training config file:

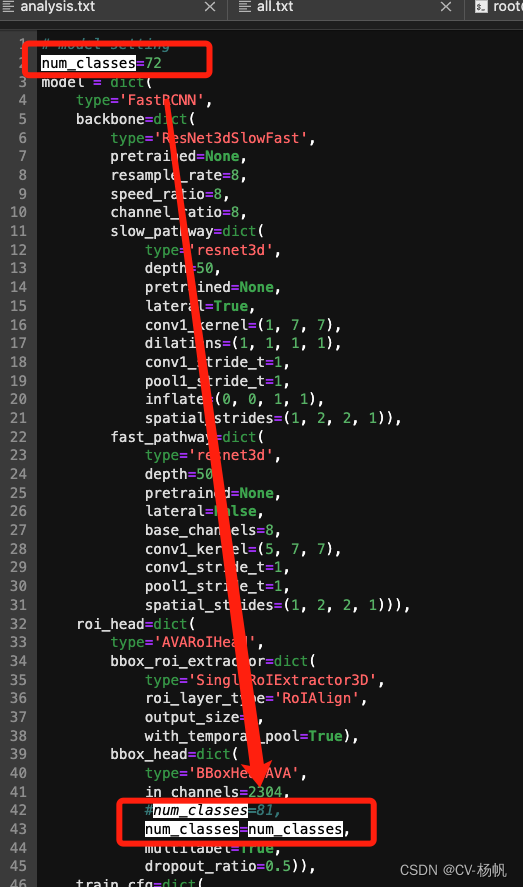

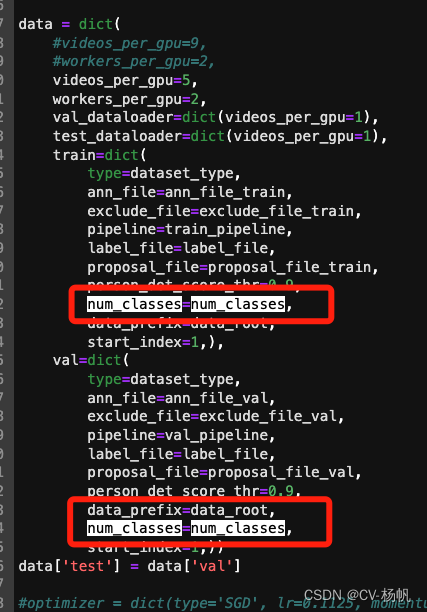

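First, change the number of classes. I have 71 action classes, so num_classes = number of action classes + 1 (background) = 72.

`num_classes` must also be added to `data`, because the default there is set for AVA's 80 action classes. Without it, training fails with a dimension mismatch.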
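
Since the actual edits are only visible in the screenshots, here is a minimal sketch of what the two changes might look like. Only the fields related to the class count are shown, and everything else in the real config (backbone, pipelines, annotation files) is left out. The constant names `NUM_ACTIONS` and `NUM_CLASSES` are my own, and passing `num_classes` to the dataset dicts is my reading of the text, so compare against the downloadable config before relying on it.

```python
NUM_ACTIONS = 71               # action classes in the custom dataset (from the text)
NUM_CLASSES = NUM_ACTIONS + 1  # plus one background class

# Class count in the detection head.
model = dict(
    roi_head=dict(
        bbox_head=dict(type='BBoxHeadAVA', num_classes=NUM_CLASSES, multilabel=True)))

# Class count in each dataset split (assumed placement, see the lead-in).
data = dict(
    train=dict(type='AVADataset', num_classes=NUM_CLASSES),
    val=dict(type='AVADataset', num_classes=NUM_CLASSES))

# Sanity check: the head and every dataset split must agree on the class count.
head_classes = model['roi_head']['bbox_head']['num_classes']
assert all(split['num_classes'] == head_classes for split in data.values())
print(head_classes)
```

Deriving both values from one constant keeps the head and the datasets in sync, which is exactly the mismatch the text warns about.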

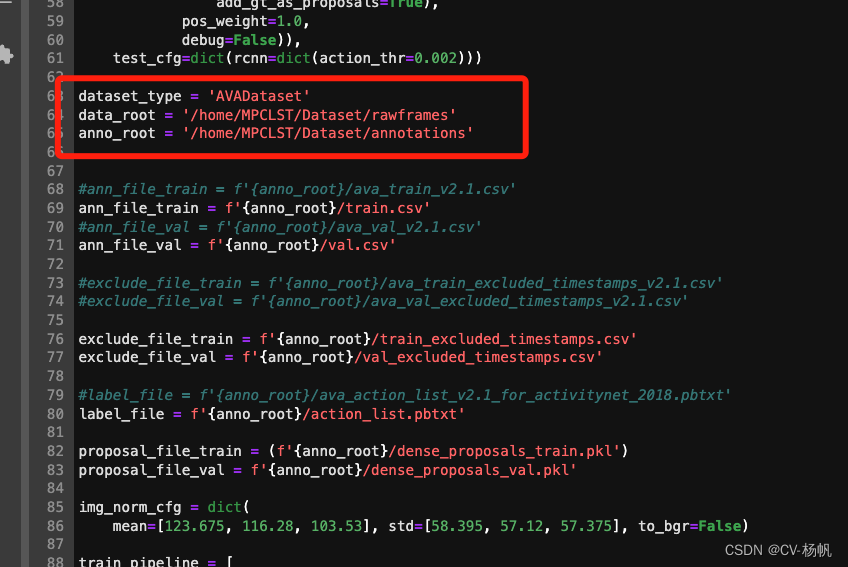

Change `data_root` and `anno_root` to the location of your custom dataset.

3 Log analysis

3.1 The official log analysis tutorial

The official docs provide several log analysis commands: https://mmaction2.readthedocs.io/zh_CN/latest/useful_tools.html#id2

`tools/analysis/analyze_logs.py` plots loss/top-k acc curves given a training log file. Run `pip install seaborn` first to install the dependency.

    python tools/analysis/analyze_logs.py plot_curve ${JSON_LOGS} [--keys ${KEYS}] [--title ${TITLE}] [--legend ${LEGEND}] [--backend ${BACKEND}] [--style ${STYLE}] [--out ${OUT_FILE}]

Examples:

Plot the classification loss of some run.

    python tools/analysis/analyze_logs.py plot_curve log.json --keys loss_cls --legend loss_cls

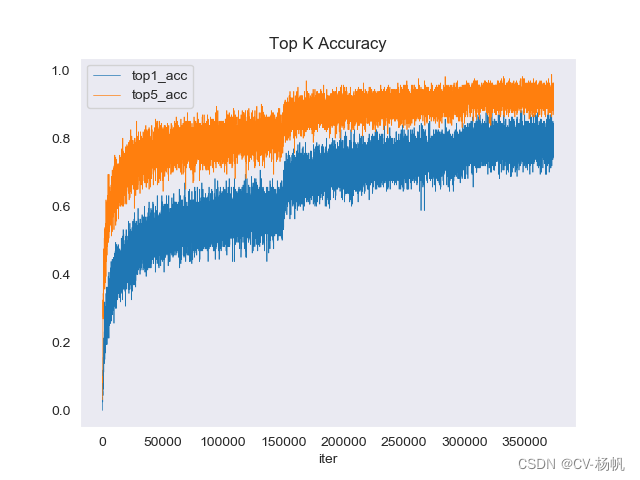

Plot the top-1 acc and top-5 acc of some run, and save the figure to a pdf.

    python tools/analysis/analyze_logs.py plot_curve log.json --keys top1_acc top5_acc --out results.pdf

3.2 My log analysis

If you run the official commands as they are, you will most likely get an error, because the training log produced by SlowFast training does not contain `loss_cls` or the top-5 accuracy key.

It only has keys such as `recall@top5` and `loss_action_cls`.

3.2.1 Error demonstration

Here is an example of the error.

Run:

    cd /home/MPCLST/mmaction2_YF/
    python ./tools/analysis/analyze_logs.py plot_curve ./work_dirs/avaT2/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/20220804_141745.log.json \
        --keys loss_cls \
        --legend loss_cls

This produces the following error:

    Traceback (most recent call last):
      File "./tools/analysis/analyze_logs.py", line 176, in <module>
        main()
      File "./tools/analysis/analyze_logs.py", line 172, in main
        eval(args.task)(log_dicts, args)
      File "./tools/analysis/analyze_logs.py", line 61, in plot_curve
        raise KeyError(
    KeyError: './work_dirs/avaT2/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/20220804_141745.log.json does not contain metric loss_cls'

The cause is that the training log has no `loss_cls` key. It has to be replaced with `loss_action_cls`.
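
To find out which keys a log actually contains before plotting, you can scan it yourself. The sketch below assumes the `.log.json` file holds one JSON object per line, with a `mode` field of `train` or `val` on metric lines, which is how I understand the format. The helper `metric_keys` and the sample lines are hypothetical, and the sample values are made up.

```python
import json


def metric_keys(lines):
    """Collect metric names per mode ('train' / 'val') from .log.json lines."""
    keys = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        mode = record.get("mode")
        if mode is None:  # e.g. a header line with environment info
            continue
        keys.setdefault(mode, set()).update(
            k for k in record if k not in {"mode", "epoch", "iter"})
    return keys


# Hypothetical sample lines; with a real log, pass the open file object instead.
sample = [
    '{"env_info": "...", "seed": null}',
    '{"mode": "train", "epoch": 1, "iter": 20, "lr": 0.02, '
    '"loss_action_cls": 0.21, "recall@top5": 0.8}',
    '{"mode": "val", "epoch": 1, "iter": 77, "mAP@0.5IOU": 0.12}',
]

for mode, names in metric_keys(sample).items():
    print(mode, sorted(names))
```

Any name printed for the `train` mode should be usable as a `--keys` value for `plot_curve`; a name that is missing from the list will trigger the `KeyError` shown above.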

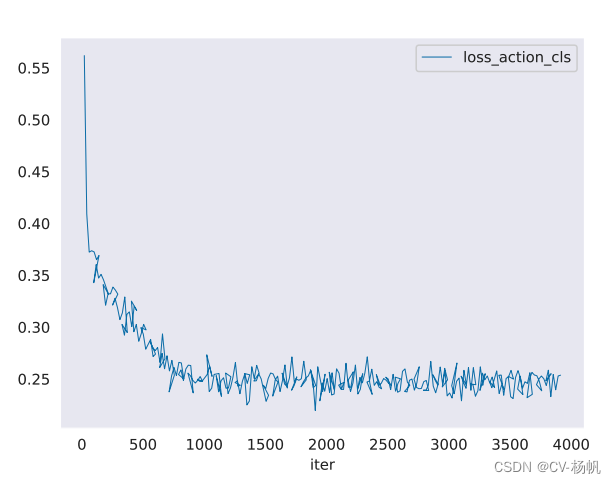

3.2.2 Loss curve

The correct command is:

    cd /home/MPCLST/mmaction2_YF/
    python ./tools/analysis/analyze_logs.py plot_curve ./1.log.json \
        --keys loss_action_cls \
        --legend loss_action_cls \
        --out ./Aresults.pdf

Here `./1.log.json` is the training log. It was originally at /home/MPCLST/mmaction2_YF/work_dirs/ava2/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/20220805_165139.log.json. I renamed it and placed it in the /home/MPCLST/mmaction2_YF/ directory.

The result is as follows:

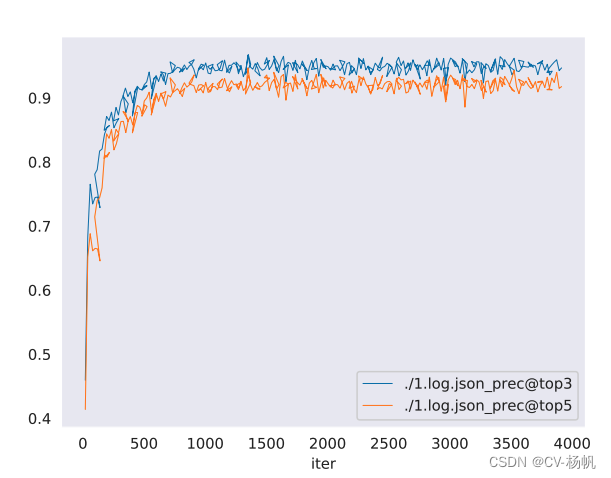
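
If you want the numbers behind such a curve rather than a PDF, the same log can be summarized directly. This is a sketch under the assumption that each training line of the `.log.json` file is a JSON object with `mode`, `epoch` and the metric key; `epoch_means` is a hypothetical helper and the sample values are invented.

```python
import json
from collections import defaultdict


def epoch_means(lines, key, mode="train"):
    """Average `key` over all logged iterations of each epoch."""
    sums, counts = defaultdict(float), defaultdict(int)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        if record.get("mode") != mode or key not in record:
            continue
        sums[record["epoch"]] += record[key]
        counts[record["epoch"]] += 1
    return {epoch: sums[epoch] / counts[epoch] for epoch in sorted(sums)}


# Invented sample lines; with a real log, pass open("./1.log.json") instead.
sample = [
    '{"mode": "train", "epoch": 1, "iter": 20, "loss_action_cls": 0.5}',
    '{"mode": "train", "epoch": 1, "iter": 40, "loss_action_cls": 0.25}',
    '{"mode": "val", "epoch": 1, "iter": 77, "mAP@0.5IOU": 0.12}',
    '{"mode": "train", "epoch": 2, "iter": 20, "loss_action_cls": 0.125}',
]

print(epoch_means(sample, "loss_action_cls"))
```

Averaging per epoch smooths out the iteration-level noise, which makes it easier to compare two runs at a glance.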

3.2.3 prec@top3 and prec@top5 curves

    python ./tools/analysis/analyze_logs.py plot_curve ./1.log.json \
        --keys prec@top3 prec@top5 \
        --out Aresults2.pdf

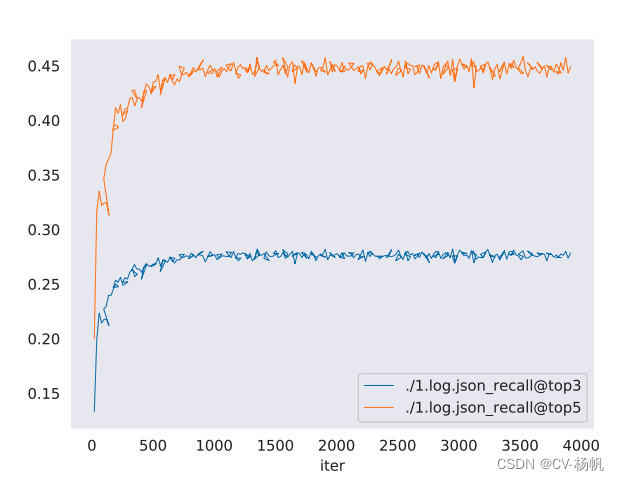

3.2.4 recall@top3 and recall@top5 curves

    python ./tools/analysis/analyze_logs.py plot_curve ./1.log.json \
        --keys recall@top3 recall@top5 \
        --out Aresults3.pdf

4 Test result analysis

4.1 Analysis of the test set

Model testing (using the best checkpoint):

    cd /home/MPCLST/mmaction2_YF/
    python tools/test.py configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb.py ./work_dirs/ava2/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/best_mAP@0.5IOU_epoch_19.pth --eval mAP

The test results are as follows:

    [>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>] 77/77, 3.4 task/s, elapsed: 22s, ETA: 0s
    Evaluating mAP ...
    ==> 0.119518 seconds to read file /home/MPCLST/Dataset/annotations/val.csv
    ==> 0.121228 seconds to Reading detection results
    ==> 0.243677 seconds to read file AVA_20220806_135555_result.csv
    ==> 0.24525 seconds to Reading detection results
    ==> 0.0171266 seconds to Convert groundtruth
    ==> 1.28865 seconds to convert detections
    ==> 0.0697978 seconds to run_evaluator
    mAP@0.5IOU= 0.16093717926227658
    PerformanceByCategory/AP@0.5IOU/eye Invisible= 0.21957583593164443
    PerformanceByCategory/AP@0.5IOU/eye ohter= nan
    PerformanceByCategory/AP@0.5IOU/open eyes= 0.3986643043503887
    PerformanceByCategory/AP@0.5IOU/close eyes= 0.020177562550443905
    PerformanceByCategory/AP@0.5IOU/lip Invisible= 0.21175944581786216
    PerformanceByCategory/AP@0.5IOU/lip ohter= nan
    PerformanceByCategory/AP@0.5IOU/open mouth= 0.01958912037037037
    PerformanceByCategory/AP@0.5IOU/close mouth= 0.48263934087246974
    PerformanceByCategory/AP@0.5IOU/body Invisible= 0.0
    PerformanceByCategory/AP@0.5IOU/body other= 0.0
    PerformanceByCategory/AP@0.5IOU/body sit= 0.30172789978134984
    PerformanceByCategory/AP@0.5IOU/body side Sit= 0.06424825174825174
    PerformanceByCategory/AP@0.5IOU/body stand= 0.4350772380337405
    PerformanceByCategory/AP@0.5IOU/body lying down= nan
    PerformanceByCategory/AP@0.5IOU/body bend over= 0.011695906432748537
    PerformanceByCategory/AP@0.5IOU/body squat= nan
    PerformanceByCategory/AP@0.5IOU/body rely= nan
    PerformanceByCategory/AP@0.5IOU/body lie flat= nan
    PerformanceByCategory/AP@0.5IOU/body lateral= nan
    PerformanceByCategory/AP@0.5IOU/left hand invisible= 0.21793355606129478
    PerformanceByCategory/AP@0.5IOU/left hand other= nan
    PerformanceByCategory/AP@0.5IOU/left hand palm grip= 0.2490742588261378
    PerformanceByCategory/AP@0.5IOU/left hand palm spread= 0.3632941972340388
    PerformanceByCategory/AP@0.5IOU/left hand palm Point= 0.009846153846153846
    PerformanceByCategory/AP@0.5IOU/left hand applause= nan
    PerformanceByCategory/AP@0.5IOU/left hand write= nan
    PerformanceByCategory/AP@0.5IOU/left arm invisible= 0.11782264436125489
    PerformanceByCategory/AP@0.5IOU/left arm other= nan
    PerformanceByCategory/AP@0.5IOU/left arm flat= 0.024817518248175185
    PerformanceByCategory/AP@0.5IOU/left arm droop= 0.18134611163016337
    PerformanceByCategory/AP@0.5IOU/left arm forward= 0.00874243168057601
    PerformanceByCategory/AP@0.5IOU/left arm flexion= 0.3574295887923012
    PerformanceByCategory/AP@0.5IOU/left arm raised= 0.29577938647229507
    PerformanceByCategory/AP@0.5IOU/left handed behavior object invisible= 0.2231242540734685
    PerformanceByCategory/AP@0.5IOU/left handed behavior object other= 0.004079634464751958
    PerformanceByCategory/AP@0.5IOU/left handed behavior object book = 0.0743542109449761
    PerformanceByCategory/AP@0.5IOU/left handed behavior object exercise book= 0.2824925842529067
    PerformanceByCategory/AP@0.5IOU/left handed behavior object spare head= 0.0
    PerformanceByCategory/AP@0.5IOU/left handed behavior object electronic equipment= 0.07692307692307693
    PerformanceByCategory/AP@0.5IOU/left handed behavior object electronic pointing at others= 0.002242152466367713
    PerformanceByCategory/AP@0.5IOU/left handed behavior object chalk= nan
    PerformanceByCategory/AP@0.5IOU/left handed behavior object no interaction= 0.4987584575529982
    PerformanceByCategory/AP@0.5IOU/right hand invisible= 0.25949074047589055
    PerformanceByCategory/AP@0.5IOU/right hand other= 0.01671826625386997
    PerformanceByCategory/AP@0.5IOU/right hand palm grip= 0.24416659319005685
    PerformanceByCategory/AP@0.5IOU/right hand palm spread= 0.32756514114018953
    PerformanceByCategory/AP@0.5IOU/right hand palm Point= 0.0005482456140350877
    PerformanceByCategory/AP@0.5IOU/right hand applause= nan
    PerformanceByCategory/AP@0.5IOU/right hand write= 0.0
    PerformanceByCategory/AP@0.5IOU/right arm invisible= 0.08796983661655658
    PerformanceByCategory/AP@0.5IOU/right arm other= nan
    PerformanceByCategory/AP@0.5IOU/right arm flat= 0.05728406468574387
    PerformanceByCategory/AP@0.5IOU/right arm droop= 0.1296277801807914
    PerformanceByCategory/AP@0.5IOU/right arm forward= 0.0034883720930232558
    PerformanceByCategory/AP@0.5IOU/right arm flexion= 0.29807071272587105
    PerformanceByCategory/AP@0.5IOU/right arm raised= 0.11511462192356786
    PerformanceByCategory/AP@0.5IOU/right handed behavior object invisible= 0.25630071018790945
    PerformanceByCategory/AP@0.5IOU/right handed behavior object other= 0.04049659611278428
    PerformanceByCategory/AP@0.5IOU/right handed behavior object book = 0.005098051743325904
    PerformanceByCategory/AP@0.5IOU/right handed behavior object exercise book= 0.10037713766604955
    PerformanceByCategory/AP@0.5IOU/right handed behavior object spare head= 0.0
    PerformanceByCategory/AP@0.5IOU/right handed behavior object electronic equipment= 0.02247191011235955
    PerformanceByCategory/AP@0.5IOU/right handed behavior object electronic pointing at others= nan
    PerformanceByCategory/AP@0.5IOU/right handed behavior object chalk= nan
    PerformanceByCategory/AP@0.5IOU/leg invisible= 0.3829590019619401
    PerformanceByCategory/AP@0.5IOU/leg other= 0.29039130666363366
    PerformanceByCategory/AP@0.5IOU/leg stand= 0.21725870086796184
    PerformanceByCategory/AP@0.5IOU/leg run= 0.5045035329108362
    PerformanceByCategory/AP@0.5IOU/leg walk= nan
    PerformanceByCategory/AP@0.5IOU/leg jump= 0.01655405405405405
    PerformanceByCategory/AP@0.5IOU/leg Kick= nan
    mAP@0.5IOU 0.1609
    mAP@0.5IOU: 0.1609
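
With this many categories it helps to parse the evaluator output instead of reading it by eye. The sketch below is my own helper code, not part of mmaction2: `parse_ap` turns `PerformanceByCategory/AP@0.5IOU/<name>= <value>` lines into a dictionary, and `summarize` separates scored categories from `nan` ones. My guess is that `nan` marks categories with no ground-truth instances in the evaluated split, but the output itself does not say so.

```python
import math
import re

# Matches lines such as "PerformanceByCategory/AP@0.5IOU/open eyes= 0.39".
AP_LINE = re.compile(
    r"^PerformanceByCategory/AP@0\.5IOU/(?P<name>.+?)\s*=\s*(?P<value>\S+)$")


def parse_ap(lines):
    """Map category name -> AP (float, NaN preserved) from evaluator output lines."""
    result = {}
    for line in lines:
        match = AP_LINE.match(line.strip())
        if match:
            result[match["name"]] = float(match["value"])
    return result


def summarize(ap):
    """Return (scored categories sorted worst first, categories whose AP is NaN)."""
    scored = sorted((v, k) for k, v in ap.items() if not math.isnan(v))
    missing = [k for k, v in ap.items() if math.isnan(v)]
    return scored, missing


# Four lines copied from the output above; pass the full output for a real analysis.
sample = [
    "PerformanceByCategory/AP@0.5IOU/open eyes= 0.3986643043503887",
    "PerformanceByCategory/AP@0.5IOU/close eyes= 0.020177562550443905",
    "PerformanceByCategory/AP@0.5IOU/eye ohter= nan",
    "PerformanceByCategory/AP@0.5IOU/left handed behavior object book = 0.0743542109449761",
]

scored, missing = summarize(parse_ap(sample))
for value, name in scored:
    print(f"{value:.4f}  {name}")
print("no score:", missing)
```

Sorting worst first puts the categories that need more data or cleaner labels at the top of the list.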

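4.2 Analysis of the training set

I split the data into training and test sets at a ratio of 9:1. If you also want to evaluate on the training set and compute the accuracy of each action there, modify the config file (the modified config is also included in the CSDN download) and run:

    cd /home/MPCLST/mmaction2_YF/
    python tools/test.py configs/detection/ava/my_slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb2.py ./work_dirs/ava2/slowfast_kinetics_pretrained_r50_4x16x1_20e_ava_rgb/best_mAP@0.5IOU_epoch_19.pth --eval mAP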

    [>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>] 726/726, 3.5 task/s, elapsed: 206s, ETA: 0s
    Evaluating mAP ...
    ==> 1.46952 seconds to read file /home/MPCLST/Dataset/annotations/train.csv
    ==> 1.48599 seconds to Reading detection results
    ==> 0.842957 seconds to read file AVA_20220806_140626_result.csv
    ==> 0.851932 seconds to Reading detection results
    ==> 0.196215 seconds to Convert groundtruth
    ==> 11.8547 seconds to convert detections
    ==> 0.54392 seconds to run_evaluator
    mAP@0.5IOU= 0.19678775434136514
    PerformanceByCategory/AP@0.5IOU/eye Invisible= 0.12645567913113967
    PerformanceByCategory/AP@0.5IOU/eye ohter= 0.0
    PerformanceByCategory/AP@0.5IOU/open eyes= 0.31708135334566584
    PerformanceByCategory/AP@0.5IOU/close eyes= 0.25428730997649285
    PerformanceByCategory/AP@0.5IOU/lip Invisible= 0.11397992971416905
    PerformanceByCategory/AP@0.5IOU/lip ohter= 0.0
    PerformanceByCategory/AP@0.5IOU/open mouth= 0.37103021706235095
    PerformanceByCategory/AP@0.5IOU/close mouth= 0.3225470046664377
    PerformanceByCategory/AP@0.5IOU/body Invisible= 0.0688460870946835
    PerformanceByCategory/AP@0.5IOU/body other= 4.749240121580547e-05
    PerformanceByCategory/AP@0.5IOU/body sit= 0.14118405291274552
    PerformanceByCategory/AP@0.5IOU/body side Sit= 0.12306737241061394
    PerformanceByCategory/AP@0.5IOU/body stand= 0.44386059216886853
    PerformanceByCategory/AP@0.5IOU/body lying down= 0.0
    PerformanceByCategory/AP@0.5IOU/body bend over= 0.036801890481544024
    PerformanceByCategory/AP@0.5IOU/body squat= 0.0002886836027713626
    PerformanceByCategory/AP@0.5IOU/body rely= 0.0022123893805309734
    PerformanceByCategory/AP@0.5IOU/body lie flat= nan
    PerformanceByCategory/AP@0.5IOU/body lateral= nan
    PerformanceByCategory/AP@0.5IOU/left hand invisible= 0.09974807472439347
    PerformanceByCategory/AP@0.5IOU/left hand other= 0.001987585502739207
    PerformanceByCategory/AP@0.5IOU/left hand palm grip= 0.3573117900804288
    PerformanceByCategory/AP@0.5IOU/left hand palm spread= 0.18167205413859483
    PerformanceByCategory/AP@0.5IOU/left hand palm Point= 0.000757983615530252
    PerformanceByCategory/AP@0.5IOU/left hand applause= 0.014035087719298246
    PerformanceByCategory/AP@0.5IOU/left hand write= 0.08771929824561403
    PerformanceByCategory/AP@0.5IOU/left arm invisible= 0.07325714077918277
    PerformanceByCategory/AP@0.5IOU/left arm other= 0.029137529137529143
    PerformanceByCategory/AP@0.5IOU/left arm flat= 0.20194234035277414
    PerformanceByCategory/AP@0.5IOU/left arm droop= 0.3086699563592321
    PerformanceByCategory/AP@0.5IOU/left arm forward= 0.11754773840177281
    PerformanceByCategory/AP@0.5IOU/left arm flexion= 0.24046255259110355
    PerformanceByCategory/AP@0.5IOU/left arm raised= 0.184117174890788
    PerformanceByCategory/AP@0.5IOU/left handed behavior object invisible= 0.09916514173053004
    PerformanceByCategory/AP@0.5IOU/left handed behavior object other= 0.3480829069492257
    PerformanceByCategory/AP@0.5IOU/left handed behavior object book = 0.24313614492470254
    PerformanceByCategory/AP@0.5IOU/left handed behavior object exercise book= 0.3713436674317362
    PerformanceByCategory/AP@0.5IOU/left handed behavior object spare head= 0.002527731022507281
    PerformanceByCategory/AP@0.5IOU/left handed behavior object electronic equipment= 0.6424483333669451
    PerformanceByCategory/AP@0.5IOU/left handed behavior object electronic pointing at others= 0.9166666666666666
    PerformanceByCategory/AP@0.5IOU/left handed behavior object chalk= 0.007552439580483356
    PerformanceByCategory/AP@0.5IOU/left handed behavior object no interaction= 0.29060548802833236
    PerformanceByCategory/AP@0.5IOU/right hand invisible= 0.09377881522755799
    PerformanceByCategory/AP@0.5IOU/right hand other= 0.0033517656341853688
    PerformanceByCategory/AP@0.5IOU/right hand palm grip= 0.2675440929295684
    PerformanceByCategory/AP@0.5IOU/right hand palm spread= 0.22916889434576543
    PerformanceByCategory/AP@0.5IOU/right hand palm Point= 0.2314629790615565
    PerformanceByCategory/AP@0.5IOU/right hand applause= 0.09659090909090909
    PerformanceByCategory/AP@0.5IOU/right hand write= 0.1866609886273387
    PerformanceByCategory/AP@0.5IOU/right arm invisible= 0.06665292677403056
    PerformanceByCategory/AP@0.5IOU/right arm other= 0.011404900405429227
    PerformanceByCategory/AP@0.5IOU/right arm flat= 0.21954392879903079
    PerformanceByCategory/AP@0.5IOU/right arm droop= 0.32872736315242523
    PerformanceByCategory/AP@0.5IOU/right arm forward= 0.21775369133876343
    PerformanceByCategory/AP@0.5IOU/right arm flexion= 0.21354090936404324
    PerformanceByCategory/AP@0.5IOU/right arm raised= 0.1777483934967737
    PerformanceByCategory/AP@0.5IOU/right handed behavior object invisible= 0.09563145964560554
    PerformanceByCategory/AP@0.5IOU/right handed behavior object other= 0.1686719897439452
    PerformanceByCategory/AP@0.5IOU/right handed behavior object book = 0.20026198746897292
    PerformanceByCategory/AP@0.5IOU/right handed behavior object exercise book= 0.22472998828422455
    PerformanceByCategory/AP@0.5IOU/right handed behavior object spare head= 0.01862149451754349
    PerformanceByCategory/AP@0.5IOU/right handed behavior object electronic equipment= 0.6060217155637195
    PerformanceByCategory/AP@0.5IOU/right handed behavior object electronic pointing at others= 0.675430576100219
    PerformanceByCategory/AP@0.5IOU/right handed behavior object chalk= 0.25698651260273714
    PerformanceByCategory/AP@0.5IOU/leg invisible= 0.33954092884900344
    PerformanceByCategory/AP@0.5IOU/leg other= 0.13399553314977775
    PerformanceByCategory/AP@0.5IOU/leg stand= 0.3200153849107191
    PerformanceByCategory/AP@0.5IOU/leg run= 0.5807052855820038
    PerformanceByCategory/AP@0.5IOU/leg walk= nan
    PerformanceByCategory/AP@0.5IOU/leg jump= 0.07865124561627221
    PerformanceByCategory/AP@0.5IOU/leg Kick= nan
    mAP@0.5IOU 0.1968
    mAP@0.5IOU: 0.1968
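
Having both outputs makes it possible to compare the training and test AP of each category. The sketch below is an illustration of my own, not part of mmaction2: `ap_gaps` is a hypothetical helper that lists the categories scored in both splits by the difference between training AP and test AP. The four sample values are copied from the two outputs in this section.

```python
import math


def ap_gaps(train_ap, test_ap):
    """Return (category, train AP - test AP) pairs, largest gap first.

    Categories whose AP is NaN in either split are skipped.
    """
    gaps = []
    for name, train_value in train_ap.items():
        test_value = test_ap.get(name, math.nan)
        if math.isnan(train_value) or math.isnan(test_value):
            continue
        gaps.append((name, train_value - test_value))
    return sorted(gaps, key=lambda item: item[1], reverse=True)


# A few values copied from the training-set and test-set outputs.
train_ap = {
    "open mouth": 0.37103021706235095,
    "close mouth": 0.3225470046664377,
    "body stand": 0.44386059216886853,
    "eye ohter": 0.0,
}
test_ap = {
    "open mouth": 0.01958912037037037,
    "close mouth": 0.48263934087246974,
    "body stand": 0.4350772380337405,
    "eye ohter": math.nan,
}

for name, gap in ap_gaps(train_ap, test_ap):
    print(f"{gap:+.4f}  {name}")
```

A large positive gap, as for `open mouth` here, suggests the category is learned on the training set but does not carry over to the test split; a category skipped because of `nan` has no usable score in one of the splits.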

- Original post: https://blog.csdn.net/WhiffeYF/article/details/126192179