
PyTorch model & data: .to(device) | .cuda() | .cpu()

Putting the model or the data on the CPU or the GPU

The model and the data must be on the same device for the code to run:

- model and data both on the CPU

- model and data both on the GPU

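For example, a typical forward pass (`Model` and `dataloader` are placeholders):

    model = Model()          # placeholder model class
    input = dataloader()     # placeholder: one batch of input data
    output = model(input)    # runs only if model and input are on the same device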

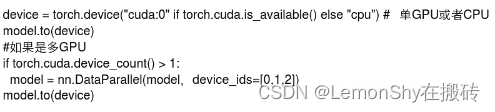
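The same-device rule can be illustrated with a minimal sketch. It assumes a tiny `nn.Linear` as a stand-in for `Model()` and a random tensor as a stand-in for a batch from the dataloader. Rather than hard-coding a device for the input, it reads the device from the model's first parameter and moves the input there.

```python
import torch
import torch.nn as nn

# Stand-in for Model(); any nn.Module with parameters behaves the same way
model = nn.Linear(4, 2)
if torch.cuda.is_available():
    model = model.to("cuda")

# Stand-in for a batch from dataloader(): tensors are created on the CPU by default
batch = torch.randn(3, 4)

# Find the device the model's weights live on and move the input there,
# so that model and data end up on the same device
model_device = next(model.parameters()).device
batch = batch.to(model_device)

output = model(batch)
print(output.shape, output.device)
```

If the input were left on the CPU while the model sat on the GPU, the forward pass would raise a `RuntimeError` about tensors being on different devices.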

1. .to(device)

Define `device` yourself; it can be either the CPU or a GPU.

    # On the GPU (PyTorch names it 'cuda'; 'gpu' is not a valid device string)
    device = 'cuda'
    model = model.to(device)
    data = data.to(device)

    # On the CPU
    # device = 'cpu'
    # model = model.to(device)
    # data = data.to(device)

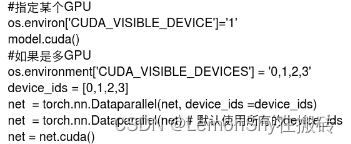
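A common way to define `device` is to fall back to the CPU when no GPU is present. The sketch below assumes `nn.Linear` and a random tensor as hypothetical stand-ins for the real model and data. It also highlights one difference worth knowing: `nn.Module.to()` moves a module in place, while `Tensor.to()` returns a new tensor, so the data must be reassigned.

```python
import torch
import torch.nn as nn

# Use the GPU when CUDA is available, otherwise the CPU.
# The device string is 'cuda', not 'gpu'.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2)   # hypothetical stand-in for the real model
data = torch.randn(8, 4)  # hypothetical stand-in for a batch

# nn.Module.to() moves the parameters in place and also returns the module,
# so reassigning is optional (but harmless) for models.
model = model.to(device)

# Tensor.to() does NOT modify the tensor in place: it returns a new tensor
# (or the same one if it is already on `device`), so reassigning is required.
data = data.to(device)

output = model(data)
print(device, output.device)
```

With this pattern the same script runs unchanged on machines with and without a GPU.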

2. .cuda()

    model = model.cuda()
    data = data.cuda()
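Unlike `.to(device)`, `.cuda()` always targets a GPU, so on a machine without CUDA it raises an error. A minimal guarded sketch, again assuming hypothetical `nn.Linear` and random-tensor stand-ins:

```python
import torch
import torch.nn as nn

model = nn.Linear(4, 2)   # hypothetical stand-in for the real model
data = torch.randn(8, 4)  # hypothetical stand-in for a batch

# Only call .cuda() when a CUDA device actually exists
if torch.cuda.is_available():
    model = model.cuda()  # moves parameters to the current GPU, like .to('cuda')
    data = data.cuda()    # returns a new tensor on the GPU, so reassign it
    # model.cuda(1) / data.cuda(1) would select GPU index 1 on a multi-GPU machine

output = model(data)
print(output.device)
```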

3. .cpu()

    model = model.cpu()
    data = data.cpu()
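A frequent reason to call `.cpu()` is converting results to NumPy, which only works on CPU tensors. The sketch below assumes hypothetical stand-ins for the model and batch; because the model output is still attached to the autograd graph, it is detached before conversion.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(4, 2).to(device)       # hypothetical stand-in model
data = torch.randn(8, 4, device=device)  # hypothetical stand-in batch

output = model(data)

# .numpy() requires a CPU tensor that does not require grad:
# detach it from the graph, then move it to the CPU
result = output.detach().cpu().numpy()

# A whole model is moved back to the CPU the same way
model = model.cpu()

print(type(result), result.shape)
```

If a tensor is already on the CPU, `.cpu()` returns it without copying, so the call is cheap to leave in.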

4. Checking the device

- Check whether the model's parameters are on CUDA to tell whether the model is on the GPU.

    print('Is model on gpu: ', next(model.parameters()).is_cuda)

If the output is True, the model is on the GPU; if it is False, the model is on the CPU.

- Print the `device` attribute of the data.

    print('data device: ', data.device)

If it prints `cuda` (for example `cuda:0`), the data is on the GPU; if it prints `cpu`, the data is on the CPU.
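Both checks can be combined into one small function that also answers the question this post started with: are the model and the data on the same device? This is a sketch: `check_placement` is a hypothetical helper name, and `nn.Linear` plus a random tensor stand in for a real model and batch. It assumes the model has at least one parameter and that all parameters share one device.

```python
import torch
import torch.nn as nn

def check_placement(model: nn.Module, data: torch.Tensor):
    """Hypothetical helper returning (model_on_gpu, data_device, same_device)."""
    # nn.Module has no .device attribute, so inspect its first parameter
    # (assumes the model has parameters and they all share one device)
    first_param = next(model.parameters())
    model_on_gpu = first_param.is_cuda  # the .is_cuda check described above
    same_device = first_param.device == data.device
    return model_on_gpu, data.device, same_device

model = nn.Linear(4, 2)   # stand-in model, created on the CPU
data = torch.randn(3, 4)  # stand-in batch, created on the CPU
print(check_placement(model, data))
```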

————————————————

https://blog.csdn.net/iLOVEJohnny/article/details/106021547

https://wenku.baidu.com/view/99bec6ef8aeb172ded630b1c59eef8c75fbf95ed.html


- Original article: https://blog.csdn.net/LemonShy2019/article/details/126156250