[Cloud-Native Kubernetes Series, Part 4] Learning KubeSphere (v3.1.1): Introduction to KubeSphere and Installation on Kubernetes

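Introduction to KubeSphere

Official site: https://kubesphere.com.cn/

First, here is how the official site describes it:

1. KubeSphere aims to build a cloud-native distributed operating system with Kubernetes as its kernel. Its architecture makes it easy for third-party applications to integrate with cloud-native ecosystem components in a plug-and-play fashion, and it supports unified distribution and operations management of cloud-native applications across multiple clouds and multiple clusters.

2. KubeSphere is a full-stack Kubernetes container cloud PaaS solution.

3. KubeSphere is an application-centric, multi-tenant container platform built on top of Kubernetes. It provides full-stack IT automation and operations capabilities and simplifies enterprise DevOps workflows. KubeSphere offers an operations-friendly, wizard-style interface that helps enterprises quickly build a powerful, feature-rich container cloud platform.

My own understanding:

1. KubeSphere is a container cloud platform, i.e. a PaaS platform, whereas **Kubernetes is a container orchestration system; the two are not the same thing**. In the DevOps era our technology stack revolves around Kubernetes, which is why KubeSphere is said to use Kubernetes as its kernel: just as a Linux distribution depends on the Linux kernel, KubeSphere depends on Kubernetes. That does not mean you must already have a Kubernetes cluster, though. KubeSphere can also be installed directly on Linux hosts (bare metal or virtual machines), in which case the installer sets up Kubernetes along with it.

2. Overall, KubeSphere is a platform for flexibly combining cloud-native applications. Other cloud-native applications can be used on it like plugins, for example Jenkins for DevOps, or Istio for service mesh. This is what "plug-and-play" refers to, and it is very convenient for operations staff.

3. KubeSphere can loosely be thought of as **a dashboard for Kubernetes**, but it goes well beyond dashboard functionality and implements many more complex features. I only have a rough understanding of KubeSphere myself.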

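Now let's start the installation.

I. KubeSphere Installation Steps

1.1 Installing KubeSphere (v3.1.1)

The best way to install KubeSphere is to follow the official documentation, which is also available in Chinese. Official site: https://kubesphere.com.cn/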

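1.2 Installation Environment

- Kubernetes: v1.20.9 (supported: 1.17.x, 1.18.x, 1.19.x, 1.20.x). Note: for KubeSphere 3.1.x, the Kubernetes version must not be newer than 1.20.x.

- Docker: 20.10.7

- Servers: Huawei Cloud ECS (Elastic Cloud Server) instances

k8s-master 4 vCPU + 8 GB

node1 8 vCPU + 16 GB

node2 8 vCPU + 16 GB

The sizing above assumes every plugin is enabled. In other words, if you turn on DevOps, Service Mesh, alerting, and all the other features, you need roughly this configuration. For a minimal KubeSphere installation, 2 vCPU + 2 GB per node should be enough.

- OS: CentOS 7.9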
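The version constraint above is easy to check in a script before you start. Below is a minimal sketch in POSIX shell. The function name `k8s_version_supported` is my own invention, and the 1.17 to 1.20 range is simply the one listed in this post for KubeSphere 3.1.x.

```shell
# Return 0 if a Kubernetes version such as "v1.20.9" falls in the
# 1.17.x - 1.20.x range listed above for KubeSphere 3.1.x.
# (Hypothetical helper, not part of kubectl or KubeSphere.)
k8s_version_supported() {
    ver=${1#v}            # strip a leading "v" if present
    major=${ver%%.*}      # text before the first dot
    rest=${ver#*.}        # text after the first dot
    minor=${rest%%.*}     # text between the first and second dot
    [ "$major" = "1" ] && [ "$minor" -ge 17 ] && [ "$minor" -le 20 ]
}
```

One possible way to use it is to feed in the kubelet version of the first node, for example `k8s_version_supported "$(kubectl get nodes -o jsonpath='{.items[0].status.nodeInfo.kubeletVersion}')" && echo "OK for KubeSphere 3.1.x"`.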

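Prerequisite:

A basic, working Kubernetes cluster.

Note: if you have not installed Kubernetes yet, see:

https://blog.csdn.net/weixin_59663288/article/details/125994307?spm=1001.2014.3001.5502

If you use v1.20.9, you can follow the documentation and image registry provided by the instructor 雷神 (Lei Shen):

https://www.yuque.com/leifengyang/oncloud/gz1sls

1.3 Installing and Configuring NFS Storage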

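According to the official documentation, the Kubernetes cluster must have a default StorageClass, i.e. default storage, before KubeSphere is installed. StorageClass goes hand in hand with PVs and PVCs: instead of creating PVs and PVCs by hand in the traditional way, storage is bound dynamically. For example, when I submit a PVC, a matching PV is bound to it automatically (and created automatically if none exists). All of this still needs a storage backend, so we use NFS here.

The master node serves as the NFS server.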

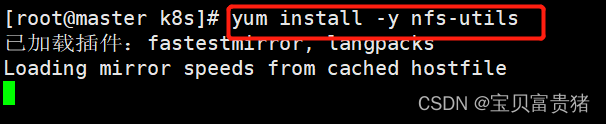

1. Install the NFS utilities (on all nodes)

    yum install -y nfs-utils

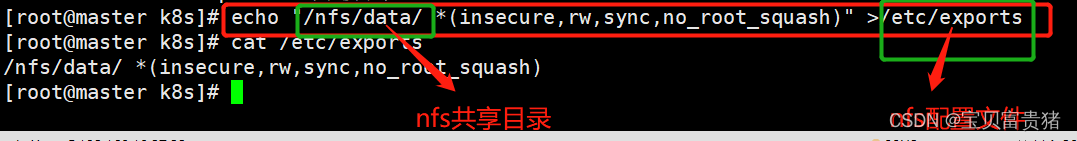

2. Export the storage directory (on the master)

    echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

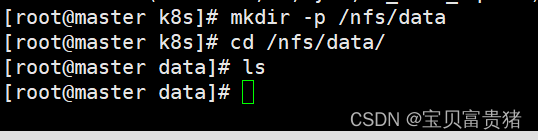

3. Create the shared directory (on the master); the NFS service is started in the next step

    mkdir -p /nfs/data

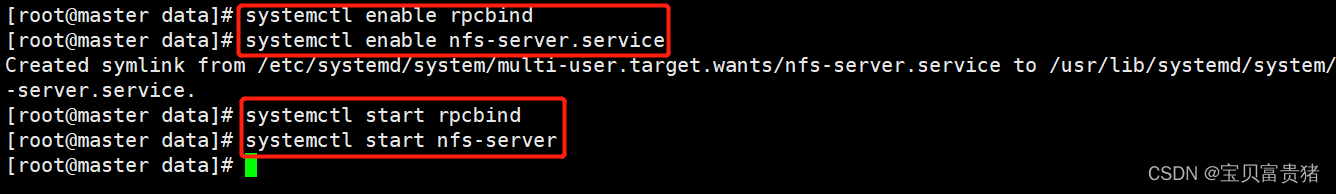

4. Enable and start the services (on the master)

    systemctl enable rpcbind
    systemctl enable nfs-server
    systemctl start rpcbind
    systemctl start nfs-server

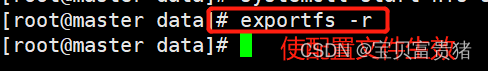

5. Apply the export configuration and check it

    # apply the configuration
    exportfs -r
    # check that it took effect
    [root@k8s-master ~]# exportfs
    /nfs/data       <world>

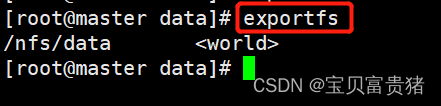

6. Test from a client node

    [root@node2 ~]# showmount -e 192.168.10.27
    Export list for 192.168.10.27:
    /nfs/data *
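If you want to run the client-side test on several nodes without reading the output by eye, a small filter is enough. This is only a sketch: `has_export` is a made-up helper name, and it assumes the `showmount -e` output format shown in step 6 (one header line, then one export per line).

```shell
# Read `showmount -e <server>` output on stdin and return 0 if the
# given export path is listed. (Hypothetical helper for illustration.)
has_export() {
    awk -v path="$1" '
        NR > 1 && $1 == path { found = 1 }   # skip the "Export list for ..." header
        END { exit !found }'
}
```

For example: `showmount -e 192.168.10.27 | has_export /nfs/data && echo "export visible"`.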

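1.4 Configuring Default Storage

1. As mentioned above, we use the StorageClass abstraction to create PVs dynamically, but a StorageClass needs a storage provisioner to do the actual work. A StorageClass allocates PVs through its provisioner, and the provisioners built into Kubernetes do not support NFS, so an NFS provisioner has to be installed separately. It runs as a Deployment, which means we need to create a Deployment.

2. The provisioner runs inside the Kubernetes cluster. To allocate space on NFS it has to talk to the API Server, which is an in-cluster Pod-to-API-Server interaction, so we also need a ServiceAccount. Then we create the StorageClass, followed by the ClusterRole, ClusterRoleBinding, Role, and RoleBinding that grant the account its permissions.

To sum up, configuring default storage takes these steps: create the StorageClass, create the ServiceAccount, create the Deployment, and create the ClusterRole, ClusterRoleBinding, Role, and RoleBinding permission resources.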

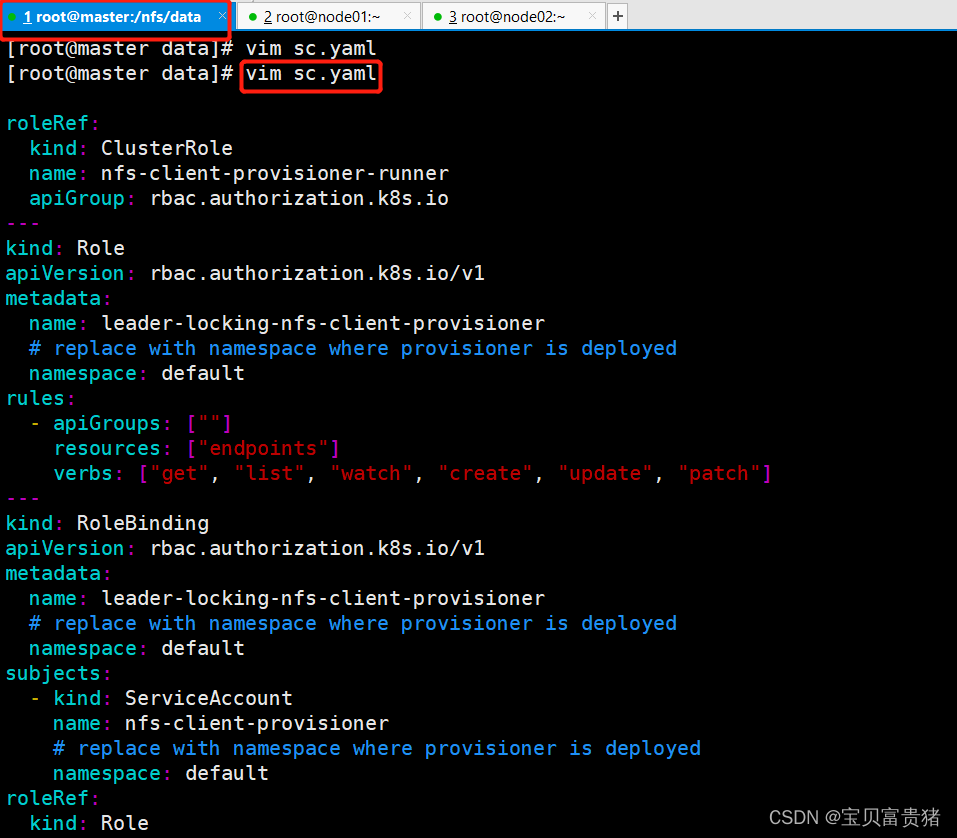

1.5 Create the following YAML file

    vim sc.yaml

Line-by-line notes are in the comments:

    ## Create a StorageClass
    apiVersion: storage.k8s.io/v1
    kind: StorageClass                  # resource kind
    metadata:
      name: nfs-storage                 # name of the StorageClass, your choice
      annotations:
        # Marks this class as the default. KubeSphere requires a default
        # StorageClass, so this annotation must be "true".
        storageclass.kubernetes.io/is-default-class: "true"
    provisioner: k8s-sigs.io/nfs-subdir-external-provisioner   # provisioner name, your choice; must match PROVISIONER_NAME below
    parameters:
      archiveOnDelete: "true"           # whether to archive a PV's contents when the PV is deleted
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    spec:
      replicas: 1                       # run a single replica
      strategy:                         # how old Pods are replaced by new ones
        type: Recreate                  # Recreate: remove the old Pod before creating the new one
      selector:                         # selects the Pods managed by this Deployment
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner   # ServiceAccount the Pod runs as (created below)
          containers:
            - name: nfs-client-provisioner
              image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2   # NFS provisioner image
              # resources:
              #    limits:
              #      cpu: 10m
              #    requests:
              #      cpu: 10m
              volumeMounts:
                - name: nfs-client-root            # the volume defined under "volumes" below
                  mountPath: /persistentvolumes    # mount path inside the container
              env:
                - name: PROVISIONER_NAME           # provisioner name
                  value: k8s-sigs.io/nfs-subdir-external-provisioner   # must match the StorageClass provisioner above
                - name: NFS_SERVER                 # NFS server address
                  value: 192.168.10.27             # change to your own NFS server IP
                - name: NFS_PATH                   # directory exported by the NFS server
                  value: /nfs/data
          volumes:
            - name: nfs-client-root                # volume name, must match volumeMounts above
              nfs:
                server: 192.168.10.27              # NFS server address, same as above; change to your own IP
                path: /nfs/data                    # exported NFS directory, same as above
    ---
    apiVersion: v1
    kind: ServiceAccount                # create the ServiceAccount
    metadata:
      name: nfs-client-provisioner      # must match serviceAccountName in the Deployment above
      # replace with namespace where provisioner is deployed
      namespace: default
    ---
    # The ClusterRole, ClusterRoleBinding, Role, and RoleBinding below are RBAC
    # permission settings. They are not explained here; just copy them as-is.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

In the file above you only need to change two places: replace both IP addresses with the IP address of your own NFS server.
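If you prefer not to edit the two addresses by hand, the substitution can be scripted. The sketch below assumes the file still contains the sample address 192.168.10.27 used in this post. `set_nfs_server` is a hypothetical helper name, and it writes through a temporary file rather than relying on `sed -i`, whose syntax differs between platforms.

```shell
# Replace every occurrence of an old NFS server IP in a manifest.
# Usage: set_nfs_server <file> <old-ip> <new-ip>
# (Hypothetical helper; a plain text substitution, not YAML-aware.)
set_nfs_server() {
    file=$1 old=$2 new=$3
    # Escape the dots so the old IP is matched literally by sed.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    sed "s/$old_re/$new/g" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

For example, `set_nfs_server sc.yaml 192.168.10.27 10.0.0.5` (where 10.0.0.5 stands in for your own server) updates both the `NFS_SERVER` value and the `nfs.server` field in one pass.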

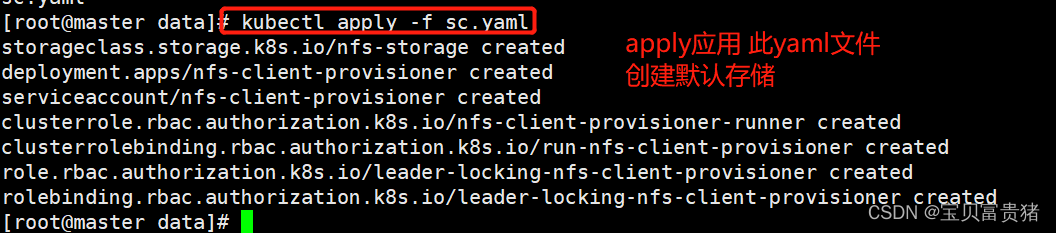

1.6 Apply the YAML file to create the default storage

    kubectl apply -f sc.yaml

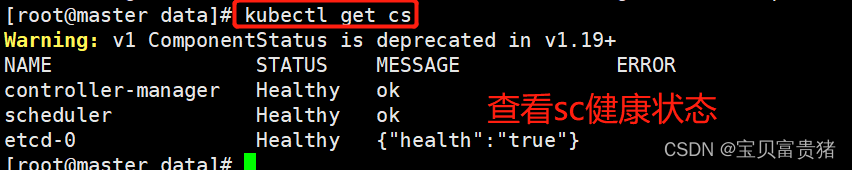

1.7 Check the StorageClass

    [root@master ~]# kubectl get sc
    NAME                    PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    nfs-storage (default)   k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           false                  10h

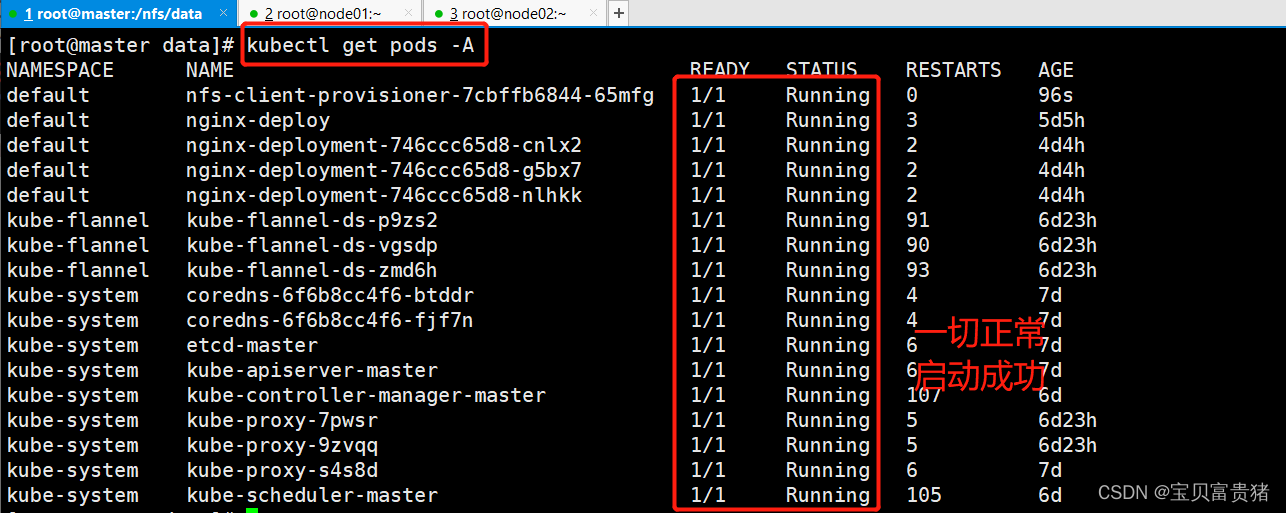
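Since KubeSphere needs a default StorageClass, it can be handy to verify the "(default)" marker in a script rather than by eye. A minimal sketch, assuming the `kubectl get sc` table layout shown above. `default_storage_class` is a made-up name.

```shell
# Read `kubectl get sc` output on stdin, print the name of the class
# marked "(default)", and return non-zero if there is none.
# (Hypothetical helper; relies on the plain table output format.)
default_storage_class() {
    awk '
        NR > 1 && $2 == "(default)" { print $1; found = 1 }   # NR > 1 skips the header
        END { exit !found }'
}
```

For example: `kubectl get sc | default_storage_class || echo "no default StorageClass yet"`.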

1.8 Check whether the Pod started normally

Wait about two minutes. If the Pod is still Running, everything is fine.

    [root@master ~]# kubectl get pods -A
    NAMESPACE   NAME                                      READY   STATUS    RESTARTS      AGE
    default     nfs-client-provisioner-65878c6456-hsxqf   1/1     Running   1 (35m ago)   10h

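1.9 Verifying the StorageClass

Note: as mentioned above, a StorageClass can create PVs dynamically. We have already created the StorageClass, so now, with no PVs in the cluster at all, we create a PVC and see whether it binds to a PV right away. If it does, a PV was created automatically and bound to it.

PV stands for persistent volume. It describes, or defines, a storage volume, and is usually defined by operations engineers.

PVC stands for persistent volume claim, a request for persistent storage. It describes what kind of PV is wanted, i.e. what conditions the PV must satisfy.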

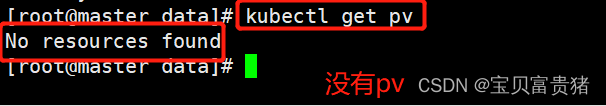

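In the PV/PVC model described above, operations staff create the PVs first, and developers then define PVCs that bind to them one to one. If there are thousands of PVC requests, thousands of PVs have to be created, which is far too much maintenance work for operations. Kubernetes therefore provides a mechanism for creating PVs automatically, called StorageClass, which acts as a template from which PVs are created.

1. First check whether any PV exists

    [root@master ~]# kubectl get pv
    No resources found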

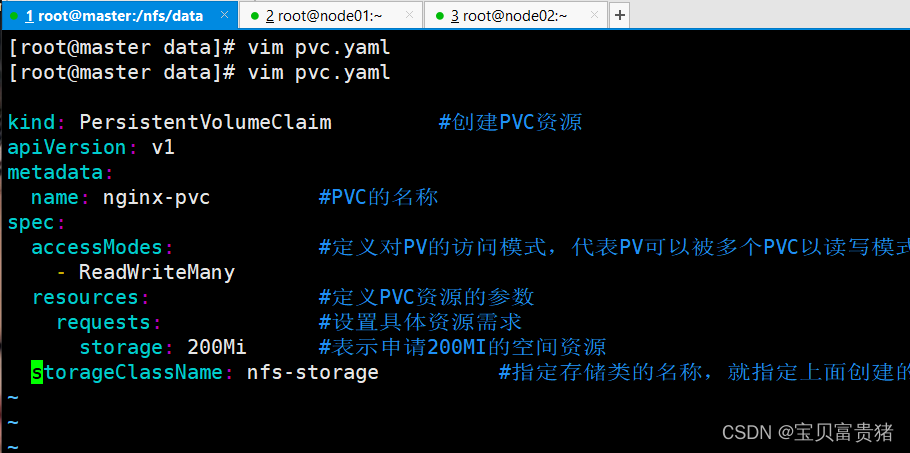

2. Create the PVC

    [root@master ~]# vim pvc.yaml
    kind: PersistentVolumeClaim     # create a PVC resource
    apiVersion: v1
    metadata:
      name: nginx-pvc               # name of the PVC
    spec:
      accessModes:                  # access mode: ReadWriteMany lets many nodes mount the volume read-write
        - ReadWriteMany
      resources:                    # resource parameters of the PVC
        requests:                   # the concrete resource request
          storage: 200Mi            # request 200Mi of space
      storageClassName: nfs-storage # name of the StorageClass, i.e. the one created above

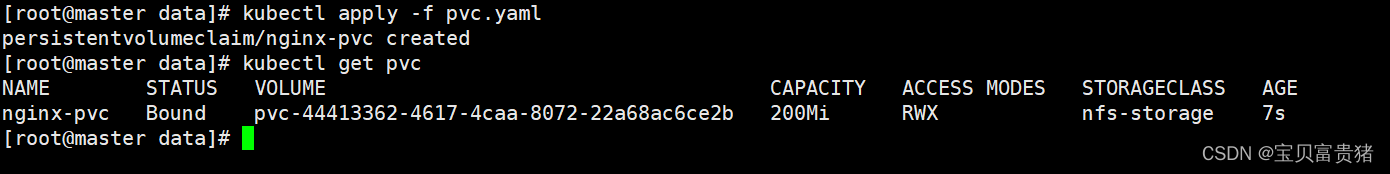

3. Apply the YAML file and check the PVC status

    [root@master ~]# kubectl apply -f pvc.yaml
    persistentvolumeclaim/nginx-pvc created
    [root@master data]# kubectl get pvc
    NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nginx-pvc   Bound    pvc-44413362-4617-4caa-8072-22a68ac6ce2b   200Mi      RWX            nfs-storage    7s
    # The PVC shows Bound, so binding succeeded.

Then check the PVs again

    # A PV has been created automatically, with 200Mi of space.
    [root@master data]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   REASON   AGE
    pvc-44413362-4617-4caa-8072-22a68ac6ce2b   200Mi      RWX            Delete           Bound    default/nginx-pvc   nfs-storage

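II. Installing Metrics-Server

About Metrics-Server: it is the cluster's metrics component. It collects resource metrics from the kubelet on each node and exposes them through the API Server. With it we can see the CPU and memory usage of Pods, Nodes, and other resources.

Why do we need it?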

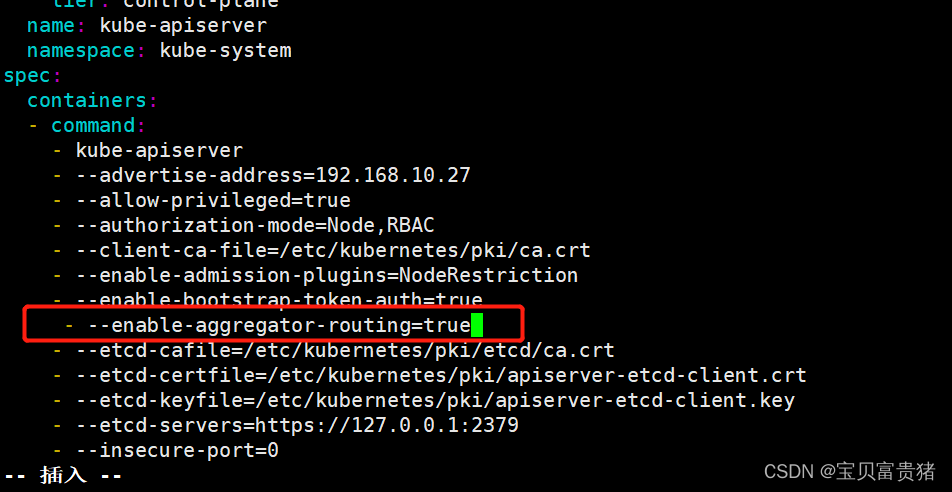

As mentioned at the beginning, KubeSphere can act as a dashboard (visual console) for Kubernetes. To show the cluster's data, KubeSphere needs some component to supply that data, and the collection is done by Metrics-Server.

2.1 Edit kube-apiserver.yaml on every API Server to enable Aggregator Routing

    [root@master ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.10.27:6443
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --advertise-address=192.168.10.27
        - --allow-privileged=true
        - --authorization-mode=Node,RBAC
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --enable-admission-plugins=NodeRestriction
        - --enable-bootstrap-token-auth=true
        - --enable-aggregator-routing=true     # add this line to enable Aggregator Routing
        - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    ...

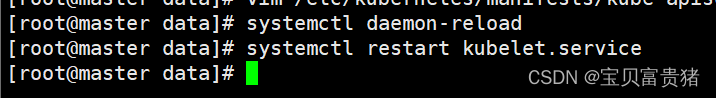

Restart kubelet

    systemctl daemon-reload
    systemctl restart kubelet

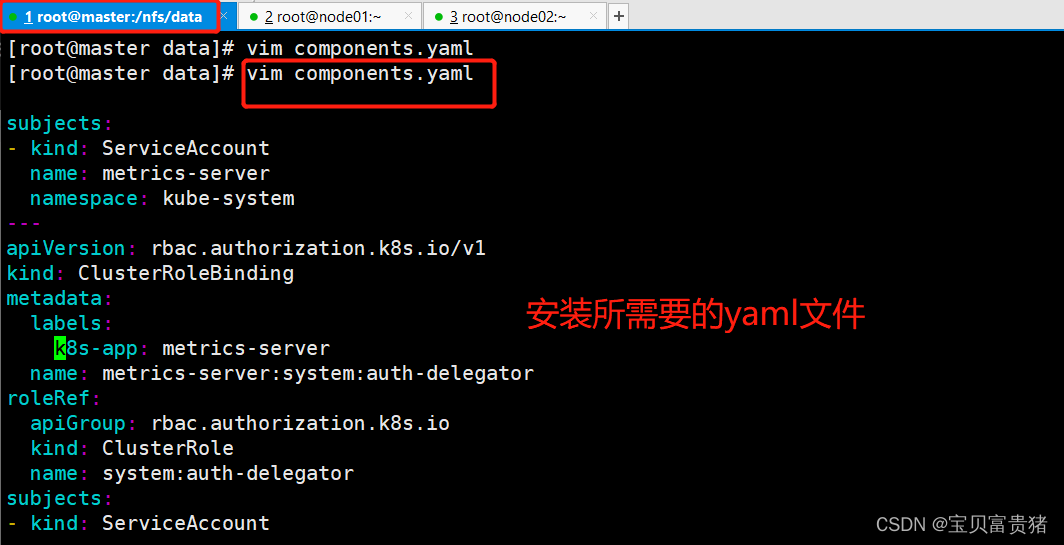
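With several control-plane nodes it is easy to miss one manifest. The sketch below checks a manifest file for the flag added in 2.1. `has_aggregator_routing` is a hypothetical helper name, and the check is a plain text match on an uncommented list entry, not a YAML parse.

```shell
# Return 0 if the given kube-apiserver manifest contains an active
# "- --enable-aggregator-routing=true" entry. (Hypothetical helper.)
has_aggregator_routing() {
    grep -Eq '^[[:space:]]*-[[:space:]]+--enable-aggregator-routing=true' "$1"
}
```

For example: `has_aggregator_routing /etc/kubernetes/manifests/kube-apiserver.yaml && echo "aggregator routing enabled"`.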

2.2 Create the required YAML file

    vim components.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-view: "true"
      name: system:aggregated-metrics-reader
    rules:
    - apiGroups:
      - metrics.k8s.io
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      ports:
      - name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        k8s-app: metrics-server
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      strategy:
        rollingUpdate:
          maxUnavailable: 0
      template:
        metadata:
          labels:
            k8s-app: metrics-server
        spec:
          containers:
          - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP   # ExternalIP and Hostname removed; already edited here
            - --kubelet-use-node-status-port
            - --kubelet-insecure-tls                         # add this startup flag
            image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.4.1
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /livez
                port: https
                scheme: HTTPS
              periodSeconds: 10
            name: metrics-server
            ports:
            - containerPort: 4443
              name: https
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /readyz
                port: https
                scheme: HTTPS
              periodSeconds: 10
            securityContext:
              readOnlyRootFilesystem: true
              runAsNonRoot: true
              runAsUser: 1000
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
          nodeSelector:
            kubernetes.io/os: linux
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          volumes:
          - emptyDir: {}
            name: tmp-dir
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      labels:
        k8s-app: metrics-server
      name: v1beta1.metrics.k8s.io
    spec:
      group: metrics.k8s.io
      groupPriorityMinimum: 100
      insecureSkipTLSVerify: true
      service:
        name: metrics-server
        namespace: kube-system
      version: v1beta1
      versionPriority: 100

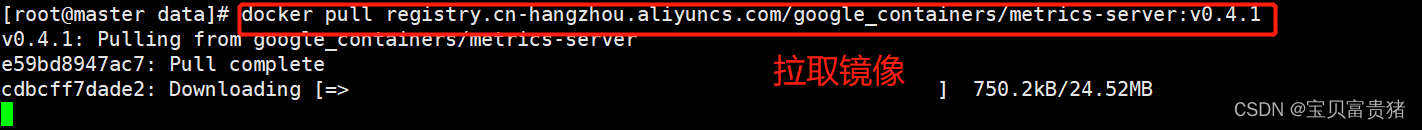

2.3 Pull the image

    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.4.1

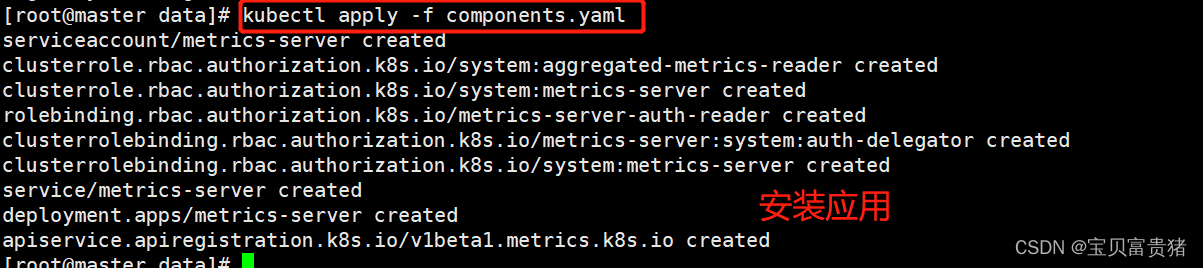

2.4 Install

    kubectl apply -f components.yaml

2.5 Check the Metrics Server status

    [root@master ~]# kubectl get pods -n kube-system
    ...
    metrics-server-7d594964f5-5xzwd   1/1   Running   0   4h2m
    ...

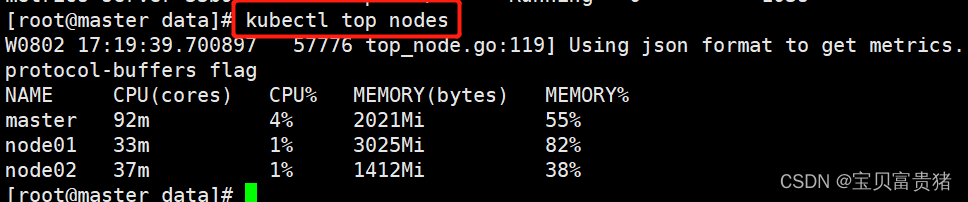

2.6 Run the following command to check node resource usage.

    [root@master data]# kubectl top nodes
    W0802 17:19:39.700897   57776 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
    NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    master   92m          4%     2021Mi          55%
    node01   33m          1%     3025Mi          82%
    node02   37m          1%     1412Mi          38%
    # This shows Metrics-Server is working.
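Because the full KubeSphere install is memory hungry, it may be worth flagging nodes that are already short on memory before going on. This is a rough sketch that filters the `kubectl top nodes` table. `high_memory_nodes` is a made-up name and the 80% default threshold is an arbitrary assumption of mine, not a KubeSphere requirement.

```shell
# Read `kubectl top nodes` output on stdin and print the names of nodes
# whose MEMORY% is at or above a threshold (default 80, an arbitrary choice).
# (Hypothetical helper; assumes the five-column table shown above.)
high_memory_nodes() {
    awk -v limit="${1:-80}" '
        $1 == "NAME" { next }              # skip the header row
        NF == 5 {                          # NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
            pct = $5
            sub(/%$/, "", pct)             # drop the trailing percent sign
            if (pct + 0 >= limit + 0) print $1
        }'
}
```

For example, `kubectl top nodes | high_memory_nodes 80` would list `node01` for the output above, since it is the only node at or over 80%.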

III. Installing KubeSphere

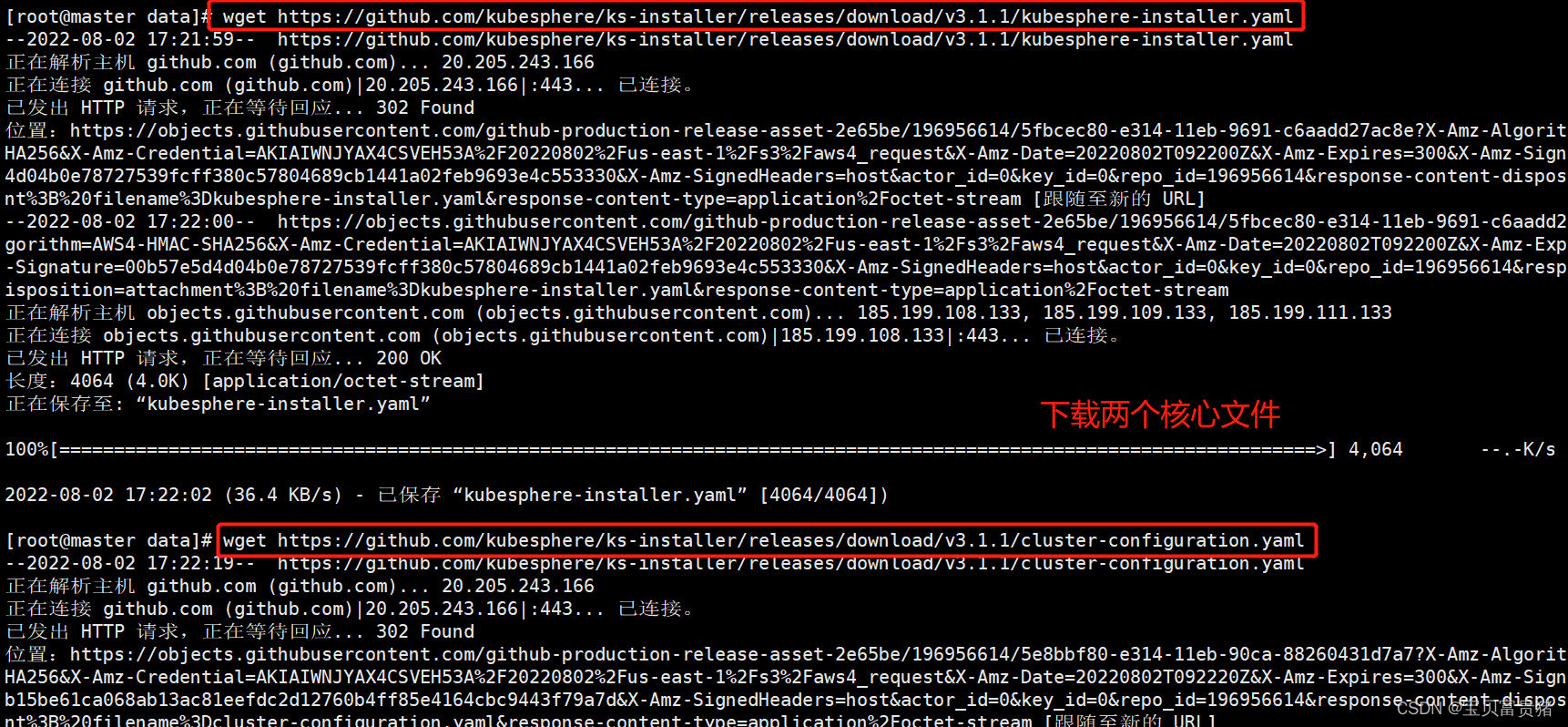

1. Download the core files

    wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml
    wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml

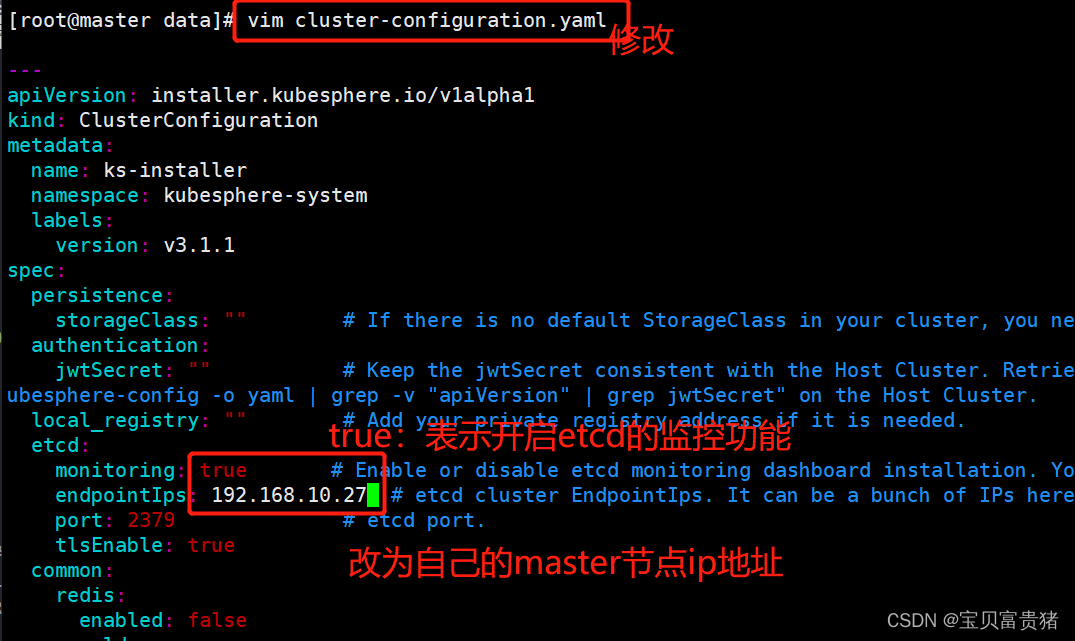

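2. Edit the cluster-configuration file

Explanation: starting with version 2.1.0, KubeSphere decoupled some of its core feature components. These components are designed to be pluggable, and you can enable them before or after installation. If you do not enable them, KubeSphere is deployed as a minimal installation by default. Different pluggable components are deployed in different namespaces.

The above is the official explanation of why cluster-configuration may need to be edited.

In practice, editing cluster-configuration just means enabling extra KubeSphere plugins, for example the DevOps plugins, etcd monitoring, alerting, and so on. We specify the features we want to enable in cluster-configuration.yaml.

See "Enable Pluggable Components" on the official site:

https://kubesphere.com.cn/docs/pluggable-components/overview/

The changes are as follows: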

We mainly change the fields under spec, switching "false" to "true" wherever it appears, with a few exceptions that are noted in the comments below:

    vim cluster-configuration.yaml
    [root@master ~]# cat cluster-configuration.yaml
    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.1.1
    spec:
      persistence:
        storageClass: ""        # keep the default, since we already have a default StorageClass
      authentication:
        jwtSecret: ""           # Keep the jwtSecret consistent with the Host Cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.
      local_registry: ""        # Add your private registry address if it is needed.
      etcd:
        monitoring: true        # changed to true: enable etcd monitoring
        endpointIps: 192.168.10.27  # change to the IP address of your own master node
        port: 2379              # etcd port.
        tlsEnable: true
      common:
        redis:
          enabled: true         # changed to true: enable Redis
        openldap:
          enabled: true         # changed to true: enable OpenLDAP (Lightweight Directory Access Protocol)
        minioVolumeSize: 20Gi   # Minio PVC size.
        openldapVolumeSize: 2Gi # openldap PVC size.
        redisVolumSize: 2Gi     # Redis PVC size.
        monitoring:
          # type: external      # Whether to specify the external prometheus stack, and need to modify the endpoint at the next line.
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090 # Prometheus endpoint to get metrics data.
        es:   # Storage backend for logging, events and auditing.
          # elasticsearchMasterReplicas: 1   # The total number of master nodes. Even numbers are not allowed.
          # elasticsearchDataReplicas: 1     # The total number of data nodes.
          elasticsearchMasterVolumeSize: 4Gi   # The volume size of Elasticsearch master nodes.
          elasticsearchDataVolumeSize: 20Gi    # The volume size of Elasticsearch data nodes.
          logMaxAge: 7                     # Log retention time in built-in Elasticsearch. It is 7 days by default.
          elkPrefix: logstash              # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
          basicAuth:
            enabled: false      # leave as false: this controls whether a username and password are used to connect to Elasticsearch; not needed here
            username: ""
            password: ""
          externalElasticsearchUrl: ""
          externalElasticsearchPort: ""
      console:
        enableMultiLogin: true  # Enable or disable simultaneous logins. It allows different users to log in with the same account at the same time.
        port: 30880
      alerting:                 # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
        enabled: true           # changed to true: enable alerting
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      auditing:
        enabled: true           # changed to true: enable auditing
      devops:                   # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
        enabled: true           # changed to true: enable DevOps
        jenkinsMemoryLim: 2Gi      # Jenkins memory limit.
        jenkinsMemoryReq: 1500Mi   # Jenkins memory request.
        jenkinsVolumeSize: 8Gi     # Jenkins volume size.
        jenkinsJavaOpts_Xms: 512m  # The following three fields are JVM parameters.
        jenkinsJavaOpts_Xmx: 512m
        jenkinsJavaOpts_MaxRAM: 2g
      events:                   # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
        enabled: true           # changed to true: enable cluster events
        ruler:
          enabled: true
          replicas: 2
      logging:                  # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
        enabled: true           # changed to true: enable logging
        logsidecar:
          enabled: true
          replicas: 2
      metrics_server:           # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).
        enabled: false          # leave unchanged: we already installed it above; if enabled here, the official image is used and the pull fails
      monitoring:
        storageClass: ""
        # prometheusReplicas: 1          # Prometheus replicas are responsible for monitoring different segments of data source and providing high availability.
        prometheusMemoryRequest: 400Mi   # Prometheus request memory.
        prometheusVolumeSize: 20Gi       # Prometheus PVC size.
        # alertmanagerReplicas: 1          # AlertManager Replicas.
      multicluster:
        clusterRole: none  # host | member | none  # You can install a solo cluster, or specify it as the Host or Member Cluster.
      network:
        networkpolicy: # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
          # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
          enabled: true         # changed to true: enable network policies
        ippool: # Use Pod IP Pools to manage the Pod network address space. Pods to be created can be assigned IP addresses from a Pod IP Pool.
          type: none            # if your network plugin is Calico, change this to "calico"; I use Flannel, so I keep the default
        topology: # Use Service Topology to view Service-to-Service communication based on Weave Scope.
          type: none # Specify "weave-scope" for this field to enable Service Topology. "none" means that Service Topology is disabled.
      openpitrix: # An App Store that is accessible to all platform tenants. You can use it to manage apps across their entire lifecycle.
        store:
          enabled: true         # changed to true: enable the App Store
      servicemesh:         # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.
        enabled: true           # changed to true: enable service mesh (microservice governance)
      kubeedge:          # Add edge nodes to your cluster and deploy workloads on edge nodes.
        enabled: false          # left unchanged: this is the edge computing service, and we have no edge devices
        cloudCore:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          cloudhubPort: "10000"
          cloudhubQuicPort: "10001"
          cloudhubHttpsPort: "10002"
          cloudstreamPort: "10003"
          tunnelPort: "10004"
          cloudHub:
            advertiseAddress: # At least a public IP address or an IP address which can be accessed by edge nodes must be provided.
              - ""            # Note that once KubeEdge is enabled, CloudCore will malfunction if the address is not provided.
            nodeLimit: "100"
          service:
            cloudhubNodePort: "30000"
            cloudhubQuicNodePort: "30001"
            cloudhubHttpsNodePort: "30002"
            cloudstreamNodePort: "30003"
            tunnelNodePort: "30004"
        edgeWatcher:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          edgeWatcherAgent:
            nodeSelector: {"node-role.kubernetes.io/worker": ""}
            tolerations: []

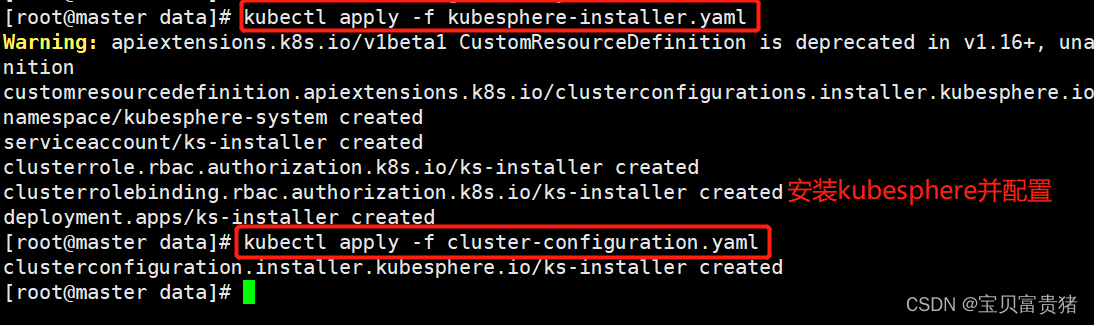
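Flipping the switches by hand works fine, but if you repeat this installation often, the edit can be scripted. The sketch below is only an illustration: `enable_component` is a hypothetical helper, and it is a plain text substitution that assumes the layout of the v3.1.1 cluster-configuration.yaml (components indented two spaces under spec, with their own `enabled:` field as the first `enabled:` line that follows). A YAML-aware tool would be more robust.

```shell
# Switch "enabled: false" to "enabled: true" for one component under spec.
# Usage: enable_component <file> <component>   e.g. enable_component cluster-configuration.yaml devops
# (Hypothetical helper; text-based, not YAML-aware.)
enable_component() {
    file=$1 name=$2
    # Apply the substitution only between the "  <name>:" line and the
    # first "enabled:" line after it.
    sed "/^  $name:/,/enabled:/ s/enabled: false/enabled: true/" "$file" > "$file.tmp" &&
        mv "$file.tmp" "$file"
}
```

One caveat: for a block with no `enabled:` field of its own, such as `etcd` (which uses `monitoring:` instead), the range would run on into the next component, so only use it for components like `alerting`, `auditing`, `devops`, `events`, `logging`, `openpitrix`, or `servicemesh`.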

3. Install and configure KubeSphere (mind the order of the commands)

    kubectl apply -f kubesphere-installer.yaml
    kubectl apply -f cluster-configuration.yaml

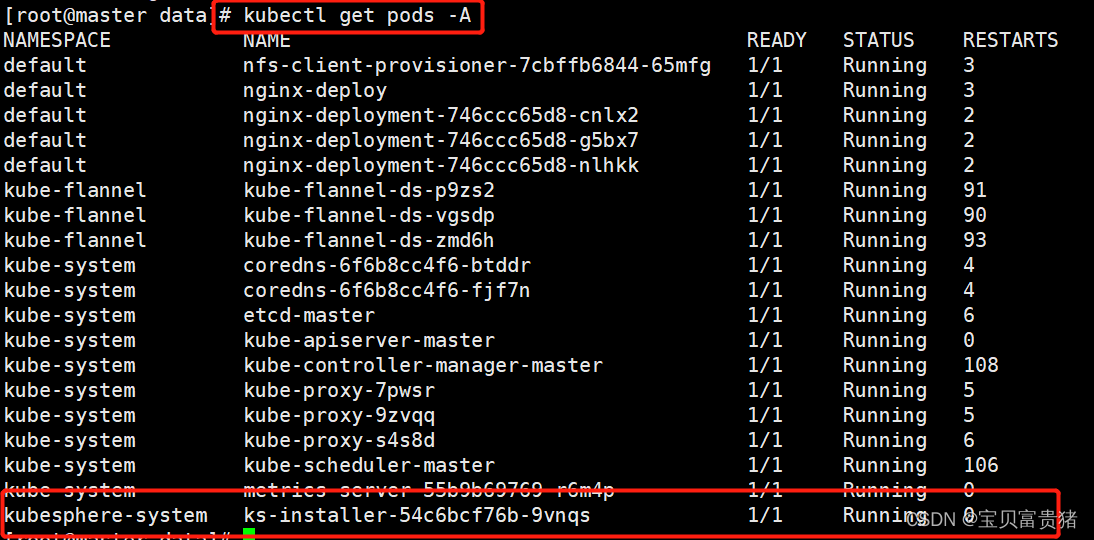

4. Check the KubeSphere status

    [root@master ~]# kubectl get pods -A
    ...
    kubesphere-system   ks-installer-54c6bcf76b-br9vq   1/1   Running   0   41m
    ...

5. Check the installation log:

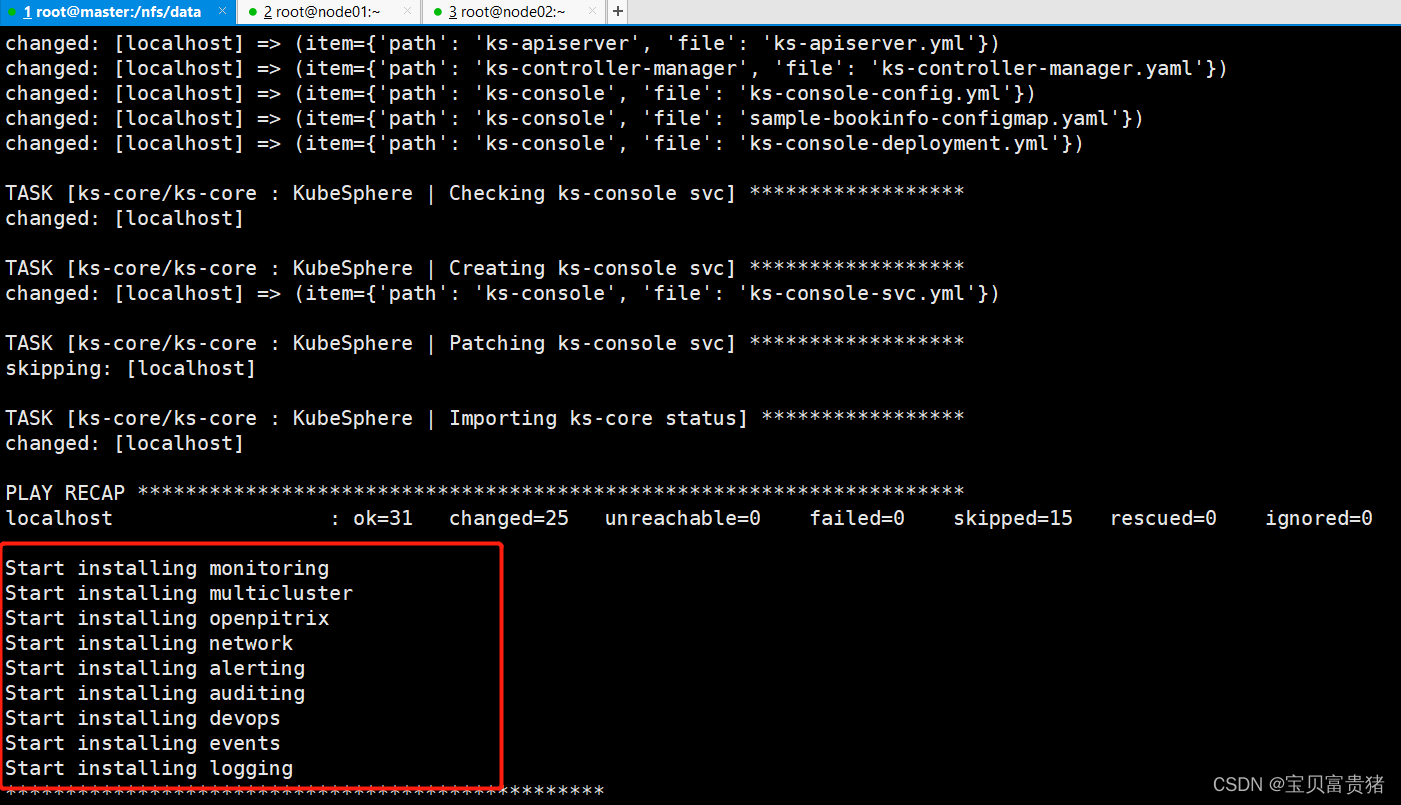

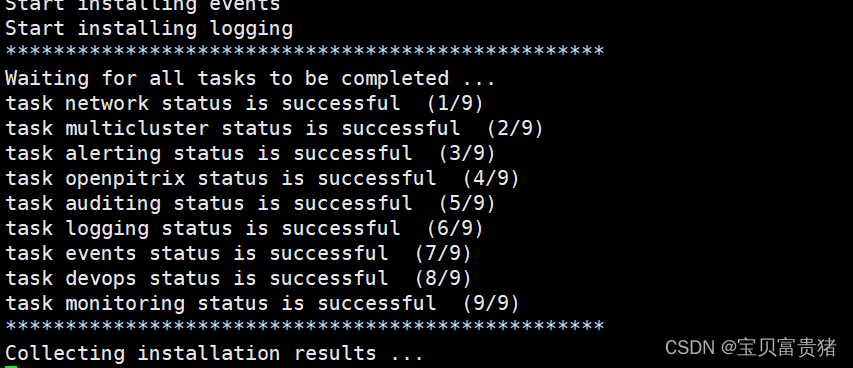

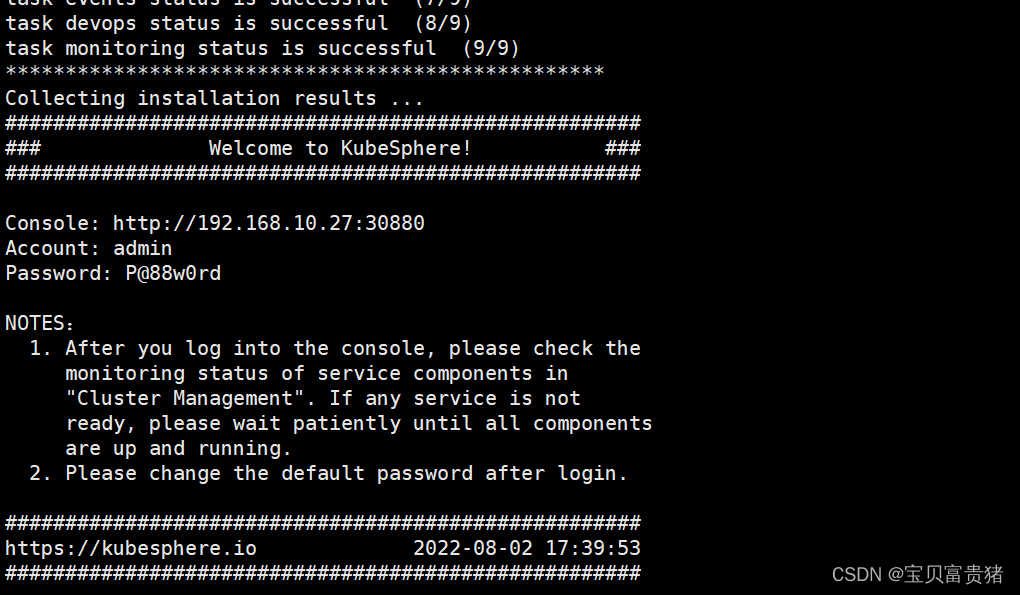

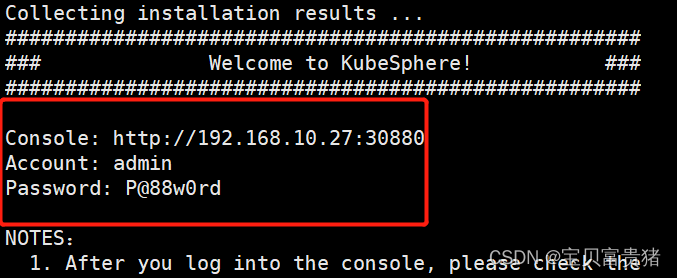

Use the following command to view the KubeSphere installation log:

    [root@k8s-master ~]# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
    # Output:
    PLAY RECAP *********************************************************************
    localhost                  : ok=31   changed=25   unreachable=0    failed=0    skipped=15   rescued=0    ignored=0
    # Check that failed=0.
    # Then wait, roughly 20 minutes.
    Start installing monitoring
    Start installing multicluster
    Start installing openpitrix
    Start installing network
    Start installing alerting
    Start installing auditing
    Start installing devops
    Start installing events
    Start installing kubeedge
    Start installing logging
    Start installing servicemesh
    **************************************************
    Waiting for all tasks to be completed ...
    task multicluster status is successful  (1/11)
    task network status is successful  (2/11)
    task alerting status is successful  (3/11)
    task openpitrix status is successful  (4/11)
    task auditing status is successful  (5/11)
    task logging status is successful  (6/11)
    task events status is successful  (7/11)
    task kubeedge status is successful  (8/11)
    task devops status is successful  (9/11)
    task monitoring status is successful  (10/11)
    task servicemesh status is successful  (11/11)
    **************************************************
    Collecting installation results ...
    #####################################################
    ###              Welcome to KubeSphere!           ###
    #####################################################
    Console: http://192.168.0.206:30880
    Account: admin
    Password: P@88w0rd
    NOTES:
      1. After you log into the console, please check the
         monitoring status of service components in
         "Cluster Management". If any service is not
         ready, please wait patiently until all components
         are up and running.
      2. Please change the default password after login.
    #####################################################
    https://kubesphere.io             2022-04-08 17:14:52
    #####################################################
    # Output like the above means KubeSphere was installed successfully.
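The `failed=0` check is the part worth automating if you run the installer unattended. A minimal sketch, assuming the Ansible-style `PLAY RECAP` line format shown above. `recap_ok` is a made-up name.

```shell
# Read installer log text on stdin. Return 0 only if at least one recap
# line was seen and every such line reports failed=0.
# (Hypothetical helper; matches on the "failed=<n>" field text.)
recap_ok() {
    awk '
        /failed=/ { seen = 1; if ($0 !~ /failed=0([^0-9]|$)/) bad = 1 }
        END { exit !(seen && !bad) }'
}
```

To use it, save or pipe the log without `-f` so the command terminates, for example by running the `kubectl logs` command above without the trailing `-f` and piping it into `recap_ok && echo "no failed tasks"`.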

3.1 Fixing the "Secret not found" problem when Prometheus monitors etcd

1. After KubeSphere is installed, checking the related Pods shows two Prometheus (monitoring) Pods stuck in ContainerCreating. We need to troubleshoot this, starting with describe.

    [root@k8s-master ~]# kubectl get pods -A
    kubesphere-monitoring-system   prometheus-k8s-0   0/3   ContainerCreating   0   9m39s
    kubesphere-monitoring-system   prometheus-k8s-1   0/3   ContainerCreating   0   9m39s

2. Use describe to find the cause

    [root@k8s-master ~]# kubectl describe pods -n kubesphere-monitoring-system prometheus-k8s-0
    Warning  FailedMount  8m6s                   kubelet  Unable to attach or mount volumes: unmounted volumes=[secret-kube-etcd-client-certs], unattached volumes=[prometheus-k8s-db prometheus-k8s-rulefiles-0 secret-kube-etcd-client-certs prometheus-k8s-token-nzqs8 config config-out tls-assets]: timed out waiting for the condition
    Warning  FailedMount  3m34s (x2 over 5m51s)  kubelet  Unable to attach or mount volumes: unmounted volumes=[secret-kube-etcd-client-certs], unattached volumes=[config config-out tls-assets prometheus-k8s-db prometheus-k8s-rulefiles-0 secret-kube-etcd-client-certs prometheus-k8s-token-nzqs8]: timed out waiting for the condition
    Warning  FailedMount  115s (x12 over 10m)    kubelet  MountVolume.SetUp failed for volume "secret-kube-etcd-client-certs" : secret "kube-etcd-client-certs" not found
    Warning  FailedMount  80s                    kubelet  Unable to attach or mount volumes: unmounted volumes=[secret-kube-etcd-client-certs], unattached volumes=[tls-assets prometheus-k8s-db prometheus-k8s-rulefiles-0 secret-kube-etcd-client-certs prometheus-k8s-token-nzqs8 config config-out]: timed out waiting for the condition

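Explanation: we enabled etcd monitoring in cluster-configuration.yaml, but Prometheus cannot get etcd's certificates because the Secret that should hold them (kube-etcd-client-certs) does not exist. etcd is the core of the whole Kubernetes cluster and stores its critical data, so monitoring it requires its client certificates.

So we create the Secret and put the certificates in it:

    [root@k8s-master ~]# kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \
        --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt \
        --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt \
        --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key

Wait about 5 minutes and check again; the Pods should be Running.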
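The Secret creation fails if any of the three files is missing (for example on a cluster that was not set up with kubeadm, where the paths may differ). A small pre-check, written as a sketch: `etcd_certs_present` is a hypothetical helper, and the default directory is the kubeadm path used in the command above.

```shell
# Return 0 only if the three etcd client certificate files used above
# are readable under the given PKI directory (default: /etc/kubernetes/pki).
# (Hypothetical helper for illustration.)
etcd_certs_present() {
    pki=${1:-/etc/kubernetes/pki}
    for f in etcd/ca.crt apiserver-etcd-client.crt apiserver-etcd-client.key; do
        [ -r "$pki/$f" ] || { echo "missing: $pki/$f" >&2; return 1; }
    done
}
```

For example, run `etcd_certs_present && echo "certificates found"` on the master node before creating the Secret.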

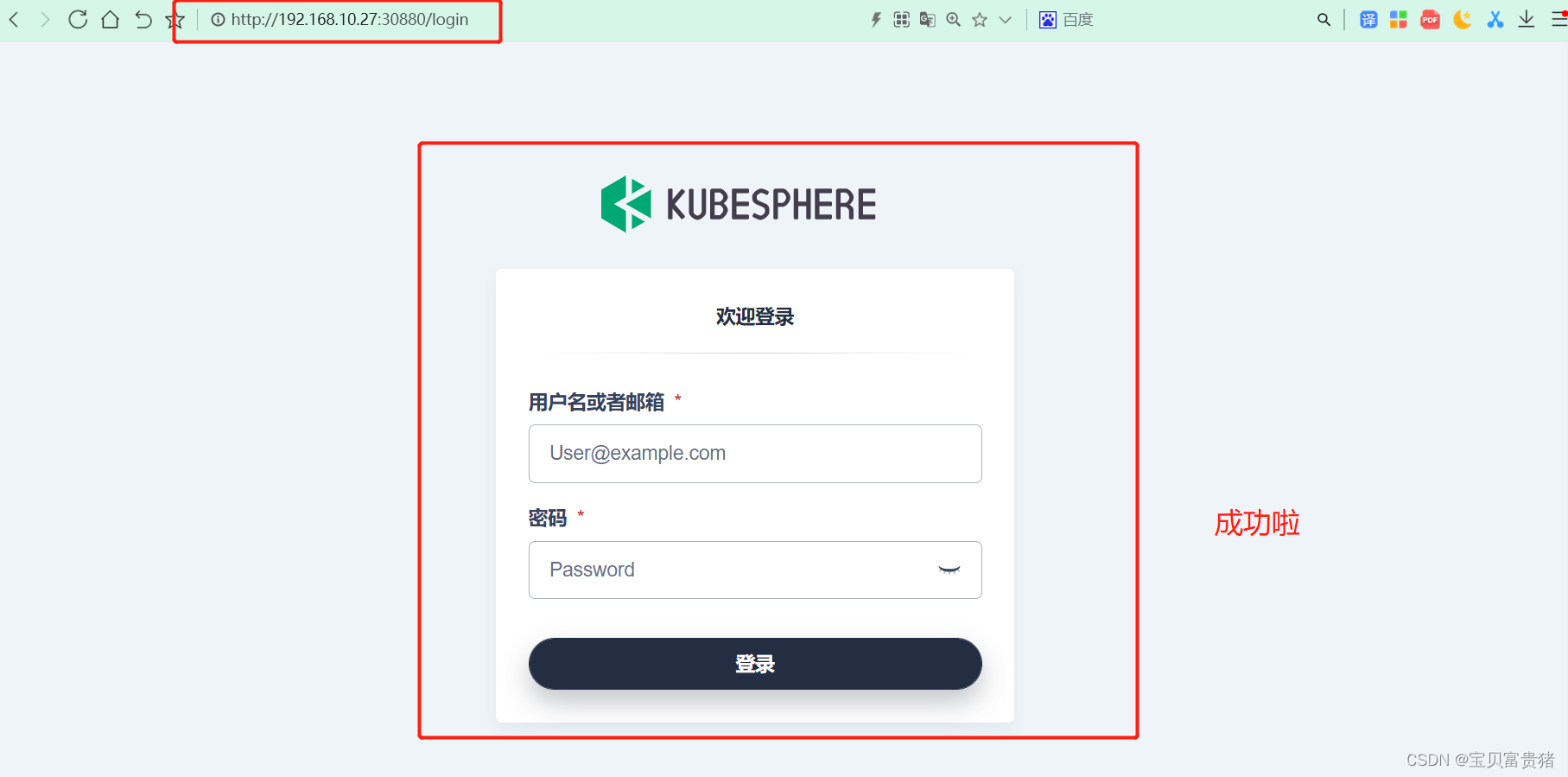

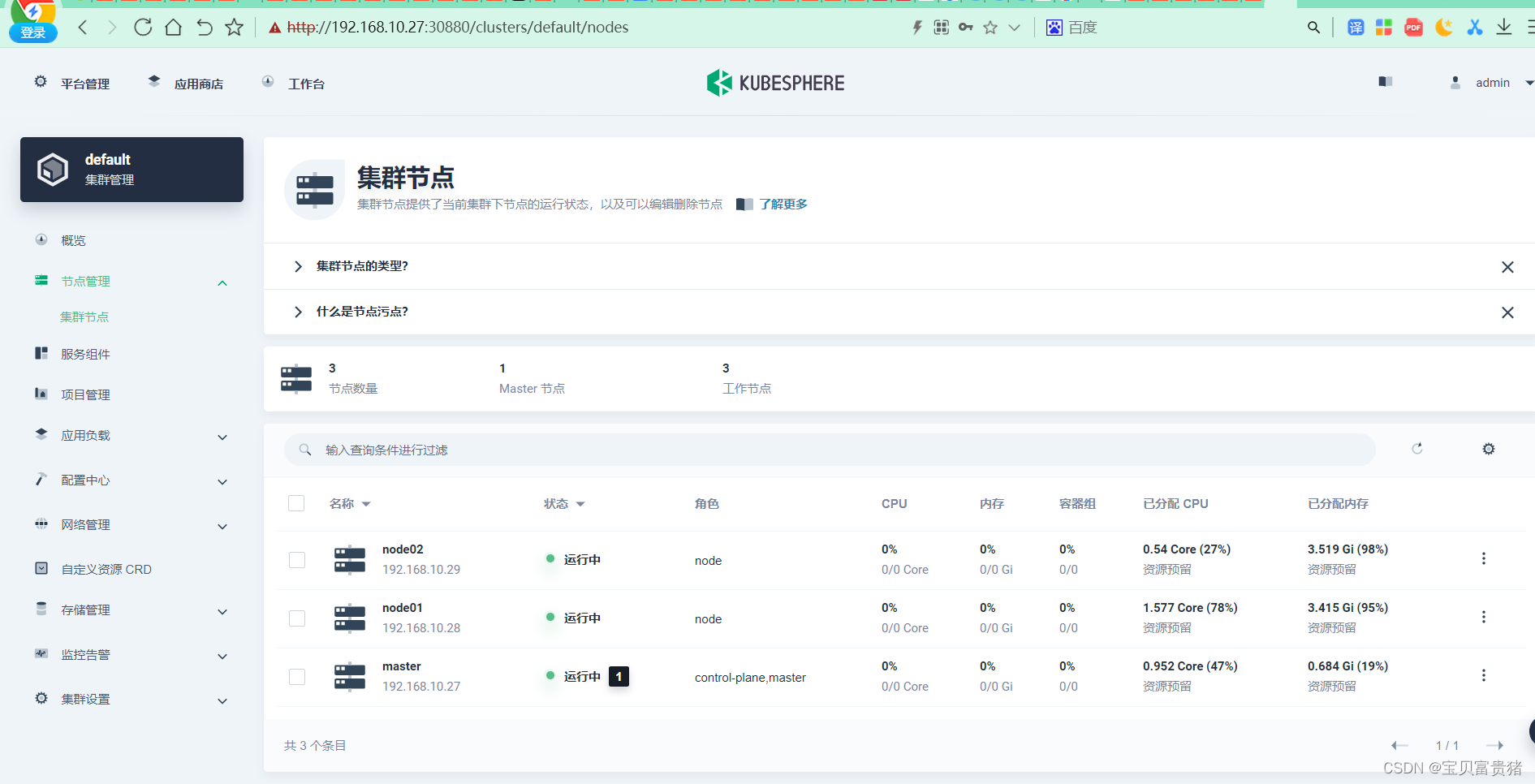

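3.2 Accessing KubeSphere

Once all the KubeSphere components are Running, we can access the console (KubeSphere listens on port 30880 by default; on a public cloud, make sure the security group opens port 30880): IP:30880

User: admin

Initial password: P@88w0rd

Change the password

Enter the KubeSphere main page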


- Original article: https://blog.csdn.net/weixin_59663288/article/details/126106196