
集成学习、boosting、bagging、Adaboost、GBDT、随机森林

集成学习算法简介

什么是集成学习

- 集成学习通过建立几个模型来解决单一预测问题。它的工作原理是生成多个分类器/模型,各自独立地学习和作出预测。这些预测最后结合成组合预测,因此优于任何一个单分类的做出预测。

机器学习的两个核心任务

- 如何优化训练任务 → 欠拟合问题

- 如何提升泛化性能 → 过拟合问题

集成学习中Boosting(串行)和Bagging(并行)

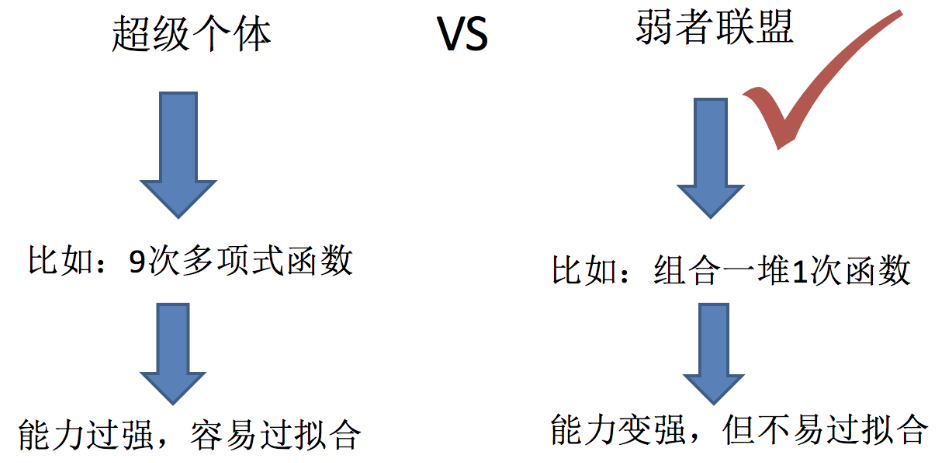

- 只要单分类器的表现不太差,集成学习的结果总是要好于单分类器的
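To make the claim above concrete, here is a minimal sketch that computes the accuracy of a majority vote. It assumes, purely for illustration, that the classifiers make errors independently and that each one is correct with the same probability `p`. Real classifiers trained on the same data are correlated, so the gain in practice is smaller. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(n_classifiers: int, p: float) -> float:
    """Probability that a strict majority of n independent classifiers is correct.

    Assumes each classifier is correct with probability p, independently of the others.
    """
    k_min = n_classifiers // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_classifiers, k) * p**k * (1 - p) ** (n_classifiers - k)
        for k in range(k_min, n_classifiers + 1)
    )


# With p = 0.6 the vote improves as classifiers are added;
# with p = 0.4 (worse than chance) the vote gets worse instead.
for n in (1, 5, 25, 101):
    print(n, round(majority_vote_accuracy(n, 0.6), 4), round(majority_vote_accuracy(n, 0.4), 4))
```

Under these assumptions, the vote only helps when `p` is above 0.5, which is why the individual classifiers must not be "too bad".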

Boosting

Boosting原理

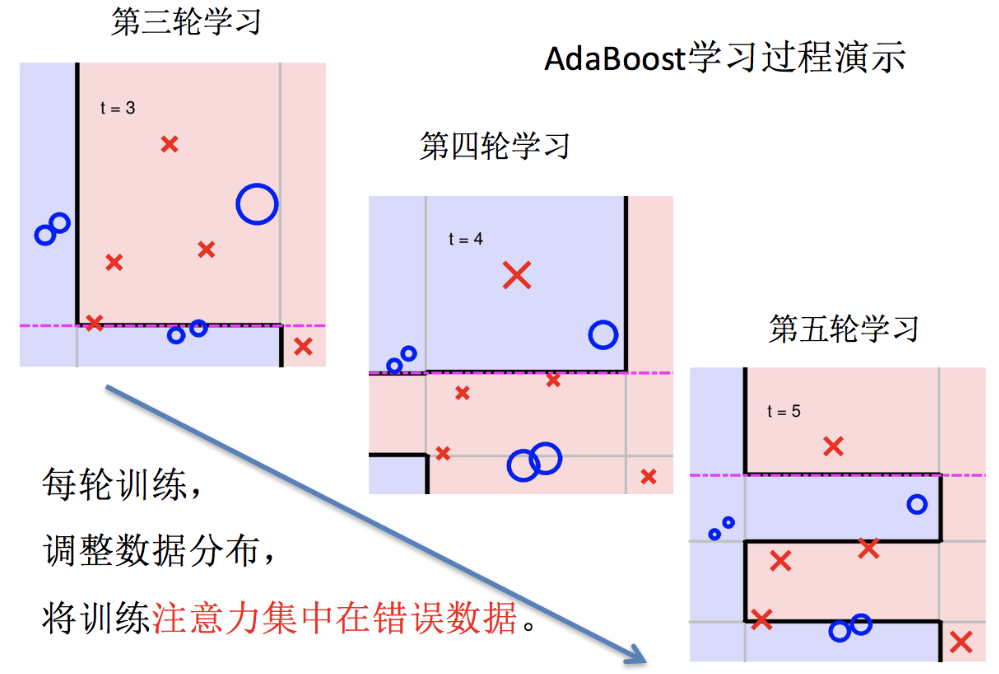

- Boosting 方法训练基分类器时采用串行的方式,各个基分类器之间有依赖

- 它的基本思路是将基分类器层层叠加,每一层在训练的时候,对前一层基分类器分错的样本, 给予更高的权重。测试时,根据各层分类器的结果的加权得到最终结果

Boosting 的过程很类似于人类学习的过程,我们学习新知识的过程往往是迭代式的,第一遍学习的时候,我们会记住一部分知识,但往往也会犯一些错误,对于这些错误,我们的印象会很深。第二遍学习的时候,就会针对犯过错误的知识加强学习,以减少类似的错误发生。不断循环往复,直到犯错误的次数减少到很低的程度

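AdaBoost

AdaBoost Implementation Process

- Train the first learner

- Adjust the data distribution (the sample weights)

- Train the second learner

- Adjust the data distribution again

- Keep training learners and adjusting the data distribution in turn

- Overall implementation process

Key points:

How are the voting weights determined?

How is the data distribution adjusted?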
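The source does not spell out the answers to these two questions. In the standard binary AdaBoost formulation, a learner with weighted error ε gets the voting weight α = ½·ln((1 − ε)/ε), and each sample weight is multiplied by exp(−α·y·h(x)) and then renormalized, so misclassified samples gain weight. The sketch below applies those two rules to a small, hypothetical 1-D dataset with threshold stumps as weak learners. The names `fit_stump`, `adaboost`, and `predict` are illustrative, not from any library.

```python
import math


def fit_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best 1-D threshold stump."""
    best = None
    values = sorted(set(xs))
    thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])]
    for threshold in thresholds:
        for polarity in (1, -1):
            # polarity=1 predicts +1 for x < threshold and -1 otherwise
            preds = [polarity if x < threshold else -polarity for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def adaboost(xs, ys, n_rounds):
    """Train n_rounds stumps; return a list of (alpha, threshold, polarity)."""
    n = len(xs)
    weights = [1.0 / n] * n  # start from a uniform data distribution
    model = []
    for _ in range(n_rounds):
        err, threshold, polarity = fit_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # voting weight of this learner
        model.append((alpha, threshold, polarity))
        preds = [polarity if x < threshold else -polarity for x in xs]
        # Raise the weight of misclassified samples, lower the rest, then renormalize
        weights = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, ys, preds)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return model


def predict(model, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * (pol if x < thr else -pol) for alpha, thr, pol in model)
    return 1 if score >= 0 else -1


# Hypothetical toy data: no single threshold separates the two classes
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

model = adaboost(xs, ys, n_rounds=3)
n_errors = sum(predict(model, x) != y for x, y in zip(xs, ys))
print("voting weights:", [round(alpha, 4) for alpha, _, _ in model])
print("training errors after 3 rounds:", n_errors)
```

No single stump fits this data, but the weighted vote of three stumps does, which is the point of re-weighting the samples between rounds.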

Summary of the AdaBoost Construction Process

AdaBoost Example

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets
from sklearn.model_selection import train_test_split

x, y = datasets.make_moons(n_samples=50000, noise=0.3, random_state=42)

# train_test_split defaults to a 75% / 25% train/test split
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=42)
```

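```python
from sklearn.ensemble import AdaBoostClassifier
from sklearn.tree import DecisionTreeClassifier  # needed for the base learner

# AdaBoost with decision trees as base learners, trained sequentially
ada_clf = AdaBoostClassifier(DecisionTreeClassifier(), n_estimators=500)
ada_clf.fit(x_train, y_train)
ada_clf.score(x_test, y_test)  # accuracy on the held-out test set
```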

GBDT

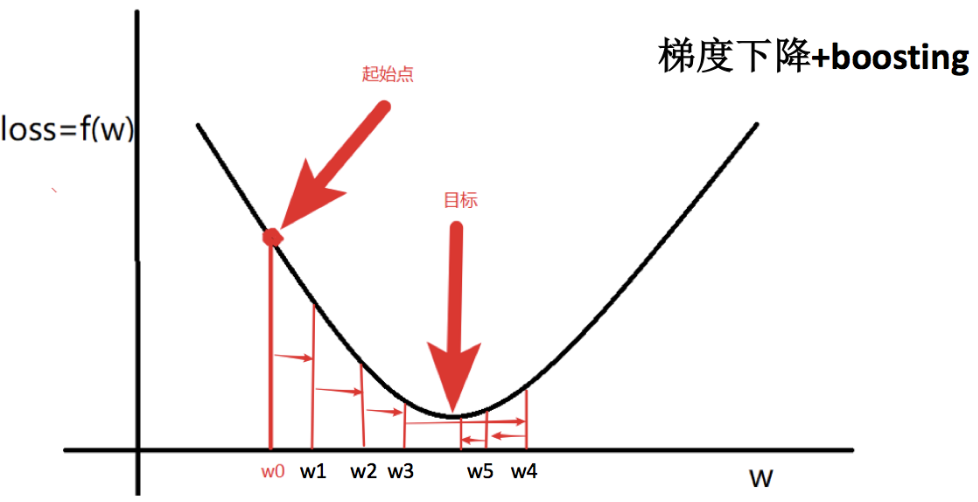

- Gradient Boosting( 梯度提升决策树 ) 是 Boosting 中的一大类算法,其基本思想是根据当前模型损失函数的负梯度信息来训练新加入的弱分类器,然后将训练好的弱分类器以累加 的形式结合到现有模型中

- GBDT = 梯度下降 + Boosting + 决策树( 通常为 CART )

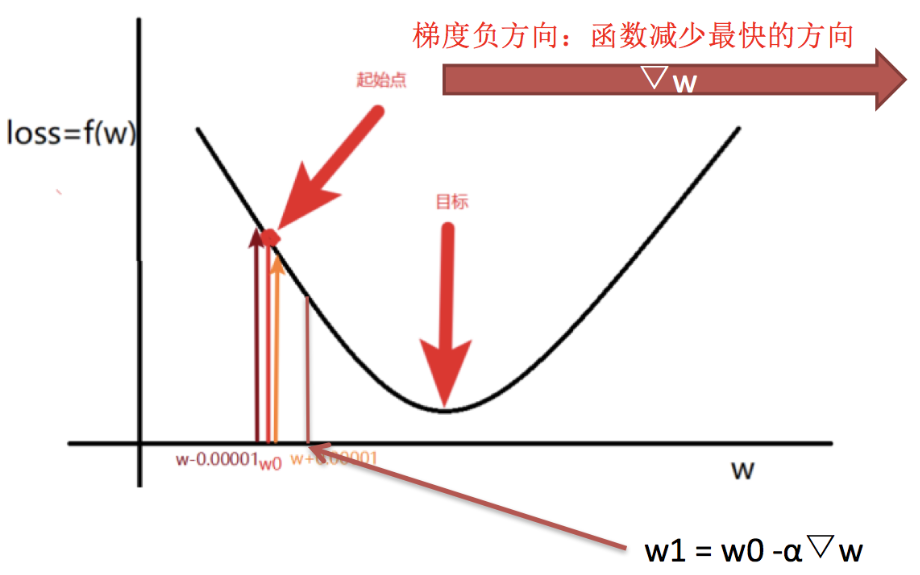

梯度的概念

$$
\begin{aligned}
w_1 &= w_0 - \alpha \nabla f(w_0; x) \\
w_2 &= w_1 - \alpha \nabla f(w_1; x) \\
w_3 &= w_2 - \alpha \nabla f(w_2; x) \\
w_4 &= w_3 - \alpha \nabla f(w_3; x) \\
w_5 &= w_4 - \alpha \nabla f(w_4; x)
\end{aligned}
$$

$$
w_5 = w_0 - \alpha \nabla f(w_0; x) - \alpha \nabla f(w_1; x) - \alpha \nabla f(w_2; x) - \alpha \nabla f(w_3; x) - \alpha \nabla f(w_4; x)
$$

GBDT Execution Flow

Let $\alpha = 1$ and $h_i(x) = -\nabla f(w_i; x)$. Then

$$
H(x) = h_0(x) + h_1(x) + h_2(x) + \dots
$$

$H(x)$ is the Boosting ensemble written as a sum. If each $h_i(x)$ in the expression above is a decision tree model, the expression becomes:

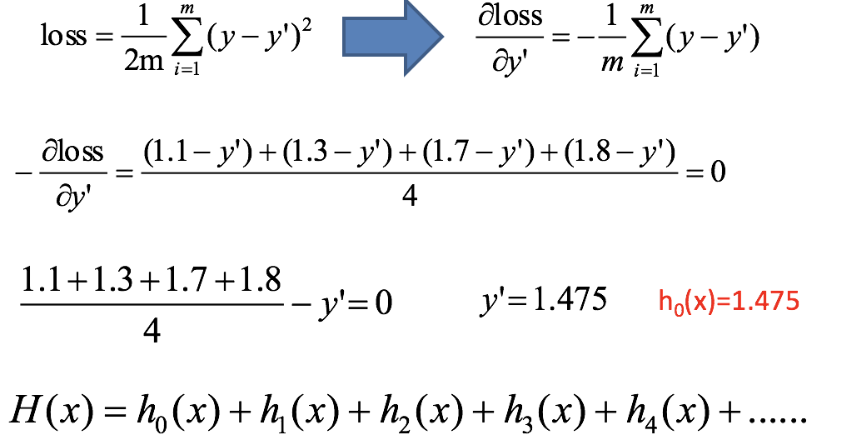

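GBDT = gradient descent + Boosting + decision trees

GBDT Worked Example

- Predict the height of person 5

| ID | Age (years) | Weight (kg) | Height (m) |
| --- | --- | --- | --- |
| 1 | 5 | 20 | 1.1 |
| 2 | 7 | 30 | 1.3 |
| 3 | 21 | 70 | 1.7 |
| 4 | 30 | 60 | 1.8 |
| 5 | 25 | 65 | ? |

- Compute the loss function and obtain the first prediction (with squared-error loss this is the mean of the four known heights, 1.475)

- Find the split point

Result: with age 21 as the split point, the variance is 0.01 + 0.0025 = 0.0125

- Using the adjusted target values (the residuals), solve for $h_1(x)$

- Solve for $h_2(x)$

- Obtain the result

Height of person 5 = 1.475 + 0.03 + 0.275 = 1.78

Main Ideas Behind GBDT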

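- Use gradient descent to optimize the cost function;

- Use a one-level decision tree (a stump) as the weak learner, with the negative gradient as its target value;

- Combine the learners using the Boosting idea.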
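The height example and the three ideas above can be reproduced in a few lines. The sketch below assumes squared-error loss (so the negative gradient is simply the residual), a learning rate of 1, and one-split regression stumps chosen by least squared error. The helper name `fit_stump` is hypothetical, and the split thresholds are midpoints between observed values, so the "age 21" split of the example appears here as the equivalent threshold between ages 7 and 21.

```python
def fit_stump(rows, targets):
    """Fit a one-split regression stump by least squared error over all features."""
    best = None
    for j in range(len(rows[0])):
        values = sorted(set(r[j] for r in rows))
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [t for r, t in zip(rows, targets) if r[j] < thr]
            right = [t for r, t in zip(rows, targets) if r[j] >= thr]
            left_mean = sum(left) / len(left)
            right_mean = sum(right) / len(right)
            sse = sum((t - left_mean) ** 2 for t in left) + sum(
                (t - right_mean) ** 2 for t in right
            )
            if best is None or sse < best[0]:
                best = (sse, j, thr, left_mean, right_mean)
    _, j, thr, left_mean, right_mean = best
    # The stump predicts the mean target of the side a row falls on
    return lambda row: left_mean if row[j] < thr else right_mean


rows = [(5, 20), (7, 30), (21, 70), (30, 60)]  # (age, weight) of persons 1 to 4
heights = [1.1, 1.3, 1.7, 1.8]
query = (25, 65)  # person 5

f0 = sum(heights) / len(heights)  # first prediction: the mean, 1.475
prediction = f0
residuals = [h - f0 for h in heights]  # negative gradient of the squared loss

for _ in range(2):  # h_1(x) and h_2(x)
    stump = fit_stump(rows, residuals)
    prediction += stump(query)
    residuals = [r - stump(row) for r, row in zip(residuals, rows)]

print(round(prediction, 2))  # 1.78
```

Each stump is fitted to what the current model still gets wrong, and the final prediction is the sum of the initial mean and the two stump outputs.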

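GBDT Code Example

```python
# GBDT also uses decision trees as base learners by default;
# increasing the number of base learners improved accuracy here
from sklearn.ensemble import GradientBoostingClassifier  # gradient boosted decision trees

gb_clf = GradientBoostingClassifier(max_depth=2, n_estimators=500)
gb_clf.fit(x_train, y_train)
gb_clf.score(x_test, y_test)  # accuracy on the held-out test set
```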

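Differences and Connections Between Gradient Boosting and Gradient Descent

- In every iteration, both use the negative gradient of the loss function with respect to the model to update the current model

- In gradient descent the model is represented in parameterized form, so updating the model is equivalent to updating its parameters. In gradient boosting the model does not need a parameterized representation; it is defined directly in function space, which greatly expands the kinds of models that can be used

Advantages and Limitations of GBDT

- Advantages

- Prediction is fast, because the trees can be evaluated in parallel at prediction time (during training, by contrast, each tree needs the result of the previous one)

- On densely distributed datasets, both generalization and expressive power are good, which is why GBDT often ranks at the top of many Kaggle competitions

- Using decision trees as weak classifiers gives GBDT good interpretability and robustness. It can discover high-order relationships between features automatically, and it **does not require special preprocessing of the data, such as normalization**

- Disadvantages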

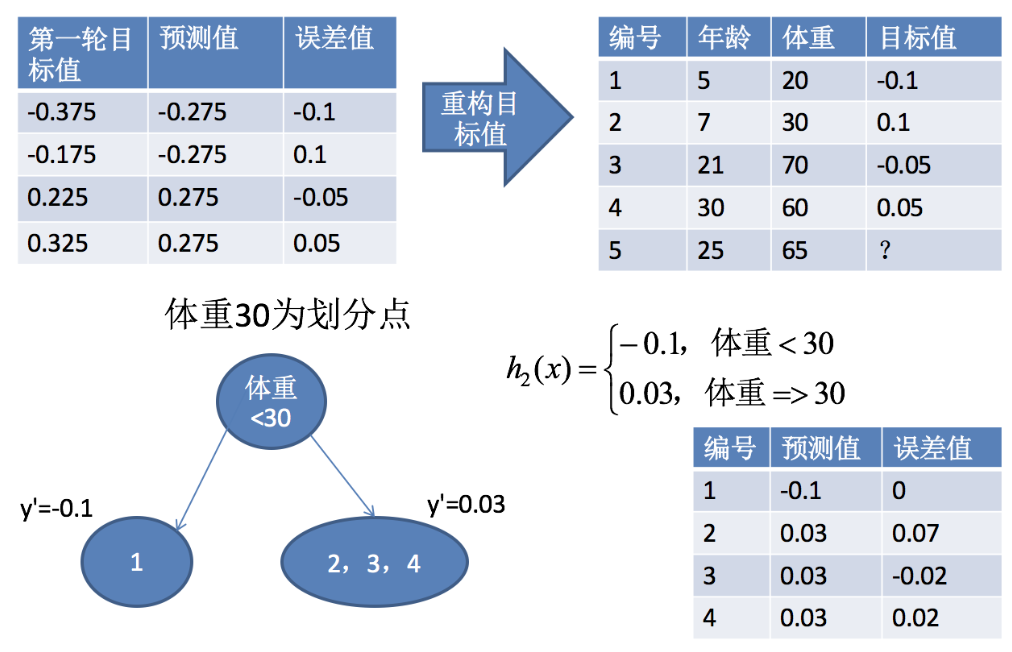

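Bagging

- Unlike Boosting's sequential training, the base classifiers in Bagging have no strong dependence on one another during training and can be trained in parallel. One of the best-known examples is Random Forest, which uses decision trees as base classifiers.

- To make the base classifiers as independent of one another as possible, the training set is divided into several subsets (when there are few training samples, the subsets may overlap).

- Bagging is more like a collective decision process. Each individual learns separately; what they learn may be the same, different, or partially overlapping. Because the individuals differ, their final judgments will not be identical. When a decision is made, each individual gives its own judgment, and the collective decision is reached by voting.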
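The collective-decision process described above can be sketched by hand: draw a bootstrap sample for each tree, train the trees independently, and take a majority vote. This is an illustrative sketch rather than a reference implementation; the dataset size, the number of trees (50), and the random seeds are arbitrary assumptions, and it uses scikit-learn's `DecisionTreeClassifier` as the base learner.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

x, y = make_moons(n_samples=2000, noise=0.3, random_state=42)
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=42)

rng = np.random.default_rng(0)
trees = []
for _ in range(50):
    # Bootstrap sample: draw len(x_train) indices with replacement
    idx = rng.integers(0, len(x_train), size=len(x_train))
    trees.append(DecisionTreeClassifier(random_state=0).fit(x_train[idx], y_train[idx]))

# Each tree votes; the majority class wins (labels are 0/1, ties go to class 1)
votes = np.stack([tree.predict(x_test) for tree in trees])
bagged_pred = (votes.mean(axis=0) >= 0.5).astype(int)
bagged_acc = (bagged_pred == y_test).mean()

single_acc = DecisionTreeClassifier(random_state=0).fit(x_train, y_train).score(x_test, y_test)
print(f"single tree: {single_acc:.3f}, bagged trees: {bagged_acc:.3f}")
```

Because the trees never depend on one another, the training loop could run in parallel, which is what `n_jobs` does in scikit-learn's own ensemble classes.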

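Bagging Implementation Process

Random Forest

Random Forest Construction Process

- In machine learning, a random forest is a classifier that contains multiple decision trees; its output class is the mode of the classes output by the individual trees

- Random forest = Bagging + decision trees

- Key steps in constructing a random forest (N denotes the number of training examples (samples) and M the number of features):

- Draw one sample at random with replacement, and repeat N times (the same sample may be drawn more than once)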

- Randomly select m features, with m < M

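- Why sample the training set randomly:

- Without random sampling, every tree would have the same training set, so the trained trees would give exactly the same classification results

- Why sample with replacement:

- Without replacement, the trees' training samples would all be different and disjoint, so the trained trees would differ greatly from one another; yet the final classification of a random forest depends on a vote among many trees (weak classifiers)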
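A short sketch of the two sampling steps, using only the standard library. The helper names `bootstrap_indices` and `random_feature_subset` are hypothetical. Drawing N samples with replacement leaves roughly 1 − 1/e ≈ 63.2% of the distinct samples in each tree's training set for large N, so the trees overlap but are not identical.

```python
import random


def bootstrap_indices(n_samples, rng):
    """Draw n_samples indices with replacement (duplicates are expected)."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]


def random_feature_subset(n_features, m, rng):
    """Pick m distinct feature indices out of n_features (m < n_features)."""
    return sorted(rng.sample(range(n_features), m))


rng = random.Random(42)
n_samples = 10_000

sample = bootstrap_indices(n_samples, rng)
unique_fraction = len(set(sample)) / n_samples
print(f"distinct samples in the bootstrap: {unique_fraction:.3f}")
print(f"left out (out-of-bag): {1 - unique_fraction:.3f}")

features = random_feature_subset(n_features=16, m=4, rng=rng)
print("features used:", features)
```

The samples a tree never sees are its out-of-bag samples; they can be used to estimate accuracy without a separate test set.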

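Random Forest API Overview

- sklearn.ensemble.RandomForestClassifier(n_estimators=100, criterion='gini', max_depth=None, bootstrap=True, random_state=None, min_samples_split=2)

- n_estimators: integer, optional (default = 100 since scikit-learn 0.22; 10 in earlier versions). Number of trees in the forest; commonly tried values: 120, 200, 300, 500, 800, 1200

- criterion: string, optional (default = "gini"). The function used to measure the quality of a split

- max_depth: integer or None, optional (default = None). Maximum depth of a tree; commonly tried values: 5, 8, 15, 25, 30

- max_features: the number of features to consider when looking for the best split. Older scikit-learn versions used "auto" as the default; it was later deprecated and removed in favor of "sqrt"

- If "auto" (older versions only), then max_features=sqrt(n_features).

- If "sqrt", then max_features=sqrt(n_features) (same as "auto").

- If "log2", then max_features=log2(n_features).

- If None, then max_features=n_features.

- bootstrap: boolean, optional (default = True). Whether to sample with replacement when building trees

- min_samples_split: minimum number of samples required to split a node

- min_samples_leaf: minimum number of samples required at a leaf node

- Hyperparameters to tune: n_estimators, max_depth, min_samples_split, min_samples_leaf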
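The hyperparameters listed above are usually tuned by cross-validated search. The sketch below uses scikit-learn's `GridSearchCV` on a small moons dataset; the grid values, dataset size, and `cv=3` are arbitrary choices made to keep the example fast, not recommendations.

```python
from sklearn.datasets import make_moons
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

x, y = make_moons(n_samples=500, noise=0.3, random_state=42)

# Small illustrative grid over two of the hyperparameters
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [3, 5, None],
}

search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid, cv=3)
search.fit(x, y)

print("best parameters:", search.best_params_)
print("best cross-validated accuracy:", round(search.best_score_, 3))
```

The same pattern extends to `min_samples_split` and `min_samples_leaf` by adding them to `param_grid`, at the cost of a larger search.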

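Example

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets
from sklearn.ensemble import RandomForestClassifier  # missing from the original snippet

x, y = datasets.make_moons(n_samples=50000, noise=0.3, random_state=42)

# oob_score=True evaluates each tree on the samples left out of its bootstrap sample;
# n_jobs=-1 trains the trees in parallel on all available cores
rc_clf = RandomForestClassifier(n_estimators=500, random_state=666, oob_score=True, n_jobs=-1)
rc_clf.fit(x, y)
rc_clf.oob_score_  # out-of-bag accuracy estimate
```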

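Advantages of Bagging Ensembles

- Bagging + decision trees / linear regression / logistic regression / deep learning … = a Bagging ensemble learning method

- Ensemble methods built this way:

- can typically improve generalization accuracy by about 2% over the original algorithm

- are simple, convenient, and general

Differences between Bagging ensembles and Boosting ensembles:

Base Classifiers

Commonly used base classifiers are

- Decision trees

- Decision trees can incorporate sample weights into training fairly easily, without resorting to oversampling to adjust the weights

- The expressive power and generalization ability of a decision tree can be traded off by adjusting the depth of the tree

- Decision trees are sensitive to perturbations of the data samples, so trees built from different sample subsets vary considerably; such "unstable learners" are better suited as base classifiers

- Neural network models

- Mainly because neural network models are also fairly "unstable", and randomness can additionally be introduced by adjusting the number of neurons, the connection pattern, the number of layers, the initial weights, and so on

Can the base classifier in a random forest be changed from a decision tree to a linear classifier or K-nearest neighbors? Explain why.

- Random forest belongs to the Bagging family of ensemble methods. The main benefit of Bagging is that the variance of the ensemble is smaller than the variance of its base classifiers. The base classifiers used in Bagging should ideally be sensitive to the sample distribution (that is, unstable classifiers); only then does Bagging have room to help

- Linear classifiers and K-nearest neighbors are fairly stable classifiers whose variance is already low, so using them as base classifiers in Bagging does not improve on the base classifier. Bagging's sampling may even make them harder to converge during training, which increases the bias of the ensemble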

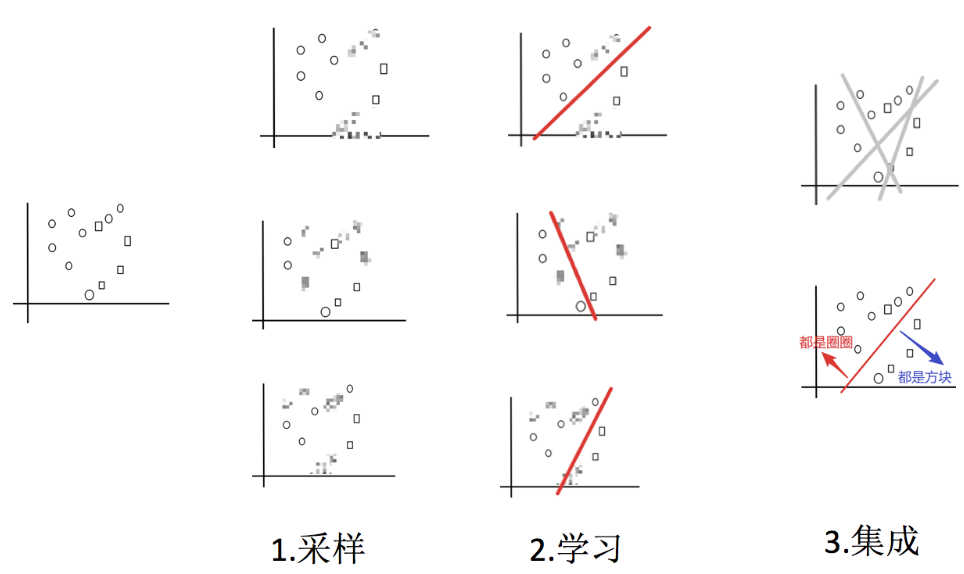

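Bias and Variance

- In supervised learning, a model's generalization error comes from two sources: bias and variance

- Bias is the difference between the average output of all models trained on all sampled training sets of size m and the output of the true model

- Variance is the variance of the outputs of all models trained on all sampled training sets of size m

- Bias and variance are two sides of the same trade-off and cannot be treated completely independently. For a given learning task and training set, we need to make a reasonable assumption about model complexity. If the model is too simple, variance is low but bias is high; if the model is too complex, bias drops but variance is high. Both must therefore be weighed when choosing a model of suitable complexity to train

How can the principles of Boosting and Bagging be explained in terms of reducing variance and bias?

- Bagging improves the performance of weak classifiers by reducing variance; Boosting improves it by reducing bias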
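The variance half of this statement can be checked with a small simulation. The sketch assumes a hypothetical model whose output is the true value 1.0 plus independent Gaussian noise, so it is unbiased but has variance 1. Averaging 25 such outputs should leave the mean unchanged and cut the variance to about 1/25. Bagged models share training data and are therefore correlated, so the reduction in practice is smaller than in this idealized setting.

```python
import random
import statistics

rng = random.Random(0)


def noisy_prediction():
    """Hypothetical unbiased model output: true value 1.0 plus unit-variance noise."""
    return 1.0 + rng.gauss(0, 1)


def averaged_prediction(n_models):
    """Average the outputs of n_models independent noisy models."""
    return statistics.fmean(noisy_prediction() for _ in range(n_models))


single = [noisy_prediction() for _ in range(5000)]
ensemble = [averaged_prediction(25) for _ in range(5000)]

print("single model   mean / variance:",
      round(statistics.fmean(single), 3), round(statistics.variance(single), 3))
print("25-model mean  mean / variance:",
      round(statistics.fmean(ensemble), 3), round(statistics.variance(ensemble), 3))
```

Averaging does not move the mean, so it cannot fix a biased model; that is the gap Boosting targets by fitting each new learner to the errors of the current ensemble.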

Soft Voting

- Requires every model in the ensemble to be able to estimate class probabilities

```python
from sklearn.ensemble import VotingClassifier
# Logistic regression
from sklearn.linear_model import LogisticRegression
# SVM
from sklearn.svm import SVC
# Decision tree
from sklearn.tree import DecisionTreeClassifier

# Hard voting: the majority class wins
voting_clf = VotingClassifier(estimators=[
    ('log_clf', LogisticRegression()),
    ('svm_clf', SVC()),
    ('dt_clf', DecisionTreeClassifier())],
    voting='hard')

# Soft voting: average the predicted class probabilities
voting_clf2 = VotingClassifier(estimators=[
    ('log_clf', LogisticRegression()),
    ('svm_clf', SVC(probability=True)),  # SVC needs probability=True to output probabilities
    ('dt_clf', DecisionTreeClassifier())],
    voting='soft')
voting_clf2.fit(x_train, y_train)
voting_clf2.score(x_test, y_test)  # accuracy on the held-out test set
```
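To see how hard and soft voting can disagree, consider one sample and three classifiers. The probabilities below are made up for illustration: two classifiers lean slightly toward class 0, while the third is very confident in class 1.

```python
# Hypothetical class-1 probabilities from three classifiers for a single sample
probas = [0.45, 0.45, 0.90]

# Hard voting: each classifier casts one vote for its most likely class
hard_votes = [int(p >= 0.5) for p in probas]  # [0, 0, 1]
hard_prediction = int(sum(hard_votes) > len(hard_votes) / 2)

# Soft voting: average the probabilities, then pick the most likely class
mean_proba = sum(probas) / len(probas)  # 0.6
soft_prediction = int(mean_proba >= 0.5)

print("hard voting predicts:", hard_prediction)  # 0
print("soft voting predicts:", soft_prediction)  # 1
```

Soft voting lets a confident classifier outweigh two uncertain ones, which is why every model in the ensemble must be able to output probabilities.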


- Original article: https://blog.csdn.net/weixin_45775970/article/details/126097314