
A Detailed Guide to the Horizontal Pod Autoscaler (HPA) Pod Controller in Kubernetes (k8s)

1. Overview

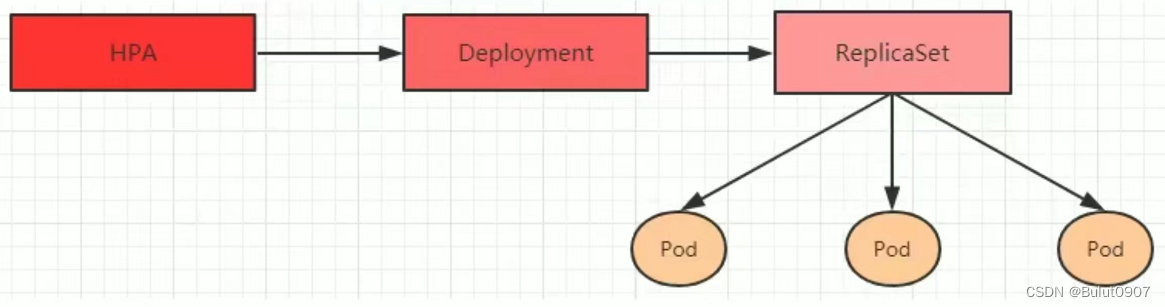

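Scaling Pods with the kubectl scale command requires manual intervention. Kubernetes can instead monitor Pod resource usage and adjust the number of Pods automatically; this is the job of the HPA controller. The HPA obtains the utilization of each Pod, compares it with the target defined in the HPA, computes the number of replicas required, and then adjusts the Pod count accordingly.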
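The comparison the HPA performs can be made concrete with the replica formula given in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Below is a minimal sketch of that arithmetic in shell, not the controller's actual code. The function name desired_replicas is hypothetical, and the real controller also applies a tolerance, the min/max bounds, and stabilization, all of which are left out here.

```shell
# Sketch of the HPA replica formula from the Kubernetes documentation:
#   desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)
# desired_replicas is a hypothetical helper; all arguments are positive integers.
desired_replicas() {
  local current_replicas="$1" current_metric="$2" target_metric="$3"
  # Integer ceiling division: ceil(a / b) == (a + b - 1) / b
  echo $(( (current_replicas * current_metric + target_metric - 1) / target_metric ))
}

# Example: 2 replicas averaging 9% CPU against a 3% target
desired_replicas 2 9 3   # prints 6
```

With a target as low as the 3% used later in this article, even light traffic pushes the computed value up to the configured maximum quickly.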

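2. Introduction to and installation of kubernetes-sigs/metrics-server

metrics-server collects resource usage data from a Kubernetes cluster. Do not use metrics-server as a general-purpose monitoring solution; for that, you can read the kubelet's /metrics/resource endpoint directly.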

Features:

- Collects metrics every 15 seconds

- Low resource footprint: about 1 millicore of CPU and 2 MB of memory per Node

- Currently monitors only CPU and memory
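To illustrate what reading the kubelet's /metrics/resource endpoint directly can look like, the sketch below goes through the API server's node proxy path. The helper name kubelet_resource_metrics is hypothetical, k8s-node1 is one of the node names that appears later in this article, and the call assumes your account is allowed to access nodes/proxy.

```shell
# Fetch the kubelet's /metrics/resource output for one node through the
# API server's node proxy. kubelet_resource_metrics is a hypothetical helper;
# the node name is whatever `kubectl get nodes` lists.
kubelet_resource_metrics() {
  local node="$1"
  kubectl get --raw "/api/v1/nodes/${node}/proxy/metrics/resource"
}

# Usage (requires cluster access):
#   kubelet_resource_metrics k8s-node1 | head -n 20
```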

2.1 Installing metrics-server

- Download the YAML file. This manifest deploys a highly available service with replicas: 2

```shell
[root@k8s-master ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability.yaml
```

This walkthrough uses metrics-server 0.6.1, which supports Kubernetes 1.23.2

- Edit the YAML file

```shell
[root@k8s-master ~]# cat high-availability.yaml
......(part omitted)......
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        # - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        # Comment out the line above and add the two lines below
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP      # priority order of node address types
        - --kubelet-insecure-tls                     # skip TLS certificate verification of the kubelet
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        # image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1
        # Comment out the line above and add the line below
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
......(part omitted)......
[root@k8s-master ~]#
```

- Deploy the application

```shell
[root@k8s-master ~]# kubectl apply -f high-availability.yaml
serviceaccount/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
service/metrics-server created
deployment.apps/metrics-server created
Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget
poddisruptionbudget.policy/metrics-server created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
[root@k8s-master ~]#
```

2.2 Viewing resource usage

```shell
[root@k8s-master ~]# kubectl top node
NAME         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master   142m         7%     1154Mi          67%
k8s-node1    85m          4%     851Mi           49%
k8s-node2    57m          2%     567Mi           32%
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl top pod -n kube-system
NAME                                       CPU(cores)   MEMORY(bytes)
calico-kube-controllers-57d95cb479-5zppz   3m           27Mi
calico-node-2m8xb                          32m          123Mi
calico-node-jnll4                          32m          117Mi
calico-node-v6zcv                          31m          96Mi
coredns-7f74c56694-snzmv                   2m           13Mi
coredns-7f74c56694-whh84                   1m           17Mi
etcd-k8s-master                            13m          62Mi
kube-apiserver-k8s-master                  39m          437Mi
kube-controller-manager-k8s-master         12m          84Mi
kube-proxy-9gc7d                           1m           17Mi
kube-proxy-f9w7h                           1m           30Mi
kube-proxy-s8rwk                           1m           26Mi
kube-scheduler-k8s-master                  3m           34Mi
metrics-server-59755df759-n4lm7            3m           13Mi
metrics-server-59755df759-zv86z            3m           15Mi
[root@k8s-master ~]#
```

3. Preparing the Deployment and Service

3.1 Preparing the Deployment

Create pod-controller.yaml with the following content, then apply it to create the Deployment

```shell
[root@k8s-master ~]# cat pod-controller.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pod-controller
  namespace: dev
  labels:
    controller: deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - name: nginx-port
              containerPort: 80
              protocol: TCP
          resources:    # each container needs a CPU request, otherwise the HPA test shows no effect
            requests:
              cpu: "0.1"
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl apply -f pod-controller.yaml
deployment.apps/pod-controller created
[root@k8s-master ~]#
```
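The CPU request in this manifest matters because the HPA expresses CPU utilization as a percentage of the container's CPU request; without a request there is nothing to divide by. Here is a rough sketch of that arithmetic. The helper cpu_utilization_percent is hypothetical, and it uses truncating integer division, so the controller's own rounding may differ.

```shell
# cpu_utilization_percent is a hypothetical helper.
# Both arguments are in millicores: actual usage, then the container's CPU request.
cpu_utilization_percent() {
  local usage_m="$1" request_m="$2"
  echo $(( usage_m * 100 / request_m ))
}

# The Deployment above requests cpu "0.1", i.e. 100m.
# A Pod using 5m of CPU is therefore at 5% utilization.
cpu_utilization_percent 5 100    # prints 5
```

Against a 100m request, a 3% target corresponds to roughly 3m of CPU per Pod, which is why very little traffic is enough to trigger scaling in this walkthrough.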

3.2 Preparing the Service

```shell
[root@k8s-master ~]# kubectl expose deployment pod-controller --name=nginx-service --type=NodePort --port=80 --target-port=80 -n dev
service/nginx-service exposed
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl get svc -n dev
NAME            TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
nginx-service   NodePort   10.96.78.249   <none>        80:32469/TCP   19s
[root@k8s-master ~]#
```

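Access the service at http://ANY_NODE_IP:32469, where ANY_NODE_IP is the IP address of any node in the cluster

4. Deploying the HPA

Create pod-hpa.yaml with the following content, then apply it to create the HPA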

```shell
[root@k8s-master ~]# cat pod-hpa.yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: pod-hpa             # name of the HPA
  namespace: dev            # namespace the HPA belongs to
  labels:
    controller: hpa         # label attached to the HPA
spec:
  minReplicas: 1            # minimum number of Pods when scaling in
  maxReplicas: 10           # maximum number of Pods when scaling out
  targetCPUUtilizationPercentage: 3   # CPU utilization threshold, set to 3% to make testing easy
  scaleTargetRef:           # the Deployment this HPA controls
    apiVersion: apps/v1
    kind: Deployment
    name: pod-controller
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl apply -f pod-hpa.yaml
horizontalpodautoscaler.autoscaling/pod-hpa created
[root@k8s-master ~]#
```
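The manifest above uses autoscaling/v1, where the CPU target is a single field. For comparison, here is a sketch of how the same HPA might be written against autoscaling/v2, which is available from Kubernetes 1.23. The file name pod-hpa-v2.yaml is hypothetical and not part of the original walkthrough; all names and values are carried over from pod-hpa.yaml.

```shell
# Write an autoscaling/v2 version of the same HPA to a separate file.
# pod-hpa-v2.yaml is a hypothetical file name used only for this sketch.
cat > pod-hpa-v2.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: pod-hpa
  namespace: dev
  labels:
    controller: hpa
spec:
  minReplicas: 1
  maxReplicas: 10
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: pod-controller
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 3   # same 3% test threshold as in pod-hpa.yaml
EOF

# Apply it instead of (not in addition to) pod-hpa.yaml:
#   kubectl apply -f pod-hpa-v2.yaml
```

The v2 form is more verbose, but the metrics list is what allows several metrics, or metrics other than CPU, to be combined in one HPA.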

View the HPA

```shell
[root@k8s-master ~]# kubectl get hpa -n dev
NAME      REFERENCE                   TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
pod-hpa   Deployment/pod-controller   0%/3%     1         10        1          82s
[root@k8s-master ~]#
```

5. Testing

Use a load-testing tool such as Postman or JMeter to send continuous requests to http://ANY_NODE_IP:32469
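If no load-testing tool is at hand, a plain curl loop may be enough given the very low 3% threshold. The sketch below is one way to do it; the helper name generate_load and the NODE_IP placeholder are hypothetical, and whether sequential requests produce enough load depends on your cluster.

```shell
# generate_load is a hypothetical helper: send COUNT sequential GET requests to URL.
generate_load() {
  local url="$1" count="$2" i=0
  while [ "$i" -lt "$count" ]; do
    # -s: silent, -o /dev/null: discard the response body; ignore individual failures
    curl -s -o /dev/null "$url" || true
    i=$((i + 1))
  done
  echo "sent $i requests to $url"
}

# Usage (replace NODE_IP with the address of any cluster node):
#   generate_load "http://NODE_IP:32469" 100000
# In a second terminal, watch the HPA react:
#   kubectl get hpa pod-hpa -n dev --watch
```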

```shell
[root@k8s-master ~]# kubectl get deploy pod-controller -n dev -o wide
NAME             READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES         SELECTOR
pod-controller   10/10   10           10          41m   nginx        nginx:latest   app=nginx-pod
[root@k8s-master ~]#
```

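You can see that 10 Pods are now running. Once requests to the nginx service stop, the HPA scales the Deployment back in (after the scale-down stabilization window, 5 minutes by default) until only one Pod remains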


- Original article: https://blog.csdn.net/yy8623977/article/details/124872606