
WSL: Installing a Pseudo-Distributed Big Data Cluster


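I previously tried a single-node CDH installation in WSL. Cloudera Manager (CM) installed and started successfully, and ZooKeeper, Hadoop and HBase started as well, but I could never get into Hive or HBase: they kept reporting that HDFS was in safe mode. I haven't solved that yet, so instead I'm installing a pseudo-distributed cluster on WSL for testing.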

https://blog.csdn.net/qq_34224565/article/details/125828001

1. Environment preparation

Configure the Aliyun yum mirror:

https://blog.csdn.net/qq_34224565/article/details/105261652

Install the JDK

Working as root, create /install_files and put the installation packages there.

sudo rpm -ivh oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm

sudo vim /etc/profile

Append the following at the bottom of the file:

```bash
export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib/
export PATH=${JAVA_HOME}/bin:${PATH}
```

Then reload it:

source /etc/profile

Then run java -version to verify.
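The RPM installs to /usr/java/jdk1.8.0_181-cloudera, and that exact path is repeated in several files below, so a quick check that JAVA_HOME really points at a JDK can save debugging later. A minimal sketch; check_java_home is a hypothetical helper, and it only checks for executable bin/java and bin/javac under the directory:

```shell
#!/usr/bin/env bash
# check_java_home [DIR]: hypothetical helper that checks DIR (default: $JAVA_HOME)
# contains a JDK, i.e. executable bin/java and bin/javac.
check_java_home() {
  local home="${1:-${JAVA_HOME:-}}"
  if [ -z "$home" ]; then
    echo "JAVA_HOME is not set; did you run 'source /etc/profile'?" >&2
    return 1
  fi
  local tool
  for tool in java javac; do
    if [ ! -x "$home/bin/$tool" ]; then
      echo "missing $home/bin/$tool" >&2
      return 1
    fi
  done
  echo "ok: $home"
}

# Usage after editing /etc/profile:
#   source /etc/profile && check_java_home && java -version
```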

Set a password for root; it is needed later for the SSH connection used when starting DFS.

passwd root

2. Download

The official site is slow; use a mirror instead:

https://mirrors.cnnic.cn/apache/hadoop/common/

3. Installation

tar -zxvf hadoop*.tar.gz -C /usr/local # extract into /usr/local

cd /usr/local

mv hadoop* hadoop

Environment variables

vim ~/.bashrc

```bash
export HADOOP_HOME=/usr/local/hadoop
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export JAVA_LIBRARY_PATH=/usr/local/hadoop/lib/native
```

vim /etc/profile

The first two lines below are the existing end of the file; append the exports after them:

```bash
unset i
unset -f pathmunge
export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
export HADOOP_HOME=/usr/local/hadoop
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export JAVA_LIBRARY_PATH=/usr/local/hadoop/lib/native
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib/
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:${JAVA_HOME}/bin:${PATH}
```

source /etc/profile

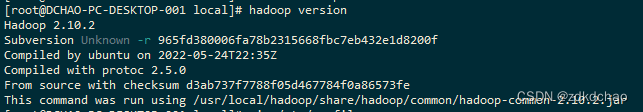

Verify (for example with hadoop version).
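Because the same exports end up in both ~/.bashrc and /etc/profile, repeating these steps (or re-running a setup script) easily leaves duplicate lines behind. A minimal sketch of an idempotent append; append_line_once is a hypothetical helper, and it compares whole lines literally, so an existing line with different spacing is not recognized as the same:

```shell
#!/usr/bin/env bash
# append_line_once FILE LINE: hypothetical helper that appends LINE to FILE only if
# FILE does not already contain exactly that line.
append_line_once() {
  local file="$1" line="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), --: LINE may start with '-'
  if grep -qxF -- "$line" "$file"; then
    return 0
  fi
  # Avoid gluing the new line onto a last line that has no trailing newline
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}

# Usage (as root), with the values from this post; single quotes keep $ literal:
#   append_line_once /etc/profile 'export HADOOP_HOME=/usr/local/hadoop'
#   append_line_once /etc/profile 'export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH'
#   source /etc/profile
```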

4. Pseudo-distributed configuration

cd /usr/local/hadoop/etc/hadoop

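Edit hadoop-env.sh

Add HADOOP_OPTS and the JDK path. This step is required even if hadoop-env.sh already sets JAVA_HOME further up: the start scripts launch the daemons over SSH, where variables from /etc/profile are not necessarily available, so an explicit path is needed.

```bash
export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
# Fixes "Unable to load native-hadoop library for your platform..."
export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native"
```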

Edit core-site.xml

vim core-site.xml

```xml
<configuration>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/usr/local/hadoop/tmp</value>
        <description>A base for other temporary directories.</description>
    </property>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>
```

Edit hdfs-site.xml

vim hdfs-site.xml

```xml
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/hadoop/tmp/dfs/name</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/hadoop/tmp/dfs/data</value>
    </property>
</configuration>
```

5. Start HDFS

Generate the SSH host keys, which sshd needs before it can start:

ssh-keygen -A

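Start /usr/sbin/sshd, because starting DFS connects to each node (here, the local machine itself) over SSH.

Just run /usr/sbin/sshd directly, then check that it is running:

ps -e | grep sshd

Passwordless SSH: even SSH to the machine itself must be set up as passwordless.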

ssh-keygen

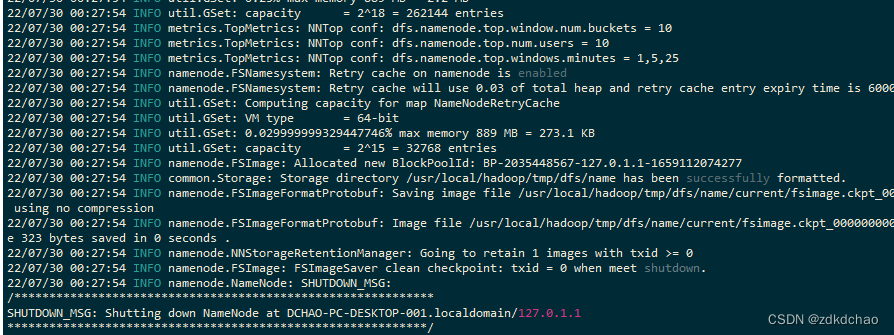
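The interactive ssh-keygen step above and the ssh-copy-id localhost step that follows can also be done without prompts. A minimal sketch, assuming a passphrase-less RSA key in ~/.ssh is acceptable for this local test box; setup_ssh_localhost is a hypothetical helper. It appends the public key to authorized_keys directly, which is what ssh-copy-id does for the local account, so this step itself needs neither the root password nor a running sshd:

```shell
#!/usr/bin/env bash
# setup_ssh_localhost [SSH_DIR]: hypothetical, non-interactive equivalent of
# `ssh-keygen` (Enter at every prompt) followed by `ssh-copy-id localhost`.
setup_ssh_localhost() {
  local dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir"
  chmod 700 "$dir"
  # Create an RSA key pair with an empty passphrase, unless one already exists
  if [ ! -f "$dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -N '' -f "$dir/id_rsa" || return 1
  fi
  touch "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
  # Authorize our own public key exactly once
  local pub
  pub="$(cat "$dir/id_rsa.pub")"
  grep -qxF -- "$pub" "$dir/authorized_keys" || printf '%s\n' "$pub" >> "$dir/authorized_keys"
}

# Usage, then confirm that no password prompt appears (sshd must be running):
#   setup_ssh_localhost
#   ssh -o BatchMode=yes localhost true && echo "passwordless ssh ok"
```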

ssh-copy-id localhost

Format the NameNode

/usr/local/hadoop/bin/hdfs namenode -format
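Format the NameNode only once. Re-running hdfs namenode -format generates a new clusterID, while the DataNode directory (dfs.datanode.data.dir, here /usr/local/hadoop/tmp/dfs/data) keeps the old one, and the DataNode then refuses to start with an incompatible clusterID error. A hedged sketch of a guard, assuming the dfs.namenode.name.dir from hdfs-site.xml above; namenode_needs_format is a hypothetical name, and it relies on a formatted directory containing current/VERSION:

```shell
#!/usr/bin/env bash
# namenode_needs_format [NAME_DIR]: hypothetical guard that succeeds only when the
# NameNode metadata directory has never been formatted (no current/VERSION yet).
namenode_needs_format() {
  local name_dir="${1:-/usr/local/hadoop/tmp/dfs/name}"
  [ ! -f "$name_dir/current/VERSION" ]
}

# Usage: format only on the first run
#   if namenode_needs_format; then
#     /usr/local/hadoop/bin/hdfs namenode -format
#   else
#     echo "NameNode already formatted, skipping"
#   fi
```

If a re-format is really needed, clearing /usr/local/hadoop/tmp/dfs/data as well keeps the two clusterIDs consistent.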

Start DFS

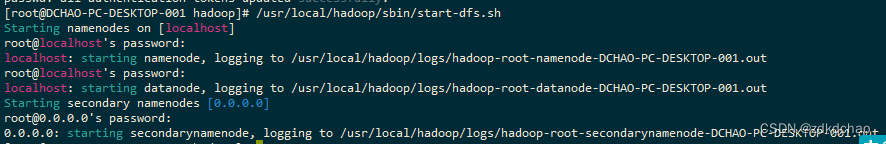

/usr/local/hadoop/sbin/start-dfs.sh

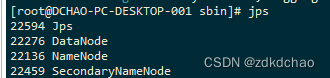

jps

After startup, run jps to check whether it succeeded. If it did, the following processes are listed: NameNode, DataNode and SecondaryNameNode.

View the Hadoop web UI:

http://localhost:50070 (this is the Hadoop 2.x port; in Hadoop 3.x the NameNode web UI is at http://localhost:9870)
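The jps check described above can be scripted so that a missing daemon is reported by name. A sketch (missing_daemons is a hypothetical helper) that assumes the usual jps output format of one `<pid> <MainClassName>` pair per line:

```shell
#!/usr/bin/env bash
# missing_daemons NAME...: hypothetical helper that reads `jps` output on stdin and
# prints each expected NAME that is not running. Returns 0 when all are present.
missing_daemons() {
  local running name rc=0
  # Keep only the class-name column of the jps output
  running="$(awk '{print $2}')"
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}

# Usage after start-dfs.sh:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode && echo "HDFS daemons up"
# After start-all.sh, ResourceManager and NodeManager can be added to the list.
```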

6. HDFS is working: stop HDFS (stop-dfs.sh) and configure YARN

Edit mapred-site.xml (in Hadoop 2.x, create it first with cp mapred-site.xml.template mapred-site.xml)

```xml
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>yarn.app.mapreduce.am.env</name>
        <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
    </property>
    <property>
        <name>mapreduce.map.env</name>
        <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
    </property>
    <property>
        <name>mapreduce.reduce.env</name>
        <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
    </property>
</configuration>
```

Edit yarn-site.xml

```xml
<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>
```

7. Start everything

/usr/local/hadoop/sbin/start-all.sh

http://localhost:8088/

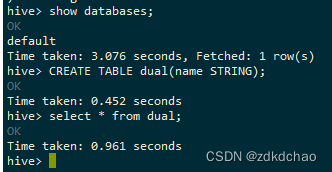
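The 8088 page only shows that YARN is up, not that a MapReduce job can run; the env properties in mapred-site.xml are what let the ApplicationMaster and tasks find the MapReduce classes (when they are missing, jobs typically fail to load MRAppMaster). A hedged way to check is the pi estimator from the examples jar bundled with Hadoop. The helper below (examples_jar, a hypothetical name) only locates that jar, assuming the standard tarball layout share/hadoop/mapreduce/hadoop-mapreduce-examples-<version>.jar:

```shell
#!/usr/bin/env bash
# examples_jar [HADOOP_HOME]: hypothetical helper that prints the path of the
# MapReduce examples jar shipped in the Hadoop binary tarball.
examples_jar() {
  local home="${1:-${HADOOP_HOME:-/usr/local/hadoop}}" jar
  for jar in "$home"/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar; do
    # If the glob matched nothing, $jar is the literal pattern and -f fails
    if [ -f "$jar" ]; then
      echo "$jar"
      return 0
    fi
  done
  echo "no examples jar under $home/share/hadoop/mapreduce" >&2
  return 1
}

# Usage once start-all.sh is running: estimate pi with 2 map tasks x 10 samples each
#   hadoop jar "$(examples_jar)" pi 2 10
```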

http://localhost:50070/

0. Install Hive with Derby

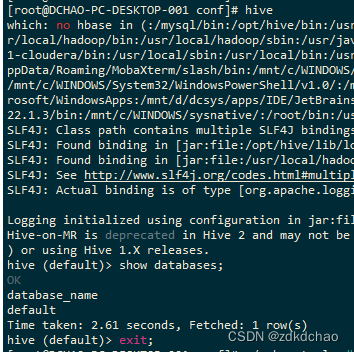

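Since I don't need MySQL for anything else, I first tried Hive's bundled Derby database. In the end, however, I could never get into the Hive CLI this way; switching to MySQL later worked.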

Download

https://dlcdn.apache.org/hive/

Install

tar zxvf apache-hive-2.3.9-bin.tar.gz -C /opt

cd /opt/

mv apache-hive-2.3.9-bin/ hive

Environment variables (/etc/profile):

```bash
#hive
export HIVE_HOME=/opt/hive
export PATH=$HIVE_HOME/bin:xxx   # xxx = the rest of the existing PATH entries
```

```bash
source /etc/profile
echo $HIVE_HOME
```

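Configuration: integrate with Hadoop

Set the HADOOP_HOME environment variable; this was already done earlier.

Verify it with echo $HADOOP_HOME.

Create Hive's directories on HDFS. This has to be done manually here; only CDH creates them automatically.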

```bash
hadoop fs -mkdir /tmp
hadoop fs -mkdir -p /user/hive/warehouse
hadoop fs -chmod g+w /tmp
hadoop fs -chmod g+w /user/hive/warehouse
```

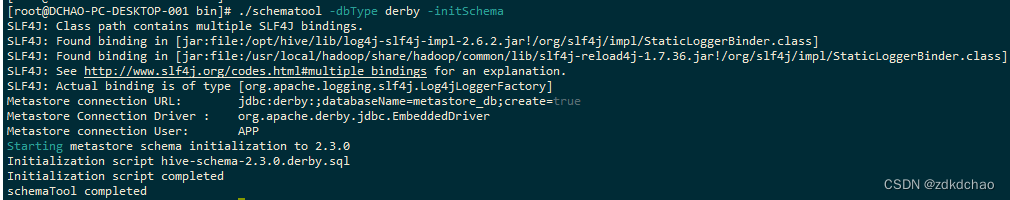

Initialize the metastore database

```bash
cd /opt/hive/bin
schematool -dbType derby -initSchema
```

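The Hive CLI can now connect to Hive.

Enable HiveServer2 (HS2)

Beeline connects to Hive through HS2. Compared with the Hive CLI, HS2 supports multiple concurrent users, offers better security, and has additional features.

```bash
# The terminal is still needed afterwards, so run HS2 in the background
hiveserver2 &
```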

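After it started, connecting with Beeline failed with an error saying that root is not allowed to connect.

At first I created a hive user and connected as hive, but that did not help.

Then I found this article: http://www.manongjc.com/detail/25-jwxiitmwxxpyncm.html

According to that article, when hive.server2.enable.doAs is set to false, HS2 runs queries as the Linux user that runs the HS2 process instead of impersonating the connecting user, so root is allowed. The article's Impersonation section also mentions two other settings (fs.hdfs.impl.disable.cache and fs.file.impl.disable.cache); I'm not sure what they do, but I changed them as well.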

There is no hive-site.xml under conf, because Hive runs in the default standalone mode. In principle standalone mode does not need hive-site.xml (it configures the Hive server side), but since HS2 is used it is needed after all, so create conf/hive-site.xml manually:

```xml
<configuration>
    <property>
        <name>hive.server2.enable.doAs</name>
        <value>false</value>
    </property>
    <property>
        <name>fs.hdfs.impl.disable.cache</name>
        <value>true</value>
    </property>
    <property>
        <name>fs.file.impl.disable.cache</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.metastore.schema.verification</name>
        <value>false</value>
    </property>
    <property>
        <name>hive.server2.thrift.min.worker.threads</name>
        <value>5</value>
    </property>
    <property>
        <name>hive.server2.thrift.max.worker.threads</name>
        <value>500</value>
    </property>
    <property>
        <name>hive.server2.transport.mode</name>
        <value>binary</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.port</name>
        <value>10001</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.max.worker.threads</name>
        <value>500</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.min.worker.threads</name>
        <value>5</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.path</name>
        <value>cliservice</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false&amp;serverTimezone=GMT</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.cj.jdbc.Driver</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>hive</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>dcdcdc</value>
    </property>
    <property>
        <name>hive.cli.print.current.db</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.cli.print.header</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.server2.thrift.port</name>
        <value>10000</value>
    </property>
    <property>
        <name>hive.server2.thrift.bind.host</name>
        <value>localhost</value>
    </property>
</configuration>
```

Restart HS2

hiveserver2 &

hive --service metastore &

8. MySQL

Download

https://dev.mysql.com/downloads/mysql/

Check whether MariaDB is installed, and remove it:

rpm -qa|grep mariadb

rpm -e --nodeps mariadb-libs

rpm -qa|grep mariadb

Environment preparation

chmod -R 777 /tmp

Dependencies

yum -y install net-tools

yum -y install libaio libmysqlclient.so.18

Create the user and directories

useradd mysql

passwd mysql

mkdir /mysqldata

chown -R mysql:mysql /mysql;chown -R mysql:mysql /mysqldata

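Extract and install

tar zxvf /home/dc/install_source/mysql-8.0.30-el7-x86_64.tar.gz -C /

mv /mysql-8.0.30-el7-x86_64/ /mysql

chown -R mysql:mysql /mysql (repeat the ownership change now that /mysql exists; the socket file is created there)

Configuration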

Edit /etc/my.cnf:

```ini
[client]
socket=/mysql/mysql.sock

[mysqld]
basedir=/mysql
datadir=/mysqldata
socket=/mysql/mysql.sock
# Disabling symbolic-links is recommended to prevent assorted security risks
symbolic-links=0
# Settings user and group are ignored when systemd is used.
# If you need to run mysqld under a different user or group,
# customize your systemd unit file for mariadb according to the
# instructions in http://fedoraproject.org/wiki/Systemd

#
# include all files from the config directory
#
!includedir /etc/my.cnf.d
```

cd /mysql/support-files/

vim mysql.server

In mysql.server, point the default directories at /mysql and /mysqldata:

```bash
if test -z "$basedir"
then
  basedir=/mysql
  bindir=/mysql/bin
  if test -z "$datadir"
  then
    datadir=/mysqldata
  fi
  sbindir=/mysql/bin
  libexecdir=/mysql/bin
else
  bindir="$basedir/bin"
  if test -z "$datadir"
  then
    datadir="$basedir/data"
  fi
  sbindir="$basedir/sbin"
  libexecdir="$basedir/libexec"
fi
```

cp mysql.server /etc/init.d/mysqld

chmod +x /etc/init.d/mysqld

chkconfig --add mysqld

chkconfig --list mysqld

Only after these steps can mysqld be managed with systemctl.

Environment variables (/etc/profile):

```bash
export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
#mysql
export MYSQL_HOME=/mysql
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib/:$MYSQL_HOME/lib/
export PATH=$MYSQL_HOME/bin:$HIVE_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:${JAVA_HOME}/bin:${PATH}
```

```bash
source /etc/profile
echo $MYSQL_HOME
```

Initialize

/mysql/bin/mysqld --initialize --user=mysql --basedir=/mysql --datadir=/mysqldata
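mysqld --initialize prints a one-time root password near the end of its output, and it is easy to lose once it scrolls by. A small sketch that pulls it out of a saved copy of that output, assuming MySQL 8.0's usual message format `A temporary password is generated for root@localhost: <password>`; mysql_temp_password is a hypothetical helper:

```shell
#!/usr/bin/env bash
# mysql_temp_password: hypothetical helper that reads `mysqld --initialize` output on
# stdin and prints the generated root password (from the last matching line).
mysql_temp_password() {
  sed -n 's/.*A temporary password is generated for root@localhost: //p' | tail -n 1
}

# Usage: keep a copy of the initialization output, then extract the password
#   /mysql/bin/mysqld --initialize --user=mysql --basedir=/mysql --datadir=/mysqldata 2>&1 \
#     | tee /tmp/mysql-init.log
#   mysql_temp_password < /tmp/mysql-init.log
```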

The temporary root password is printed at the end of the output (in my case i*ZY-wtfO1dd).

Start MySQL

```bash
cd /mysql/support-files
./mysql.server start
# Output:
# Starting MySQL.Logging to '/mysqldata/DCHAO-PC-DESKTOP-001.err'.
#  SUCCESS!
```

Log in to MySQL, change the password, and grant privileges

mysql -uroot -p (enter the temporary password from the initialization step when prompted)

```sql
alter user 'root'@'localhost' identified by 'dcdcdc';
flush privileges;
use mysql;
create user 'root'@'%' identified by 'dcdcdc';
grant all privileges on *.* to 'root'@'%' with grant option;
create database metastore;
create user 'hive'@'%' identified by 'dcdcdc';
grant all privileges on *.* to 'hive'@'%' with grant option;
flush privileges;
show databases;
```

9. Hive with MySQL

The Hive-with-Derby attempt above failed, so I tried MySQL instead, and this time it worked.

Download

https://dlcdn.apache.org/hive/

Install

tar zxvf apache-hive-2.3.9-bin.tar.gz -C /opt

cd /opt/

mv apache-hive-2.3.9-bin/ hive

Environment variables (/etc/profile):

```bash
#hive
export HIVE_HOME=/opt/hive
export PATH=$HIVE_HOME/bin:xxx   # xxx = the rest of the existing PATH entries
```

source /etc/profile

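echo $HIVE_HOME

Configuration: integrate with Hadoop

Set the HADOOP_HOME environment variable (already done earlier); verify it with echo $HADOOP_HOME.

Create Hive's directories on HDFS. This has to be done manually here; only CDH creates them automatically.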

```bash
hadoop fs -mkdir /tmp
hadoop fs -mkdir -p /user/hive/warehouse
hadoop fs -chmod g+w /tmp
hadoop fs -chmod g+w /user/hive/warehouse
```

hive-site.xml does not exist in conf, so create it yourself:

```xml
<configuration>
    <property>
        <name>hive.server2.enable.doAs</name>
        <value>false</value>
    </property>
    <property>
        <name>fs.hdfs.impl.disable.cache</name>
        <value>true</value>
    </property>
    <property>
        <name>fs.file.impl.disable.cache</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.server2.thrift.min.worker.threads</name>
        <value>5</value>
    </property>
    <property>
        <name>hive.server2.thrift.max.worker.threads</name>
        <value>500</value>
    </property>
    <property>
        <name>hive.server2.transport.mode</name>
        <value>binary</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.port</name>
        <value>10001</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.max.worker.threads</name>
        <value>500</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.min.worker.threads</name>
        <value>5</value>
    </property>
    <property>
        <name>hive.server2.thrift.http.path</name>
        <value>cliservice</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true&amp;allowPublicKeyRetrieval=true&amp;characterEncoding=UTF-8&amp;useSSL=false&amp;serverTimezone=GMT</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.cj.jdbc.Driver</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>hive</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>dcdcdc</value>
    </property>
    <property>
        <name>hive.metastore.schema.verification</name>
        <value>false</value>
    </property>
    <property>
        <name>hive.metastore.event.db.notification.api.auth</name>
        <value>false</value>
    </property>
    <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/user/hive/warehouse</value>
    </property>
    <property>
        <name>hive.cli.print.current.db</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.cli.print.header</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.server2.thrift.port</name>
        <value>10000</value>
    </property>
    <property>
        <name>hive.server2.thrift.bind.host</name>
        <value>localhost</value>
    </property>
    <property>
        <name>hive.metastore.uris</name>
        <value>thrift://localhost:9083</value>
    </property>
</configuration>
```
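One detail that is easy to miss when putting this URL into hive-site.xml: the file is XML, so every & between the JDBC options has to be written as &amp;, otherwise the configuration parser rejects the file. A small sketch that builds the URL with the options used above and escapes it for XML; metastore_jdbc_url and xml_escape are hypothetical helper names:

```shell
#!/usr/bin/env bash
# metastore_jdbc_url HOST PORT DB: hypothetical helper that builds the MySQL
# Connector/J URL used for javax.jdo.option.ConnectionURL in this post.
metastore_jdbc_url() {
  local opts='createDatabaseIfNotExist=true&allowPublicKeyRetrieval=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=GMT'
  printf 'jdbc:mysql://%s:%s/%s?%s\n' "$1" "$2" "$3" "$opts"
}

# xml_escape TEXT: escape &, < and > so TEXT can go inside <value>...</value>.
# '&' is replaced first, otherwise the '&' of '&lt;' would be escaped again.
xml_escape() {
  printf '%s\n' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Usage: print the <value> line to paste into hive-site.xml
#   printf '<value>%s</value>\n' "$(xml_escape "$(metastore_jdbc_url localhost 3306 metastore)")"
```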

Copy the MySQL 8.0 driver into lib:

```bash
cp mysql-connector-java-8.0.25.jar /opt/hive/lib/
```

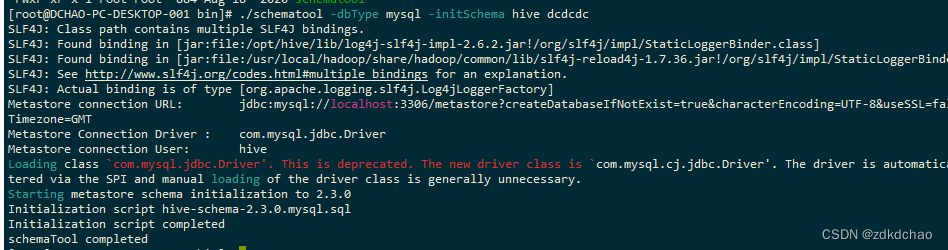

Initialize the metastore database

```bash
cd /opt/hive/bin
./schematool -dbType mysql -initSchema -userName hive -passWord dcdcdc
```

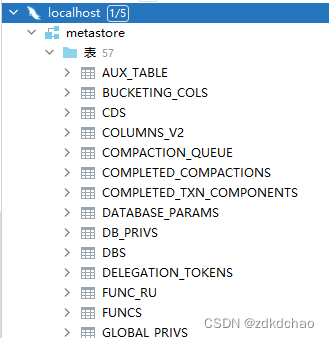

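The output above contains a warning (the same one also shows up as an error in the Hive CLI) saying that the MySQL driver class name has to be changed. After changing it in hive-site.xml, the error is gone. At this point the metastore database contains Hive's tables.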

Start the metastore and HS2 services

Pitfalls

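- I once saw strange behavior: Beeline could sometimes connect and sometimes not. I later realized that it worked whenever the Hive CLI was running, apparently because the Hive CLI brings up a metastore itself. In other words, the metastore service must be started before HS2.

- The Hive CLI and HS2 kept failing to start. I enabled the hive-log4j configuration under conf (copy hive-log4j2.properties.template to hive-log4j2.properties) and checked the log, which showed a Public Key Retrieval is not allowed error. This error is typical of MySQL 8.0; adding allowPublicKeyRetrieval=true to the MySQL URL in hive-site.xml fixes it.

- Be sure to enable hive-log4j in conf; the log contains the detailed errors. For example, when the metastore starts it may at first report connection refused. Leave it alone for a while and it connects, probably because connecting to MySQL takes some time.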

Hive CLI

For some reason the CLI works even without the metastore service running; perhaps starting the Hive CLI also starts a metastore automatically.

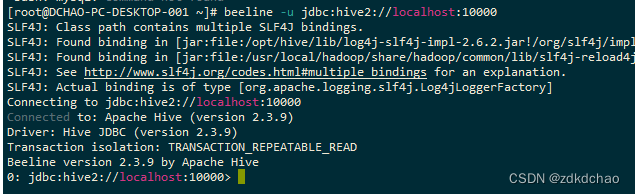

HS2 + Beeline

```bash
hiveserver2 &
beeline -u jdbc:hive2://localhost:10000
```

10. Summary

Services to start, in order:

/usr/sbin/sshd

/usr/local/hadoop/sbin/start-all.sh

/mysql/support-files/mysql.server start

hive --service metastore &

hiveserver2 &

Script:

/usr/sbin/sshd; /usr/local/hadoop/sbin/start-all.sh; /mysql/support-files/mysql.server start; hive --service metastore & hiveserver2 &
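The one-line script above can be expanded into a small script that also respects the ordering from the pitfalls section (metastore listening on 9083 before HS2 starts) and waits instead of tripping over an early connection refused. A minimal sketch under these assumptions: the paths and ports of this post, bash (it probes ports through the /dev/tcp pseudo-device), and logs written to /tmp. The file name start-bigdata.sh and all function names are hypothetical; with DRY_RUN=1 it only prints what it would do.

```shell
#!/usr/bin/env bash
# start-bigdata.sh (hypothetical name): start the WSL pseudo-distributed stack in the
# order used in this post. Preview only:  DRY_RUN=1 bash start-bigdata.sh start

run() {
  # Run a command in the foreground, or only print it when DRY_RUN=1
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run_bg() {
  # run_bg LOG CMD...: start a long-running service in the background, output to LOG
  local log="$1"
  shift
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $* > $log 2>&1 &"
  else
    nohup "$@" > "$log" 2>&1 &
  fi
}

wait_for_port() {
  # wait_for_port HOST PORT [TIMEOUT]: poll once per second until HOST:PORT accepts
  # a TCP connection, giving up after TIMEOUT seconds (default 120)
  local host="$1" port="$2" timeout="${3:-120}" waited=0
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ wait for $host:$port"
    return 0
  fi
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $host:$port" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}

start_all() {
  run /usr/sbin/sshd
  run /usr/local/hadoop/sbin/start-all.sh
  run /mysql/support-files/mysql.server start
  wait_for_port localhost 3306 || return 1
  # The metastore (thrift://localhost:9083 in hive-site.xml) must be up before HS2
  run_bg /tmp/metastore.log hive --service metastore
  wait_for_port localhost 9083 || return 1
  run_bg /tmp/hiveserver2.log hiveserver2
  # HS2 can take a while before it listens on 10000
  wait_for_port localhost 10000 || return 1
  echo "all services started"
}

# Only start services when explicitly asked, so the file can also be sourced
if [ "${1:-}" = "start" ]; then
  start_all
fi
```

Run it as root with bash start-bigdata.sh start. Polling the port rather than using a fixed sleep means HS2 starts as soon as the metastore is actually reachable.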

Verify:

jps

ps -ef | grep hive

netstat -anp | grep 10000

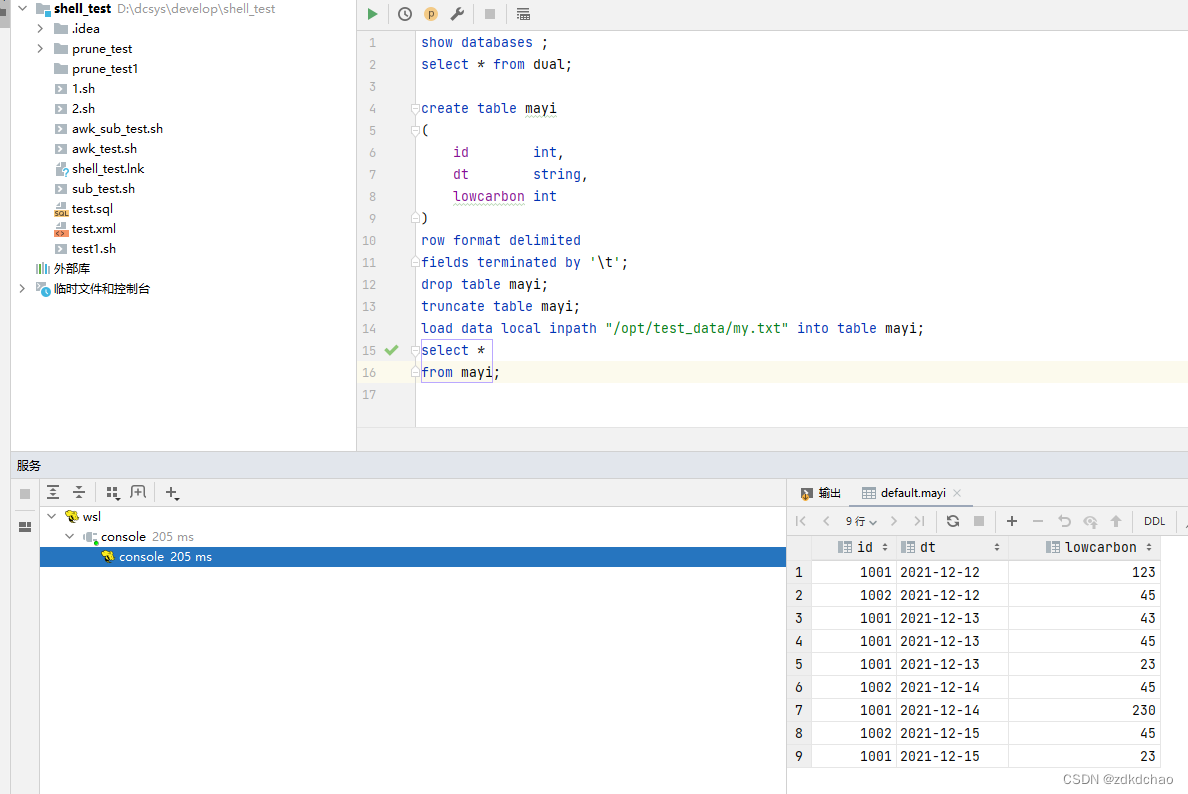

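11. Connecting to Hive in WSL from IntelliJ IDEA

The clients listed in the official documentation do not include IDEA, but IDEA is actually the most convenient: https://cwiki.apache.org/confluence/display/Hive/HiveServer2+Clients#HiveServer2Clients-JDBC

I tested both IDEA and SQuirreL SQL, and both worked.

I don't know exactly which driver jars are required, so I copied all the jars from $HIVE_HOME/lib and /usr/local/hadoop/share/hadoop/common/lib to a folder on the Windows side and used them as the driver files: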

cp $HIVE_HOME/lib/*.jar /mnt/d/dcsys/develop/test_env/idea_hive_jars

cp /usr/local/hadoop/share/hadoop/common/lib/*.jar /mnt/d/dcsys/develop/test_env/idea_hive_jars

For the driver class, select HiveDriver (org.apache.hive.jdbc.HiveDriver).

Most importantly, do not use localhost in the URL; use the IP address shown by ifconfig.
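To get the address for the IDE, the IP can be read inside WSL and turned into a HiveServer2 JDBC URL. A sketch assuming hostname -I lists the eth0 address first, HS2 on port 10000 as set in hive-site.xml, and the default database; wsl_ip and hive_jdbc_url are hypothetical helper names. If connecting by IP is refused, it may be worth checking that hive.server2.thrift.bind.host in hive-site.xml is not limiting HS2 to the loopback interface.

```shell
#!/usr/bin/env bash
# wsl_ip: first IPv4 address of this WSL instance (normally the eth0 address that
# ifconfig shows). Assumes `hostname -I` is available, as on CentOS.
wsl_ip() {
  hostname -I | awk '{print $1}'
}

# hive_jdbc_url HOST [PORT] [DB]: HiveServer2 JDBC URL for IDEA / SQuirreL SQL,
# used together with the driver class org.apache.hive.jdbc.HiveDriver.
hive_jdbc_url() {
  printf 'jdbc:hive2://%s:%s/%s\n' "$1" "${2:-10000}" "${3:-default}"
}

# Usage inside WSL: print the URL to paste into the IDE's data source dialog
#   hive_jdbc_url "$(wsl_ip)"
```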


- Original article: https://blog.csdn.net/qq_34224565/article/details/126065261