
Linux Kernel Assembly Code Analysis


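Being able to read the Linux kernel's assembly code helps a great deal in understanding the ARMv8 architecture and its instruction set. This article analyzes the stretch of assembly code that runs from the kernel's assembly entry point up to start_kernel(), the C-language entry point.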

1. Analysis of vmlinux.lds.S

1.2 Overall structure of vmlinux.lds.S

arch/arm64/kernel/vmlinux.lds.S:

```
OUTPUT_ARCH(aarch64)
ENTRY(_text)
SECTIONS
{
	. = KIMAGE_VADDR + TEXT_OFFSET;

	.text		/* code section */
	.rodata		/* read-only data section */
	.init		/* init section */
	.data		/* data section */
	.bss		/* BSS section */
}
```

- OUTPUT_ARCH(aarch64) specifies that the output is built for the aarch64 architecture.

- ENTRY(_text) sets the entry address to _text, which is defined in arch/arm64/kernel/head.S.

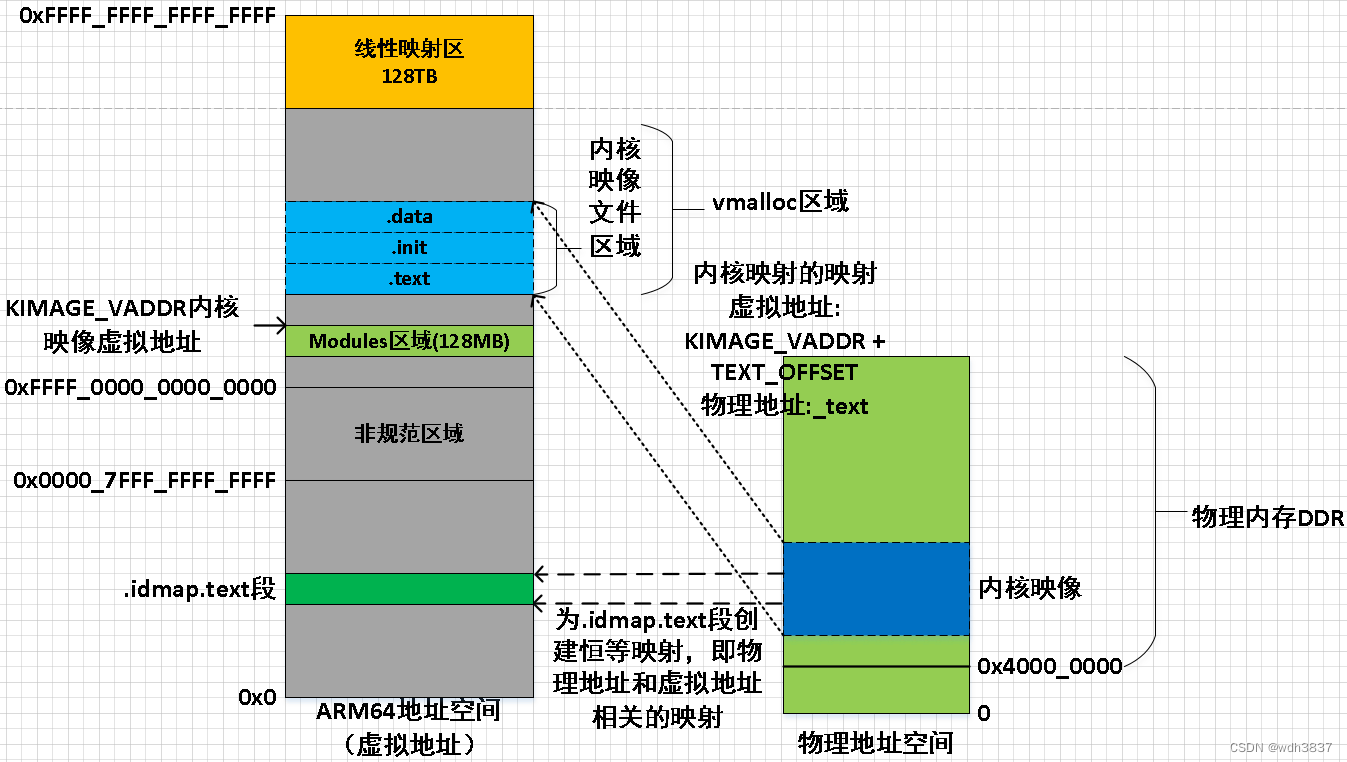

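- The KIMAGE_VADDR macro is the virtual address of the kernel image. It is defined in the arch/arm64/include/asm/memory.h header. With VA_BITS=48, KIMAGE_VADDR=0xFFFF_0000_1000_0000 and PAGE_OFFSET=0xFFFF_8000_0000_0000. These values match the layout used by kernels just before 5.4, and the System.map excerpt later in this article reflects the same layout. The macro definitions below come from a 5.4-or-later kernel, where the layout is flipped. With VA_BITS=48 and KASAN disabled, those definitions give PAGE_OFFSET=0xFFFF_0000_0000_0000 and KIMAGE_VADDR=0xFFFF_8000_1000_0000.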

```c
#define VA_BITS			(CONFIG_ARM64_VA_BITS)
#define _PAGE_OFFSET(va)	(-(UL(1) << (va)))
#define PAGE_OFFSET		(_PAGE_OFFSET(VA_BITS))
#define KIMAGE_VADDR		(MODULES_END)
#define BPF_JIT_REGION_START	(KASAN_SHADOW_END)
#define BPF_JIT_REGION_SIZE	(SZ_128M)
#define BPF_JIT_REGION_END	(BPF_JIT_REGION_START + BPF_JIT_REGION_SIZE)
#define MODULES_END		(MODULES_VADDR + MODULES_VSIZE)
#define MODULES_VADDR		(BPF_JIT_REGION_END)
#define MODULES_VSIZE		(SZ_128M)
```

- The TEXT_OFFSET macro is the offset at which the kernel image's code is placed in memory. It is defined in arch/arm64/Makefile.

```makefile
TEXT_OFFSET := 0x00080000
```

The kernel's link address is therefore KIMAGE_VADDR + TEXT_OFFSET = 0xFFFF_0000_1008_0000, which is also the start address of the kernel code.
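To make the arithmetic concrete, the sketch below evaluates the 5.4-style macros shown above in C. It rests on these assumptions:

- VA_BITS=48, so VA_BITS_MIN equals VA_BITS.
- CONFIG_KASAN=n, so KASAN_SHADOW_END is _PAGE_END(VA_BITS_MIN) = -(1 << (VA_BITS_MIN - 1)).
- TEXT_OFFSET=0x80000.

The helper names are illustrative and are not kernel functions. Under these assumptions the sketch yields the 5.4-era values, not the older ones quoted in the text.

```c
#include <assert.h>
#include <stdint.h>

#define SZ_128M      (UINT64_C(128) << 20)
#define TEXT_OFFSET  UINT64_C(0x00080000)

/* _PAGE_OFFSET(va) = -(1 << va): start of the linear map (5.4+ layout) */
static uint64_t page_offset(unsigned va_bits)
{
	assert(va_bits >= 36 && va_bits <= 48);
	return UINT64_C(0) - (UINT64_C(1) << va_bits);
}

/* _PAGE_END(va) = -(1 << (va - 1)): end of the linear map */
static uint64_t page_end(unsigned va_bits)
{
	assert(va_bits >= 36 && va_bits <= 48);
	return UINT64_C(0) - (UINT64_C(1) << (va_bits - 1));
}

/* KIMAGE_VADDR = MODULES_END, assuming CONFIG_KASAN=n */
static uint64_t kimage_vaddr(unsigned va_bits)
{
	uint64_t bpf_jit_start = page_end(va_bits);	/* KASAN_SHADOW_END */
	uint64_t bpf_jit_end   = bpf_jit_start + SZ_128M;
	uint64_t modules_vaddr = bpf_jit_end;
	uint64_t modules_end   = modules_vaddr + SZ_128M;
	return modules_end;
}

/* Link address of _text: KIMAGE_VADDR + TEXT_OFFSET */
static uint64_t kernel_link_address(unsigned va_bits)
{
	return kimage_vaddr(va_bits) + TEXT_OFFSET;
}
```

Under these assumptions, kernel_link_address(48) evaluates to 0xFFFF_8000_1008_0000.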

1.3 Code section

```
.head.text : {
	_text = .;
	HEAD_TEXT
}
.text : {			/* Real text segment */
	_stext = .;		/* Text and read-only data */
	...
	TEXT_TEXT
	...
	. = ALIGN(16);
	*(.got)			/* Global offset table */
}

. = ALIGN(SEGMENT_ALIGN);
_etext = .;			/* End of text section */
```

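The code section starts at _stext and ends at _etext. The _text symbol, defined just before it in .head.text, marks the start of the whole image.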

1.4 Read-only data section

```
RO_DATA(PAGE_SIZE)		/* everything from this point to     */
EXCEPTION_TABLE(8)		/* __init_begin will be marked RO NX */
NOTES

. = ALIGN(SEGMENT_ALIGN);
__init_begin = .;
```

RO_DATA() is a macro defined in the include/asm-generic/vmlinux.lds.h header:

```
#define RO_DATA(align)  RO_DATA_SECTION(align)

#define RO_DATA_SECTION(align)						\
	. = ALIGN((align));						\
	.rodata : AT(ADDR(.rodata) - LOAD_OFFSET) {			\
		VMLINUX_SYMBOL(__start_rodata) = .;			\
		*(.rodata) *(.rodata.*)					\
		...
```

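The definitions above show that the read-only data section is aligned to PAGE_SIZE. It starts at the __start_rodata marker and ends at the __init_begin marker. Among other things, it contains the system PGD page table swapper_pg_dir and reserved_ttbr0, a special page table used by the software emulation of PAN (Privileged Access Never).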

1.5 init section

```
__init_begin = .;
__inittext_begin = .;
...
. = ALIGN(PAGE_SIZE);
__inittext_end = .;
__initdata_begin = .;

.init.data : {
	INIT_DATA
	INIT_SETUP(16)
	INIT_CALLS
	CON_INITCALL
	INIT_RAM_FS
	*(.init.rodata.* .init.bss)	/* from the EFI stub */
}
.exit.data : {
	ARM_EXIT_KEEP(EXIT_DATA)
}
...
. = ALIGN(SEGMENT_ALIGN);
__initdata_end = .;
__init_end = .;
```

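The .init section holds code and data that are only needed during system initialization, for example the initcalls registered with core_initcall() or module_init(). The .init section starts at the __init_begin marker and ends at the __init_end marker.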

1.6 Data section

```
_data = .;
_sdata = .;
RW_DATA_SECTION(L1_CACHE_BYTES, PAGE_SIZE, THREAD_SIZE)
...
PECOFF_EDATA_PADDING
_edata = .;
```

The data section starts at the _sdata marker and ends at the _edata marker.

1.7 Uninitialized data section (BSS)

```
BSS_SECTION(0, 0, 0)

#define BSS_SECTION(sbss_align, bss_align, stop_align)			\
	. = ALIGN(sbss_align);						\
	VMLINUX_SYMBOL(__bss_start) = .;				\
	SBSS(sbss_align)						\
	BSS(bss_align)							\
	. = ALIGN(stop_align);						\
	VMLINUX_SYMBOL(__bss_stop) = .;
```

This section starts at the __bss_start marker and ends at the __bss_stop marker.
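The start and end markers above are plain linker symbols, and C code only uses their addresses. In the kernel, for example, asm-generic/sections.h declares `extern char _stext[], _etext[];`. You can try the same mechanism in an ordinary user-space program, because GNU ld's default Linux linker script defines `etext`, `edata` and `end` (see the end(3) man page). The sketch below assumes a Linux toolchain that provides these symbols. It also assumes that a zero-initialized global is placed in .bss and an initialized one in .data. The variable and function names are illustrative.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Boundary symbols provided by the default linker script (see end(3)). */
extern char etext, edata, end;

int bss_example;		/* zero-initialized: expected in .bss */
int data_example = 42;		/* initialized: expected in .data */

/* Returns 1 if p lies in [edata, end), i.e. in the BSS region. */
static int in_bss(const void *p)
{
	assert(p != NULL);
	uintptr_t a = (uintptr_t)p;
	return a >= (uintptr_t)&edata && a < (uintptr_t)&end;
}

static void print_layout(void)
{
	printf("etext = %p\n", (void *)&etext);
	printf("edata = %p\n", (void *)&edata);
	printf("end   = %p\n", (void *)&end);
	printf("bss_example in BSS:  %d\n", in_bss(&bss_example));
	printf("data_example in BSS: %d\n", in_bss(&data_example));
}
```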

1.8 Summary of the linker script

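| Name | Range | Contents |
| --- | --- | --- |
| Code section | _stext ~ _etext | Kernel code |
| Read-only data section | __start_rodata ~ __init_begin | Read-only data, including the PGD page table swapper_pg_dir and the special page table reserved_ttbr0 |
| init section | __init_begin ~ __init_end | Kernel initialization code and data |
| Data section | _sdata ~ _edata | Readable/writable data |
| BSS section | __bss_start ~ __bss_stop | Data initialized to 0, plus uninitialized global and static variables |

2. Boot assembly code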

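The bootloader performs the necessary initialization, such as initializing memory devices and disk devices and loading the kernel image to its run address. It then jumps to the Linux kernel's entry point.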

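ARMv8 processors support EL2 for the virtualization extensions and EL3 for the secure state. Firmware running at either of these exception levels can boot (hand off to) the Linux kernel, which itself runs at EL1. Broadly, the bootloader performs the following steps: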

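- Initialize physical memory.

- Set up the device tree.

- Decompress the kernel image and load it to the kernel's run address (optional).

- Jump to the kernel entry address.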

The comment at lines 48~62 of arch/arm64/kernel/head.S describes the kernel's conventions for its entry point:

```c
/*
 * Kernel startup entry point.
 * ---------------------------
 *
 * The requirements are:
 *   MMU = off, D-cache = off, I-cache = on or off,
 *   x0 = physical address to the FDT blob.
 *
 * This code is mostly position independent so you call this at
 * __pa(PAGE_OFFSET + TEXT_OFFSET).
 *
 * Note that the callee-saved registers are used for storing variables
 * that are useful before the MMU is enabled. The allocations are described
 * in the entry routines.
 */
```

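The conventions are:

- The MMU is off.

- The data cache is off.

- The instruction cache may be on or off.

- Register X0 holds the physical address of the device tree blob (FDT).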

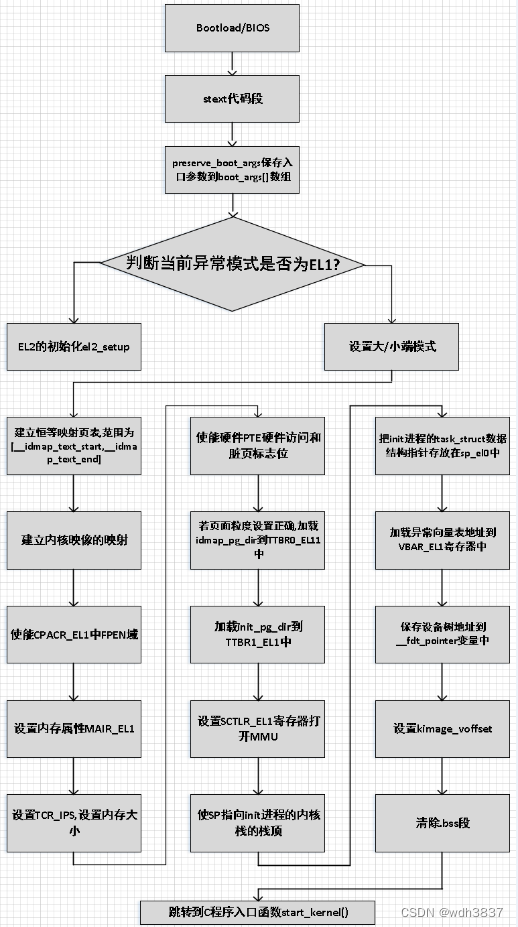

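stext calls the following important functions:

- preserve_boot_args: saves the boot arguments into the boot_args[] array.

- el2_setup: if the CPU entered at EL2, configures EL2 and switches to EL1 to run the Linux kernel.

- set_cpu_boot_mode_flag: sets the __boot_cpu_mode[] variable.

- __create_page_tables: creates the identity-mapping page tables and the kernel image page tables.

- __cpu_setup: performs processor-specific initialization in preparation for enabling the MMU.

- __primary_switch: enables the MMU and jumps (via __primary_switched) to start_kernel().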

2.1 preserve_boot_args

```asm
129 preserve_boot_args:
130         mov     x21, x0                 // x21=FDT
131
132         adr_l   x0, boot_args           // record the contents of
133         stp     x21, x1, [x0]           // x0 .. x3 at kernel entry
134         stp     x2, x3, [x0, #16]
135
136         dmb     sy                      // needed before dc ivac with
137                                         // MMU off
138
139         mov     x1, #0x20               // 4 x 8 bytes
140         b       __inval_dcache_area     // tail call
141 ENDPROC(preserve_boot_args)
```

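preserve_boot_args saves the four arguments X0~X3 passed by the bootloader into the boot_args[] array.

- Line 130: X0 holds the address of the device tree. Its value is first copied to X21 for safekeeping.

- Line 132: load the address of the boot_args[] array into X0.

- Lines 133~134: store the arguments X0~X3 into boot_args[]. X0 is stored through its copy in X21.

- Line 136: dmb sy is a full-system data memory barrier. It ensures the preceding stp stores are ordered before the dc ivac instructions that __inval_dcache_area executes later.

- Line 139: X1 is set to 0x20, i.e. 32 bytes. X0 and X1 are the arguments for the upcoming call to __inval_dcache_area: X0 is the start address of boot_args[] and X1 is its length.

- Line 140: tail-call __inval_dcache_area to invalidate the cache lines covering boot_args[]. Because the stores were made with the MMU and D-cache off, they went straight to memory. Invalidating the cache lines discards any stale copies, so later cached reads see the stored values.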
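As a rough illustration, the C sketch below models what preserve_boot_args records. model_preserve_boot_args() and boot_protocol_ok() are hypothetical names. The cache maintenance is left out because it has no meaningful C counterpart here. The zero check mirrors the "x1-x3 nonzero" warning that setup_arch() in arch/arm64/kernel/setup.c prints, because the arm64 boot protocol requires x1, x2 and x3 to be 0.

```c
#include <assert.h>
#include <stdint.h>

/* Four 64-bit slots: 4 x 8 = 0x20 bytes, the length passed in x1. */
static uint64_t boot_args[4];
static_assert(sizeof(boot_args) == 0x20, "boot_args must be 32 bytes");

/* Hypothetical model of preserve_boot_args: record x0..x3 at entry. */
static void model_preserve_boot_args(uint64_t x0, uint64_t x1,
				     uint64_t x2, uint64_t x3)
{
	boot_args[0] = x0;	/* physical address of the FDT */
	boot_args[1] = x1;	/* must be 0 per the boot protocol */
	boot_args[2] = x2;	/* must be 0 */
	boot_args[3] = x3;	/* must be 0 */
}

/* Same condition that triggers the warning in setup_arch(). */
static int boot_protocol_ok(void)
{
	return boot_args[1] == 0 && boot_args[2] == 0 && boot_args[3] == 0;
}
```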

2.2 el2_setup

el2_setup is implemented in arch/arm64/kernel/head.S.

```asm
489 ENTRY(el2_setup)
490         msr     SPsel, #1                       // We want to use SP_EL{1,2}
491         mrs     x0, CurrentEL
492         cmp     x0, #CurrentEL_EL2
493         b.eq    1f
494         mov_q   x0, (SCTLR_EL1_RES1 | ENDIAN_SET_EL1)
495         msr     sctlr_el1, x0
496         mov     w0, #BOOT_CPU_MODE_EL1          // This cpu booted in EL1
497         isb
498         ret
499
500 1:      mov_q   x0, (SCTLR_EL2_RES1 | ENDIAN_SET_EL2)
501         msr     sctlr_el2, x0
502         ...
```

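According to the kernel's entry conventions, the processor is in one of two states on entry: EL2, or non-secure EL1. el2_setup therefore checks which one applies and handles each case. The walkthrough below covers the case where the processor entered at non-secure EL1.

- Line 490: select the stack pointer of the current exception level. At this point the SP register is either SP_EL1 or SP_EL2.

- Line 491: read the current exception level. CurrentEL is a special register that returns the EL field of PSTATE, which holds the current exception level.

- Line 492: X0 now holds the current exception level; check whether it is EL2.

- Line 493: if the current level is EL2, branch to label 1.

- Line 494: reaching this line means the current exception level is EL1, so the next step is to configure the system control register SCTLR_EL1.

- Line 495: write SCTLR_EL1 to set the endianness. The EE field controls whether EL1 is big- or little-endian, and the E0E field does the same for EL0.

- Line 496: return BOOT_CPU_MODE_EL1 in W0 to record that this CPU booted at EL1. The macro is defined in the arch/arm64/include/asm/virt.h header.

- Line 497: SCTLR_EL1 was just modified to change the endianness, so an isb instruction is needed. The isb ensures the new SCTLR_EL1 settings take effect before any later instruction executes.

- Line 498: return with ret.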
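The EL check on lines 491~493 and the value returned in W0 can be modelled in C as shown below. CurrentEL keeps the exception level in bits [3:2]. The BOOT_CPU_MODE_* values (0xe11 and 0xe12) are the ones in asm/virt.h. The function names are illustrative only, and the sketch omits the EL2 configuration path entirely.

```c
#include <assert.h>
#include <stdint.h>

/* CurrentEL reports the exception level in bits [3:2]. */
#define CurrentEL_EL1		(UINT64_C(1) << 2)
#define CurrentEL_EL2		(UINT64_C(2) << 2)

/* Boot-mode markers as in arch/arm64/include/asm/virt.h */
#define BOOT_CPU_MODE_EL1	0xe11
#define BOOT_CPU_MODE_EL2	0xe12

/* Extract the exception level (0-3) from a CurrentEL value. */
static unsigned current_el(uint64_t currentel)
{
	assert((currentel & ~UINT64_C(0xC)) == 0);	/* other bits are RES0 */
	return (unsigned)((currentel >> 2) & 0x3);
}

/* Value el2_setup leaves in w0 (the EL2 setup work itself is omitted). */
static uint32_t boot_mode_from_currentel(uint64_t currentel)
{
	return current_el(currentel) == 2 ? BOOT_CPU_MODE_EL2 : BOOT_CPU_MODE_EL1;
}
```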

2.3 set_cpu_boot_mode_flag

```asm
656 set_cpu_boot_mode_flag:
657         adr_l   x1, __boot_cpu_mode
658         cmp     w0, #BOOT_CPU_MODE_EL2
659         b.ne    1f
660         add     x1, x1, #4
661 1:      str     w0, [x1]                // This CPU has booted in EL1
662         dmb     sy
663         dc      ivac, x1                // Invalidate potentially stale cache line
664         ret
665 ENDPROC(set_cpu_boot_mode_flag)
```

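set_cpu_boot_mode_flag sets the __boot_cpu_mode[] variable. The kernel uses this global array to record the exception level at which the processors booted. It is declared in the arch/arm64/include/asm/virt.h header; the storage itself is defined in head.S.

- Line 657: load the address of __boot_cpu_mode[] into X1.

- Line 658: check whether the argument W0 equals BOOT_CPU_MODE_EL2.

- Line 659: if not, the processor booted at EL1; branch to label 1.

- Line 660: otherwise the processor booted at EL2, so advance X1 by 4 bytes to point at __boot_cpu_mode[1].

- Line 661: W0 holds the exception level at which the processor booted. Store it into __boot_cpu_mode[0] for an EL1 boot, or into __boot_cpu_mode[1] for an EL2 boot.

- Line 662: the dmb instruction ensures the str above is observed before the memory accesses after it, including the cache maintenance on the next line.

- Line 663: invalidate the cache line that holds __boot_cpu_mode[].

- Line 664: return with ret.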
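Why does the array have two slots? head.S initializes __boot_cpu_mode[] to {BOOT_CPU_MODE_EL2, BOOT_CPU_MODE_EL1}, so an EL1 boot overwrites slot 0 and an EL2 boot overwrites slot 1. If every CPU booted at EL2, both slots end up as EL2. If the CPUs booted at different levels, the two slots differ. The C sketch below models this logic. The helpers mirror is_hyp_mode_available() and is_hyp_mode_mismatched() in asm/virt.h, but this is a simplified model, not the kernel code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BOOT_CPU_MODE_EL1	0xe11
#define BOOT_CPU_MODE_EL2	0xe12

/* Initial contents as in head.S: [0] = EL2, [1] = EL1. */
static uint32_t boot_cpu_mode[2] = { BOOT_CPU_MODE_EL2, BOOT_CPU_MODE_EL1 };

/* Model of set_cpu_boot_mode_flag: EL1 boots write slot 0, EL2 boots slot 1. */
static void model_set_cpu_boot_mode_flag(uint32_t mode)
{
	assert(mode == BOOT_CPU_MODE_EL1 || mode == BOOT_CPU_MODE_EL2);
	if (mode == BOOT_CPU_MODE_EL2)
		boot_cpu_mode[1] = mode;	/* the "add x1, x1, #4" path */
	else
		boot_cpu_mode[0] = mode;
}

/* True only if no CPU overwrote slot 0 and some CPU wrote EL2 into slot 1. */
static bool is_hyp_mode_available(void)
{
	return boot_cpu_mode[0] == BOOT_CPU_MODE_EL2 &&
	       boot_cpu_mode[1] == BOOT_CPU_MODE_EL2;
}

/* True if CPUs booted at different exception levels. */
static bool is_hyp_mode_mismatched(void)
{
	return boot_cpu_mode[0] != boot_cpu_mode[1];
}
```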

2.4 __create_page_tables

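To keep the boot code simple, the MMU is off when the kernel entry point is reached. With the MMU off, the caches cannot be used to full effect. At some point during initialization we therefore need to turn the MMU on and enable the data cache to get better performance. Turning on the MMU, however, takes care, or unexpected problems will occur.

- With the MMU off, every address the processor accesses is a physical address. Once the MMU is on, the addresses it accesses become virtual addresses.

- Most modern processors are pipelined and prefetch several instructions ahead. By the time the MMU is turned on, the processor has already prefetched a number of instructions, and it fetched them by physical address. Once the instruction that enables the MMU completes, the MMU takes effect. From then on, instructions are fetched by virtual address, which the MMU must translate into a physical address. The problem is therefore to make sure the processor can keep fetching instructions without interruption across the moment the MMU is turned on.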

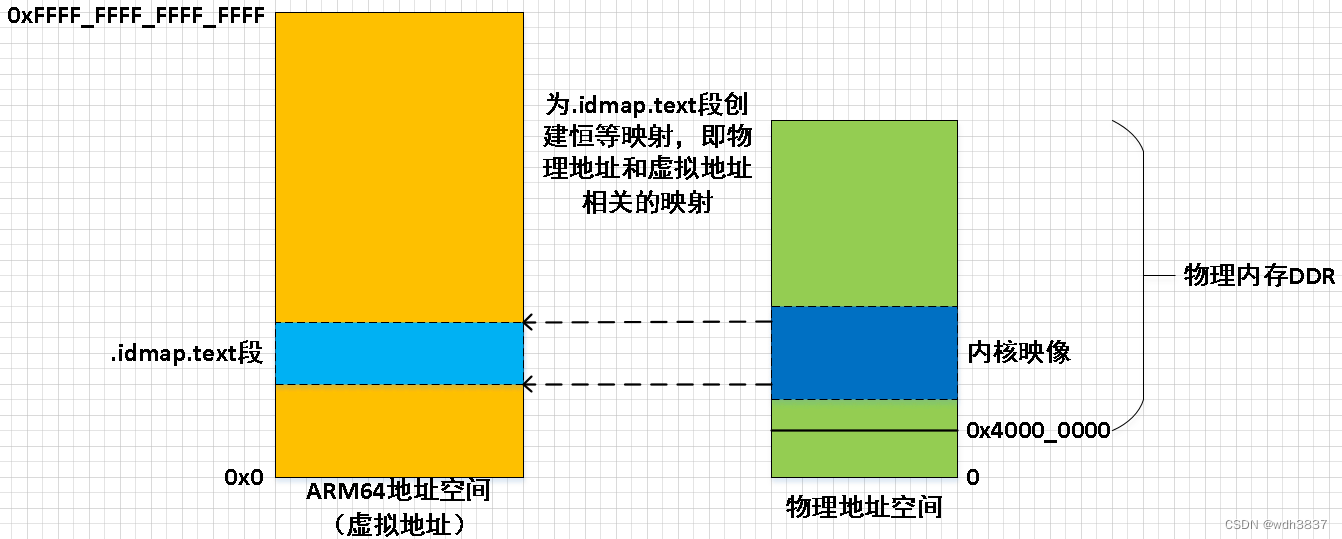

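- The trick is to first create a mapping in which virtual addresses equal physical addresses, called an identity mapping, before turning on the MMU. This neatly solves the problem above. Note that the identity mapping created here covers only a small range. It only needs to span the .idmap.text section, which holds the code that runs while the MMU is being switched on, and it is mapped with 2MB blocks.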

2.4.1 Creating the identity mapping

- The identity mapping is created by the __create_page_tables assembly function in arch/arm64/kernel/head.S.

```asm
279 __create_page_tables:
280         mov     x28, lr
281
282         /*
283          * Invalidate the init page tables to avoid potential dirty cache lines
284          * being evicted. Other page tables are allocated in rodata as part of
285          * the kernel image, and thus are clean to the PoC per the boot
286          * protocol.
287          */
288         adrp    x0, init_pg_dir
289         adrp    x1, init_pg_end
290         sub     x1, x1, x0
291         bl      __inval_dcache_area
292
293         /*
294          * Clear the init page tables.
295          */
296         adrp    x0, init_pg_dir
297         adrp    x1, init_pg_end
298         sub     x1, x1, x0
299 1:      stp     xzr, xzr, [x0], #16
300         stp     xzr, xzr, [x0], #16
301         stp     xzr, xzr, [x0], #16
302         stp     xzr, xzr, [x0], #16
303         subs    x1, x1, #64
304         b.ne    1b
305
306         mov     x7, SWAPPER_MM_MMUFLAGS
307
308         /*
309          * Create the identity mapping.
310          */
311         adrp    x0, idmap_pg_dir
312         adrp    x3, __idmap_text_start          // __pa(__idmap_text_start)
313
314 #ifdef CONFIG_ARM64_VA_BITS_52
315         mrs_s   x6, SYS_ID_AA64MMFR2_EL1
316         and     x6, x6, #(0xf << ID_AA64MMFR2_LVA_SHIFT)
317         mov     x5, #52
318         cbnz    x6, 1f
319 #endif
320         mov     x5, #VA_BITS_MIN
321 1:
322         adr_l   x6, vabits_actual
323         str     x5, [x6]
324         dmb     sy
325         dc      ivac, x6                // Invalidate potentially stale cache line
326
327         /*
328          * VA_BITS may be too small to allow for an ID mapping to be created
329          * that covers system RAM if that is located sufficiently high in the
330          * physical address space. So for the ID map, use an extended virtual
331          * range in that case, and configure an additional translation level
332          * if needed.
333          *
334          * Calculate the maximum allowed value for TCR_EL1.T0SZ so that the
335          * entire ID map region can be mapped. As T0SZ == (64 - #bits used),
336          * this number conveniently equals the number of leading zeroes in
337          * the physical address of __idmap_text_end.
338          */
339         adrp    x5, __idmap_text_end
340         clz     x5, x5
341         cmp     x5, TCR_T0SZ(VA_BITS)   // default T0SZ small enough?
342         b.ge    1f                      // .. then skip VA range extension
343
344         adr_l   x6, idmap_t0sz
345         str     x5, [x6]
346         dmb     sy
347         dc      ivac, x6                // Invalidate potentially stale cache line
348
349 #if (VA_BITS < 48)
350 #define EXTRA_SHIFT     (PGDIR_SHIFT + PAGE_SHIFT - 3)
351 #define EXTRA_PTRS      (1 << (PHYS_MASK_SHIFT - EXTRA_SHIFT))
352
353         /*
354          * If VA_BITS < 48, we have to configure an additional table level.
355          * First, we have to verify our assumption that the current value of
356          * VA_BITS was chosen such that all translation levels are fully
357          * utilised, and that lowering T0SZ will always result in an additional
358          * translation level to be configured.
359          */
360 #if VA_BITS != EXTRA_SHIFT
361 #error "Mismatch between VA_BITS and page size/number of translation levels"
362 #endif
363
364         mov     x4, EXTRA_PTRS
365         create_table_entry x0, x3, EXTRA_SHIFT, x4, x5, x6
366 #else
367         /*
368          * If VA_BITS == 48, we don't have to configure an additional
369          * translation level, but the top-level table has more entries.
370          */
371         mov     x4, #1 << (PHYS_MASK_SHIFT - PGDIR_SHIFT)
372         str_l   x4, idmap_ptrs_per_pgd, x5
373 #endif
374 1:
375         ldr_l   x4, idmap_ptrs_per_pgd
376         mov     x5, x3                          // __pa(__idmap_text_start)
377         adr_l   x6, __idmap_text_end            // __pa(__idmap_text_end)
378
379         map_memory x0, x1, x3, x6, x7, x3, x4, x10, x11, x12, x13, x14
```

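- Line 280: save the value of LR in X28, because the bl calls that follow overwrite LR.

- Lines 288~289: load init_pg_dir and init_pg_end into X0 and X1, and line 290 computes the size in X1. init_pg_dir and init_pg_end are defined in the vmlinux.lds.S linker script, and the page table between them is INIT_DIR_SIZE bytes.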

arch/arm64/kernel/vmlinux.lds.S:

```
. = ALIGN(PAGE_SIZE);
init_pg_dir = .;
. += INIT_DIR_SIZE;
init_pg_end = .;
```

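- Line 291: call __inval_dcache_area to invalidate the cache lines covering the init_pg_dir page tables. The kernel-space page table mappings will be built here shortly, so the cache lines for the init page tables are invalidated first.

- Lines 296~304: set the contents of the init page tables to 0, 64 bytes per loop iteration.

- Line 306: the SWAPPER_MM_MMUFLAGS macro describes the attributes of the block (section) mappings. It is defined in the kernel-pgtable.h header.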

arch/arm64/include/asm/kernel-pgtable.h:

```c
#define SWAPPER_PMD_FLAGS	(PMD_TYPE_SECT | PMD_SECT_AF | PMD_SECT_S)
#define SWAPPER_MM_MMUFLAGS	(PMD_ATTRINDX(MT_NORMAL) | SWAPPER_PMD_FLAGS)
```

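- The memory type is Normal memory. PMD_TYPE_SECT marks the entry as a block mapping, PMD_SECT_AF sets the block's Access Flag, and PMD_SECT_S sets the block's shareability attribute.

- Line 311: load the physical address of idmap_pg_dir into X0. idmap_pg_dir is the page table for the identity mapping, and it is defined in the vmlinux.lds.S linker script.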

```
. = ALIGN(PAGE_SIZE);
idmap_pg_dir = .;
. += IDMAP_DIR_SIZE;
idmap_pg_end = .;
```

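- The size reserved for the idmap_pg_dir page table is IDMAP_DIR_SIZE, defined in the arch/arm64/include/asm/kernel-pgtable.h header. It is typically 3 contiguous 4KB pages. The 3 physical pages hold the PGD, PUD and PMD tables, one page per level. Note that the mapping built here uses 2MB blocks, not 4KB pages.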

arch/arm64/include/asm/kernel-pgtable.h:

```c
#define IDMAP_DIR_SIZE		(IDMAP_PGTABLE_LEVELS * PAGE_SIZE)
```

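- Line 312: __idmap_text_start is the start address of the .idmap.text section. Besides enabling the MMU at boot, several other places in the kernel need the identity mapping, for example cpu_do_resume, which is used when a processor resumes.

- Line 320: VA_BITS_MIN is the minimum virtual address width the kernel supports. It is derived from CONFIG_ARM64_VA_BITS, and with the default 48-bit configuration it equals VA_BITS, i.e. 48.

- Lines 322~323: store that value in the global variable vabits_actual.

- Line 324: insert a memory barrier instruction.

- Line 325: invalidate the cache line that holds vabits_actual.

- Line 339: load the physical address of __idmap_text_end into X5.

- Line 340: clz (count leading zeros) counts the number of 0 bits before the first 1 bit.

- Lines 341~342: compare that count with the default T0SZ, TCR_T0SZ(VA_BITS) = 64 - VA_BITS. This checks whether __idmap_text_end lies within the range that VA_BITS can address. If it does (the count is at least the default T0SZ), branch to label 1, i.e. line 374.

- Line 375: load idmap_ptrs_per_pgd into X4. Its value equals PTRS_PER_PGD, the number of entries in the PGD table.

- Line 376: X5 holds the address of __idmap_text_start.

- Line 377: X6 holds the address of __idmap_text_end.

- Line 379: invoke the map_memory macro to build the page tables for the mapping.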
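You can check the leading-zero trick on lines 339~342 with the short C sketch below. It assumes a GCC or Clang toolchain for __builtin_clzll, the C counterpart of clz. idmap_t0sz_for() is a hypothetical helper that returns the T0SZ the ID map would use. It ignores the extra translation level that the real code may also configure.

```c
#include <assert.h>
#include <stdint.h>

/* T0SZ = 64 - number of VA bits translated through TTBR0. */
#define TCR_T0SZ(va_bits)	(64u - (unsigned)(va_bits))

/*
 * Hypothetical model of lines 339-347: the number of leading zeros in the
 * physical address of __idmap_text_end is the largest T0SZ whose VA range
 * still reaches that address.
 */
static unsigned idmap_t0sz_for(uint64_t idmap_text_end_pa, unsigned va_bits)
{
	assert(idmap_text_end_pa != 0);	/* __builtin_clzll(0) is undefined */
	unsigned lz = (unsigned)__builtin_clzll(idmap_text_end_pa);

	if (lz >= TCR_T0SZ(va_bits))
		return TCR_T0SZ(va_bits);	/* default range is large enough */
	return lz;				/* extend the ID map VA range */
}
```

For example, if .idmap.text ends near physical 0x405da700, a 48-bit configuration keeps the default T0SZ of 16. If RAM sits at bit 42 in a 39-bit configuration, T0SZ drops to 21, which gives a 43-bit range.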

2.4.1.1 map_memory

The map_memory macro is also implemented in arch/arm64/kernel/head.S.

```asm
245         .macro map_memory, tbl, rtbl, vstart, vend, flags, phys, pgds, istart, iend, tmp, count, sv
246         add \rtbl, \tbl, #PAGE_SIZE
247         mov \sv, \rtbl
248         mov \count, #0
249         compute_indices \vstart, \vend, #PGDIR_SHIFT, \pgds, \istart, \iend, \count
250         populate_entries \tbl, \rtbl, \istart, \iend, #PMD_TYPE_TABLE, #PAGE_SIZE, \tmp
251         mov \tbl, \sv
252         mov \sv, \rtbl
253
254 #if SWAPPER_PGTABLE_LEVELS > 3
255         compute_indices \vstart, \vend, #PUD_SHIFT, #PTRS_PER_PUD, \istart, \iend, \count
256         populate_entries \tbl, \rtbl, \istart, \iend, #PMD_TYPE_TABLE, #PAGE_SIZE, \tmp
257         mov \tbl, \sv
258         mov \sv, \rtbl
259 #endif
260
261 #if SWAPPER_PGTABLE_LEVELS > 2
262         compute_indices \vstart, \vend, #SWAPPER_TABLE_SHIFT, #PTRS_PER_PMD, \istart, \iend, \count
263         populate_entries \tbl, \rtbl, \istart, \iend, #PMD_TYPE_TABLE, #PAGE_SIZE, \tmp
264         mov \tbl, \sv
265 #endif
266
267         compute_indices \vstart, \vend, #SWAPPER_BLOCK_SHIFT, #PTRS_PER_PTE, \istart, \iend, \count
268         bic \count, \phys, #SWAPPER_BLOCK_SIZE - 1
269         populate_entries \tbl, \count, \istart, \iend, \flags, #SWAPPER_BLOCK_SIZE, \tmp
270         .endm
```

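- map_memory takes 12 parameters. The main ones are described below; the others are temporaries.

  - tbl: start address of the page table, here idmap_pg_dir.

  - rtbl: start address of the next-level page table, normally tbl + PAGE_SIZE.

  - vstart: start of the virtual address range to map, here __idmap_text_start.

  - vend: end of the virtual address range to map, here __idmap_text_end.

  - flags: attributes for the last-level entries.

  - phys: physical address that the range maps to. Here the physical start address is __idmap_text_start.

  - pgds: number of entries in the PGD table.

- Line 246: rtbl points to the start of the next-level page table. As mentioned earlier, the identity mapping covers only a small range, so a single PGD entry is enough. In addition, the PUD and PMD tables for that entry are allocated in advance and sit directly after the PGD table, each occupying one 4KB physical page. tbl + PAGE_SIZE is therefore the base address of the PUD table.

- Line 249: call the compute_indices macro to compute the indices of the virtual addresses vstart and vend in the table at the current level.

- Line 250: call the populate_entries macro to fill in the page table entries.

- Lines 249~250 thus fill in the entries of the first-level table, the PGD.

- Lines 262~263 fill in the next level. In the common configuration of 4KB pages with 48-bit VAs, SWAPPER_PGTABLE_LEVELS is 3 and SWAPPER_TABLE_SHIFT equals PUD_SHIFT, so these are PUD entries.

- Lines 267~269 fill in the last level. These are 2MB block entries in the PMD table (SWAPPER_BLOCK_SHIFT = PMD_SHIFT), starting at phys rounded down to a block boundary.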

2.4.1.2 compute_indices

Here is the implementation of the compute_indices macro:

```asm
210         .macro compute_indices, vstart, vend, shift, ptrs, istart, iend, count
211         lsr     \iend, \vend, \shift
212         mov     \istart, \ptrs
213         sub     \istart, \istart, #1
214         and     \iend, \iend, \istart   // iend = (vend >> shift) & (ptrs - 1)
215         mov     \istart, \ptrs
216         mul     \istart, \istart, \count
217         add     \iend, \iend, \istart   // iend += (count - 1) * ptrs
218                                         // our entries span multiple tables
219
220         lsr     \istart, \vstart, \shift
221         mov     \count, \ptrs
222         sub     \count, \count, #1
223         and     \istart, \istart, \count
224
225         sub     \count, \iend, \istart
226         .endm
```

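- compute_indices takes 7 parameters. The main ones are:

  - vstart: start virtual address.

  - vend: end virtual address.

  - shift: bit position in the virtual address where the index for this table level begins.

  - ptrs: number of entries per table at this level.

  - istart: index of vstart in the page table.

  - iend: index of vend in the page table.

  - count: on input, the number of extra tables at this level, carried over from the previous level. On output, iend - istart.

- The main job of compute_indices is to compute the index of vstart and vend in the page table at each level and store the results in istart and iend. An index is computed as index = (vstart >> shift) & (ptrs - 1). If the range spans several tables at this level, lines 215~217 also add count * ptrs to iend. Indices beyond ptrs - 1 then fall into the next, adjacent table.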
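A direct C translation of the macro may make the index arithmetic easier to follow. compute_indices() here is a hypothetical C model, not kernel code. count is passed by pointer because the macro both reads and updates it. The test values assume that .idmap.text occupies physical 0x405da000~0x405da700 and that each table has 512 entries.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical C model of the compute_indices macro.
 * On entry *count holds the number of extra tables at this level (0 for
 * the first level); on exit it holds iend - istart for the next level.
 */
static void compute_indices(uint64_t vstart, uint64_t vend, unsigned shift,
			    uint64_t ptrs, uint64_t *istart, uint64_t *iend,
			    uint64_t *count)
{
	assert(ptrs != 0 && (ptrs & (ptrs - 1)) == 0);	/* power of two */

	*iend = (vend >> shift) & (ptrs - 1);
	*iend += *count * ptrs;		/* entries may span several tables */
	*istart = (vstart >> shift) & (ptrs - 1);
	*count = *iend - *istart;
}
```

For 0x405da000, the three levels (shifts 39, 30 and 21) give PGD index 0, PUD index 1 and PMD index 2. A range such as 0x3FF00000~0x40100000 crosses a 1GB boundary. It yields PMD indices 511~512, and index 512 lands in entry 0 of the adjacent table.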

2.4.1.3 populate_entries

- Next, here is the implementation of the populate_entries macro.

```asm
182         .macro populate_entries, tbl, rtbl, index, eindex, flags, inc, tmp1
183 .Lpe\@: phys_to_pte \tmp1, \rtbl
184         orr     \tmp1, \tmp1, \flags    // tmp1 = table entry
185         str     \tmp1, [\tbl, \index, lsl #3]
186         add     \rtbl, \rtbl, \inc      // rtbl = pa next level
187         add     \index, \index, #1
188         cmp     \index, \eindex
189         b.ls    .Lpe\@
190         .endm
```

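- populate_entries takes the following 7 parameters.

  - tbl: base address of the page table.

  - rtbl: base address of the next-level page table. At the last level, it is the physical address of the first block.

  - index: index of vstart in the page table.

  - eindex: index of vend in the page table.

  - flags: attributes of the page table entries.

  - inc: the amount added to rtbl for each successive entry. It is PAGE_SIZE for table entries and SWAPPER_BLOCK_SIZE for block entries.

  - tmp1: temporary.

- Line 183: the phys_to_pte macro converts the next-level table address in rtbl into descriptor format and places the result in tmp1.

- Line 184: OR in the entry attributes. For example, PMD_TYPE_TABLE marks the entry as a table descriptor that points to the next level.

- Line 185: write the new entry tmp1 into the table pointed to by tbl, using index to address it. Each entry is 8 bytes, so the index is scaled with "lsl #3".

- Line 186: advance rtbl to the address for the next entry.

- Line 187: increment index by one.

- Lines 188~189: compare index with eindex and loop while index <= eindex. As a result, entries index through eindex (inclusive) are filled in.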
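The loop can be modelled in C as shown below. populate_entries() here is a hypothetical model. It assumes that phys_to_pte is the identity, which holds unless 52-bit physical addresses are configured. It takes the descriptor bit values and MT_NORMAL = 4 from the kernel version discussed here, where Normal memory is index 4 in the MAIR table in section 2.5. With those values, SWAPPER_MM_MMUFLAGS works out to 0x711.

```c
#include <assert.h>
#include <stdint.h>

#define PMD_TYPE_TABLE		(UINT64_C(3) << 0)
#define PMD_TYPE_SECT		(UINT64_C(1) << 0)
#define PMD_SECT_S		(UINT64_C(3) << 8)	/* inner shareable */
#define PMD_SECT_AF		(UINT64_C(1) << 10)	/* access flag */
#define PMD_ATTRINDX(t)		((uint64_t)(t) << 2)
#define MT_NORMAL		4	/* assumed MAIR index of Normal memory */

#define SWAPPER_PMD_FLAGS	(PMD_TYPE_SECT | PMD_SECT_AF | PMD_SECT_S)
#define SWAPPER_MM_MMUFLAGS	(PMD_ATTRINDX(MT_NORMAL) | SWAPPER_PMD_FLAGS)

#define PAGE_SIZE		UINT64_C(0x1000)
#define SWAPPER_BLOCK_SIZE	UINT64_C(0x200000)	/* 2MB blocks */

/*
 * Hypothetical C model of populate_entries: write entries index..eindex
 * (inclusive) of tbl, each pointing inc bytes beyond the previous one.
 */
static void populate_entries(uint64_t *tbl, uint64_t rtbl, uint64_t index,
			     uint64_t eindex, uint64_t flags, uint64_t inc)
{
	assert(index <= eindex);
	do {
		tbl[index] = rtbl | flags;	/* str tmp1, [tbl, index, lsl #3] */
		rtbl += inc;			/* next-level table or next block */
		index++;
	} while (index <= eindex);		/* b.ls */
}
```

The PMD case in the test corresponds to lines 268~269: the physical start is rounded down to a 2MB boundary, and each successive entry maps the next 2MB block.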

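The analysis above shows that on line 379 of __create_page_tables, map_memory creates an identity mapping. It maps the virtual addresses of the .idmap.text section onto identical physical addresses. The resulting page tables in idmap_pg_dir are shown in the figure below, in which physical memory starts at 0x4000_0000.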

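2.4.1.4 Members of the .idmap.text section

Which functions are linked into .idmap.text? The identity-mapped region starts at __idmap_text_start and ends at __idmap_text_end, so the System.map file shows which functions lie in the .idmap.text section: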

```
ffff0000105da000 t __idmap_text_start
ffff0000105da000 t kimage_vaddr
ffff0000105da008 t el2_setup
ffff0000105da060 t set_hcr
ffff0000105da130 t install_el2_stub
ffff0000105da184 t set_cpu_boot_mode_flag
ffff0000105da1a8 t secondary_holding_pen
ffff0000105da1cc t pen
ffff0000105da1e0 t secondary_entry
ffff0000105da1ec t secondary_startup
ffff0000105da204 t __secondary_switched
ffff0000105da240 t __secondary_too_slow
ffff0000105da24c t __enable_mmu
ffff0000105da2a4 t __cpu_secondary_check52bitva
ffff0000105da2a8 t __no_granule_support
ffff0000105da2cc t __relocate_kernel
ffff0000105da314 t __primary_switch
ffff0000105da388 t cpu_resume
ffff0000105da3a8 t __cpu_soft_restart
ffff0000105da3e8 t cpu_do_resume
ffff0000105da478 t idmap_cpu_replace_ttbr1
ffff0000105da4ac t __idmap_kpti_flag
ffff0000105da4b0 t idmap_kpti_install_ng_mappings
ffff0000105da4ec t do_pgd
ffff0000105da504 t next_pgd
ffff0000105da514 t skip_pgd
ffff0000105da554 t walk_puds
ffff0000105da55c t next_pud
ffff0000105da560 t walk_pmds
ffff0000105da568 t do_pmd
ffff0000105da580 t next_pmd
ffff0000105da590 t skip_pmd
ffff0000105da5a0 t walk_ptes
ffff0000105da5a8 t do_pte
ffff0000105da5cc t skip_pte
ffff0000105da5dc t __idmap_kpti_secondary
ffff0000105da624 t __cpu_setup
ffff0000105da700 t __idmap_text_end
```

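- The listing shows that the boot-related assembly functions __enable_mmu, __primary_switch and __cpu_setup are in the .idmap.text section. The functions related to system resume, including cpu_resume and cpu_do_resume, are there too.

- Line 476 of head.S contains a ".section" assembler directive, which places the code after line 476 into the .idmap.text section. "awx" marks the section as allocatable, writable and executable.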

arch/arm64/kernel/head.S:

```asm
476         .section ".idmap.text","awx"
```
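You can also automate the lookup above. The sketch below is a hypothetical helper, not an existing kernel tool. It parses System.map-style text with one "address type name" entry per line and finds the addresses of two marker symbols. It then prints every symbol whose address lies in [start, end), except the start marker itself. If either marker is missing from the input, it returns -1.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Copy the line at p into buf (without '\n'); return the next line or NULL. */
static const char *next_line(const char *p, char *buf, size_t size)
{
	const char *nl = strchr(p, '\n');
	size_t len = nl ? (size_t)(nl - p) : strlen(p);

	if (len >= size)
		len = size - 1;
	memcpy(buf, p, len);
	buf[len] = '\0';
	return nl ? nl + 1 : NULL;
}

/* Parse one "address type name" line; returns 1 on success. */
static int parse_line(const char *line, unsigned long long *addr, char *name)
{
	char type;
	return sscanf(line, "%llx %c %127s", addr, &type, name) == 3;
}

/* Look up the address of symbol sym; returns 1 if found. */
static int find_sym(const char *map, const char *sym, unsigned long long *addr)
{
	char buf[256], name[128];
	unsigned long long a;

	for (const char *p = map; p && *p; ) {
		p = next_line(p, buf, sizeof buf);
		if (parse_line(buf, &a, name) && strcmp(name, sym) == 0) {
			*addr = a;
			return 1;
		}
	}
	return 0;
}

/* Print the symbols in [start_sym, end_sym) and return how many there are. */
static int list_between(const char *map, const char *start_sym, const char *end_sym)
{
	char buf[256], name[128];
	unsigned long long lo, hi, a;
	int n = 0;

	assert(map && start_sym && end_sym);
	if (!find_sym(map, start_sym, &lo) || !find_sym(map, end_sym, &hi))
		return -1;

	for (const char *p = map; p && *p; ) {
		p = next_line(p, buf, sizeof buf);
		if (parse_line(buf, &a, name) && a >= lo && a < hi &&
		    strcmp(name, start_sym) != 0) {
			printf("%s\n", name);
			n++;
		}
	}
	return n;
}
```

To use it, read System.map into a string and call list_between(map, "__idmap_text_start", "__idmap_text_end"). On the full listing above it would print the 36 symbols from kimage_vaddr to __cpu_setup.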

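2.4.2 Creating the page table mapping for the kernel image

Finally, let us look at the page table mapping for the kernel image by continuing through the implementation of __create_page_tables.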

```asm
384         adrp    x0, init_pg_dir
385         mov_q   x5, KIMAGE_VADDR + TEXT_OFFSET  // compile time __va(_text)
386         add     x5, x5, x23                     // add KASLR displacement
387         mov     x4, PTRS_PER_PGD
388         adrp    x6, _end                        // runtime __pa(_end)
389         adrp    x3, _text                       // runtime __pa(_text)
390         sub     x6, x6, x3                      // _end - _text
391         add     x6, x6, x5                      // runtime __va(_end)
392
393         map_memory x0, x1, x5, x6, x7, x3, x4, x10, x11, x12, x13, x14
394
395         /*
396          * Since the page tables have been populated with non-cacheable
397          * accesses (MMU disabled), invalidate those tables again to
398          * remove any speculatively loaded cache lines.
399          */
400         dmb     sy
401
402         adrp    x0, idmap_pg_dir
403         adrp    x1, idmap_pg_end
404         sub     x1, x1, x0
405         bl      __inval_dcache_area
406
407         adrp    x0, init_pg_dir
408         adrp    x1, init_pg_end
409         sub     x1, x1, x0
410         bl      __inval_dcache_area
411
412         ret     x28
413 ENDPROC(__create_page_tables)
```

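- Line 384: load the base address of the init page table, init_pg_dir, into X0.

- Line 385: the virtual address at which the kernel image will be mapped is KIMAGE_VADDR + TEXT_OFFSET.

- Line 386: add the KASLR displacement to the kernel image's virtual address. X23 holds this displacement, which stext derives from __PHYS_OFFSET; it is 0 when KASLR is not in use.

- Lines 388~389: the kernel image's physical start address is _text and its end address is _end.

- Lines 390~391: compute the image size, and from it the virtual end address of the kernel image.

- Line 393: invoke the map_memory macro to build the page table mapping, with the following arguments:

  - Kernel page table base: init_pg_dir.

  - Virtual start address: KIMAGE_VADDR + TEXT_OFFSET, held in X5.

  - Virtual end address: held in X6.

  - Physical start address: _text, held in X3.

  - Block entry attributes: held in X7 (SWAPPER_MM_MMUFLAGS).

- Lines 400~410: the page tables were written with non-cacheable accesses because the MMU is off. The code therefore invalidates the cache lines covering both idmap_pg_dir and init_pg_dir again to remove any speculatively loaded lines. Line 412 then returns through X28.

- In summary, __create_page_tables creates two mappings, as shown in the figure below: an identity mapping for the .idmap.text section and a mapping for the kernel image.

- The figure may raise a question: why create two page tables here instead of one?

- The reason is that ARM64 processors have two page table base registers, TTBR0 and TTBR1. When the upper bits of a virtual address are all 0 (bit 63 is 0), the processor uses the page table pointed to by TTBR0. When they are all 1 (bit 63 is 1), it uses the page table pointed to by TTBR1.

- If physical addresses had bit 63 set, could a single page table base register be enough? In practice, physical memory on ARM64 SoCs generally starts at a low address, and memory is never large enough for bit 63 of a physical address to be 1. The identity mapping therefore lies in the lower 256TB of the address space and goes through TTBR0. When the kernel image is mapped into kernel space (the upper 256TB), bit 63 of the virtual address is 1, so TTBR1 is used.

- For this reason __create_page_tables creates two page tables, one for TTBR0 and one for TTBR1. The __enable_mmu function later loads both of them into the processor.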
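You can express the TTBR selection rule as a small C function. It is a simplified model that assumes T0SZ = T1SZ = 64 - va_bits and that top-byte-ignore (TBI) is disabled. The real TCR setup in section 2.5 does enable TBI0 for user addresses. ttbr_select() is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Which translation table base register translates va?
 * Returns 0 for TTBR0_EL1, 1 for TTBR1_EL1, -1 for a translation fault.
 * Assumes T0SZ == T1SZ == 64 - va_bits and no top-byte-ignore.
 */
static int ttbr_select(uint64_t va, unsigned va_bits)
{
	assert(va_bits >= 36 && va_bits <= 52);

	uint64_t top = va >> va_bits;			/* bits [63:va_bits] */
	uint64_t all_ones = (UINT64_C(1) << (64 - va_bits)) - 1;

	if (top == 0)
		return 0;	/* lower range, e.g. the identity map */
	if (top == all_ones)
		return 1;	/* upper range, e.g. the kernel image */
	return -1;		/* in neither range: translation fault */
}
```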

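2.5 __cpu_setup

__cpu_setup performs the processor-specific initialization that must be done before the MMU is enabled; it does not enable the MMU itself. It is implemented in arch/arm64/mm/proc.S.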

arch/arm64/mm/proc.S:

```asm
422         .pushsection ".idmap.text", "awx"
423 ENTRY(__cpu_setup)
424         tlbi    vmalle1                         // Invalidate local TLB
425         dsb     nsh
426
427         mov     x0, #3 << 20
428         msr     cpacr_el1, x0                   // Enable FP/ASIMD
429         mov     x0, #1 << 12                    // Reset mdscr_el1 and disable
430         msr     mdscr_el1, x0                   // access to the DCC from EL0
431         isb                                     // Unmask debug exceptions now,
432         enable_dbg                              // since this is per-cpu
433         reset_pmuserenr_el0 x0                  // Disable PMU access from EL0
434         reset_amuserenr_el0 x0                  // Disable AMU access from EL0
435
436         /*
437          * Memory region attributes for LPAE:
438          *
439          *   n = AttrIndx[2:0]
440          *                      n       MAIR
441          *   DEVICE_nGnRnE      000     00000000
442          *   DEVICE_nGnRE       001     00000100
443          *   DEVICE_GRE         010     00001100
444          *   NORMAL_NC          011     01000100
445          *   NORMAL             100     11111111
446          *   NORMAL_WT          101     10111011
447          */
448         ldr     x5, =MAIR(0x00, MT_DEVICE_nGnRnE) | \
449                      MAIR(0x04, MT_DEVICE_nGnRE) | \
450                      MAIR(0x0c, MT_DEVICE_GRE) | \
451                      MAIR(0x44, MT_NORMAL_NC) | \
452                      MAIR(0xff, MT_NORMAL) | \
453                      MAIR(0xbb, MT_NORMAL_WT)
454         msr     mair_el1, x5
455         /*
456          * Prepare SCTLR
457          */
458         mov_q   x0, SCTLR_EL1_SET
459         /*
460          * Set/prepare TCR and TTBR. We use 512GB (39-bit) address range for
461          * both user and kernel.
462          */
463         ldr     x10, =TCR_TxSZ(VA_BITS) | TCR_CACHE_FLAGS | TCR_SMP_FLAGS | \
464                         TCR_TG_FLAGS | TCR_KASLR_FLAGS | TCR_ASID16 | \
465                         TCR_TBI0 | TCR_A1 | TCR_KASAN_FLAGS
466         tcr_clear_errata_bits x10, x9, x5
467
468 #ifdef CONFIG_ARM64_VA_BITS_52
469         ldr_l           x9, vabits_actual
470         sub             x9, xzr, x9
471         add             x9, x9, #64
472         tcr_set_t1sz    x10, x9
473 #else
474         ldr_l           x9, idmap_t0sz
475 #endif
476         tcr_set_t0sz    x10, x9
477
478         /*
479          * Set the IPS bits in TCR_EL1.
480          */
481         tcr_compute_pa_size x10, #TCR_IPS_SHIFT, x5, x6
482 #ifdef CONFIG_ARM64_HW_AFDBM
483         /*
484          * Enable hardware update of the Access Flags bit.
485          * Hardware dirty bit management is enabled later,
486          * via capabilities.
487          */
488         mrs     x9, ID_AA64MMFR1_EL1
489         and     x9, x9, #0xf
490         cbz     x9, 1f
491         orr     x10, x10, #TCR_HA               // hardware Access flag update
492 1:
493 #endif  /* CONFIG_ARM64_HW_AFDBM */
494         msr     tcr_el1, x10
495         ret                                     // return to head.S
496 ENDPROC(__cpu_setup)
```

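- Line 422: .pushsection places __cpu_setup in the .idmap.text section. "idmap" stands for identity map, the mapping in which virtual and physical addresses are equal.

- Line 424: invalidate all local TLB entries for EL1.

- Line 425: dsb nsh waits until the TLB invalidation above has completed.

- Lines 427~428: set the FPEN field (bits [21:20]) of CPACR_EL1, the Architectural Feature Access Control Register, to 0b11. This lets EL0 and EL1 access the floating-point and SIMD units without trapping.

- Lines 429~430: reset MDSCR_EL1, the Monitor Debug System Control Register, and set its TDCC field (bit 12). With TDCC set, EL0 accesses to the Debug Communication Channel (DCC) registers trap to EL1.

- Line 431: isb ensures that the system register changes above have taken effect.

- Line 432: the enable_dbg macro clears the debug mask bit (D) in PSTATE, which unmasks debug exceptions on this CPU. The macro is defined in the arch/arm64/include/asm/assembler.h header.

- Line 433: the reset_pmuserenr_el0 macro disables EL0 access to the PMU. It is defined in the arch/arm64/include/asm/assembler.h header.

- Line 434: the reset_amuserenr_el0 macro disables EL0 access to the AMU (Activity Monitors Unit). It is defined in the arch/arm64/include/asm/assembler.h header.

- Lines 436~454: set up the system's memory attributes. Linux uses only 6 memory attribute types: DEVICE_nGnRnE, DEVICE_nGnRE, DEVICE_GRE, NORMAL_NC, NORMAL and NORMAL_WT. They are written to MAIR_EL1, the Memory Attribute Indirection Register.

- Line 458: the SCTLR_EL1_SET macro combines several fields of the system control register SCTLR_EL1. It is defined in the arch/arm64/include/asm/sysreg.h header.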

```c
#define SCTLR_EL1_SET	(SCTLR_ELx_M    | SCTLR_ELx_C    | SCTLR_ELx_SA   |\
			 SCTLR_EL1_SA0  | SCTLR_EL1_SED  | SCTLR_ELx_I    |\
			 SCTLR_EL1_DZE  | SCTLR_EL1_UCT  |\
			 SCTLR_EL1_NTWE | SCTLR_ELx_IESB | SCTLR_EL1_SPAN |\
			 ENDIAN_SET_EL1 | SCTLR_EL1_UCI  | SCTLR_EL1_RES1)
```

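- SCTLR_EL1 is the register that controls the system as a whole. Here the combined value of its fields is loaded into X0. __cpu_setup does not write this value to the processor. Instead, it hands the value in X0 to the next function, __enable_mmu.

- Lines 463~465: prepare the TCR value in X10.

- Lines 474~476: set the T0SZ field of TCR from idmap_t0sz.

- Line 481: the tcr_compute_pa_size macro sets the IPS field of TCR. IPS sets the size of the physical address space, from 4GB (32 bits) up to 4PB (52 bits). The kernel configures the maximum physical address width it supports through CONFIG_ARM64_PA_BITS; at 48 bits, up to 256TB of memory is supported. The PARange field of ID_AA64MMFR0_EL1, a read-only register, reports the maximum physical address width the hardware supports. The smaller of these two values is written to the IPS field of TCR.

- Lines 482~493: CONFIG_ARM64_HW_AFDBM enables hardware updates of the access flag and dirty state, a hardware feature introduced in ARMv8.1. In TCR, the HD field enables hardware updates of the dirty state and the HA field enables hardware updates of the access flag. With this feature enabled, the hardware sets the AF field of the PTE automatically when a physical page is accessed, instead of software emulating it through an access-flag fault. The HAFDBS field of ID_AA64MMFR1_EL1 tells whether the hardware supports the feature: 0 means no support, 1 means access flag updates are supported, and 2 means both are supported. If the field is nonzero, TCR_HA is set in the TCR value to enable hardware access flag updates.

- Line 494: write TCR_EL1.

- Line 495: return with ret.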
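The MAIR_EL1 value built on lines 448~453 and the IPS selection on line 481 both come down to simple bit arithmetic, sketched in C below. MAIR(attr, idx) places an 8-bit attribute in byte idx, as the kernel macro does. The index enum follows the comment on lines 436~447. The helper functions are hypothetical. pa_bits_from_parange() uses the architectural PARange encodings, from 0 = 32 bits up to 6 = 52 bits.

```c
#include <assert.h>
#include <stdint.h>

/* MAIR_EL1 holds eight 8-bit attributes; MAIR(attr, idx) puts attr in byte idx. */
#define MAIR(attr, idx)	((uint64_t)(attr) << ((idx) * 8))

/* AttrIndx values, following the comment on lines 436-447 */
enum {
	MT_DEVICE_nGnRnE = 0, MT_DEVICE_nGnRE = 1, MT_DEVICE_GRE = 2,
	MT_NORMAL_NC = 3, MT_NORMAL = 4, MT_NORMAL_WT = 5,
};

#define MAIR_EL1_VALUE	(MAIR(0x00, MT_DEVICE_nGnRnE) | MAIR(0x04, MT_DEVICE_nGnRE) | \
			 MAIR(0x0c, MT_DEVICE_GRE)    | MAIR(0x44, MT_NORMAL_NC)    | \
			 MAIR(0xff, MT_NORMAL)        | MAIR(0xbb, MT_NORMAL_WT))

/* Attribute byte selected by a descriptor's AttrIndx[2:0] (bits [4:2]). */
static uint8_t mair_attr_for_desc(uint64_t desc)
{
	unsigned idx = (unsigned)((desc >> 2) & 0x7);
	return (uint8_t)(MAIR_EL1_VALUE >> (idx * 8));
}

/* PARange / IPS encoding -> physical address width in bits. */
static unsigned pa_bits_from_parange(unsigned parange)
{
	static const unsigned bits[] = { 32, 36, 40, 42, 44, 48, 52 };

	assert(parange < sizeof(bits) / sizeof(bits[0]));
	return bits[parange];
}

/* IPS = min(hardware PARange, the kernel's configured maximum). */
static unsigned tcr_ips(unsigned hw_parange, unsigned kernel_max_parange)
{
	return hw_parange < kernel_max_parange ? hw_parange : kernel_max_parange;
}
```

For instance, a block descriptor carrying SWAPPER_MM_MMUFLAGS (AttrIndx = 4) selects the 0xff byte, which is Normal write-back cacheable memory. Similarly, hardware that reports a 52-bit PARange under a 48-bit kernel configuration ends up with a 48-bit IPS.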

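2.6 __primary_switch

- The CONFIG_RANDOMIZE_BASE option (KASLR) makes the kernel remap the kernel image to a randomized virtual address at boot, which hinders attacks. In early kernels the kernel image was mapped to a fixed virtual address in kernel space, which made it easy for attackers to exploit. To keep things simple, assume CONFIG_RANDOMIZE_BASE is disabled.

- Note that __primary_switch and __enable_mmu are both linked into the .idmap.text section. Once the identity mapping is in place, their virtual and physical addresses are therefore numerically identical.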

```asm
952 __primary_switch:
953 #ifdef CONFIG_RANDOMIZE_BASE
954         mov     x19, x0                         // preserve new SCTLR_EL1 value
955         mrs     x20, sctlr_el1                  // preserve old SCTLR_EL1 value
956 #endif
957
958         adrp    x1, init_pg_dir
959         bl      __enable_mmu
960 #ifdef CONFIG_RELOCATABLE
961 #ifdef CONFIG_RELR
962         mov     x24, #0                         // no RELR displacement yet
963 #endif
964         bl      __relocate_kernel
965 #ifdef CONFIG_RANDOMIZE_BASE
966         ldr     x8, =__primary_switched
967         adrp    x0, __PHYS_OFFSET
968         blr     x8
969
970         /*
971          * If we return here, we have a KASLR displacement in x23 which we need
972          * to take into account by discarding the current kernel mapping and
973          * creating a new one.
974          */
975         pre_disable_mmu_workaround
976         msr     sctlr_el1, x20                  // disable the MMU
977         isb
978         bl      __create_page_tables            // recreate kernel mapping
979
980         tlbi    vmalle1                         // Remove any stale TLB entries
981         dsb     nsh
982
983         msr     sctlr_el1, x19                  // re-enable the MMU
984         isb
985         ic      iallu                           // flush instructions fetched
986         dsb     nsh                             // via old mapping
987         isb
988
989         bl      __relocate_kernel
990 #endif
991 #endif
992         ldr     x8, =__primary_switched
993         adrp    x0, __PHYS_OFFSET
994         br      x8
995 ENDPROC(__primary_switch)
```

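- Line 958: load the PGD of the init page table, init_pg_dir, into X1.

- Line 959: call __enable_mmu. The function takes two arguments. The first is the SCTLR_EL1 value that __cpu_setup left in X0, and the second is the address of the init page table's PGD.

- Line 992: load the address of __primary_switched into X8.

- Line 993: load the value of __PHYS_OFFSET into X0.

- Line 994: branch to __primary_switched and run it. This is where execution moves to the kernel image's link addresses. ldr x8, =__primary_switched loads the link-time (virtual) address of the symbol from a literal pool. Until this point the code has been running at physical addresses, relying on the identity mapping once the MMU is on. The bootloader (for example U-Boot) enters stext at its physical address, not at its link address.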

2.6.1 __enable_mmu

arch/arm64/kernel/head.S:

```asm
790 ENTRY(__enable_mmu)
791         mrs     x2, ID_AA64MMFR0_EL1
792         ubfx    x2, x2, #ID_AA64MMFR0_TGRAN_SHIFT, 4
793         cmp     x2, #ID_AA64MMFR0_TGRAN_SUPPORTED
794         b.ne    __no_granule_support
795         update_early_cpu_boot_status 0, x2, x3
796         adrp    x2, idmap_pg_dir
797         phys_to_ttbr x1, x1
798         phys_to_ttbr x2, x2
799         msr     ttbr0_el1, x2                   // load TTBR0
800         offset_ttbr1 x1, x3
801         msr     ttbr1_el1, x1                   // load TTBR1
802         isb
803         msr     sctlr_el1, x0
804         isb
805         /*
806          * Invalidate the local I-cache so that any instructions fetched
807          * speculatively from the PoC are discarded, since they may have
808          * been dynamically patched at the PoU.
809          */
810         ic      iallu
811         dsb     nsh
812         isb
813         ret
814 ENDPROC(__enable_mmu)
```

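As mentioned above, __enable_mmu takes two arguments. X0 holds the SCTLR_EL1 value, and X1 holds the base address of the init page table's PGD.

- Lines 791~794: the ID_AA64MMFR0_EL1 register records which translation granules the system supports: 4KB, 16KB or 64KB pages. The code reads this register to get the supported granule and compares it with the one configured in the kernel, for example CONFIG_ARM64_4K_PAGES. If the granule is not supported, it branches to __no_granule_support.

- Line 795: set the global variable __early_cpu_boot_status to 0.

- Line 796: load the identity mapping's PGD, idmap_pg_dir, into X2.

- Lines 797~801: just before turning on the MMU, load the identity-mapping page table idmap_pg_dir into TTBR0_EL1. Once the MMU is on, TTBR0 is changed on every process switch to point to the real process address space. The base address of the init page table's PGD goes into TTBR1_EL1, because all kernel-mode code shares one address space, and that address space is the init page table.

- Line 802: isb ensures the page table base register writes above have taken effect.

- Line 803: write SCTLR_EL1. Its M field (SCTLR_ELx_M) enables the MMU.

- Line 804: isb ensures the MMU is on before any later instruction executes.

- Line 810: invalidate all instruction caches on the local CPU.

- Lines 811~812: insert barrier instructions. The MMU is on at this point. isb flushes the instructions in the pipeline so that the processor fetches them again, this time accessing memory by virtual address.

- Line 813: return with ret.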
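The granule check on lines 791~794 extracts a 4-bit field with ubfx and compares it with the value that means "supported". The C sketch below mirrors that logic. It assumes the ID_AA64MMFR0_EL1 field layout used by this kernel version. TGran4 is in bits [31:28] and TGran64 in bits [27:24], where 0 means supported. TGran16 is in bits [23:20], where 1 means supported. ubfx() and granule_supported() are hypothetical helpers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Field positions in ID_AA64MMFR0_EL1 and the values meaning "supported". */
#define ID_AA64MMFR0_TGRAN4_SHIFT	28
#define ID_AA64MMFR0_TGRAN64_SHIFT	24
#define ID_AA64MMFR0_TGRAN16_SHIFT	20
#define ID_AA64MMFR0_TGRAN4_SUPPORTED	0x0
#define ID_AA64MMFR0_TGRAN64_SUPPORTED	0x0
#define ID_AA64MMFR0_TGRAN16_SUPPORTED	0x1

/* C equivalent of "ubfx xd, xn, #lsb, #width" (unsigned bitfield extract). */
static uint64_t ubfx(uint64_t x, unsigned lsb, unsigned width)
{
	assert(width > 0 && width < 64 && lsb + width <= 64);
	return (x >> lsb) & ((UINT64_C(1) << width) - 1);
}

/* Mirrors "cmp x2, #ID_AA64MMFR0_TGRAN_SUPPORTED; b.ne __no_granule_support". */
static bool granule_supported(uint64_t mmfr0, unsigned shift, unsigned supported)
{
	return ubfx(mmfr0, shift, 4) == supported;
}
```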

2.6.2 __primary_switched

```asm
421 __primary_switched:
422         adrp    x4, init_thread_union
423         add     sp, x4, #THREAD_SIZE
424         adr_l   x5, init_task
425         msr     sp_el0, x5                      // Save thread_info
426
427         adr_l   x8, vectors                     // load VBAR_EL1 with virtual
428         msr     vbar_el1, x8                    // vector table address
429         isb
430
431         stp     xzr, x30, [sp, #-16]!
432         mov     x29, sp
433
434 #ifdef CONFIG_SHADOW_CALL_STACK
435         adr_l   x18, init_shadow_call_stack     // Set shadow call stack
436 #endif
437
438         str_l   x21, __fdt_pointer, x5          // Save FDT pointer
439
440         ldr_l   x4, kimage_vaddr                // Save the offset between
441         sub     x4, x4, x0                      // the kernel virtual and
442         str_l   x4, kimage_voffset, x5          // physical mappings
443
444         // Clear BSS
445         adr_l   x0, __bss_start
446         mov     x1, xzr
447         adr_l   x2, __bss_stop
448         sub     x2, x2, x0
449         bl      __pi_memset
450         dsb     ishst                           // Make zero page visible to PTW
451
452 #ifdef CONFIG_KASAN
453         bl      kasan_early_init
454 #endif
455 #ifdef CONFIG_RANDOMIZE_BASE
456         tst     x23, ~(MIN_KIMG_ALIGN - 1)      // already running randomized?
457         b.ne    0f
458         mov     x0, x21                         // pass FDT address in x0
459         bl      kaslr_early_init                // parse FDT for KASLR options
460         cbz     x0, 0f                          // KASLR disabled? just proceed
461         orr     x23, x23, x0                    // record KASLR offset
462         ldp     x29, x30, [sp], #16             // we must enable KASLR, return
463         ret                                     // to __primary_switch()
464 0:
465 #endif
466         add     sp, sp, #16
467         mov     x29, #0
468         mov     x30, #0
469         b       start_kernel
470 ENDPROC(__primary_switched)
```

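__primary_switched takes one argument in X0, __PHYS_OFFSET, whose value is KERNEL_START - TEXT_OFFSET.

- Line 422: load the address of init_thread_union into X4. init_thread_union is a thread_union structure, and it holds the kernel stack of the system's first task, init_task (PID 0).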

```c
/* include/linux/sched/task.h */
extern union thread_union init_thread_union;

/* include/linux/sched.h */
union thread_union {
#ifndef CONFIG_ARCH_TASK_STRUCT_ON_STACK
	struct task_struct task;
#endif
#ifndef CONFIG_THREAD_INFO_IN_TASK
	struct thread_info thread_info;
#endif
	unsigned long stack[THREAD_SIZE/sizeof(long)];
};
```

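thread_union is stored in the data section of the kernel image.

- Line 423: point SP at the top of this kernel stack. The THREAD_SIZE macro is the size of the kernel stack.

- Line 424: init_task is the task_struct of the initial task, and the kernel initializes it statically: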

init/init_task.c:

```c
struct task_struct init_task = {
	.state		= 0,
	.stack		= init_stack,
	.usage		= REFCOUNT_INIT(2),
	.flags		= PF_KTHREAD,
	.prio		= MAX_PRIO - 20,
	.static_prio	= MAX_PRIO - 20,
	.normal_prio	= MAX_PRIO - 20,
	...
};
```

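- Line 425: load the address of the task's task_struct into SP_EL0. Newer kernels, such as the 5.x kernels discussed here, changed how the current task's task_struct instance is obtained. In kernel mode the ARM64 processor runs at EL1 and uses SP_EL1 as its stack pointer, so SP_EL0 is otherwise unused there. Keeping the pointer to the current task_struct in SP_EL0 is a simple and efficient solution.

- Lines 427~428: load the address of the EL1 exception vector table (vectors) into VBAR_EL1.

- Line 429: isb ensures that the vector table setting has taken effect.

- Line 431: xzr is the zero register, whose value is 0, and X30 is LR. The pre-indexed stp first moves SP down by 16 bytes. It then stores 0 at the top of the kernel stack minus 16 bytes and LR at the top minus 8 bytes. The result is the first frame record: a zero frame pointer followed by the return address.

- Line 432: copy the value of SP into X29, the frame pointer.

- Line 438: save the device tree pointer, which X21 has held since preserve_boot_args, to the __fdt_pointer variable.

- Lines 440~442: save the offset between the kernel image's virtual and physical addresses (kimage_vaddr - __PHYS_OFFSET) to kimage_voffset.

- Lines 445~450: clear the uninitialized data section (BSS) with __pi_memset. dsb ishst then makes the zeroed memory visible to the page table walker.

- Lines 466~469: restore SP to the top of the kernel stack and clear X29 and X30. The code then branches to the C entry function start_kernel() and runs it.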
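The kernel later uses the offset stored on lines 440~442 to convert kernel-image virtual addresses back to physical ones; the kernel's __kimg_to_phys() subtracts kimage_voffset. The C sketch below reproduces the arithmetic with hypothetical helper names. The example values assume the pre-5.4 KIMAGE_VADDR of 0xFFFF_0000_1000_0000 quoted earlier and a kernel loaded at physical 0x4008_0000, which makes __PHYS_OFFSET 0x4000_0000. The 2MB alignment check reflects the boot protocol rule that the image sits TEXT_OFFSET bytes above a 2MB-aligned base.

```c
#include <assert.h>
#include <stdint.h>

#define SZ_2M	(UINT64_C(2) << 20)

/* Lines 440-442: kimage_voffset = kimage_vaddr - __PHYS_OFFSET (mod 2^64). */
static uint64_t compute_kimage_voffset(uint64_t kimage_vaddr, uint64_t phys_offset)
{
	assert((phys_offset & (SZ_2M - 1)) == 0);	/* 2MB-aligned load base */
	return kimage_vaddr - phys_offset;		/* unsigned wrap-around is intended */
}

/* Kernel-image VA -> PA, the same subtraction as __kimg_to_phys(). */
static uint64_t kimg_to_phys(uint64_t va, uint64_t kimage_voffset)
{
	return va - kimage_voffset;
}

/* PA -> kernel-image VA, the inverse mapping. */
static uint64_t phys_to_kimg(uint64_t pa, uint64_t kimage_voffset)
{
	return pa + kimage_voffset;
}
```

Under these assumptions, __idmap_text_start at virtual 0xFFFF_0000_105D_A000 (from the System.map listing) corresponds to physical 0x405D_A000.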

3. Kernel boot flow chart

Reference: 奔跑吧Linux内核 (Run Linux Kernel)


- Original article: https://blog.csdn.net/weixin_39247141/article/details/125854744