
Training YOLOv5 on Your Own VOC Dataset

1. YOLOv5 dataset requirements

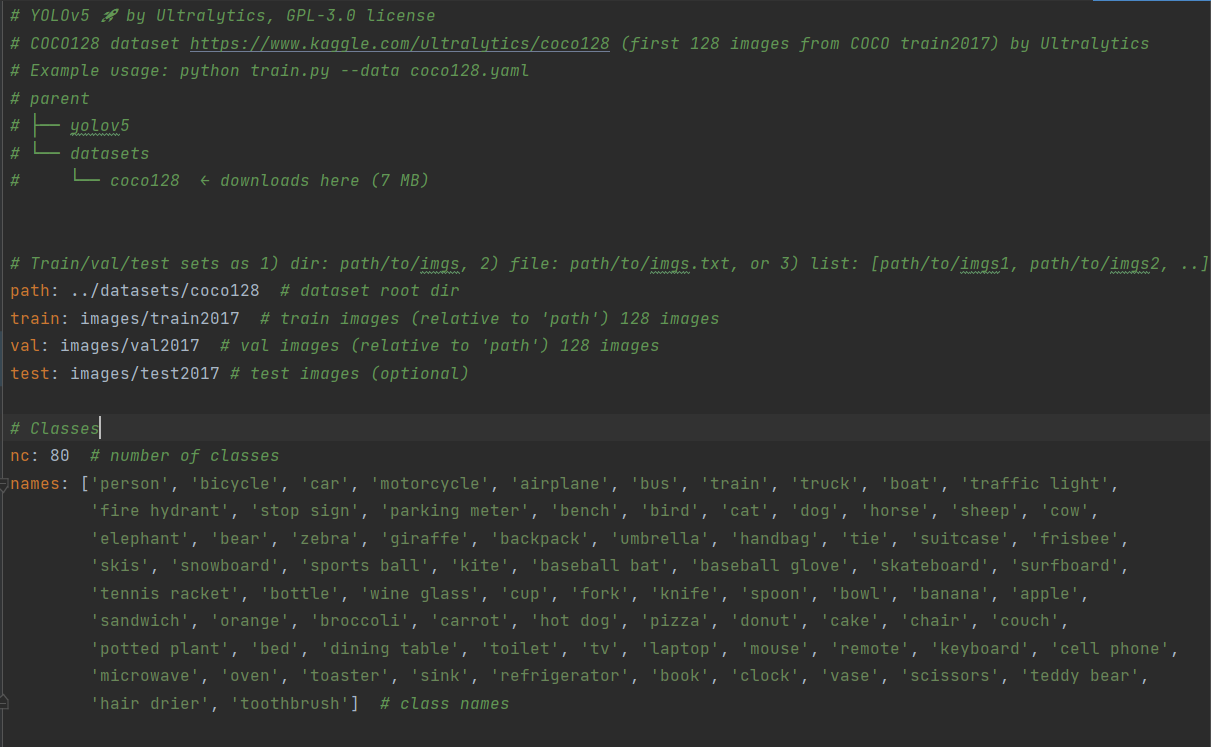

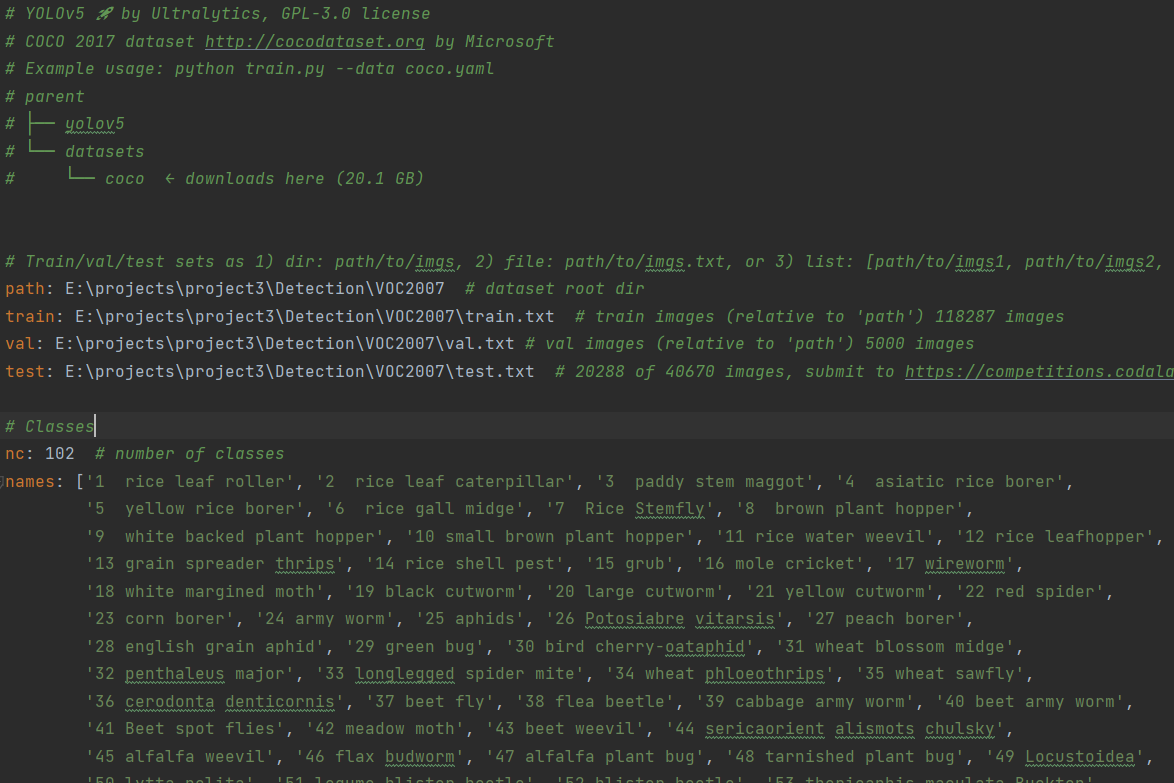

Looking at YOLOv5's config files coco.yaml and coco128.yaml, a YOLOv5 dataset can be organized in two ways:

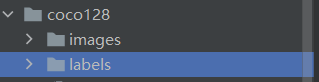

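The first form is the one used by coco128: under both the images and labels folders, create three subfolders, train, test, and val, to hold the images and labels of the training, test, and validation sets. Then copy the path section at the top of coco128.yaml and change it to your own paths, pointing train, test, and val to the corresponding folders, and change the number of classes and the class names to those of your dataset. The download section is of no use here, so don't copy it.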
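A minimal sketch of the folder form, assuming the data-yaml keys that coco128.yaml used at the time (`path`, `train`, `val`, `test`, `nc`, `names`). The helper name `folder_form_yaml` and the dataset root are hypothetical, and the text is built with the standard library only, so compare the keys against the coco128.yaml shipped with your YOLOv5 version.

```python
def folder_form_yaml(root, names):
    """Build the text of a coco128-style data yaml for the folder layout.

    Assumed layout (hypothetical root):
        root/images/{train,val,test}/*.jpg
        root/labels/{train,val,test}/*.txt
    train/val/test are written relative to `path`, as in coco128.yaml.
    """
    lines = [
        f"path: {root}  # dataset root dir",
        "train: images/train  # train images (relative to 'path')",
        "val: images/val  # val images (relative to 'path')",
        "test: images/test  # test images (optional)",
        "",
        f"nc: {len(names)}  # number of classes",
        f"names: {list(names)}  # class names",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(folder_form_yaml("../datasets/my_voc", ["cat", "dog"]))
```

Note that there is no separate entry for the labels: they are expected in the labels folder that mirrors images.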

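The other form writes the image paths of the training, test, and validation sets directly into txt files. Copy coco.yaml, change the paths to your own txt files, and change the number of classes and the class names to match your data.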
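A sketch of the list-file form under the same assumptions about the yaml keys. `write_image_list` and `txt_form_yaml` are hypothetical helpers: the first writes one absolute image path per line, the second builds coco.yaml-style text that points train/val/test at those files. Absolute paths are used so the result does not depend on how your YOLOv5 version resolves relative ones.

```python
import os


def write_image_list(image_paths, txt_path):
    """Write one absolute image path per line (the list-file form of a dataset)."""
    with open(txt_path, "w", encoding="utf-8") as f:
        for p in image_paths:
            f.write(os.path.abspath(p) + "\n")


def txt_form_yaml(train_txt, val_txt, test_txt, names):
    """Build coco.yaml-style text whose train/val/test entries point to list files."""
    return (
        f"train: {train_txt}  # txt file listing training images\n"
        f"val: {val_txt}  # txt file listing validation images\n"
        f"test: {test_txt}  # txt file listing test images (optional)\n"
        "\n"
        f"nc: {len(names)}  # number of classes\n"
        f"names: {list(names)}  # class names\n"
    )


if __name__ == "__main__":
    # Hypothetical absolute paths; replace them with your own txt files
    print(txt_form_yaml("/data/VOC2007/train.txt", "/data/VOC2007/val.txt",
                        "/data/VOC2007/test.txt", ["cat", "dog"]))
```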

2. The VOC dataset

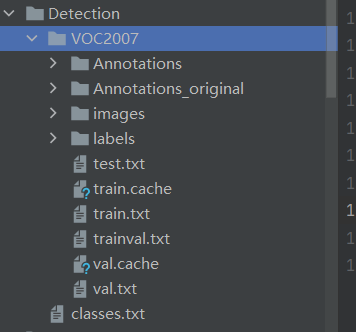

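The VOC dataset is laid out as shown in the figure. Annotations_original is not part of the dataset; I added it while adjusting the data. JPEGImages holds the images, Annotations holds the xml labels, and the Main folder under ImageSets holds the train/test/val split files.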
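Before converting, it can help to confirm that every xml file has a matching image and vice versa. The sketch below is a hypothetical helper (`check_voc_layout` is not part of the original script); it assumes images are .jpg files in JPEGImages, or in images once that folder has been renamed as described in the next section.

```python
import os


def check_voc_layout(voc_root):
    """Sanity-check a VOC2007-style folder before converting it.

    Returns (missing_dirs, xml_without_image, image_without_xml); the last two
    are sorted lists of file stems.
    """
    missing = []
    ann_dir = os.path.join(voc_root, "Annotations")
    if not os.path.isdir(ann_dir):
        missing.append("Annotations")
    img_dir = None
    for name in ("JPEGImages", "images"):
        if os.path.isdir(os.path.join(voc_root, name)):
            img_dir = os.path.join(voc_root, name)
            break
    if img_dir is None:
        missing.append("JPEGImages")

    def stems(folder, ext):
        # File names without extension, for files with the given (case-insensitive) extension
        if folder is None or not os.path.isdir(folder):
            return set()
        return {os.path.splitext(f)[0] for f in os.listdir(folder) if f.lower().endswith(ext)}

    xml_stems = stems(ann_dir, ".xml")
    jpg_stems = stems(img_dir, ".jpg")
    return missing, sorted(xml_stems - jpg_stems), sorted(jpg_stems - xml_stems)


if __name__ == "__main__":
    # Hypothetical path; point it at your own VOC2007 folder
    print(check_voc_layout("../Detection/VOC2007"))
```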

3. Converting the VOC dataset to the coco-style format YOLOv5 expects

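1. Rename the JPEGImages folder to images (by hand).

2. Create txt files listing the image paths of the training, test, and validation sets.

3. Convert the labels from xml files to YOLO format.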
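Why the rename in step 1 matters: as far as I know, YOLOv5 does not store label paths anywhere. Its dataloader derives each label path from the image path by replacing the last `/images/` directory with `/labels/` and the extension with `.txt` (`img2label_paths`, in `utils/dataloaders.py` or `utils/datasets.py` depending on the version). The sketch re-implements that rule for illustration only; `image_to_label_path` is a hypothetical name, not YOLOv5's API.

```python
import os


def image_to_label_path(img_path, sep=os.sep):
    """Map an image path to its label path, re-implementing YOLOv5's rule.

    The last '<sep>images<sep>' component becomes '<sep>labels<sep>', and the
    file extension becomes '.txt'.
    """
    sa, sb = f"{sep}images{sep}", f"{sep}labels{sep}"
    return sb.join(img_path.rsplit(sa, 1)).rsplit(".", 1)[0] + ".txt"


if __name__ == "__main__":
    print(image_to_label_path("/data/Detection/VOC2007/images/000005.jpg", sep="/"))
    # -> /data/Detection/VOC2007/labels/000005.txt
    print(image_to_label_path("/data/Detection/VOC2007/JPEGImages/000005.jpg", sep="/"))
    # -> /data/Detection/VOC2007/JPEGImages/000005.txt (no 'images' folder, so no labels are found)
```

The rename itself can also be done in Python with `os.rename(os.path.join(voc_root, "JPEGImages"), os.path.join(voc_root, "images"))`, where `voc_root` is your VOC2007 folder.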

Then just run the code below:

```python
import os
import random
import xml.etree.ElementTree as ET

# --------------------------------------------------------------------------------------------------------------------------------#
# annotation_mode selects what this script computes
# annotation_mode = 0: the whole label pipeline, i.e. the train/test/val image-path txt files plus converting the xml labels to YOLO format
# annotation_mode = 1: only the train/test/val image-path txt files
# annotation_mode = 2: only converting the xml labels to YOLO format
# --------------------------------------------------------------------------------------------------------------------------------#
annotation_mode = 0
# -------------------------------------------------------------------#
# Must be changed: the class names written into the labels.
# It only needs to match the classes used for training and prediction.
# If the generated label txt files contain no objects,
# it is usually because classes is not set correctly.
# Only used when annotation_mode is 0 or 2.
# -------------------------------------------------------------------#
classes_path = '../Detection/classes.txt'
# --------------------------------------------------------------------------------------------------------------------------------#
# trainval_percent is the fraction of all images that goes to (train + val); the rest is the test set
# train_percent is the fraction of (train + val) that goes to train; the rest is the validation set
# With the values below, (train + val):test = 8:2 and train:val = 8:2
# Only used when annotation_mode is 0 or 1.
# --------------------------------------------------------------------------------------------------------------------------------#
trainval_percent = 0.8
train_percent = 0.8
# -------------------------------------------------------#
# Folder that contains the VOC2007 dataset folder;
# change it to your own path
# -------------------------------------------------------#
VOCdevkit_path = '../Detection'


def get_classes(classes_path):
    # One class name per line; blank lines are skipped
    with open(classes_path, encoding='utf-8') as f:
        class_names = [c.strip() for c in f.readlines() if c.strip()]
    return class_names, len(class_names)


classes, _ = get_classes(classes_path)


def convert_label(path, image_id):
    def convert_box(size, box):
        # size = (image width, image height); box = (xmin, xmax, ymin, ymax) in VOC pixel coordinates.
        # Returns (x_center, y_center, width, height) normalized by the image size;
        # the "- 1" shifts VOC's 1-based pixel coordinates.
        dw, dh = 1. / size[0], 1. / size[1]
        x, y, w, h = (box[0] + box[1]) / 2.0 - 1, (box[2] + box[3]) / 2.0 - 1, box[1] - box[0], box[3] - box[2]
        return x * dw, y * dh, w * dw, h * dh

    in_path = os.path.join(path, f'VOC2007/Annotations/{image_id}.xml')
    out_path = os.path.join(path, f'VOC2007/labels/{image_id}.txt')
    root = ET.parse(in_path).getroot()
    size = root.find('size')
    w = int(size.find('width').text)
    h = int(size.find('height').text)
    with open(out_path, 'w', encoding='utf-8') as out_file:
        for obj in root.iter('object'):
            cls = obj.find('name').text
            difficult = obj.find('difficult')
            # Skip classes not in classes.txt and objects marked difficult (the <difficult> tag may be missing)
            if cls not in classes or (difficult is not None and int(difficult.text) == 1):
                continue
            xmlbox = obj.find('bndbox')
            bb = convert_box((w, h), [float(xmlbox.find(x).text) for x in ('xmin', 'xmax', 'ymin', 'ymax')])
            cls_id = classes.index(cls)  # class id
            # One line per object: class_id x_center y_center width height
            out_file.write(" ".join(str(a) for a in (cls_id, *bb)) + '\n')


if __name__ == "__main__":
    random.seed(42)
    if " " in os.path.abspath(VOCdevkit_path):
        raise ValueError("The dataset folder path and image names must not contain spaces, "
                         "otherwise model training will not work properly. Please rename them.")

    if annotation_mode == 0 or annotation_mode == 1:
        print("Generate train/test/val txt")
        xmlfilepath = os.path.join(VOCdevkit_path, 'VOC2007/Annotations')
        saveBasePath = os.path.join(VOCdevkit_path, 'VOC2007')
        total_xml = [xml for xml in os.listdir(xmlfilepath) if xml.endswith(".xml")]

        num = len(total_xml)
        indices = list(range(num))  # renamed from `list`, which shadowed the builtin and broke list()
        tv = int(num * trainval_percent)
        tr = int(tv * train_percent)
        trainval_list = random.sample(indices, tv)
        train = set(random.sample(trainval_list, tr))
        trainval = set(trainval_list)  # sets make the membership tests below fast
        random.shuffle(indices)

        print("train and val size", tv)
        print("train size", tr)
        root_abs = os.path.abspath(VOCdevkit_path)
        with open(os.path.join(saveBasePath, 'trainval.txt'), 'w', encoding='utf-8') as ftrainval, \
                open(os.path.join(saveBasePath, 'test.txt'), 'w', encoding='utf-8') as ftest, \
                open(os.path.join(saveBasePath, 'train.txt'), 'w', encoding='utf-8') as ftrain, \
                open(os.path.join(saveBasePath, 'val.txt'), 'w', encoding='utf-8') as fval:
            for i in indices:
                name = total_xml[i][:-4]
                # Absolute image path; assumes each image is a .jpg with the same name as its xml file
                line = '%s/VOC2007/images/%s.jpg\n' % (root_abs, name)
                if i in trainval:
                    ftrainval.write(line)
                    if i in train:
                        ftrain.write(line)
                    else:
                        fval.write(line)
                else:
                    ftest.write(line)
        print("Generate train/test/val.txt done.")

    if annotation_mode == 0 or annotation_mode == 2:
        print("Generate YOLO labels")
        os.makedirs(os.path.join(VOCdevkit_path, 'VOC2007/labels/'), exist_ok=True)
        image_ids = os.listdir(os.path.join(VOCdevkit_path, 'VOC2007/Annotations'))
        for image_id in image_ids:
            if image_id.endswith('.xml'):  # ignore any non-xml files in Annotations
                convert_label(VOCdevkit_path, image_id[:-4])
        print("Generate YOLO labels done")
```
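To check the coordinate conversion by hand, here is `convert_box` from the script as a standalone function, applied to one box. The image size and box values are invented for illustration. VOC stores pixel corners; the script passes them in the order (xmin, xmax, ymin, ymax) and gets back the normalized center and size that a YOLO label line uses.

```python
def convert_box(size, box):
    # size = (width, height); box = (xmin, xmax, ymin, ymax) in VOC pixel coordinates
    dw, dh = 1. / size[0], 1. / size[1]
    x, y, w, h = (box[0] + box[1]) / 2.0 - 1, (box[2] + box[3]) / 2.0 - 1, box[1] - box[0], box[3] - box[2]
    return x * dw, y * dh, w * dw, h * dh


# Hypothetical 500x400 image with a box from (101, 51) to (301, 251)
x, y, w, h = convert_box((500, 400), (101.0, 301.0, 51.0, 251.0))
print(round(x, 4), round(y, 4), round(w, 4), round(h, 4))  # -> 0.4 0.375 0.4 0.5
```

Worked through: the center is ((101 + 301) / 2 - 1, (51 + 251) / 2 - 1) = (200, 150) and the size is (200, 200) pixels; dividing by the image width 500 and height 400 gives 0.4, 0.375, 0.4 and 0.5.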

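After running it, the VOC2007 folder contains trainval.txt, train.txt, val.txt and test.txt (one absolute image path per line) and a labels folder with one YOLO-format txt file per xml annotation.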
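The split sizes come from the `int()` truncation in the script. This standalone sketch repeats the same arithmetic with the script's values of 0.8 and 0.8; `split_sizes` is a hypothetical helper, not part of the original code.

```python
def split_sizes(num, trainval_percent=0.8, train_percent=0.8):
    """Return (train, val, test) image counts computed the same way as the script."""
    tv = int(num * trainval_percent)  # train + val
    tr = int(tv * train_percent)      # train
    return tr, tv - tr, num - tv


print(split_sizes(100))  # -> (64, 16, 20)
```

Because both steps truncate, any remainder always ends up in val and test rather than train.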

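Finally, as described in part 1, copy a config file and edit it. Since the script produces txt files of image paths, this is the second form: copy coco.yaml and point train, val, and test to the generated txt files.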

4. Modifying the training parameters

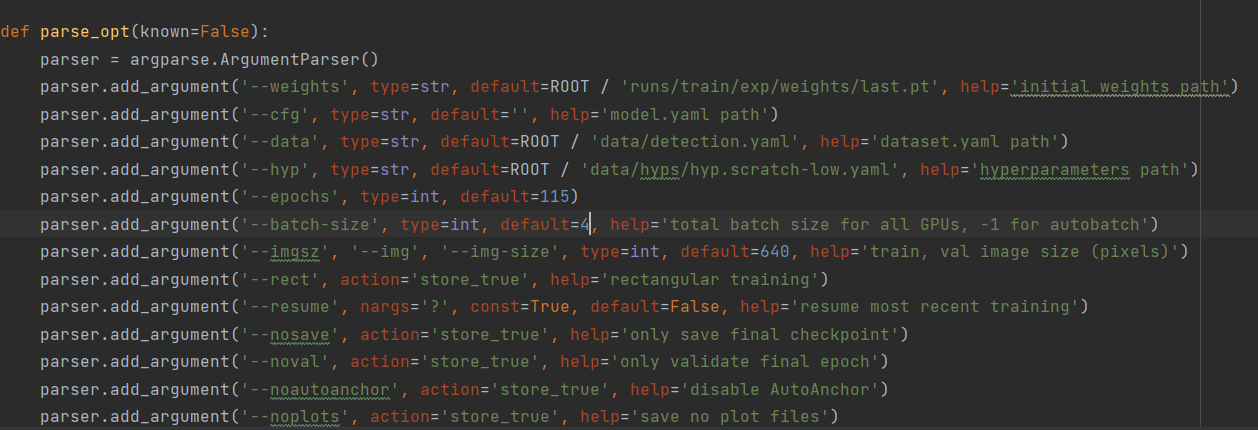

Point `data` to your own yaml file, and adjust the training weights, epochs, and other settings to suit your situation.
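The same settings can be passed on the command line instead of editing the defaults in train.py. This sketch only assembles the argument list; `build_train_cmd`, the yaml path, and the default values are hypothetical, and the flags (`--data`, `--weights`, `--epochs`, `--batch-size`, `--img`) are those I know from YOLOv5's train.py argparse, so confirm them with `python train.py --help` for your version.

```python
import sys


def build_train_cmd(data_yaml, weights="yolov5s.pt", epochs=100, batch_size=16, imgsz=640):
    """Assemble a YOLOv5 train.py command line (to be run from the yolov5 repo root)."""
    return [
        sys.executable, "train.py",
        "--data", data_yaml,          # your own dataset yaml
        "--weights", weights,         # pretrained weights to start from
        "--epochs", str(epochs),
        "--batch-size", str(batch_size),
        "--img", str(imgsz),          # training image size in pixels
    ]


if __name__ == "__main__":
    cmd = build_train_cmd("data/my_voc.yaml", epochs=50)
    print(" ".join(cmd))
    # To actually start training, run this inside the yolov5 folder:
    # subprocess.run(cmd, check=True)
```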


Original article: https://blog.csdn.net/qq_52053775/article/details/125874044