
Keras Deep Learning in Practice: Gender Classification with the VGG19 Model

0. Preface

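In "Transfer Learning", we saw that transfer learning makes it possible to train a well-performing model from only a small number of samples. We also used a pretrained VGG16 model for gender classification to deepen our understanding of how transfer learning works.

1. VGG19 Architecture Overview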

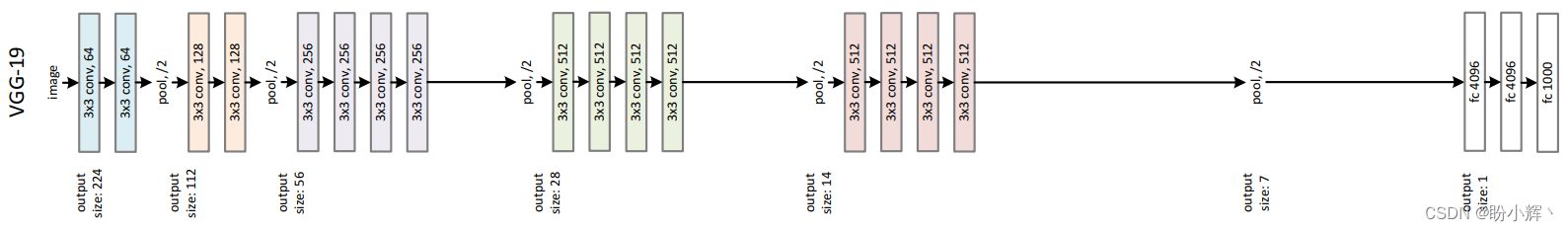

In this article, we introduce another commonly used network architecture, VGG19, and use a pretrained VGG19 model for gender classification. VGG19 is a deeper version of VGG16: it adds three convolutional layers, while the number of pooling layers stays at five. The architecture of the VGG19 model is as follows:

```
Model: "vgg19"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         [(None, 256, 256, 3)]     0
block1_conv1 (Conv2D)        (None, 256, 256, 64)      1792
block1_conv2 (Conv2D)        (None, 256, 256, 64)      36928
block1_pool (MaxPooling2D)   (None, 128, 128, 64)      0
block2_conv1 (Conv2D)        (None, 128, 128, 128)     73856
block2_conv2 (Conv2D)        (None, 128, 128, 128)     147584
block2_pool (MaxPooling2D)   (None, 64, 64, 128)       0
block3_conv1 (Conv2D)        (None, 64, 64, 256)       295168
block3_conv2 (Conv2D)        (None, 64, 64, 256)       590080
block3_conv3 (Conv2D)        (None, 64, 64, 256)       590080
block3_conv4 (Conv2D)        (None, 64, 64, 256)       590080
block3_pool (MaxPooling2D)   (None, 32, 32, 256)       0
block4_conv1 (Conv2D)        (None, 32, 32, 512)       1180160
block4_conv2 (Conv2D)        (None, 32, 32, 512)       2359808
block4_conv3 (Conv2D)        (None, 32, 32, 512)       2359808
block4_conv4 (Conv2D)        (None, 32, 32, 512)       2359808
block4_pool (MaxPooling2D)   (None, 16, 16, 512)       0
block5_conv1 (Conv2D)        (None, 16, 16, 512)       2359808
block5_conv2 (Conv2D)        (None, 16, 16, 512)       2359808
block5_conv3 (Conv2D)        (None, 16, 16, 512)       2359808
block5_conv4 (Conv2D)        (None, 16, 16, 512)       2359808
block5_pool (MaxPooling2D)   (None, 8, 8, 512)         0
=================================================================
Total params: 20,024,384
Trainable params: 20,024,384
Non-trainable params: 0
_________________________________________________________________
```


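As shown, this architecture has more layers and more parameters than VGG16. Note that the 16 and 19 in VGG16 and VGG19 denote the number of weight layers in each network: the convolutional layers plus the three fully connected layers at the top, which do not appear in the summary above because the top of the network is excluded.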
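As a sanity check, the layer count, parameter total, and final output shape in the summary can be reproduced by hand. The sketch below assumes only what the summary implies: 3 x 3 convolutions with a bias term that keep the spatial size, and one 2 x 2 max-pooling layer closing each block. The helper `conv_params` and the `blocks` list are illustrative names, not part of Keras.

```python
# Reproduce the VGG19 (no top) summary by hand.
# Assumptions read off the summary: 3x3 convolutions with bias that keep
# the spatial size, and one 2x2 max-pool (stride 2) closing each block.

def conv_params(in_channels, out_channels, kernel=3):
    """Weights plus biases of one Conv2D layer."""
    return kernel * kernel * in_channels * out_channels + out_channels

# (number of conv layers, output channels) for blocks 1..5
blocks = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]

height, width, channels = 256, 256, 3  # input image
total_params = 0
conv_layers = 0
for n_convs, out_channels in blocks:
    for _ in range(n_convs):
        total_params += conv_params(channels, out_channels)
        channels = out_channels
        conv_layers += 1
    height, width = height // 2, width // 2  # pooling layer of the block

print(conv_layers)                # 16
print(total_params)               # 20024384
print((height, width, channels))  # (8, 8, 512)
```

The 16 convolutions counted here, together with the three fully connected layers of the full network, give the 19 in the model's name.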

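Passing each image through the VGG19 network yields an 8 x 8 x 512 output, which becomes the input to the fine-tuning model. From there, creating the input and output datasets and then building, compiling, and fitting the model follow the same procedure as gender classification with the pretrained VGG16 model.

2. Gender Classification with a Pretrained VGG19 Model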

In this section, we use transfer learning with a pretrained VGG19 model to perform gender classification.

2.1 Building the Input and Output Data

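First, prepare the input and output data. We reuse the dataset and the data-loading code from "Gender Classification with Convolutional Neural Networks":

```python
from keras.applications import VGG19
from keras.applications.vgg19 import preprocess_input
from glob import glob
from skimage import io
import numpy as np

# Pretrained VGG19 without the fully connected top, used as a feature extractor
model = VGG19(include_top=False, weights='imagenet', input_shape=(256, 256, 3))

x = []
y = []
# Label 0: images in a_resized (male)
for i in glob('man_woman/a_resized/*.jpg')[:800]:
    try:
        image = io.imread(i)
        x.append(image)
        y.append(0)
    except Exception:
        # Skip files that cannot be read
        continue
# Label 1: images in b_resized (female)
for i in glob('man_woman/b_resized/*.jpg')[:800]:
    try:
        image = io.imread(i)
        x.append(image)
        y.append(1)
    except Exception:
        continue

# Extract VGG19 features for every image, one image at a time
x_vgg19 = []
for i in range(len(x)):
    img = x[i]
    img = preprocess_input(img.reshape((1, 256, 256, 3)))
    img_feature = model.predict(img)
    x_vgg19.append(img_feature)
```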
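The `preprocess_input` function from `keras.applications.vgg19` prepares images the way the original VGG weights expect: it reorders the channels from RGB to BGR and subtracts the per-channel ImageNet mean, without any scaling. The NumPy sketch below mimics that behavior for illustration only. `vgg_preprocess_sketch` is a hypothetical name and the all-white image is a dummy input; real code should keep using the Keras function.

```python
import numpy as np

# Per-channel ImageNet means in BGR order, as used by the Caffe-style
# preprocessing of the VGG models.
IMAGENET_MEAN_BGR = np.array([103.939, 116.779, 123.68], dtype=np.float32)

def vgg_preprocess_sketch(batch_rgb):
    """Mimic VGG preprocessing for a batch shaped (N, H, W, 3) in RGB."""
    batch = np.asarray(batch_rgb, dtype=np.float32)
    batch_bgr = batch[..., ::-1]          # RGB -> BGR
    return batch_bgr - IMAGENET_MEAN_BGR  # zero-center, no scaling

# Dummy all-white image standing in for a real photo
dummy = np.full((1, 256, 256, 3), 255, dtype=np.uint8)
out = vgg_preprocess_sketch(dummy)
print(out.shape)  # (1, 256, 256, 3)
```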


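Convert the inputs and outputs to arrays, and create the training and test datasets:

```python
from sklearn.model_selection import train_test_split

x_vgg19 = np.array(x_vgg19)
# Drop the per-image batch axis: (N, 1, 8, 8, 512) -> (N, 8, 8, 512)
x_vgg19 = x_vgg19.reshape(x_vgg19.shape[0], x_vgg19.shape[2], x_vgg19.shape[3], x_vgg19.shape[4])
y = np.array(y)
# A fixed random_state makes the split reproducible
x_train, x_test, y_train, y_test = train_test_split(x_vgg19, y, test_size=0.2, random_state=42)
```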
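Because `model.predict` is called on one image at a time, each stored feature has shape (1, 8, 8, 512), so stacking them yields a 5-D array with a redundant axis of length 1. The sketch below uses random dummy features (not real VGG19 outputs) to show that the `reshape` call simply drops that axis, which `np.squeeze` or `np.concatenate` would also do.

```python
import numpy as np

# Random stand-ins for per-image VGG19 features, each shaped (1, 8, 8, 512)
rng = np.random.default_rng(0)
features = [rng.random((1, 8, 8, 512), dtype=np.float32) for _ in range(4)]

stacked = np.array(features)
print(stacked.shape)  # (4, 1, 8, 8, 512)

# Same reshape as in the article: remove the axis of length 1
reshaped = stacked.reshape(stacked.shape[0], stacked.shape[2],
                           stacked.shape[3], stacked.shape[4])
print(reshaped.shape)  # (4, 8, 8, 512)

# Equivalent alternatives
squeezed = np.squeeze(stacked, axis=1)
concatenated = np.concatenate(features, axis=0)
```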


2.2 Building and Training the Model

Build the fine-tuning model:

```python
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Flatten, Dropout, Dense

model_fine_tuning = Sequential()
# The input shape matches the VGG19 feature maps: (8, 8, 512)
model_fine_tuning.add(Conv2D(512, kernel_size=(3, 3), activation='relu',
                             input_shape=(x_train.shape[1], x_train.shape[2], x_train.shape[3])))
model_fine_tuning.add(MaxPooling2D(pool_size=(2, 2)))
model_fine_tuning.add(Flatten())
model_fine_tuning.add(Dense(1024, activation='relu'))
model_fine_tuning.add(Dropout(0.6))
# Single sigmoid unit: predicted probability of label 1
model_fine_tuning.add(Dense(1, activation='sigmoid'))
model_fine_tuning.summary()
```


The summary of the model architecture is printed as follows:

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 6, 6, 512)         2359808
max_pooling2d (MaxPooling2D) (None, 3, 3, 512)         0
flatten (Flatten)            (None, 4608)              0
dense (Dense)                (None, 1024)              4719616
dropout (Dropout)            (None, 1024)              0
dense_1 (Dense)              (None, 1)                 1025
=================================================================
Total params: 7,080,449
Trainable params: 7,080,449
Non-trainable params: 0
_________________________________________________________________
```
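The shapes and parameter counts in this summary can also be checked by hand. The sketch assumes the Keras defaults that the model code relies on: a 3 x 3 convolution with 'valid' padding (each spatial dimension shrinks by 2) and 2 x 2 pooling with stride 2. All variable names are illustrative.

```python
# Input to the fine-tuning model: VGG19 features of shape (8, 8, 512)
h, w, c = 8, 8, 512

# Conv2D(512, 3x3) with 'valid' padding: size shrinks by kernel - 1
conv_out = (h - 2, w - 2, 512)
conv_params = 3 * 3 * c * 512 + 512

# MaxPooling2D(2x2): halves height and width
pool_out = (conv_out[0] // 2, conv_out[1] // 2, 512)

# Flatten, then Dense(1024) and Dense(1), each with a bias per unit
flat = pool_out[0] * pool_out[1] * pool_out[2]
dense_params = flat * 1024 + 1024
output_params = 1024 * 1 + 1

total = conv_params + dense_params + output_params
print(conv_out, pool_out, flat)  # (6, 6, 512) (3, 3, 512) 4608
print(total)                     # 7080449
```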


Next, compile and fit the model:

```python
model_fine_tuning.compile(loss='binary_crossentropy', optimizer='adam', metrics=['acc'])
history = model_fine_tuning.fit(x_train, y_train,
                                batch_size=32,
                                epochs=20,
                                verbose=1,
                                validation_data=(x_test, y_test))
```


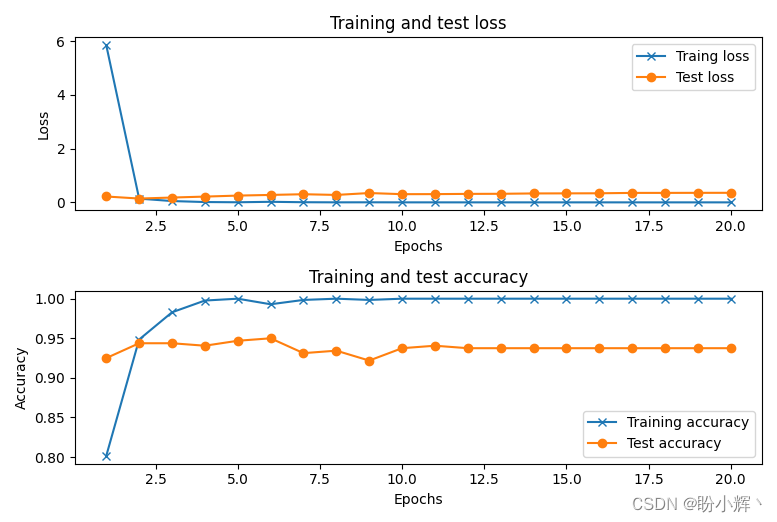
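For reference, the `binary_crossentropy` loss and the `acc` metric reduce to simple formulas when the output is a single sigmoid probability. The NumPy sketch below is an illustration, not the Keras implementation. The function names, the clipping constant `eps`, and the example labels and probabilities are all made up for the demonstration.

```python
import numpy as np

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all samples."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip to avoid log(0)
    y_prob = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1 - eps)
    losses = -(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob))
    return float(np.mean(losses))

def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    y_pred = (np.asarray(y_prob) > threshold).astype(int)
    return float(np.mean(y_pred == np.asarray(y_true)))

# Made-up labels and predicted probabilities
y_true = [0, 0, 1, 1]
y_prob = [0.1, 0.6, 0.8, 0.9]
print(binary_crossentropy(y_true, y_prob))
print(binary_accuracy(y_true, y_prob))  # 0.75
```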

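Finally, we plot how the loss and accuracy on the training and test datasets change during training. With the VGG19 architecture, the model reaches about 95% accuracy on the test dataset, similar to the performance obtained with VGG16: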

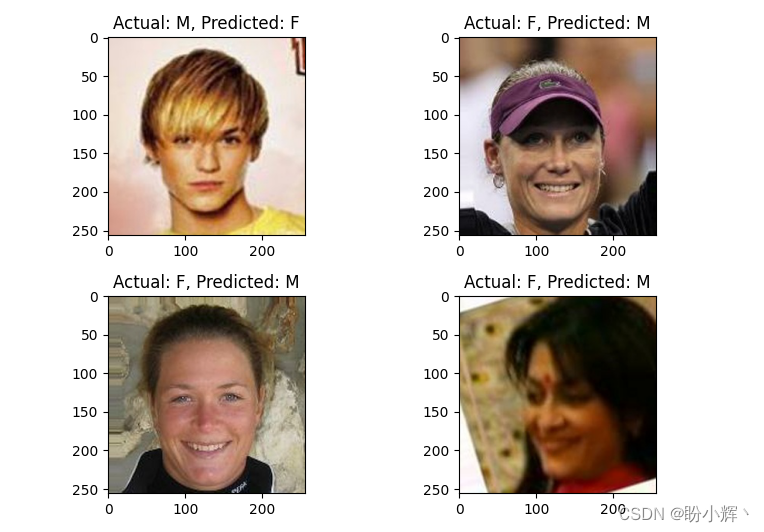
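The article does not show its plotting code. A minimal sketch follows, assuming the `history` object returned by `fit`: because the model was compiled with `metrics=['acc']`, `history.history` is expected to hold the keys `loss`, `val_loss`, `acc`, and `val_acc`. The helper name `plot_history` is hypothetical, and the numbers in the usage lines are placeholders, not real training results.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen backend; drop this line for interactive use
import matplotlib.pyplot as plt

def plot_history(hist):
    """Plot loss and accuracy curves from a Keras-style history dict."""
    epochs = range(1, len(hist["loss"]) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))

    ax_loss.plot(epochs, hist["loss"], "bo", label="Training loss")
    ax_loss.plot(epochs, hist["val_loss"], "r", label="Test loss")
    ax_loss.set_title("Training and test loss")
    ax_loss.set_xlabel("Epochs")
    ax_loss.set_ylabel("Loss")
    ax_loss.legend()

    ax_acc.plot(epochs, hist["acc"], "bo", label="Training accuracy")
    ax_acc.plot(epochs, hist["val_acc"], "r", label="Test accuracy")
    ax_acc.set_title("Training and test accuracy")
    ax_acc.set_xlabel("Epochs")
    ax_acc.set_ylabel("Accuracy")
    ax_acc.legend()
    return fig

# Placeholder numbers only, to show the expected dict layout
placeholder = {
    "loss": [0.9, 0.5, 0.3],
    "val_loss": [0.8, 0.6, 0.5],
    "acc": [0.6, 0.8, 0.9],
    "val_acc": [0.65, 0.75, 0.8],
}
fig = plot_history(placeholder)
```

With the real training run, the call would be `plot_history(history.history)`, followed by `plt.show()` once the off-screen backend line is removed.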

2.3 Examples of Misclassified Images

Some examples of misclassified images are shown below:

```python
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split

x = np.array(x)
# Re-split the raw images. This split matches the one used for training
# only if both calls use the same random_state.
x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)

# Extract VGG19 features for the test images
x_test_vgg19 = []
for i in range(len(x_test)):
    img = x_test[i]
    img = preprocess_input(img.reshape((1, 256, 256, 3)))
    img_feature = model.predict(img)
    x_test_vgg19.append(img_feature)
x_test_vgg19 = np.array(x_test_vgg19)
x_test_vgg19 = x_test_vgg19.reshape(x_test_vgg19.shape[0], x_test_vgg19.shape[2], x_test_vgg19.shape[3], x_test_vgg19.shape[4])

y_pred = model_fine_tuning.predict(x_test_vgg19)
# Sort test indices by absolute prediction error, ascending
wrong = np.argsort(np.abs(y_pred.flatten() - y_test))
print(wrong)

y_test_char = np.where(y_test == 0, 'M', 'F')
y_pred_char = np.where(y_pred > 0.5, 'F', 'M')

# Show the four test images with the largest prediction error
for k in range(1, 5):
    idx = wrong[-k]
    plt.subplot(2, 2, k)
    plt.imshow(x_test[idx])
    plt.title('Actual: ' + str(y_test_char[idx]) + ', ' + 'Predicted: ' + str(y_pred_char[idx][0]))
plt.show()
```
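The ranking step deserves a closer look. `np.argsort` orders the test indices by the absolute gap between predicted probability and true label, in ascending order, so the last entries of `wrong` are the most confident mistakes. A toy sketch with made-up probabilities (not outputs of the model):

```python
import numpy as np

# Made-up labels and sigmoid outputs for five hypothetical test images
y_test = np.array([0, 1, 1, 0, 1])
y_pred = np.array([[0.05], [0.40], [0.97], [0.90], [0.20]])

errors = np.abs(y_pred.flatten() - y_test)
wrong = np.argsort(errors)        # ascending: smallest error first
misclassified = errors > 0.5      # a gap above 0.5 flips the predicted class

print(wrong)          # [2 0 1 4 3]
print(misclassified)  # [False  True False  True  True]
```

Here `wrong[-1]` is index 3, a label-0 image predicted with probability 0.90. Note that taking the last four indices would also include index 0, which is classified correctly, so the top-k images are all genuine errors only when at least k test samples are misclassified.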


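From the figure, we can see that VGG19 behaves much like VGG16: apart from misclassifications caused by the person occupying only a small part of the image, it tends to rely on hair to decide whether the person is male or female.

Related Links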

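Keras Deep Learning in Practice (7): Convolutional Neural Networks Explained and Implemented

Keras Deep Learning in Practice (9): Limitations of Convolutional Neural Networks

Keras Deep Learning in Practice (10): Transfer Learning

Keras Deep Learning in Practice: Gender Classification with Convolutional Neural Networks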


Original article: https://blog.csdn.net/LOVEmy134611/article/details/125509499