
N-pair Loss

Contents

NIPS 2016: Improved Deep Metric Learning with Multi-class N-pair Loss Objective

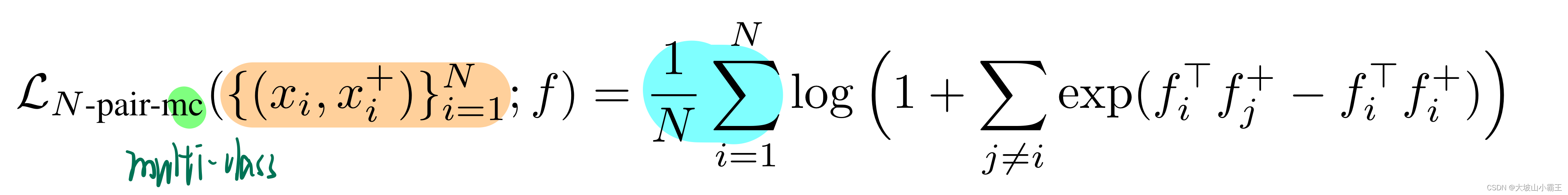

multi-class N-pair loss (N-pair-mc)

one-vs-one N-pair loss (N-pair-ovo)

L2 norm regularization of embedding vectors

NIPS 2016: Improved Deep Metric Learning with Multi-class N-pair Loss Objective

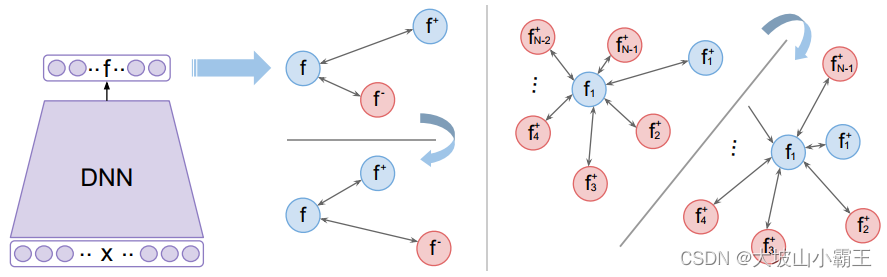

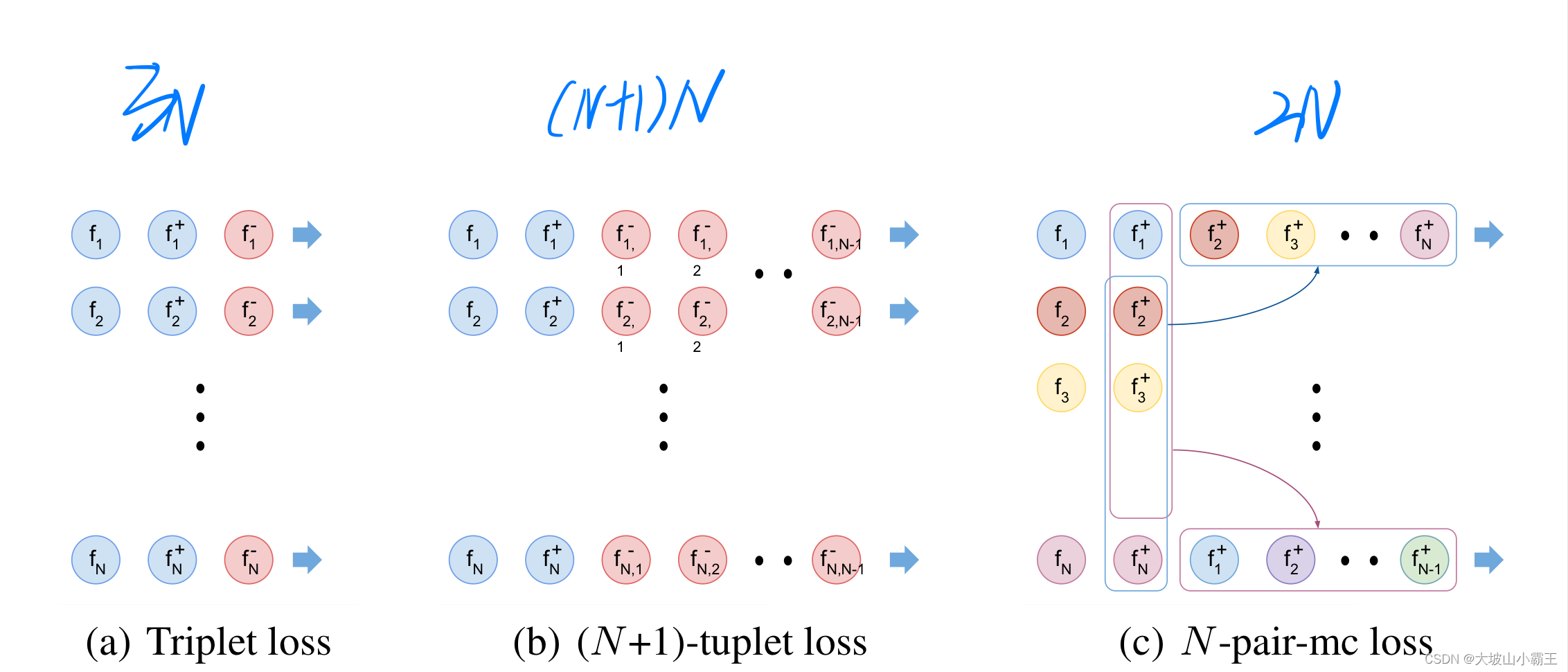

Multiple Negative Examples

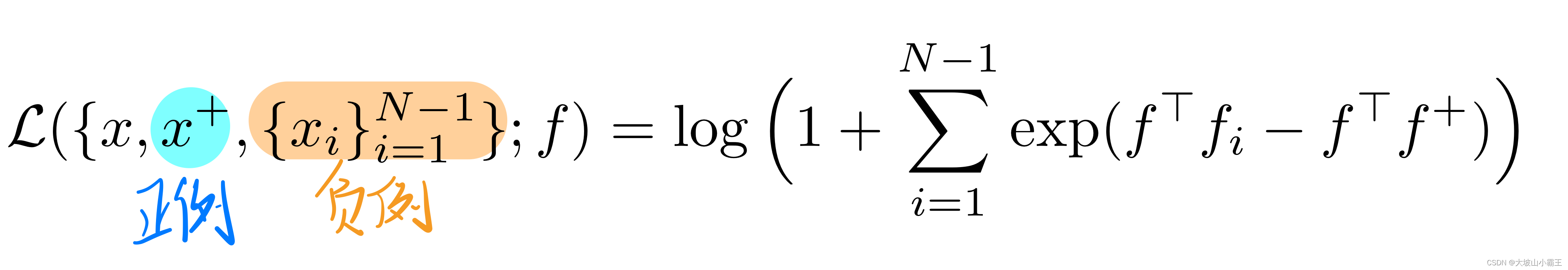

(N+1)-tuplet loss

Pushes away negative examples from multiple classes at the same time.

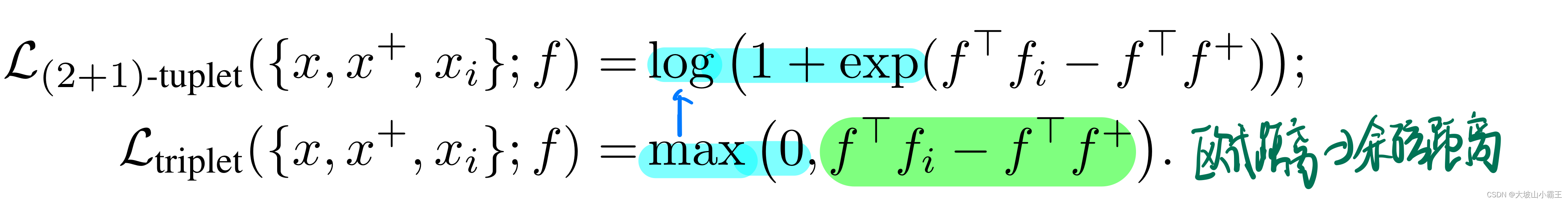

With N = 2, the loss resembles the triplet loss.
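For reference, the two cases can be written out. This is a sketch that assumes the (N+1)-tuplet loss has the log-sum-exp form computed by the code in this post; the notation ($f$ for the anchor embedding, $f^+$ for the positive, $f_i$ for the negatives) is chosen here for illustration.

```latex
% (N+1)-tuplet loss: one positive, N-1 negatives
\mathcal{L}\left(\{x, x^+, \{x_i\}_{i=1}^{N-1}\}; f\right)
  = \log\left(1 + \sum_{i=1}^{N-1} \exp\left(f^\top f_i - f^\top f^+\right)\right)

% N = 2: a single negative remains
\mathcal{L}_{(2+1)\text{-tuplet}}
  = \log\left(1 + \exp\left(f^\top f_1 - f^\top f^+\right)\right)
```

With a single negative, the sum collapses to one term, which is a smooth version of a hinge on the similarity gap $f^\top f_1 - f^\top f^+$.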

Regarding Equations (4) and (5), the SoftPlus activation function:

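It is a smooth approximation of the ReLU function.

Its output is never exactly 0, which avoids the dead ReLU problem, but it has no negative range, so it cannot speed up learning.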
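A minimal sketch comparing the two activations, in pure Python for clarity. The function names `relu` and `softplus` are chosen here for illustration; SoftPlus is taken to be log(1 + exp(x)), written in a numerically stable form.

```python
import math


def relu(x: float) -> float:
    """Hinge: exactly 0 for every non-positive input."""
    return max(0.0, x)


def softplus(x: float) -> float:
    """log(1 + exp(x)), rewritten so that exp never overflows."""
    return max(0.0, x) + math.log1p(math.exp(-abs(x)))


for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  softplus={softplus(x):.4f}")
```

SoftPlus stays strictly positive and sits above ReLU everywhere, and the gap between them shrinks as |x| grows.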

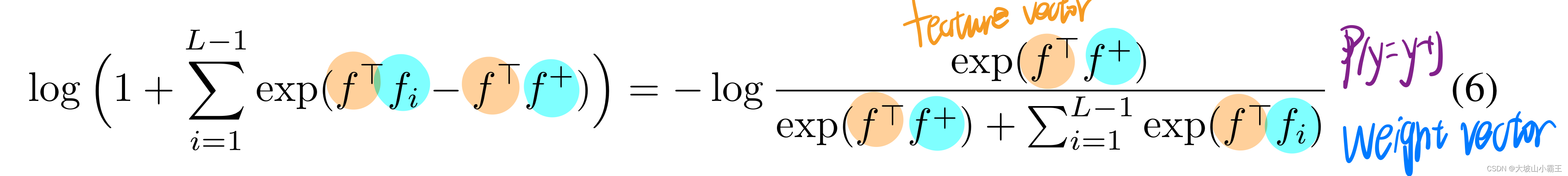

With N = L, the loss resembles the softmax loss.
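The link to the softmax loss can be checked numerically. The sketch below assumes the (N+1)-tuplet loss is log(1 + Σ exp(fᵀfᵢ − fᵀf⁺)) and compares it with a softmax cross-entropy whose logits are the inner products of the anchor with the positive and with each negative. All names and the toy vectors are made up for illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def tuplet_loss(f, f_pos, f_negs):
    """log(1 + sum_i exp(f.f_i - f.f_pos)) for one anchor."""
    s_pos = dot(f, f_pos)
    return math.log1p(sum(math.exp(dot(f, n) - s_pos) for n in f_negs))


def softmax_cross_entropy(logits, target):
    """-log softmax(logits)[target], with max-subtraction for stability."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target]


# Toy anchor, positive, and three negatives (arbitrary values).
f = [0.5, -1.0, 2.0]
f_pos = [0.4, -0.8, 1.5]
f_negs = [[1.0, 0.2, -0.3], [-0.7, 0.9, 0.1], [0.3, 0.3, 0.3]]

# Index 0 is the "correct class" (the positive); the rest are negatives.
logits = [dot(f, f_pos)] + [dot(f, n) for n in f_negs]
loss_tuplet = tuplet_loss(f, f_pos, f_negs)
loss_softmax = softmax_cross_entropy(logits, target=0)
print(loss_tuplet, loss_softmax)
```

The two values agree up to floating-point error, because dividing the softmax numerator and denominator by exp(fᵀf⁺) gives exactly the tuplet form.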

multi-class N-pair loss (N-pair-mc)

one-vs-one N-pair loss (N-pair-ovo)

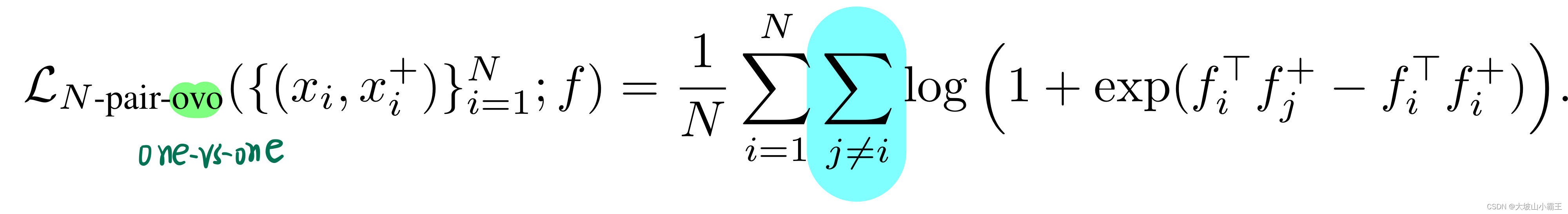

Hard negative class mining

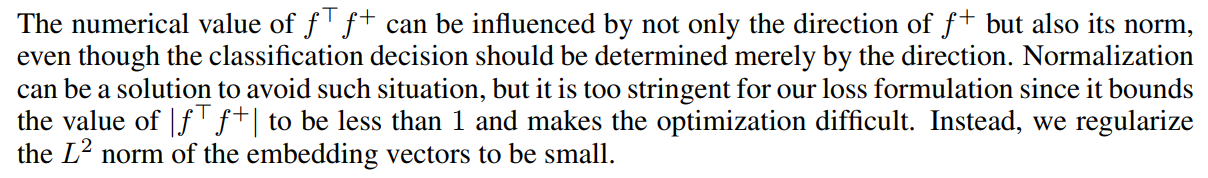

L2 norm regularization of embedding vectors

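To remove the influence of the embedding norm, one option is normalization.

However, normalization strictly limits |f^T f^+| < 1, which makes optimization difficult. The alternative is to regularize the L2 norm of the embedding vectors so that it stays small.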
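One way to see why the bound on the inner product hurts, offered as an illustration rather than the paper's own argument: with unit-norm embeddings the similarity gap fᵀfᵢ − fᵀf⁺ can never drop below −2, so the loss for one anchor cannot go below log(1 + (N−1)·e⁻²). The sketch assumes the log-sum-exp tuplet loss; the helper names and vectors are made up.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def tuplet_loss(f, f_pos, f_negs):
    """log(1 + sum_i exp(f.f_i - f.f_pos)) for one anchor."""
    s_pos = dot(f, f_pos)
    return math.log1p(sum(math.exp(dot(f, n) - s_pos) for n in f_negs))


num_negs = 4  # N - 1 negatives

# Best case under normalization: positive equals the anchor (similarity +1),
# every negative points the opposite way (similarity -1).
f = normalize([3.0, 4.0])
opposite = [-x for x in f]
best_normalized = tuplet_loss(f, f, [opposite] * num_negs)
lower_bound = math.log1p(num_negs * math.exp(-2.0))

# Same directions without the unit-norm constraint: a larger norm widens the gap.
scale = 5.0
f_big = [scale * x for x in f]
opposite_big = [-x for x in f_big]
unnormalized = tuplet_loss(f_big, f_big, [opposite_big] * num_negs)

print(best_normalized, lower_bound, unnormalized)
```

Even a perfectly separated batch keeps a non-trivial loss under normalization, while unconstrained norms can push the loss to zero simply by growing, which is what the L2 regularizer is meant to keep in check.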

    import torch

    # anchors / positives / negatives hold indices into `batch` (the embeddings).
    loss = 0
    for anchor, positive, negative_set in zip(anchors, positives, negatives):
        a_embs, p_embs, n_embs = batch[anchor:anchor+1], batch[positive:positive+1], batch[negative_set]
        # f^T (f_i - f^+) for every negative i of this anchor
        inner_sum = a_embs[:,None,:].bmm((n_embs - p_embs[:,None,:]).permute(0,2,1))
        inner_sum = inner_sum.view(inner_sum.shape[0], inner_sum.shape[-1])
        # (N+1)-tuplet loss: log(1 + sum_i exp(f^T f_i - f^T f^+))
        loss = loss + torch.mean(torch.log(torch.sum(torch.exp(inner_sum), dim=1) + 1))/len(anchors)
        # L2 regularization of the embedding norms
        loss = loss + self.l2_weight*torch.mean(torch.norm(batch, p=2, dim=1))/len(anchors)

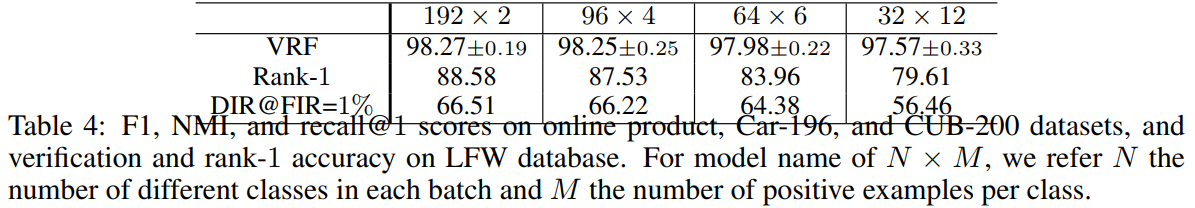

Experimental Results

In all experiments, the paper adapts the smooth upper bound of the triplet loss in Equation (4), instead of the large-margin formulation, to be consistent with the N-pair-mc losses.

The multi-class N-pair loss performs better: the one-vs-one N-pair loss is decoupled, so the loss for each negative example is independent of the others.
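The difference between the coupled and decoupled forms can be sketched as follows. This assumes N pairs, one per class, where the positives of the other pairs serve as negatives for each anchor; `n_pair_losses` and the toy embeddings are hypothetical names and values used only for illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(0.0, x) + math.log1p(math.exp(-abs(x)))


def n_pair_losses(anchors, positives):
    """Return (N-pair-mc, N-pair-ovo), averaged over the N anchors."""
    n = len(anchors)
    mc = 0.0
    ovo = 0.0
    for i in range(n):
        s_pos = dot(anchors[i], positives[i])
        # Similarity gaps to the positives of all other pairs (the negatives).
        gaps = [dot(anchors[i], positives[j]) - s_pos for j in range(n) if j != i]
        # Coupled: a single log over the sum of all negatives.
        mc += math.log1p(sum(math.exp(g) for g in gaps))
        # Decoupled: an independent softplus term per negative.
        ovo += sum(softplus(g) for g in gaps)
    return mc / n, ovo / n


anchors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
positives = [[0.9, 0.1], [0.1, 0.8], [-0.8, -0.2]]

mc_loss, ovo_loss = n_pair_losses(anchors, positives)
mc_two, ovo_two = n_pair_losses(anchors[:2], positives[:2])
print(mc_loss, ovo_loss)
```

With only two pairs there is a single negative per anchor and the two losses coincide; with more pairs the one-vs-one sum treats each negative separately, whereas the multi-class form lets the negatives compete inside one log.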

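With a fixed batch size, sampling only one pair per class means the batch covers more classes. The more negative classes involved in training, the better.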


- Original article: https://blog.csdn.net/weixin_44742887/article/details/125503711