
K8S cluster setup (multiple masters, single node)


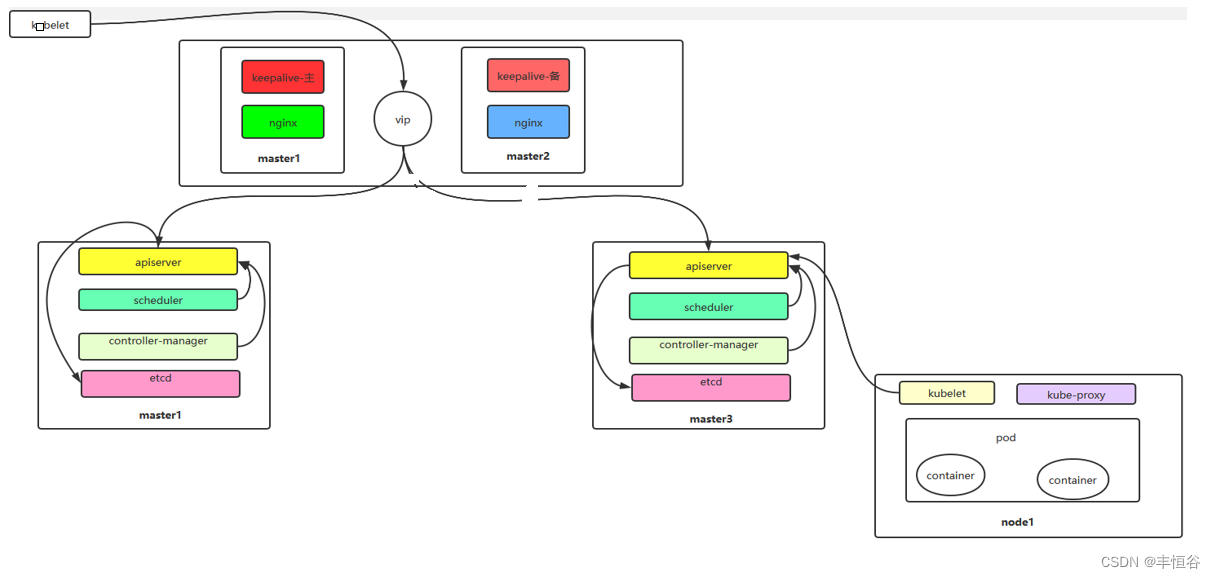

1 Introduction

Architecture diagram:

K8s environment plan:

podSubnet (Pod CIDR): 10.244.0.0/16

serviceSubnet (Service CIDR): 10.10.0.0/16

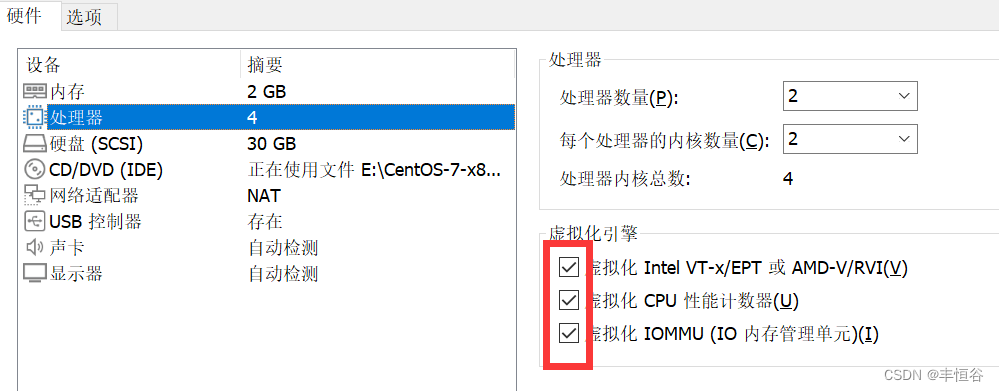

Lab environment plan:

Operating system: CentOS 7.9

Resources: 2 GB RAM / 4 vCPU / 40 GB disk

Network: NAT mode

Enable virtualization in the VM settings:

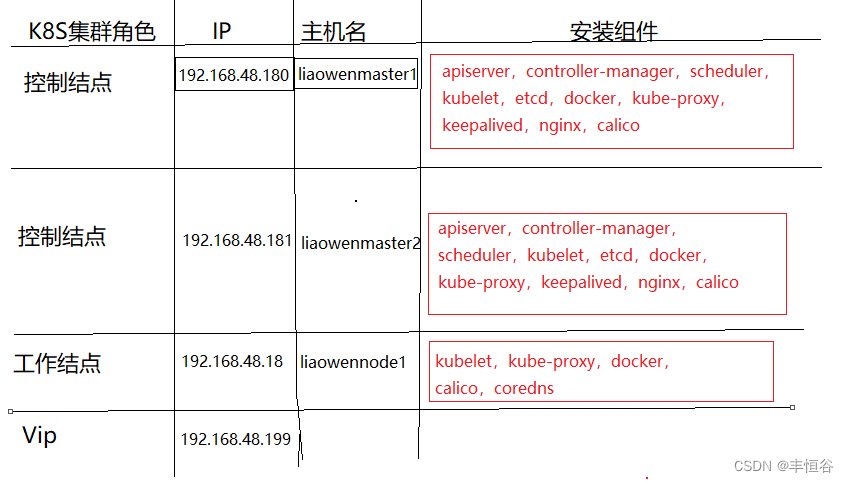

Cluster IPs and the components commonly installed on each machine

There are two common installation methods: kubeadm and binary installation. This guide uses kubeadm.

2 Initialize the cluster lab environment

2.1 Configure a static IP

# Edit /etc/sysconfig/network-scripts/ifcfg-ens33 (values shown for master1; use 192.168.48.181 on master2 and 192.168.48.182 on node1)

```
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=static
IPADDR=192.168.48.180
NETMASK=255.255.255.0
GATEWAY=192.168.48.2
DNS1=192.168.48.2
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=ens33
DEVICE=ens33
ONBOOT=yes
```

# Restart the network service to apply the change

service network restart

2.2 Configure hostnames

Set the hostname on each of the three machines (run the matching command on each one):

hostnamectl set-hostname liaowenmaster1 && bash

hostnamectl set-hostname liaowenmaster2 && bash

hostnamectl set-hostname liaowennode1 && bash

2.3 Configure the hosts file

On every machine in the cluster, edit /etc/hosts and add the following three lines:

```
192.168.48.180 liaowenmaster1
192.168.48.181 liaowenmaster2
192.168.48.182 liaowennode1
```
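Because this step is repeated on every machine, it helps if re-running it never duplicates lines. A minimal sketch, assuming bash and GNU grep: `add_hosts_entries` is a hypothetical helper, and the file path is a parameter (defaulting to /etc/hosts) so it can be tried on a scratch copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append each "IP hostname" pair to a hosts file
# only if that exact line is not already present.
add_hosts_entries() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  # The three cluster entries listed above
  for entry in \
    "192.168.48.180 liaowenmaster1" \
    "192.168.48.181 liaowenmaster2" \
    "192.168.48.182 liaowennode1"; do
    # -x: whole-line match; -F: fixed string, so dots are not regex wildcards
    if ! grep -qxF "$entry" "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Run `add_hosts_entries` as root on each machine; a second run leaves the file unchanged.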

2.4 Configure passwordless SSH between hosts

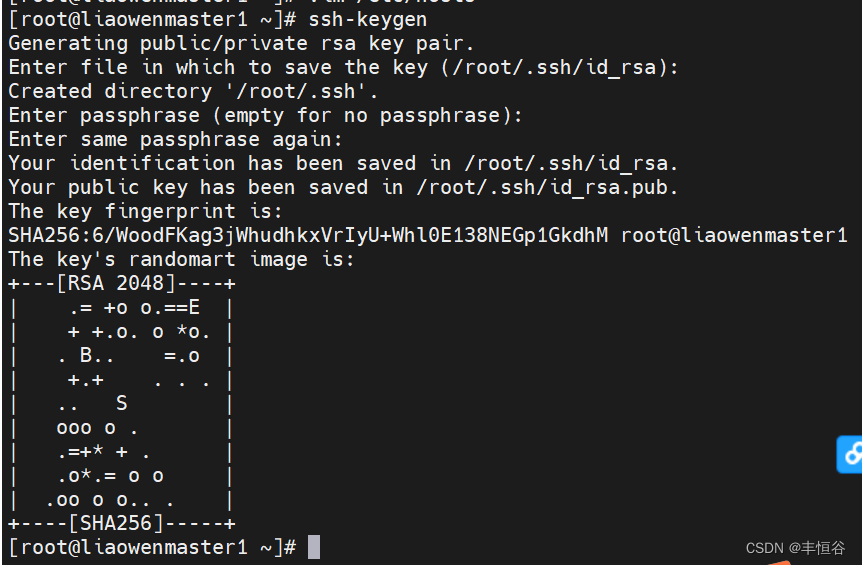

# 1. Generate a key pair (press Enter at every prompt)

ssh-keygen

# 2. Copy the local public key to each remote host

ssh-copy-id liaowenmaster1

ssh-copy-id liaowenmaster2

ssh-copy-id liaowennode1
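The key generation and the three ssh-copy-id calls can also be scripted. This is a sketch, not the author's procedure: `setup_ssh_trust` and `CLUSTER_HOSTS` are hypothetical names, the key type (RSA with an empty passphrase) is an assumption, and setting DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical host list, taken from /etc/hosts above
CLUSTER_HOSTS=(liaowenmaster1 liaowenmaster2 liaowennode1)

setup_ssh_trust() {
  local run="" key="$HOME/.ssh/id_rsa" host
  # DRY_RUN=1 turns every command into an echo
  [ -n "${DRY_RUN:-}" ] && run="echo"
  if [ ! -f "$key" ]; then
    $run mkdir -p -m 700 "$HOME/.ssh"
    # -N '' = empty passphrase, -f = key path, so nothing is prompted
    $run ssh-keygen -t rsa -N '' -f "$key"
  fi
  for host in "${CLUSTER_HOSTS[@]}"; do
    # Appends the local public key to the remote ~/.ssh/authorized_keys
    $run ssh-copy-id "$host"
  done
}
```

ssh-copy-id still asks for each host's password once; afterwards `ssh liaowennode1 hostname` should log in without a prompt.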

# 3. Repeat steps 1 and 2 on all three machines

2.5 Disable the swap partition

# Disable temporarily (until the next reboot)

swapoff -a

Swap must be turned off on all three machines.

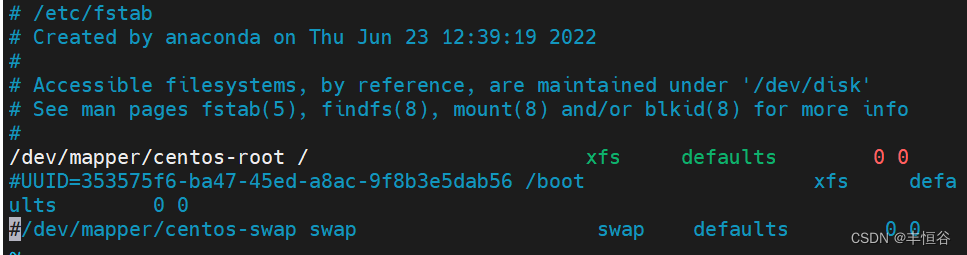
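To confirm that swap is really off before running kubeadm, /proc/swaps can be checked: it always contains one header line, plus one line per active swap device. `swap_is_off` is a hypothetical helper; `free -m` shows the same thing interactively (a Swap total of 0).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when no swap device is active.
# /proc/swaps always starts with one header line; every further line is an
# active swap device.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps_file")" -le 1 ]
}
```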

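# Disable permanently: comment out the swap mount by putting # at the start of the swap line

Make this change in /etc/fstab.

Do this on all three machines.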
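The fstab edit can also be done non-interactively with sed. A sketch, assuming GNU sed: `disable_swap_in_fstab` is a hypothetical helper that prefixes every uncommented line containing a whitespace-delimited `swap` field with `#`, keeping a .bak copy. Lines that are already commented are skipped, so running it twice is harmless.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in fstab.
# A .bak copy of the original file is kept next to it.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Only uncommented lines that contain a whitespace-delimited "swap" field
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab"
}
```

Check the result with `grep swap /etc/fstab` before rebooting.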

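Note: to keep performance predictable, kubeadm checks during initialization whether swap is disabled, and initialization fails if it is not. You can leave swap on instead, but then you must pass --ignore-preflight-errors=Swap during installation; the kubelet itself also refuses to start with swap enabled unless its failSwapOn setting is set to false.

2.6 Modify kernel parameters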

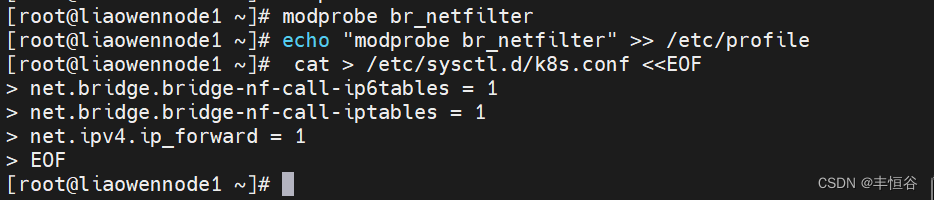

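```shell
# Load the br_netfilter module now
modprobe br_netfilter

# Reload it at every login shell (appended to /etc/profile)
echo "modprobe br_netfilter" >> /etc/profile

# Pass bridged traffic through iptables and enable IP forwarding
cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Apply the kernel parameters at runtime
sysctl -p /etc/sysctl.d/k8s.conf
```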
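Appending modprobe to /etc/profile only reloads br_netfilter when someone opens a login shell, not at boot. On systemd-based systems such as CentOS 7, files in /etc/modules-load.d/ are read by systemd-modules-load at boot, which is a more reliable place for it. A sketch under that assumption; `persist_k8s_kernel_settings` is a hypothetical helper whose root-directory argument lets it be tried in a scratch directory.

```shell
#!/usr/bin/env bash
# Sketch: persist br_netfilter and the bridge/forwarding sysctls.
# The root argument defaults to / but can be a scratch directory for a trial run.
persist_k8s_kernel_settings() {
  local root="${1:-/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # systemd-modules-load reads this directory at boot
  echo "br_netfilter" > "$root/etc/modules-load.d/k8s.conf"
  # Same parameters as above; read at boot by systemd-sysctl and by `sysctl --system`
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

After running it against / as root, `modprobe br_netfilter && sysctl --system` applies everything immediately, and `sysctl net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward` should report 1 for both.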

Perform the same operations on all three machines.

2.7 Disable the firewall

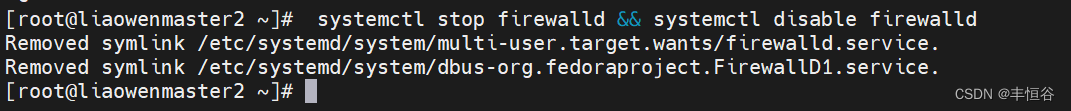

systemctl stop firewalld ; systemctl disable firewalld

Do this on all three machines.

2.8 Disable SELinux

# Permanent setting

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

The permanent setting takes effect after a reboot.
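The sed above only matches a file that currently says enforcing. A slightly more general sketch (hypothetical helper `disable_selinux_config`, GNU sed assumed) also handles permissive, and because it anchors on the whole `SELINUX=` line it leaves `SELINUXTYPE=` and the comments alone.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled whether the file currently says
# enforcing or permissive. Takes effect after a reboot.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
}
```

`setenforce 0` is still needed for the current boot; it switches SELinux to permissive mode rather than disabling it.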

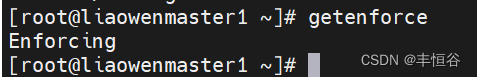

# Check the current status

getenforce

# Switch to permissive mode for the current boot

setenforce 0

2.9 Configure the Aliyun repo sources

Install the rz/sz commands:

yum install lrzsz -y

Install scp:

yum install openssh-clients

Back up the base repo files:

```shell
mkdir /root/repo.bak
cd /etc/yum.repos.d/
mv * /root/repo.bak/
```

Download the Aliyun repo file into /etc/yum.repos.d/:

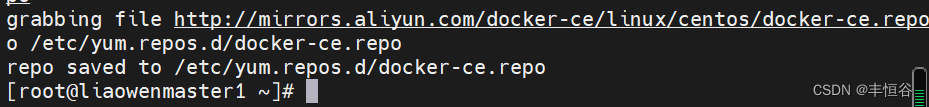

Configure the Aliyun mirror of the Docker CE repo:

```shell
yum install yum-utils
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

Final result:

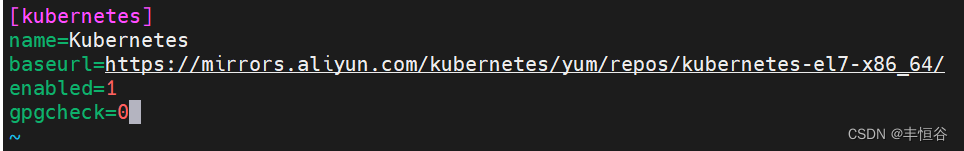

2.10 Configure the Aliyun repo for the Kubernetes components

Create the file /etc/yum.repos.d/kubernetes.repo:

```ini
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
```

Do this on every machine in the cluster.

2.11 Configure time synchronization

Install ntpdate:

yum install ntpdate -y

Synchronize with an NTP server:

ntpdate cn.pool.ntp.org

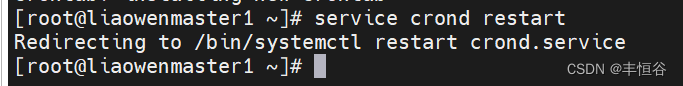

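Run the synchronization as a cron job. Open the crontab with:

crontab -e

and add this line (five time fields, then the command; it runs every minute):

*/1 * * * * /usr/sbin/ntpdate cn.pool.ntp.org

Restart the crond service:

service crond restart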
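crontab -e opens an editor, which is awkward when the same job has to be added on three machines. A sketch with two hypothetical helpers: `ensure_cron_line` reads an existing crontab on stdin and appends the job only if it is missing, and `install_ntp_cron` pipes the current user's crontab through it back into `crontab -`. Note that a crontab entry needs five time fields before the command.

```shell
#!/usr/bin/env bash
# Five time fields (minute hour day-of-month month day-of-week), then the command
NTP_CRON_LINE='*/1 * * * * /usr/sbin/ntpdate cn.pool.ntp.org'

# Hypothetical helper: echo the crontab read from stdin, then append LINE
# only if an identical line is not already there.
ensure_cron_line() {
  local line="$1" existing
  existing=$(cat)
  if [ -n "$existing" ]; then
    printf '%s\n' "$existing"
  fi
  if ! printf '%s\n' "$existing" | grep -qxF -- "$line"; then
    printf '%s\n' "$line"
  fi
}

# Hypothetical helper: apply it to the current user's crontab.
# `crontab -l` fails when no crontab exists yet, which is treated as empty.
install_ntp_cron() {
  { crontab -l 2>/dev/null || true; } | ensure_cron_line "$NTP_CRON_LINE" | crontab -
}
```

Running `install_ntp_cron` as root on each machine replaces the manual edit; running it again adds nothing.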

The result is shown below. Do this on all three machines.

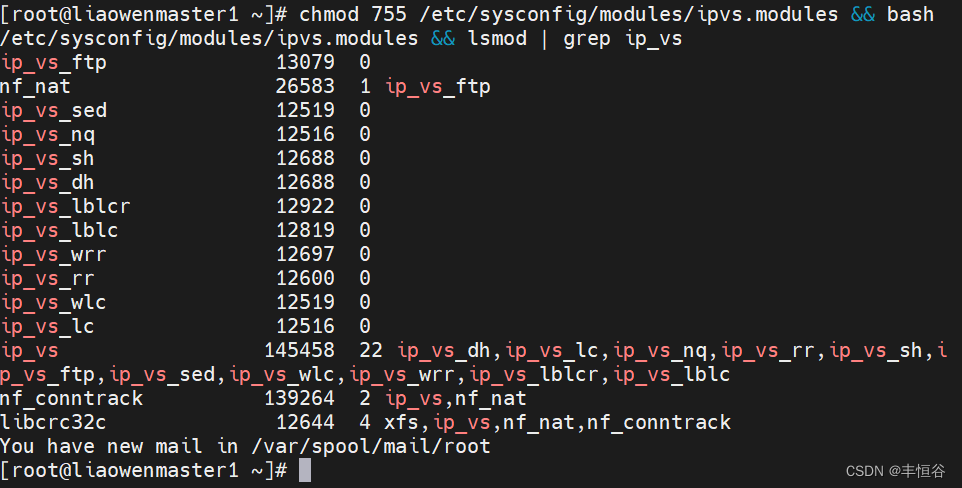

2.12 Enable IPVS

Write a script that loads the IPVS kernel modules, saved as /etc/sysconfig/modules/ipvs.modules:

```bash
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
    # Load the module only if it exists for the running kernel
    if /sbin/modinfo -F filename "${kernel_module}" > /dev/null 2>&1; then
        /sbin/modprobe "${kernel_module}"
    fi
done
```

Make it executable, run it, and confirm the modules are loaded:

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

As shown below:

Do the same on all three machines.

2.13 Install basic dependency packages

yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

A successful install looks like this. Do this on all three machines.

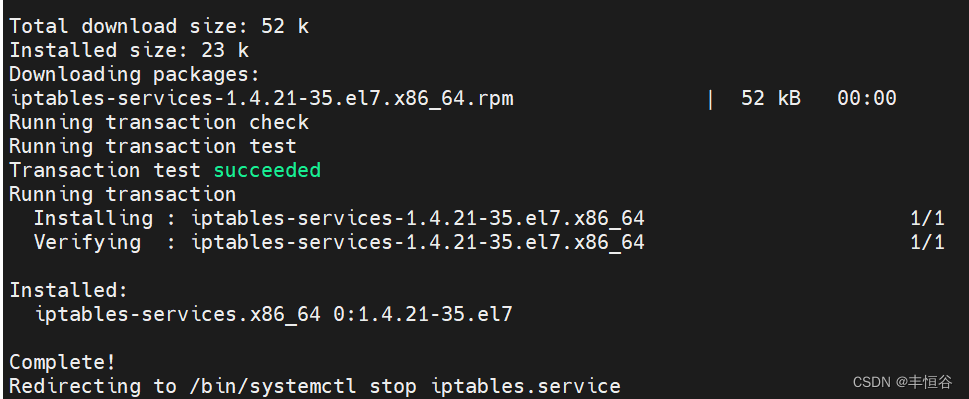

2.14 Install iptables

Install the iptables service:

yum install iptables-services -y

Stop and disable iptables:

service iptables stop && systemctl disable iptables

Flush the firewall rules:

iptables -F

On success:

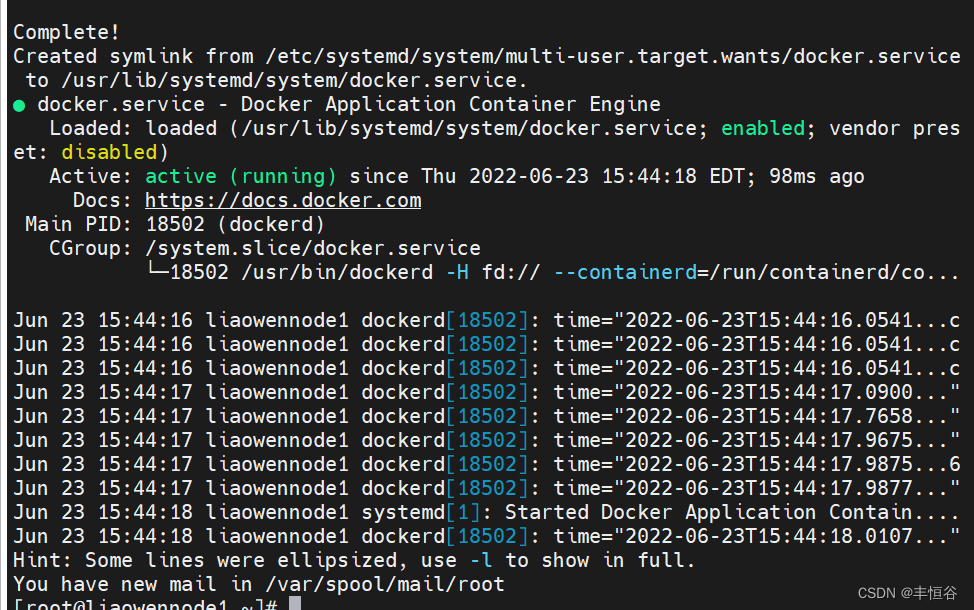

3 Install Docker

3.1 Install docker-ce

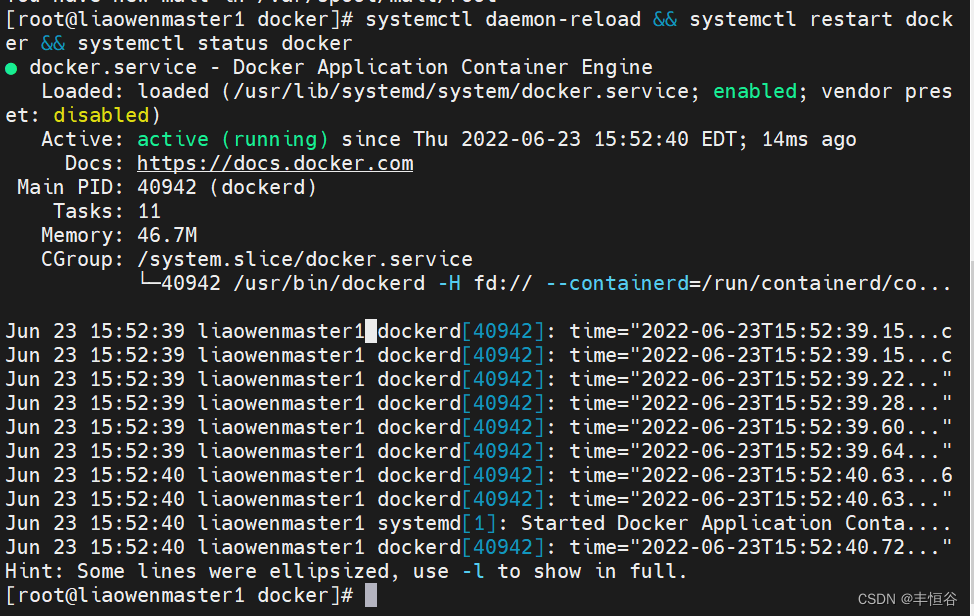

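Install, start and enable the Docker service:

yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y && systemctl start docker && systemctl enable docker && systemctl status docker

After a successful install:

Install it on all three machines.

3.2 Configure the Docker registry mirror and cgroup driver

Create the daemon configuration file /etc/docker/daemon.json: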

```shell
# Create the directory in case it does not exist yet
sudo mkdir -p /etc/docker
# Registry mirror for faster pulls; systemd cgroup driver, as kubeadm recommends
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://xbmqrz1y.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
sudo systemctl status docker
```

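4 Install the packages needed to initialize k8s

yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6 && systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

The kubelet will not be in the running state at this point, and that is expected: it keeps restarting until kubeadm init or kubeadm join writes its configuration, and becomes healthy once the cluster is up.

kubeadm: a tool for initializing the k8s cluster

kubelet: installed on every node in the cluster; it starts the Pods

kubectl: used to deploy and manage applications, inspect resources, and create, delete and update components

All the steps above are common to any k8s installation.

5 Make the k8s apiserver highly available with keepalived + nginx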

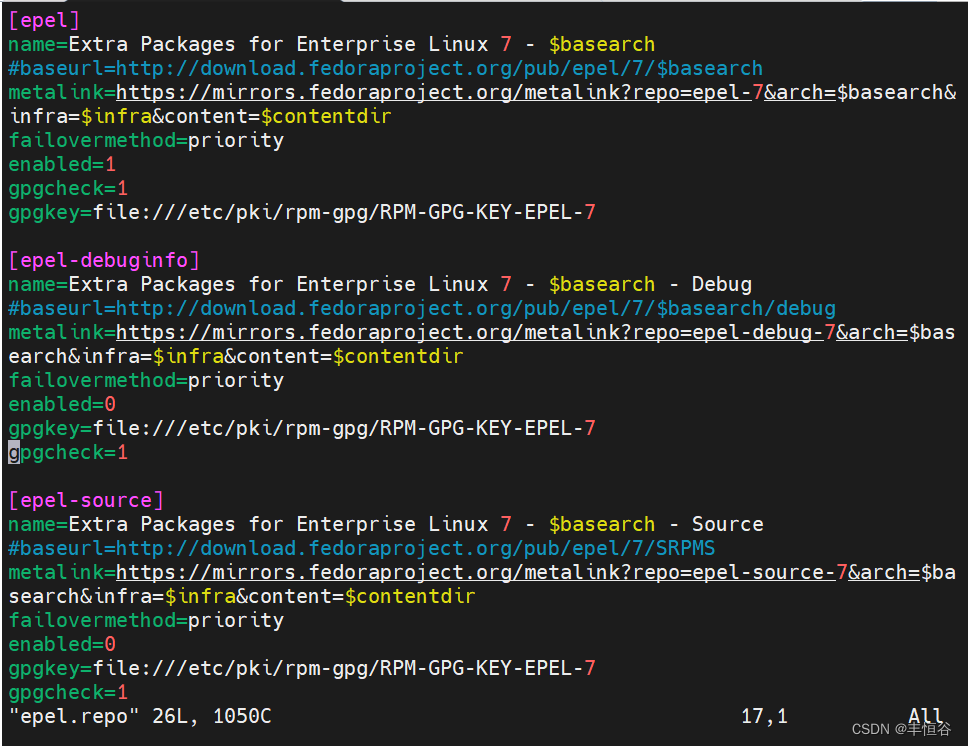

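Configure the EPEL repo.

All three machines need this.

5.1 Install nginx (primary and backup)

Install nginx and keepalived on the control-plane nodes:

yum install nginx keepalived -y

5.2 Edit the nginx configuration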

Edit /etc/nginx/nginx.conf:

```nginx
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

# Layer-4 load balancing across the apiserver components of the two masters
stream {

    log_format  main  '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

    access_log  /var/log/nginx/k8s-access.log  main;

    upstream k8s-apiserver {
        server 192.168.48.180:6443;   # Master1 APISERVER IP:PORT
        server 192.168.48.181:6443;   # Master2 APISERVER IP:PORT
    }

    server {
        # nginx runs on the master nodes themselves, so this port must not be 6443 or it would conflict
        listen 16443;
        proxy_pass k8s-apiserver;
    }
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 2048;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    server {
        listen       80 default_server;
        server_name  _;

        location / {
        }
    }
}
```

5.3 keepalived configuration

Primary keepalived configuration, in /etc/keepalived/keepalived.conf:

```
global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

# Script that checks whether nginx is working (its result decides failover)
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER                # BACKUP on master2
    interface ens33             # change to the actual NIC name
    virtual_router_id 51        # VRRP router ID; unique per instance
    priority 100                # priority; set 90 on the backup server
    advert_int 1                # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP (VIP)
    virtual_ipaddress {
        192.168.48.199/24
    }
    track_script {
        check_nginx
    }
}
```

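Write the health-check script /etc/keepalived/check_nginx.sh:

```bash
#!/bin/bash
# Count nginx processes started from /usr/sbin/nginx, excluding the grep
# commands themselves and this script's own PID
count=$(ps -ef | grep nginx | grep sbin | egrep -cv "grep|$$")
if [ "$count" -eq 0 ]; then
    # nginx is down: stop keepalived so the VIP moves to the backup node
    systemctl stop keepalived
fi
```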

chmod +x /etc/keepalived/check_nginx.sh

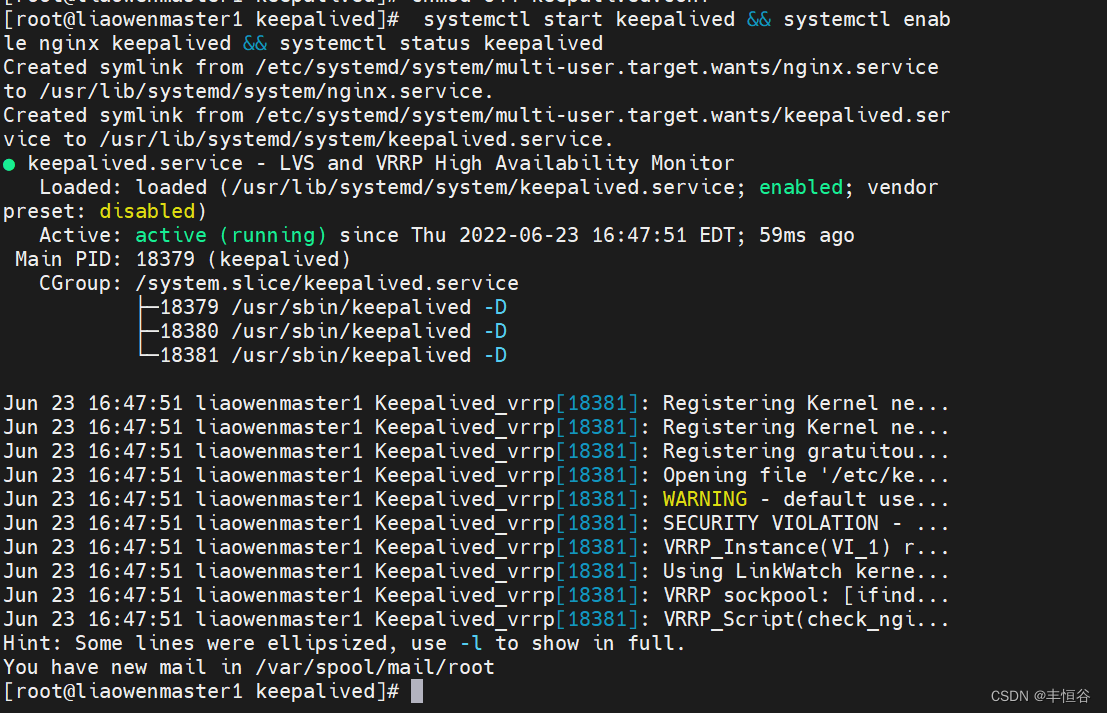

5.4 Start the services

Start nginx (the stream block needs the nginx-mod-stream module, installed here):

systemctl daemon-reload && yum install nginx-mod-stream -y && systemctl start nginx

Start keepalived:

systemctl start keepalived && systemctl enable nginx keepalived && systemctl status keepalived

注意:keepalived无法启动的时候,查看keepalived.conf配置权限

chmod 644 keepalived.conf

启动成功如下:

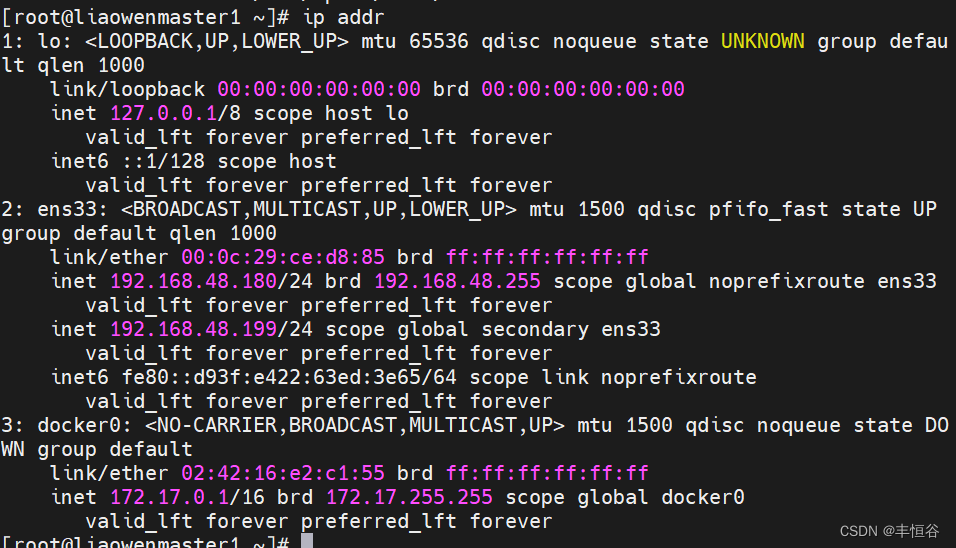

两天控制结点都需要启动5.5 测试vip是否绑定成功

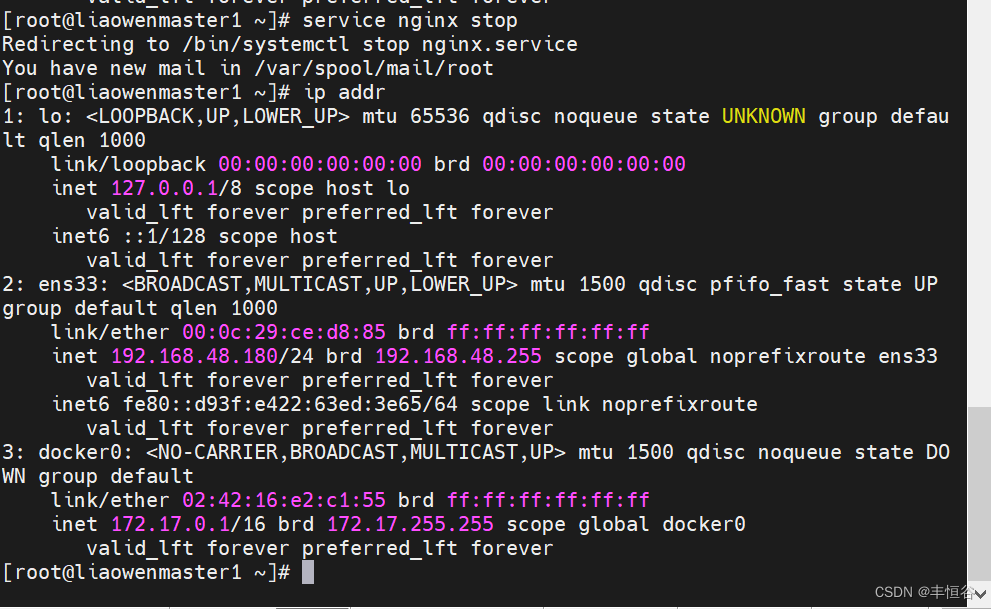

On master1, run ip addr and check that the VIP 192.168.48.199 is present.

5.6 Test keepalived failover

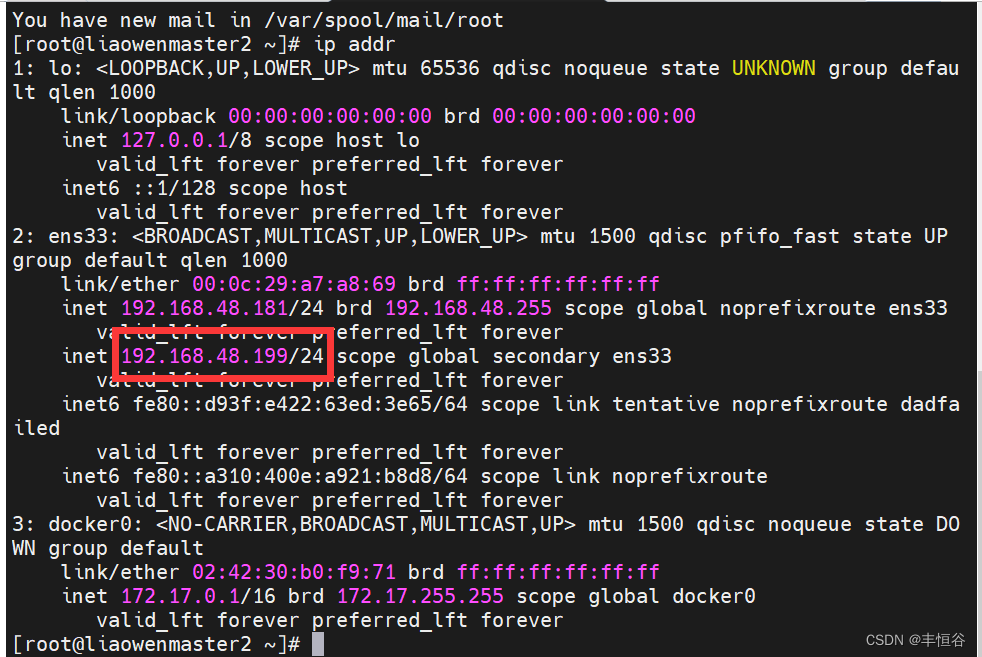

Stop nginx on master1. The VIP should float over to master2:

service nginx stop

master1 shows:

master2 shows:

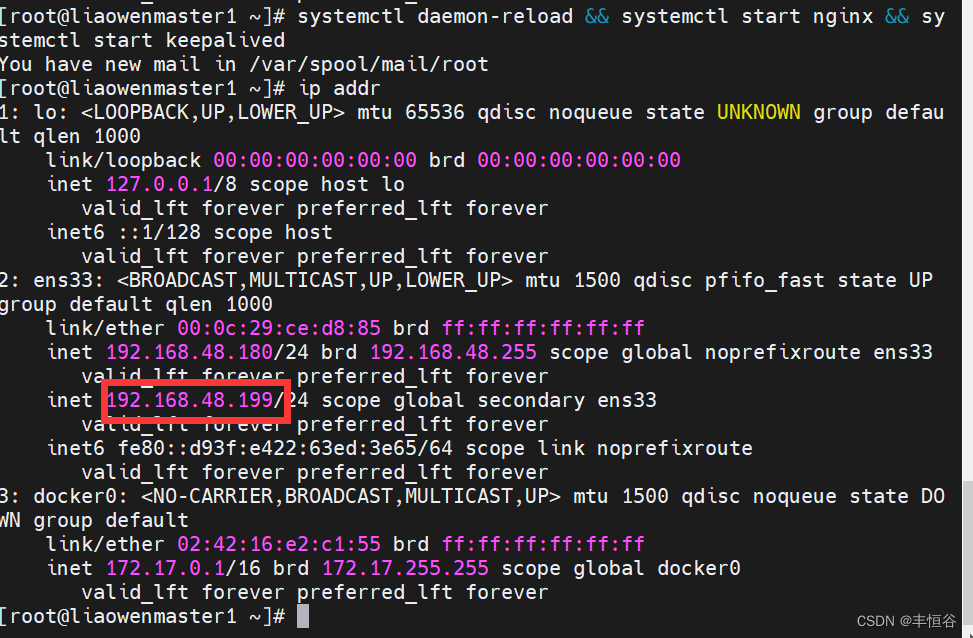

Restart the services on master1:

systemctl daemon-reload && systemctl start nginx && systemctl start keepalived
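To watch failover from a machine other than the masters (for example node1), a quick TCP probe of the VIP on port 16443 can be run before and after stopping nginx on master1. A minimal sketch; `tcp_port_open` is a hypothetical helper relying on bash's /dev/tcp and coreutils `timeout`. Since kubeadm has not been run yet at this stage, it only shows that nginx accepts connections on the VIP, not that an apiserver answers behind it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT can be opened
# within 2 seconds. Uses bash's /dev/tcp pseudo-device and coreutils timeout,
# so neither nc nor telnet is required.
tcp_port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

From node1: `tcp_port_open 192.168.48.199 16443 && echo reachable`, once before and once after `service nginx stop` on master1. Both should succeed if the VIP moved to master2.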

6 Initialize the k8s cluster with kubeadm

Create the configuration

On the master1 control-plane node:

cd /root/

Create kubeadm-config.yaml:

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.6
controlPlaneEndpoint: 192.168.48.199:16443
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
  - 192.168.48.180
  - 192.168.48.181
  - 192.168.48.182
  - 192.168.48.199
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.10.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
```

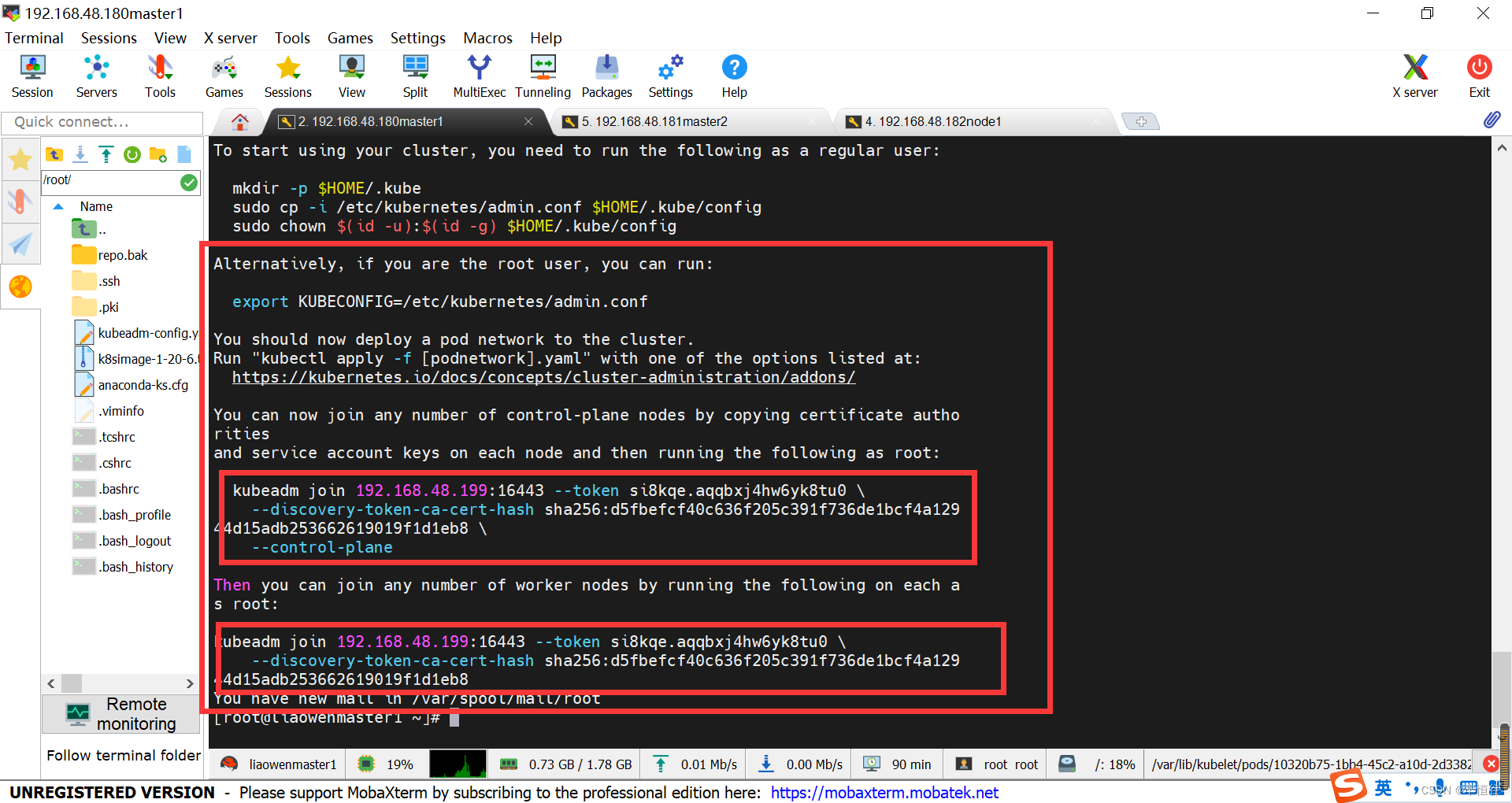

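Initialize the k8s cluster with kubeadm.

# Upload the offline k8s image bundle to master1, master2 and node1 and load it by hand (required on all three servers)

docker load -i k8simage-1-20-6.tar.gz

# Initialize, on master1:

kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification

When the command finishes, it prints the join commands.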

To add a master to the cluster:

```shell
kubeadm join 192.168.48.199:16443 --token si8kqe.aqqbxj4hw6yk8tu0 \
    --discovery-token-ca-cert-hash sha256:d5fbefcf40c636f205c391f736de1bcf4a12944d15adb253662619019f1d1eb8 \
    --control-plane
```

To add a worker node to the cluster:

```shell
kubeadm join 192.168.48.199:16443 --token si8kqe.aqqbxj4hw6yk8tu0 \
    --discovery-token-ca-cert-hash sha256:d5fbefcf40c636f205c391f736de1bcf4a12944d15adb253662619019f1d1eb8
```

Set up the kubectl config file:

mkdir -p $HOME/.kube && cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && chown $(id -u):$(id -g) $HOME/.kube/config

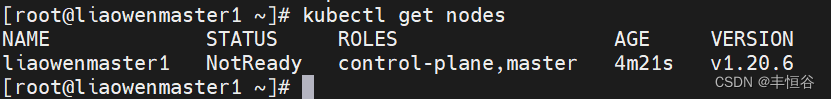

Check the cluster nodes:

kubectl get nodes
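Until a network plugin is installed (Calico, in section 9), the nodes are expected to report NotReady. A small sketch for picking out nodes that are not Ready from `kubectl get nodes --no-headers`, where STATUS is the second column; `not_ready_nodes` is a hypothetical helper, and a status such as Ready,SchedulingDisabled is treated as Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of nodes whose STATUS (column 2 of
# `kubectl get nodes --no-headers`) is not Ready. Statuses such as
# "Ready,SchedulingDisabled" count as Ready.
not_ready_nodes() {
  awk '$2 !~ /^Ready(,|$)/ { print $1 }'
}
```

`kubectl get nodes --no-headers | not_ready_nodes` prints nothing once every node is Ready; `kubectl wait --for=condition=Ready nodes --all --timeout=300s` is a built-in way to block until then.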

7 Scale out the k8s cluster: add a master node

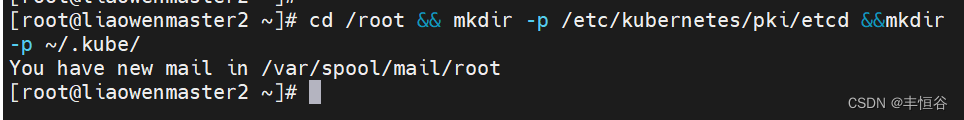

On master2, create the directories for the certificates and the kubeconfig:

cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/

As shown below:

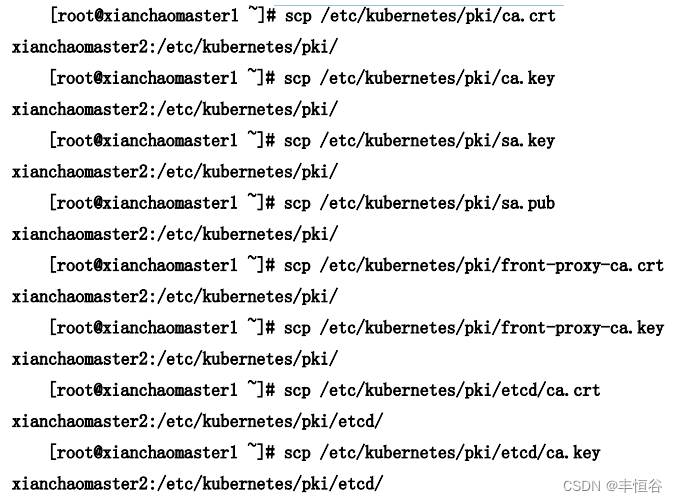

Copy the certificates from master1 to master2.

On master1:

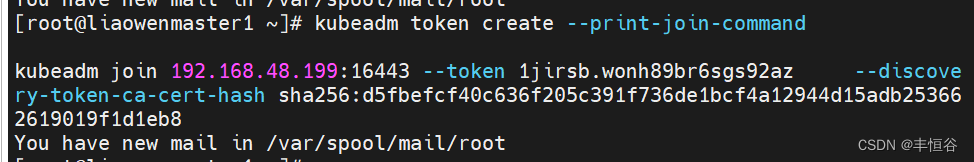
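The copy commands themselves only survive as a screenshot. As a sketch, assuming root SSH access from master1 (set up in 2.4) and the file list from the kubeadm high-availability guide's manual certificate distribution section, the shared certificates could be copied like this; `copy_control_plane_certs` and `SHARED_CERTS` are hypothetical names, and DRY_RUN=1 only prints the scp commands.

```shell
#!/usr/bin/env bash
# Sketch: copy the shared cluster CA, front-proxy CA, etcd CA and
# service-account key pair from master1 to another control-plane node.
SHARED_CERTS=(
  ca.crt ca.key
  sa.key sa.pub
  front-proxy-ca.crt front-proxy-ca.key
  etcd/ca.crt etcd/ca.key
)

copy_control_plane_certs() {
  local target="$1" run="" f
  # DRY_RUN=1 prints the scp commands instead of running them
  [ -n "${DRY_RUN:-}" ] && run="echo"
  for f in "${SHARED_CERTS[@]}"; do
    # Same path on the target; /etc/kubernetes/pki/etcd must already exist there
    $run scp "/etc/kubernetes/pki/$f" "root@${target}:/etc/kubernetes/pki/$f"
  done
}
```

On master1 this would be `copy_control_plane_certs liaowenmaster2`. As an alternative, kubeadm 1.20 can distribute these files itself: `kubeadm init phase upload-certs --upload-certs` prints a certificate key, which is then passed to `kubeadm join ... --control-plane --certificate-key <key>`.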

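Generate the join command (token):

Join master2 to the cluster:

Run the command generated on master1, adding the --control-plane flag.

8 Scale out the k8s cluster: add a worker node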

On master1:

kubeadm token create --print-join-command

The output looks like this:

kubeadm join 192.168.48.199:16443 --token ewchm4.uxqm5jacqvyez5vl --discovery-token-ca-cert-hash sha256:d5fbefcf40c636f205c391f736de1bcf4a12944d15adb253662619019f1d1eb8
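If the join command is lost, or you want to cross-check the hash it prints, the value for --discovery-token-ca-cert-hash can be recomputed from the cluster CA on master1. The openssl pipeline below is the one given in the kubeadm join documentation, wrapped in a hypothetical helper `ca_cert_hash`; it assumes an RSA CA key, which is kubeadm's default.

```shell
#!/usr/bin/env bash
# Recompute the --discovery-token-ca-cert-hash value: SHA-256 over the
# DER-encoded public key of the cluster CA. Assumes an RSA CA key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On master1, `echo "sha256:$(ca_cert_hash)"` should print the same value that appears after --discovery-token-ca-cert-hash in the join command.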

On node1:

Run the command generated on master1:

kubeadm join 192.168.48.199:16443 --token ewchm4.uxqm5jacqvyez5vl --discovery-token-ca-cert-hash sha256:d5fbefcf40c636f205c391f736de1bcf4a12944d15adb253662619019f1d1eb8

9 Install the k8s network plugin: Calico

10 Test that pods created in k8s can reach the network

11 Test deploying a tomcat service on the k8s cluster

12 Test that CoreDNS works


- Original article: https://blog.csdn.net/u011744843/article/details/125437671