
A simple integration of client-go and gin, part 10: Update

Background

Part 9 (Create) covered creating namespaces, deployments and pods. This part moves on to update operations.

update namespace

Take a namespace as an example and add a label to it.

For namespaces, the fields we use most are name and labels. (I also did a resource quota example earlier; here I'll simply use labels.)

```shell
[zhangpeng@zhangpeng k8s-demo1]$ kubectl get ns --show-labels
NAME              STATUS   AGE     LABELS
default           Active   53d     kubernetes.io/metadata.name=default
kube-node-lease   Active   53d     kubernetes.io/metadata.name=kube-node-lease
kube-public       Active   53d     kubernetes.io/metadata.name=kube-public
kube-system       Active   53d     kubernetes.io/metadata.name=kube-system
zhangpeng         Active   1s      kubernetes.io/metadata.name=zhangpeng
zhangpeng1        Active   3h21m   kubernetes.io/metadata.name=zhangpeng1
```

When updating a namespace, the most common change is to its labels. Taking the zhangpeng namespace as an example, I want to add the label name=abcd.

/src/service/Namespace.go

```go
package service

import (
	"context"
	"fmt"
	"time"

	"github.com/gin-gonic/gin"
	. "k8s-demo1/src/lib"
	v1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

type Time struct {
	time.Time `protobuf:"-"`
}

type Namespace struct {
	Name        string            `json:"name"`
	CreateTime  time.Time         `json:"CreateTime"`
	Status      string            `json:"status"`
	Labels      map[string]string `json:"labels"`
	Annotations map[string]string `json:"annotations"`
}

func ListNamespace(g *gin.Context) {
	ns, err := K8sClient.CoreV1().Namespaces().List(context.Background(), metav1.ListOptions{})
	if err != nil {
		g.Error(err)
		return
	}
	ret := make([]*Namespace, 0)
	for _, item := range ns.Items {
		ret = append(ret, &Namespace{
			Name:       item.Name,
			CreateTime: item.CreationTimestamp.Time,
			Status:     string(item.Status.Phase),
			Labels:     item.Labels,
		})
	}
	g.JSON(200, ret)
}

func create(ns Namespace) (*v1.Namespace, error) {
	ctx := context.Background()
	newNamespace, err := K8sClient.CoreV1().Namespaces().Create(ctx, &v1.Namespace{
		ObjectMeta: metav1.ObjectMeta{
			Name:   ns.Name,
			Labels: ns.Labels,
		},
	}, metav1.CreateOptions{})
	if err != nil {
		fmt.Println(err)
	}
	return newNamespace, err
}

// updatenamespace sends a complete Namespace object. Update replaces the
// object's metadata, so labels and annotations missing from ns are removed.
func updatenamespace(ns Namespace) (*v1.Namespace, error) {
	ctx := context.Background()
	newNamespace, err := K8sClient.CoreV1().Namespaces().Update(ctx, &v1.Namespace{
		ObjectMeta: metav1.ObjectMeta{
			Name:   ns.Name,
			Labels: ns.Labels,
		},
	}, metav1.UpdateOptions{})
	if err != nil {
		fmt.Println(err)
	}
	return newNamespace, err
}

func CreateNameSpace(g *gin.Context) {
	var nameSpace Namespace
	if err := g.ShouldBind(&nameSpace); err != nil {
		// err.Error() gives a readable message; return so the handler stops here.
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	namespace, err := create(nameSpace)
	if err != nil {
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	ns := Namespace{
		Name:        namespace.Name,
		CreateTime:  namespace.CreationTimestamp.Time,
		Status:      string(namespace.Status.Phase),
		Labels:      nil,
		Annotations: nil,
	}
	g.JSON(200, ns)
}

func UpdateNameSpace(g *gin.Context) {
	var nameSpace Namespace
	if err := g.ShouldBind(&nameSpace); err != nil {
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	namespace, err := updatenamespace(nameSpace)
	if err != nil {
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	ns := Namespace{
		Name:        namespace.Name,
		CreateTime:  namespace.CreationTimestamp.Time,
		Status:      string(namespace.Status.Phase),
		Labels:      namespace.Labels,
		Annotations: nil,
	}
	g.JSON(200, ns)
}
```

This adds the updatenamespace helper and the UpdateNameSpace handler to the service package.

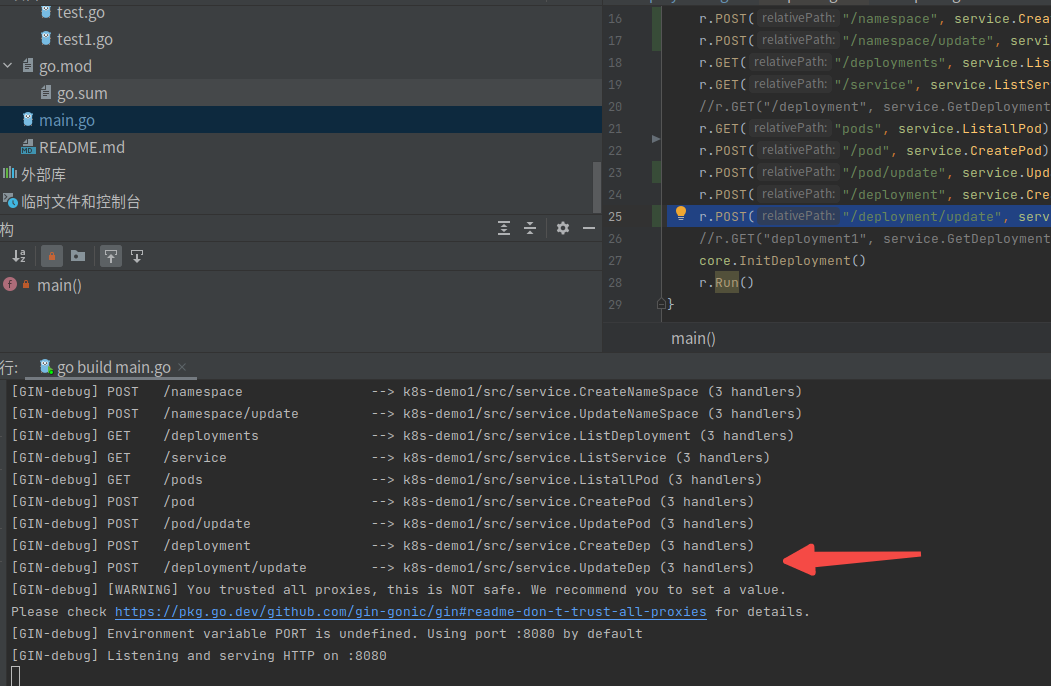
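Because Update sends a complete object, updatenamespace replaces the namespace's metadata with whatever the request contains, so labels not listed in the body (and any annotations) are most likely dropped. If the goal is only to add or change one label, a JSON merge patch touches just those keys. The sketch below only builds the patch body with the standard library; labelMergePatch is a hypothetical helper, and the assumption is that the body would then be sent with client-go's `Namespaces().Patch(ctx, name, types.MergePatchType, body, metav1.PatchOptions{})` (`types` being `k8s.io/apimachinery/pkg/types`).

```go
package main

import (
	"encoding/json"
	"fmt"
)

// labelMergePatch (hypothetical helper) builds a JSON merge patch (RFC 7386)
// body that adds or overwrites the given labels and leaves every other
// field of the object untouched. In a merge patch a label is deleted by
// setting it to null; this sketch only covers add/overwrite.
func labelMergePatch(labels map[string]string) ([]byte, error) {
	patch := map[string]interface{}{
		"metadata": map[string]interface{}{
			"labels": labels,
		},
	}
	// encoding/json sorts map keys, so the output is deterministic.
	return json.Marshal(patch)
}

func main() {
	body, err := labelMergePatch(map[string]string{"name": "abcd"})
	if err != nil {
		panic(err)
	}
	// With client-go the body would be passed on (not executed here):
	//   K8sClient.CoreV1().Namespaces().Patch(ctx, "zhangpeng",
	//       types.MergePatchType, body, metav1.PatchOptions{})
	fmt.Println(string(body)) // {"metadata":{"labels":{"name":"abcd"}}}
}
```

Compared with Update, the patch does not need the rest of the object, so existing labels such as kubernetes.io/metadata.name stay as they are.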

Create the route in main.go:

```go
package main

import (
	"github.com/gin-gonic/gin"
	"k8s-demo1/src/core"
	"k8s-demo1/src/service"
	// "k8s.io/client-go/informers/core"
)

func main() {
	r := gin.Default()
	r.GET("/", func(context *gin.Context) {
		context.JSON(200, "hello")
	})
	r.GET("/namespaces", service.ListNamespace)
	r.POST("/namespace", service.CreateNameSpace)
	r.POST("/namespace/update", service.UpdateNameSpace)
	r.GET("/deployments", service.ListDeployment)
	r.GET("/service", service.ListService)
	r.GET("/pods", service.ListallPod)
	r.POST("/pod", service.CreatePod)
	r.POST("/deployment", service.CreateDep)
	core.InitDeployment()
	r.Run() // listens on :8080 unless the PORT environment variable is set
}
```

Run main.go:

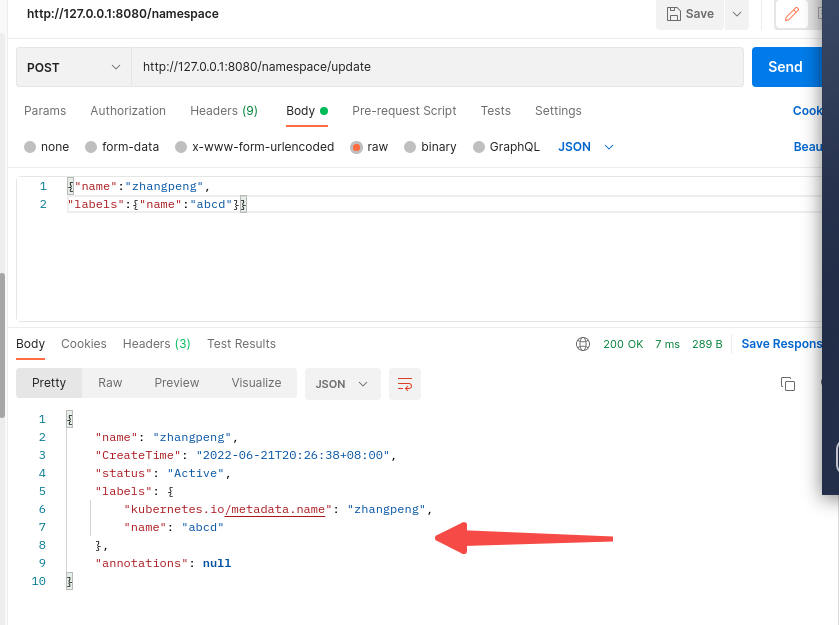

Testing with Postman

POST http://127.0.0.1:8080/namespace/update

```json
{"name":"zhangpeng", "labels":{"name":"abcd"}}
```

```shell
[zhangpeng@zhangpeng k8s-demo1]$ kubectl get ns --show-labels
```

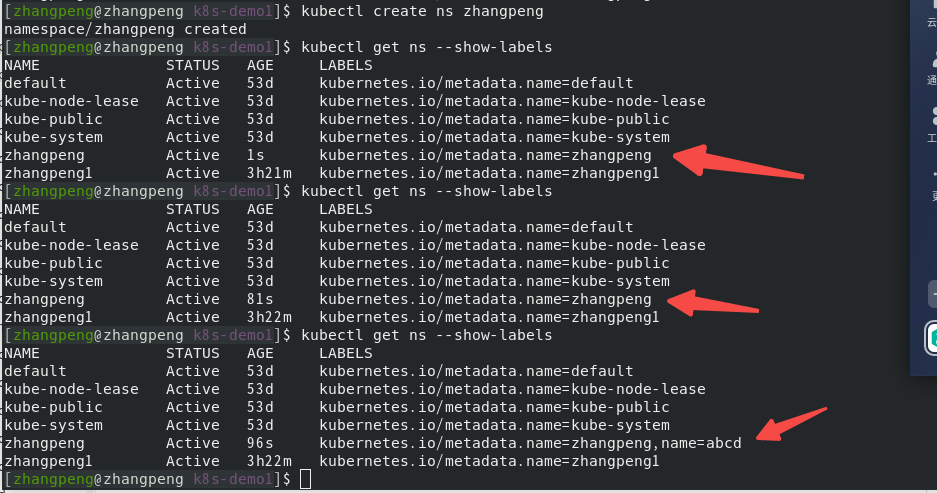

Change the name label to another value:

POST http://127.0.0.1:8080/namespace/update

```json
{"name":"zhangpeng", "labels":{"name":"abcd123"}}
```

Other things to try: extend this to ResourceQuotas and configure a quota along the same lines. I won't demonstrate that here.

update pod?

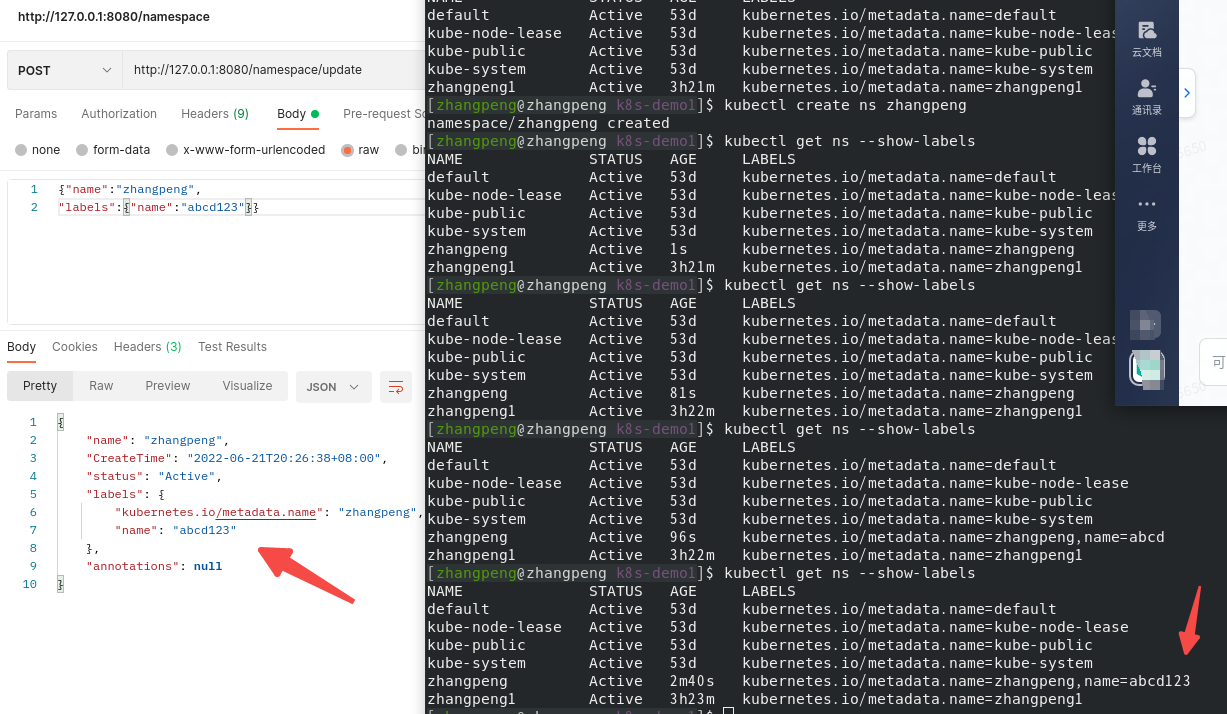

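Can a pod be updated? For the most part, no. Apart from metadata such as labels and annotations, a pod's spec is immutable once the pod exists; the API server only accepts changes to a few fields, such as spec.containers[*].image, spec.initContainers[*].image, spec.activeDeadlineSeconds and additions to spec.tolerations.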

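Note: a pod represents one lifecycle of its containers, so in principle it is not meant to be updated. Pods are normally managed by a controller such as a Deployment or ReplicaSet, and a change means the old pod's lifecycle ends and a new pod is created. Projects such as OpenKruise do offer in-place pod upgrades. Still, following the same pattern as before, let's try it and look at the error.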
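Although most of a pod's spec is immutable, the container image is one of the few fields the API server lets you change on an existing pod. Rather than rebuilding the whole Pod object, one option is a strategic merge patch: it merges the containers list by container name, so the body only needs the name and the new image. This is a minimal sketch assuming the container is named zhangpeng (as in the pod YAML below); imagePatch is a hypothetical helper, and the body would be sent with `Pods(namespace).Patch(ctx, name, types.StrategicMergePatchType, body, metav1.PatchOptions{})`. As far as I know, the kubelet then restarts that container with the new image while the pod object itself stays.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// containerImage is the smallest container entry a strategic merge patch
// needs: containers are merged by "name", so only "image" changes.
type containerImage struct {
	Name  string `json:"name"`
	Image string `json:"image"`
}

// imagePatch (hypothetical helper) builds a strategic-merge-patch body that
// replaces the image of one container, identified by its name.
func imagePatch(container, image string) ([]byte, error) {
	patch := map[string]interface{}{
		"spec": map[string]interface{}{
			"containers": []containerImage{{Name: container, Image: image}},
		},
	}
	return json.Marshal(patch)
}

func main() {
	body, err := imagePatch("zhangpeng", "nginx:1.18")
	if err != nil {
		panic(err)
	}
	// {"spec":{"containers":[{"name":"zhangpeng","image":"nginx:1.18"}]}}
	fmt.Println(string(body))
}
```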

```shell
[zhangpeng@zhangpeng k8s]$ kubectl get pods -n zhangpeng
NAME        READY   STATUS    RESTARTS   AGE
zhangpeng   1/1     Running   0          78m
```

```yaml
[zhangpeng@zhangpeng k8s-demo1]$ kubectl get pods -o yaml -n zhangpeng
apiVersion: v1
items:
- apiVersion: v1
  kind: Pod
  metadata:
    creationTimestamp: "2022-06-22T02:31:49Z"
    name: zhangpeng
    namespace: zhangpeng
    resourceVersion: "6076576"
    uid: cee39c9d-fc29-40ee-933e-d9fa76ba20e1
  spec:
    containers:
    - image: nginx
      imagePullPolicy: Always
      name: zhangpeng
      resources: {}
      terminationMessagePath: /dev/termination-log
      terminationMessagePolicy: File
      volumeMounts:
      - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
        name: kube-api-access-f7qvv
        readOnly: true
    dnsPolicy: ClusterFirst
    enableServiceLinks: true
    nodeName: k8s-2
    preemptionPolicy: PreemptLowerPriority
    priority: 0
    restartPolicy: Always
    schedulerName: default-scheduler
    securityContext: {}
    serviceAccount: default
    serviceAccountName: default
    terminationGracePeriodSeconds: 30
    tolerations:
    - effect: NoExecute
      key: node.kubernetes.io/not-ready
      operator: Exists
      tolerationSeconds: 300
    - effect: NoExecute
      key: node.kubernetes.io/unreachable
      operator: Exists
      tolerationSeconds: 300
    volumes:
    - name: kube-api-access-f7qvv
      projected:
        defaultMode: 420
        sources:
        - serviceAccountToken:
            expirationSeconds: 3607
            path: token
        - configMap:
            items:
            - key: ca.crt
              path: ca.crt
            name: kube-root-ca.crt
        - downwardAPI:
            items:
            - fieldRef:
                apiVersion: v1
                fieldPath: metadata.namespace
              path: namespace
  status:
    conditions:
    - lastProbeTime: null
      lastTransitionTime: "2022-06-22T02:31:49Z"
      status: "True"
      type: Initialized
    - lastProbeTime: null
      lastTransitionTime: "2022-06-22T02:32:06Z"
      status: "True"
      type: Ready
    - lastProbeTime: null
      lastTransitionTime: "2022-06-22T02:32:06Z"
      status: "True"
      type: ContainersReady
    - lastProbeTime: null
      lastTransitionTime: "2022-06-22T02:31:49Z"
      status: "True"
      type: PodScheduled
    containerStatuses:
    - containerID: docker://47d2c805f72cd023ff9b33d46f63b1b8e7600f64783685fe4c16d97f4b58b290
      image: nginx:latest
      imageID: docker-pullable://nginx@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31
      lastState: {}
      name: zhangpeng
      ready: true
      restartCount: 0
      started: true
      state:
        running:
          startedAt: "2022-06-22T02:32:05Z"
    hostIP: 192.168.0.176
    phase: Running
    podIP: 10.244.1.64
    podIPs:
    - ip: 10.244.1.64
    qosClass: BestEffort
    startTime: "2022-06-22T02:31:49Z"
kind: List
metadata:
  resourceVersion: ""
```

/src/service/Pod.go

Note: this was written by imitating the deployment create code. It fails anyway; the point is just to walk through the flow and see the result, and the code will be deleted later.

```go
// Excerpt: the Pod type, GetPorts and the imports live elsewhere in Pod.go.

// GetImageName strips the tag from the image: "nginx:1.18" -> "nginx".
// It cuts at the first ':', so an image with a registry port is not handled.
func (d *Pod) GetImageName() string {
	pods := strings.Index(d.Images, ":")
	if pods > 0 {
		return d.Images[:pods]
	}
	return d.Images
}

// updatepod rebuilds a Pod from the request and calls Update. This is
// expected to be rejected: most of a Pod's spec is immutable, and this
// object lacks fields the running pod already has (volumes, tolerations, ...).
func updatepod(pod Pod) (*corev1.Pod, error) {
	newpod, err := K8sClient.CoreV1().Pods(pod.Namespace).Update(context.TODO(), &corev1.Pod{
		ObjectMeta: metav1.ObjectMeta{
			Name:        pod.Name,
			Namespace:   pod.Namespace,
			Labels:      pod.Labels,
			Annotations: pod.Annotations,
		},
		Spec: corev1.PodSpec{
			Containers: []corev1.Container{
				{Name: pod.Name, Image: pod.Images, Ports: pod.GetPorts()},
			},
		},
	}, metav1.UpdateOptions{})
	if err != nil {
		fmt.Println(err)
	}
	return newpod, err
}

func UpdatePod(g *gin.Context) {
	var NewPod Pod
	if err := g.ShouldBind(&NewPod); err != nil {
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	pod, err := updatepod(NewPod)
	if err != nil {
		// Return here: on error pod has no containers, so
		// pod.Spec.Containers[0] below would panic.
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	newpod := Pod{
		Namespace:   pod.Namespace,
		Name:        pod.Name,
		Images:      pod.Spec.Containers[0].Image,
		CreateTime:  pod.CreationTimestamp.Format("2006-01-02 15:04:05"),
		Annotations: pod.ObjectMeta.Annotations,
	}
	g.JSON(200, newpod)
}
```
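GetImageName cuts at the first ':', so an image that includes a registry port, such as registry.example.com:5000/nginx:1.18, would come back as registry.example.com. Below is a sketch of a more careful helper, assuming you want the bare repository name (for example, to use as a container name). containerNameFromImage is hypothetical and does not validate the result against Kubernetes naming rules.

```go
package main

import (
	"fmt"
	"strings"
)

// containerNameFromImage (hypothetical) derives a bare name from an image:
//   "nginx:1.18"                                -> "nginx"
//   "registry.example.com:5000/team/nginx:1.18" -> "nginx"
//   "nginx@sha256:abc"                          -> "nginx"
// The result is not checked against Kubernetes naming rules.
func containerNameFromImage(image string) string {
	// Drop a digest suffix ("@sha256:...") first.
	if at := strings.Index(image, "@"); at >= 0 {
		image = image[:at]
	}
	// Only a ':' after the last '/' separates the tag; an earlier ':'
	// belongs to a registry host:port.
	lastSlash := strings.LastIndex(image, "/")
	if colon := strings.LastIndex(image, ":"); colon > lastSlash {
		image = image[:colon]
	}
	// Keep the final path segment (the repository name).
	return image[lastSlash+1:]
}

func main() {
	for _, img := range []string{"nginx", "nginx:1.18", "httpd", "registry.example.com:5000/team/nginx:1.18"} {
		fmt.Printf("%s -> %s\n", img, containerNameFromImage(img))
	}
}
```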

Add the following route to main.go:

```go
r.POST("/pod/update", service.UpdatePod)
```

Run main.go and test with Postman:

That's roughly it. This was only a demonstration to understand the pod lifecycle better; if you need in-place upgrades, OpenKruise is well worth a look.

update deployment

The initial deployment looks like this:

```shell
[zhangpeng@zhangpeng k8s]$ kubectl get pods -n zhangpeng
NAME                         READY   STATUS    RESTARTS   AGE
zhangpeng-5dffd5664f-nvfsm   1/1     Running   0          27s
[zhangpeng@zhangpeng k8s]$ kubectl get deployment zhangpeng -n zhangpeng
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
zhangpeng   1/1     1            1           40s
```

```yaml
[zhangpeng@zhangpeng k8s]$ kubectl get deployment zhangpeng -n zhangpeng -o yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: "2022-06-22T06:44:06Z"
  generation: 1
  name: zhangpeng
  namespace: zhangpeng
  resourceVersion: "6096527"
  uid: d42d5851-2e63-439b-b2a5-976b5fe246bb
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: zhangpeng
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: zhangpeng
      name: zhangpeng
    spec:
      containers:
      - image: nginx
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: "2022-06-22T06:44:24Z"
    lastUpdateTime: "2022-06-22T06:44:24Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2022-06-22T06:44:06Z"
    lastUpdateTime: "2022-06-22T06:44:24Z"
    message: ReplicaSet "zhangpeng-5dffd5664f" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 1
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1
```

The key fields in the request body are:

```json
{"name":"zhangpeng", "namespace":"zhangpeng", "replicas":1, "ports":"tcp,80,web", "images":"nginx"}
```

Write the code

/src/service/Deployment.go

```go
// Excerpt: the Deployment type, its helper methods, GetPodsByDep and the
// imports live elsewhere in Deployment.go.

func Updatedep(dep Deployment) (*v1.Deployment, error) {
	deployment := &v1.Deployment{
		ObjectMeta: metav1.ObjectMeta{
			Name:      dep.Name,
			Namespace: dep.Namespace,
			Labels:    dep.GetLabels(),
		},
		Spec: v1.DeploymentSpec{
			Replicas: &dep.Replicas,
			Selector: &metav1.LabelSelector{
				MatchLabels: dep.GetSelectors(),
			},
			Template: corev1.PodTemplateSpec{
				ObjectMeta: metav1.ObjectMeta{
					Name:   dep.Name,
					Labels: dep.GetSelectors(),
				},
				Spec: corev1.PodSpec{
					Containers: []corev1.Container{
						{
							Name:  dep.GetImageName(),
							Image: dep.Images,
							Ports: dep.GetPorts(),
						},
					},
				},
			},
		},
	}
	ctx := context.Background()
	// Update replaces the whole Deployment; fields not set here fall back to defaults.
	newdep, err := lib.K8sClient.AppsV1().Deployments(dep.Namespace).Update(ctx, deployment, metav1.UpdateOptions{})
	if err != nil {
		fmt.Println(err)
	}
	return newdep, err // propagate the error so UpdateDep can report it
}

func UpdateDep(g *gin.Context) {
	var newDep Deployment
	if err := g.ShouldBind(&newDep); err != nil {
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	newdep, err := Updatedep(newDep)
	if err != nil {
		g.JSON(500, gin.H{"error": err.Error()})
		return
	}
	newDep1 := Deployment{
		Namespace:  newdep.Namespace,
		Name:       newdep.Name,
		Pods:       GetPodsByDep(*newdep),
		CreateTime: newdep.CreationTimestamp.Format("2006-01-02 15:04:05"),
	}
	g.JSON(200, newDep1)
}
```

Note: this is basically the earlier deployment create method with small changes.

Add the route to main.go and run main.go:

```go
r.POST("/deployment/update", service.UpdateDep)
```

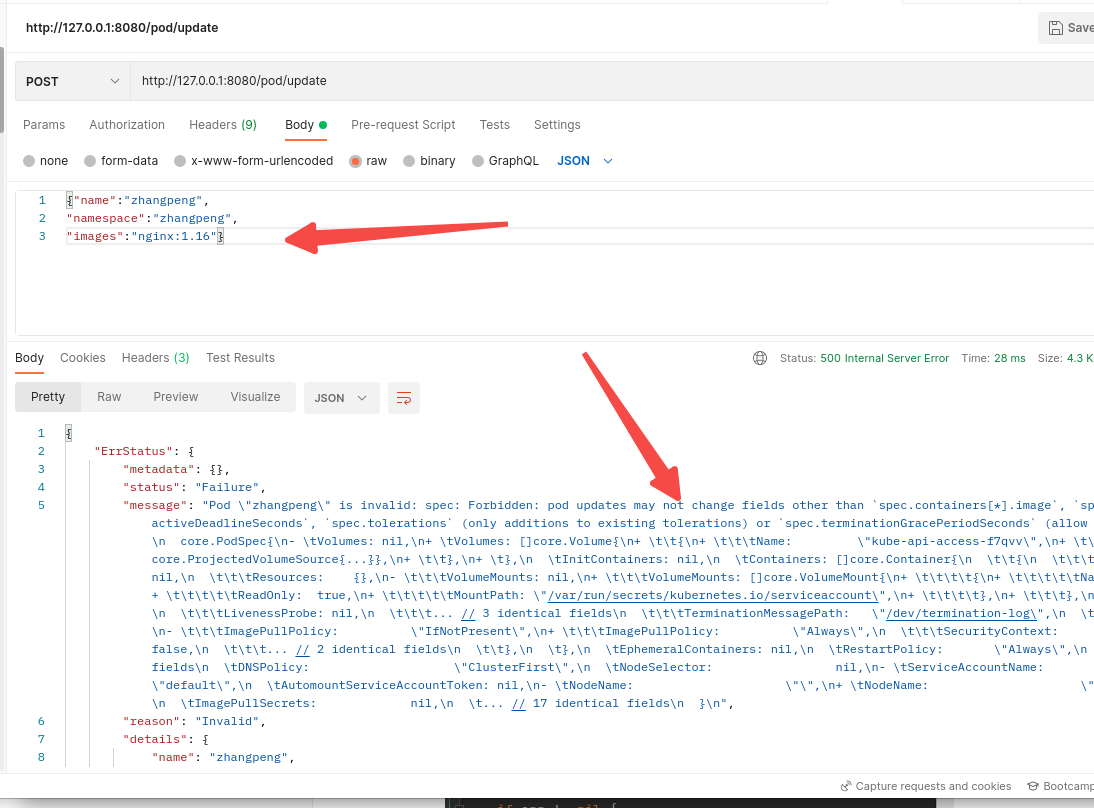

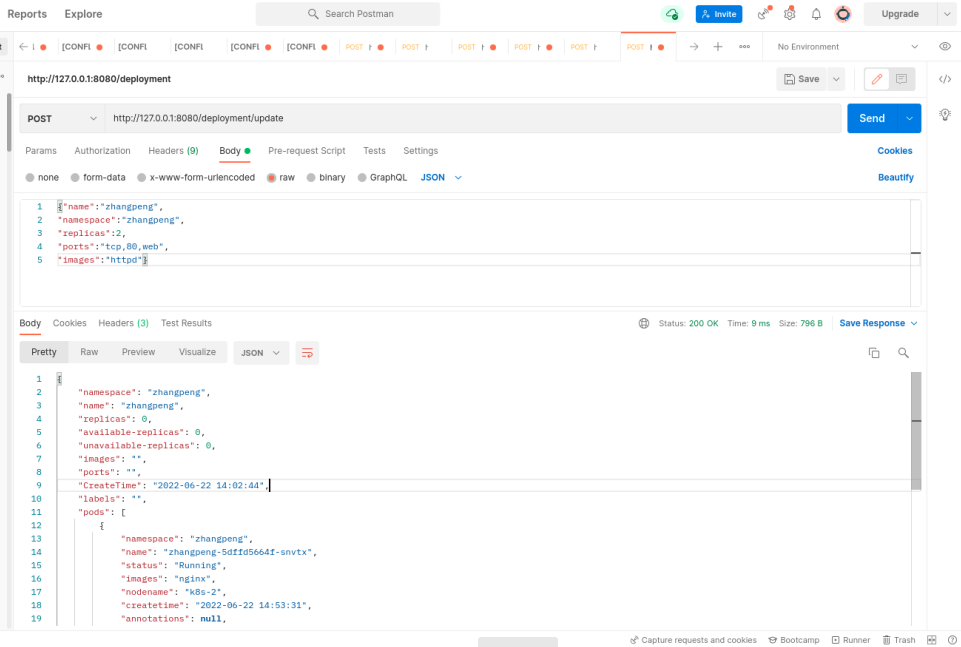

Testing with Postman

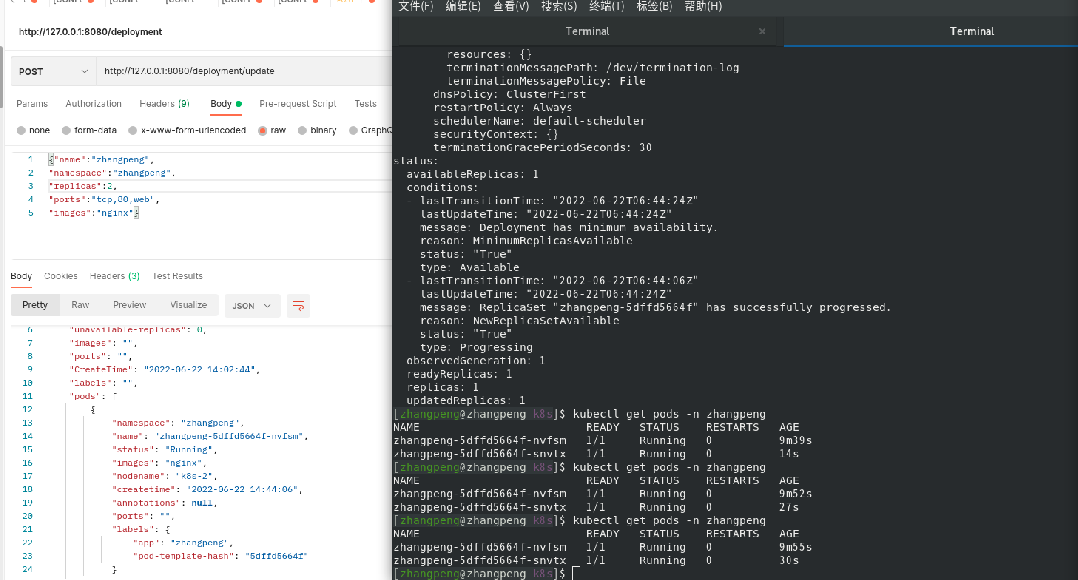

Change the replica count to 2:

POST http://127.0.0.1:8080/deployment/update

```json
{"name":"zhangpeng", "namespace":"zhangpeng", "replicas":2, "ports":"tcp,80,web", "images":"nginx"}
```

Change the image tag

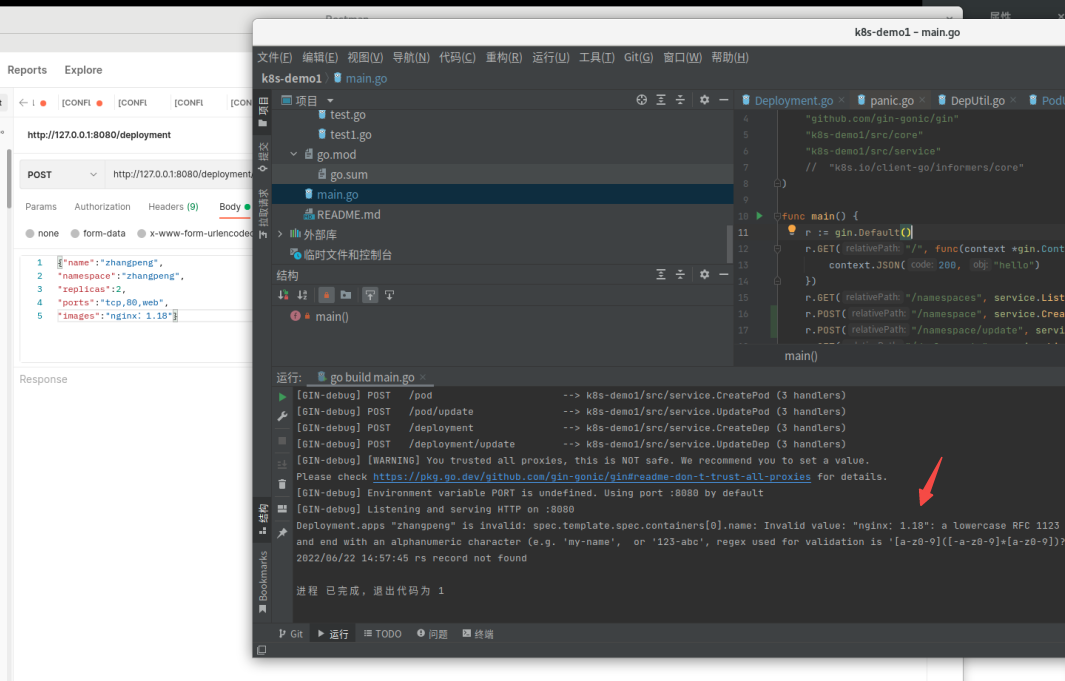

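My first idea was to change nginx to nginx:1.18, but that failed.

I suspect a problem with the format of the images value; I'll set it aside for now. As a fallback, I switched the image to Apache instead (note that the image is called httpd).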

POST http://127.0.0.1:8080/deployment/update

```json
{"name":"zhangpeng", "namespace":"zhangpeng", "replicas":2, "ports":"tcp,80,web", "images":"httpd"}
```

Log in to the server to verify:

```shell
[zhangpeng@zhangpeng k8s]$ kubectl get pods -n zhangpeng
NAME                         READY   STATUS              RESTARTS   AGE
zhangpeng-5546976d9-mkslb    1/1     Running             0          73s
zhangpeng-5546976d9-tcsb5    0/1     ContainerCreating   0          14s
zhangpeng-5dffd5664f-nvfsm   1/1     Running             0          18m
[zhangpeng@zhangpeng k8s]$ kubectl get pods -n zhangpeng
NAME                        READY   STATUS    RESTARTS   AGE
zhangpeng-5546976d9-mkslb   1/1     Running   0          100s
zhangpeng-5546976d9-tcsb5   1/1     Running   0          41s
[zhangpeng@zhangpeng k8s]$ kubectl get deployment -n zhangpeng -o yaml |grep image
      - image: httpd
        imagePullPolicy: Always
```

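Summary:

1. The pod lifecycle: a pod's spec is mostly immutable, so changes normally go through a controller such as a Deployment, which replaces the pods.

2. Compared with create, update mainly swaps the Create call for Update with metav1.UpdateOptions{}. Keep in mind that Update sends the whole object, so fields left out of the request are removed or reset to defaults.

3. I'll look into the image tag problem later.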


Original article: https://blog.csdn.net/saynaihe/article/details/125410165