
1. Deploying a K8s Cluster with Kubeadm

1 Network Planning

- Host node network: 192.168.31.0/24
- Service network: 10.96.0.0/16
- Pod network: 10.244.0.0/16

These three ranges must not overlap.
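The no-overlap rule can be checked mechanically before anything is installed. Below is a minimal bash sketch that assumes IPv4 CIDRs. The helper names `ip_to_int`, `cidr_overlap` and `check_plan` are illustrative and not part of any tool. It relies on a property of CIDR blocks: two blocks are either nested or disjoint, so they overlap exactly when their network addresses agree on the shorter prefix.

```shell
#!/usr/bin/env bash
# Sketch: detect overlapping IPv4 CIDR ranges (all helper names are hypothetical).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Exit 0 if the two CIDRs share at least one address.
cidr_overlap() {
  local ip1=${1%/*} len1=${1#*/} ip2=${2%/*} len2=${2#*/}
  # Compare both network addresses under the shorter of the two prefixes.
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip1") & mask) == ($(ip_to_int "$ip2") & mask) ))
}

# Check every pair of the given CIDRs; print each overlapping pair and fail if any.
check_plan() {
  local nets=("$@") i j rc=0
  for ((i = 0; i < ${#nets[@]}; i++)); do
    for ((j = i + 1; j < ${#nets[@]}; j++)); do
      if cidr_overlap "${nets[i]}" "${nets[j]}"; then
        echo "overlap: ${nets[i]} ${nets[j]}"
        rc=1
      fi
    done
  done
  return $rc
}
# Usage: check_plan 192.168.31.0/24 10.96.0.0/16 10.244.0.0/16 && echo "no overlap"
```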

2 Cluster Resources

- Master nodes: 4C8G (4 CPU cores, 8 GB RAM) x 3
- Worker (Node) nodes: 4C8G x 3

3 System Settings (all nodes)

Disable SELinux

    # Disable temporarily
    setenforce 0
    # Disable permanently
    sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
    sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

Disable the firewall

    iptables -F
    systemctl disable --now firewalld

Configure /etc/hosts (all nodes)

Add the following entries to /etc/hosts:

    192.168.31.101 k8s-master-01
    192.168.31.102 k8s-master-02
    192.168.31.103 k8s-master-03
    192.168.31.104 k8s-node-01
    192.168.31.105 k8s-node-02
    192.168.31.106 k8s-node-03
    192.168.31.100 k8s-master-lb # For a non-HA cluster, use Master01's IP here
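Appending these entries by hand (or with `cat >>`) adds duplicates whenever the step is repeated. Below is a minimal idempotent sketch. `add_host_entry` is a hypothetical helper, and the target file is a parameter so you can try it on a scratch copy before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# Sketch: append "IP hostname" to a hosts-format file unless that exact hostname is already listed.
# add_host_entry is a hypothetical helper, not part of any package.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 if a non-comment line already maps exactly $name (inline comments are ignored).
  if [ -f "$file" ] && awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) { if ($i ~ /^#/) break; if ($i == n) found = 1 } }
        END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
# Usage:
#   while read -r ip name; do add_host_entry /etc/hosts "$ip" "$name"; done <<'EOF'
#   192.168.31.101 k8s-master-01
#   192.168.31.100 k8s-master-lb
#   EOF
```

The helper compares whole hostname fields rather than using a substring grep. That way `k8s-master-01` is never mistaken for an existing `k8s-master-01-lb` entry.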

Configure the YUM repositories

    # Aliyun yum mirror
    curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
    # Tools required by Docker
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Add the Docker yum repository
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Add the Kubernetes yum repository
    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    # Delete the Aliyun intranet mirror hostnames from the CentOS repo file
    sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo
    # Install required tools
    yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

Disable swap

    swapoff -a && sysctl -w vm.swappiness=0
    sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
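The sed expression above adds a `#` in front of every line that mentions swap and is not already commented out. Below is a minimal sketch that wraps the same expression in a function so you can try it on a copy of /etc/fstab first. `comment_swap` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: the same sed as above, parameterised by file so it can be tested on a copy.
comment_swap() {
  # /^[^#]*swap/ : the line contains "swap" with no '#' before it (not already commented)
  # s@^@#@       : insert '#' at the start of that line
  sed -ri '/^[^#]*swap/s@^@#@' "$1"
}
# Usage: cp /etc/fstab /tmp/fstab.test && comment_swap /tmp/fstab.test && diff /etc/fstab /tmp/fstab.test
```

An already-commented line starts with `#`, so `^[^#]*swap` cannot match it. Running the command twice therefore does not add a second `#`.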

Configure time synchronization

    rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm
    yum install ntpdate -y
    # Add this entry to crontab (crontab -e):
    */5 * * * * /usr/sbin/ntpdate time2.aliyun.com

Configure resource limits

    ulimit -SHn 65535
    cat >> /etc/security/limits.conf << EOF
    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 65535
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited
    EOF

Passwordless SSH from Master01 to the other nodes

    ssh-keygen -t rsa
    # Host names must match the /etc/hosts entries above
    for i in k8s-master-01 k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02 k8s-node-03; do ssh-copy-id -i ~/.ssh/id_rsa.pub $i; done

Download all the configuration files used later

    # Download the source files used for the installation:
    mkdir -p /data/src
    cd /data/src; git clone https://github.com/dotbalo/k8s-ha-install.git
    # If GitHub cannot be reached, clone https://gitee.com/dukuan/k8s-ha-install.git instead
    cd k8s-ha-install
    [root@temp k8s-ha-install]# git branch -a
    * master
      remotes/origin/HEAD -> origin/master
      remotes/origin/manual-installation
      remotes/origin/manual-installation-v1.16.x
      remotes/origin/manual-installation-v1.17.x
      remotes/origin/manual-installation-v1.18.x
      remotes/origin/manual-installation-v1.19.x
      remotes/origin/manual-installation-v1.20.x
      remotes/origin/manual-installation-v1.20.x-csi-hostpath
      remotes/origin/manual-installation-v1.21.x
      remotes/origin/manual-installation-v1.22.x
      remotes/origin/manual-installation-v1.23.x
      remotes/origin/manual-installation-v1.24.x
      remotes/origin/master
    [root@temp k8s-ha-install]# git checkout manual-installation-v1.23.x

Update the system (excluding the kernel)

    yum update -y --exclude='kernel*'

Upgrade the kernel to 4.19

    # Create /data/src on every node first
    mkdir -p /data/src
    cd /data/src
    wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm
    wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm
    # Copy the packages from master01 to the other nodes:
    for i in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02 k8s-node-03; do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/data/src/; done
    # Install the kernel on all nodes
    cd /data/src/ && yum localinstall -y kernel-ml*
    # Boot the new kernel by default
    grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg
    grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"
    # Check that the default kernel is 4.19
    grubby --default-kernel
    # Reboot
    reboot
    # After the reboot, check that the running kernel is 4.19
    uname -a
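Instead of reading the `uname` output by eye, you can script the "is the kernel at least 4.19" check. Below is a sketch that uses GNU coreutils `sort -V` (version sort). `kernel_at_least` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: succeed if kernel release $1 is >= minimum version $2 (uses GNU `sort -V`).
kernel_at_least() {
  local current=${1%%-*} minimum=$2   # strip suffixes such as "-1.el7.elrepo.x86_64"
  # If the minimum sorts first (or equal), the current version is new enough.
  [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n1)" = "$minimum" ]
}
# On a node after the reboot:
#   kernel_at_least "$(uname -r)" 4.19 && echo "kernel OK" || echo "still on the old kernel"
```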

Configure IPVS

    # Install ipvsadm and related tools
    yum install ipvsadm ipset sysstat conntrack libseccomp -y
    # Load the IPVS modules now
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack
    # Modules to load at boot (">" overwrites, so re-running does not duplicate entries)
    cat > /etc/modules-load.d/ipvs.conf << EOF
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    nf_conntrack
    ip_tables
    ip_set
    xt_set
    ipt_set
    ipt_rpfilter
    ipt_REJECT
    ipip
    EOF
    # Load the modules at boot
    systemctl enable --now systemd-modules-load.service
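After the reboot, you can confirm that every module listed in ipvs.conf was actually loaded. Below is a minimal sketch that compares the list against `/proc/modules` (the file `lsmod` reads). `missing_modules` is a hypothetical helper. Modules compiled into the kernel never appear in /proc/modules, so a name it reports is something to investigate rather than proof of failure.

```shell
#!/usr/bin/env bash
# Sketch: print modules named in a modules-load.d file that are absent from a /proc/modules-style file.
missing_modules() {
  local conf=$1 loaded=${2:-/proc/modules}
  local mod
  # Skip blank lines and comments, de-duplicate, then look each name up in column 1.
  grep -vE '^[[:space:]]*(#|$)' "$conf" | sort -u | while read -r mod; do
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$loaded" || echo "$mod"
  done
}
# Usage: missing_modules /etc/modules-load.d/ipvs.conf
```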

Configure kernel parameters

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    fs.may_detach_mounts = 1
    net.ipv4.conf.all.route_localnet = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.netfilter.nf_conntrack_max=2310720

    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.tcp_keepalive_intvl =15
    net.ipv4.tcp_max_tw_buckets = 36000
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_max_orphans = 327680
    net.ipv4.tcp_orphan_retries = 3
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 16384
    net.ipv4.ip_conntrack_max = 65536
    net.ipv4.tcp_timestamps = 0
    net.core.somaxconn = 16384
    EOF
    # The net.bridge.* keys only exist once the br_netfilter module is loaded (see section 4);
    # sysctl --system reports keys that do not exist on the running kernel and continues
    sysctl --system
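A key that appears twice in a sysctl.d file is harmless, because the later line wins. Such duplicates are easy to miss, though. Below is a small lint sketch. `sysctl_dup_keys` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: list keys that appear more than once in a sysctl.d-style file.
sysctl_dup_keys() {
  # Drop comments (# or ;) and blank lines, keep the text before '=', strip spaces, report repeats.
  grep -vE '^[[:space:]]*([#;]|$)' "$1" \
    | awk -F= '{ gsub(/[[:space:]]/, "", $1); print $1 }' \
    | sort | uniq -d
}
# Usage: sysctl_dup_keys /etc/sysctl.d/k8s.conf
```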

Reboot

After configuring the kernel, reboot the server and make sure the modules are still loaded afterwards:

    reboot
    lsmod | grep --color=auto -e ip_vs -e nf_conntrack

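4 Installing the K8s Components and the Runtime

Install Containerd as the runtime (all nodes)

    # Install docker-ce 20.10 on all nodes (containerd.io is pulled in as a dependency):
    yum install docker-ce-20.10.* docker-ce-cli-20.10.* -y
    # Docker itself does not need to be started; only Containerd has to be configured and started.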

Configure the kernel modules Containerd needs

    cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
    overlay
    br_netfilter
    EOF
    # Load the modules
    modprobe -- overlay
    modprobe -- br_netfilter

Configure kernel parameters

    cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    EOF
    sysctl --system

Edit the Containerd configuration file

    # Create the config directory
    mkdir -p /etc/containerd
    # Generate the default config file
    containerd config default | tee /etc/containerd/config.toml

- Change Containerd's cgroup driver to systemd

    vim /etc/containerd/config.toml

- Find the containerd.runtimes.runc.options section and set SystemdCgroup = true


- Point sandbox_image at a Pause image that matches your version

    # With Containerd this must be changed here, in config.toml:
    sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"

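Both config.toml edits can also be made without vim. Below is a sketch using sed. It assumes the file came from `containerd config default` and already contains a `SystemdCgroup = false` line and a `sandbox_image = "..."` line. Recent 1.6.x defaults do; check your version's output first. `patch_containerd_config` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: apply the two config.toml edits with sed instead of vim.
# Assumes the default config already has "SystemdCgroup = false" and a sandbox_image line.
patch_containerd_config() {
  local file=$1 pause_image=$2
  # 1) switch the runc cgroup driver to systemd
  sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$file"
  # 2) replace the sandbox_image value, keeping the original indentation
  sed -i "s#^\([[:space:]]*sandbox_image = \).*#\1\"${pause_image}\"#" "$file"
  # print the resulting lines so the change can be checked
  grep -E 'SystemdCgroup|sandbox_image' "$file"
}
# Usage:
#   patch_containerd_config /etc/containerd/config.toml \
#     registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
```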

Start Containerd and enable it at boot

    systemctl daemon-reload
    systemctl enable --now containerd

Configure the runtime endpoint for the crictl client (all nodes)

    cat > /etc/crictl.yaml <<EOF
    runtime-endpoint: unix:///run/containerd/containerd.sock
    image-endpoint: unix:///run/containerd/containerd.sock
    timeout: 10
    debug: false
    EOF

- When done, check that the ctr command works (for example, ctr version)

5 Installing the Kubernetes Components

- Check the latest available Kubernetes version

    yum list kubeadm.x86_64 --showduplicates | sort -r

- Install the latest 1.23 release of kubeadm, kubelet and kubectl on all nodes

    yum install kubeadm-1.23* kubelet-1.23* kubectl-1.23* -y

- If Containerd is the runtime, configure kubelet to use Containerd

    cat >/etc/sysconfig/kubelet<<EOF
    KUBELET_KUBEADM_ARGS="--container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock"
    EOF
    # If Containerd is not the runtime, skip the command above

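- Enable kubelet at boot on all nodes

    systemctl daemon-reload
    systemctl enable --now kubelet
    # kubelet cannot start yet because kubeadm init/join has not generated its configuration;
    # the errors in the log are expected and can be ignored
    systemctl status kubelet
    tail -f /var/log/messages | grep kubelet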

6 Installing the High-Availability Components

Install HAProxy and Keepalived

    # On all Master nodes
    yum install keepalived haproxy -y

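Edit the HAProxy configuration (identical on all Master nodes)

- HAProxy listens on port 16443 (reached through the VIP) and forwards to the apiserver port 6443 on each Master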

    cat > /etc/haproxy/haproxy.cfg << EOF
    global
      maxconn  2000
      ulimit-n  16384
      log  127.0.0.1 local0 err
      stats timeout 30s

    defaults
      log global
      mode  http
      option  httplog
      timeout connect 5000
      timeout client  50000
      timeout server  50000
      timeout http-request 15s
      timeout http-keep-alive 15s

    frontend monitor-in
      bind *:33305
      mode http
      option httplog
      monitor-uri /monitor

    frontend k8s-master
      bind 0.0.0.0:16443
      bind 127.0.0.1:16443
      mode tcp
      option tcplog
      tcp-request inspect-delay 5s
      default_backend k8s-master

    backend k8s-master
      mode tcp
      option tcplog
      option tcp-check
      balance roundrobin
      default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
      server k8s-master01 192.168.31.101:6443 check
      server k8s-master02 192.168.31.102:6443 check
      server k8s-master03 192.168.31.103:6443 check
    EOF

Configure Keepalived

Note: the IP address (mcast_src_ip) and network interface (interface) differ on each node and must be adjusted.

- Master-01

    cat > /etc/keepalived/keepalived.conf << EOF
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        mcast_src_ip 192.168.31.101
        virtual_router_id 51
        priority 101
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.31.100
        }
        track_script {
            chk_apiserver
        }
    }
    EOF

- Master-02

    cat > /etc/keepalived/keepalived.conf << EOF
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        mcast_src_ip 192.168.31.102
        virtual_router_id 51
        priority 101
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.31.100
        }
        track_script {
            chk_apiserver
        }
    }
    EOF

- Master-03

    cat > /etc/keepalived/keepalived.conf << EOF
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        mcast_src_ip 192.168.31.103
        virtual_router_id 51
        priority 101
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.31.100
        }
        track_script {
            chk_apiserver
        }
    }
    EOF
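The three files differ only in the source IP (and, on other hardware, the interface name), so one template can render them all. Below is a sketch under that assumption. `render_keepalived` is a hypothetical helper. It keeps every other value from the per-node configs, including `state MASTER` and `priority 101` on all three nodes.

```shell
#!/usr/bin/env bash
# Sketch: render the per-node keepalived.conf from a single template.
# Arguments: node IP, interface (default eth0), VIP (default 192.168.31.100).
render_keepalived() {
  local node_ip=$1 iface=${2:-eth0} vip=${3:-192.168.31.100}
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state MASTER
    interface ${iface}
    mcast_src_ip ${node_ip}
    virtual_router_id 51
    priority 101
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        chk_apiserver
    }
}
EOF
}
# Usage on Master-02, for example:
#   render_keepalived 192.168.31.102 eth0 > /etc/keepalived/keepalived.conf
```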

- Create the Keepalived health-check script on all master nodes

    # Quote 'EOF' so that $(...) and $err are written literally instead of being expanded
    # while the file is created; ">" overwrites, so re-running does not append a second copy
    cat > /etc/keepalived/check_apiserver.sh << 'EOF'
    #!/bin/bash

    err=0
    for k in $(seq 1 3)
    do
        check_code=$(pgrep haproxy)
        if [[ $check_code == "" ]]; then
            err=$(expr $err + 1)
            sleep 1
            continue
        else
            err=0
            break
        fi
    done

    if [[ $err != "0" ]]; then
        echo "systemctl stop keepalived"
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi
    EOF
    # Make it executable
    chmod +x /etc/keepalived/check_apiserver.sh

Enable HAProxy and Keepalived at boot

    systemctl daemon-reload
    systemctl enable --now haproxy keepalived

Check the HAProxy and Keepalived status

    ping 192.168.31.100
    telnet 192.168.31.100 16443
    # If ping fails or telnet does not print "Escape character is '^]'", the VIP is not usable;
    # troubleshoot Keepalived, e.g. the firewall, SELinux, and the HAProxy and Keepalived status:
    # - On all nodes the firewall must be disabled and inactive: systemctl status firewalld
    # - On all nodes SELinux must be Permissive or Disabled: getenforce
    # - On master nodes, check the listening ports: netstat -lntp
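If telnet is not installed, bash's `/dev/tcp` pseudo-device gives the same yes/no answer. Below is a sketch. `port_open` is a hypothetical helper; it needs bash (not sh) and the coreutils `timeout` command. The HAProxy config also serves `monitor-uri /monitor` on port 33305, so `curl http://192.168.31.100:33305/monitor` is another quick probe of HAProxy behind the VIP.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a TCP connection to host:port opens within N seconds (default 3).
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # A child bash does the /dev/tcp redirection so `timeout` can kill it if the connect hangs.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
# Usage:
#   port_open 192.168.31.100 16443 && echo "VIP:16443 reachable" || echo "check keepalived/haproxy"
```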

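7 Cluster Initialization

On Master01, create the kubeadm-config.yaml file as follows.

Note: for a non-HA cluster, change 192.168.31.100:16443 to Master01's address and change 16443 to the apiserver port (6443 by default). Also make sure kubernetesVersion matches your kubeadm version (check with kubeadm version).

Note: the host network, podSubnet and serviceSubnet must not overlap.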

    mkdir -p /data/kubeadm/yaml
    cd /data/kubeadm/yaml
    cat > kubeadm-config.yaml << EOF
    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: 7t2weq.bjbawausm0jaxury
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.31.101
      bindPort: 6443
    nodeRegistration:
      # criSocket: /var/run/dockershim.sock  # use this if Docker is the runtime
      criSocket: /run/containerd/containerd.sock  # use this if Containerd is the runtime
      name: k8s-master-01
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      certSANs:
      - 192.168.31.100
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: 192.168.31.100:16443
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.23.6  # set this to match the output of kubeadm version
    networking:
      dnsDomain: cluster.local
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/16
    scheduler: {}
    EOF

Migrate kubeadm-config.yaml to the current config API version

    kubeadm config migrate --old-config kubeadm-config.yaml --new-config new-kubeadm-config.yaml

Copy new-kubeadm-config.yaml to the other master nodes

    for i in k8s-master-02 k8s-master-03; do ssh $i "mkdir -p /data/kubeadm/yaml"; scp new-kubeadm-config.yaml $i:/data/kubeadm/yaml/; done

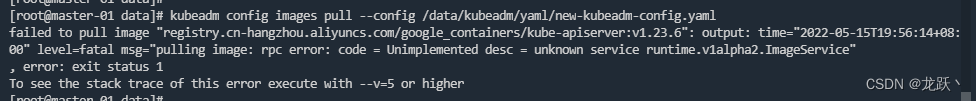

Pull the images on all Master nodes in advance to shorten initialization. The other nodes need no changes to the config file, not even the IP address.

    kubeadm config images pull --config /data/kubeadm/yaml/new-kubeadm-config.yaml

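The pull may fail here with the error shown below. This happens when the filesystem is XFS created with ftype=0, which overlayfs does not support. XFS must be formatted with ftype=1, or ext4 can be used instead.

Fix: add a new disk, format it with ftype=1, and mount it at /var/lib/containerd:

    mkfs.xfs -f -n ftype=1 /dev/sdb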

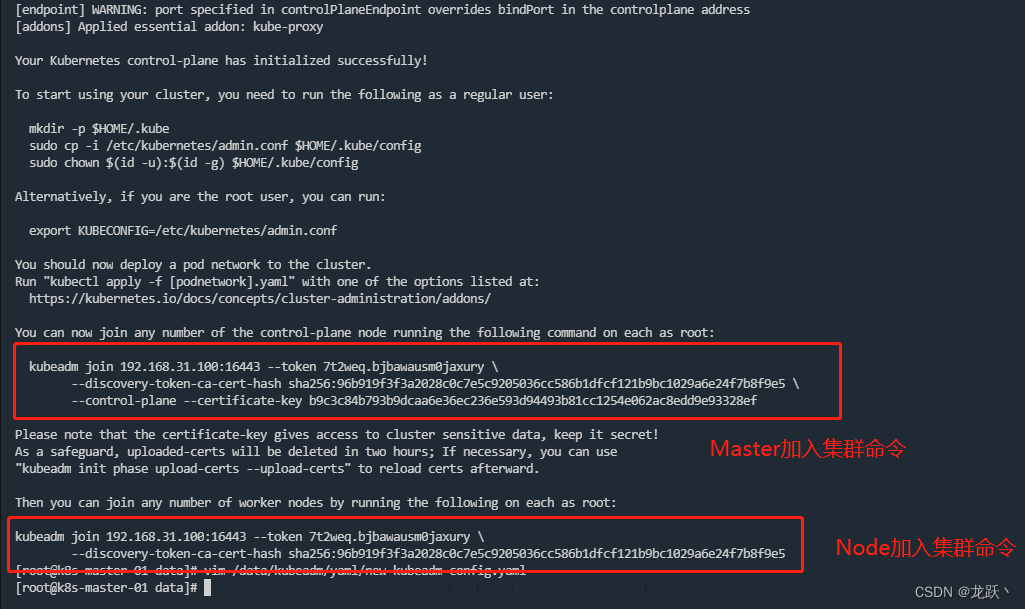
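Before pulling images, you can check whether the filesystem that holds /var/lib/containerd has ftype=1. Below is a sketch that parses `xfs_info` output. It assumes the output's `naming` line contains `ftype=N`, as on CentOS 7. `xfs_ftype_ok` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the given xfs_info output text reports ftype=1.
xfs_ftype_ok() {
  local ftype
  # Pull the first "ftype=N" token out of the text and keep the digit.
  ftype=$(printf '%s\n' "$1" | grep -o 'ftype=[0-9]' | head -n1 | cut -d= -f2)
  [ "$ftype" = 1 ]
}
# Usage (pass the mount point that contains /var/lib/containerd, e.g. /):
#   xfs_ftype_ok "$(xfs_info /)" || echo "ftype=0: reformat with mkfs.xfs -n ftype=1"
```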

Initialize Master01

Initialization generates the certificates and configuration files under /etc/kubernetes. After that, the other Master nodes only need to join Master01.

    kubeadm init --config /data/kubeadm/yaml/new-kubeadm-config.yaml --upload-certs

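If initialization fails, reset and initialize again with the following command (do not run it if initialization succeeded):

    # Not needed if the previous step succeeded
    # kubeadm reset -f ; ipvsadm --clear ; rm -rf ~/.kube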

A successful initialization prints a token that the other nodes use to join. Record the join commands printed at the end of the output:

    # Master
    kubeadm join 192.168.31.100:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:96b919f3f3a2028c0c7e5c9205036cc586b1dfcf121b9bc1029a6e24f7b8f9e5 \
        --control-plane --certificate-key b9c3c84b793b9dcaa6e36ec236e593d94493b81cc1254e062ac8edd9e93328ef
    # Node
    kubeadm join 192.168.31.100:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:96b919f3f3a2028c0c7e5c9205036cc586b1dfcf121b9bc1029a6e24f7b8f9e5

On Master01, set the environment variable used to access the Kubernetes cluster

    cat <<EOF >> /root/.bashrc
    export KUBECONFIG=/etc/kubernetes/admin.conf
    EOF
    source /root/.bashrc
    # Check the node status
    kubectl get nodes
    # In a kubeadm installation, all system components run as containers in the kube-system namespace.
    # Check their Pod status (this output was captured after the other nodes had joined; CoreDNS stays
    # Pending until a network plugin is installed):
    [root@k8s-master-01 ~]# kubectl get po -n kube-system
    NAME                                    READY   STATUS    RESTARTS       AGE
    coredns-65c54cc984-22mg4                0/1     Pending   0              3m59s
    coredns-65c54cc984-2975n                0/1     Pending   0              3m59s
    etcd-k8s-master-01                      1/1     Running   2              3m53s
    etcd-k8s-master-02                      1/1     Running   1              2m14s
    etcd-k8s-master-03                      1/1     Running   1              117s
    kube-apiserver-k8s-master-01            1/1     Running   4              3m53s
    kube-apiserver-k8s-master-02            1/1     Running   1              2m13s
    kube-apiserver-k8s-master-03            1/1     Running   1              107s
    kube-controller-manager-k8s-master-01   1/1     Running   6 (2m4s ago)   3m54s
    kube-controller-manager-k8s-master-02   1/1     Running   1              2m13s
    kube-controller-manager-k8s-master-03   1/1     Running   1              118s
    kube-proxy-4v6g6                        1/1     Running   0              96s
    kube-proxy-7ldbw                        1/1     Running   0              3m58s
    kube-proxy-dtdds                        1/1     Running   0              2m15s
    kube-proxy-hfnsl                        1/1     Running   0              51s
    kube-proxy-ptvsw                        1/1     Running   0              50s
    kube-proxy-zzg7p                        1/1     Running   0              54s
    kube-scheduler-k8s-master-01            1/1     Running   7 (2m3s ago)   3m54s
    kube-scheduler-k8s-master-02            1/1     Running   1              2m14s
    kube-scheduler-k8s-master-03            1/1     Running   1              119s

8 Joining Master and Worker Nodes

While the initial token is still valid

As long as the token from initialization has not expired, simply run the join commands printed by kubeadm init:

    # Master
    kubeadm join 192.168.31.100:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:96b919f3f3a2028c0c7e5c9205036cc586b1dfcf121b9bc1029a6e24f7b8f9e5 \
        --control-plane --certificate-key b9c3c84b793b9dcaa6e36ec236e593d94493b81cc1254e062ac8edd9e93328ef
    # Node
    kubeadm join 192.168.31.100:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:96b919f3f3a2028c0c7e5c9205036cc586b1dfcf121b9bc1029a6e24f7b8f9e5

- Check when the token expires

    # Find the token ID (the part before the dot) in the config file used for initialization
    [root@k8s-master-01 ~]# grep token /data/kubeadm/yaml/new-kubeadm-config.yaml
      - system:bootstrappers:kubeadm:default-node-token
      token: 7t2weq.bjbawausm0jaxury
    # Find the Secret for token ID 7t2weq
    [root@k8s-master-01 ~]# kubectl get secret -n kube-system | grep 7t2weq
    bootstrap-token-7t2weq          bootstrap.kubernetes.io/token         6      26m
    # Show the Secret's expiration time (base64-encoded, not encrypted)
    [root@k8s-master-01 ~]# kubectl get secret -n kube-system bootstrap-token-7t2weq -oyaml | grep expiration
      expiration: MjAyMi0wNS0xN1QxMzo1MzoyN1o=
    # Decode the expiration time
    [root@k8s-master-01 ~]# echo 'MjAyMi0wNS0xN1QxMzo1MzoyN1o=' | base64 -d
    2022-05-17T13:53:27Z
    # The timestamp is in UTC; add 8 hours for China Standard Time: 2022-05-17 21:53
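GNU `date` can do the decode-and-add-eight-hours step in one go. Below is a sketch. `token_expiry_local` is a hypothetical helper. It uses the POSIX TZ string `CST-8` (UTC+8) so that no timezone database is needed. `kubeadm token list` also prints each token's TTL and expiry time directly.

```shell
#!/usr/bin/env bash
# Sketch: decode a bootstrap token's base64 "expiration" field and print it in local time.
# Requires GNU date (-d accepting an ISO 8601 timestamp) and coreutils base64.
token_expiry_local() {
  local encoded=$1 tz=${2:-CST-8}   # POSIX TZ "CST-8" means UTC+8 (China Standard Time)
  local utc
  utc=$(printf '%s' "$encoded" | base64 -d) || return 1
  TZ=$tz date -d "$utc" '+%Y-%m-%d %H:%M'
}
# Usage, reading the field straight from the Secret:
#   token_expiry_local "$(kubectl -n kube-system get secret bootstrap-token-7t2weq \
#       -o jsonpath='{.data.expiration}')"
```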

After the initial token has expired

- Join a new worker node

Worker nodes mainly run the company's business applications. In production, Master nodes should not run Pods other than the system components; in test environments Pods may be allowed on Master nodes to save resources.

    # Generate a new token once the old one has expired:
    [root@k8s-master-01 ~]# kubeadm token create --print-join-command
    kubeadm join 192.168.31.100:16443 --token t2zu3r.asulfntf83p3mz6x --discovery-token-ca-cert-hash sha256:b1b2b9beb5064d397e47d02b79b71f05132f70fcef6cf8ce37d92228793bcf36
    # Then run the printed command on the new node to join the cluster
    kubeadm join 192.168.31.100:16443 --token t2zu3r.asulfntf83p3mz6x --discovery-token-ca-cert-hash sha256:b1b2b9beb5064d397e47d02b79b71f05132f70fcef6cf8ce37d92228793bcf36

- Join a new Master node

    # Generate a new token; it can be shared with the worker join command
    [root@k8s-master-01 ~]# kubeadm token create --print-join-command
    kubeadm join 192.168.31.100:16443 --token t2zu3r.asulfntf83p3mz6x --discovery-token-ca-cert-hash sha256:b1b2b9beb5064d397e47d02b79b71f05132f70fcef6cf8ce37d92228793bcf36
    # A Master also needs a --certificate-key
    [root@k8s-master-01 ~]# kubeadm init phase upload-certs --upload-certs
    I0516 22:00:47.664585    5922 version.go:255] remote version is much newer: v1.24.0; falling back to: stable-1.23
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key:
    ddcf2802d9667d16a3d0b0915c31098dd303a4cdb98222636a02b03ba59729bd
    # Then run the following on the new Master node to join the cluster
    kubeadm join 192.168.31.100:16443 --token t2zu3r.asulfntf83p3mz6x \
        --discovery-token-ca-cert-hash sha256:b1b2b9beb5064d397e47d02b79b71f05132f70fcef6cf8ce37d92228793bcf36 \
        --control-plane --certificate-key ddcf2802d9667d16a3d0b0915c31098dd303a4cdb98222636a02b03ba59729bd
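A short script can combine the token and the certificate key into the control-plane join command. Below is a sketch. It picks the 64-character hex certificate key out of the `kubeadm init phase upload-certs --upload-certs` output. `extract_cert_key` and `print_master_join` are hypothetical helpers. kubeadm deletes the uploaded certificates after two hours, so generate the key shortly before joining.

```shell
#!/usr/bin/env bash
# Sketch: pull the certificate key (64 hex characters) out of upload-certs output on stdin.
extract_cert_key() {
  grep -oE '[0-9a-f]{64}' | tail -n1
}

# Build the full control-plane join command on master01 (needs a working kubeadm and cluster).
print_master_join() {
  local join key
  join=$(kubeadm token create --print-join-command) || return 1
  # The version-lookup log line goes to stderr and is discarded here.
  key=$(kubeadm init phase upload-certs --upload-certs 2>/dev/null | extract_cert_key)
  [ -n "$key" ] || return 1
  echo "$join --control-plane --certificate-key $key"
}
```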

- Check the cluster status

    # NotReady is expected at this point: no network (CNI) plugin has been installed yet
    [root@k8s-master-01 ~]# kubectl get nodes
    NAME            STATUS     ROLES                  AGE     VERSION
    k8s-master-01   NotReady   control-plane,master   12m     v1.23.6
    k8s-master-02   NotReady   control-plane,master   10m     v1.23.6
    k8s-master-03   NotReady   control-plane,master   10m     v1.23.6
    k8s-node-01     NotReady   <none>                 9m28s   v1.23.6
    k8s-node-02     NotReady   <none>                 9m25s   v1.23.6
    k8s-node-03     NotReady   <none>                 9m24s   v1.23.6

9 Installing Calico

Perform the following steps on k8s-master-01 only.

- Deployment steps

    # 1. Enter the directory with the config files downloaded earlier; check out the branch matching your version
    cd /data/src/k8s-ha-install && git checkout manual-installation-v1.23.x && cd calico/
    # 2. Get the Pod subnet
    POD_SUBNET=$(grep cluster-cidr= /etc/kubernetes/manifests/kube-controller-manager.yaml | awk -F= '{print $NF}')
    # 3. Substitute the subnet into the manifest
    sed -i "s#POD_CIDR#${POD_SUBNET}#g" calico.yaml
    # 4. Deploy Calico
    kubectl apply -f calico.yaml
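Step 2 reads the `--cluster-cidr=` flag, which kubeadm writes from `podSubnet` into the controller-manager static Pod manifest. Below is a sketch of the same extraction as a function. It adds a guard so that an empty result cannot silently blank out POD_CIDR in calico.yaml. `pod_subnet_from_manifest` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read the Pod CIDR from a kube-controller-manager manifest (same logic as step 2).
pod_subnet_from_manifest() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-controller-manager.yaml}
  local cidr
  cidr=$(grep -- '--cluster-cidr=' "$manifest" | awk -F= '{print $NF}' | head -n1)
  # Stop on an empty value; sed would otherwise just delete the POD_CIDR placeholder.
  [ -n "$cidr" ] || { echo "cluster-cidr not found in $manifest" >&2; return 1; }
  echo "$cidr"
}
# Usage:
#   POD_SUBNET=$(pod_subnet_from_manifest) && sed -i "s#POD_CIDR#${POD_SUBNET}#g" calico.yaml
```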

- Check the cluster status

    # The Calico and CoreDNS Pods are now Running
    [root@k8s-master-01 calico]# kubectl get po -n kube-system
    NAME                                       READY   STATUS    RESTARTS      AGE
    calico-kube-controllers-6f6595874c-tkzf8   1/1     Running   0             97s
    calico-node-5fk4v                          1/1     Running   0             97s
    calico-node-5ld7p                          1/1     Running   0             97s
    calico-node-6jv8w                          1/1     Running   0             97s
    calico-node-cssdh                          1/1     Running   0             97s
    calico-node-pwj66                          1/1     Running   0             97s
    calico-node-wv7xn                          1/1     Running   0             97s
    calico-typha-6b6cf8cbdf-ncbq6              1/1     Running   0             97s
    coredns-65c54cc984-22mg4                   1/1     Running   0             45m
    coredns-65c54cc984-2975n                   1/1     Running   0             45m
    etcd-k8s-master-01                         1/1     Running   2             45m
    etcd-k8s-master-02                         1/1     Running   1             43m
    etcd-k8s-master-03                         1/1     Running   0             32m
    kube-apiserver-k8s-master-01               1/1     Running   4             45m
    kube-apiserver-k8s-master-02               1/1     Running   1             43m
    kube-apiserver-k8s-master-03               1/1     Running   2             43m
    kube-controller-manager-k8s-master-01      1/1     Running   6 (43m ago)   45m
    kube-controller-manager-k8s-master-02      1/1     Running   1             43m
    kube-controller-manager-k8s-master-03      1/1     Running   2             43m
    kube-proxy-4v6g6                           1/1     Running   1             43m
    kube-proxy-7ldbw                           1/1     Running   0             45m
    kube-proxy-dtdds                           1/1     Running   0             44m
    kube-proxy-hfnsl                           1/1     Running   0             42m
    kube-proxy-ptvsw                           1/1     Running   0             42m
    kube-proxy-zzg7p                           1/1     Running   0             42m
    kube-scheduler-k8s-master-01               1/1     Running   7 (43m ago)   45m
    kube-scheduler-k8s-master-02               1/1     Running   1             43m
    kube-scheduler-k8s-master-03               1/1     Running   2             43m
    # All nodes are now Ready as well
    [root@k8s-master-01 calico]# kubectl get no
    NAME            STATUS   ROLES                  AGE   VERSION
    k8s-master-01   Ready    control-plane,master   46m   v1.23.6
    k8s-master-02   Ready    control-plane,master   45m   v1.23.6
    k8s-master-03   Ready    control-plane,master   45m   v1.23.6
    k8s-node-01     Ready    <none>                 43m   v1.23.6
    k8s-node-02     Ready    <none>                 43m   v1.23.6
    k8s-node-03     Ready    <none>                 43m   v1.23.6

10 Deploying Metrics Server

In recent Kubernetes versions, resource metrics are collected by metrics-server. It reports the CPU and memory usage of nodes and Pods (used, for example, by kubectl top).

1. Copy front-proxy-ca.crt from Master01 to all worker nodes

    scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node-01:/etc/kubernetes/pki/front-proxy-ca.crt
    scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node-02:/etc/kubernetes/pki/front-proxy-ca.crt
    scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node-03:/etc/kubernetes/pki/front-proxy-ca.crt

2. Install metrics-server

Run this on k8s-master-01 only.

    # Enter the config directory
    [root@k8s-master-01 calico]# cd /data/src/k8s-ha-install/kubeadm-metrics-server
    # Deploy
    [root@k8s-master-01 kubeadm-metrics-server]# kubectl create -f comp.yaml
    serviceaccount/metrics-server created
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
    clusterrole.rbac.authorization.k8s.io/system:metrics-server created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
    service/metrics-server created
    deployment.apps/metrics-server created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
    # Check that the Pod is Running
    [root@k8s-master-01 kubeadm-metrics-server]# kubectl get pods -n kube-system | grep metrics-server
    metrics-server-5cf8885b66-rh9bg   1/1     Running   0          2m23s
    # View node and Pod resource usage
    kubectl top nodes
    kubectl top po -A

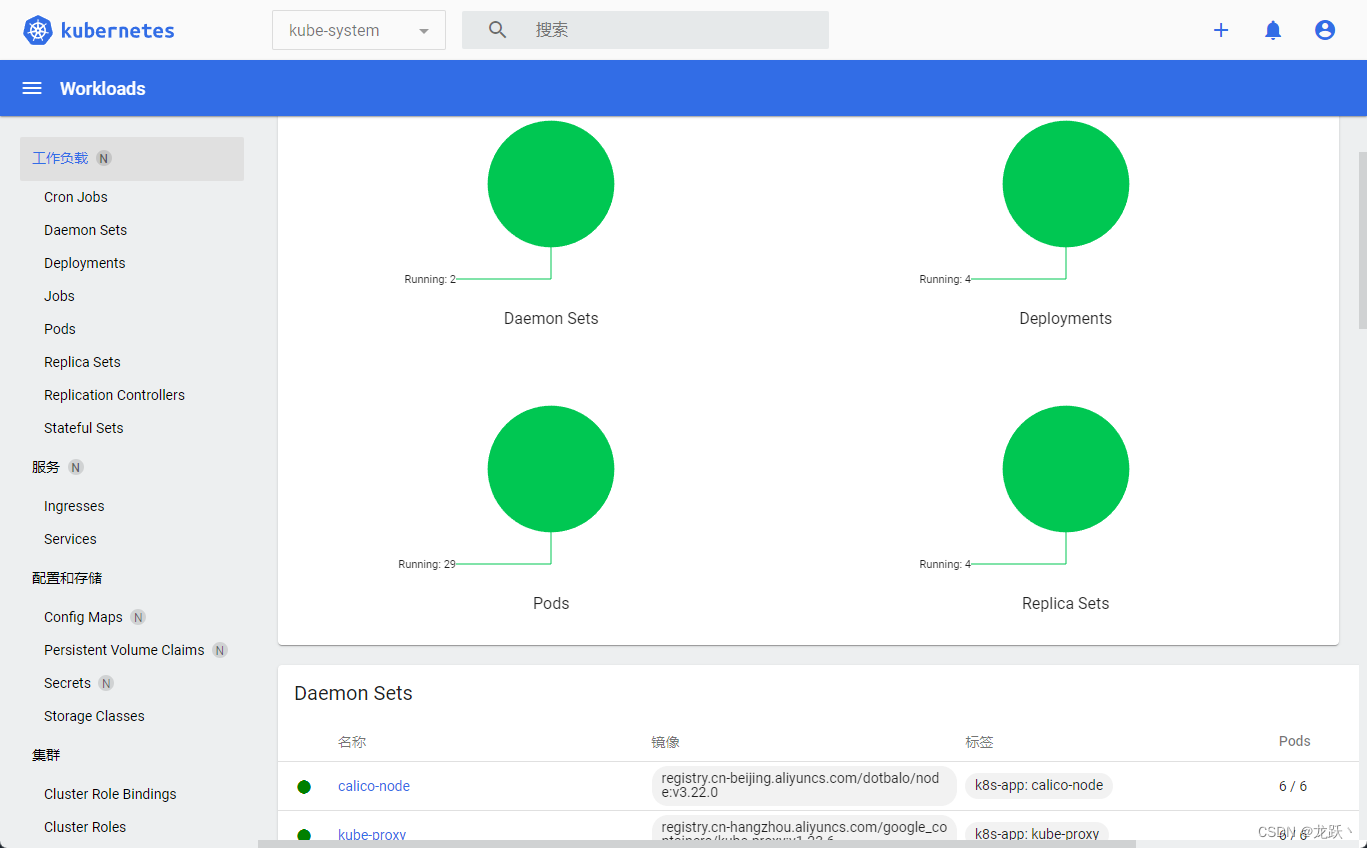

11 Deploying the Dashboard

Deploy a specific Dashboard version from YAML

    [root@k8s-master-01 kubeadm-metrics-server]# cd /data/src/k8s-ha-install/dashboard/
    [root@k8s-master-01 dashboard]# kubectl create -f .
    serviceaccount/admin-user created
    clusterrolebinding.rbac.authorization.k8s.io/admin-user created
    namespace/kubernetes-dashboard created
    serviceaccount/kubernetes-dashboard created
    service/kubernetes-dashboard created
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-csrf created
    secret/kubernetes-dashboard-key-holder created
    configmap/kubernetes-dashboard-settings created
    role.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    service/dashboard-metrics-scraper created
    deployment.apps/dashboard-metrics-scraper created

Deploy the latest version

Official GitHub repository: https://github.com/kubernetes/dashboard

The latest Dashboard release is listed there.

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml
    # Replace v2.0.3 with the specific release you want to deploy

Create a Dashboard user

    cat > admin.yaml << EOF
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kube-system
    EOF
    kubectl apply -f admin.yaml -n kube-system

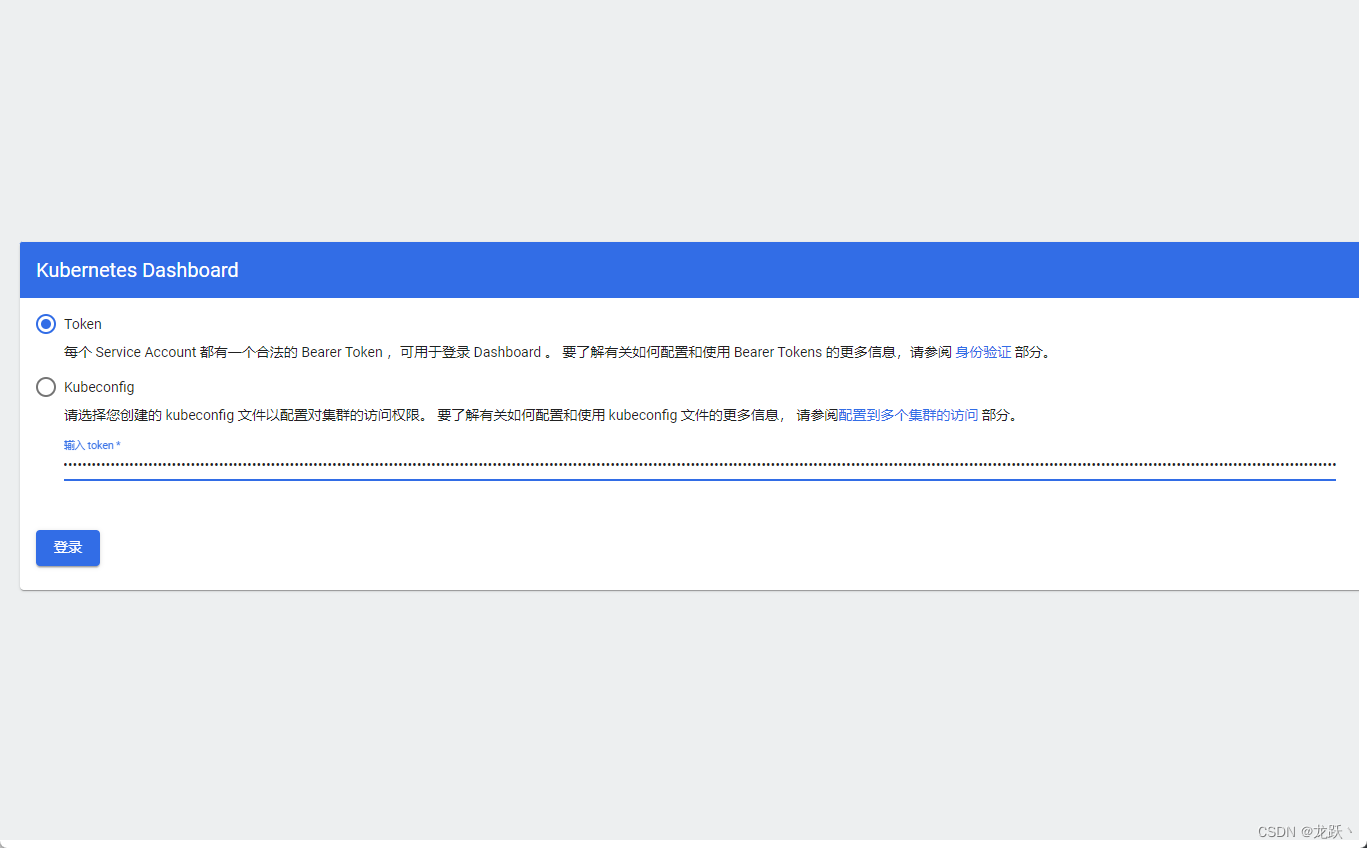

Log in to the Dashboard

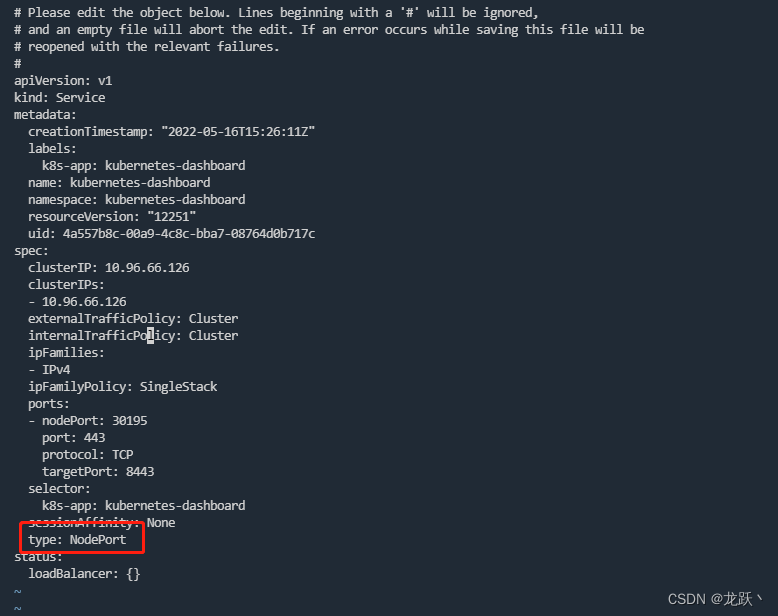

- Change the Dashboard Service type to NodePort (skip this step if it is already NodePort)

    # In the editor, change type: ClusterIP to type: NodePort
    kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard


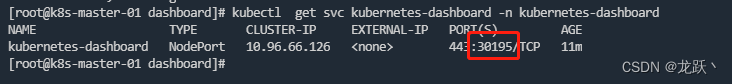

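- Check the Dashboard port number

    kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

Then open https://<any-node-IP>:30195/ in a browser (30195 is the NodePort assigned in this example; use the one shown for your Service).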

Get the token

    [root@k8s-master-01 dashboard]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
    Name:         admin-user-token-x2w7h
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: admin-user
                  kubernetes.io/service-account.uid: 64335ade-397d-4b22-b621-b2b2b7d4bd92

    Type:  kubernetes.io/service-account-token

    Data
    ====
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IndTTDRWdVVSVEU3Qng0Y2lnTkFncUptOThxbXJYR1VYNW5SVUxYY2d3dUUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXgydzdoIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2NDMzNWFkZS0zOTdkLTRiMjItYjYyMS1iMmIyYjdkNGJkOTIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.uCXaSgetfE9LTW_ZuyqoIXmUpmJUORaByj1gehfLRV7kQ4nKNe8ycKfE04pgfhXdbrS5s59HPcMGyoRZEG-JDBRP8V-4SNWypf49LoZLng2iqne2J3T5bVzQjLwJvnRqnr7JEUkem91F0dMb7Ussnkvp5z0DVEYrPzPvEbTqmIfjIy0i7qw-_3T2LxqV5Vw4jkHYHD4tU4PMlliCvS1haSxX9LsJR15Loc1ePkGXLVwbwH8RSH3M0ojG7hlX97drENBAoG-06bX1ZEH_Jrlpf5vAhuLc-Sqwtyz1PWFltKnCkvdv7OohdWZgagAJEWIkk0BfC_RUij3_SsM8wy1rsQ
    ca.crt:     1099 bytes
    namespace:  11 bytes

- Enter the token to log in

    eyJhbGciOiJSUzI1NiIsImtpZCI6IndTTDRWdVVSVEU3Qng0Y2lnTkFncUptOThxbXJYR1VYNW5SVUxYY2d3dUUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXgydzdoIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2NDMzNWFkZS0zOTdkLTRiMjItYjYyMS1iMmIyYjdkNGJkOTIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.uCXaSgetfE9LTW_ZuyqoIXmUpmJUORaByj1gehfLRV7kQ4nKNe8ycKfE04pgfhXdbrS5s59HPcMGyoRZEG-JDBRP8V-4SNWypf49LoZLng2iqne2J3T5bVzQjLwJvnRqnr7JEUkem91F0dMb7Ussnkvp5z0DVEYrPzPvEbTqmIfjIy0i7qw-_3T2LxqV5Vw4jkHYHD4tU4PMlliCvS1haSxX9LsJR15Loc1ePkGXLVwbwH8RSH3M0ojG7hlX97drENBAoG-06bX1ZEH_Jrlpf5vAhuLc-Sqwtyz1PWFltKnCkvdv7OohdWZgagAJEWIkk0BfC_RUij3_SsM8wy1rsQ

12 Required Configuration Changes

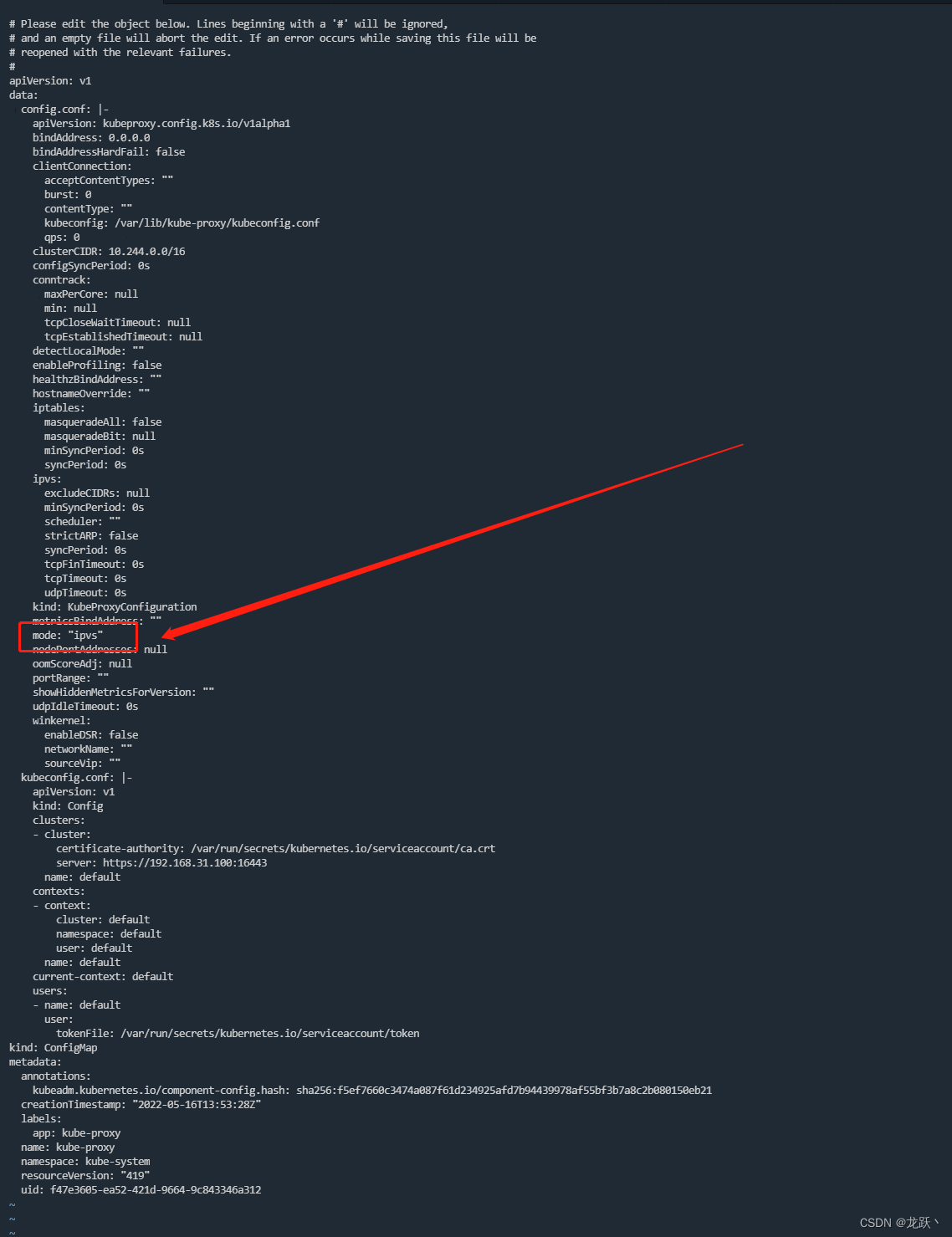

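Switch kube-proxy to IPVS mode

The cluster initialization config left out the IPVS setting, so kube-proxy has to be changed manually. In the config.conf data of the kube-proxy ConfigMap, set mode to "ipvs". The kubeadm default is an empty string, which means iptables mode.

    # Run on master01
    kubectl edit cm kube-proxy -n kube-system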
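The same change can be made without an interactive editor. Below is a sketch. It assumes the ConfigMap still contains the kubeadm default line `mode: ""` inside its `config.conf` data. `set_ipvs_mode` is a hypothetical helper that rewrites YAML read from stdin.

```shell
#!/usr/bin/env bash
# Sketch: rewrite `mode: ""` to `mode: "ipvs"` in kube-proxy ConfigMap YAML read from stdin.
set_ipvs_mode() {
  # Only a line that is exactly (indent)mode: "" is changed; keys such as detectLocalMode are untouched.
  sed 's/^\([[:space:]]*mode:\) ""$/\1 "ipvs"/'
}
# Usage on master01:
#   kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
```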

Roll out new kube-proxy Pods (changing an annotation in the Pod template makes the DaemonSet recreate its Pods)

    kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system

Verify the kube-proxy mode

    [root@k8s-master-01 dashboard]# curl 127.0.0.1:10249/proxyMode
    ipvs

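13 [Must Read] Important Notes

- Certificates in a kubeadm-installed cluster are valid for one year by default; check them with kubeadm certs check-expiration.

- On master nodes, kube-apiserver, kube-scheduler, kube-controller-manager and etcd all run as containers. You can see them with:

    kubectl get po -n kube-system

- Unlike a binary installation, kubelet's configuration lives in /etc/sysconfig/kubelet and /var/lib/kubelet/config.yaml; after changing either file, restarting the kubelet process is enough.

- The other components' configuration files are in /etc/kubernetes/manifests, e.g. kube-apiserver.yaml. When such a file changes, kubelet picks up the change automatically and recreates the Pod, so do not create these Pods again yourself (for example with kubectl create -f).

- kube-proxy's configuration is stored in a ConfigMap in the kube-system namespace; view and change it with:

    kubectl edit cm kube-proxy -n kube-system

  After editing, restart kube-proxy with a patch:

    kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system

- After a kubeadm installation, master nodes do not accept regular Pods by default. To allow them:

    # Show the taints:
    kubectl describe node -l node-role.kubernetes.io/master= | grep Taints
    # Remove the taint:
    kubectl taint node -l node-role.kubernetes.io/master node-role.kubernetes.io/master:NoSchedule-
    # Check again after removing it
    kubectl describe node -l node-role.kubernetes.io/master= | grep Taints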


- Original article: https://blog.csdn.net/u012766780/article/details/124962323