
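An intelligent ordering system with Phi-3 small models and Elastic

Author: Gustavo Llermaly, Elastic

This article shows you how to combine Microsoft's efficient Phi-3 models with Elastic's semantic search capabilities to build an intelligent, conversational ordering system. We'll cover deploying phi-3 on Azure AI Studio, setting up Elastic, and building an application for an Italian restaurant.

In April, Microsoft announced its most capable family of small models, phi-3. These are cost-efficient models optimized to run with low latency under resource-constrained conditions, which makes them a great fit for large-scale and real-time tasks.

The phi-3 small family includes: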

- Phi-3-small-8K-instruct

- Phi-3-small-128K-instruct

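The two models support maximum context lengths of 8K and 128K tokens respectively, and both have 7B parameters. Note that the instruct suffix means that, unlike chat models, these models are not trained for conversational role-play flows but to follow specific instructions. You don't chat with them; you give them tasks to complete.

Typical applications of phi-3 models include on-device use, offline environments, and narrowly scoped tasks. For example, helping people interact with sensor systems in natural language in places where internet access is not always available, such as mines or farms, or analyzing restaurant orders in real time where fast responses are required.

L'asticco Italian Restaurant

L'asticco Italian Restaurant is a popular place with a large, customizable menu. The chefs have been complaining about mistakes in the orders, so they are pushing to put tablets on the tables so customers can pick what they want to eat directly. The owner wants to keep the experience human and keep ordering a conversation between customers and waiters.

This is a good opportunity to take advantage of the phi-3 small models' characteristics: we can have the model listen to the customer and extract the dishes and customizations. The waiter can still make recommendations to the customer and confirm the order generated by our application, so everyone is happy. Let's help the staff take orders!

Orders usually take several turns and may include pauses. So we will let the application fill in the order over multiple turns by remembering the order state. Also, to keep the context window small, we won't pass the whole menu to the model; instead, we'll store the dishes in Elastic and retrieve them with semantic search.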

The application flow will look like this:

You can reproduce the examples in this article by following this notebook.

Steps

- Deploy on Azure AI Studio

- Create the embeddings endpoint

- Create the completion endpoint

- Create the index

- Index the data

- Take orders

Deploy on Azure AI Studio

https://ai.azure.com/explore/models?selectedCollection=phi

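To deploy the model on Azure AI Studio, you must create an account, find the model in the catalog, and deploy it:

Select Managed Compute as the deployment option. From there, save the target URI and the key; you will need them in the following steps.

If you want to learn more about Azure AI Studio, we have an in-depth article on it that you can read here.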

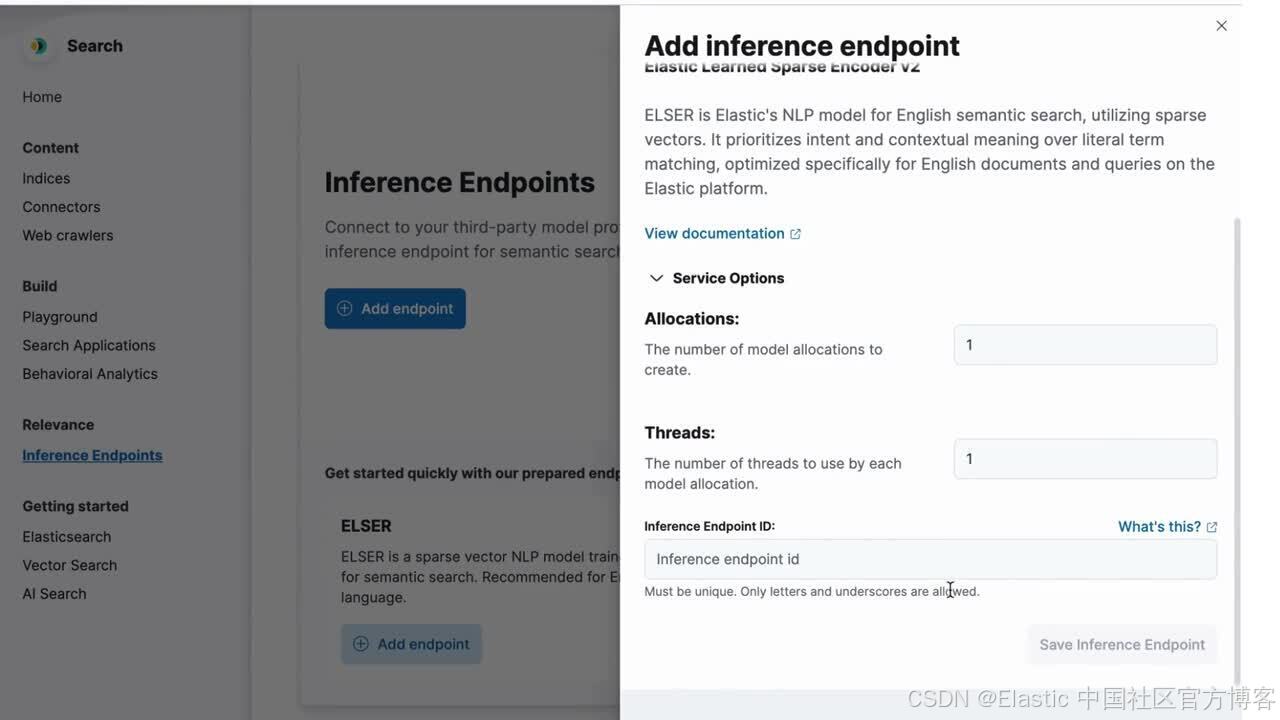

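Create the embeddings endpoint

For the embeddings endpoint, we'll use our ELSER model. It will help us find the right dish even when the customer doesn't use its exact name. The embeddings can also be used to recommend dishes based on the semantic similarity between what the customer says and the dish descriptions.

To create the ELSER endpoint, you can use the Kibana UI:

Or the _inference API: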

```
PUT _inference/sparse_embedding/elser-embeddings
{
  "service": "elser",
  "service_settings": {
    "model_id": ".elser_model_2",
    "num_allocations": 1,
    "num_threads": 1
  }
}
```
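If you would rather script this step than type it into Kibana Dev Tools, the same request can be built with Python's standard library. This is a minimal sketch, not the notebook's code: the helper `es_request` and the environment variables `ES_URL` and `ES_API_KEY` are hypothetical names, and the request is only built, not sent.

```python
import json
import os
import urllib.request
from typing import Optional


def es_request(method: str, path: str, body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a JSON request against an Elasticsearch cluster.

    ES_URL and ES_API_KEY are hypothetical environment variable names.
    """
    base_url = os.environ.get("ES_URL", "http://localhost:9200").rstrip("/")
    headers = {"Content-Type": "application/json"}
    api_key = os.environ.get("ES_API_KEY")
    if api_key:
        # Elasticsearch expects the encoded API key after the "ApiKey" scheme
        headers["Authorization"] = f"ApiKey {api_key}"
    data = json.dumps(body).encode("utf-8") if body is not None else None
    return urllib.request.Request(base_url + path, data=data, headers=headers, method=method)


# Same payload as the Console request for the ELSER endpoint
elser_request = es_request(
    "PUT",
    "/_inference/sparse_embedding/elser-embeddings",
    {
        "service": "elser",
        "service_settings": {
            "model_id": ".elser_model_2",
            "num_allocations": 1,
            "num_threads": 1,
        },
    },
)
# To actually send it: urllib.request.urlopen(elser_request)
```

Keeping the request construction separate from sending makes it easy to inspect the payload before touching the cluster.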

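Create the completion endpoint

We can easily connect to the phi-3 model deployed in Azure AI Studio using Elastic's Open Inference Service. Select "realtime" as the endpoint type and paste the target URI and key you saved in the first step.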

```
PUT _inference/completion/phi3-completion
{
  "service": "azureaistudio",
  "service_settings": {
    "api_key": "<API_KEY>",
    "target": "<TARGET_URI>",
    "provider": "microsoft_phi",
    "endpoint_type": "realtime"
  }
}
```
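The `api_key` and `target` values are easy to leave empty by mistake. The following sketch builds the same request body and refuses to do so without both values. The function names and the environment variables `AZURE_AI_STUDIO_API_KEY` and `AZURE_AI_STUDIO_TARGET` are hypothetical; the body fields are the ones in the request above.

```python
import os


def phi3_endpoint_body(api_key: str, target: str) -> dict:
    """Body for PUT _inference/completion/phi3-completion (service: azureaistudio)."""
    if not api_key or not target:
        # Both values come from the Azure AI Studio deployment page
        raise ValueError("api_key and target are required")
    return {
        "service": "azureaistudio",
        "service_settings": {
            "api_key": api_key,
            "target": target,
            "provider": "microsoft_phi",
            "endpoint_type": "realtime",
        },
    }


def phi3_endpoint_body_from_env() -> dict:
    """Read the deployment key and target URI from (hypothetical) environment variables."""
    return phi3_endpoint_body(
        os.environ.get("AZURE_AI_STUDIO_API_KEY", ""),
        os.environ.get("AZURE_AI_STUDIO_TARGET", ""),
    )
```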

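Create the index

We will ingest the restaurant dishes, one document per dish, and apply the semantic_text mapping type to the description field. We will also store the dish customizations.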

```
PUT lasticco-menu
{
  "mappings": {
    "properties": {
      "code": {
        "type": "keyword"
      },
      "title": {
        "type": "text"
      },
      "description": {
        "type": "semantic_text",
        "inference_id": "elser-embeddings"
      },
      "price": {
        "type": "double"
      },
      "customizations": {
        "type": "object"
      }
    }
  }
}
```

Index the data

The L'asticco menu is very extensive, so we will only include a few dishes:

```
POST lasticco-menu/_doc
{
  "code": "carbonara",
  "title": "Pasta Carbonara",
  "description": "Pasta Carbonara \n Perfectly al dente spaghetti enrobed in a velvety sauce of farm-fresh eggs, aged Pecorino Romano, and smoky guanciale. Finished with a kiss of cracked black pepper for a classic Roman indulgence.",
  "price": 14.99,
  "customizations": {
    "vegetarian": [true, false],
    "cream": [true, false],
    "extras": ["cheese", "garlic", "ham"]
  }
}

POST lasticco-menu/_doc
{
  "code": "alfredo",
  "title": "Chicken Alfredo",
  "description": "Chicken Alfredo \n Recipe includes golden pan-fried seasoned chicken breasts and tender fettuccine, coated in the most dreamy cream sauce ever, coated with a velvety garlic and Parmesan cream sauce.",
  "price": 18.99,
  "customizations": {
    "vegetarian": [true, false],
    "cream": [true, false],
    "extras": ["cheese", "onions", "olives"]
  }
}

POST lasticco-menu/_doc
{
  "code": "gnocchi",
  "title": "Four Cheese Gnocchi",
  "description": "Four Cheese Gnocchi \n soft pillowy potato gnocchi coated in a silken cheesy sauce made of four different cheeses: Gouda, Parmigiano, Brie, and the star, Gorgonzola. The combination of four different types of cheese will make your tastebuds dance for joy.",
  "price": 15.99,
  "customizations": {
    "vegetarian": [true, false],
    "cream": [true, false],
    "extras": ["cheese", "bacon", "mushrooms"]
  }
}
```
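With a larger menu, sending one `POST _doc` per dish gets slow. A common alternative is a single `_bulk` request, whose body is newline-delimited JSON: an action line followed by the document, with a trailing newline. The sketch below only builds that body; the function name is hypothetical and the descriptions are shortened versions of the ones above.

```python
import json
from typing import Iterable


def to_bulk_ndjson(index: str, docs: Iterable[dict]) -> str:
    """Serialize documents into the newline-delimited body expected by POST _bulk."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(doc))  # source line; "\n" inside strings is escaped
    return "\n".join(lines) + "\n"  # the bulk body must end with a newline


# Shortened copies of the three dishes above
menu = [
    {"code": "carbonara", "title": "Pasta Carbonara", "price": 14.99,
     "description": "Pasta Carbonara \n Perfectly al dente spaghetti enrobed in a velvety sauce of farm-fresh eggs, aged Pecorino Romano, and smoky guanciale.",
     "customizations": {"vegetarian": [True, False], "cream": [True, False], "extras": ["cheese", "garlic", "ham"]}},
    {"code": "alfredo", "title": "Chicken Alfredo", "price": 18.99,
     "description": "Chicken Alfredo \n Recipe includes golden pan-fried seasoned chicken breasts and tender fettuccine.",
     "customizations": {"vegetarian": [True, False], "cream": [True, False], "extras": ["cheese", "onions", "olives"]}},
    {"code": "gnocchi", "title": "Four Cheese Gnocchi", "price": 15.99,
     "description": "Four Cheese Gnocchi \n soft pillowy potato gnocchi coated in a silken cheesy sauce made of four different cheeses.",
     "customizations": {"vegetarian": [True, False], "cream": [True, False], "extras": ["cheese", "bacon", "mushrooms"]}},
]
bulk_body = to_bulk_ndjson("lasticco-menu", menu)
# Send to POST /_bulk with the header Content-Type: application/x-ndjson
```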

Order schema

Before we start asking questions, we need to define the order schema, which looks like this:

```
{
  "order": [
    {
      "code": "carbonara",
      "qty": 1,
      "customizations": [
        {
          "vegetarian": true
        }
      ]
    },
    {
      "code": "alfredo",
      "qty": 2,
      "customizations": [
        {
          "extras": ["cheese"]
        }
      ]
    },
    {
      "code": "gnocchi",
      "qty": 1,
      "customizations": [
        {
          "extras": ["mushrooms"]
        }
      ]
    }
  ]
}
```
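Because the order state is fed back to the model on every turn, it helps to check its shape before reusing it. This is a minimal sketch with a hypothetical function name. Note that the schema example uses a list of objects for `customizations`, while the prompt used later asks the model for a single object, so the sketch accepts both.

```python
def validate_order(order_doc: dict) -> list:
    """Return human-readable problems; an empty list means the order matches the schema."""
    items = order_doc.get("order")
    if not isinstance(items, list):
        return ['"order" must be a list']
    problems = []
    for i, item in enumerate(items):
        if not isinstance(item, dict):
            problems.append(f"item {i}: not an object")
            continue
        if not isinstance(item.get("code"), str) or not item["code"]:
            problems.append(f"item {i}: missing dish code")
        qty = item.get("qty")
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(qty, bool) or not isinstance(qty, int) or qty < 1:
            problems.append(f"item {i}: qty must be a positive integer")
        custom = item.get("customizations", {})
        # Accept both shapes: a single object, or a list of objects as in the schema example
        if not isinstance(custom, (dict, list)):
            problems.append(f"item {i}: customizations must be an object or a list of objects")
        elif isinstance(custom, list) and not all(isinstance(c, dict) for c in custom):
            problems.append(f"item {i}: customizations list must contain objects")
    return problems
```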

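Retrieve documents

For each customer request, we use semantic search to retrieve the dishes the customer most likely mentioned, and then pass that information to the model in the next step.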

```
GET lasticco-menu/_search
{
  "query": {
    "semantic": {
      "field": "description",
      "query": "may I have a carbonara with cream and bacon?"
    }
  }
}
```
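Since this query is sent once per customer utterance, it is convenient to generate it. The sketch below builds the same `semantic` query and adds two hypothetical knobs: a `size` limit (3 is an arbitrary assumption) to keep the prompt short, and an optional `max_price` that wraps the semantic query in a `bool` query with a `range` filter on the `price` field.

```python
from typing import Optional


def build_menu_query(user_request: str, size: int = 3, max_price: Optional[float] = None) -> dict:
    """Search body that retrieves the dishes most likely mentioned in user_request."""
    semantic = {"semantic": {"field": "description", "query": user_request}}
    if max_price is None:
        query = semantic
    else:
        # Hypothetical extra: combine semantic search with a structured filter on price
        query = {
            "bool": {
                "must": [semantic],
                "filter": [{"range": {"price": {"lte": max_price}}}],
            }
        }
    return {"size": size, "query": query}
```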

We get the following dish:

```
{
  "took": 9,
  "timed_out": false,
  "_shards": {
    "total": 1,
    "successful": 1,
    "skipped": 0,
    "failed": 0
  },
  "hits": {
    "total": {
      "value": 1,
      "relation": "eq"
    },
    "max_score": 18.255896,
    "hits": [
      {
        "_index": "lasticco-menu",
        "_id": "YAzAs5ABcaO5Zio3qjNd",
        "_score": 18.255896,
        "_source": {
          "code": "carbonara",
          "price": 14.99,
          "description": {
            "text": """Pasta Carbonara
 Perfectly al dente spaghetti enrobed in a velvety sauce of farm-fresh eggs, aged Pecorino Romano, and smoky pancetta. Finished with a kiss of cracked black pepper for a classic Roman indulgence.""",
            "inference": {
              "inference_id": "elser-embeddings",
              "model_settings": {
                "task_type": "sparse_embedding"
              },
              "chunks": [
                {
                  "text": """Pasta Carbonara
 Perfectly al dente spaghetti enrobed in a velvety sauce of farm-fresh eggs, aged Pecorino Romano, and smoky pancetta. Finished with a kiss of cracked black pepper for a classic Roman indulgence.""",
                  "embeddings": {
                    "carbon": 2.2051733,
                    "pasta": 2.1578376,
                    "spaghetti": 1.9468538,
                    "##ara": 1.8116782,
                    "romano": 1.7329221,
                    "dent": 1.6995606,
                    "roman": 1.6205788,
                    "sauce": 1.6086094,
                    "al": 1.5249499,
                    "velvet": 1.5057548,
                    "eggs": 1.4711059,
                    "kiss": 1.4058907,
                    "cracked": 1.3383057,
                    ...
                  }
                }
              ]
            }
          },
          "title": "Pasta Carbonara",
          "customizations": {
            "extras": ["cheese", "garlic", "ham"],
            "cream": [true, false],
            "vegetarian": [true, false]
          }
        }
      }
    ]
  }
}
```

In this example, we search using the dish description. We could pass the remaining fields to the model for more context, or use them as query filters.
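As the response shows, the `_source` of a `semantic_text` field can carry the ELSER token weights, which would only waste the model's context window. One way to keep the prompt small is to turn each hit into a compact menu entry with just the fields the model needs. This is a sketch; the function name and the choice of fields are assumptions.

```python
def hits_to_menu(search_response: dict) -> list:
    """Turn search hits into compact MENU entries, dropping the ELSER embeddings."""
    menu = []
    for hit in search_response.get("hits", {}).get("hits", []):
        src = hit["_source"]
        description = src.get("description")
        # semantic_text may come back as {"text": ..., "inference": {...}}: keep only the text
        if isinstance(description, dict):
            description = description.get("text")
        menu.append({
            "code": src["code"],
            "title": src.get("title"),
            "description": description,
            "price": src.get("price"),
            "customizations": src.get("customizations", {}),
        })
    return menu


# A trimmed-down version of the response above
sample_response = {"hits": {"hits": [{"_source": {
    "code": "carbonara", "price": 14.99, "title": "Pasta Carbonara",
    "description": {"text": "Pasta Carbonara", "inference": {"chunks": [{"embeddings": {"carbon": 2.2051733}}]}},
    "customizations": {"cream": [True, False]},
}}]}}
menu = hits_to_menu(sample_response)
```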

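Take the order

Now we have all the pieces we need:

- The customer request

- The dishes relevant to the request

- The order schema

We can ask phi-3 to extract the dishes from the current request and keep track of the whole order.

We can try the flow using Kibana and the _inference API.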

```
POST _inference/completion/phi3-completion
{
  "input": """
Your task is to manage an order based on the AVAILABLE DISHES in the MENU and the USER REQUEST. Follow these strict rules:
1. ONLY add dishes to the order that are explicitly listed in the MENU.
2. If the requested dish is not in the MENU, do not add anything to the order.
3. The response must always be a valid JSON object containing an "order" array, even if it's empty.
4. Do not invent or hallucinate any dishes that are not in the MENU.
5. Respond only with the updated order object, nothing else.
Example of an order object:
{
  "order": [
    {
      "code": "<dish_code>",
      "qty": 1
    },
    {
      "code": "<dish_code>",
      "qty": 2,
      "customizations": "<customizations>"
    }
  ]
}
MENU:
[
  {
    "code": "carbonara",
    "title": "Pasta Carbonara",
    "description": "Pasta Carbonara \n Perfectly al dente spaghetti enrobed in a velvety sauce of farm-fresh eggs, aged Pecorino Romano, and smoky pancetta. Finished with a kiss of cracked black pepper for a classic Roman indulgence.",
    "price": 14.99,
    "customizations": {
      "vegetarian": [true, false],
      "cream": [true, false],
      "extras": ["cheese", "garlic", "ham"]
    }
  }
]
CURRENT ORDER:
{
  "order": []
}
USER REQUEST: may I have a carbonara with cream and extra cheese?
Remember:
If the requested dish is not in the MENU, return the current order unchanged.
Customizations should be added as an object with the same structure as in the MENU.
For boolean customizations, use true/false values.
For array customizations, use an array with the selected items.
"""
}
```
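Outside Kibana, this prompt has to be assembled on every turn from the retrieved dishes, the current order, and the new request. The sketch below rebuilds the prompt above with `json.dumps`, so Python's `True`/`False` become JSON `true`/`false`. The constant and function names are hypothetical, and the whitespace may differ slightly from the Console version.

```python
import json

# Prompt text copied from the Console request above
RULES = """Your task is to manage an order based on the AVAILABLE DISHES in the MENU and the USER REQUEST. Follow these strict rules:
1. ONLY add dishes to the order that are explicitly listed in the MENU.
2. If the requested dish is not in the MENU, do not add anything to the order.
3. The response must always be a valid JSON object containing an "order" array, even if it's empty.
4. Do not invent or hallucinate any dishes that are not in the MENU.
5. Respond only with the updated order object, nothing else."""

EXAMPLE_ORDER = {
    "order": [
        {"code": "<dish_code>", "qty": 1},
        {"code": "<dish_code>", "qty": 2, "customizations": "<customizations>"},
    ]
}

REMINDER = """Remember:
If the requested dish is not in the MENU, return the current order unchanged.
Customizations should be added as an object with the same structure as in the MENU.
For boolean customizations, use true/false values.
For array customizations, use an array with the selected items."""


def build_completion_body(menu: list, current_order: dict, user_request: str) -> dict:
    """Assemble the body for POST _inference/completion/phi3-completion."""
    prompt = "\n".join([
        RULES,
        "Example of an order object:",
        json.dumps(EXAMPLE_ORDER, indent=2),
        "MENU:",
        json.dumps(menu, indent=2),  # only the dishes returned by semantic search
        "CURRENT ORDER:",
        json.dumps(current_order, indent=2),  # state carried over from the previous turn
        f"USER REQUEST: {user_request}",
        REMINDER,
    ])
    return {"input": prompt}
```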

This gives us the updated order, which must be sent as the "current order" in our next request until the order is complete:

```
{
  "completion": [
    {
      "result": """{
  "order": [
    {
      "code": "carbonara",
      "qty": 1,
      "customizations": {
        "vegan": false,
        "cream": true,
        "extras": ["cheese"]
      }
    }
  ]
}"""
    }
  ]
}
```
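The `result` is a JSON string, so it has to be parsed before it can become the next "current order". Validating it against the menu is also worthwhile: in the response above, the model returned a `vegan` customization although the menu only defines `vegetarian`. The sketch below does both; the function names are hypothetical, and trimming to the outermost braces is a defensive assumption in case the model adds stray text.

```python
import json


def parse_completion(response: dict) -> dict:
    """Extract the order object from {"completion": [{"result": "<json text>"}]}."""
    text = response["completion"][0]["result"]
    # Keep only the outermost {...} in case the model adds text around the JSON
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("completion result does not contain a JSON object")
    return json.loads(text[start:end + 1])


def check_against_menu(order: dict, menu: list) -> list:
    """List the dishes and customizations in the order that the MENU does not define."""
    dishes = {dish["code"]: dish for dish in menu}
    problems = []
    for item in order.get("order", []):
        code = item.get("code")
        dish = dishes.get(code)
        if dish is None:
            problems.append(f"unknown dish: {code}")
            continue
        allowed = dish.get("customizations", {})
        chosen = item.get("customizations") or {}
        if isinstance(chosen, list):  # the schema example uses a list of objects
            merged = {}
            for part in chosen:
                merged.update(part)
            chosen = merged
        for key, value in chosen.items():
            if key not in allowed:
                problems.append(f"{code}: unknown customization '{key}'")
            elif isinstance(value, list):
                problems.extend(f"{code}: '{v}' is not a valid {key} option" for v in value if v not in allowed[key])
            elif value not in allowed[key]:
                problems.append(f"{code}: {value!r} is not a valid {key} option")
    return problems


# The menu entry and the model answer shown above
menu = [{"code": "carbonara", "customizations": {"vegetarian": [True, False], "cream": [True, False], "extras": ["cheese", "garlic", "ham"]}}]
response = {"completion": [{"result": '{"order": [{"code": "carbonara", "qty": 1, "customizations": {"vegan": false, "cream": true, "extras": ["cheese"]}}]}'}]}
order = parse_completion(response)
problems = check_against_menu(order, menu)  # flags "vegan", which the menu does not define
```

What to do with the problems is a design choice: the application could re-prompt the model, or show them to the waiter when confirming the order.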

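This captures the user request and keeps the order state up to date, all with low latency and high efficiency. A possible improvement is to add recommendations or take allergies into account, so the model can add dishes to or remove them from the order, or recommend dishes to the user. Do you think you can add this feature?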

In the notebook, you will find the complete working example, which captures user input in a loop.
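The overall loop can be sketched independently of Elasticsearch and Azure by passing the two steps in as functions. This is not the notebook's code: `take_order`, `STOP_WORDS`, and the callable signatures are hypothetical. What it shows is the state handling described earlier: each turn retrieves only the relevant dishes, asks the model for an updated order, and keeps the previous order if the answer is unusable.

```python
from typing import Callable, Iterable

# Hypothetical phrases that close the order; the notebook's exit condition may differ
STOP_WORDS = {"done", "that's all"}


def take_order(
    utterances: Iterable[str],
    search_menu: Callable[[str], list],
    complete: Callable[[list, dict, str], dict],
) -> dict:
    """Run one ordering session, carrying the order state from turn to turn."""
    current_order = {"order": []}
    for text in utterances:
        if text.strip().lower() in STOP_WORDS:
            break
        menu = search_menu(text)  # semantic search: only the relevant dishes
        proposed = complete(menu, current_order, text)  # phi-3 returns the updated order
        # Keep the previous state if the model's answer is not a usable order
        if isinstance(proposed, dict) and isinstance(proposed.get("order"), list):
            current_order = proposed
    return current_order
```

In an interactive session, `utterances` could be `iter(lambda: input("> "), "")`, while `search_menu` and `complete` would wrap the search and completion requests shown earlier.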

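Conclusion

Phi-3 small models are a powerful solution for tasks that require low cost and low latency while still handling complex requests such as language understanding and data extraction. With Azure AI Studio you can easily deploy the model and use it seamlessly from Elastic through the Open Inference Service. To work around the context length limits of these small models when dealing with large amounts of data, a RAG system with Elastic as the vector database is the way to go.

If you are interested in reproducing the examples in this article, you can find the Python notebook with the requests here.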

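Ready to try this out on your own? Start a free trial.

Elasticsearch integrates with tools such as LangChain and Cohere. Join our Advanced Semantic Search webinar to build your next GenAI app! Original article: "Utilizing Phi-3 models to create a RAG application with Elastic", Search Labs.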


Source: https://blog.csdn.net/UbuntuTouch/article/details/141192809