
Hive on Spark notes

Environment:

hadoop 2.7.2

spark-without-hadoop 2.4.6

hive 2.3.4

hive-site.xml:

```xml
<property>
  <name>hive.execution.engine</name>
  <value>spark</value>
</property>
<property>
  <name>spark.yarn.jars</name>
  <value>hdfs://hadoop03:9000/spark-jars/*</value>
</property>
```

Start Hive:

```shell
./bin/hive
```
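Because `spark.yarn.jars` points YARN at an HDFS directory, the Spark jars need to be uploaded there before Hive submits its first job. Below is a minimal sketch. It assumes `hdfs` is on the PATH, `SPARK_HOME` points at the spark-without-hadoop install, and the target is the `hdfs://hadoop03:9000/spark-jars` directory from the config above. The function name `upload_spark_jars` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy every jar from $SPARK_HOME/jars into the HDFS
# directory referenced by spark.yarn.jars (assumed: hdfs://hadoop03:9000/spark-jars).
upload_spark_jars() {
  local target="${1:-hdfs://hadoop03:9000/spark-jars}"
  if [ -z "${SPARK_HOME:-}" ] || [ ! -d "$SPARK_HOME/jars" ]; then
    echo "SPARK_HOME/jars not found" >&2
    return 1
  fi
  # Create the directory if it does not exist yet.
  hdfs dfs -mkdir -p "$target" || return 1
  # Upload all jars; -f overwrites copies that are already there.
  hdfs dfs -put -f "$SPARK_HOME"/jars/*.jar "$target/" || return 1
}

# Usage (assumed install path):
# SPARK_HOME=/opt/module/spark-2.4.4-bin-without-hadoop upload_spark_jars
```

Wrapping the upload in a function keeps it safe to source. The function can be called once per Spark upgrade, and it is not needed on every Hive start.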

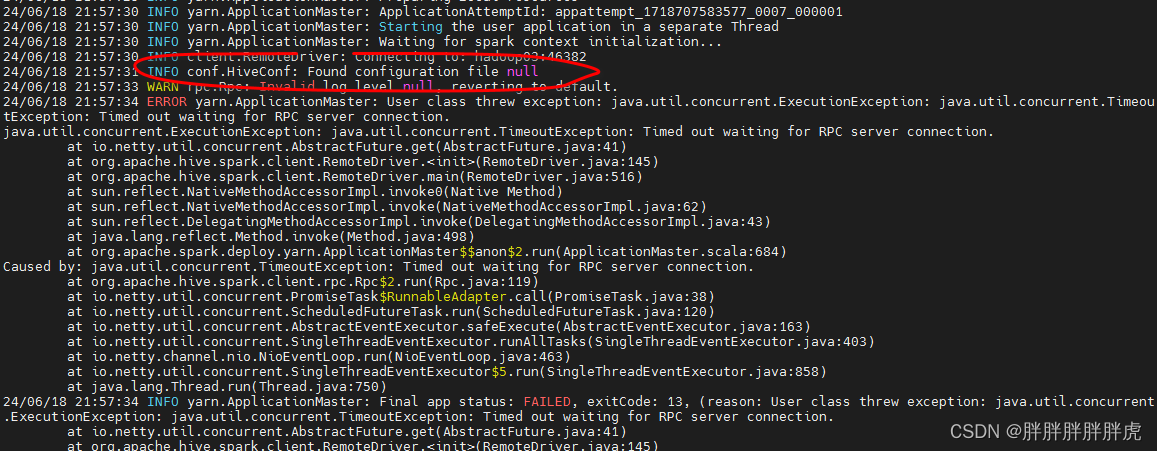

```shell
for f in ${SPARK_HOME}/jars/*.jar; do CLASSPATH=${CLASSPATH}:$f; done
```

The spark-submit command that Hive launches for its remote driver:

```shell
/opt/module/spark-2.4.4-bin-without-hadoop/bin/spark-submit \
  --properties-file /tmp/spark-submit.8770948269278745415.properties \
  --class org.apache.hive.spark.client.RemoteDriver /opt/module/hive/lib/hive-exec-2.3.4.jar \
  --remote-host hadoop03 \
  --remote-port 43182 \
  --conf hive.spark.client.connect.timeout=1000 \
  --conf hive.spark.client.server.connect.timeout=90000 \
  --conf hive.spark.client.channel.log.level=null \
  --conf hive.spark.client.rpc.max.size=52428800 \
  --conf hive.spark.client.rpc.threads=8 \
  --conf hive.spark.client.secret.bits=256 \
  --conf hive.spark.client.rpc.server.address=null
```

Issues encountered

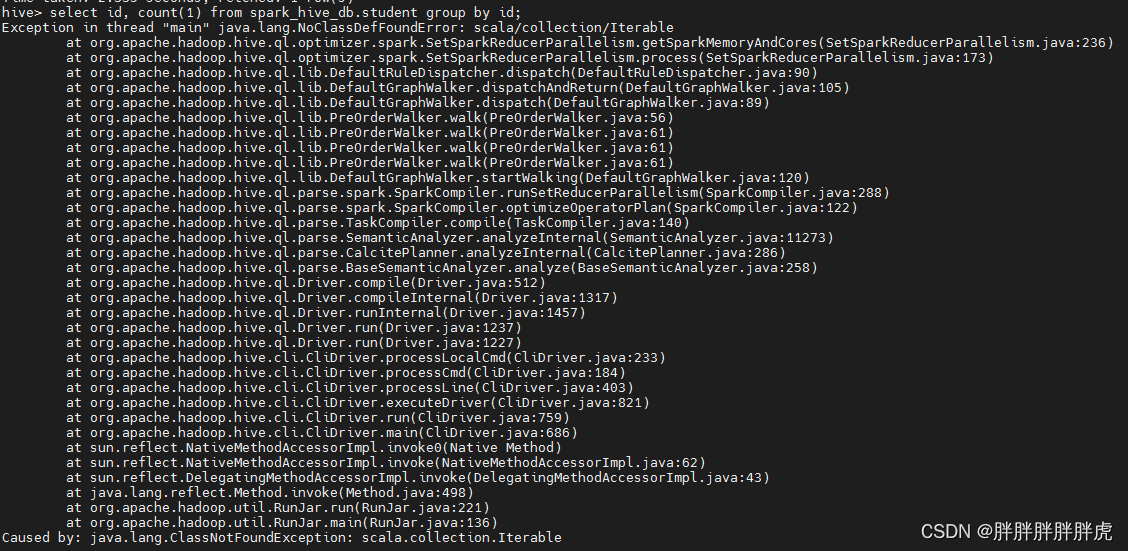

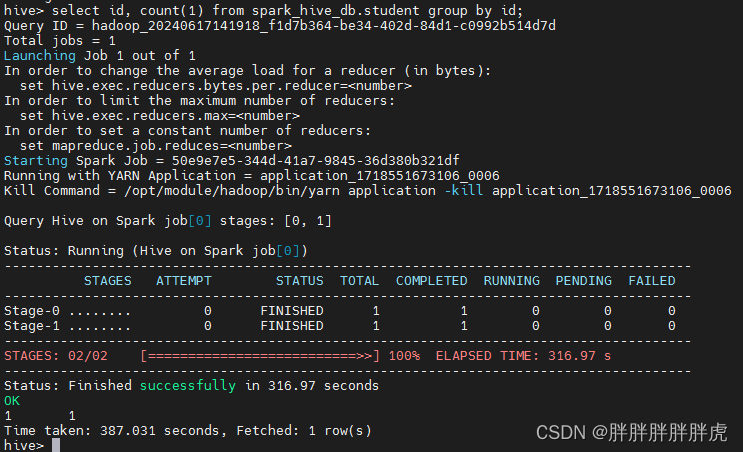

1) `java.lang.NoClassDefFoundError: scala/collection/Iterable`

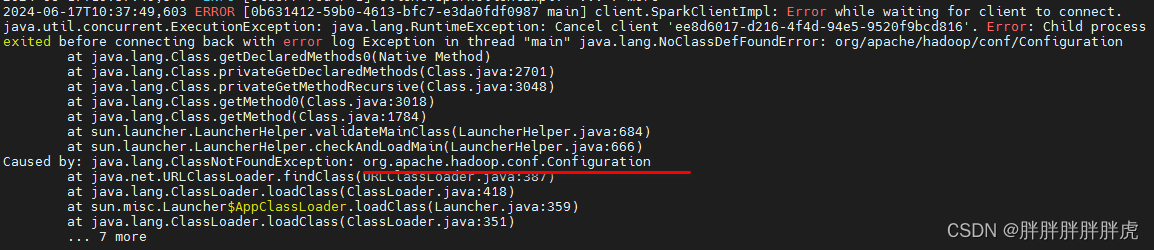

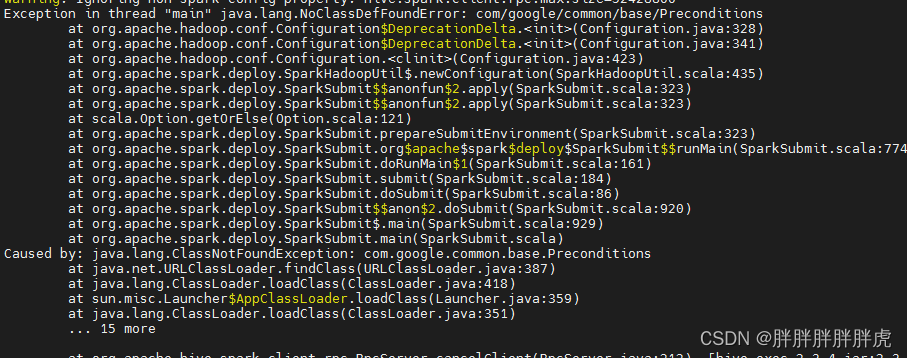
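The class `scala/collection/Iterable` ships in the Scala runtime jar (`scala-library-*.jar`). This error therefore means Hive's JVM cannot see Spark's Scala jar. The sketch below builds the classpath the same way as the loop that follows, then checks that scala-library is on it. It assumes bash and a `SPARK_HOME` that contains a `jars/` directory. The helper names `build_spark_classpath` and `check_scala_on_classpath` are hypothetical. This variant also skips an unmatched glob and avoids a leading `:`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append every jar in $SPARK_HOME/jars to CLASSPATH,
# mirroring the for-loop fix, and print the result.
build_spark_classpath() {
  local cp="${CLASSPATH:-}" f
  for f in "$SPARK_HOME"/jars/*.jar; do
    [ -e "$f" ] || continue   # skip the literal pattern if no jar matches
    cp="${cp:+$cp:}$f"        # no leading ':' when CLASSPATH starts empty
  done
  printf '%s\n' "$cp"
}

# Hypothetical helper: succeed only if a scala-library jar is on that classpath.
check_scala_on_classpath() {
  local cp
  cp=$(build_spark_classpath)
  case ":$cp:" in
    *"/scala-library-"*".jar:"*) echo "scala-library found"; return 0 ;;
    *) echo "scala-library missing from classpath" >&2; return 1 ;;
  esac
}
```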

```shell
for f in ${SPARK_HOME}/jars/*.jar; do CLASSPATH=${CLASSPATH}:$f; done
```

2) Various ClassNotFound errors

…

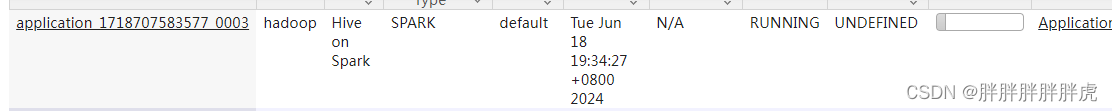

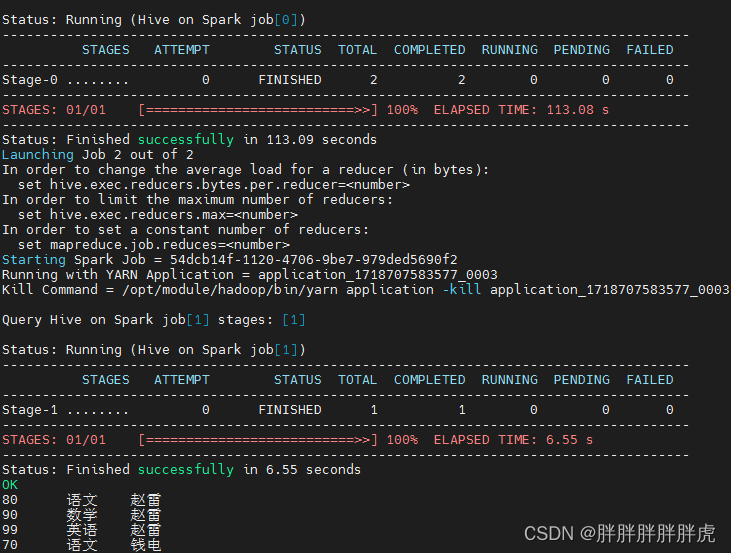

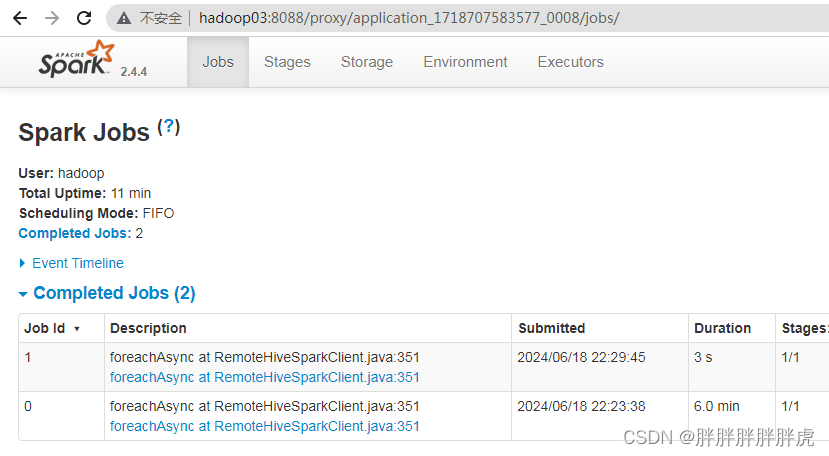

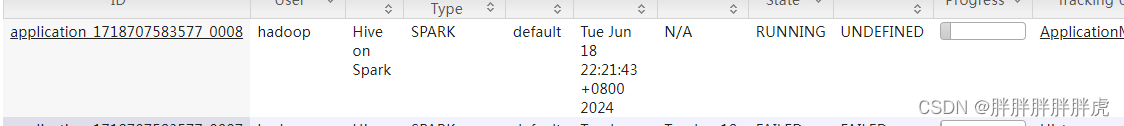

Hive on Spark: resources are not released after the job finishes

Reference: https://blog.csdn.net/qq_31454379/article/details/107621838

Solution:

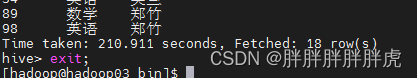

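- If the job was submitted from the hive CLI, exiting the hive CLI releases the resources automatically.

- If the job was submitted by a script, add `!quit` at the end of the script to release the resources explicitly.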
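For the script case, here is a minimal sketch of a script that ends with `!quit`. `!quit` is a Beeline command, so this assumes the script is run with `beeline -f`. The HiveServer2 URL `jdbc:hive2://hadoop03:10000`, the table name and the helper `write_hql_script` are all hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a query into a Beeline script whose last line is
# !quit, so the session is closed explicitly when the script finishes.
write_hql_script() {
  local query="$1" out="$2"
  {
    printf '%s\n' "$query"
    printf '%s\n' '!quit'
  } > "$out"
}

# Usage (assumed HiveServer2 address and table):
# write_hql_script 'SELECT count(*) FROM some_db.some_table;' /tmp/job.sql
# beeline -u jdbc:hive2://hadoop03:10000 -f /tmp/job.sql
```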

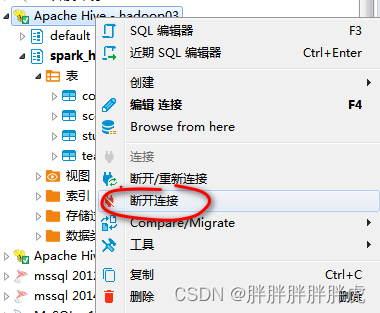

DBeaver with Hive on Spark

Configure the `HIVE_CONF_DIR` variable.
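`HIVE_CONF_DIR` tells Hive which directory to read `hive-site.xml` from. Below is a minimal sketch. It assumes the variable is exported in the environment of the process that serves the DBeaver connection, for example in `hive-env.sh` or in the shell that starts HiveServer2. It also assumes the directory is `/opt/module/hive/conf`, inferred from the `/opt/module/hive/lib` path in the spark-submit command above. The helper `check_hive_conf` is hypothetical. It only confirms that the `hive-site.xml` in that directory selects the Spark engine.

```shell
#!/usr/bin/env bash
# Assumed location of Hive's configuration directory.
export HIVE_CONF_DIR="${HIVE_CONF_DIR:-/opt/module/hive/conf}"

# Hypothetical helper: check that $HIVE_CONF_DIR/hive-site.xml sets
# hive.execution.engine to spark.
check_hive_conf() {
  local site="$HIVE_CONF_DIR/hive-site.xml"
  if [ ! -f "$site" ]; then
    echo "missing $site" >&2
    return 1
  fi
  # Drop newlines so <name> and <value> can be matched as one unit.
  if tr -d '\n' < "$site" | grep -Eq '<name>[[:space:]]*hive\.execution\.engine[[:space:]]*</name>[[:space:]]*<value>[[:space:]]*spark[[:space:]]*</value>'; then
    echo "hive.execution.engine=spark"
  else
    echo "hive.execution.engine is not spark in $site" >&2
    return 1
  fi
}
```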

The same problem of YARN resources not being released shows up here.

Disconnecting the connection releases the resources.


Original article: https://blog.csdn.net/qq_15138049/article/details/139743734