
DeepStream User Guide: DeepStream Application and Configuration Files

DeepStream Reference Application - deepstream-app

Application Architecture

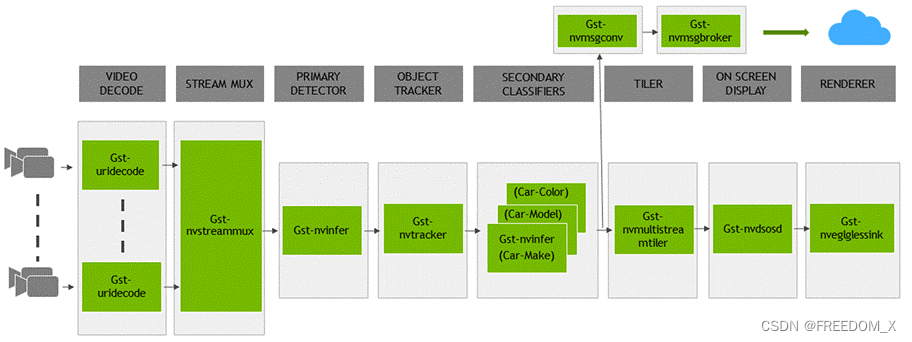

The figure shows the architecture of the NVIDIA® DeepStream reference application.

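The DeepStream reference application is a GStreamer-based solution. It consists of a set of GStreamer plugins that encapsulate low-level APIs to form a complete graph. The reference application can accept input from various sources, such as cameras, RTSP streams, and encoded files, and it also supports multiple streams/sources. The GStreamer plugins implemented by NVIDIA and provided as part of the DeepStream SDK include: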

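- The Stream Muxer plugin (Gst-nvstreammux) forms a batch of buffers from multiple input sources.
- The Preprocess plugin (Gst-nvdspreprocess) preprocesses predefined ROIs for primary inference.
- The NVIDIA TensorRT™-based plugin (Gst-nvinfer) performs primary and secondary detection and classification, where secondary inference classifies attributes of the primary objects.
- The multi-object tracker plugin (Gst-nvtracker) tracks objects with unique IDs.
- The multi-stream tiler plugin (Gst-nvmultistreamtiler) forms a 2D array of frames.
- The On-Screen Display (OSD) plugin (Gst-nvdsosd) uses the generated metadata to draw shaded boxes, rectangles, and text on the composited frame.
- The Message Converter (Gst-nvmsgconv) and Message Broker (Gst-nvmsgbroker) plugins are used together to send analytics data to a server in the cloud.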

Reference Application Configuration

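The NVIDIA DeepStream SDK reference application uses one of the sample configuration files in the samples/configs/deepstream-app directory of the DeepStream package to:

- Enable or disable components
- Change the properties or behavior of components
- Customize other application configuration settings that are not related to the pipeline and its components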

The configuration file uses a key file format based on the freedesktop specifications, which is described in the Desktop Entry Specification.
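The format is INI-like, so Python's standard configparser is a quick way to inspect a deepstream-app configuration outside the application. This sketch is only an approximation. deepstream-app itself is, as far as we know, built on GLib's key-file parser, and the two parsers differ in edge cases. The sample content is hypothetical. Note that `interpolation=None` is required, because values such as `uri=...source_%d.mp4` contain `%`, and configparser's default interpolation rejects that.

```python
import configparser


def load_ds_config(text: str) -> configparser.ConfigParser:
    """Parse deepstream-app style key-file text (approximation via configparser)."""
    # interpolation=None: '%d' in MultiURI uri values must be kept literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return parser


# Hypothetical sample using keys from the tables in this document.
SAMPLE = """
[application]
enable-perf-measurement=1
perf-measurement-interval-sec=5

[source0]
enable=1
type=3
uri=file:///home/ubuntu/source_%d.mp4
num-sources=2
"""

cfg = load_ds_config(SAMPLE)
print(cfg.sections())
print(cfg.getint("source0", "num-sources"))
print(cfg.getboolean("application", "enable-perf-measurement"))
```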

Output of the DeepStream Reference Application (deepstream-app)

The following figure shows the expected output with the preprocess plugin disabled:

The following figure shows the expected output with the preprocess plugin enabled (the green bboxes are the predefined ROIs):

Configuration Groups

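The application configuration is divided into groups: one for each component, plus groups for application-specific settings. The configuration groups are: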

Configuration Groups - deepstream app

- [application]: Application configurations that are not related to a specific component.
- [tiled-display]: Tiled display in the application.
- [source0], [source1], …: Source properties. There can be multiple sources. The groups must be named [source0], [source1], …
- [source-list] and [source-attr-all]: Source URIs provided as a list. There can be multiple sources.
- [streammux]: Specify properties and modify behavior of the streammux component.
- [pre-process]: Specify properties and modify behavior of the preprocess component.
- [primary-gie]: Specify properties and modify behavior of the primary GIE.
- [secondary-gie0], [secondary-gie1], …: Specify properties and modify behavior of the secondary GIEs. The groups must be named [secondary-gie0], [secondary-gie1], …
- [tracker]: Specify properties and modify behavior of the object tracker.
- [message-converter]: Specify properties and modify behavior of the message converter component.
- [message-consumer0], [message-consumer1], …: Specify properties and modify behavior of message consumer components. The pipeline can contain multiple message consumer components. The groups must be named [message-consumer0], [message-consumer1], …
- [osd]: Specify properties and modify behavior of the on-screen display (OSD) component that overlays text and rectangles on the frame.
- [sink0], [sink1], …: Specify properties and modify behavior of sink components that represent outputs such as displays and files for rendering, encoding, and file saving. The pipeline can contain multiple sinks. The groups must be named [sink0], [sink1], …
- [tests]: Diagnostics and debugging. This group is experimental.
- [nvds-analytics]: Specifies the nvdsanalytics plugin configuration file and adds the plugin to the application.
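Several groups are indexed: [sourceN], [secondary-gieN], [message-consumerN], and [sinkN]. A config linter might therefore need to map a section name to its group kind and index. The helper below is hypothetical and not part of DeepStream. Its list of fixed names follows the groups listed above.

```python
import re

# Group kinds that take a numeric suffix, e.g. [source0], [sink12].
INDEXED = re.compile(r"^(source|secondary-gie|message-consumer|sink)(\d+)$")

# Groups that appear without an index.
FIXED = {
    "application", "tiled-display", "source-list", "source-attr-all",
    "streammux", "pre-process", "primary-gie", "tracker",
    "message-converter", "osd", "tests", "nvds-analytics",
}


def classify_group(name: str):
    """Return (kind, index) for a recognized group name, or None otherwise."""
    if name in FIXED:
        return (name, None)
    match = INDEXED.match(name)
    if match:
        return (match.group(1), int(match.group(2)))
    return None


for section in ["source0", "secondary-gie1", "osd", "sink", "source-list"]:
    print(section, classify_group(section))
```

A bare [sink] without an index is reported as unrecognized, because the table requires the numbered form.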

Application Group

The application group properties are:

Application group

Key

Meaning

Type and Value

Example

Platforms

enable-perf-measurement

Indicates whether the application performance measurement is enabled.

Boolean

enable-perf-measurement=1

dGPU, Jetson

perf-measurement-interval-sec

The interval, in seconds, at which the performance metrics are sampled and printed.

Integer, >0

perf-measurement-interval-sec=10

dGPU, Jetson

gie-kitti-output-dir

Pathname of an existing directory where the application stores primary detector output in a modified KITTI metadata format.

String

gie-kitti-output-dir=/home/ubuntu/kitti_data/

dGPU, Jetson

kitti-track-output-dir

Pathname of an existing directory where the application stores tracker output in a modified KITTI metadata format.

String

kitti-track-output-dir=/home/ubuntu/kitti_data_tracker/

dGPU, Jetson

reid-track-output-dir

Pathname of an existing directory where the application stores tracker’s Re-ID feature output. Each line’s first integer is object id, and the remaining floats are its feature vector.

String

reid-track-output-dir=/home/ubuntu/reid_data_tracker/

dGPU, Jetson
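reid-track-output-dir stores one object per line. The first integer is the object ID and the remaining floats are its feature vector. The sketch below reads one such line. The table does not specify the field delimiter, so whitespace separation is an assumption here.

```python
def parse_reid_line(line: str):
    """Split one Re-ID output line into (object_id, feature_vector).

    Assumes whitespace-separated fields (delimiter not specified in the table).
    """
    fields = line.split()
    if not fields:
        raise ValueError("empty Re-ID line")
    object_id = int(fields[0])                    # first integer: object ID
    features = [float(x) for x in fields[1:]]     # remaining floats: feature vector
    return object_id, features


print(parse_reid_line("7 0.5 -1.25 3"))
```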

Tiled-display Group

The tiled-display group properties are:

Tiled display group

Key

Meaning

Type and Value

Example

Platforms

enable

Indicates whether tiled display is enabled. When enable=2, the first [sink] group with link-to-demux=1 is linked to the demuxer's src_[source_id] pad, where source_id is the value of the source-id key in that [sink] group.

Integer, 0 = disabled, 1 = tiler-enabled, 2 = tiler-and-parallel-demux-to-sink-enabled

enable=1

dGPU, Jetson

rows

Number of rows in the tiled 2D array.

Integer, >0

rows=5

dGPU, Jetson

columns

Number of columns in the tiled 2D array.

Integer, >0

columns=6

dGPU, Jetson

width

Width of the tiled 2D array, in pixels.

Integer, >0

width=1280

dGPU, Jetson

height

Height of the tiled 2D array, in pixels.

Integer, >0

height=720

dGPU, Jetson

gpu-id

GPU to be used by the element in case of multiple GPUs.

Integer, ≥0

gpu-id=0

dGPU

nvbuf-memory-type

Type of memory the element is to allocate for output buffers.

0 (nvbuf-mem-default): a platform-specific default type

1 (nvbuf-mem-cuda-pinned): pinned/host CUDA memory

2 (nvbuf-mem-cuda-device): device CUDA memory

3 (nvbuf-mem-cuda-unified): unified CUDA memory

For dGPU: All values are valid.

For Jetson: Only 0 (zero) is valid.

Integer, 0, 1, 2, or 3

nvbuf-memory-type=3

dGPU, Jetson

compute-hw

Compute scaling hardware to use. Applicable only to Jetson; dGPU systems use the GPU by default.

0 (Default): Default, GPU for Tesla, VIC for Jetson

1 (GPU): GPU

2 (VIC): VIC

Integer: 0-2

compute-hw=1

Jetson
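The tiler splits its output (width × height) into rows × columns tiles. For example, the values width=1280, height=720, rows=5, and columns=6 give tiles of roughly 213 × 144 pixels. deepstream-app takes rows and columns exactly as configured. The near-square heuristic below is a hypothetical way to choose them for N sources, and it is not taken from the application.

```python
import math


def tiler_layout(num_sources: int, width: int = 1280, height: int = 720):
    """Return (rows, columns, tile_width, tile_height) for a near-square grid."""
    if num_sources <= 0:
        raise ValueError("num_sources must be positive")
    columns = math.isqrt(num_sources)
    if columns * columns < num_sources:
        columns += 1                      # ceil(sqrt(n)) without floating point
    rows = math.ceil(num_sources / columns)
    # Integer pixel size of each tile within the tiled 2D array.
    return rows, columns, width // columns, height // rows


print(tiler_layout(30))   # matches rows=5, columns=6 from the examples
print(tiler_layout(4))
```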

Source Group

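The source group specifies the source properties. The DeepStream application supports multiple simultaneous sources. For each source, a separate group with a group name such as source%d must be added to the configuration file. For example:

[source0]
key1=value1
key2=value2
...

[source1]
key1=value1
key2=value2
...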

The source group properties are:

Source group

Key

Meaning

Type and Value

Example

Platforms

enable

Enables or disables the source.

Boolean

enable=1

dGPU, Jetson

type

Type of source; other properties of the source depend on this type.

1: Camera (V4L2)

2: URI

3: MultiURI

4: RTSP

5: Camera (CSI) (Jetson only)

Integer, 1, 2, 3, 4, or 5

type=1

dGPU, Jetson

uri

URI to the encoded stream. The URI can be a file, an HTTP URI, or an RTSP live source. Valid when type=2 or 3. With MultiURI, the %d format specifier can also be used to specify multiple sources. The application iterates from 0 to num-sources−1 to generate the actual URIs.

String

uri=file:///home/ubuntu/source.mp4 uri=http://127.0.0.1/source.mp4 uri=rtsp://127.0.0.1/source1 uri=file:///home/ubuntu/source_%d.mp4

dGPU, Jetson

num-sources

Number of sources. Valid only when type=3.

Integer, ≥0

num-sources=2

dGPU, Jetson

intra-decode-enable

Enables or disables intra-only decode.

Boolean

intra-decode-enable=1

dGPU, Jetson

num-extra-surfaces

Number of surfaces in addition to minimum decode surfaces given by the decoder. Can be used to manage the number of decoder output buffers in the pipeline.

Integer, ≥0 and ≤24

num-extra-surfaces=5

dGPU, Jetson

gpu-id

GPU to be used by the element in case of multiple GPUs.

Integer, ≥0

gpu-id=1

dGPU

camera-id

Unique ID for the input source to be added to metadata. (Optional)

Integer, ≥0

camera-id=2

dGPU, Jetson

camera-width

Width of frames to be requested from the camera, in pixels. Valid when type=1 or 5.

Integer, >0

camera-width=1920

dGPU, Jetson

camera-height

Height of frames to be requested from the camera, in pixels. Valid when type=1 or 5.

Integer, >0

camera-height=1080

dGPU, Jetson

camera-fps-n

Numerator part of a fraction specifying the frame rate requested by the camera, in frames/sec. Valid when type=1 or 5.

Integer, >0

camera-fps-n=30

dGPU, Jetson

camera-fps-d

Denominator part of a fraction specifying the frame rate requested from the camera, in frames/sec. Valid when type=1 or 5.

Integer, >0

camera-fps-d=1

dGPU, Jetson

camera-v4l2-dev-node

Number of the V4L2 device node, for example, N in /dev/videoN for the open source V4L2 camera capture path. Valid when the type setting (type of source) is 1.

Integer, >0

camera-v4l2-dev-node=1

dGPU, Jetson

latency

Jitterbuffer size in milliseconds; applicable only for RTSP streams.

Integer, ≥0

latency=200

dGPU, Jetson

camera-csi-sensor-id

Sensor ID of the camera module. Valid when the type (type of source) is 5.

Integer, ≥0

camera-csi-sensor-id=1

Jetson

drop-frame-interval

Interval to drop frames. For example, 5 means decoder outputs every fifth frame; 0 means no frames are dropped.

Integer,

drop-frame-interval=5

dGPU, Jetson

cudadec-memtype

Type of CUDA memory the element uses to allocate output buffers for sources of type 2, 3, or 4. Not applicable to CSI or USB camera sources.

0 (memtype_device): Device memory allocated with cudaMalloc().

1 (memtype_pinned): host/pinned memory allocated with cudaMallocHost().

2 (memtype_unified): Unified memory allocated with cudaMallocManaged().

Integer, 0, 1, or 2

cudadec-memtype=1

dGPU

nvbuf-memory-type

Type of CUDA memory the element is to allocate for output buffers of nvvideoconvert, useful for source of type 1.

0 (nvbuf-mem-default): a platform-specific default

1 (nvbuf-mem-cuda-pinned): pinned/host CUDA memory.

2 (nvbuf-mem-cuda-device): Device CUDA memory.

3 (nvbuf-mem-cuda-unified): Unified CUDA memory.

For dGPU: All values are valid.

For Jetson: Only 0 (zero) is valid.

Integer, 0, 1, 2, or 3

nvbuf-memory-type=3

dGPU, Jetson

select-rtp-protocol

Transport Protocol to use for RTP. Valid when type (type of source) is 4.

0: UDP + UDP Multicast + TCP

4: TCP only

Integer, 0 or 4

select-rtp-protocol=4

dGPU, Jetson

rtsp-reconnect-interval-sec

Timeout in seconds to wait since last data was received from an RTSP source before forcing a reconnection. Setting it to 0 will disable the reconnection. Valid when type (type of source) is 4.

Integer, ≥0

rtsp-reconnect-interval-sec=60

dGPU, Jetson

rtsp-reconnect-attempts

Maximum number of times a reconnection is attempted. Setting it to -1 means reconnection will be attempted infinitely. Valid when type of source is 4 and rtsp-reconnect-interval-sec is a non-zero positive value.

Integer, ≥-1

rtsp-reconnect-attempts=2

smart-record

Method used to trigger smart record.

0: Disable

1: Only through cloud messages

2: Cloud message + Local events

Integer, 0, 1 or 2

smart-record=1

dGPU, Jetson

smart-rec-dir-path

Path of directory to save the recorded file. By default, the current directory is used.

String

smart-rec-dir-path=/home/nvidia/

dGPU, Jetson

smart-rec-file-prefix

Prefix of file name for recorded video. By default, Smart_Record is the prefix. For unique file names every source must be provided with a unique prefix.

String

smart-rec-file-prefix=Cam1

dGPU, Jetson

smart-rec-cache

Size of smart record cache in seconds.

Integer, ≥0

smart-rec-cache=20

dGPU, Jetson

smart-rec-container

Container format of the recorded video. MP4 and MKV containers are supported.

0: MP4

1: MKV

Integer, 0 or 1

smart-rec-container=0

dGPU, Jetson

smart-rec-start-time

Number of seconds before the current time at which recording starts. For example, if t0 is the current time and N is the start time in seconds, recording starts from t0 − N. For this to work, the video cache size must be greater than N.

Integer, ≥0

smart-rec-start-time=5

dGPU, Jetson

smart-rec-default-duration

Ensures that the recording stops after a predefined default duration if a Stop event is not generated.

Integer, ≥0

smart-rec-default-duration=20

dGPU, Jetson

smart-rec-duration

Duration of recording in seconds.

Integer, ≥0

smart-rec-duration=15

dGPU, Jetson

smart-rec-interval

Time interval, in seconds, at which smart record (SR) start/stop events are generated.

Integer, ≥0

smart-rec-interval=10

dGPU, Jetson

udp-buffer-size

UDP buffer size in bytes for RTSP sources.

Integer, ≥0

udp-buffer-size=2000000

dGPU, Jetson

video-format

Output video format for the source. This value is set as the output format of the nvvideoconvert element for the source.

String: NV12, I420, P010_10LE, BGRx, RGBA

video-format=RGBA

dGPU, Jetson
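Two source rules lend themselves to a quick check. First, a MultiURI source (type=3) expands the %d in uri for indices 0 to num-sources−1. Second, smart-rec-start-time N only works when smart-rec-cache is greater than N. The helpers below are hypothetical sketches of those rules, not DeepStream code. They use `str.replace` rather than `%` formatting so that other percent signs in a URI (for example percent-encoded spaces) are left untouched.

```python
def expand_multiuri(uri_template: str, num_sources: int) -> list:
    """Expand a type=3 uri containing %d into one URI per index 0..num_sources-1."""
    return [uri_template.replace("%d", str(i)) for i in range(num_sources)]


def smart_rec_start_ok(start_time_s: int, cache_s: int) -> bool:
    """Recording from t0 - N needs the cache (seconds) to be greater than N."""
    return 0 <= start_time_s < cache_s


print(expand_multiuri("file:///home/ubuntu/source_%d.mp4", 2))
print(smart_rec_start_ok(5, 20))   # smart-rec-start-time=5, smart-rec-cache=20
```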

Source-list and Source-attr-all Groups

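The source-list group lets the user provide an initial list of source URIs to start streaming. Together with [source-attr-all], this group removes the need for a separate [source] group for each stream.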

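In addition, DeepStream supports dynamic sensor provisioning through a REST API (alpha feature). For more details on this feature, refer to the sample configuration file and the run command for the DeepStream reference application described here.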

For example:

[source-list]
num-source-bins=2
list=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4;file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h265.mp4
sgie-batch-size=8
...

[source-attr-all]
enable=1
type=3
num-sources=1
gpu-id=0
cudadec-memtype=0
latency=100
rtsp-reconnect-interval-sec=0
...

The [source-list] group properties are:

Source List group

Key

Meaning

Type and Value

Example

Platforms

enable

Enables or disables the source.

Boolean

enable=1

dGPU, Jetson

num-source-bins

The total number of source URIs provided with the list key.

Integer

num-source-bins=2

dGPU, Jetson

sgie-batch-size

Batch size for the secondary GIEs: the number of sources multiplied by a fair estimate of the number of objects detected per frame per source. This estimate is assumed to be 4.

Integer

sgie-batch-size=8

dGPU, Jetson

list

The list of URIs, separated by semicolons (;).

String

list=rtsp://ip-address:port/stream1;rtsp://ip-address:port/stream2

dGPU, Jetson

sensor-id-list

(Alpha Feature) Applicable only when use-nvmultiurisrcbin=1. The list of unique identifiers for each stream, separated by semicolons (;).

String

sensor-id-list=UniqueSensorId1;UniqueSensorId2

dGPU

use-nvmultiurisrcbin

(Alpha Feature) If set, enables the use of nvmultiurisrcbin with REST API support for dynamic sensor provisioning.

Boolean

use-nvmultiurisrcbin=0(default)

dGPU

max-batch-size

(Alpha Feature) Applicable only when use-nvmultiurisrcbin=1. Sets the maximum number of sensors that can be streamed using this instance of DeepStream

Integer

max-batch-size=10

dGPU

http-ip

(Alpha Feature) Applicable only when use-nvmultiurisrcbin=1. The HTTP Endpoint IP address to use

String

http-ip=localhost (default)

dGPU

http-port

(Alpha Feature) Applicable only when use-nvmultiurisrcbin=1. The HTTP Endpoint port number to use. Note: User may pass empty string to disable REST API server

String

http-port=9001 (default)

dGPU

The [source-attr-all] group supports all properties of the Source group except uri. A sample configuration file can be found at /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-test5/configs/test5_config_file_nvmultiurisrcbin_src_list_attr_all.txt.
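The list key holds semicolon-separated URIs, and num-source-bins is their count. The table defines sgie-batch-size as the number of sources times an assumed 4 objects per frame per source, so num-source-bins=2 gives sgie-batch-size=8. The helpers below are a hypothetical sketch of that bookkeeping. Skipping empty entries, for example after a trailing semicolon, is an assumption on our part.

```python
def parse_source_list(list_value: str) -> list:
    """Split the semicolon-separated [source-list] list value into URIs."""
    return [uri.strip() for uri in list_value.split(";") if uri.strip()]


def sgie_batch_size(num_sources: int, objects_per_source: int = 4) -> int:
    """Number of sources x assumed objects detected per frame per source."""
    return num_sources * objects_per_source


uris = parse_source_list("rtsp://ip-address:port/stream1;rtsp://ip-address:port/stream2")
print(len(uris), sgie_batch_size(len(uris)))   # num-source-bins and sgie-batch-size
```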

Streammux Group

The [streammux] group specifies and modifies properties of the Gst-nvstreammux plugin.

Streammux group

Key

Meaning

Type and Value

Example

Platforms

gpu-id

GPU to be used by the element in case of multiple GPUs.

Integer, ≥0

gpu-id=1

dGPU

live-source

Informs the muxer that sources are live.

Boolean

live-source=0

dGPU, Jetson

buffer-pool-size

Number of buffers in Muxer output buffer pool.

Integer, >0

buffer-pool-size=4

dGPU, Jetson

batch-size

Muxer batch size.

Integer, >0

batch-size=4

dGPU, Jetson

batched-push-timeout

Timeout, in microseconds, after which the batch is pushed once the first buffer is available, even if the complete batch has not been formed.

Integer, ≥−1

batched-push-timeout=40000

dGPU, Jetson

width

Muxer output width in pixels.

Integer, >0

width=1280

dGPU, Jetson

height

Muxer output height in pixels.

Integer, >0

height=720

dGPU, Jetson

enable-padding

Indicates whether to maintain source aspect ratio when scaling by adding black bands.

Boolean

enable-padding=0

dGPU, Jetson

nvbuf-memory-type

Type of CUDA memory the element is to allocate for output buffers.

0 (nvbuf-mem-default): a platform-specific default

1 (nvbuf-mem-cuda-pinned): pinned/host CUDA memory.

2 (nvbuf-mem-cuda-device): Device CUDA memory.

3 (nvbuf-mem-cuda-unified): Unified CUDA memory.

For dGPU: All values are valid.

For Jetson: Only 0 (zero) is valid.

Integer, 0, 1, 2, or 3

nvbuf-memory-type=3

dGPU

attach-sys-ts-as-ntp

For live sources, the muxed buffer shall have associated NvDsFrameMeta->ntp_timestamp set to system time or the server’s NTP time when streaming RTSP.

If set to 1, system timestamp will be attached as ntp timestamp.

If set to 0, ntp timestamp from rtspsrc, if available, will be attached.

Boolean

attach-sys-ts-as-ntp=0

dGPU, Jetson

config-file-path

This key is valid only for the new streammux; refer to the plugin manual section "New Gst-nvstreammux" for more information. Absolute path, or path relative to the DS config file location, of the mux configuration file.

String

config-file-path=config_mux_source30.txt

dGPU, Jetson

sync-inputs

Time-synchronizes input frames before batching them.

Boolean

sync-inputs=0 (default)

dGPU, Jetson

max-latency

Additional latency in live mode to allow upstream to take longer to produce buffers for the current position (in nanoseconds).

Integer, ≥0

max-latency=0 (default)

dGPU, Jetson

drop-pipeline-eos

Boolean property to control EOS propagation downstream from nvstreammux when all the sink pads are at EOS. (Experimental)

Boolean

drop-pipeline-eos=0(default)

dGPU, Jetson
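The streammux keys mix units: batched-push-timeout is in microseconds, while max-latency is in nanoseconds. A common rule of thumb is to set batched-push-timeout to about one frame period of the fastest source. That rule is an assumption here; the table does not state it. Under it, the example value 40000 corresponds to 25 fps. The helpers below are a hypothetical sketch of that arithmetic.

```python
def push_timeout_us(max_fps: float) -> int:
    """One frame period of the fastest source, in microseconds (rule of thumb)."""
    if max_fps <= 0:
        raise ValueError("max_fps must be positive")
    return round(1_000_000 / max_fps)


def us_to_ns(microseconds: int) -> int:
    """Convert a batched-push-timeout style value (us) to max-latency units (ns)."""
    return microseconds * 1_000


print(push_timeout_us(25))   # matches batched-push-timeout=40000
print(push_timeout_us(30))
print(us_to_ns(40000))
```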

Preprocess Group

The [pre-process] group is used to add the nvdspreprocess plugin to the pipeline. Preprocessing is supported only for the primary GIE.

Primary GIE and Secondary GIE Groups

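The DeepStream application supports multiple secondary GIEs. For each secondary GIE, a separate group named secondary-gie%d must be added to the configuration file. For example:

[primary-gie]
key1=value1
key2=value2
...

[secondary-gie1]
key1=value1
key2=value2
...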

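The primary and secondary GIE configurations are listed below. For each property, the Platforms/GIEs column indicates whether the property is valid for the primary TensorRT model, the secondary TensorRT model, or both.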

Primary and Secondary GIE* group

Key

Meaning

Type and Value

Example

Platforms/ GIEs*

enable

Indicates whether the GIE must be enabled.

Boolean

enable=1

dGPU, Jetson Both GIEs

gie-unique-id

Unique component ID to be assigned to the nvinfer instance. Used to identify metadata generated by the instance.

Integer, >0

gie-unique-id=2

Both

gpu-id

GPU to be used by the element in case of multiple GPUs.

Integer, ≥0

gpu-id=1

dGPU, Both GIEs

model-engine-file

Absolute pathname of the pre-generated serialized engine file for the model.

String

model-engine-file=../../models/Primary_Detector/resnet10.caffemodel_b4_int8.engine

Both GIEs

nvbuf-memory-type

Type of CUDA memory the element is to allocate for output buffers.

0 (nvbuf-mem-default): a platform-specific default

1 (nvbuf-mem-cuda-pinned): pinned/host CUDA memory

2 (nvbuf-mem-cuda-device): Device CUDA memory

3 (nvbuf-mem-cuda-unified): Unified CUDA memory

For dGPU: All values are valid.

For Jetson: Only 0 (zero) is valid.

Integer, 0, 1, 2, or 3

nvbuf-memory-type=3

dGPU, Jetson Primary GIE

config-file

Pathname of a configuration file which specifies properties for the Gst-nvinfer plugin. It may contain any of the properties described in this table except config-file itself. Properties must be defined in a group named [property]. For more details about parameters see “Gst-nvinfer File Configuration Specifications” in the DeepStream 4.0 Plugin Manual.

String

config-file=/home/ubuntu/config_infer_resnet.txt

For complete examples, see the sample file samples/configs/deepstream-app/config_infer_resnet.txt or the deepstream-test2 sample application.

dGPU, Jetson Both GIEs

batch-size

The number of frames(P.GIE)/objects(S.GIE) to be inferred together in a batch.

Integer, >0

batch-size=2

dGPU, Jetson Both GIEs

interval

Number of consecutive batches to skip for inference.

Integer, >0

interval=2

dGPU, Jetson Primary GIE

bbox-border-color

The color of the borders for the objects of a specific class ID, specified in RGBA format. The key must be of the form bbox-border-color<class-id>. This property can be specified multiple times for multiple class IDs. If it is not specified for a class ID, borders are not drawn for objects of that class ID.

R;G;B;A Float, 0≤R,G,B,A≤1

bbox-border-color2=1;0;0;1 (Red for class-id 2)

dGPU, Jetson Both GIEs

bbox-bg-color

The color of the boxes drawn over objects of a specific class ID, in RGBA format. The key must be of the form bbox-bg-color<class-id>. This property can be used multiple times for multiple class IDs. If it is not used for a class ID, boxes are not drawn for objects of that class ID.

R;G;B;A Float, 0≤R,G,B,A≤1

bbox-bg-color3=0;1;0;0.3 (Semi-transparent green for class-id 3)

dGPU, Jetson Both GIEs

operate-on-gie-id

A unique ID of the GIE, on whose metadata (NvDsFrameMeta) this GIE is to operate.

Integer, >0

operate-on-gie-id=1

dGPU, Jetson Secondary GIE

operate-on-class-ids

Class IDs of the parent GIE on which this GIE must operate. The parent GIE is specified using operate-on-gie-id.

Semicolon separated integer array

operate-on-class-ids=1;2 (operate on objects with class IDs 1, 2 generated by parent GIE)

dGPU, Jetson Secondary GIE

infer-raw-output-dir

Pathname of an existing directory in which to dump the raw inference buffer contents in a file.

String

infer-raw-output-dir=/home/ubuntu/infer_raw_out

dGPU, Jetson Both GIEs

labelfile-path

Pathname of the labelfile.

String

labelfile-path=../../models/Primary_Detector/labels.txt

dGPU, Jetson Both GIEs

plugin-type

Plugin to use for inference.

0: nvinfer (TensorRT)

1: nvinferserver (Triton Inference Server)

Integer, 0 or 1

plugin-type=1

dGPU, Jetson Both GIEs

input-tensor-meta

Use preprocessed input tensors attached as metadata by nvdspreprocess plugin instead of preprocessing inside the nvinfer.

Integer, 0 or 1

input-tensor-meta=1

dGPU, Jetson, Primary GIE

Note

*GIE stands for GPU Inference Engine.
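Several GIE keys pack values into strings. bbox-border-color<class-id> and bbox-bg-color<class-id> carry the class ID in the key name and use an R;G;B;A value with each component between 0 and 1. operate-on-class-ids is a semicolon-separated integer array. The parsers below are a hypothetical sketch of those formats and are not part of DeepStream.

```python
def parse_rgba(value: str) -> tuple:
    """Parse 'R;G;B;A' (e.g. 0;1;0;0.3) into four floats in [0, 1]."""
    parts = [float(p) for p in value.split(";")]
    if len(parts) != 4:
        raise ValueError(f"expected 4 components, got {len(parts)}")
    if any(not 0.0 <= p <= 1.0 for p in parts):
        raise ValueError("each component must be between 0 and 1")
    return tuple(parts)


def class_id_from_key(key: str, prefix: str) -> int:
    """Extract the class ID suffix, e.g. bbox-border-color2 -> 2."""
    if not key.startswith(prefix):
        raise ValueError(f"{key!r} does not start with {prefix!r}")
    return int(key[len(prefix):])


def parse_class_ids(value: str) -> list:
    """Parse operate-on-class-ids=1;2 into [1, 2]."""
    return [int(p) for p in value.split(";") if p.strip()]


print(class_id_from_key("bbox-bg-color3", "bbox-bg-color"), parse_rgba("0;1;0;0.3"))
print(parse_class_ids("1;2"))
```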

Tracker Group

The tracker group properties include the following; more details can be found in the Gst Properties section of the tracker plugin:

Tracker group

Key

Meaning

Type and Value

Example

Platforms

enable

Enables or disables the tracker.

Boolean

enable=1

dGPU, Jetson

tracker-width

Frame width at which the tracker will operate, in pixels.

Integer, ≥0

tracker-width=960

dGPU, Jetson

tracker-height

Frame height at which the tracker will operate, in pixels.

Integer, ≥0

tracker-height=544

dGPU, Jetson

gpu-id

GPU to be used by the element in case of multiple GPUs.

Integer, ≥0

gpu-id=1

dGPU

ll-config-file

Pathname for the low-level tracker configuration file.

String

ll-config-file=iou_config.txt

dGPU, Jetson

ll-lib-file

Pathname for the low-level tracker implementation library.

String

ll-lib-file=/usr/local/deepstream/libnvds_mot_iou.so

dGPU, Jetson

enable-batch-process

Enables batch processing across multiple streams.

Boolean

enable-batch-process=1

dGPU, Jetson

enable-past-frame

Enables reporting of past-frame data.

Boolean

enable-past-frame=1

dGPU, Jetson

tracking-surface-type

Sets the surface stream type for tracking (default: 0).

Integer, ≥0

tracking-surface-type=0

dGPU, Jetson

display-tracking-id

Enables tracking id display.

Boolean

display-tracking-id=1

dGPU, Jetson

Message Converter Group

The message converter group properties are:

Message converter group

Key

Meaning

Type and Value

Example

Platforms

enable

Enables or disables the message converter.

Boolean

enable=1

dGPU, Jetson

msg-conv-config

Pathname of the configuration file for the Gst-nvmsgconv element.

String

msg-conv-config=dstest5_msgconv_sample_config.txt

dGPU, Jetson

msg-conv-payload-type

Type of payload.

0, PAYLOAD_DEEPSTREAM: Deepstream schema payload.

1, PAYLOAD_DEEPSTREAM_MINIMAL: Deepstream schema payload minimal.

256, PAYLOAD_RESERVED: Reserved type.

257, PAYLOAD_CUSTOM: Custom schema payload.

Integer 0, 1, 256, or 257

msg-conv-payload-type=0

dGPU, Jetson

msg-conv-msg2p-lib

Absolute pathname of an optional custom payload generation library. This library implements the API defined by sources/libs/nvmsgconv/nvmsgconv.h.

String

msg-conv-msg2p-lib=/opt/nvidia/deepstream/deepstream-4.0/lib/libnvds_msgconv.so

dGPU, Jetson

msg-conv-comp-id

The comp-id Gst property of the gst-nvmsgconv element: the ID of the component that attaches the NvDsEventMsgMeta to be processed by the gst-nvmsgconv element.

Integer, >=0

msg-conv-comp-id=1

dGPU, Jetson

debug-payload-dir

Directory to dump payload

String

debug-payload-dir= (default is NULL)

dGPU, Jetson

multiple-payloads

Generate multiple message payloads

Boolean

multiple-payloads=1 (default is 0)

dGPU, Jetson

msg-conv-msg2p-newapi

Generate payloads using Gst buffer frame/object metadata

Boolean

msg-conv-msg2p-newapi=1 (default is 0)

dGPU, Jetson

msg-conv-frame-interval

Frame interval at which payload is generated

Integer, 1 to 4,294,967,295

msg-conv-frame-interval=25 (default is 30)

dGPU, Jetson
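msg-conv-payload-type accepts only the four values listed above. The sink table additionally notes that a custom msg2p library applies only to PAYLOAD_CUSTOM (257). The enum below mirrors that list as a hypothetical validation helper. It is not a DeepStream API.

```python
from enum import IntEnum


class PayloadType(IntEnum):
    """Values accepted by msg-conv-payload-type, as listed in the table."""
    PAYLOAD_DEEPSTREAM = 0
    PAYLOAD_DEEPSTREAM_MINIMAL = 1
    PAYLOAD_RESERVED = 256
    PAYLOAD_CUSTOM = 257


def payload_type_from_config(value: str) -> PayloadType:
    """Convert the config string; raises ValueError for unsupported values."""
    return PayloadType(int(value))


def needs_custom_lib(payload_type: PayloadType) -> bool:
    """msg-conv-msg2p-lib is only applicable to custom payloads."""
    return payload_type is PayloadType.PAYLOAD_CUSTOM


pt = payload_type_from_config("0")
print(pt.name, needs_custom_lib(pt))
```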

Message Consumer Group

The message consumer group properties are:

Message consumer group

Key

Meaning

Type and Value

Example

Platforms

enable

Enables or disables the message consumer.

Boolean

enable=1

dGPU, Jetson

proto-lib

Path to the library having protocol adapter implementation.

String

proto-lib=/opt/nvidia/deepstream/deepstream-4.0/lib/libnvds_kafka_proto.so

dGPU, Jetson

conn-str

Connection string of the server.

String

conn-str=foo.bar.com;80

dGPU, Jetson

config-file

Path to the file containing additional configuration for the protocol adapter.

String

config-file=../cfg_kafka.txt

dGPU, Jetson

subscribe-topic-list

List of topics to subscribe.

String

subscribe-topic-list=topic1;topic2;topic3

dGPU, Jetson

sensor-list-file

File containing mappings from sensor index to sensor name.

String

sensor-list-file=dstest5_msgconv_sample_config.txt

dGPU, Jetson
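conn-str=foo.bar.com;80 and the sink's msg-broker-conn-str=foo.bar.com;80;dsapp both look like semicolon-separated host and port fields, with an optional trailing field. subscribe-topic-list is likewise semicolon-separated. The sketch below splits both. The meaning of any trailing field is an assumption: it is left to the protocol adapter, and the tables do not define it.

```python
def parse_conn_str(conn_str: str):
    """Split 'host;port[;extra...]' into (host, port, extra_fields)."""
    parts = conn_str.split(";")
    if len(parts) < 2:
        raise ValueError("expected at least host;port")
    host, port = parts[0], int(parts[1])
    extra = parts[2:]          # adapter-specific, e.g. 'dsapp' in the sink example
    return host, port, extra


def parse_topics(value: str) -> list:
    """Split subscribe-topic-list=topic1;topic2;topic3 into topic names."""
    return [topic for topic in value.split(";") if topic]


print(parse_conn_str("foo.bar.com;80"))
print(parse_topics("topic1;topic2;topic3"))
```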

OSD Group

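The OSD group specifies properties and modifies the behavior of the OSD component, which overlays text and rectangles on the video frame.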

OSD group

Key

Meaning

Type and Value

Example

Platforms

enable

Enables or disables the On-Screen Display (OSD).

Boolean

enable=1

dGPU, Jetson

gpu-id

GPU to be used by the element in case of multiple GPUs.

Integer, ≥0

gpu-id=1

dGPU

border-width

Border width of the bounding boxes drawn for objects, in pixels.

Integer, ≥0

border-width=10

dGPU, Jetson

border-color

Border color of the bounding boxes drawn for objects.

R;G;B;A Float, 0≤R,G,B,A≤1

border-color=0;0;0.7;1 #Dark Blue

dGPU, Jetson

text-size

Size of the text that describes the objects, in points.

Integer, ≥0

text-size=16

dGPU, Jetson

text-color

The color of the text that describes the objects, in RGBA format.

R;G;B;A Float, 0≤R,G,B,A≤1

text-color=0;0;0.7;1 #Dark Blue

dGPU, Jetson

text-bg-color

The background color of the text that describes the objects, in RGBA format.

R;G;B;A Float, 0≤R,G,B,A≤1

text-bg-color=0;0;0;0.5 #Semi-transparent black

dGPU, Jetson

clock-text-size

The size of the clock time text, in points.

Integer, >0

clock-text-size=16

dGPU, Jetson

clock-x-offset

The horizontal offset of the clock time text, in pixels.

Integer, >0

clock-x-offset=100

dGPU, Jetson

clock-y-offset

The vertical offset of the clock time text, in pixels.

Integer, >0

clock-y-offset=100

dGPU, Jetson

font

Name of the font for text that describes the objects.

String

font=Purisa

dGPU, Jetson

Enter the shell command fc-list to display the names of available fonts.

clock-color

Color of the clock time text, in RGBA format.

R;G;B;A Float, 0≤R,G,B,A≤1

clock-color=1;0;0;1 #Red

dGPU, Jetson

nvbuf-memory-type

Type of CUDA memory the element is to allocate for output buffers.

0 (nvbuf-mem-default): a platform-specific default

1 (nvbuf-mem-cuda-pinned): pinned/host CUDA memory

2 (nvbuf-mem-cuda-device): Device CUDA memory

3 (nvbuf-mem-cuda-unified): Unified CUDA memory

For dGPU: All values are valid.

For Jetson: Only 0 (zero) is valid.

Integer, 0, 1, 2, or 3

nvbuf-memory-type=3

dGPU

process-mode

NvOSD processing mode.

0: CPU

1: GPU

Integer, 0, 1, or 2

process-mode=1

dGPU, Jetson

display-text

Indicates whether to display text.

Boolean

display-text=1

dGPU, Jetson

display-bbox

Indicates whether to display bounding boxes.

Boolean

display-bbox=1

dGPU, Jetson

display-mask

Indicates whether to display instance masks.

Boolean

display-mask=1

dGPU, Jetson

Sink Group

The sink group specifies properties and modifies the behavior of the sink components used for rendering, encoding, and file saving.

Sink group

Key

Meaning

Type and Value

Example

Platforms

enable

Enables or disables the sink.

Boolean

enable=1

dGPU, Jetson

type

Type of sink to use.

1: Fakesink

2: EGL based windowed nveglglessink for dGPU and nv3dsink for Jetson

3: Encode + File Save (encoder + muxer + filesink)

4: Encode + RTSP streaming. Note: sync=1 is not applicable for this type.

5: nvdrmvideosink (Jetson only)

6: Message converter + Message broker

Integer, 1, 2, 3, 4, 5 or 6

type=2

dGPU, Jetson

sync

Indicates how fast the stream is to be rendered.

0: As fast as possible

1: Synchronously

Integer, 0 or 1

sync=1

dGPU, Jetson

qos

Indicates whether the sink is to generate Quality-of-Service events, which can lead to the pipeline dropping frames when pipeline FPS cannot keep up with the stream frame rate.

Boolean

qos=0

dGPU, Jetson

source-id

The ID of the source whose buffers this sink must use. The source ID is contained in the source group name. For example, for group [source1] source-id=1.

Integer, ≥0

source-id=1

dGPU, Jetson

gpu-id

GPU to be used by the element in case of multiple GPUs.

Integer, ≥0

gpu-id=1

dGPU

container

Container to use for the saved file. Only valid for type=3.

1: MP4

2: MKV

Integer, 1 or 2

container=1

dGPU, Jetson

codec

The encoder to be used to save the file.

1: H.264 (hardware)

2: H.265 (hardware)

Integer, 1 or 2

codec=1

dGPU, Jetson

bitrate

Bitrate to use for encoding, in bits per second. Valid for type=3 and 4.

Integer, >0

bitrate=4000000

dGPU, Jetson

iframeinterval

Encoding intra-frame occurrence frequency.

Integer, 0≤iv≤MAX_INT

iframeinterval=30

dGPU, Jetson

output-file

Pathname of the output encoded file. Only valid for type=3.

String

output-file=/home/ubuntu/output.mp4

dGPU, Jetson

nvbuf-memory-type

Type of CUDA memory the plugin is to allocate for output buffers.

0 (nvbuf-mem-default): a platform-specific default

1 (nvbuf-mem-cuda-pinned): pinned/host CUDA memory

2 (nvbuf-mem-cuda-device): Device CUDA memory

3 (nvbuf-mem-cuda-unified): Unified CUDA memory

For dGPU: All values are valid.

For Jetson: Only 0 (zero) is valid.

Integer, 0, 1, 2, or 3

nvbuf-memory-type=3

dGPU, Jetson

rtsp-port

Port for the RTSP streaming server; a valid unused port number. Valid for type=4.

Integer

rtsp-port=8554

dGPU, Jetson

udp-port

Port used internally by the streaming implementation - a valid unused port number. Valid for type=4.

Integer

udp-port=5400

dGPU, Jetson

conn-id

Connection index. Valid for nvdrmvideosink(type=5).

Integer, >=1

conn-id=0

Jetson

width

Width of the renderer in pixels.

Integer, >=1

width=1920

dGPU, Jetson

height

Height of the renderer in pixels.

Integer, >=1

height=1920

dGPU, Jetson

offset-x

Horizontal offset of the renderer window, in pixels.

Integer, >=1

offset-x=100

dGPU, Jetson

offset-y

Vertical offset of the renderer window, in pixels.

Integer, >=1

offset-y=100

dGPU, Jetson

plane-id

Plane on which video should be rendered. Valid for nvdrmvideosink(type=5).

Integer, ≥0

plane-id=0

Jetson

msg-conv-config

Pathname of the configuration file for the Gst-nvmsgconv element (type=6).

String

msg-conv-config=dstest5_msgconv_sample_config.txt

dGPU, Jetson

msg-broker-proto-lib

Path to the protocol adapter implementation used by Gst-nvmsgbroker (type=6).

String

msg-broker-proto-lib=/opt/nvidia/deepstream/deepstream-5.0/lib/libnvds_amqp_proto.so

dGPU, Jetson

msg-broker-conn-str

Connection string of the backend server (type=6).

String

msg-broker-conn-str=foo.bar.com;80;dsapp

dGPU, Jetson

topic

Name of the message topic (type=6).

String

topic=test-ds4

dGPU, Jetson

msg-conv-payload-type

Type of payload.

0, PAYLOAD_DEEPSTREAM: DeepStream schema payload.

1, PAYLOAD_DEEPSTREAM_MINIMAL: DeepStream schema payload minimal.

256, PAYLOAD_RESERVED: Reserved type.

257, PAYLOAD_CUSTOM: Custom schema payload (type=6).

Integer 0, 1, 256, or 257

msg-conv-payload-type=0

dGPU, Jetson

msg-broker-config

Pathname of an optional configuration file for the Gst-nvmsgbroker element (type=6).

String

msg-broker-config=/home/ubuntu/cfg_amqp.txt

dGPU, Jetson

new-api

Whether to use the protocol adapter library APIs directly or the new msgbroker library wrapper APIs (type=6).

Integer

0: Use the adapter APIs directly

1: Use the msgbroker library wrapper APIs

new-api=0

dGPU, Jetson

msg-conv-msg2p-lib

Absolute pathname of an optional custom payload generation library. This library implements the API defined by sources/libs/nvmsgconv/nvmsgconv.h. Applicable only when msg-conv-payload-type=257, PAYLOAD_CUSTOM. (type=6)

String

msg-conv-msg2p-lib=/opt/nvidia/deepstream/deepstream-4.0/lib/libnvds_msgconv.so

dGPU, Jetson

msg-conv-comp-id

comp-id Gst property of the nvmsgconv element; ID (gie-unique-id) of the primary/secondary-gie component from which metadata is to be processed. (type=6)

Integer, >=0

msg-conv-comp-id=1

dGPU, Jetson

msg-broker-comp-id

comp-id Gst property of the nvmsgbroker element; ID (gie-unique-id) of the primary/secondary gie component from which metadata is to be processed. (type=6)

Integer, >=0

msg-broker-comp-id=1

dGPU, Jetson

debug-payload-dir

Directory to dump payload (type=6)

String

debug-payload-dir= (default: NULL)

dGPU, Jetson

multiple-payloads

Generate multiple message payloads (type=6)

Boolean

multiple-payloads=1 (default: 0)

dGPU, Jetson

msg-conv-msg2p-newapi

Generate payloads using Gst buffer frame/object metadata (type=6)

Boolean

msg-conv-msg2p-newapi=1 (default: 0)

dGPU, Jetson

msg-conv-frame-interval

Frame interval at which payload is generated (type=6)

Integer, 1 to 4,294,967,295

msg-conv-frame-interval=25 (default: 30)

dGPU, Jetson

disable-msgconv

Add only a message broker component instead of a message converter plus a message broker (type=6).

Integer

disable-msgconv = 1

dGPU, Jetson

enc-type

Engine to use for encoder

0: NVENC hardware engine

1: CPU software encoder

Integer, 0 or 1

enc-type=0

dGPU, Jetson

profile (HW)

Encoder profile for the codec. V4L2 H264 encoder (HW):

0: Baseline

2: Main

4: High

V4L2 H265 encoder(HW):

0: Main

1: Main10

Integer; valid values are listed in the Meaning column

profile=2

dGPU, Jetson

udp-buffer-size

UDP kernel buffer size (in bytes) for internal RTSP output pipeline.

Integer, >=0

udp-buffer-size=100000

dGPU, Jetson

link-to-demux

A boolean which enables or disables streaming a particular “source-id” alone to this sink.

Please check the tiled-display group enable key for more information.

Boolean

link-to-demux=0

dGPU, Jetson
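Several sink-group keys above accept only a few specific values. The following C sketch checks three of them: msg-conv-payload-type, msg-conv-frame-interval, and the hardware encoder profile. The constant and function names (PAYLOAD_*, payload_type_is_valid, payload_count, hw_profile_is_valid) are hypothetical and not part of the DeepStream API, and the frame-interval counting rule (one payload on every frame whose number is a multiple of the interval) is an assumption made for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical constants mirroring the documented msg-conv-payload-type values. */
enum {
    PAYLOAD_DEEPSTREAM = 0,
    PAYLOAD_DEEPSTREAM_MINIMAL = 1,
    PAYLOAD_RESERVED = 256,
    PAYLOAD_CUSTOM = 257 /* the only type for which msg-conv-msg2p-lib applies */
};

/* msg-conv-payload-type: accept only the four documented values. */
static bool payload_type_is_valid(int value)
{
    return value == PAYLOAD_DEEPSTREAM || value == PAYLOAD_DEEPSTREAM_MINIMAL ||
           value == PAYLOAD_RESERVED || value == PAYLOAD_CUSTOM;
}

/* msg-conv-frame-interval: number of payloads for frames 0..total_frames-1,
   ASSUMING one payload on every frame whose number is a multiple of interval.
   This equals ceil(total_frames / interval). */
static uint64_t payload_count(uint64_t total_frames, uint32_t interval)
{
    if (interval == 0)
        return 0; /* outside the documented range 1..4,294,967,295 */
    return (total_frames + interval - 1) / interval;
}

/* profile (HW): values listed for the V4L2 hardware encoders.
   codec_is_h265 selects the H265 list; otherwise the H264 list is used. */
static bool hw_profile_is_valid(bool codec_is_h265, int profile)
{
    if (codec_is_h265)
        return profile == 0 || profile == 1;             /* Main, Main10 */
    return profile == 0 || profile == 2 || profile == 4; /* Baseline, Main, High */
}
```

Under this counting rule, a 30 fps stream with the default msg-conv-frame-interval=30 produces roughly one payload per second per source.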

Tests group

The Tests group is used for diagnostics and debugging.

Tests group

Key

Meaning

Type and Value

Example

Platforms

file-loop

Indicates whether input files should be looped infinitely.

Boolean

file-loop=1

dGPU, Jetson

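NvDs-analytics group

The [nvds-analytics] group is used to add the nvds-analytics plugin to the pipeline.

Note

For plugin-specific configuration file specifications, refer to the DeepStream Plugin Guide (Gst-nvdspreprocess, Gst-nvinfer, Gst-nvtracker, Gst-nvdewarper, Gst-nvmsgconv, Gst-nvmsgbroker, and Gst-nvdsanalytics plugins).

Application Tuning for DeepStream SDK

This section provides application tuning tips for the DeepStream SDK, based on the following parameters in the configuration file.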

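Performance Optimization

This section describes various performance optimization steps you can try to get the best performance.

DeepStream Best Practices

Here are a few best practices for optimizing a DeepStream application to remove application bottlenecks: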

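1. Set the batch size of streammux and of the primary detector equal to the number of input sources. These settings are under the [streammux] and [primary-gie] groups of the config file. This keeps the pipeline running at full capacity. A batch size higher or lower than the number of input sources can sometimes add latency to the pipeline.

2. Set the height and width of streammux to the input resolution. This is set under the [streammux] group of the config file and ensures that the stream does not go through any unwanted image scaling.

3. If you are streaming from a live source such as RTSP or a USB camera, set live-source=1 in the [streammux] group of the config file. This sets appropriate timestamps for live sources, which results in smoother playback.

4. Tiling and visual output can consume GPU resources. When you do not need to render the output on screen, you can disable three things to maximize throughput. For example, rendering is not needed when you run inference at the edge and send only the metadata to the cloud for further processing.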

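- Disable the OSD (on-screen display). The OSD plugin draws bounding boxes and other artifacts and adds labels to the output frames. To disable the OSD, set enable=0 in the [osd] group of the config file.

- The tiler creates an NxM grid to display the output streams. To disable tiled output, set enable=0 in the [tiled-display] group of the config file.

- Disable the rendering output sink: choose fakesink, i.e., type=1 in the [sink] group of the config file. All performance benchmarks in the performance section were run with tiling, OSD, and the output sink disabled.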

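5. If CPU/GPU utilization is low, one possible cause is that elements in the pipeline are starved for buffers. In that case, try increasing the number of buffers allocated by the decoder by setting the num-extra-surfaces property of the [source#] group in the application, or the num-extra-surfaces property of Gst-nvv4l2decoder.

6. If you run the application inside a docker console and it delivers low FPS, set qos=0 in the [sink0] group of the config file. The issue is caused by initial load: with qos set to 1, the default value of this property in the [sink0] group, decodebin starts dropping frames.

7. If you want to optimize the end-to-end latency of the processing pipeline, you can use the latency measurement method in DeepStream.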

- To enable frame latency measurement, run this command on the console:

$ export NVDS_ENABLE_LATENCY_MEASUREMENT=1

- To enable latency measurement for all plugins, run this command on the console:

$ export NVDS_ENABLE_COMPONENT_LATENCY_MEASUREMENT=1

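Note

When measuring frame latency with the DeepStream latency API, if you observe very large frame latency values on the order of 10^12 (1e12), modify the latency measurement code (the call to the nvds_measure_buffer_latency API) as follows:

    ...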

    /* Measure per-source latency for the frames in this batch. */
    guint num_sources_in_batch = nvds_measure_buffer_latency(buf, latency_info);

    /* An implausibly large value means the measurement went wrong:
       reverse the batch-level user meta list and measure again. */
    if (num_sources_in_batch > 0 && latency_info[0].latency > 1e6) {
      NvDsBatchMeta *batch_meta = gst_buffer_get_nvds_batch_meta (buf);
      batch_meta->batch_user_meta_list = g_list_reverse (batch_meta->batch_user_meta_list);
      num_sources_in_batch = nvds_measure_buffer_latency(buf, latency_info);
    }

    ...

Jetson Optimization

1. Ensure that the Jetson clocks are set high. Run these commands to set the Jetson clocks high:

$ sudo nvpmodel -m <mode>    # <mode> is 0 for MAX perf and power mode

$ sudo jetson_clocks

Note

For NX, use mode 2.

2. On Jetson, use Gst-nvdrmvideosink instead of Gst-nv3dsink, because nv3dsink requires GPU utilization.

Triton

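- If you use Triton with DeepStream, tune the tf_gpu_memory_fraction value, which controls TensorFlow GPU memory usage per process; the suggested range is [0.2, 0.6]. Too large a value can cause out-of-memory errors, and too small a value can cause low performance.

- Enable TensorRT optimization when using TensorFlow or ONNX with Triton. Update the Triton config file to enable TensorFlow/ONNX TensorRT online optimization. This takes several minutes at each initialization. Alternatively, you can generate TF-TRT graphdef/savedmodel models offline.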
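As a rough illustration of the memory-fraction advice, the following C sketch checks a set of per-process tf_gpu_memory_fraction values. It assumes that all Triton processes share one GPU and that their fractions simply add up, and it ignores memory used by anything else on the device. The function name and constants are hypothetical and not part of Triton or DeepStream.

```c
#include <stdbool.h>
#include <stddef.h>

/* Suggested range for tf_gpu_memory_fraction, taken from the text above. */
#define TF_FRACTION_MIN 0.2
#define TF_FRACTION_MAX 0.6

/* Hypothetical check: every per-process fraction lies in the suggested range
   and, ASSUMING all processes share one GPU, the fractions together do not
   exceed the whole device (1.0). */
static bool tf_fractions_plausible(const double *fractions, size_t n)
{
    double total = 0.0;
    for (size_t i = 0; i < n; i++) {
        if (fractions[i] < TF_FRACTION_MIN || fractions[i] > TF_FRACTION_MAX)
            return false; /* outside the suggested range */
        total += fractions[i];
    }
    return total <= 1.0; /* more than 1.0 would oversubscribe the GPU */
}
```

For example, two processes at 0.4 each pass the check, while two processes at 0.6 each would together request 1.2 of the GPU and fail it.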

Inference Throughput

Here are a few steps to help you increase the channel density of your application:

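- If you are using Jetson Xavier or Xavier NX, you can use the DLA (Deep Learning Accelerator) for inference. This frees up the GPU for other models or more streams.

- With DeepStream, you can infer on every other frame or every third frame and use a tracker to predict the object locations in between. This takes only a simple config file change. You can use one of the 3 available trackers to track objects in the frame. In the inference config file, change the interval parameter under [property]. This is a skip interval: the number of frames to skip between inferences. An interval of 0 means inference on every frame, and an interval of 1 means skip 1 frame and infer on every other frame. Going from interval 0 to 1 can effectively double your overall channel throughput.

- Choose a lower precision, FP16 or INT8, for inference. FP16 does not require a new model; it is a simple change in DeepStream. To switch, update the network-mode option in the inference config file. To run INT8, you need an INT8 calibration cache that contains the FP32-to-INT8 quantization table.

- A DeepStream application can also be configured with cascaded neural networks: the first network performs detection, and a second network then classifies the detected objects. To enable secondary inference, enable the secondary-gie in the config file and set an appropriate batch size. The batch size depends on how many objects are typically sent from the primary inference to the secondary inference, so you will have to experiment to find the right value for your use case. To reduce the number of secondary classifier inferences, filter the objects to infer by setting input-object-min-width, input-object-min-height, input-object-max-width, input-object-max-height, operate-on-gie-id, and operate-on-class-ids appropriately.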
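The two throughput levers described above can be sketched in C. frames_inferred shows the arithmetic behind the interval key (inference on every interval + 1 frames, counting frame 0 as inferred), and sgie_should_infer approximates how the listed keys narrow the objects passed to a secondary classifier. The struct layouts and function names are hypothetical, and the rules "an empty class list means all classes" and "a max of 0 means no limit" are assumptions for this sketch; this is not Gst-nvinfer's actual implementation.

```c
#include <stdbool.h>
#include <stddef.h>

/* interval = number of frames skipped between inferences, so inference runs
   on every (interval + 1)-th frame. Counts inferred frames among
   frames 0..total_frames-1, assuming frame 0 is always inferred. */
static unsigned long frames_inferred(unsigned long total_frames, unsigned interval)
{
    unsigned long period = (unsigned long)interval + 1;
    return (total_frames + period - 1) / period;
}

/* Hypothetical object record produced by an upstream GIE. */
typedef struct {
    int gie_id;        /* unique ID of the GIE that produced the object */
    int class_id;
    int width, height; /* bounding-box size in pixels */
} object_t;

/* Hypothetical filter mirroring the secondary-GIE keys named above. */
typedef struct {
    int operate_on_gie_id;
    const int *class_ids;  /* operate-on-class-ids */
    size_t n_class_ids;    /* 0 = accept every class (assumption) */
    int min_w, min_h;      /* input-object-min-width/height */
    int max_w, max_h;      /* input-object-max-width/height, 0 = no limit (assumption) */
} sgie_filter_t;

/* Returns true if the object should be sent to the secondary classifier. */
static bool sgie_should_infer(const object_t *o, const sgie_filter_t *f)
{
    if (o->gie_id != f->operate_on_gie_id)
        return false;

    bool class_ok = (f->n_class_ids == 0);
    for (size_t i = 0; i < f->n_class_ids; i++) {
        if (o->class_id == f->class_ids[i]) {
            class_ok = true;
            break;
        }
    }
    if (!class_ok)
        return false;

    if (o->width < f->min_w || o->height < f->min_h)
        return false; /* too small to classify reliably */
    if ((f->max_w > 0 && o->width > f->max_w) ||
        (f->max_h > 0 && o->height > f->max_h))
        return false; /* larger than the configured maximum */
    return true;
}
```

With 30 frames, interval=0 gives 30 inferences and interval=1 gives 15, which is where the roughly 2x channel throughput comes from; every object rejected by the filter is one fewer secondary inference.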

Reducing Spurious Detections

Reducing spurious detections

Configuration Parameter

Description

Use Case

threshold

Per-class-threshold of primary detector. Increasing the threshold restricts output to objects with higher detection confidence.

—

roi-top-offset roi-bottom-offset

Per-class top/bottom region of interest (roi) offset. Restricts output to objects in a specified region of the frame.

To reduce spurious detections seen on the dashboard of dashcams

detected-min-w detected-min-h detected-max-w detected-max-h

Per-class min/max object width/height for the primary detector. Restricts output to objects of the specified size.

To reduce false detections, for example, a tree being detected as a person
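The following C sketch shows how the parameters in the table could combine to discard a detection. It assumes that a detection must reach the class threshold, lie entirely between roi-top-offset (measured from the top of the frame) and roi-bottom-offset (measured from the bottom), and fall within the min/max size limits, with a max of 0 meaning no limit. The types and function name are hypothetical; Gst-nvinfer's exact rules may differ.

```c
#include <stdbool.h>

/* Hypothetical detection record; pixel coordinates, origin at top-left. */
typedef struct {
    float left, top, width, height;
    float confidence;
} detection_t;

/* Hypothetical per-class settings mirroring the keys in the table. */
typedef struct {
    float threshold;                        /* threshold */
    int roi_top_offset, roi_bottom_offset;  /* roi-top-offset, roi-bottom-offset */
    int min_w, min_h;                       /* detected-min-w, detected-min-h */
    int max_w, max_h;                       /* detected-max-w/h, 0 = no limit (assumption) */
} class_filter_t;

/* Returns true if the detection survives all per-class filters. */
static bool keep_detection(const detection_t *d, const class_filter_t *c, int frame_height)
{
    if (d->confidence < c->threshold)
        return false; /* not confident enough */
    if (d->top < c->roi_top_offset)
        return false; /* starts above the region of interest */
    if (d->top + d->height > frame_height - c->roi_bottom_offset)
        return false; /* reaches into the excluded bottom band, e.g. a dashboard */
    if (d->width < c->min_w || d->height < c->min_h)
        return false; /* too small */
    if ((c->max_w > 0 && d->width > c->max_w) ||
        (c->max_h > 0 && d->height > c->max_h))
        return false; /* too large */
    return true;
}
```

In a dashcam setup, a roi-bottom-offset covering the dashboard region removes detections that extend into it, while the size limits catch implausible boxes such as a tree detected as a person.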


Original article: https://blog.csdn.net/FREEDOM_X/article/details/139655175