-

EFK环境搭建(基于K8S环境部署)

一.环境信息

1.服务器及k8s版本

IP地址 主机名称 角色 版本 192.168.40.180 master1 master节点 1.27 192.168.40.181 node1 node1节点 1.27 192.168.40.182 node2 node2节点 1.27 2.部署组件版本

序号 名称 版本 作用 1 elasticsearch 7.12.1 是一个实时的分布式搜索和分析引擎,它可以用于全文搜索,结构化搜索以及分析。 2 kibana 7.12.1 为 Elasticsearch 提供了分析和 Web 可视化界面,并生成各种维度表格、图形 3 fluentd v1.16 是一个数据收集引擎,主要用于进行数据收集、解析,并将数据发送给ES 4 nfs-client-provisioner v4.0.0 nfs供应商 master1和node2节点上传fluentd组件 node1节点上传全部的组件

链接:https://pan.baidu.com/s/1u2U87Jp4TzJxs7nfVqKM-w

提取码:fcpp

–来自百度网盘超级会员V4的分享

链接:https://pan.baidu.com/s/1ttMaqmeVNpOAJD8G3-6tag

提取码:qho8

–来自百度网盘超级会员V4的分享

链接:https://pan.baidu.com/s/1ttMaqmeVNpOAJD8G3-6tag

提取码:qho8

链接:https://pan.baidu.com/s/1cQSkGz0NO_rrulas2EYv5Q

提取码:rxjx

–来自百度网盘超级会员V4的分享二.安装nfs供应商

1.安装nfs服务

三个节点都操作yum -y install nfs-utils- 1

2.启动nfs服务并设置开机自启

三个节点都操作# 开启服务 systemctl start nfs # 设置开机自启 systemctl enable nfs.service- 1

- 2

- 3

- 4

3.在master1上创建一个共享目录

# 创建目录 mkdir /data/v1 -p # 编辑/etc/exports文件 vim /etc/exports /data/v1 *(rw,no_root_squash) #加载配置,使文件生效 exportfs -arv systemctl restart nfs- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

4.创建nfs作为存储的供应商

master1上执行

4.1创建运行nfs-provisioner需要的账号vim serviceaccount.yaml --- apiVersion: v1 kind: ServiceAccount metadata: name: nfs-provisioner- 1

- 2

- 3

- 4

- 5

- 6

执行配置

kubectl apply -f serviceaccount.yaml- 1

4.2对sa授权

kubectl create clusterrolebinding nfs-provisioner-clusterrolebinding --clusterrole=cluster-admin --serviceaccount=default:nfs-provisioner- 1

把nfs-subdir-external-provisioner.tar.gz上传到node1上,手动解压。

ctr -n=k8s.io images import nfs-subdir-external-provisioner.tar.gz- 1

4.3通过deployment创建pod用来运行nfs-provisioner

vim deployment.yaml --- kind: Deployment apiVersion: apps/v1 metadata: name: nfs-provisioner spec: selector: matchLabels: app: nfs-provisioner replicas: 1 strategy: type: Recreate template: metadata: labels: app: nfs-provisioner spec: serviceAccount: nfs-provisioner containers: - name: nfs-provisioner image: registry.cn-beijing.aliyuncs.com/mydlq/nfs-subdir-external-provisioner:v4.0.0 imagePullPolicy: IfNotPresent volumeMounts: - name: nfs-client-root mountPath: /persistentvolumes env: - name: PROVISIONER_NAME value: example.com/nfs - name: NFS_SERVER value: 192.168.40.180 #这个需要写nfs服务端所在的ip地址,大家需要写自己安装了nfs服务的机器ip - name: NFS_PATH value: /data/v1 #这个是nfs服务端共享的目录 volumes: - name: nfs-client-root nfs: server: 192.168.40.180 path: /data/v1- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

执行配置文件

kubectl apply -f deployment.yaml- 1

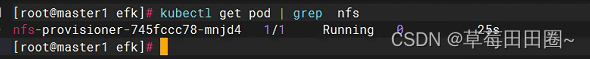

查看是否创建成功

kubectl get pods | grep nfs- 1

5.创建存储类storgeclass

mster1上执行vim es_class.yaml --- apiVersion: storage.k8s.io/v1 kind: StorageClass metadata: name: do-block-storage provisioner: example.com/nfs- 1

- 2

- 3

- 4

- 5

- 6

- 7

执行配置

kubectl apply -f es_class.yaml- 1

三.安装elasticsearch

在master1上执行

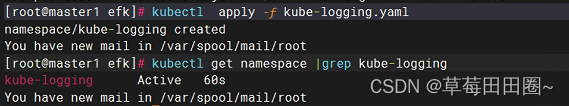

1.创建kube-logging名称空间vim kube-logging.yaml --- kind: Namespace apiVersion: v1 metadata: name: kube-logging- 1

- 2

- 3

- 4

- 5

- 6

执行配置

kubectl apply -f kube-logging.yaml- 1

2.安装elasticsearch组件vim elasticsearch_svc.yaml --- kind: Service apiVersion: v1 metadata: name: elasticsearch namespace: kube-logging labels: app: elasticsearch spec: selector: app: elasticsearch clusterIP: None ports: - port: 9200 name: rest - port: 9300 name: inter-node- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

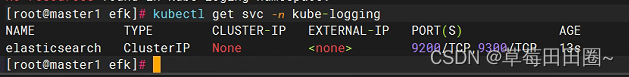

执行配置文件

kubectl apply -f elasticsearch_svc.yaml- 1

3.创建statefulset资源vim elasticsearch-statefulset.yaml --- apiVersion: apps/v1 kind: StatefulSet metadata: name: es-cluster namespace: kube-logging spec: serviceName: elasticsearch replicas: 3 selector: matchLabels: app: elasticsearch template: metadata: labels: app: elasticsearch spec: containers: - name: elasticsearch image: docker.io/library/elasticsearch:7.12.1 imagePullPolicy: IfNotPresent resources: limits: cpu: 1000m requests: cpu: 100m ports: - containerPort: 9200 name: rest protocol: TCP - containerPort: 9300 name: inter-node protocol: TCP volumeMounts: - name: data mountPath: /usr/share/elasticsearch/data env: - name: cluster.name value: k8s-logs - name: node.name valueFrom: fieldRef: fieldPath: metadata.name - name: discovery.seed_hosts value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch" - name: cluster.initial_master_nodes value: "es-cluster-0,es-cluster-1,es-cluster-2" - name: ES_JAVA_OPTS value: "-Xms512m -Xmx512m" initContainers: - name: fix-permissions image: busybox imagePullPolicy: IfNotPresent command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"] securityContext: privileged: true volumeMounts: - name: data mountPath: /usr/share/elasticsearch/data - name: increase-vm-max-map image: busybox imagePullPolicy: IfNotPresent command: ["sysctl", "-w", "vm.max_map_count=262144"] securityContext: privileged: true - name: increase-fd-ulimit image: busybox imagePullPolicy: IfNotPresent command: ["sh", "-c", "ulimit -n 65536"] securityContext: privileged: true volumeClaimTemplates: - metadata: name: data labels: app: elasticsearch spec: accessModes: [ "ReadWriteOnce" ] storageClassName: do-block-storage resources: requests: storage: 10Gi- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

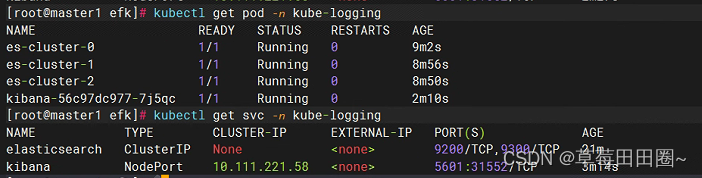

执行配置

kubectl apply -f elasticsearch-statefulset.yaml- 1

四.安装kibana组件

vim kibana.yaml --- apiVersion: v1 kind: Service metadata: name: kibana namespace: kube-logging labels: app: kibana spec: ports: - port: 5601 type: NodePort selector: app: kibana --- apiVersion: apps/v1 kind: Deployment metadata: name: kibana namespace: kube-logging labels: app: kibana spec: replicas: 1 selector: matchLabels: app: kibana template: metadata: labels: app: kibana spec: containers: - name: kibana image: docker.io/library/kibana:7.12.1 imagePullPolicy: IfNotPresent resources: limits: cpu: 1000m requests: cpu: 100m env: - name: ELASTICSEARCH_URL value: http://elasticsearch:9200 ports: - containerPort: 5601- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

执行配置

kubectl apply -f kibana.yaml- 1

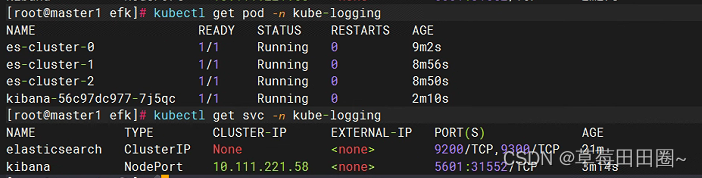

在浏览器中打开http://<k8s集群任意节点IP>:31552即可,如果看到如下欢迎界面证明 Kibana 已经成功部署到了Kubernetes集群之中。

五.安装fluentd

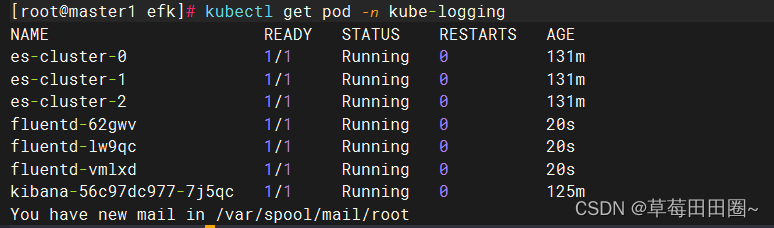

我们使用daemonset控制器部署fluentd组件,这样可以保证集群中的每个节点都可以运行同样fluentd的pod副本,这样就可以收集k8s集群中每个节点的日志,在k8s集群中,容器应用程序的输入输出日志会重定向到node节点里的json文件中,fluentd可以tail和过滤以及把日志转换成指定的格式发送到elasticsearch集群中。除了容器日志,fluentd也可以采集kubelet、kube-proxy、docker的日志。

vim fluentd.yaml --- kind: ServiceAccount metadata: name: fluentd namespace: kube-logging labels: app: fluentd --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: fluentd labels: app: fluentd rules: - apiGroups: - "" resources: - pods - namespaces verbs: - get - list - watch --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: fluentd roleRef: kind: ClusterRole name: fluentd apiGroup: rbac.authorization.k8s.io subjects: - kind: ServiceAccount name: fluentd namespace: kube-logging --- apiVersion: apps/v1 kind: DaemonSet metadata: name: fluentd namespace: kube-logging labels: app: fluentd spec: selector: matchLabels: app: fluentd template: metadata: labels: app: fluentd spec: serviceAccount: fluentd serviceAccountName: fluentd tolerations: - key: node-role.kubernetes.io/control-plane effect: NoSchedule containers: - name: fluentd image: docker.io/fluent/fluentd-kubernetes-daemonset:v1.16-debian-elasticsearch7-1 imagePullPolicy: IfNotPresent env: - name: FLUENT_ELASTICSEARCH_HOST value: "elasticsearch.kube-logging.svc.cluster.local" - name: FLUENT_ELASTICSEARCH_PORT value: "9200" - name: FLUENT_ELASTICSEARCH_SCHEME value: "http" - name: FLUENTD_SYSTEMD_CONF value: disable - name: FLUENT_CONTAINER_TAIL_PARSER_TYPE value: "cri" - name: FLUENT_CONTAINER_TAIL_PARSER_TIME_FORMAT value: "%Y-%m-%dT%H:%M:%S.%L%z" resources: limits: memory: 512Mi requests: cpu: 100m memory: 200Mi volumeMounts: - name: varlog mountPath: /var/log - name: containers mountPath: /var/log/containers readOnly: true terminationGracePeriodSeconds: 30 volumes: - name: varlog hostPath: path: /var/log - name: containers hostPath: path: /var/log/containers- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

执行配置

kubectl apply -f fluentd.yaml- 1

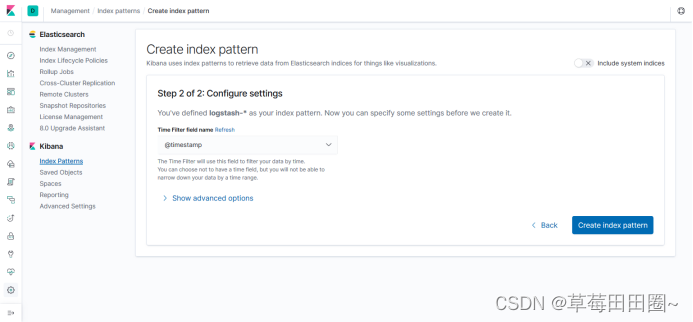

Fluentd 启动成功后,我们可以前往 Kibana 的 Dashboard 页面中,点击左侧的Discover,可以看到如下配置页面:

-

相关阅读:

Nginx配置ssl证书实现https访问

盒模型(非要让我凑满五个字标题)

吴恩达:如何系统学习机器学习?

数据分析思维与模型:相关分析法

供应原厂电流继电器 - HBDLX-21/3 整定电流范围0.1-1.09A AC220V

Android 动画

文件夹图片相似图片检测并删除相似图片

校招面试数据库原理知识复习总结

uniCloud云开发获取小程序用户openid

华为OD机考--TVL解码--GPU算力--猴子爬台阶--两个数组前K对最小和--勾股数C++实现

- 原文地址:https://blog.csdn.net/m0_71163619/article/details/137886257