
Flink learning journey (part 2)

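YARN from the Hadoop ecosystem is a common resource manager for running Flink, so the first step is to set up Hadoop and start YARN and HDFS. The tutorials I found all describe a multi-node cluster, but I only have one machine, so everything below is installed on that single machine.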

1. Download the Hadoop release tarball:

https://dlcdn.apache.org/hadoop/common/hadoop-3.3.5/hadoop-3.3.5.tar.gz
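Before unpacking, it is worth checking the download against the hadoop-3.3.5.tar.gz.sha512 checksum file that Apache publishes alongside each release (Apache recommends taking checksums from its main download site rather than from a mirror). This is a minimal sketch, assuming GNU coreutils' sha512sum is available; it accepts both the BSD-style and GNU-style checksum layouts, and the function names are hypothetical helpers of my own, not part of Hadoop.

```shell
# expected_sha512 CHECKSUM_FILE
# Prints the published digest in lowercase hex. Accepts the BSD-style
# "SHA512 (name) = <hex>" layout and the GNU-style "<hex>  name" layout.
expected_sha512() {
    if grep -q '=' "$1"; then
        # BSD style: keep the hex run after the last "="
        sed -n 's/.*= *\([0-9A-Fa-f]*\).*/\1/p' "$1" | head -n 1
    else
        # GNU style: the digest is the first field
        awk 'NR == 1 { print $1 }' "$1"
    fi | tr 'A-F' 'a-f'
}

# verify_sha512 TARBALL CHECKSUM_FILE
# Returns 0 only if the tarball's SHA-512 (computed by sha512sum) matches.
verify_sha512() {
    expected=$(expected_sha512 "$2")
    actual=$(sha512sum "$1" | awk '{ print $1 }')
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Usage (both files downloaded into the current directory):
#   verify_sha512 hadoop-3.3.5.tar.gz hadoop-3.3.5.tar.gz.sha512 && echo "checksum OK"
```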

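2. Extract it to a directory of your choice. I used /root/dxy/hadoop, so Hadoop ends up in /root/dxy/hadoop/hadoop-3.3.5.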
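Step 3 works inside /root/dxy/hadoop/hadoop-3.3.5, so the archive is unpacked under /root/dxy/hadoop. A small wrapper around tar that does this and prints the resulting Hadoop directory is sketched below; extract_hadoop is a hypothetical helper, and it assumes the archive has a single top-level directory.

```shell
# extract_hadoop TARBALL DEST_DIR
# Unpacks a .tar.gz release into DEST_DIR (created if missing) and prints
# the directory it produced. Assumes the archive has a single top-level
# directory.
extract_hadoop() {
    mkdir -p "$2" || return 1
    tar -xzf "$1" -C "$2" || return 1
    # The first archive entry starts with the top-level directory name
    top=$(tar -tzf "$1" | head -n 1 | cut -d/ -f1)
    printf '%s/%s\n' "$2" "$top"
}

# Usage (same effect as: tar -zxf hadoop-3.3.5.tar.gz -C /root/dxy/hadoop):
#   extract_hadoop hadoop-3.3.5.tar.gz /root/dxy/hadoop
```

The printed path is the directory that HADOOP_HOME should point to later.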

3. Edit the Hadoop configuration files, which live in etc/hadoop:

cd /root/dxy/hadoop/hadoop-3.3.5/etc/hadoop

vi core-site.xml (the core configuration file):

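```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- Default file system: the NameNode's RPC address -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://10.26.141.203:8020</value>
    </property>
    <!-- Base directory for Hadoop's local data -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/root/dxy/hadoop/hadoop-3.3.5/datas</value>
    </property>
    <!-- User the web UIs act as, e.g. when browsing HDFS -->
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>root</value>
    </property>
</configuration>
```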
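Every property needs its closing name, value and property tags, and a single missing one breaks the file. One way to avoid hand-typing mistakes is to generate the file. The sketch below (write_site_xml is a hypothetical helper, not a Hadoop tool) writes the same header and layout as the file above from NAME VALUE pairs; it does no XML escaping, so values must not contain &, < or >.

```shell
# write_site_xml FILE NAME VALUE [NAME VALUE ...]
# Writes a Hadoop *-site.xml with one property per NAME/VALUE pair, emitting
# every opening tag together with its closing tag.
# Values are written verbatim (no escaping of &, < or >).
write_site_xml() {
    out=$1
    shift
    if [ $(( $# % 2 )) -ne 0 ]; then
        echo "write_site_xml: expected NAME VALUE pairs" >&2
        return 1
    fi
    {
        echo '<?xml version="1.0" encoding="UTF-8"?>'
        echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
        echo '<configuration>'
        while [ $# -gt 0 ]; do
            printf '    <property>\n        <name>%s</name>\n        <value>%s</value>\n    </property>\n' "$1" "$2"
            shift 2
        done
        echo '</configuration>'
    } > "$out"
}

# Usage, reproducing core-site.xml above:
#   write_site_xml core-site.xml \
#       fs.defaultFS hdfs://10.26.141.203:8020 \
#       hadoop.tmp.dir /root/dxy/hadoop/hadoop-3.3.5/datas \
#       hadoop.http.staticuser.user root
```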

vi hdfs-site.xml (HDFS settings):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- NameNode web UI -->
    <property>
        <name>dfs.namenode.http-address</name>
        <value>10.26.141.203:9870</value>
    </property>
    <!-- SecondaryNameNode web UI -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>10.26.141.203:9868</value>
    </property>
</configuration>
```
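To read a value back out of one of these files without starting anything, a line-based awk lookup is enough for files laid out like the ones above. This is a rough sketch: get_site_property is a hypothetical name and it is not an XML parser. Once Hadoop is on the PATH, hdfs getconf -confKey dfs.namenode.http-address reports the value Hadoop itself resolves, defaults included.

```shell
# get_site_property FILE KEY
# Prints the value of property KEY from a Hadoop *-site.xml; returns 1 if
# the key is absent. Line-based, not a full XML parser: it expects each
# name and value element to sit on a single line.
get_site_property() {
    awk -v key="$2" '
        /<name>/  { n = $0; sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n) }
        /<value>/ { v = $0; sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v) }
        /<\/property>/ {
            if (n == key) { print v; found = 1; exit }
            n = ""; v = ""
        }
        END { if (!found) exit 1 }
    ' "$1"
}

# Usage:
#   get_site_property hdfs-site.xml dfs.namenode.http-address
```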

vi yarn-site.xml (YARN settings):

```xml
<?xml version="1.0"?>
<configuration>
    <!-- Auxiliary shuffle service used by MapReduce jobs -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <!-- Host running the ResourceManager -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>10.26.141.203</value>
    </property>
    <!-- Variables containers may override instead of using NodeManager defaults -->
    <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
    </property>
</configuration>
```

vim mapred-site.xml (MapReduce settings):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- Run MapReduce jobs on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```
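Missing closing tags are an easy mistake in these four files, and Hadoop commands fail with a parse error if any of them is not valid XML. As a rough check, the sketch below compares opening and closing tag counts for the four tags used here. check_site_xml is a hypothetical helper; a real parser such as xmllint --noout (from libxml2, if installed) is the stricter test.

```shell
# check_site_xml FILE...
# Heuristic well-formedness check: for each tag used in Hadoop *-site.xml
# files, the number of opening and closing tags must match.
# Prints each mismatch to stderr and returns 1 if any file fails.
check_site_xml() {
    status=0
    for f in "$@"; do
        for tag in configuration property name value; do
            opens=$(grep -o "<$tag>" "$f" | wc -l)
            closes=$(grep -o "</$tag>" "$f" | wc -l)
            if [ "$opens" -ne "$closes" ]; then
                echo "$f: $opens <$tag> vs $closes </$tag>" >&2
                status=1
            fi
        done
    done
    return $status
}

# Usage, from /root/dxy/hadoop/hadoop-3.3.5/etc/hadoop:
#   check_site_xml core-site.xml hdfs-site.xml yarn-site.xml mapred-site.xml
```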

vi workers and enter the server IP, for example:

10.26.141.203

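Set the environment variables (append these lines to /etc/profile):

```shell
export HADOOP_HOME=/root/dxy/hadoop/hadoop-3.3.5
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop
# Runs the hadoop command, so it must come after the PATH lines above
export HADOOP_CLASSPATH=`hadoop classpath`
```

Then reload the profile:

```shell
source /etc/profile
```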
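After reloading the profile, the sketch below checks that the variables actually ended up in the current shell: HADOOP_HOME points at an install with bin/hadoop, both bin and sbin are on PATH, HADOOP_CONF_DIR exists, and HADOOP_CLASSPATH is non-empty (Flink's YARN integration expects it to be set). check_hadoop_env is a hypothetical helper name.

```shell
# check_hadoop_env
# Sanity-checks the Hadoop variables in the current shell.
# Prints the first problem found to stderr and returns 1; returns 0 if all pass.
check_hadoop_env() {
    if [ -z "${HADOOP_HOME:-}" ] || [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
        echo "HADOOP_HOME is unset or has no executable bin/hadoop" >&2
        return 1
    fi
    # Wrap PATH in colons so each entry can be matched exactly
    case ":$PATH:" in
        *":$HADOOP_HOME/bin:"*) ;;
        *) echo "PATH lacks $HADOOP_HOME/bin" >&2; return 1 ;;
    esac
    case ":$PATH:" in
        *":$HADOOP_HOME/sbin:"*) ;;
        *) echo "PATH lacks $HADOOP_HOME/sbin" >&2; return 1 ;;
    esac
    if [ ! -d "${HADOOP_CONF_DIR:-}" ]; then
        echo "HADOOP_CONF_DIR is not a directory" >&2
        return 1
    fi
    if [ -z "${HADOOP_CLASSPATH:-}" ]; then
        echo "HADOOP_CLASSPATH is empty" >&2
        return 1
    fi
}

# Usage:
#   source /etc/profile && check_hadoop_env && echo "environment OK"
```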

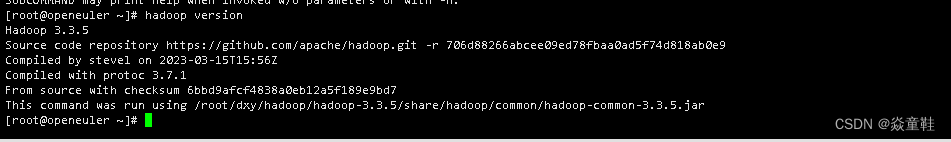

When this is done, run hadoop version to confirm that the setup works.

The NameNode must be formatted before HDFS is started for the first time:

hdfs namenode -format
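Formatting should happen only once. Re-running it gives the NameNode a new clusterID, and a DataNode that still holds data from the old one then refuses to register (its log reports incompatible clusterIDs). The guard below skips the format when the NameNode directory already contains current/VERSION. It assumes dfs.namenode.name.dir is left at Hadoop's default of file://${hadoop.tmp.dir}/dfs/name, which with the core-site.xml above is /root/dxy/hadoop/hadoop-3.3.5/datas/dfs/name; format_namenode_once is a hypothetical helper.

```shell
# format_namenode_once NAME_DIR
# Runs "hdfs namenode -format" only if NAME_DIR has never been formatted
# (no current/VERSION file yet); otherwise prints a note and does nothing.
format_namenode_once() {
    if [ -f "$1/current/VERSION" ]; then
        echo "NameNode already formatted at $1, skipping" >&2
        return 0
    fi
    hdfs namenode -format
}

# Usage, assuming the default dfs.namenode.name.dir:
#   format_namenode_once /root/dxy/hadoop/hadoop-3.3.5/datas/dfs/name
```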

Start HDFS and YARN from the Hadoop directory (/root/dxy/hadoop/hadoop-3.3.5):

sbin/start-dfs.sh

sbin/start-yarn.sh
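With workers listing only this machine, start-dfs.sh should start a NameNode, DataNode and SecondaryNameNode here, and start-yarn.sh a ResourceManager and NodeManager. The JDK's jps tool lists running JVMs as "pid ClassName", so a quick check is to pipe its output into the sketch below (check_daemons is a hypothetical helper).

```shell
# check_daemons < JPS_OUTPUT
# Reads "jps" output on stdin, reports missing single-node Hadoop daemons
# on stderr, and returns 0 only if all five are running.
check_daemons() {
    running=$(awk '{ print $2 }')
    missing=0
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -x: exact line match, so "NameNode" does not match "SecondaryNameNode"
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "missing: $d" >&2
            missing=1
        fi
    done
    return $missing
}

# Usage:
#   jps | check_daemons && echo "all daemons up"
```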

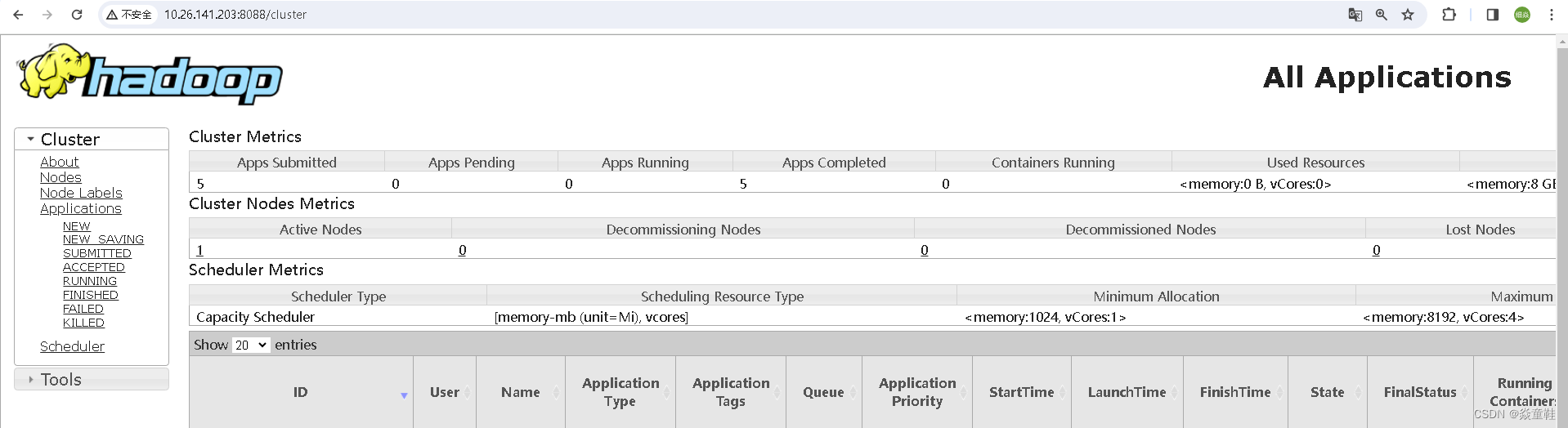

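Check in the web UIs that everything started: the NameNode UI at http://10.26.141.203:9870 (set in hdfs-site.xml) and the YARN ResourceManager UI at http://10.26.141.203:8088 (YARN's default port).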
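The same check can be scripted. This sketch assumes curl is installed and treats any 2xx or 3xx response within five seconds as "up"; port 9870 comes from hdfs-site.xml above and 8088 is the default port of the YARN ResourceManager web UI. probe_ui and check_web_uis are hypothetical helpers.

```shell
# probe_ui URL
# Returns 0 if URL answers with an HTTP 2xx/3xx status within 5 seconds.
# Connection failures (or a missing curl) count as "down".
probe_ui() {
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$1") || return 1
    case "$code" in
        2??|3??) return 0 ;;
        *) return 1 ;;
    esac
}

# check_web_uis HOST
# Prints "up:" or "down:" for the NameNode (9870) and ResourceManager (8088) UIs.
check_web_uis() {
    for url in "http://$1:9870" "http://$1:8088"; do
        if probe_ui "$url"; then echo "up:   $url"; else echo "down: $url"; fi
    done
}

# Usage:
#   check_web_uis 10.26.141.203
```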

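Finally, submit a Flink job to YARN in Application Mode. Run this from the Flink installation directory with HADOOP_CLASSPATH set as above; -t yarn-application selects the deployment target and -c names the job's main class: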

bin/flink run-application -t yarn-application -c com.dxy.learn.demo.SocketStreamWordCount FlinkLearn-1.0-SNAPSHOT.jar
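The post does not show the source of com.dxy.learn.demo.SocketStreamWordCount. Judging by its name it is likely the usual socket word count (read lines from a socket, split them into words, key by word, sum the counts). If so, a listener such as nc -lk must already be running on the host and port hard-coded in the job before it is submitted, and, if the job uses print(), the results show up in the TaskManager's stdout rather than in your terminal. That reading of the job is an assumption. To show what such a job emits, the awk sketch below reproduces only the counting semantics, with no Flink involved: every incoming word produces an updated (word,count) record, not one final total. running_word_count is a hypothetical helper.

```shell
# running_word_count < TEXT
# For every whitespace-separated word, prints "(word,count)" where count is
# the running total for that word so far: one update per occurrence, the
# way a streaming word count keyed by word emits results.
running_word_count() {
    awk '{
        for (i = 1; i <= NF; i++) {
            counts[$i]++
            printf "(%s,%d)\n", $i, counts[$i]
        }
        fflush()   # flush after each line so results appear as input arrives
    }'
}

# Usage:
#   printf 'hello flink\nhello world\n' | running_word_count
# prints (hello,1) (flink,1) (hello,2) (world,1), one per line.
```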



Original article: https://blog.csdn.net/u012440725/article/details/135775326