[x265 Source Code Analysis Series]: Overview

Introduction

-

x265 也属于 VLC 的 project。

-

版本: x265-3.5(TAG-208)

-

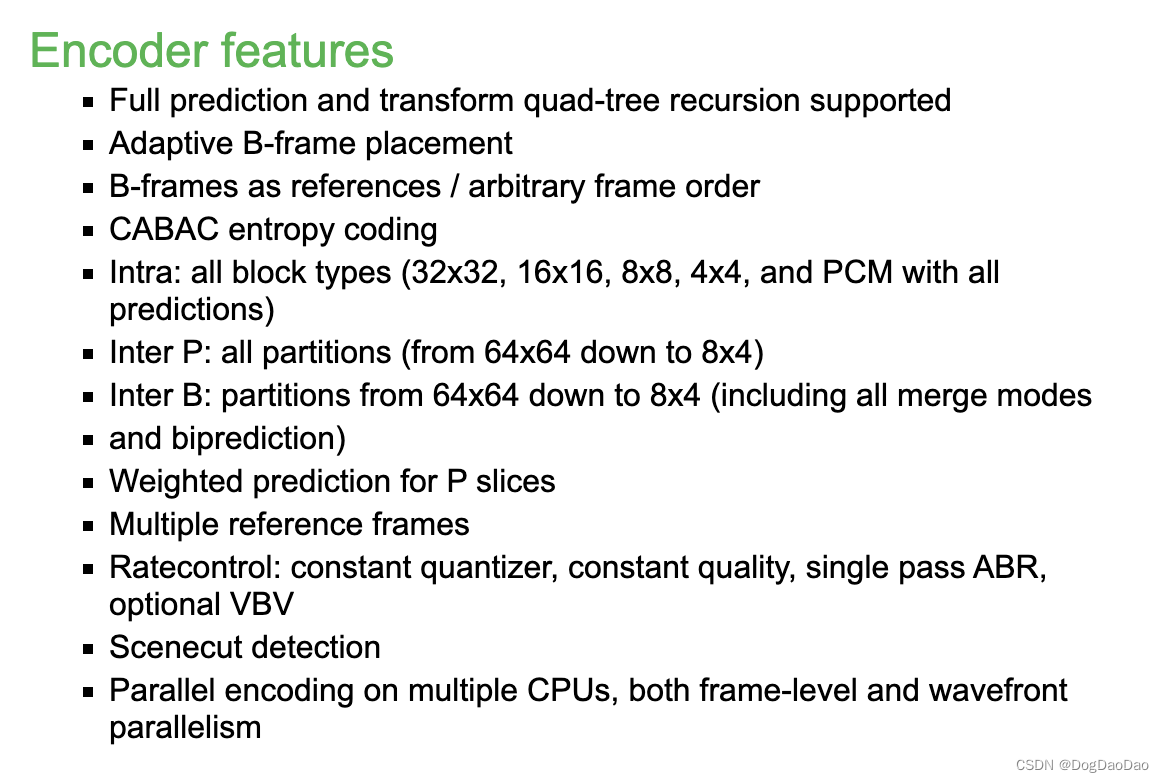

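Encoding characteristics:

- After spending some time studying the HEVC coding standard, I recently started looking at x265, an open-source encoder that conforms to HEVC. This article gives a brief walkthrough of the x265 code structure.

- x265 is written in C++, whereas x264 is written in C.

- For an introduction to the HEVC standard, see "Introduction to the HEVC Coding Standard" (HEVC编码标准介绍).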

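Function call graph

The call relationships in x265, from the main function down to the API, are as follows:

The x265 command-line program

The x265 command-line program calls the libx265 library to encode raw YUV video into an H.265 (HEVC) bitstream.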

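The entry point is the main() function. Encoding is carried out mainly through the CLIOptions struct and the AbrEncoder class: CLIOptions parses the command line and the encoding parameters, and AbrEncoder performs the actual encoding work.

AbrEncoder drives the core encoding class, PassEncoder, through worker threads. When an AbrEncoder is created with new, its constructor creates the PassEncoder objects (also with new), calls init() on each of them, and starts the PassEncoder worker threads with startThreads(). At the end, destroy() and delete release the resources. PassEncoder::init() mainly calls the API function encoder_open(m_param) to open the encoder. PassEncoder::startThreads() uses the control variable m_threadActive (true or false) to activate the thread main function threadMain(). PassEncoder::threadMain() copies the api pointer held in CLIOptions and does the core video encoding work through its API functions encoder_headers(), picture_init(), encoder_encode(), encoder_get_stats(), encoder_log(), encoder_close(), and param_free(). AbrEncoder::destroy() mainly calls destroy() on each PassEncoder to stop the worker threads.

The main() function

main() is the body of the executable that ties the external command line to the internal encoder.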

    /* CLI return codes:
     *
     * 0 - encode successful
     * 1 - unable to parse command line
     * 2 - unable to open encoder
     * 3 - unable to generate stream headers
     * 4 - encoder abort */

    int main(int argc, char **argv)
    {
    #if HAVE_VLD
        // This uses Microsoft's proprietary WCHAR type, but this only builds on Windows to start with
        VLDSetReportOptions(VLD_OPT_REPORT_TO_DEBUGGER | VLD_OPT_REPORT_TO_FILE, L"x265_leaks.txt");
    #endif
        PROFILE_INIT();
        THREAD_NAME("API", 0);

        GetConsoleTitle(orgConsoleTitle, CONSOLE_TITLE_SIZE);
        SetThreadExecutionState(ES_CONTINUOUS | ES_SYSTEM_REQUIRED | ES_AWAYMODE_REQUIRED);
    #if _WIN32
        char** orgArgv = argv;
        get_argv_utf8(&argc, &argv);
    #endif

        uint8_t numEncodes = 1;
        FILE *abrConfig = NULL;
        bool isAbrLadder = checkAbrLadder(argc, argv, &abrConfig);

        if (isAbrLadder)
            numEncodes = getNumAbrEncodes(abrConfig);

        CLIOptions* cliopt = new CLIOptions[numEncodes];

        if (isAbrLadder)
        {
            if (!parseAbrConfig(abrConfig, cliopt, numEncodes))
                exit(1);
            if (!setRefContext(cliopt, numEncodes))
                exit(1);
        }
        else if (cliopt[0].parse(argc, argv))
        {
            cliopt[0].destroy();
            if (cliopt[0].api)
                cliopt[0].api->param_free(cliopt[0].param);
            exit(1);
        }

        int ret = 0;

        if (cliopt[0].scenecutAwareQpConfig)
        {
            if (!cliopt[0].parseScenecutAwareQpConfig())
            {
                x265_log(NULL, X265_LOG_ERROR, "Unable to parse scenecut aware qp config file \n");
                fclose(cliopt[0].scenecutAwareQpConfig);
                cliopt[0].scenecutAwareQpConfig = NULL;
            }
        }

        AbrEncoder* abrEnc = new AbrEncoder(cliopt, numEncodes, ret);
        int threadsActive = abrEnc->m_numActiveEncodes.get();
        while (threadsActive)
        {
            threadsActive = abrEnc->m_numActiveEncodes.waitForChange(threadsActive);
            for (uint8_t idx = 0; idx < numEncodes; idx++)
            {
                if (abrEnc->m_passEnc[idx]->m_ret)
                {
                    if (isAbrLadder)
                        x265_log(NULL, X265_LOG_INFO, "Error generating ABR-ladder \n");
                    ret = abrEnc->m_passEnc[idx]->m_ret;
                    threadsActive = 0;
                    break;
                }
            }
        }

        abrEnc->destroy();
        delete abrEnc;

        for (uint8_t idx = 0; idx < numEncodes; idx++)
            cliopt[idx].destroy();

        delete[] cliopt;

        SetConsoleTitle(orgConsoleTitle);
        SetThreadExecutionState(ES_CONTINUOUS);

    #if _WIN32
        if (argv != orgArgv)
        {
            free(argv);
            argv = orgArgv;
        }
    #endif

    #if HAVE_VLD
        assert(VLDReportLeaks() == 0);
    #endif

        return ret;
    }

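main() blocks on abrEnc->m_numActiveEncodes.waitForChange(threadsActive) until every PassEncoder thread has decremented the counter. The x265 class behind that counter is not shown in this article, so the following is only a minimal sketch of the idea built on the C++ standard library. WaitableCounter and runAndWait are hypothetical names used for illustration, not x265 code.

```cpp
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for the counter behind m_numActiveEncodes.
class WaitableCounter
{
public:
    explicit WaitableCounter(int initial) : m_value(initial) {}

    int get()
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        return m_value;
    }

    // Decrement the value and wake every thread blocked in waitForChange().
    void decr()
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            --m_value;
        }
        m_changed.notify_all();
    }

    // Block until the value differs from prev, then return the new value.
    int waitForChange(int prev)
    {
        std::unique_lock<std::mutex> lock(m_mutex);
        m_changed.wait(lock, [&] { return m_value != prev; });
        return m_value;
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_changed;
    int m_value;
};

// Start numWorkers threads that each "finish" by decrementing the counter,
// then wait for them with the same loop shape that main() uses.
int runAndWait(int numWorkers)
{
    WaitableCounter active(numWorkers);
    std::vector<std::thread> workers;
    for (int i = 0; i < numWorkers; i++)
        workers.emplace_back([&active] { active.decr(); });

    int threadsActive = active.get();
    while (threadsActive)
        threadsActive = active.waitForChange(threadsActive);

    for (std::thread& t : workers)
        t.join();
    return threadsActive;
}
```

Calling runAndWait(3) returns 0 once all three workers have checked out. Because waitForChange() compares against the previously observed value, a decrement that happens between get() and the wait is not missed.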

The AbrEncoder constructor

    AbrEncoder::AbrEncoder(CLIOptions cliopt[], uint8_t numEncodes, int &ret)
    {
        m_numEncodes = numEncodes;
        m_numActiveEncodes.set(numEncodes);
        m_queueSize = (numEncodes > 1) ? X265_INPUT_QUEUE_SIZE : 1;
        m_passEnc = X265_MALLOC(PassEncoder*, m_numEncodes);

        for (uint8_t i = 0; i < m_numEncodes; i++)
        {
            m_passEnc[i] = new PassEncoder(i, cliopt[i], this);
            if (!m_passEnc[i])
            {
                x265_log(NULL, X265_LOG_ERROR, "Unable to allocate memory for passEncoder\n");
                ret = 4;
            }
            m_passEnc[i]->init(ret);
        }

        if (!allocBuffers())
        {
            x265_log(NULL, X265_LOG_ERROR, "Unable to allocate memory for buffers\n");
            ret = 4;
        }

        /* start passEncoder worker threads */
        for (uint8_t pass = 0; pass < m_numEncodes; pass++)
            m_passEnc[pass]->startThreads();
    }

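The constructor follows a create, init, start sequence: every PassEncoder is constructed and initialized before any worker thread is launched. As a rough illustration of that lifecycle only, here is a self-contained sketch using std::thread. Worker and Owner are hypothetical stand-ins for PassEncoder and AbrEncoder; the frame counts are made-up numbers, and x265's own thread classes are not used.

```cpp
#include <atomic>
#include <memory>
#include <thread>
#include <vector>

// Hypothetical stand-in for PassEncoder: one worker thread per encode.
class Worker
{
public:
    explicit Worker(int id) : m_id(id) {}

    // Stand-in for init(): the real class opens the encoder here.
    bool init()
    {
        m_ready = true;
        return m_ready;
    }

    // Stand-in for startThreads(): set the flag, then launch threadMain().
    void startThreads()
    {
        if (!m_ready)
            return;
        m_threadActive = true;
        m_thread = std::thread(&Worker::threadMain, this);
    }

    // Stand-in for destroy(): wait for the worker thread to finish.
    void destroy()
    {
        if (m_thread.joinable())
            m_thread.join();
    }

    int framesDone() const { return m_framesDone; }

private:
    void threadMain()
    {
        while (m_threadActive)
        {
            m_framesDone = 10 * (m_id + 1); // pretend to encode some frames
            m_threadActive = false;         // one pass, then leave the loop
        }
    }

    int m_id;
    bool m_ready = false;
    std::atomic<bool> m_threadActive{false};
    std::atomic<int> m_framesDone{0};
    std::thread m_thread;
};

// Hypothetical stand-in for AbrEncoder: owns the workers and their lifecycle.
class Owner
{
public:
    explicit Owner(int numWorkers)
    {
        // Phase 1: create and initialize every worker.
        for (int i = 0; i < numWorkers; i++)
        {
            m_workers.push_back(std::make_unique<Worker>(i));
            m_workers.back()->init();
        }
        // Phase 2: only now start the worker threads.
        for (auto& w : m_workers)
            w->startThreads();
    }

    void destroy()
    {
        for (auto& w : m_workers)
            w->destroy();
    }

    int totalFrames() const
    {
        int total = 0;
        for (const auto& w : m_workers)
            total += w->framesDone();
        return total;
    }

private:
    std::vector<std::unique_ptr<Worker>> m_workers;
};
```

Splitting creation from thread start means that, by the time any worker runs, all of its siblings already exist. That matters in the original because PassEncoder::init() reads m_parent->m_passEnc[m_id - 1] when scaling is enabled.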

PassEncoder::init()

    int PassEncoder::init(int &result)
    {
        if (m_parent->m_numEncodes > 1)
            setReuseLevel();

        if (!(m_cliopt.enableScaler && m_id))
            m_reader = new Reader(m_id, this);
        else
        {
            VideoDesc *src = NULL, *dst = NULL;
            dst = new VideoDesc(m_param->sourceWidth, m_param->sourceHeight, m_param->internalCsp, m_param->internalBitDepth);
            int dstW = m_parent->m_passEnc[m_id - 1]->m_param->sourceWidth;
            int dstH = m_parent->m_passEnc[m_id - 1]->m_param->sourceHeight;
            src = new VideoDesc(dstW, dstH, m_param->internalCsp, m_param->internalBitDepth);
            if (src != NULL && dst != NULL)
            {
                m_scaler = new Scaler(0, 1, m_id, src, dst, this);
                if (!m_scaler)
                {
                    x265_log(m_param, X265_LOG_ERROR, "\n MALLOC failure in Scaler");
                    result = 4;
                }
            }
        }

        if (m_cliopt.zoneFile)
        {
            if (!m_cliopt.parseZoneFile())
            {
                x265_log(NULL, X265_LOG_ERROR, "Unable to parse zonefile in %s\n");
                fclose(m_cliopt.zoneFile);
                m_cliopt.zoneFile = NULL;
            }
        }

        /* note: we could try to acquire a different libx265 API here based on
         * the profile found during option parsing, but it must be done before
         * opening an encoder */
        if (m_param)
            m_encoder = m_cliopt.api->encoder_open(m_param);
        if (!m_encoder)
        {
            x265_log(NULL, X265_LOG_ERROR, "x265_encoder_open() failed for Enc, \n");
            m_ret = 2;
            return -1;
        }

        /* get the encoder parameters post-initialization */
        m_cliopt.api->encoder_parameters(m_encoder, m_param);

        return 1;
    }

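init() never calls the encoder directly. Every call goes through m_cliopt.api, a table of function pointers. The sketch below imitates that calling pattern with a deliberately tiny mock so it can be compiled without libx265. MockParam, MockEncoder, MockApi, kMockApi, and openEncoder are all hypothetical, and the width rounding inside the mock exists only to make the parameter read-back visible; it is not a claim about what libx265 does.

```cpp
// Hypothetical, much-reduced stand-ins for the parameter and encoder types.
struct MockParam
{
    int sourceWidth;
    int sourceHeight;
};

struct MockEncoder
{
    MockParam param;
};

// Hypothetical stand-in for the API table: a struct of function pointers.
struct MockApi
{
    MockEncoder* (*encoder_open)(MockParam*);
    void (*encoder_parameters)(MockEncoder*, MockParam*);
    void (*encoder_close)(MockEncoder*);
};

static MockEncoder* mockOpen(MockParam* p)
{
    // Reject missing or obviously invalid parameters.
    if (!p || p->sourceWidth <= 0 || p->sourceHeight <= 0)
        return nullptr;
    MockEncoder* enc = new MockEncoder;
    enc->param = *p;
    // Made-up adjustment: round the width up to a multiple of 8.
    enc->param.sourceWidth = (p->sourceWidth + 7) / 8 * 8;
    return enc;
}

static void mockParameters(MockEncoder* enc, MockParam* out)
{
    // Copy the encoder's effective parameters back to the caller.
    *out = enc->param;
}

static void mockClose(MockEncoder* enc)
{
    delete enc;
}

const MockApi kMockApi = { mockOpen, mockParameters, mockClose };

// Same shape as init(): open, check the handle, then read the parameters back.
// Returns 0 on success or 2, the CLI code for "unable to open encoder".
int openEncoder(const MockApi& api, MockParam* param, MockEncoder** out)
{
    *out = param ? api.encoder_open(param) : nullptr;
    if (!*out)
        return 2;
    api.encoder_parameters(*out, param);
    return 0;
}
```

Routing every call through a table like this is what lets the source comment in init() talk about acquiring "a different libx265 API": only the table pointer would need to change.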

PassEncoder::threadMain()

    void PassEncoder::threadMain()
    {
        THREAD_NAME("PassEncoder", m_id);

        while (m_threadActive)
        {
    #if ENABLE_LIBVMAF
            x265_vmaf_data* vmafdata = m_cliopt.vmafData;
    #endif
            /* This allows muxers to modify bitstream format */
            m_cliopt.output->setParam(m_param);
            const x265_api* api = m_cliopt.api;
            ReconPlay* reconPlay = NULL;
            if (m_cliopt.reconPlayCmd)
                reconPlay = new ReconPlay(m_cliopt.reconPlayCmd, *m_param);
            char* profileName = m_cliopt.encName ? m_cliopt.encName : (char *)"x265";

            if (signal(SIGINT, sigint_handler) == SIG_ERR)
                x265_log(m_param, X265_LOG_ERROR, "Unable to register CTRL+C handler: %s in %s\n",
                         strerror(errno), profileName);

            x265_picture pic_orig, pic_out;
            x265_picture *pic_in = &pic_orig;
            /* Allocate recon picture if analysis save/load is enabled */
            std::priority_queue<int64_t>* pts_queue = m_cliopt.output->needPTS() ? new std::priority_queue<int64_t>() : NULL;
            x265_picture *pic_recon = (m_cliopt.recon || m_param->analysisSave || m_param->analysisLoad || pts_queue || reconPlay || m_param->csvLogLevel) ? &pic_out : NULL;
            uint32_t inFrameCount = 0;
            uint32_t outFrameCount = 0;
            x265_nal *p_nal;
            x265_stats stats;
            uint32_t nal;
            int16_t *errorBuf = NULL;
            bool bDolbyVisionRPU = false;
            uint8_t *rpuPayload = NULL;
            int inputPicNum = 1;
            x265_picture picField1, picField2;
            x265_analysis_data* analysisInfo = (x265_analysis_data*)(&pic_out.analysisData);
            bool isAbrSave = m_cliopt.saveLevel && (m_parent->m_numEncodes > 1);

            if (!m_param->bRepeatHeaders && !m_param->bEnableSvtHevc)
            {
                if (api->encoder_headers(m_encoder, &p_nal, &nal) < 0)
                {
                    x265_log(m_param, X265_LOG_ERROR, "Failure generating stream headers in %s\n", profileName);
                    m_ret = 3;
                    goto fail;
                }
                else
                    m_cliopt.totalbytes += m_cliopt.output->writeHeaders(p_nal, nal);
            }

            if (m_param->bField && m_param->interlaceMode)
            {
                api->picture_init(m_param, &picField1);
                api->picture_init(m_param, &picField2);
                // return back the original height of input
                m_param->sourceHeight *= 2;
                api->picture_init(m_param, &pic_orig);
            }
            else
                api->picture_init(m_param, &pic_orig);

            if (m_param->dolbyProfile && m_cliopt.dolbyVisionRpu)
            {
                rpuPayload = X265_MALLOC(uint8_t, 1024);
                pic_in->rpu.payload = rpuPayload;
                if (pic_in->rpu.payload)
                    bDolbyVisionRPU = true;
            }

            if (m_cliopt.bDither)
            {
                errorBuf = X265_MALLOC(int16_t, m_param->sourceWidth + 1);
                if (errorBuf)
                    memset(errorBuf, 0, (m_param->sourceWidth + 1) * sizeof(int16_t));
                else
                    m_cliopt.bDither = false;
            }

            // main encoder loop
            while (pic_in && !b_ctrl_c)
            {
                pic_orig.poc = (m_param->bField && m_param->interlaceMode) ? inFrameCount * 2 : inFrameCount;
                if (m_cliopt.qpfile)
                {
                    if (!m_cliopt.parseQPFile(pic_orig))
                    {
                        x265_log(NULL, X265_LOG_ERROR, "can't parse qpfile for frame %d in %s\n",
                                 pic_in->poc, profileName);
                        fclose(m_cliopt.qpfile);
                        m_cliopt.qpfile = NULL;
                    }
                }

                if (m_cliopt.framesToBeEncoded && inFrameCount >= m_cliopt.framesToBeEncoded)
                    pic_in = NULL;
                else if (readPicture(pic_in))
                    inFrameCount++;
                else
                    pic_in = NULL;

                if (pic_in)
                {
                    if (pic_in->bitDepth > m_param->internalBitDepth && m_cliopt.bDither)
                    {
                        x265_dither_image(pic_in, m_cliopt.input->getWidth(), m_cliopt.input->getHeight(), errorBuf, m_param->internalBitDepth);
                        pic_in->bitDepth = m_param->internalBitDepth;
                    }
                    /* Overwrite PTS */
                    pic_in->pts = pic_in->poc;

                    // convert to field
                    if (m_param->bField && m_param->interlaceMode)
                    {
                        int height = pic_in->height >> 1;

                        int static bCreated = 0;
                        if (bCreated == 0)
                        {
                            bCreated = 1;
                            inputPicNum = 2;
                            picField1.fieldNum = 1;
                            picField2.fieldNum = 2;

                            picField1.bitDepth = picField2.bitDepth = pic_in->bitDepth;
                            picField1.colorSpace = picField2.colorSpace = pic_in->colorSpace;
                            picField1.height = picField2.height = pic_in->height >> 1;
                            picField1.framesize = picField2.framesize = pic_in->framesize >> 1;

                            size_t fieldFrameSize = (size_t)pic_in->framesize >> 1;
                            char* field1Buf = X265_MALLOC(char, fieldFrameSize);
                            char* field2Buf = X265_MALLOC(char, fieldFrameSize);

                            int stride = picField1.stride[0] = picField2.stride[0] = pic_in->stride[0];
                            uint64_t framesize = stride * (height >> x265_cli_csps[pic_in->colorSpace].height[0]);
                            picField1.planes[0] = field1Buf;
                            picField2.planes[0] = field2Buf;
                            for (int i = 1; i < x265_cli_csps[pic_in->colorSpace].planes; i++)
                            {
                                picField1.planes[i] = field1Buf + framesize;
                                picField2.planes[i] = field2Buf + framesize;

                                stride = picField1.stride[i] = picField2.stride[i] = pic_in->stride[i];
                                framesize += (stride * (height >> x265_cli_csps[pic_in->colorSpace].height[i]));
                            }
                            assert(framesize == picField1.framesize);
                        }

                        picField1.pts = picField1.poc = pic_in->poc;
                        picField2.pts = picField2.poc = pic_in->poc + 1;

                        picField1.userSEI = picField2.userSEI = pic_in->userSEI;

                        //if (pic_in->userData)
                        //{
                        //    // Have to handle userData here
                        //}

                        if (pic_in->framesize)
                        {
                            for (int i = 0; i < x265_cli_csps[pic_in->colorSpace].planes; i++)
                            {
                                char* srcP1 = (char*)pic_in->planes[i];
                                char* srcP2 = (char*)pic_in->planes[i] + pic_in->stride[i];
                                char* p1 = (char*)picField1.planes[i];
                                char* p2 = (char*)picField2.planes[i];

                                int stride = picField1.stride[i];

                                for (int y = 0; y < (height >> x265_cli_csps[pic_in->colorSpace].height[i]); y++)
                                {
                                    memcpy(p1, srcP1, stride);
                                    memcpy(p2, srcP2, stride);
                                    srcP1 += 2 * stride;
                                    srcP2 += 2 * stride;
                                    p1 += stride;
                                    p2 += stride;
                                }
                            }
                        }
                    }

                    if (bDolbyVisionRPU)
                    {
                        if (m_param->bField && m_param->interlaceMode)
                        {
                            if (m_cliopt.rpuParser(&picField1) > 0)
                                goto fail;
                            if (m_cliopt.rpuParser(&picField2) > 0)
                                goto fail;
                        }
                        else
                        {
                            if (m_cliopt.rpuParser(pic_in) > 0)
                                goto fail;
                        }
                    }
                }

                for (int inputNum = 0; inputNum < inputPicNum; inputNum++)
                {
                    x265_picture *picInput = NULL;
                    if (inputPicNum == 2)
                        picInput = pic_in ? (inputNum ? &picField2 : &picField1) : NULL;
                    else
                        picInput = pic_in;

                    int numEncoded = api->encoder_encode(m_encoder, &p_nal, &nal, picInput, pic_recon);

                    int idx = (inFrameCount - 1) % m_parent->m_queueSize;
                    m_parent->m_picIdxReadCnt[m_id][idx].incr();
                    m_parent->m_picReadCnt[m_id].incr();
                    if (m_cliopt.loadLevel && picInput)
                    {
                        m_parent->m_analysisReadCnt[m_cliopt.refId].incr();
                        m_parent->m_analysisRead[m_cliopt.refId][m_lastIdx].incr();
                    }

                    if (numEncoded < 0)
                    {
                        b_ctrl_c = 1;
                        m_ret = 4;
                        break;
                    }

                    if (reconPlay && numEncoded)
                        reconPlay->writePicture(*pic_recon);

                    outFrameCount += numEncoded;

                    if (isAbrSave && numEncoded)
                    {
                        copyInfo(analysisInfo);
                    }

                    if (numEncoded && pic_recon && m_cliopt.recon)
                        m_cliopt.recon->writePicture(pic_out);
                    if (nal)
                    {
                        m_cliopt.totalbytes += m_cliopt.output->writeFrame(p_nal, nal, pic_out);
                        if (pts_queue)
                        {
                            pts_queue->push(-pic_out.pts);
                            if (pts_queue->size() > 2)
                                pts_queue->pop();
                        }
                    }
                    m_cliopt.printStatus(outFrameCount);
                }
            }

            /* Flush the encoder */
            while (!b_ctrl_c)
            {
                int numEncoded = api->encoder_encode(m_encoder, &p_nal, &nal, NULL, pic_recon);
                if (numEncoded < 0)
                {
                    m_ret = 4;
                    break;
                }

                if (reconPlay && numEncoded)
                    reconPlay->writePicture(*pic_recon);

                outFrameCount += numEncoded;
                if (isAbrSave && numEncoded)
                {
                    copyInfo(analysisInfo);
                }

                if (numEncoded && pic_recon && m_cliopt.recon)
                    m_cliopt.recon->writePicture(pic_out);
                if (nal)
                {
                    m_cliopt.totalbytes += m_cliopt.output->writeFrame(p_nal, nal, pic_out);
                    if (pts_queue)
                    {
                        pts_queue->push(-pic_out.pts);
                        if (pts_queue->size() > 2)
                            pts_queue->pop();
                    }
                }

                m_cliopt.printStatus(outFrameCount);

                if (!numEncoded)
                    break;
            }

            if (bDolbyVisionRPU)
            {
                if (fgetc(m_cliopt.dolbyVisionRpu) != EOF)
                    x265_log(NULL, X265_LOG_WARNING, "Dolby Vision RPU count is greater than frame count in %s\n",
                             profileName);
                x265_log(NULL, X265_LOG_INFO, "VES muxing with Dolby Vision RPU file successful in %s\n",
                         profileName);
            }

            /* clear progress report */
            if (m_cliopt.bProgress)
                fprintf(stderr, "%*s\r", 80, " ");

        fail:

            delete reconPlay;

            api->encoder_get_stats(m_encoder, &stats, sizeof(stats));
            if (m_param->csvfn && !b_ctrl_c)
    #if ENABLE_LIBVMAF
                api->vmaf_encoder_log(m_encoder, m_cliopt.argCnt, m_cliopt.argString, m_cliopt.param, vmafdata);
    #else
                api->encoder_log(m_encoder, m_cliopt.argCnt, m_cliopt.argString);
    #endif
            api->encoder_close(m_encoder);

            int64_t second_largest_pts = 0;
            int64_t largest_pts = 0;
            if (pts_queue && pts_queue->size() >= 2)
            {
                second_largest_pts = -pts_queue->top();
                pts_queue->pop();
                largest_pts = -pts_queue->top();
                pts_queue->pop();
                delete pts_queue;
                pts_queue = NULL;
            }
            m_cliopt.output->closeFile(largest_pts, second_largest_pts);

            if (b_ctrl_c)
                general_log(m_param, NULL, X265_LOG_INFO, "aborted at input frame %d, output frame %d in %s\n",
                            m_cliopt.seek + inFrameCount, stats.encodedPictureCount, profileName);

            api->param_free(m_param);

            X265_FREE(errorBuf);
            X265_FREE(rpuPayload);

            m_threadActive = false;
            m_parent->m_numActiveEncodes.decr();
        }
    }
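The core of threadMain() is two loops around encoder_encode(): a main loop that feeds one input picture per call, and a flush loop that passes NULL until the encoder returns nothing more. The second loop is needed because an encoder can hold pictures back, so output lags behind input. The sketch below shows that shape with a toy encoder. DelayedEncoder, its fixed delay, and encodeAll are hypothetical and only model the buffering behavior, not any real x265 internals.

```cpp
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical encoder that holds back `delay` frames before emitting any.
class DelayedEncoder
{
public:
    explicit DelayedEncoder(std::size_t delay) : m_delay(delay) {}

    // Pass a pointer to a frame number to feed input, or nullptr to flush.
    // Returns the number of frames emitted (0 or 1); the frame goes to *out.
    int encode(const int* frameIn, int* out)
    {
        if (frameIn)
            m_pending.push_back(*frameIn);

        // With input, emit only once more than `delay` frames are buffered.
        // While flushing, emit whatever is still buffered.
        bool ready = frameIn ? m_pending.size() > m_delay : !m_pending.empty();
        if (!ready)
            return 0;

        *out = m_pending.front();
        m_pending.pop_front();
        return 1;
    }

private:
    std::size_t m_delay;
    std::deque<int> m_pending;
};

// Feed numFrames inputs, then flush, following the two-loop shape of threadMain().
std::vector<int> encodeAll(int numFrames, std::size_t delay)
{
    DelayedEncoder enc(delay);
    std::vector<int> output;
    int out = 0;

    // Main loop: one input per call; output may lag behind the input.
    for (int frame = 0; frame < numFrames; frame++)
    {
        if (enc.encode(&frame, &out) > 0)
            output.push_back(out);
    }

    // Flush loop: no more input, keep calling until nothing comes back.
    while (enc.encode(nullptr, &out) > 0)
        output.push_back(out);

    return output;
}
```

With encodeAll(5, 2), the main loop emits frames 0 to 2 and the flush loop recovers frames 3 and 4. Without the flush loop, the last `delay` frames would be lost.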

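One detail in threadMain() is easy to miss: pts_queue is a std::priority_queue<int64_t>, which is a max-heap, yet the code pushes -pic_out.pts and pops whenever the size exceeds 2. Negating the values turns the heap into a min-heap on the real timestamps, so each pop discards the smallest one and the queue ends up holding the two largest. The following standalone sketch reproduces just that trick. The function name twoLargestPts is hypothetical; returning {0, 0} for fewer than two values matches the zero defaults in the code above.

```cpp
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

// Returns {largest, second largest} of the given timestamps, or {0, 0}
// when fewer than two values were seen.
std::pair<int64_t, int64_t> twoLargestPts(const std::vector<int64_t>& ptsValues)
{
    std::priority_queue<int64_t> queue; // max-heap over the negated pts values

    for (int64_t pts : ptsValues)
    {
        queue.push(-pts);
        if (queue.size() > 2)
            queue.pop(); // top is the largest negated value, i.e. the smallest pts
    }

    int64_t largest = 0;
    int64_t secondLargest = 0;
    if (queue.size() >= 2)
    {
        secondLargest = -queue.top(); // smaller of the two survivors
        queue.pop();
        largest = -queue.top();
    }
    return std::make_pair(largest, secondLargest);
}
```

For the input {0, 3, 1, 2, 4} the function returns {4, 3}. The encoder can emit pictures out of presentation order, which is presumably why the two largest timestamps are tracked this way rather than by remembering the last two written.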

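Next steps

Later articles will start from the x265 API functions and analyze the internal source structure and algorithm logic in more depth.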


- Original article: https://blog.csdn.net/yanceyxin/article/details/133386105