-

计算机竞赛 深度学习 python opencv 实现人脸年龄性别识别

0 前言

🔥 优质竞赛项目系列,今天要分享的是

🚩 基于深度学习的人脸年龄性别识别算法实现

该项目较为新颖,适合作为竞赛课题方向,学长非常推荐!

🥇学长这里给一个题目综合评分(每项满分5分)

- 难度系数:4分

- 工作量:4分

- 创新点:3分

🧿 更多资料, 项目分享:

https://gitee.com/dancheng-senior/postgraduate

1 项目课题介绍

年龄和性别作为人重要的生物特征, 可以应用于多种场景,

如基于年龄的人机交互系统、电子商务中个性营销、刑事案件侦察中的年龄过滤等。然而基于图像的年龄分类和性别检测在真实场景下受很多因素影响,

如后天的生活工作环境等, 并且人脸图像中的复杂光线环境、姿态、表情以及图片本身的质量等因素都会给识别造成困难。学长这次设计的项目 基于深度学习卷积神经网络,利用Tensorflow和Keras等工具实现图像年龄和性别检测。

2 关键技术

2.1 卷积神经网络

受到人类大脑神经突触结构相互连接的模式启发,神经网络作为人工智能领域的重要组成部分,通过分布式的方法处理信息,可以解决复杂的非线性问题,从构造方面来看,主要包括输入层、隐藏层、输出层三大组成结构。每一个节点被称为一个神经元,存在着对应的权重参数,部分神经元存在偏置,当输入数据x进入后,对于经过的神经元都会进行类似于:y=w*x+b的线性函数的计算,其中w为该位置神经元的权值,b则为偏置函数。通过每一层神经元的逻辑运算,将结果输入至最后一层的激活函数,最后得到输出output。

2.2 卷积层

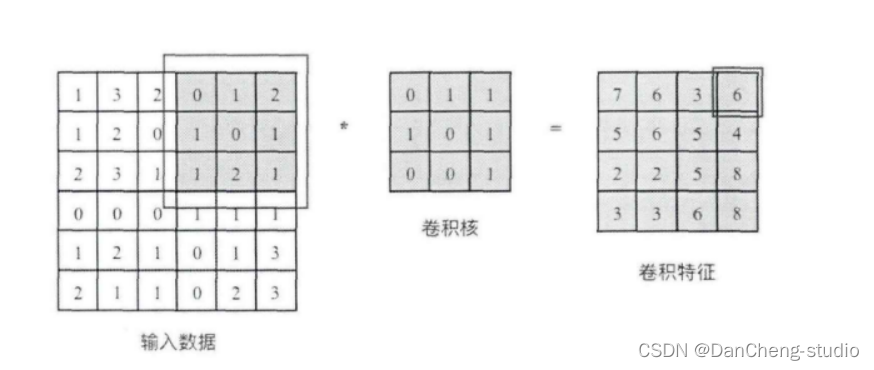

卷积核相当于一个滑动窗口,示意图中3x3大小的卷积核依次划过6x6大小的输入数据中的对应区域,并与卷积核滑过区域做矩阵点乘,将所得结果依次填入对应位置即可得到右侧4x4尺寸的卷积特征图,例如划到右上角3x3所圈区域时,将进行0x0+1x1+2x1+1x1+0x0+1x1+1x0+2x0x1x1=6的计算操作,并将得到的数值填充到卷积特征的右上角。

2.3 池化层

池化操作又称为降采样,提取网络主要特征可以在达到空间不变性的效果同时,有效地减少网络参数,因而简化网络计算复杂度,防止过拟合现象的出现。在实际操作中经常使用最大池化或平均池化两种方式,如下图所示。虽然池化操作可以有效的降低参数数量,但过度池化也会导致一些图片细节的丢失,因此在搭建网络时要根据实际情况来调整池化操作。

2.4 激活函数:

激活函数大致分为两种,在卷积神经网络的发展前期,使用较为传统的饱和激活函数,主要包括sigmoid函数、tanh函数等;随着神经网络的发展,研宄者们发现了饱和激活函数的弱点,并针对其存在的潜在问题,研宄了非饱和激活函数,其主要含有ReLU函数及其函数变体

2.5 全连接层

在整个网络结构中起到“分类器”的作用,经过前面卷积层、池化层、激活函数层之后,网络己经对输入图片的原始数据进行特征提取,并将其映射到隐藏特征空间,全连接层将负责将学习到的特征从隐藏特征空间映射到样本标记空间,一般包括提取到的特征在图片上的位置信息以及特征所属类别概率等。将隐藏特征空间的信息具象化,也是图像处理当中的重要一环。

3 使用tensorflow中keras模块实现卷积神经网络

class CNN(tf.keras.Model): def __init__(self): super().__init__() self.conv1 = tf.keras.layers.Conv2D( filters=32, # 卷积层神经元(卷积核)数目 kernel_size=[5, 5], # 感受野大小 padding='same', # padding策略(vaild 或 same) activation=tf.nn.relu # 激活函数 ) self.pool1 = tf.keras.layers.MaxPool2D(pool_size=[2, 2], strides=2) self.conv2 = tf.keras.layers.Conv2D( filters=64, kernel_size=[5, 5], padding='same', activation=tf.nn.relu ) self.pool2 = tf.keras.layers.MaxPool2D(pool_size=[2, 2], strides=2) self.flatten = tf.keras.layers.Reshape(target_shape=(7 * 7 * 64,)) self.dense1 = tf.keras.layers.Dense(units=1024, activation=tf.nn.relu) self.dense2 = tf.keras.layers.Dense(units=10) def call(self, inputs): x = self.conv1(inputs) # [batch_size, 28, 28, 32] x = self.pool1(x) # [batch_size, 14, 14, 32] x = self.conv2(x) # [batch_size, 14, 14, 64] x = self.pool2(x) # [batch_size, 7, 7, 64] x = self.flatten(x) # [batch_size, 7 * 7 * 64] x = self.dense1(x) # [batch_size, 1024] x = self.dense2(x) # [batch_size, 10] output = tf.nn.softmax(x) return output- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

4 Keras介绍

keras是一个专门用于深度学习的开发软件。它的编程语言采用的为现在最流行的python语言,集成封装了CNTK,Tensorflow或者Theano深度学习框架为计算机后台进行深度建模,具有易于学习,高效编程的功能,数据的运算处理支持GPU和CPU,真正实现了二者的无缝切换。正是keras有如此特殊功能,所以它的优点有如下几个方面:

4.1 Keras深度学习模型

Keras深度学习模型可以分为两种:一种是序列模型,一种是通用模型。它们的区别在于其拥有不同的网络拓扑结构。序列模型是通用模型的一个范例,通常情况下应用比较广。每层之间的连接方式都是线性的,且在相邻的两

4.2 Keras中重要的预定义对象

Keras预定义了很多对象目的就是构造其网络结构,正是有了这么多的预定义对象才让Keras使用起来非常方便易用。研究中用的最多要数正则化、激活函数及初始化对象了。

-

正则化是在建模时防止过度拟合的最常用且效果最有效的手段之一。在神经网络中采用的手段有权重参数、偏置项以及激活函数,其分别对应的代码是kernel_regularizier、bias_regularizier以及activity_regularizier。

-

激活函数在网络定义中的选取十分重要。为了方便Keras预定义了丰富的激活函数,以此是适应不同的网络结构。使用激活对象的方式有两种:一个是单独定义一个激活函数层,二是通利用前置层的激活选项定义激活函数。

-

初始化对象是随机给定网络层激活函数kernel_initializer or bias_initializer的开始值。权重初始化值好与坏直接影响模型的训练时间的长短。

4.3 Keras的网络层构造

在Keras框架中,不同的网络层(Layer)定义了神经网络的具体结构。在实际网络构建中常见的用Core Layer、Convolution

Layer、Pooling Layer、Emberdding Layer等。

5 数据集处理训练

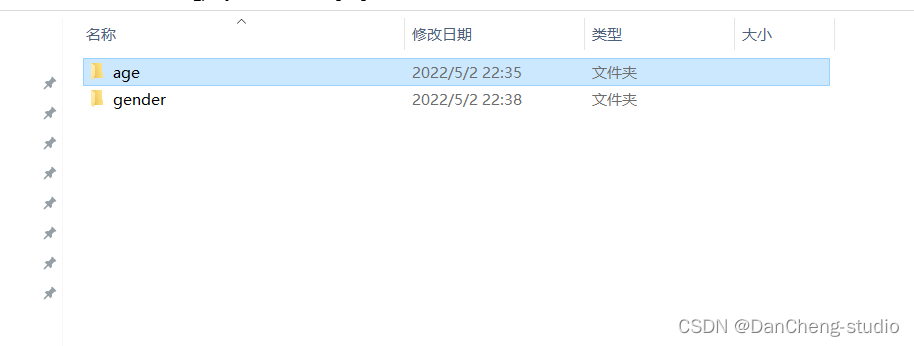

该项目将采集的照片分为男女两个性别;‘0-9’, ‘10-19’, ‘20-29’, ‘30-39’, ‘40-49’, ‘50-59’,

‘60+’,七个年龄段;分别把性别和年龄段的图片分别提取出来,并保存到性别和年龄段两个文件夹下,构造如下图:5.1 分为年龄、性别

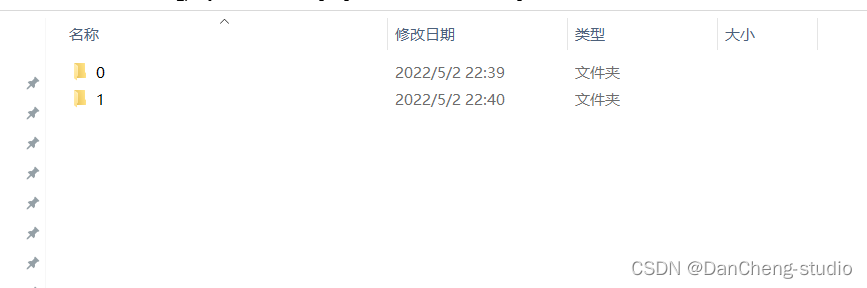

5.2 性别分为两类

5.3性别训练代码

# ---------------------------------------------------------------------------------------------------------------------- # 导入一些第三方包 # ---------------------------------------------------------------------------------------------------------------------- import tensorflow as tf from nets import net EPOCHS = 40 BATCH_SIZE = 32 image_height = 128 image_width = 128 model_dir = "./models/age.h5" train_dir = "./data/age/train/" test_dir = "./data/age/test/" def get_datasets(): train_datagen = tf.keras.preprocessing.image.ImageDataGenerator( rescale=1.0 / 255.0 ) train_generator = train_datagen.flow_from_directory(train_dir, target_size=(image_height, image_width), color_mode="rgb", batch_size=BATCH_SIZE, shuffle=True, class_mode="categorical") test_datagen = tf.keras.preprocessing.image.ImageDataGenerator( rescale=1.0 /255.0 ) test_generator = test_datagen.flow_from_directory(test_dir, target_size=(image_height, image_width), color_mode="rgb", batch_size=BATCH_SIZE, shuffle=True, class_mode="categorical" ) train_num = train_generator.samples test_num = test_generator.samples return train_generator, test_generator, train_num, test_num # ---------------------------------------------------------------------------------------------------------------------- # 网络的初始化 --- net.CNN(num_classes=7) # model.compile --- 对神经网络训练参数是设置 --- tf.keras.losses.categorical_crossentropy --- 损失函数(交叉熵) # tf.keras.optimizers.Adam(learning_rate=0.001) --- 优化器的选择,以及学习率的设置 # metrics=['accuracy'] --- List of metrics to be evaluated by the model during training and testing # return model --- 返回初始化之后的模型 # ---------------------------------------------------------------------------------------------------------------------- def get_model(): model = net.CNN(num_classes=7) model.compile(loss=tf.keras.losses.categorical_crossentropy, optimizer=tf.keras.optimizers.Adam(lr=0.001), metrics=['accuracy']) return model if __name__ == '__main__': train_generator, test_generator, train_num, test_num = get_datasets() model = get_model() model.summary() tensorboard = tf.keras.callbacks.TensorBoard(log_dir='./log/age/') callback_list = [tensorboard] model.fit_generator(train_generator, epochs=EPOCHS, steps_per_epoch=train_num // BATCH_SIZE, validation_data=test_generator, validation_steps=test_num // BATCH_SIZE, callbacks=callback_list) model.save(model_dir) # ----------------------------------------------------------------------------------------------------------------------- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

5.4 年龄分为七个年龄段

5.5 年龄训练代码

# ---------------------------------------------------------------------------------------------------------------------- # 导入一些第三方包 # ---------------------------------------------------------------------------------------------------------------------- import tensorflow as tf from nets import net EPOCHS = 20 BATCH_SIZE = 32 image_height = 128 image_width = 128 model_dir = "./models/gender.h5" train_dir = "./data/gender/train/" test_dir = "./data/gender/test/" def get_datasets(): train_datagen = tf.keras.preprocessing.image.ImageDataGenerator( rescale=1.0 / 255.0 ) train_generator = train_datagen.flow_from_directory(train_dir, target_size=(image_height, image_width), color_mode="rgb", batch_size=BATCH_SIZE, shuffle=True, class_mode="categorical") test_datagen = tf.keras.preprocessing.image.ImageDataGenerator( rescale=1.0 /255.0 ) test_generator = test_datagen.flow_from_directory(test_dir, target_size=(image_height, image_width), color_mode="rgb", batch_size=BATCH_SIZE, shuffle=True, class_mode="categorical" ) train_num = train_generator.samples test_num = test_generator.samples return train_generator, test_generator, train_num, test_num def get_model(): model = net.CNN(num_classes=2) model.compile(loss=tf.keras.losses.categorical_crossentropy, optimizer=tf.keras.optimizers.Adam(lr=0.001), metrics=['accuracy']) return model if __name__ == '__main__': train_generator, test_generator, train_num, test_num = get_datasets() model = get_model() model.summary() tensorboard = tf.keras.callbacks.TensorBoard(log_dir='./log/gender/') callback_list = [tensorboard] model.fit_generator(train_generator, epochs=EPOCHS, steps_per_epoch=train_num // BATCH_SIZE, validation_data=test_generator, validation_steps=test_num // BATCH_SIZE, callbacks=callback_list) model.save(model_dir) # ----------------------------------------------------------------------------------------------------------------------- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

6 模型验证预测

6.1 实现效果

6.2 关键代码

# ---------------------------------------------------------------------------------------------------------------------- # 加载基本的库 # ---------------------------------------------------------------------------------------------------------------------- import tensorflow as tf from PIL import Image import numpy as np import cv2 import os # ---------------------------------------------------------------------------------------------------------------------- # tf.keras.models.load_model('./model/age.h5') --- 加载年龄模型 # tf.keras.models.load_model('./model/gender.h5') --- 加载性别模型 # ---------------------------------------------------------------------------------------------------------------------- model_age = tf.keras.models.load_model('./models/age.h5') model_gender = tf.keras.models.load_model('./models/gender.h5') # ---------------------------------------------------------------------------------------------------------------------- # 类别名称 # ---------------------------------------------------------------------------------------------------------------------- classes_age = ['0-9', '10-19', '20-29', '30-39', '40-49', '50-59', '60+'] classes_gender = ['female', 'male'] # ---------------------------------------------------------------------------------------------------------------------- # cv2.dnn.readNetFromCaffe --- 加载人脸检测模型 # ---------------------------------------------------------------------------------------------------------------------- net = cv2.dnn.readNetFromCaffe('./models/deploy.prototxt.txt', './models/res10_300x300_ssd_iter_140000.caffemodel') # ---------------------------------------------------------------------------------------------------------------------- # os.listdir('./images/') --- 得到文件夹列表 # ---------------------------------------------------------------------------------------------------------------------- files = os.listdir('./images/') # ---------------------------------------------------------------------------------------------------------------------- # 遍历信息 # ---------------------------------------------------------------------------------------------------------------------- for file in files: # ------------------------------------------------------------------------------------------------------------------ # image_path = './images/' + file --- 拼接得到图片文件路径 # cv2.imread(image_path) --- 使用opencv读取图片 # ------------------------------------------------------------------------------------------------------------------ image_path = './images/' + file print(image_path) image = cv2.imread(image_path) # ------------------------------------------------------------------------------------------------------------------ # (h, w) = image.shape[:2] --- 得到图像的高度和宽度 # cv2.dnn.blobFromImage --- 以DNN的方式加载图像 # net.setInput(blob) -- 设置网络的输入 # detections = net.forward() --- 网络前相传播过程 # ------------------------------------------------------------------------------------------------------------------ (h, w) = image.shape[:2] blob = cv2.dnn.blobFromImage(cv2.resize(image, (300, 300)), 1.0, (300, 300), 127.5) net.setInput(blob) detections = net.forward() # ------------------------------------------------------------------------------------------------------------------ # for i in range(0, detections.shape[2]): --- 遍历检测结果 # ------------------------------------------------------------------------------------------------------------------ for i in range(0, detections.shape[2]): # -------------------------------------------------------------------------------------------------------------- # confidence = detections[0, 0, i, 2] 得到检测的准确率 # -------------------------------------------------------------------------------------------------------------- confidence = detections[0, 0, i, 2] # -------------------------------------------------------------------------------------------------------------- # if confidence > 0.85: --- 对置信度的判断 # -------------------------------------------------------------------------------------------------------------- if confidence > 0.85: # ---------------------------------------------------------------------------------------------------------- # detections[0, 0, i, 3:7] * np.array([w, h, w, h]) --- 得到检测框的信息 # (startX, startY, endX, endY) = box.astype("int") --- 将信息分解成左上角的x,y,以及右下角的x,y # cv2.rectangle --- 将人脸框起来 # ---------------------------------------------------------------------------------------------------------- box = detections[0, 0, i, 3:7] * np.array([w, h, w, h]) (startX, startY, endX, endY) = box.astype("int") cv2.rectangle(image, (startX, startY), (endX, endY), (0, 255, 0), 1) # ---------------------------------------------------------------------------------------------------------- # 提取人脸部分区域 # ---------------------------------------------------------------------------------------------------------- roi = image[startY-15:endY+15, startX-15:endX+15] # ---------------------------------------------------------------------------------------------------------- # Image.fromarray(cv2.cvtColor(roi, cv2.COLOR_BGR2RGB)) --- 将opencv专程PIL格式的数据 # img.resize((128, 128)) --- 改变图像的大小 # np.array(img).reshape(-1, 128, 128, 3).astype('float32') / 255 --- 改变数据的形状,以及归一化处理 # ---------------------------------------------------------------------------------------------------------- img = Image.fromarray(cv2.cvtColor(roi, cv2.COLOR_BGR2RGB)) img = img.resize((128, 128)) img = np.array(img).reshape(-1, 128, 128, 3).astype('float32') / 255 # ---------------------------------------------------------------------------------------------------------- # 调用年龄识别模型得到检测结果 # ---------------------------------------------------------------------------------------------------------- prediction_age = model_age.predict(img) final_prediction_age = [result.argmax() for result in prediction_age][0] # ---------------------------------------------------------------------------------------------------------- # 调用性别识别模型得到检测结果 # ---------------------------------------------------------------------------------------------------------- prediction_gender = model_gender.predict(img) final_prediction_gender = [result.argmax() for result in prediction_gender][0] # ---------------------------------------------------------------------------------------------------------- # 将识别的信息拼接,然后使用cv2.putText显示 # ---------------------------------------------------------------------------------------------------------- res = classes_gender[final_prediction_gender] + ' ' + classes_age[final_prediction_age] y = startY - 10 if startY - 10 > 10 else startY + 10 cv2.putText(image, str(res), (startX, y), cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 0, 255), 2) # ------------------------------------------------------------------------------------------------------------------ # 显示 # ------------------------------------------------------------------------------------------------------------------ cv2.imshow('', image) if cv2.waitKey(0) & 0xFF == ord('q'): break- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

7 最后

🧿 更多资料, 项目分享:

-

相关阅读:

c++Flood Fill算法之池塘计数,城堡问题,山峰与山谷(acwing)

Redis数据结构

【SwiftUI模块】0018、SwiftUI搭建一个类似支付宝中的余额宝余额数字动画效果

数据结构--栈和队列

解决ntfs-3g-mount: mount failed硬盘无法挂载的问题

C语言实验十一 指针(一)

原生js计时器和时钟

7、ByteBuffer(方法演示1(切换读写模式,读写))

GO 项目依赖管理:go module总结

9.3.3网络原理(网络层IP)

- 原文地址:https://blog.csdn.net/m0_43533/article/details/133024892